Double-Enhanced Convolutional Neural Network for Multi-Stage Classification of Alzheimer’s Disease

Volume 9, Issue 2, Page No 09–16, 2024

Adv. Sci. Technol. Eng. Syst. J. 9(2), 09–16 (2024);

DOI: 10.25046/aj090202


Keywords: Double-enhanced CNN, Multi-stage classification, Attention module, Generative adversarial network

Alzheimer’s disease (AD) is an irreversible neurodegenerative disease with no cure to date, so detecting and classifying it in its early stages is important for slowing down the deterioration process. Generally, AD can be classified into three major stages: the “normal control” stage with no symptoms, the “mild cognitive impairment (MCI)” stage with minor symptoms, and the AD stage with major and serious symptoms. Due to the degenerative nature of the disease, MCI patients tend to progress to the AD stage if appropriate diagnosis and prevention measures are not taken. However, the MCI stage is difficult to identify accurately because its mild symptoms often lead to misdiagnosis. In other words, the classification of multiple stages of AD has been a challenge for medical professionals. Deep learning models such as convolutional neural networks (CNN) have therefore been widely used to address this challenge. Nevertheless, they are still constrained by the scarcity of medical images and by their weak feature representation ability. In this study, a double-enhanced CNN model is proposed that incorporates an attention module and a generative adversarial network (GAN) to classify magnetic resonance imaging (MRI) brain images into 3 classes of AD. MRI images are obtained from the Open Access Series of Imaging Studies (OASIS) database, and four experiments are carried out to observe the classification performance of the enhanced model. The results show that the CNN model enhanced with both GAN and attention module achieved the best performance among the models compared, with a training accuracy of 99% and a validation accuracy of 92%. Hence, this study has shown that the double-enhanced CNN model effectively boosts the performance of the deep learning model and helps to overcome the challenge in the multi-stage classification of AD.

1. Introduction

This paper is an extension of work originally presented at the 2023 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET) [1]. The model has been extended and improved for the multi-stage classification of Alzheimer’s disease (AD). Following the trend of improved performance obtained from single-enhanced convolutional neural network (CNN) models, this study proposes a double-enhanced CNN model that incorporates an attention module together with a generative adversarial network (GAN) for further improvement over existing methods.

As AD is a progressive brain disorder that is still incurable to date, its early detection and diagnosis have been identified as a significant step by medical professionals [2]. Appropriate treatments can help to slow the deterioration process of AD even though it is impossible to fully cure an Alzheimer’s patient. The World Health Organization (WHO) [3] has reported that AD is the seventh leading cause of death globally and that there are currently more than 55 million affected individuals worldwide. Thus, numerous studies have been conducted in this field over the years to alleviate the condition [4]. Accurate classification and diagnosis of the disease are important because they help doctors to provide suitable treatments for slowing down the deterioration process, so that patients can continue their daily life as usual [2]. However, it is not easy to classify and diagnose the stages of AD accurately due to the complexity of medical images and the ambiguity among the stages [5].

Generally, there are three major stages of Alzheimer’s: the normal control (NC) stage with no symptoms, the mild cognitive impairment (MCI) stage with slight or mild symptoms, and the AD stage with serious and major symptoms. The challenge in classifying these multiple stages lies in identifying the MCI stage, whose faint symptoms are hardly noticed [6]. However, this prodromal stage of AD needs to be identified and classified accurately, as the symptoms might worsen over time and progress to the more serious AD stage [7]. Existing studies have shown that multi-stage classification is more difficult than binary classification, which only comprises two distinct stages, NC and AD. Thus, deep learning classification models have been utilized to aid medical practitioners in the classification and diagnosis of medical images [8, 9].

In [10], the authors used a type of CNN model known as the Siamese CNN to classify multiple dementia stages. Similarly, authors in [11] and [12] also applied different types of CNN models, such as VGG-16, AlexNet, ResNet-18, and GoogleNet for the classification of MRI scans into their respective stages. Aside from the CNN model which is well-known for its great ability to learn from raw images and classify them accurately [13], other types of deep learning models have been utilized as well. For instance, in [14] and [15], the authors applied recurrent neural networks (RNN) for the classification task due to the model’s ability to solve the issue of incomplete feature extraction. On the other hand, authors in [16] used a long short-term memory (LSTM) model for multi-stage classification, while in [17], the authors utilized a GAN for image enhancement and classification tasks. All these studies that utilized various types of deep learning networks have proven that these models can effectively classify and diagnose AD stages accurately.

Nevertheless, deep learning models generally require large amounts of data for training and learning image features [18]. Hence, the complexity and limited availability of medical images have become a challenge for AD classification. Magnetic resonance imaging (MRI) brain images of AD patients, which are commonly used to visualize the inside of the human brain and observe any abnormalities, have a complex structure that requires considerable experience, time, and expertise to analyze [5]. They are also available only in limited amounts because patients’ data are protected for privacy reasons, leading to insufficient data for research purposes. Thus, classification models have recently been enhanced with data augmentation models to overcome this limitation. For instance, CNN models are enhanced by incorporating a GAN model that expands the dataset before the classification task. The studies in [17], [19], and [20] are examples of state-of-the-art work that applied single-enhanced CNN models using GAN to overcome this challenge.

However, another issue in CNN models has arisen, which affects the accuracy of AD classification tasks. The lack of adaptive channel weighting in CNN models has been identified recently and this leads to the inability of the model to effectively learn significant features from MRI brain images to accurately classify AD [21]. Thus, to solve this issue, the CNN model needs to be enhanced using an attention module to improve its feature representation ability. The attention module is a type of enhancement module that can identify regions of interest (ROIs) in an image and apply attention to them [22]. Using the information provided by the attention module, the CNN model will be able to focus on learning the features significant to each stage of Alzheimer’s, and then accurately classify them. In [23] and [24], the authors presented studies that applied a single enhanced CNN model using an attention module in their works and achieved improved results. Thus, this study aims to propose a double-enhanced CNN classification model to overcome the mentioned challenges.

2. Methodology

2.1. Dataset

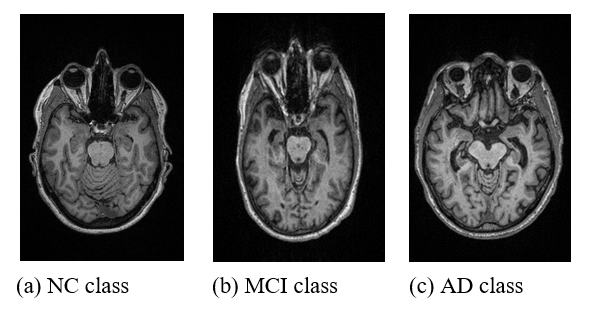

In this study, the MRI data required for training the deep learning model to perform AD classification are obtained from the Open Access Series of Imaging Studies (OASIS) database (https://www.oasis-brains.org/) [25]. OASIS is an open-access medical imaging database that is commonly used for research purposes. Since a multi-class classification task is carried out in this study, MRI data from three different classes are collected: normal control (NC), mild cognitive impairment (MCI), and Alzheimer’s disease (AD). For each class, 100 subjects are collected to maintain a balanced dataset. Table 1 shows the demographic and clinical characteristics of the MRI data collected from the OASIS database. As seen from the table, the subjects are chosen based on their clinical dementia rating (CDR) score, where certain ranges of CDR represent different classes of AD. Moreover, all the MRI images collected are standardized by using only T1-weighted MRI sequences scanned with a 3 Tesla scanner. Figure 1 shows examples of MRI data collected from the OASIS database.

Table 1: Clinical and Demographic Characteristics of MRI Data

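| Classes | AD | MCI | NC |
|---|---|---|---|
| Number of subjects | 100 | 100 | 100 |
| CDR score | CDR > 2 | 0.5 < CDR < 1 | CDR = 0 |
| Gender | Males and females | Males and females | Males and females |
| Age | 65 years old < Age < 74 years old | 65 years old < Age < 74 years old | 65 years old < Age < 74 years old |
| MRI sequence | T1-weighted | T1-weighted | T1-weighted |
| Scanner type | 3 Tesla scanner | 3 Tesla scanner | 3 Tesla scanner |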

2.2. Proposed Methodology

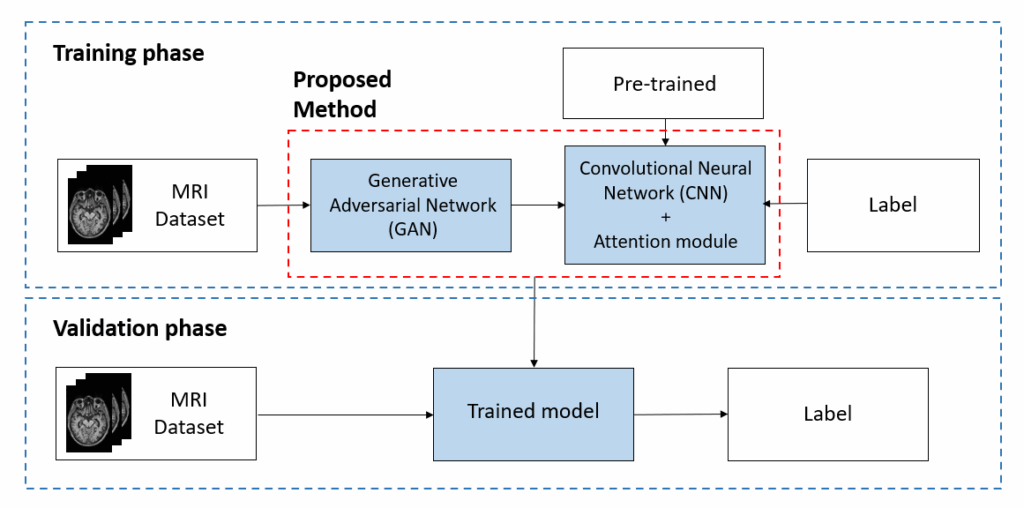

The proposed methodology for this study is a CNN model enhanced with both an attention module and a GAN model. It is proposed to improve the performance of the CNN model in the multi-class classification task of diagnosing AD. Figure 2 shows the overall flowchart of the proposed methodology. Firstly, the GAN model is trained using the collected MRI dataset in order to expand the dataset. Then, the expanded dataset is fed to the CNN model with an attention module incorporated for training and validation. The attention module is responsible for identifying the significant features and regions of interest (ROIs) that correspond to each stage of AD. With this information, the CNN model is trained to classify MRI images into the 3 respective stages of AD. After training, the CNN model is evaluated on an unseen set of MRI data to validate its performance and generalizability.

Since there are 2 phases in this proposed methodology, the collected MRI dataset is divided into 2 sets, namely training and validation, as shown in Table 2. From the 300 subjects collected from the OASIS database, a total of 3000 MRI slice images are included in the dataset after filtering and keeping only the slices with significant information. The dataset is then split into training and validation subsets with a ratio of 8:2.

Table 2: Distribution of MRI Data for Training and Validation

| Classes | AD | MCI | NC | Total |
|---|---|---|---|---|
| Training | 800 | 800 | 800 | 2400 |
| Validation | 200 | 200 | 200 | 600 |
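The 8:2 split in Table 2 can be sketched as a per-class (stratified) split. This is a minimal illustration only: the function name `stratified_split`, the seed, and the label list are hypothetical, and the study does not state whether the split was made per slice or per subject, so a per-slice split is assumed here.

```python
import random

def stratified_split(labels, train_fraction=0.8, seed=0):
    """Return (train_indices, val_indices) with the same class ratio in both sets."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    train, val = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)                       # random assignment within each class
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        val.extend(indices[cut:])
    return train, val

# Hypothetical label list: 1000 slices for each of the three classes.
labels = ["AD"] * 1000 + ["MCI"] * 1000 + ["NC"] * 1000
train_idx, val_idx = stratified_split(labels)
print(len(train_idx), len(val_idx))  # 2400 600
```

Splitting within each class keeps the 800/200 balance per class shown in Table 2.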

2.3. Convolutional Neural Network (CNN)

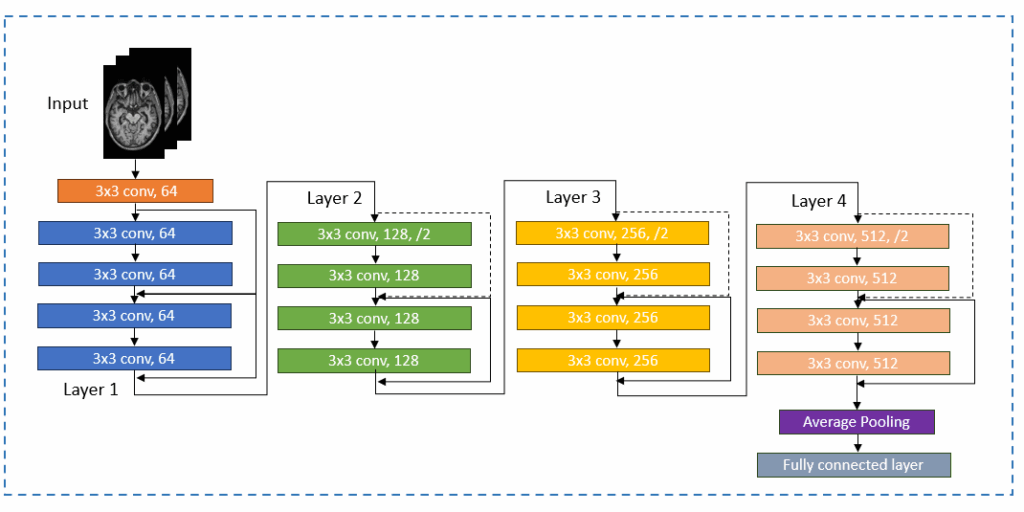

In this study, a pre-trained convolutional neural network (CNN) is used to learn and classify MRI images into 3 classes, namely NC, MCI, and AD. CNN is a type of deep neural network popularly used for image analysis and classification tasks due to its powerful ability in feature extraction and learning discriminative representations from raw data [26]. Its architecture is illustrated in Figure 3.

Recently, transfer learning has often been used together with CNN models for faster training and better performance [27]. Transfer learning gives a CNN model a better starting point and a higher asymptote, as there is no need to train the model from scratch. This is because the CNN models have previously been trained on ImageNet, a large dataset with millions of images [28], so the pre-trained models already hold well-initialized parameters. With just some fine-tuning, the CNN model can be reused for other tasks, such as AD diagnosis in this case.

Since there are numerous types of pre-trained CNN models available, this study has chosen to utilize a ResNet-18 model for the multi-stage classification task of AD. Due to its network architecture that uses skip connections and residual blocks, it can support many convolutional layers, enable smoother gradient flow, and solve the issue of vanishing gradients [29]. Figure 4 shows its network architecture.
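The effect of skip connections on gradient flow can be illustrated with a toy scalar calculation. This is not ResNet-18 itself: each "layer" is reduced to a single local derivative, and the value 0.1 and the depth of 18 are arbitrary assumptions chosen only to make the contrast visible.

```python
def plain_chain_gradient(local_derivative, depth):
    """Gradient through `depth` stacked layers y = f(x): a product of f'(x) terms."""
    gradient = 1.0
    for _ in range(depth):
        gradient *= local_derivative
    return gradient

def residual_chain_gradient(local_derivative, depth):
    """Same chain, but each layer is y = x + f(x), so its derivative is 1 + f'(x)."""
    gradient = 1.0
    for _ in range(depth):
        gradient *= 1.0 + local_derivative
    return gradient

plain = plain_chain_gradient(0.1, 18)        # shrinks towards zero
residual = residual_chain_gradient(0.1, 18)  # identity path keeps it from vanishing
print(plain, residual)
```

With small local derivatives the plain product collapses towards zero, while the identity term contributed by each skip connection keeps the residual product at or above one.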

2.4. Attention Module

Adapted from [1], the attention module is an enhancement module that has recently become popular in deep learning applications because it strengthens a network’s feature representation power. It has been successfully applied in various fields such as computer vision and natural language processing (NLP) [30]. The main characteristic of the attention module is that it strengthens the feature extraction ability of a CNN model, and thus enhances the model’s analysis and classification performance. Since it is a mechanism inspired by the human visual system [31], the attention module can recognize which regions of an image are significant and carry important information, much like the human eye. It then helps the deep learning model to concentrate on these regions of interest (ROIs), for example by assigning a higher degree of importance to them. Thus, during training, the CNN model learns better because it can focus on the important regions instead of learning from the whole image, which contains abundant information.

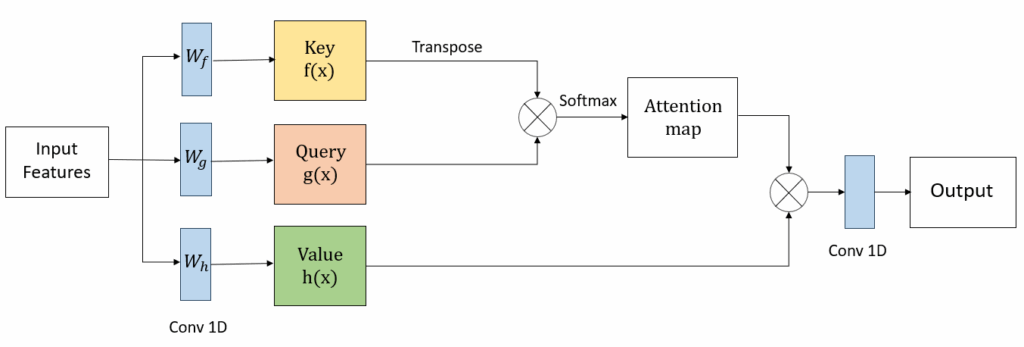

In this study, a type of attention module known as self-attention is incorporated into the CNN model for the multi-stage classification of AD. Among the various types of attention modules available, self-attention is chosen because it can learn global or long-range dependencies. It helps to address the inefficiency that arises because a CNN performs only local operations and therefore needs many layers to relate distant regions. Thus, it is incorporated into the CNN model to enhance its feature-capturing ability [24]. Figure 5 shows the structure of the self-attention module.

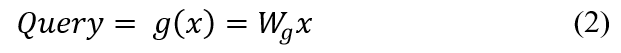

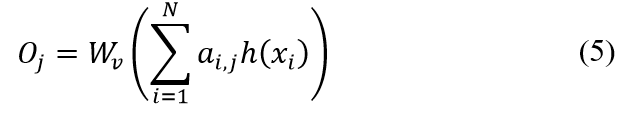

To help the CNN model capture global information efficiently, the self-attention module maps the input to a key, a query, and a value. The key and the value are the extracted features from the input MRI images, while the query determines the significant features to be learnt by the CNN. As shown in Figure 5, the key, query, and value are transformed into vectors by using 1x1x1 convolution filters labelled $W_f$, $W_g$, and $W_h$ respectively. After the transformations, they are represented as shown in (1), (2), and (3):

$$f(x) = W_f x \tag{1}$$

$$g(x) = W_g x \tag{2}$$

$$h(x) = W_h x \tag{3}$$

In (1), (2), and (3), $x \in \mathbb{R}^{C \times N}$ represents the features from the original feature map, where $C$ is the number of channels and $N$ is the number of feature locations. Then, the self-attention map is calculated as below:

$$\beta_{j,i} = \frac{\exp(s_{ij})}{\sum_{i=1}^{N} \exp(s_{ij})}, \quad s_{ij} = f(x_i)^{T} g(x_j) \tag{4}$$

where $\beta_{j,i}$ denotes the degree of attention of the model on the i-th region during the synthesis of the j-th region. Following this, the output of the self-attention module is represented by $o = (o_1, o_2, \ldots, o_j, \ldots, o_N) \in \mathbb{R}^{C \times N}$, where

$$o_j = v\left(\sum_{i=1}^{N} \beta_{j,i}\, h(x_i)\right), \quad v(x_i) = W_v x_i \tag{5}$$

A 1x1x1 convolutional filter is again used to reduce the number of channels of the final output for standardization and memory efficiency as shown in Figure 5.
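The key, query, and value computation described in this section can be sketched in plain Python on a tiny feature map. This is an illustrative sketch under assumptions, not the authors’ implementation: the 1x1x1 convolutions are written as per-location matrix products, the attention weights are assumed to come from a softmax over key-query dot products, `w_v` is a name assumed here for the final 1x1x1 filter, and the identity weight matrices and input values are hypothetical.

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def self_attention(x, w_f, w_g, w_h, w_v):
    """x is a list of N feature vectors (one per location), each of length C."""
    keys = [matvec(w_f, x_i) for x_i in x]      # key:   W_f applied per location
    queries = [matvec(w_g, x_j) for x_j in x]   # query: W_g applied per location
    values = [matvec(w_h, x_i) for x_i in x]    # value: W_h applied per location
    n = len(x)
    attention, outputs = [], []
    for j in range(n):
        scores = [sum(a * b for a, b in zip(keys[i], queries[j])) for i in range(n)]
        peak = max(scores)                      # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]     # attention on location i for output j
        attention.append(weights)
        mixed = [sum(weights[i] * values[i][c] for i in range(n))
                 for c in range(len(values[0]))]
        outputs.append(matvec(w_v, mixed))      # final 1x1x1 filter
    return outputs, attention

# Hypothetical input: N = 3 locations, C = 2 channels, identity weights.
identity = [[1.0, 0.0], [0.0, 1.0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attention = self_attention(features, identity, identity, identity, identity)
print(attention[0])
```

Each row of `attention` sums to one, so every output location is a weighted mix of the values at all locations, which is how the module captures long-range dependencies.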

2.5. Generative Adversarial Network (GAN)

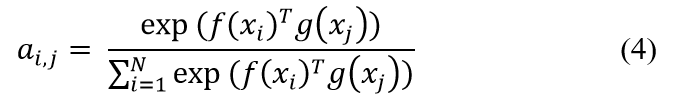

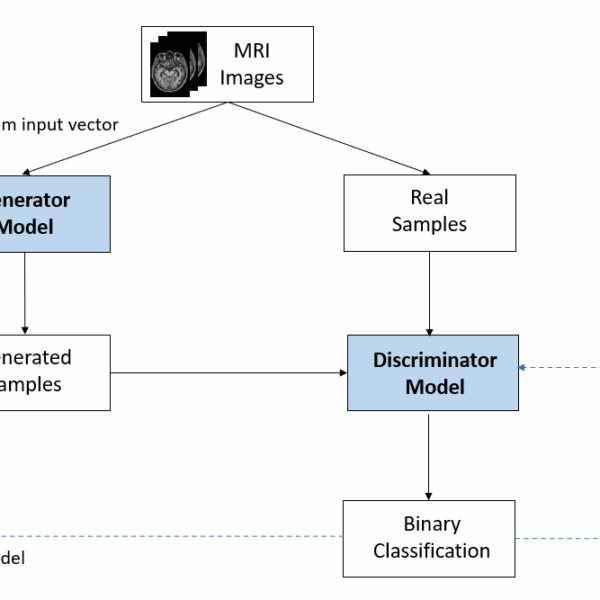

As shown in the overall methodology flowchart in Figure 2, MRI data are first fed into a Generative Adversarial Network (GAN) model before they are used to train the CNN model for multi-class classification of AD. GAN is a type of unsupervised deep neural network popularly used for data augmentation and expansion tasks. In [32], the authors showed that it can generate realistic and high-quality samples by learning the probability distribution of the input data. Thus, it has been increasingly used in recent studies that face the problem of insufficient data, such as in this research domain where MRI images of Alzheimer’s patients are in limited supply [17, 19, 33]. Figure 6 shows the structure of a GAN model.

From Figure 6, it can be seen that there are generally 2 network models in a GAN, which are the generator and discriminator models respectively. The generator model typically generates new samples based on the received random input vector and then passes them to the discriminator model for the following step. On the other hand, the discriminator model receives the real MRI images from the input as well as the generated samples from the generator model. It then compares them and distinguishes the generated samples from the real ones. Both models will continuously update and improve their models to achieve a point of equilibrium where the discriminator can no longer distinguish generated samples from the real data. Equation (6) shows the loss function of the GAN where both the generator and discriminator models will continuously learn until they achieve the goal of maximizing the number of real-looking sample images.
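For reference, the GAN objective introduced in [32] is usually written as the minimax problem below. It is assumed here, rather than confirmed by the text, that Equation (6) takes this standard form; $G$ and $D$ denote the generator and discriminator, $x$ a real MRI image drawn from the data distribution $p_{\text{data}}$, and $z$ the random input vector drawn from $p_z$.

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
  \tag{6}
```

The discriminator maximizes this value by scoring real images near 1 and generated images near 0, while the generator minimizes it by producing images that the discriminator scores as real.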

2.6. Experiment Setup

In this study, four experiments are carried out to verify and observe the difference in the models’ performances when classifying MRI images into multiple stages of AD. Table 3 shows the model used for each experiment and the differences between them. Experiment I is done using only the CNN model without applying any enhancements. Experiment II utilizes an enhanced CNN with an attention module, while a GAN-enhanced CNN model is utilized for Experiment III. Lastly, the proposed enhanced model in this study, which is the CNN enhanced with attention module and GAN is applied in Experiment IV.

Table 3: Four experiments done in this study

| Experiment | CNN model | Enhanced with attention module | Enhanced with GAN model |
|---|---|---|---|
| I | Yes | No | No |
| II | Yes | Yes | No |
| III | Yes | No | Yes |
| IV | Yes | Yes | Yes |

In Experiments I to IV, each of the models is developed using PyTorch, a Python-based deep learning framework, and trained on a graphics processing unit (GPU). The hyperparameters used for training the models are tabulated in Table 4. To avoid bias, the same amount of MRI data, as shown in Table 2, is used for training and validating each model. However, for Experiments III and IV, which involve enhancement using the GAN model, the amount of data used for the multi-stage classification task is later increased by the MRI samples generated by the GAN, as explained further in the results and discussion section below.

Table 4: Training hyperparameters used in Experiments I to IV

| Hyperparameters | GAN model | CNN model |
|---|---|---|
| MRI image size | 64 x 64 x 3 | 224 x 224 x 3 |
| Epoch | 100 | 100 |
| Batch size | 64 | 8 |
| Learning rate | 0.0002 | 0.0001 |
| Optimizer | Adam optimizer | Adam optimizer |
| Loss function | Binary cross entropy | Categorical cross-entropy |
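The two loss functions named in Table 4 can be sketched as follows. This is a minimal standard-library illustration, not the PyTorch code used in the study: all probability values are hypothetical, and the generator loss uses the common non-saturating form (generated images labelled as real), which is an assumption.

```python
import math

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1

def binary_cross_entropy(predicted, targets):
    """Mean binary cross entropy between predicted probabilities and 0/1 targets."""
    losses = []
    for p, t in zip(predicted, targets):
        p = min(max(p, EPS), 1.0 - EPS)
        losses.append(-(t * math.log(p) + (1 - t) * math.log(1.0 - p)))
    return sum(losses) / len(losses)

def categorical_cross_entropy(class_probabilities, true_class):
    """Cross-entropy for one sample: negative log-probability of the true class."""
    return -math.log(max(class_probabilities[true_class], EPS))

# Hypothetical discriminator outputs: probability that each image is real.
d_real = [0.9, 0.8]  # scores on real MRI slices (target 1)
d_fake = [0.3, 0.1]  # scores on generated slices (target 0)
d_loss = binary_cross_entropy(d_real, [1, 1]) + binary_cross_entropy(d_fake, [0, 0])
g_loss = binary_cross_entropy(d_fake, [1, 1])  # generator wants fakes judged real

# Hypothetical CNN softmax output over (NC, MCI, AD) for a true MCI slice.
cnn_loss = categorical_cross_entropy([0.1, 0.7, 0.2], true_class=1)
print(round(d_loss, 4), round(g_loss, 4), round(cnn_loss, 4))
```

In this example the discriminator is winning, so its loss is small while the generator loss is large; training pushes the two towards balance.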

2.7. Performance Evaluation

After training the models in Experiments I to IV, their performances are measured and compared. Comparing the various combinations of models used in the four experiments makes it possible to identify the best model and to check whether the proposed model achieves the objective of this study. Four performance metrics are used to evaluate the 4 different models in the multi-stage classification task of AD. Equations (7) to (10) show these evaluation metrics together with their method of calculation. The accuracy, precision, recall, and F1-score of the four models used in Experiments I to IV are calculated and tabulated in the results section. As Equations (7) to (10) show, the metrics are calculated from the true and false positives and negatives obtained from the classification tasks.
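The four metrics are conventionally defined from the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN) as below. These are the standard textbook forms, assumed here to correspond to Equations (7) to (10) in the order accuracy, precision, recall, and F1-score; for a three-class task they are typically computed per class (one class against the rest) and then averaged.

```latex
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{7}

\text{Precision} = \frac{TP}{TP + FP} \tag{8}

\text{Recall} = \frac{TP}{TP + FN} \tag{9}

\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{10}
```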

3. Result and Discussion

After carrying out four experiments by using four different combinations of models to verify the proposed methodology, all the results are tabulated as shown in Table 5. The table shows the performance of the models in terms of performance metrics such as accuracy, precision, recall, and f1-score. The different models used in the four experiments are listed below:

- Experiment I: CNN model only

- Experiment II: Enhanced CNN model with attention module only

- Experiment III: Enhanced CNN model with GAN only

- Experiment IV: Enhanced CNN model with attention module and GAN model

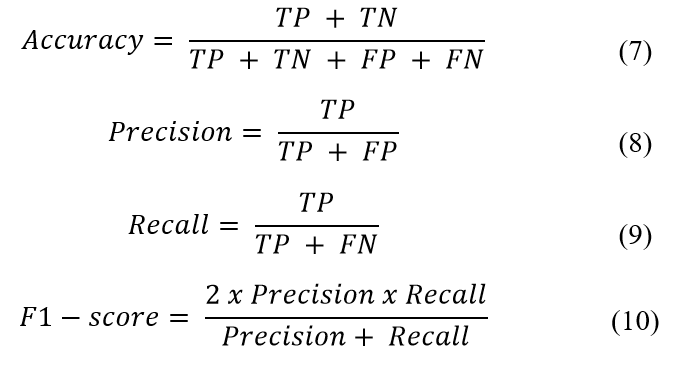

For each experiment, the CNN model is trained to classify MRI images into different stages of AD using the same amount of data, as shown in Table 2, to avoid bias. However, for Experiments III and IV, which involve the GAN, the MRI dataset is expanded before being fed into the classification model. Figure 7 shows the results from training the GAN model using MRI images obtained from the OASIS database. In the figure, the grid image on the left shows real MRI images from the dataset, while the grid image on the right shows the synthetic MRI images generated by the GAN model. The generated images look quite similar to the real images, with most of the image features reproduced by the GAN after learning them during model training. For Experiments III and IV, the MRI images generated by the GAN model are added to the original MRI dataset before the CNN models are trained for the multi-stage classification task. In both experiments, 160 generated MRI sample images were added to the original dataset, accounting for a total of 960 MRI images used for the CNN classification task.

Table 5 summarizes the accuracy, precision, recall, and F1-score obtained from the multi-stage classification task of AD for Experiments I to IV, while Table 6 compares the training accuracies of the four experiments. In general, Experiment I has the lowest values for all four metrics among the 4 experiments, because it uses a basic CNN model without any enhancement. Experiments II and III each apply a single enhancement to the CNN model, and a modest increase in the metrics is seen. For instance, the training accuracy improved from 95.8% in Experiment I to 98.2% in Experiment II, while the training F1-score improved from 90.2% in Experiment I to 96.0% in Experiment III.

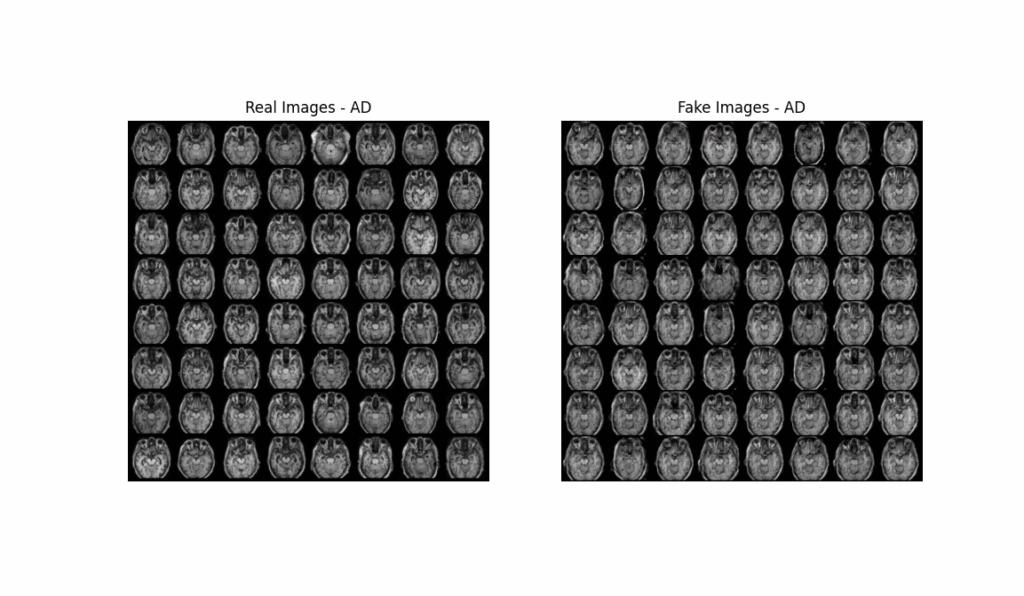

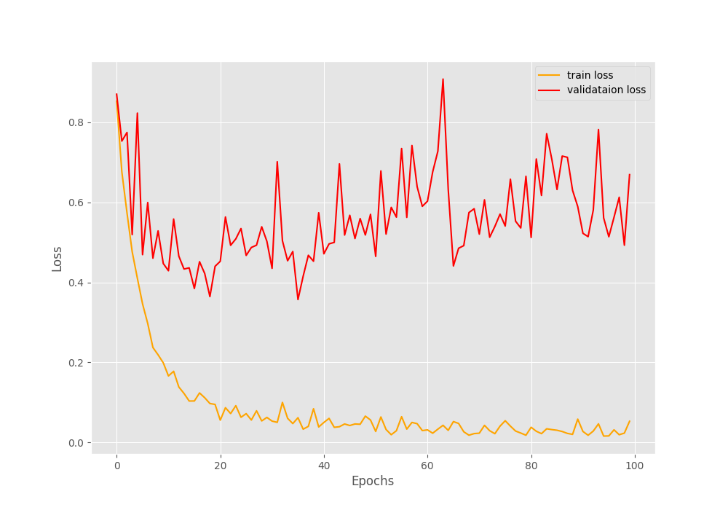

Following this, a further improvement in the results can be observed in Experiment IV, which used the double-enhanced CNN model proposed in this study. From Table 5, the CNN model enhanced with both the attention module and the GAN model produced the highest accuracy, at 99.1% during training and 92.0% during validation. This is also reflected in the accuracy and loss graphs shown in Figures 8 and 9 respectively. In Figure 8, the accuracy achieved by the proposed model matches the results tabulated in Tables 5 and 6, while in Figure 9, the training loss decreases steadily to approximately zero as the number of training epochs increases. However, the graphs also reveal a limitation, namely some overfitting during model training, as the validation performance is generally lower than the training results.

Nevertheless, Experiment IV also achieved the highest precision, recall, and F1-score in Table 5, although its margin over the single-enhanced models is small for these metrics (for example, a training F1-score of 0.9636 compared with 0.9604 in Experiment III). The results suggest that the proposed double-enhanced CNN model achieved the highest performance among the 4 models. Thus, from the tables and graphs shown, it can be concluded that the classification model’s performance improved when a double-enhanced CNN model with both attention module and GAN is utilized, as proposed.

Table 5: Results of performance metrics obtained from Experiments I to IV

| Experiment | Phase | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| Experiment I | Training | 0.9580 | 0.9017 | 0.9020 | 0.9018 |
| Experiment I | Validation | 0.8786 | 0.8384 | 0.8369 | 0.8357 |
| Experiment II | Training | 0.9817 | 0.9587 | 0.9587 | 0.9587 |
| Experiment II | Validation | 0.8917 | 0.8752 | 0.8746 | 0.8726 |
| Experiment III | Training | 0.9743 | 0.9604 | 0.9604 | 0.9604 |
| Experiment III | Validation | 0.8983 | 0.8796 | 0.8803 | 0.8788 |
| Experiment IV | Training | 0.9906 | 0.9636 | 0.9636 | 0.9636 |
| Experiment IV | Validation | 0.9200 | 0.8838 | 0.8828 | 0.8809 |
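Metrics of the kind reported in Table 5 can be computed from a confusion matrix, as in the sketch below. The confusion matrix values are hypothetical and are not taken from the study, and macro averaging over the three classes is an assumption, since the averaging scheme used for Table 5 is not stated.

```python
def macro_metrics(confusion):
    """Compute accuracy and macro-averaged precision, recall, and F1-score.

    confusion[t][p] is the number of samples of true class t predicted as class p.
    """
    n_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n_classes))
    precisions, recalls, f1_scores = [], [], []
    for k in range(n_classes):
        tp = confusion[k][k]
        fp = sum(confusion[t][k] for t in range(n_classes)) - tp  # predicted k, wrong
        fn = sum(confusion[k]) - tp                               # true k, missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denominator = precision + recall
        f1 = 2 * precision * recall / denominator if denominator else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n_classes,
        "recall": sum(recalls) / n_classes,
        "f1": sum(f1_scores) / n_classes,
    }

# Hypothetical counts for 600 validation slices (rows and columns: AD, MCI, NC).
example_confusion = [
    [180, 15, 5],
    [20, 160, 20],
    [5, 15, 180],
]
metrics = macro_metrics(example_confusion)
print({name: round(value, 4) for name, value in metrics.items()})
```

In this hypothetical example most errors involve the MCI class, which mirrors the difficulty of separating MCI from the neighbouring stages.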

Table 6: Comparison of accuracies obtained from different models

| Experiment | Model | Training accuracy |
|---|---|---|
| Experiment I | CNN only | 0.9580 |
| Experiment II | CNN + Attention | 0.9817 |
| Experiment III | CNN + GAN | 0.9743 |
| Experiment IV | CNN + Attention + GAN | 0.9906 |

4. Conclusion

In summary, the results discussed in the previous section indicate that the objective of this study has been achieved. A double-enhanced CNN model using an attention module and a GAN model has improved the multi-stage classification performance of Alzheimer’s disease, reaching a training accuracy of 99% and a validation accuracy of 92%. Compared with the unenhanced and single-enhanced CNN models, the double-enhanced model achieves the highest classification performance. This supports the value of the double-enhanced CNN model in addressing both the challenge in the multi-stage classification of AD, where the MCI stage is difficult to identify, and the issue of limited medical images. Hence, the findings of this study contribute to this research domain by demonstrating the benefit of a double-enhanced classification model for the multi-stage classification of AD. Lastly, future research directions may involve improving the stability of the GAN model for better samples, enhancing the generalization ability of the CNN model to overcome overfitting, and incorporating other types of enhancement modules for better classification performance.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgement

The authors would like to express their gratitude towards Universiti Teknologi Malaysia (UTM) for their financial support for this work under the Fundamental Research Grant (Q.K130000.3843.22H18).

References

- W. P. Ching, S. S. Abdullah, M. I. Shapiai, “Enhancing Multi-Stage Classification of Alzheimer’s Disease with Attention Mechanism”, 2023 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), 230-235, 2023, doi: 10.1109/IICAIET59451.2023.10291792.

- M. Liu, F. Li, H. Yan, K. Wang, Y. Ma, L. Shen, M. Xu, “A multi-model deep convolutional neural network for automatic hippocampus segmentation and classification in Alzheimer’s disease”, Neuroimage, 208, 116459, 2019, doi: 10.1016/j.neuroimage.2019.116459.

- World Health Organization, “Dementia”, https://www.who.int/news-room/fact-sheets/detail/dementia, 2023.

- J. E. Galvin, “Prevention of Alzheimer’s Disease: Lessons Learned and Applied”, J Am Geriatr Soc, 65(10), 2128-2133, doi: 10.1111/jgs.14997.

- C. S. Lee, P. G. Nagy, S. J. Weaver, D. E. Newman-Toker, “Cognitive and system factors contributing to diagnostic errors in radiology”, AJR Am J Roentgenol, 201(3), 611-617, doi: 10.2214/ajr.12.10375.

- Alzheimer’s Association, “2019 Alzheimer’s disease facts and figures”, Alzheimer’s & Dementia, 15(3), 321-387.

- Y. Zhang, Q. Teng, Y. Liu, Y. Liu, X. He, “Diagnosis of Alzheimer’s disease based on regional attention with sMRI gray matter slices”, Journal of Neuroscience Methods, 365, 109376, doi: 10.1016/j.jneumeth.2021.109376.

- J. Liu, M. Li, Y. Luo, S. Yang, W. Li, Y. Bi, “Alzheimer’s disease detection using depthwise separable convolutional neural networks”, Computer Methods and Programs in Biomedicine, 203, 106032, doi: 10.1016/j.cmpb.2021.106032.

- M. A. Ebrahimighahnavieh, S. Luo, R. Chiong, “Deep learning to detect Alzheimer’s disease from neuroimaging: A systematic literature review”, Computer Methods and Programs in Biomedicine, 187, 105242, doi: 10.1016/j.cmpb.2019.105242.

- A. Mehmood, M. Maqsood, M. Bashir, Y. Shuyuan, “A deep siamese convolution neural network for multi-class classification of alzheimer disease”, Brain Sciences, doi: 10.3390/brainsci10020084.

- B. C. Simon, D. Baskar, V. S. Jayanthi, “Alzheimer’s Disease Classification Using Deep Convolutional Neural Network”, 2019 9th International Conference on Advances in Computing and Communication (ICACC), doi: 10.1109/ICACC48162.2019.8986170.

- R. Jain, N. Jain, A. Aggarwal, D. J. Hemanth, “Convolutional neural network based Alzheimer’s disease classification from magnetic resonance brain images”, Cognitive Systems Research, doi: 10.1016/j.cogsys.2018.12.015.

- D. Lin, A. V. Vasilakos, Y. Tang, Y. Yao, “Neural networks for computer-aided diagnosis in medicine: A review”, Neurocomputing, 216, 700-708, doi: 10.1016/j.neucom.2016.08.039.

- R. Cui, M. Liu, Alzheimer’s Disease Neuroimaging Initiative, “RNN-based longitudinal analysis for diagnosis of Alzheimer’s disease”, Computerized Medical Imaging and Graphics, doi: 10.1016/j.compmedimag.2019.01.005.

- J. Koo, J. H. Lee, J. Pyo, Y. Jo, K. Lee, “Exploiting multi-modal features from pre-trained networks for Alzheimer’s dementia recognition”, arXiv preprint arXiv:2009.04070, doi: 10.21437/Interspeech.2020-3153.

- A. Aqeel, A. Hassan, M. A. Khan, S. Rehman, U. Tariq, S. Kadry, A. Majumdar, O. Thinnukool, “A Long Short-Term Memory Biomarker-Based Prediction Framework for Alzheimer’s Disease”, Sensors, doi: 10.3390/s22041475.

- X. Zhou, S. Qiu, P. S. Joshi, C. Xue, R. J. Killiany, A. Z. Mian, S. P. Chin, R. Au, V. B. Kolachalama, “Enhancing magnetic resonance imaging-driven Alzheimer’s disease classification performance using generative adversarial learning”, Alzheimer’s Research & Therapy, doi: 10.1186/s13195-021-00797-5.

- J. Islam, Y. Zhang, “Brain MRI analysis for Alzheimer’s disease diagnosis using an ensemble system of deep convolutional neural networks”, Brain Informatics, doi: 10.1186/s40708-018-0080-3.

- J. N. Cabreza, G. A. Solano, S. A. Ojeda, V. Munar, “Anomaly Detection for Alzheimer’s Disease in Brain MRIs via Unsupervised Generative Adversarial Learning”, 2022 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), doi: 10.1109/ICAIIC54071.2022.9722678.

- E. Jung, M. Luna, S. H. Park, “Conditional Generative Adversarial Network for Predicting 3D Medical Images Affected by Alzheimer’s Diseases”, Predictive Intelligence in Medicine: Third International Workshop, PRIME 2020, Held in Conjunction with MICCAI 2020, Lima, Peru, October 8, 2020, Proceedings, Lima, Peru, doi: 10.1007/978-3-030-59354-4_8.

- R. Kadri, M. Tmar, B. Bouaziz, F. Gargouri, Alzheimer’s Disease Detection Using Deep ECA-ResNet101 Network with DCGAN. In (pp. 376-385). doi: 10.1007/978-3-030-96305-7_35.

- J. Zhang, B. Zheng, A. Gao, X. Feng, D. Liang, X. Long, “A 3D densely connected convolution neural network with connection-wise attention mechanism for Alzheimer’s disease classification”, Magnetic Resonance Imaging, doi: 10.1016/j.mri.2021.02.001.

- S. H. Wang, Q. Zhou, M. Yang, Y. D. Zhang, “ADVIAN: Alzheimer’s Disease VGG-Inspired Attention Network Based on Convolutional Block Attention Module and Multiple Way Data Augmentation”, Frontiers in Aging Neuroscience, 13, doi: 10.3389/fnagi.2021.687456.

- X. Zhang, L. Han, W. Zhu, L. Sun, D. Zhang, “An explainable 3D residual self-attention deep neural network for joint atrophy localization and Alzheimer’s disease diagnosis using structural MRI”, IEEE Journal Of Biomedical And Health Informatics, 26(11), 5289-5297, doi: 10.1109/JBHI.2021.3066832.

- P. J. LaMontagne, T. L. S. Benzinger, J. C. Morris, S. Keefe, R. Hornbeck, C. Xiong, E. Grant, J. Hassenstab, K. Moulder, A. G. Vlassenko, M. E. Raichle, C. Cruchaga, D. Marcus, “OASIS-3: Longitudinal Neuroimaging, Clinical, and Cognitive Dataset for Normal Aging and Alzheimer Disease”, medRxiv, 2019.12.13.19014902, doi: 10.1101/2019.12.13.19014902.

- Phung, Rhee, “A High-Accuracy Model Average Ensemble of Convolutional Neural Networks for Classification of Cloud Image Patches on Small Datasets”, Applied Sciences, 9, 4500, doi: 10.3390/app9214500.

- N. Daldal, Z. Cömert, K. Polat, “Automatic determination of digital modulation types with different noises using Convolutional Neural Network based on time–frequency information”, Applied Soft Computing, 86, 105834, doi: 10.1016/j.asoc.2019.105834.

- J. Deng, W. Dong, R. Socher, L. J. Li, L. Kai, F.F. Li, “ImageNet: A large-scale hierarchical image database”, 2009 IEEE Conference on Computer Vision and Pattern Recognition, doi: 10.1109/CVPR.2009.5206848.

- K. He, X. Zhang, S. Ren, J. Sun, “Deep residual learning for image recognition”, Proceedings of the IEEE conference on computer vision and pattern recognition, doi: 10.48550/arXiv.1512.03385.

- A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, I. Polosukhin, “Attention is all you need”, Advances in neural information processing systems, 30, doi: 10.48550/arXiv.1706.03762

- T. Yang, C. Tong, “Real-time detection network for tiny traffic sign using multi-scale attention module”, Science China Technological Sciences, 65(2), 396-406, doi: 10.1007/s11431-021-1950-9.

- I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, Y. Bengio, “Generative adversarial networks”, Communications of the ACM, 63(11), 139-144, doi: 10.48550/arXiv.1406.2661.

- T. Bai, M. Du, L. Zhang, L. Ren, L. Ruan, Y. Yang, G. Qian, Z. Meng, L. Zhao, M. J. Deen, “A novel Alzheimer’s disease detection approach using GAN-based brain slice image enhancement”, Neurocomputing, 492, 353-369, doi: 10.1016/j.neucom.2022.04.012.
