Forecasting the Weather behind Pa Sak Jolasid Dam using Quantum Machine Learning

Volume 8, Issue 3, Page No 54–62, 2023

Adv. Sci. Technol. Eng. Syst. J. 8(3), 54–62 (2023);

DOI: 10.25046/aj080307


Keywords: Machine Learning, Quantum Machine Learning, Quantum Circuit, Variational Quantum Classifier, Pa Sak Jolasid Dam

This paper extends the idea of creating a Quantum Machine Learning classifier and applies it to real weather data from the weather station behind the Pa Sak Jolasid Dam. A systematic study of classical features and optimizers with different iterations of parametrized circuits is presented. The study of the weather behind the dam is based on weather data from 2016 to 2022 as a training dataset. Classification is one problem that can be effectively solved with quantum gates. There are several types of classifiers in the quantum domain, such as the Quantum Support Vector Machine (QSVM) with kernel approximation, Quantum Neural Networks (QNN), and the Variational Quantum Classifier (VQC). In experiments conducted using Qiskit, an open-source software development kit developed by IBM, QSVM, QNN, and VQC achieved accuracies of 85.3%, 52.1%, and 70.1%, respectively. Testing their performance on a test dataset would be interesting, even in these small examples.

1. Introduction

Programming computers to learn from data is the subfield of artificial intelligence (AI) known as machine learning (ML). In machine learning, support vector machines (SVM) are among the most frequently used classical supervised classification models [1]. An SVM finds a decision boundary, delimited by a pair of parallel hyperplanes, that divides the data points into two classes [2, 3]. Computation can also be carried out at the level of quantum particles, which is known as quantum computing. Quantum computing is computation using quantum mechanical phenomena such as superposition and entanglement. It differs from the computers we use today, which are electronic devices built on transistors with binary states. Whereas digital computing requires data to be encoded into binary numbers, where each bit is in a definite state of 0 or 1, quantum computing uses quantum bits (qubits), which can be in a superposition of the states 0 and 1 simultaneously. Quantum computing requires new algorithms: an algorithm written for a classical computer cannot simply be copied and run on a quantum computer. A well-known example is Shor’s algorithm for the prime factorization of integers, a quantum algorithm that can attack the RSA algorithm and the encryption process of 90% of computer systems worldwide in a short period. Quantum computers operate on qubits using quantum gates and measurements, which change the observed state, and input variables are encoded into quantum states. Quantum algorithms are often probabilistic, in that they provide a valid solution only with a known probability. Quantum machine learning (QML) is an emerging interdisciplinary research field that combines quantum physics and machine learning, using quantum states to help optimize and speed up data processing.
Alongside the growing popularity of QML, the variational quantum classifier (VQC) has been introduced for solving classification problems. At present, IBM has developed a quantum computer open to researchers or anyone interested in using it, called IBM Q Experience, together with a software development kit written in Python called Qiskit, which provides a simulated quantum computer and real 5- and 15-qubit quantum computers to develop and test circuits. In this article, we present an experiment that continues previous experiments [4, 5], which studied the forecast of water release from the dam and the weather forecast behind Pa Sak Jolasid Dam, respectively. Both experiments used classical machine learning and evaluated the models by their accuracy, with satisfactory results.

In this paper, an experiment was performed using Quantum Machine Learning classifiers applied to real data from the weather station located behind the Pa Sak Jolasid Dam. The Pa Sak Jolasid Dam is an earth dam with a clay core, 4,860 meters long and 31.50 meters high. The maximum storage water level is +43.00 MSL, and the water storage capacity is 960 million cubic meters. The total operational budget is 19,230.7900 million baht, and the satellite coordinates are n14.964687, e101.022677 (see Fig. 1). The red dot on the map represents the location of the weather station. Studying weather conditions, especially forecasting rainy days, can benefit water inflow management, and we do so by applying quantum machine learning classifier techniques to the actual weather data described in Table 1. The dataset contains 1743 samples in total, divided into 1220 training samples and 523 testing samples. There are 4 features, corresponding to 4 input qubits, and the label has 2 classes. Table 2 shows a sample of the feature values, which are average wind, average temperature, average pressure, and average humidity, together with the label values.

We present a systematic study of classical features and optimizers with different iterations of the parametrized circuits, based on weather data from 2016 to 2022. Classification is one problem that can be effectively solved with quantum gates. There are several types of classifiers in the quantum domain, such as the Quantum Support Vector Machine (QSVM) with kernel approximation [6-8], Quantum Neural Networks (QNN) [9, 10], and the Variational Quantum Classifier (VQC) [11-14]. In our experiments, QSVM, QNN, and VQC achieved accuracies of 85.3%, 52.1%, and 70.1%, respectively. All of these algorithms were run using Qiskit, an open-source software development kit (SDK) developed by IBM. The experimental results show that QML can be applied to real-world problems: the data are prepared as in classical training and testing, then encoded with a feature map, after which the model is evaluated and optimized with the algorithms above.

In this article, Section 2 reviews the quantum theory applied in this work, followed by the steps and methods in Section 3. Section 4 discusses the experimental results and explains the reasoning. Finally, Section 5 provides a summary of the experiments and recommendations.


The potential applications of quantum machine learning classifiers are therefore promising, and more research in this area should be encouraged.

2. Related work

Since we have the weather dataset for the dam, we can make predictions based on the training data. This is a binary classification problem with an input vector x and binary output y in {0, 1}. The goal is to build a quantum circuit that produces a quantum state based on the following study.

2.1. Quantum Computing

What exactly is a quantum computer then? In a nutshell, it could be described as a physical implementation of n qubits with precise state evolution control. A quantum algorithm, according to this definition of quantum computers, is a controlled manipulation of a quantum system followed by a measurement to obtain information from the system. This basically means that a quantum computer can be thought of as a special kind of sampling device. However, because it is a quantum state, the configurations of the experiments are very important. Any quantum evolution can be approximated by a series of elementary manipulations, known as quantum gates, according to a theorem in quantum information [15]. Quantum circuits of these quantum gates are the basis for many quantum algorithms. The idea of a qubit came from upgrading classical bits [16, 17], which are 0 or 1, to a quantum state.

However, what are qubits? Because it is a two-level system defined on $\mathbb{C}^2$, a qubit is frequently referred to as the simplest possible quantum system. Its state can be written as

$$|\psi\rangle = \alpha|0\rangle + \beta|1\rangle \qquad (1)$$

with $\alpha, \beta \in \mathbb{C}$ and $|\alpha|^2 + |\beta|^2 = 1$, where $|0\rangle$ and $|1\rangle$ are hardware-defined orthonormal states known as computational basis states. The qubit is significant because it can be in a superposition: rather than being either $|0\rangle$ or $|1\rangle$ as a classical bit would be, it can be a mixture of both at the same time. Using tensor products, we can generalize this to include n unentangled qubits.

Figure 1: Pa Sak Jolasid Dam, Coordinates: n14.964687, e101.022677.

Table 1. Dataset Attributions

Table 2. Partial Dataset


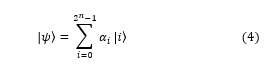

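where $|q_i\rangle$ denotes the state of the $i$-th qubit, so that the joint state is the product $|q_1\rangle \otimes \cdots \otimes |q_n\rangle$. However, the state would no longer be separable if the qubits were entangled. Since every qubit can be either $|0\rangle$ or $|1\rangle$, the general state is

$$|\psi\rangle = \sum_{q_1, \ldots, q_n \in \{0,1\}} c_{q_1 \ldots q_n} |q_1 \ldots q_n\rangle$$

with $c_{q_1 \ldots q_n} \in \mathbb{C}$ and $\sum_{q_1, \ldots, q_n} |c_{q_1 \ldots q_n}|^2 = 1$, where we use the abbreviated notation $|q_1 \ldots q_n\rangle := |q_1\rangle \otimes \cdots \otimes |q_n\rangle$. To make the notation more elegant, the basis states can be relabeled as $|0 \ldots 0\rangle \leftrightarrow |0\rangle, \ldots, |1 \ldots 1\rangle \leftrightarrow |2^n - 1\rangle$, giving the straightforward expression $|\psi\rangle = \sum_{i=0}^{2^n - 1} c_i |i\rangle$. This allows us to translate the notation from binary numbers to integers.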

As a result, $\{|0\rangle, \ldots, |2^n - 1\rangle\}$ serves as the computational basis for n qubits. Since there are $2^n$ distinct bit strings, one requires $2^n$ amplitudes to describe the state of n qubits. In other words, quantum information is “larger” than classical information: the information stored in a quantum state of n qubits is exponential in n, whereas classical information is linear in n. This is what suggests the possibility of a quantum advantage.
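The growth from n qubits to $2^n$ amplitudes, and the translation from bit strings to integer labels, can be checked numerically. The following is a minimal sketch using NumPy rather than a quantum SDK; the helper name `product_state` and the example amplitudes are illustrative assumptions, not part of the original study.

```python
import numpy as np

# Computational basis states of a single qubit.
ZERO = np.array([1.0, 0.0], dtype=complex)
ONE = np.array([0.0, 1.0], dtype=complex)


def product_state(qubits):
    """Tensor (Kronecker) product of a list of single-qubit states.

    The first qubit in the list becomes the most significant bit.
    """
    state = np.array([1.0], dtype=complex)
    for q in qubits:
        state = np.kron(state, q)
    return state


# A normalized single-qubit superposition alpha|0> + beta|1>.
alpha, beta = 1 / np.sqrt(2), 1j / np.sqrt(2)
psi = alpha * ZERO + beta * ONE

# Four unentangled qubits are described by 2**4 = 16 amplitudes.
state = product_state([psi, ZERO, ONE, psi])
n_amplitudes = state.size
norm = float(np.sum(np.abs(state) ** 2))

# The bit string 0101 corresponds to the integer label 5.
label = int("0101", 2)
basis_0101 = product_state([ZERO, ONE, ZERO, ONE])
```

Only product states are built here; an entangled state would be a length-16 vector that cannot be produced by `product_state` from any choice of single-qubit inputs.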

2.2. Quantum Circuit

We must begin by examining quantum gates in order to construct a quantum algorithm or quantum circuit [18, 19, 20], as mentioned earlier. Unitary transformations are the means by which quantum gates, or rather quantum logic gates, are produced. A straightforward transformation can serve as a quick reminder of what this means.

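$$U : \mathcal{H}_1 \to \mathcal{H}_2, \qquad |\psi\rangle \mapsto |\psi'\rangle = U|\psi\rangle$$

where $\mathcal{H}_1$ and $\mathcal{H}_2$ are two vector spaces and $U$ is a unitary operator. By “unitary,” we mean that the operator is linear and that its Hermitian conjugate is its inverse, $U^\dagger = U^{-1}$. This is important because we can use it to show, for example, that

$$\langle\psi'|\psi'\rangle = \langle\psi|U^\dagger U|\psi\rangle = \langle\psi|\psi\rangle$$

so that, if $|\psi\rangle$ is normalized, then by construction $|\psi'\rangle$ is normalized as well.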

2.2.1 A single Qubit

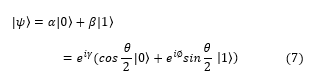

Returning to quantum gates, recall from equation (1) that a measurement finds the qubit either in the state $|0\rangle$, with probability $|\alpha|^2$, or in the state $|1\rangle$, with probability $|\beta|^2$. Formally, single-qubit gates are described by 2 × 2 unitary transformations. We can begin by considering the X gate, which functions as the quantum equivalent of the classical NOT gate.

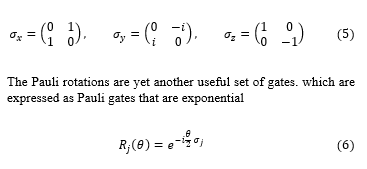

and the reverse. This matrix is easily identifiable as one of the Pauli matrices, which are unitary. As a result, we know that we can have at least X, Y, and Z gates, whose unitary operators are the Pauli matrices $\sigma_j$,

where $j \in \{x, y, z\}$. Any single-qubit quantum state can be parametrized by a global phase, an azimuthal angle, and a polar angle.

2.2.2 Multi Qubit

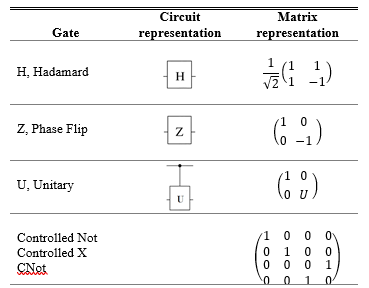

The controlled-U gate is introduced because some gates act on multiple qubits simultaneously; here U can be any single-qubit unitary gate. For instance, the CNOT gate is obtained by setting U = X: the NOT (X) operation is applied to the target qubit when the first (control) qubit is in state $|1\rangle$; otherwise, nothing changes. Table 3 shows a variety of quantum gates, their circuit symbols, and their matrix representations. In a controlled gate, U is a general unitary operator. The index j runs over (x, y, z), and $\sigma_j$ denotes the corresponding Pauli matrix, Eq. (5).
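The single-qubit Pauli gates and the controlled-U construction can be written out as small matrices. The sketch below uses NumPy; the helper names `is_unitary` and `controlled` are illustrative assumptions, and the basis ordering |00⟩, |01⟩, |10⟩, |11⟩ (first qubit as control) is a convention chosen for this example.

```python
import numpy as np

# Identity and Pauli matrices, which act as the I, X, Y, and Z gates.
I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def is_unitary(u):
    """Check that the Hermitian conjugate of u is its inverse."""
    return np.allclose(u.conj().T @ u, np.eye(u.shape[0]))


def controlled(u):
    """Two-qubit controlled-U with the first qubit as control.

    Built as |0><0| (x) I + |1><1| (x) U.
    """
    p0 = np.array([[1, 0], [0, 0]], dtype=complex)
    p1 = np.array([[0, 0], [0, 1]], dtype=complex)
    return np.kron(p0, I2) + np.kron(p1, u)


# Setting U = X gives the CNOT gate.
CNOT = controlled(X)

# Basis order: |00>, |01>, |10>, |11>.
ket01 = np.array([0, 1, 0, 0], dtype=complex)
ket10 = np.array([0, 0, 1, 0], dtype=complex)

out10 = CNOT @ ket10  # control is |1>, so the target flips: |11>
out01 = CNOT @ ket01  # control is |0>, so nothing changes: |01>
```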

Table 3. Summary of all the gates in circuit and matrix representation.

2.3. Validation and Measurement

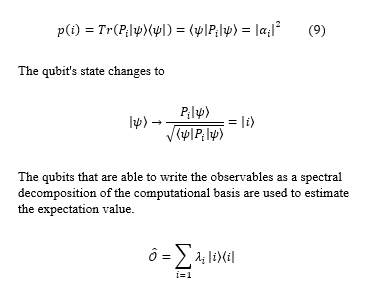

The measurement process is the final step in the theory of quantum computers regarding the quantum circuits that make up a quantum evolution [20]. From quantum mechanics, the projectors onto the eigenspaces provide the probability of measuring a state. The probability of measuring $i \in \{0, 1\}$ is

$$p(i) = \langle\psi|P_i|\psi\rangle$$

For a qubit, an observable can be written as a spectral decomposition in the computational basis, which is used to estimate its expectation value,

$$\langle O \rangle = \sum_{i} \lambda_i \langle\psi|P_i|\psi\rangle$$

where $P_i = |i\rangle\langle i|$ is the projector onto the basis state $|i\rangle$ and $\lambda_i$ is the corresponding eigenvalue. Using a Z gate as the observable yields an eigenvalue of +1 for state $|0\rangle$ and -1 for state $|1\rangle$, so that we can computationally determine which state it is in (9).

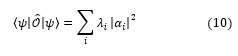

All that is required to determine the expectation value is therefore an estimate of the state’s amplitudes, and statistics can be used to estimate these directly from measurements. A random Bernoulli variable $y_{ij}$ is introduced, where $P(y_{ij} = 0) = 1 - p_i$ and $P(y_{ij} = 1) = p_i$ [21]. If we repeatedly prepare the state, measure it in the computational basis, and collect S samples $(y_{i1}, \ldots, y_{iS})$, the frequentist estimator can estimate $p_i$ by

$$\hat{p}_i = \frac{1}{S} \sum_{j=1}^{S} y_{ij}$$
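The sampling procedure described above can be imitated classically to see how the estimate behaves. This is a minimal sketch using only the Python standard library: the function names, the fixed seed, the shot count, and the example probability 0.25 are all assumptions made for illustration, and a pseudo-random generator stands in for repeated state preparation and measurement.

```python
import random


def estimate_p1(p1, shots, seed=0):
    """Frequentist estimate of P(outcome = 1) from `shots` Bernoulli samples."""
    rng = random.Random(seed)
    samples = [1 if rng.random() < p1 else 0 for _ in range(shots)]
    return sum(samples) / shots


def z_expectation(p1_hat):
    """Expectation of Z: eigenvalue +1 for outcome 0 and -1 for outcome 1."""
    return (1 - p1_hat) - p1_hat


# Hypothetical qubit with |beta|^2 = 0.25, i.e. P(1) = 0.25 and <Z> = 0.5.
beta_sq = 0.25
p_hat = estimate_p1(beta_sq, shots=20000)
z_hat = z_expectation(p_hat)
```

The statistical error of the estimate shrinks roughly as the inverse square root of the number of samples, which is why the number of repetitions matters in practice.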

2.4. Quantum Support Vector Machine (QSVM)

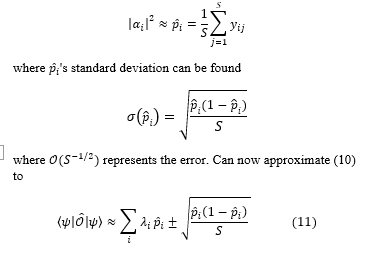

To efficiently compute kernel inputs, the quantum support vector machine QSVM and the quantum kernel estimator (QSVM-Kernel) [20, 22] make use of the quantum state space as a feature space. By applying a quantum circuit to the initial state , this algorithm nonlinearly maps the classical data x to the quantum state of n qubits:

The 2n-dimensional feature space created by the quantum circuit (where n is the number of qubits) is challenging to classically estimate. There are two consecutive layers in this circuit.

![]()

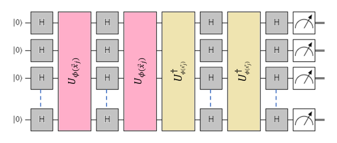

Where is a unitary operator that encodes the traditional input data, and H is a Hadamard gate Fig. 2.

Figure 2. The Circuit of QSVM

During the training phase, the kernel entries are evaluated for the training data and used to find a separating hyperplane. During the test phase, the kernel entries are evaluated between the new data x and the training data, and the new data x is then classified according to the separating hyperplane. The quantum computer evaluates the kernel entries, while a classical computer, as in a traditional SVM, performs the data classification and the optimization of the separating hyperplane.
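The division of labor between kernel evaluation and classical optimization can be illustrated with a small state-vector simulation. The sketch below is an assumption-laden stand-in: it uses a simple angle-encoding product feature map (each feature sets one qubit to cos(x/2)|0⟩ + sin(x/2)|1⟩) instead of the entangling feature map used in the paper, and the sample values are invented. The kernel entry is taken as the squared overlap of two feature states.

```python
import numpy as np


def feature_state(x):
    """Angle-encode each feature into one qubit and take the tensor product."""
    state = np.array([1.0])
    for xk in x:
        qubit = np.array([np.cos(xk / 2), np.sin(xk / 2)])
        state = np.kron(state, qubit)
    return state


def kernel_matrix(A, B):
    """Kernel entries K[i, j] = |<Phi(a_i)|Phi(b_j)>|^2."""
    SA = np.array([feature_state(a) for a in A])
    SB = np.array([feature_state(b) for b in B])
    return np.abs(SA @ SB.conj().T) ** 2


# Invented 4-feature samples, already scaled to angles.
X_train = np.array([
    [0.1, 1.2, 2.0, 0.5],
    [3.0, 0.4, 1.1, 2.2],
    [0.2, 1.0, 2.1, 0.6],
])
X_new = np.array([[0.15, 1.1, 2.0, 0.55]])

K_train = kernel_matrix(X_train, X_train)  # training phase
K_test = kernel_matrix(X_new, X_train)     # test phase: new data vs. training data
```

Matrices of this form could then be handed to a classical SVM that accepts precomputed kernels, for example scikit-learn's `SVC(kernel="precomputed")`, which performs the hyperplane optimization on the classical side.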

2.5. Variational Quantum Circuit

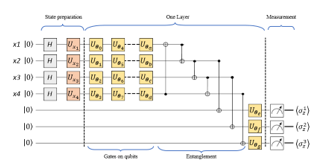

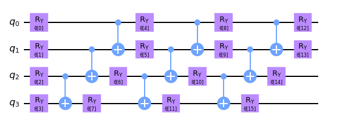

A variational circuit with four features is proposed in [22] to classify the dataset (Fig. 3). The variational circuit performs the following operations. The circuit’s four qubits are initialized in the state $|0\rangle$. Each qubit is then placed in a superposition of $|0\rangle$ and $|1\rangle$ by applying a Hadamard gate to it. Then, a unitary matrix designed for state preparation is applied to each qubit. In this way, the classical data (bits) are encoded into qubits.

Figure 3. Variational Quantum Circuit

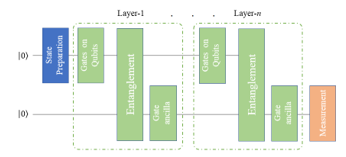

Figure 4. The Variational Quantum Circuit Architecture

Following state preparation, the variational circuit is built from multiple layers of interleaved rotation gates acting on the data and auxiliary qubits. Optimization is used to adjust the parameters. Fig. 4 shows the initial seven-layer implementation of the circuit as well as the architecture of the variational circuit model. The class label is obtained by processing the resulting qubits and measuring the auxiliary qubits.

2.6. Quantum Amplitude Estimation (QAE)

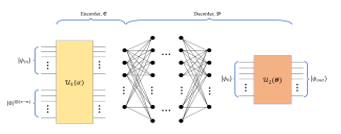

In [20, 23], a hybrid quantum autoencoder (HQA) variant of the Quantum Amplitude Estimation (QAE) algorithm was proposed [24, 25]. Quantum neural networks (QNNs) based on parameterized quantum circuits (PQC) are used in this model, which incorporates both classical and quantum machine learning. The model’s overall structure consists of an encoder, which maps a quantum state from a Hilbert space to a subset of a real vector space V of dimension v = dim(V), and a decoder, which does the opposite. The functional forms of the encoder and decoder are specified, but the models themselves are not. As depicted in Fig. 5, the encoder is a quantum circuit controlled by a vector α. The circuit receives an input state and applies the unitary U1(α) to the system that combines the input state with (v − n) auxiliary qubits.

Figure 5. The Quantum Amplitude Estimation Architecture

3. Methodology

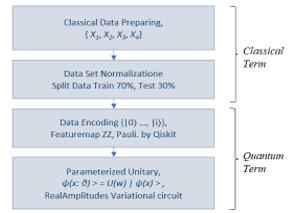

The weather dataset from the Thai Meteorological Department was used in this research. The dataset consists of 1743 samples collected from 2016 to 2022, with 1220 of them designated for training and 523 for testing. The data comprise 4 feature variables and 2 label classes, as shown in Tables 1 and 2. There are fairly standard approaches to preprocessing; however, these strategies are generally not suitable for preparing real datasets for quantum classifiers. This study therefore proposes a preprocessing strategy, depicted in Fig. 6, which encodes the data before the QML algorithm uses it. Two QML classifiers are used in this article:

- A quantum support vector machine

- A quantum neural network (also known as a variational classifier)

Both QML classifiers involve the preparation of feature maps, the implementation of variational circuits, and measurement. The study analyzed the optimizer, the feature map, the depth of the feature map, and the depth of the variational circuit [26] to understand which settings make these models perform best, and attempted to determine whether the new information can be effectively condensed.

We used the Qiskit framework for quantum computing. A typical quantum machine learning model consists of two parts, as shown in Fig. 6: a classical part for pre- and post-processing data and a quantum part for leveraging the power of quantum mechanics to simplify certain calculations.

Figure 6: Experimental procedures

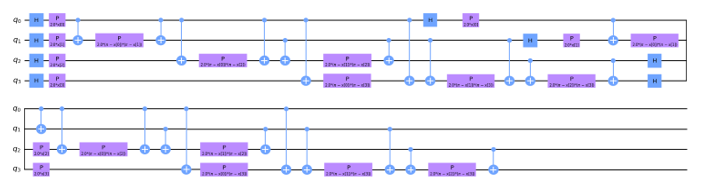

Figure 7: QSVM Featuremaps depths (2).

Figure 8: QSVM RealAmplitudes Variational circuit depths (3).

Figure 9: QNN Featuremaps and RealAmplitudes Variational

This study’s experimental design is depicted in Fig. 6. One reason for utilizing quantum machine learning is the challenge of training very complex machine learning models on large data sets.

3.1. Data preparing and normalization

We shuffle the data to ensure randomness, remove less relevant features, and normalize the features to the range 0 to 2π or 0 to 1 so that the Hilbert space is used properly. The data is divided into a training set for model building and a test set for model testing, with the test set size kept at 30% of the total dataset. This is a common practice in traditional machine learning methods such as neural networks and support vector machines.
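The preparation steps above (shuffle, rescale, 70/30 split) can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: the function name `preprocess`, its parameters, and the seeds are hypothetical, and the data are random placeholders with the same shape as the dataset described in the paper (1743 samples, 4 features, 2 classes), not the actual weather records.

```python
import numpy as np


def preprocess(features, labels, test_fraction=0.3, upper=2 * np.pi, seed=42):
    """Shuffle, min-max scale each feature to [0, upper], and split train/test."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(features))
    features, labels = features[order], labels[order]

    # Min-max scaling per feature column; constant columns are left at 0.
    lo, hi = features.min(axis=0), features.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)
    scaled = (features - lo) / span * upper

    n_test = int(round(test_fraction * len(features)))
    n_train = len(features) - n_test
    return scaled[:n_train], labels[:n_train], scaled[n_train:], labels[n_train:]


# Random placeholder data with the shape described in the text.
rng = np.random.default_rng(0)
X = rng.normal(size=(1743, 4))
y = rng.integers(0, 2, size=1743)

X_tr, y_tr, X_te, y_te = preprocess(X, y)
```

With 1743 samples and a 30% test fraction, this split reproduces the 1220/523 sizes reported in the text. Passing `upper=1.0` gives the 0 to 1 range instead of 0 to 2π.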

3.2. Data encoding

Data encoding, or state preparation, in quantum feature mapping is similar to a classical feature map in that it helps translate data into a different space. In the case of quantum feature mapping, the data is translated into quantum states to be input into an algorithm. The result is a quantum circuit whose parameters depend on the input data, which in our case is the classical weather data from behind the dam.

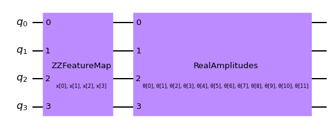

Variational quantum circuits are notable in that their parameters can be optimized using classical methods. We utilized two types of feature maps available in the Qiskit circuit library, namely ZZFeatureMap and PauliFeatureMap. To evaluate the performance of different models [27, 28], we varied the depths of these feature maps.
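For orientation, the second-order Pauli-Z expansion that underlies the ZZ feature map can be written as below. This follows the general construction of quantum-enhanced feature spaces with the default data-mapping functions as we understand them; the exact scaling factors inside a given library implementation may differ, so the expression should be read as a sketch rather than a specification. Here $n$ is the number of qubits (features), $Z_k$ is the Pauli-Z operator on qubit $k$, and $d$ is the feature map depth.

```latex
U_{\Phi(x)} = \exp\!\left( i \sum_{S \subseteq [n],\; |S| \le 2} \phi_S(x) \prod_{k \in S} Z_k \right),
\qquad
\phi_{\{k\}}(x) = x_k,
\qquad
\phi_{\{k,l\}}(x) = (\pi - x_k)(\pi - x_l),

|\Phi(x)\rangle = \left( U_{\Phi(x)}\, H^{\otimes n} \right)^{d} |0\rangle^{\otimes n}
```

The two-qubit terms are what introduce entanglement between features, and raising $d$ repeats the whole encoding block.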

3.3. Variational quantum circuit

The model circuit is constructed from gates that evolve the input state. It is based on unitary operations and depends on external parameters that can be adjusted. Given a prepared state $|\varphi(x)\rangle$, the model circuit U(w) maps it to another vector,

$$|\psi(x; w)\rangle = U(w)|\varphi(x)\rangle$$

where U(w) is composed of a series of unitary gates.
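A parametrized model circuit of this kind can be simulated directly for a very small case. The sketch below is a two-qubit NumPy illustration in the spirit of a real-amplitudes ansatz (layers of RY rotations followed by a CNOT entangler, then a final rotation layer); it is an assumption made for illustration and not the four-qubit circuit used in the experiments. The function names, the random weights, and the choice of reading the first qubit as the class label are hypothetical.

```python
import numpy as np


def ry(theta):
    """Single-qubit RY rotation, a real-valued unitary."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])


# CNOT with the first qubit as control; basis order |00>, |01>, |10>, |11>.
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
], dtype=float)


def model_circuit(weights):
    """Build U(w) for 2 qubits from weights of shape (depth + 1, 2)."""
    weights = np.asarray(weights, dtype=float)
    u = np.eye(4)
    for layer in weights[:-1]:
        rotations = np.kron(ry(layer[0]), ry(layer[1]))
        u = CNOT @ rotations @ u  # rotation layer first, then the entangler
    final = np.kron(ry(weights[-1][0]), ry(weights[-1][1]))
    return final @ u


def prob_first_qubit_one(state, weights):
    """Probability of measuring the first qubit as 1 after applying U(w)."""
    out = model_circuit(weights) @ state
    probs = np.abs(out) ** 2
    return float(probs[2] + probs[3])


rng = np.random.default_rng(1)
w = rng.uniform(0, 2 * np.pi, size=(4, 2))  # depth 3 gives 4 rotation layers
phi = np.array([1.0, 0.0, 0.0, 0.0])        # stand-in for a prepared state
U = model_circuit(w)
p1 = prob_first_qubit_one(phi, w)
```

A classical optimizer would adjust `w` to reduce a loss computed from probabilities like `p1`, which is the hybrid loop the text describes. Increasing the depth adds one more row of trainable weights per layer.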

4. Results & Discussion

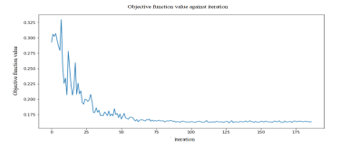

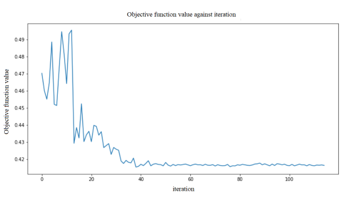

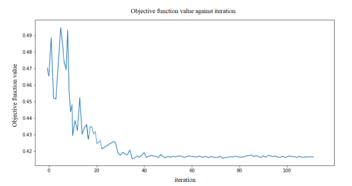

In this research, we make use of the ZZFeatureMap and PauliFeatureMap feature maps from the Qiskit circuit library. To test the effectiveness of the various models, we varied the feature map depth (a depth of 2 is shown in Fig. 7). Increasing the depth of a feature map repeats the encoding circuit and introduces more entanglement into the model. Once the feature map is applied, a classifier can find a hyperplane that divides the input data, and a quantum computer can evaluate the data in this feature space. We then used the RealAmplitudes variational circuit from Qiskit. Increasing the depth of the variational circuit introduces more trainable parameters into the model, as shown in Fig. 8. The QNN was built from the feature map and the RealAmplitudes variational form, as shown in Fig. 9. The objective function value per iteration is shown in Fig. 10 for QSVM, Fig. 11 for QNN, and Fig. 12 for VQC; QSVM reached a lower objective value than QNN and VQC.

Figure 10: QSVM objective function value per iteration.

Figure 11: QNN objective function value per iteration.

Table 4 presents the performance of our models: QSVM, QNN, and VQC. The QSVM obtained an accuracy of 85.3%, while QNN and VQC recorded 52.1% and 70.1% accuracy, respectively. The ZZFeatureMap encoding with the RealAmplitudes variational form was applied to the weather dataset, with a depth of 3 layers and 300 epochs. The validation accuracy achieved is depicted in Figure 8. Despite the use of three separate attention processes in conjunction with the VQC model, the results of this investigation were satisfactory.

Figure 12: VQC objective function value per iteration.

Table 4. Classifier test score

| Classifier | Score |
| --- | --- |
| QSVM | 0.853 |
| QNN | 0.521 |
| VQC | 0.701 |

5. Conclusion

In this article, we implemented three quantum models using the RealAmplitudes variational form. We used ZZFeatureMap encoding in our evaluation, but we acknowledge that this should not be the only option considered when improving a quantum framework. Furthermore, state preparation is just one aspect of QML algorithms that can be improved. We suggested a pre-processing approach to improve the quantum state preparation for VQC. Our results showed accuracies of 85.3%, 52.1%, and 70.1% for QSVM, QNN, and VQC, respectively. According to our findings, QSVM had the best performance, followed by VQC and QNN. We used ZZFeatureMap with a depth of two and the RealAmplitudes variational form with a depth of three. Moving forward, we plan to explore different data encoding techniques, such as RealAmplitudes, amplitude encoding, angle encoding, or other encoding methods, to enhance the QML models, and to increase the number of features to improve performance relative to established models and cutting-edge techniques. The study was based on a relatively small data set, which may influence the assessment of model effectiveness. We did not discuss data pre-processing techniques in detail because we are primarily interested in the efficiency of quantum models.

Abbreviation

QML Quantum Machine Learning

QSVM Quantum Support Vector Machine

QNN Quantum Neural Networks

VQC Variational Quantum Classifier

SDK Software Development Kit

HQA Hybrid Quantum Autoencoder

QAE Quantum Amplitude Estimation

PQC Parameterized Quantum Circuits

Conflicts of Interest

The authors declare that there are no conflicts of interest.

Acknowledgements

The authors would like to acknowledge the support from the Computer Engineering Program, College of Innovative Technology and Engineering, Dhurakij Pundit University, Bangkok, and the provision of data by the Thai Meteorological Department.

References

- Christopher J.C. Burges, “A Tutorial on Support Vector Machines for Pattern Recognition”, Data Mining and Knowledge Discovery, volume 2, pages 121–167 (1998).

- Chaiyaporn Khemapatapan and Burada Nothavasi, “Service Oriented Classifying of SMS Message”, 2011 Eighth International Joint Conference on Computer Science and Software Engineering (JCSSE), May 2011, Thailand, pp101-106.

- Chaiyaporn Khemapatapan, “2-Stage Soft Defending Scheme Against DDOS Attack Over SDN Based On NB and SVM”, Proceeding of 8th International Conference from Scientific Computing to Computational Engineering, Jul 4-7, 2018, Athens Greece, pp.1-8.

- Thammanoon Thepsena and Chaiyaporn Khemapatapan “Reservoir Release Forecasting by Artificial Neural Network at Pa Sak Jolasid Dam” International STEM Education Conference (iSTEM-Ed 2022), July 6-8, 2022

- Thammanoon Thepsena, Narongdech Keeratipranon and Chaiyaporn Khemapatapan ” Rainfall Prediction over Pasak Jolasid Dam By Machine Learning Techniques ” National Conference on Wellness Management: Tourism, Technology, and Community (H.E.A.T Congress 2022), August 18-20, 2022

- Chaiyaporn Khemapatapan, Thammanoon Thepsena and Aomduan Jeamaon, “A Classifiers Experimentation with Quantum Machine Learning”, The 2023 International Electrical Engineering Congress (iEECON2023), 2023.

- Valentin Heyraud, Zejian Li, Zakari Denis, Alexandre Le Boité, and Cristiano Ciuti, “Noisy quantum kernel machines.”, Phys. Rev. A 106, 052421 – Published 18 November 2022

- Shehab, Omar ; Krunic, Zoran ; Floether, Frederik ; Seegan, George ; Earnest-Noble, Nate, “Quantum kernels for electronic health records classification.”, APS March Meeting 2022, abstract id.S37.006

- W. Li, Z. Lu and D. Deng, “Quantum neural network classifiers: A tutorial”, SciPost Phys. Lect. Notes 61 (2022).

- S. Laokondee, P. Chongstitvatana, “Quantum Neural Network model for Token allocation for Course Bidding”, Computer Science, Physics, 2021 (ICSEC).

- Elham Torabian, Roman V. Krems, “Optimal quantum kernels for small data classification”, Quantum Physics, submitted on 25 Mar 2022.

- S. Aaronson and A. Ambainis, “Forrelation: A problem that optimally separates quantum from classical computing”, SIAM J. Comput. 47, 982 (2018).

- L. Zhou, S. T. Wang, S. Choi, H. Pichler and M. D. Lukin, “Quantum Approximate Optimization Algorithm: Performance, Mechanism, and Implementation on near term device”, Physical Review X, vol. 10, June 2020.

- E. Farhi, S. Gutmann and J. Goldstone, “A quantum approximate optimization algorithm”, Nov 2014.

- S. Nath Pushpak, S. Jain, “An Introduction to Quantum Machine Learning Techniques”, 2021 9th International Conference on Reliability, Infocom Technologies and Optimization, Amity University, Noida, India, 2021.

- Valentin Heyraud, Zejian Li, Zakari Denis, Alexandre Le Boité, and Cristiano Ciuti, “Noisy quantum kernel machines”, Phys. Rev. A 106, 052421, published 18 November 2022, DOI:10.1103/PhysRevA.106.052421

- Shehab, Omar; Krunic, Zoran; Floether, Frederik; Seegan, George; Earnest-Noble, Nate, “Quantum kernels for electronic health records classification”, APS March Meeting 2022, abstract id. S37.006, DOI:10.1109/TQE.2022.3176806

- W. Li, Z. Lu and D. Deng, “Quantum neural network classifiers: A tutorial”, SciPost Phys. Lect. Notes 61 (2022), DOI:10.21468/SciPostPhysLectNotes.61

- S. Aaronson and A. Ambainis, “Forrelation: A problem that optimally separates quantum from classical computing”, SIAM J. Comput. 47, 982 (2018), DOI:10.1137/15M1050902

- L. Zhou, S. T. Wang, S. Choi, H. Pichler and M. D. Lukin, “Quantum Approximate Optimization Algorithm: Performance, Mechanism, and Implementation on near term device”, Physical Review X, vol. 10, June 2020, DOI:10.1103/PhysRevX.10.021067

- E. Farhi, S. Gutmann and J. Goldstone, “A quantum approximate optimization algorithm”, Nov 2014, DOI:10.48550/arXiv.1411.4028

- Maria Schuld and Nathan Killoran “Quantum Machine Learning in Feature Hilbert Spaces.”, Phys. Rev. Lett. 122, 040504 – Published 1 February 2019, DOI:10.1103/PhysRevLett.122.040504

- Vojtech Havlícek, Antonio D. Córcoles, Kristan Temme, Aram W. Harrow, Abhinav Kandala, Jerry M. Chow & Jay M. Gambetta, “Supervised learning with quantum-enhanced feature spaces.”, Nature volume 567, pages209–212, 2019, DOI:10.1038/s41586-019-0980-2

- M. L. LaBorde, A. C. Rogers, J. P. Dowling, “Finding broken gates in quantum circuits: exploiting hybrid machine learning”, Quantum Information Processing 19, 8, Aug 2020, DOI:10.1007/s11128-020-02729-y

- S. L. Wu, S. Sun, W. Guan, C. Zhou, J. Chan, C. L. Cheng, T. Pham, Y. Qian, A. Z. Wang, R. Zhang, et al., “Application of quantum machine learning using the quantum kernel algorithm on high energy physics analysis at the LHC”, 2021, DOI:10.1103/PhysRevResearch.3.033221

- A. Chalumuri, R. Kune, B. S. Manoj, A hybrid classical-quantum approach for multi-class classification, Quantum Information Processing 20, 3 mar 2021, DOI:10.1007/s11128-021-03029-9

- G. Brassard, P. Høyer, M. Mosca, A. Tapp, Quantum amplitude amplification and estimation, Quantum Computation and Information 2002, P.53–74, DOI:10.1090/conm/305/05215

- Danyai M., Daniel S., Begonya G., “Variational Quantum Classifier for Binary Classification: Real vs Synthetic Dataset”, IEEE Access, DOI:10.1109/Access.2021.3139323


- Tinofirei Museba, Koenraad Vanhoof, "An Adaptive Heterogeneous Ensemble Learning Model for Credit Card Fraud Detection", Advances in Science, Technology and Engineering Systems Journal, vol. 9, no. 3, pp. 01–11, 2024. doi: 10.25046/aj090301

- Toya Acharya, Annamalai Annamalai, Mohamed F Chouikha, "Optimizing the Performance of Network Anomaly Detection Using Bidirectional Long Short-Term Memory (Bi-LSTM) and Over-sampling for Imbalance Network Traffic Data", Advances in Science, Technology and Engineering Systems Journal, vol. 8, no. 6, pp. 144–154, 2023. doi: 10.25046/aj080614

- Renhe Chi, "Comparative Study of J48 Decision Tree and CART Algorithm for Liver Cancer Symptom Analysis Using Data from Carnegie Mellon University", Advances in Science, Technology and Engineering Systems Journal, vol. 8, no. 6, pp. 57–64, 2023. doi: 10.25046/aj080607

- Ng Kah Kit, Hafeez Ullah Amin, Kher Hui Ng, Jessica Price, Ahmad Rauf Subhani, "EEG Feature Extraction based on Fast Fourier Transform and Wavelet Analysis for Classification of Mental Stress Levels using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 8, no. 6, pp. 46–56, 2023. doi: 10.25046/aj080606

- Kitipoth Wasayangkool, Kanabadee Srisomboon, Chatree Mahatthanajatuphat, Wilaiporn Lee, "Accuracy Improvement-Based Wireless Sensor Estimation Technique with Machine Learning Algorithms for Volume Estimation on the Sealed Box", Advances in Science, Technology and Engineering Systems Journal, vol. 8, no. 3, pp. 108–117, 2023. doi: 10.25046/aj080313

- Der-Jiun Pang, "Hybrid Machine Learning Model Performance in IT Project Cost and Duration Prediction", Advances in Science, Technology and Engineering Systems Journal, vol. 8, no. 2, pp. 108–115, 2023. doi: 10.25046/aj080212

- Paulo Gustavo Quinan, Issa Traoré, Isaac Woungang, Ujwal Reddy Gondhi, Chenyang Nie, "Hybrid Intrusion Detection Using the AEN Graph Model", Advances in Science, Technology and Engineering Systems Journal, vol. 8, no. 2, pp. 44–63, 2023. doi: 10.25046/aj080206

- Ossama Embarak, "Multi-Layered Machine Learning Model For Mining Learners Academic Performance", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 850–861, 2021. doi: 10.25046/aj060194

- Roy D Gregori Ayon, Md. Sanaullah Rabbi, Umme Habiba, Maoyejatun Hasana, "Bangla Speech Emotion Detection using Machine Learning Ensemble Methods", Advances in Science, Technology and Engineering Systems Journal, vol. 7, no. 6, pp. 70–76, 2022. doi: 10.25046/aj070608

- Deeptaanshu Kumar, Ajmal Thanikkal, Prithvi Krishnamurthy, Xinlei Chen, Pei Zhang, "Analysis of Different Supervised Machine Learning Methods for Accelerometer-Based Alcohol Consumption Detection from Physical Activity", Advances in Science, Technology and Engineering Systems Journal, vol. 7, no. 4, pp. 147–154, 2022. doi: 10.25046/aj070419

- Zhumakhan Nazir, Temirlan Zarymkanov, Jurn-Guy Park, "A Machine Learning Model Selection Considering Tradeoffs between Accuracy and Interpretability", Advances in Science, Technology and Engineering Systems Journal, vol. 7, no. 4, pp. 72–78, 2022. doi: 10.25046/aj070410

- Ayoub Benchabana, Mohamed-Khireddine Kholladi, Ramla Bensaci, Belal Khaldi, "A Supervised Building Detection Based on Shadow using Segmentation and Texture in High-Resolution Images", Advances in Science, Technology and Engineering Systems Journal, vol. 7, no. 3, pp. 166–173, 2022. doi: 10.25046/aj070319

- Osaretin Eboya, Julia Binti Juremi, "iDRP Framework: An Intelligent Malware Exploration Framework for Big Data and Internet of Things (IoT) Ecosystem", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 185–202, 2021. doi: 10.25046/aj060521

- Arwa Alghamdi, Graham Healy, Hoda Abdelhafez, "Machine Learning Algorithms for Real Time Blind Audio Source Separation with Natural Language Detection", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 125–140, 2021. doi: 10.25046/aj060515

- Baida Ouafae, Louzar Oumaima, Ramdi Mariam, Lyhyaoui Abdelouahid, "Survey on Novelty Detection using Machine Learning Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 73–82, 2021. doi: 10.25046/aj060510

- Radwan Qasrawi, Stephanny VicunaPolo, Diala Abu Al-Halawa, Sameh Hallaq, Ziad Abdeen, "Predicting School Children Academic Performance Using Machine Learning Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 08–15, 2021. doi: 10.25046/aj060502

- Zhiyuan Chen, Howe Seng Goh, Kai Ling Sin, Kelly Lim, Nicole Ka Hei Chung, Xin Yu Liew, "Automated Agriculture Commodity Price Prediction System with Machine Learning Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 4, pp. 376–384, 2021. doi: 10.25046/aj060442

- Hathairat Ketmaneechairat, Maleerat Maliyaem, Chalermpong Intarat, "Kamphaeng Saen Beef Cattle Identification Approach using Muzzle Print Image", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 4, pp. 110–122, 2021. doi: 10.25046/aj060413

- Md Mahmudul Hasan, Nafiul Hasan, Dil Afroz, Ferdaus Anam Jibon, Md. Arman Hossen, Md. Shahrier Parvage, Jakaria Sulaiman Aongkon, "Electroencephalogram Based Medical Biometrics using Machine Learning: Assessment of Different Color Stimuli", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 3, pp. 27–34, 2021. doi: 10.25046/aj060304

- Dominik Štursa, Daniel Honc, Petr Doležel, "Efficient 2D Detection and Positioning of Complex Objects for Robotic Manipulation Using Fully Convolutional Neural Network", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 915–920, 2021. doi: 10.25046/aj0602104

- Md Mahmudul Hasan, Nafiul Hasan, Mohammed Saud A Alsubaie, "Development of an EEG Controlled Wheelchair Using Color Stimuli: A Machine Learning Based Approach", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 754–762, 2021. doi: 10.25046/aj060287

- Antoni Wibowo, Inten Yasmina, Antoni Wibowo, "Food Price Prediction Using Time Series Linear Ridge Regression with The Best Damping Factor", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 694–698, 2021. doi: 10.25046/aj060280

- Javier E. Sánchez-Galán, Fatima Rangel Barranco, Jorge Serrano Reyes, Evelyn I. Quirós-McIntire, José Ulises Jiménez, José R. Fábrega, "Using Supervised Classification Methods for the Analysis of Multi-spectral Signatures of Rice Varieties in Panama", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 552–558, 2021. doi: 10.25046/aj060262

- Phillip Blunt, Bertram Haskins, "A Model for the Application of Automatic Speech Recognition for Generating Lesson Summaries", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 526–540, 2021. doi: 10.25046/aj060260

- Sebastianus Bara Primananda, Sani Muhamad Isa, "Forecasting Gold Price in Rupiah using Multivariate Analysis with LSTM and GRU Neural Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 245–253, 2021. doi: 10.25046/aj060227

- Byeongwoo Kim, Jongkyu Lee, "Fault Diagnosis and Noise Robustness Comparison of Rotating Machinery using CWT and CNN", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1279–1285, 2021. doi: 10.25046/aj0601146

- Md Mahmudul Hasan, Nafiul Hasan, Mohammed Saud A Alsubaie, Md Mostafizur Rahman Komol, "Diagnosis of Tobacco Addiction using Medical Signal: An EEG-based Time-Frequency Domain Analysis Using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 842–849, 2021. doi: 10.25046/aj060193

- Reem Bayari, Ameur Bensefia, "Text Mining Techniques for Cyberbullying Detection: State of the Art", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 783–790, 2021. doi: 10.25046/aj060187

- Inna Valieva, Iurii Voitenko, Mats Björkman, Johan Åkerberg, Mikael Ekström, "Multiple Machine Learning Algorithms Comparison for Modulation Type Classification Based on Instantaneous Values of the Time Domain Signal and Time Series Statistics Derived from Wavelet Transform", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 658–671, 2021. doi: 10.25046/aj060172

- Carlos López-Bermeo, Mauricio González-Palacio, Lina Sepúlveda-Cano, Rubén Montoya-Ramírez, César Hidalgo-Montoya, "Comparison of Machine Learning Parametric and Non-Parametric Techniques for Determining Soil Moisture: Case Study at Las Palmas Andean Basin", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 636–650, 2021. doi: 10.25046/aj060170

- Ndiatenda Ndou, Ritesh Ajoodha, Ashwini Jadhav, "A Case Study to Enhance Student Support Initiatives Through Forecasting Student Success in Higher-Education", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 230–241, 2021. doi: 10.25046/aj060126

- Lonia Masangu, Ashwini Jadhav, Ritesh Ajoodha, "Predicting Student Academic Performance Using Data Mining Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 153–163, 2021. doi: 10.25046/aj060117

- Sara Ftaimi, Tomader Mazri, "Handling Priority Data in Smart Transportation System by using Support Vector Machine Algorithm", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1422–1427, 2020. doi: 10.25046/aj0506172

- Othmane Rahmaoui, Kamal Souali, Mohammed Ouzzif, "Towards a Documents Processing Tool using Traceability Information Retrieval and Content Recognition Through Machine Learning in a Big Data Context", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1267–1277, 2020. doi: 10.25046/aj0506151

- Puttakul Sakul-Ung, Amornvit Vatcharaphrueksadee, Pitiporn Ruchanawet, Kanin Kearpimy, Hathairat Ketmaneechairat, Maleerat Maliyaem, "Overmind: A Collaborative Decentralized Machine Learning Framework", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 280–289, 2020. doi: 10.25046/aj050634

- Pamela Zontone, Antonio Affanni, Riccardo Bernardini, Leonida Del Linz, Alessandro Piras, Roberto Rinaldo, "Supervised Learning Techniques for Stress Detection in Car Drivers", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 22–29, 2020. doi: 10.25046/aj050603

- Kodai Kitagawa, Koji Matsumoto, Kensuke Iwanaga, Siti Anom Ahmad, Takayuki Nagasaki, Sota Nakano, Mitsumasa Hida, Shogo Okamatsu, Chikamune Wada, "Posture Recognition Method for Caregivers during Postural Change of a Patient on a Bed using Wearable Sensors", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 1093–1098, 2020. doi: 10.25046/aj0505133

- Khalid A. AlAfandy, Hicham Omara, Mohamed Lazaar, Mohammed Al Achhab, "Using Classic Networks for Classifying Remote Sensing Images: Comparative Study", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 770–780, 2020. doi: 10.25046/aj050594

- Khalid A. AlAfandy, Hicham, Mohamed Lazaar, Mohammed Al Achhab, "Investment of Classic Deep CNNs and SVM for Classifying Remote Sensing Images", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 652–659, 2020. doi: 10.25046/aj050580

- Rajesh Kumar, Geetha S, "Malware Classification Using XGboost-Gradient Boosted Decision Tree", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 536–549, 2020. doi: 10.25046/aj050566

- Nghia Duong-Trung, Nga Quynh Thi Tang, Xuan Son Ha, "Interpretation of Machine Learning Models for Medical Diagnosis", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 469–477, 2020. doi: 10.25046/aj050558

- Oumaima Terrada, Soufiane Hamida, Bouchaib Cherradi, Abdelhadi Raihani, Omar Bouattane, "Supervised Machine Learning Based Medical Diagnosis Support System for Prediction of Patients with Heart Disease", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 269–277, 2020. doi: 10.25046/aj050533

- Haytham Azmi, "FPGA Acceleration of Tree-based Learning Algorithms", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 237–244, 2020. doi: 10.25046/aj050529

- Hicham Moujahid, Bouchaib Cherradi, Oussama El Gannour, Lhoussain Bahatti, Oumaima Terrada, Soufiane Hamida, "Convolutional Neural Network Based Classification of Patients with Pneumonia using X-ray Lung Images", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 167–175, 2020. doi: 10.25046/aj050522

- Young-Jin Park, Hui-Sup Cho, "A Method for Detecting Human Presence and Movement Using Impulse Radar", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 770–775, 2020. doi: 10.25046/aj050491

- Anouar Bachar, Noureddine El Makhfi, Omar EL Bannay, "Machine Learning for Network Intrusion Detection Based on SVM Binary Classification Model", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 638–644, 2020. doi: 10.25046/aj050476

- Adonis Santos, Patricia Angela Abu, Carlos Oppus, Rosula Reyes, "Real-Time Traffic Sign Detection and Recognition System for Assistive Driving", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 600–611, 2020. doi: 10.25046/aj050471

- Amar Choudhary, Deependra Pandey, Saurabh Bhardwaj, "Overview of Solar Radiation Estimation Techniques with Development of Solar Radiation Model Using Artificial Neural Network", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 589–593, 2020. doi: 10.25046/aj050469

- Maroua Abdellaoui, Dounia Daghouj, Mohammed Fattah, Younes Balboul, Said Mazer, Moulhime El Bekkali, "Artificial Intelligence Approach for Target Classification: A State of the Art", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 445–456, 2020. doi: 10.25046/aj050453

- Shahab Pasha, Jan Lundgren, Christian Ritz, Yuexian Zou, "Distributed Microphone Arrays, Emerging Speech and Audio Signal Processing Platforms: A Review", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 331–343, 2020. doi: 10.25046/aj050439

- Ilias Kalathas, Michail Papoutsidakis, Chistos Drosos, "Optimization of the Procedures for Checking the Functionality of the Greek Railways: Data Mining and Machine Learning Approach to Predict Passenger Train Immobilization", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 287–295, 2020. doi: 10.25046/aj050435

- Yosaphat Catur Widiyono, Sani Muhamad Isa, "Utilization of Data Mining to Predict Non-Performing Loan", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 252–256, 2020. doi: 10.25046/aj050431

- Hai Thanh Nguyen, Nhi Yen Kim Phan, Huong Hoang Luong, Trung Phuoc Le, Nghi Cong Tran, "Efficient Discretization Approaches for Machine Learning Techniques to Improve Disease Classification on Gut Microbiome Composition Data", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 547–556, 2020. doi: 10.25046/aj050368

- Ruba Obiedat, "Risk Management: The Case of Intrusion Detection using Data Mining Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 529–535, 2020. doi: 10.25046/aj050365

- Krina B. Gabani, Mayuri A. Mehta, Stephanie Noronha, "Racial Categorization Methods: A Survey", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 388–401, 2020. doi: 10.25046/aj050350

- Dennis Luqman, Sani Muhamad Isa, "Machine Learning Model to Identify the Optimum Database Query Execution Platform on GPU Assisted Database", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 214–225, 2020. doi: 10.25046/aj050328

- Gillala Rekha, Shaveta Malik, Amit Kumar Tyagi, Meghna Manoj Nair, "Intrusion Detection in Cyber Security: Role of Machine Learning and Data Mining in Cyber Security", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 72–81, 2020. doi: 10.25046/aj050310

- Ahmed EL Orche, Mohamed Bahaj, "Approach to Combine an Ontology-Based on Payment System with Neural Network for Transaction Fraud Detection", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 551–560, 2020. doi: 10.25046/aj050269

- Bokyoon Na, Geoffrey C Fox, "Object Classifications by Image Super-Resolution Preprocessing for Convolutional Neural Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 476–483, 2020. doi: 10.25046/aj050261

- Johannes Linden, Xutao Wang, Stefan Forsstrom, Tingting Zhang, "Productify News Article Classification Model with Sagemaker", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 13–18, 2020. doi: 10.25046/aj050202

- Michael Wenceslaus Putong, Suharjito, "Classification Model of Contact Center Customers Emails Using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 1, pp. 174–182, 2020. doi: 10.25046/aj050123

- Rehan Ullah Khan, Ali Mustafa Qamar, Mohammed Hadwan, "Quranic Reciter Recognition: A Machine Learning Approach", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 173–176, 2019. doi: 10.25046/aj040621

- Mehdi Guessous, Lahbib Zenkouar, "An ML-optimized dRRM Solution for IEEE 802.11 Enterprise Wlan Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 19–31, 2019. doi: 10.25046/aj040603

- Toshiyasu Kato, Yuki Terawaki, Yasushi Kodama, Teruhiko Unoki, Yasushi Kambayashi, "Estimating Academic results from Trainees’ Activities in Programming Exercises Using Four Types of Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 5, pp. 321–326, 2019. doi: 10.25046/aj040541

- Nindhia Hutagaol, Suharjito, "Predictive Modelling of Student Dropout Using Ensemble Classifier Method in Higher Education", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 206–211, 2019. doi: 10.25046/aj040425

- Fernando Hernández, Roberto Vega, Freddy Tapia, Derlin Morocho, Walter Fuertes, "Early Detection of Alzheimer’s Using Digital Image Processing Through Iridology, An Alternative Method", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 3, pp. 126–137, 2019. doi: 10.25046/aj040317

- Abba Suganda Girsang, Andi Setiadi Manalu, Ko-Wei Huang, "Feature Selection for Musical Genre Classification Using a Genetic Algorithm", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 2, pp. 162–169, 2019. doi: 10.25046/aj040221

- Konstantin Mironov, Ruslan Gayanov, Dmiriy Kurennov, "Observing and Forecasting the Trajectory of the Thrown Body with use of Genetic Programming", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 1, pp. 248–257, 2019. doi: 10.25046/aj040124

- Bok Gyu Han, Hyeon Seok Yang, Ho Gyeong Lee, Young Shik Moon, "Low Contrast Image Enhancement Using Convolutional Neural Network with Simple Reflection Model", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 1, pp. 159–164, 2019. doi: 10.25046/aj040115

- Zheng Xie, Chaitanya Gadepalli, Farideh Jalalinajafabadi, Barry M.G. Cheetham, Jarrod J. Homer, "Machine Learning Applied to GRBAS Voice Quality Assessment", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 6, pp. 329–338, 2018. doi: 10.25046/aj030641

- Richard Osei Agjei, Emmanuel Awuni Kolog, Daniel Dei, Juliet Yayra Tengey, "Emotional Impact of Suicide on Active Witnesses: Predicting with Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 5, pp. 501–509, 2018. doi: 10.25046/aj030557

- Sudipta Saha, Aninda Saha, Zubayr Khalid, Pritam Paul, Shuvam Biswas, "A Machine Learning Framework Using Distinctive Feature Extraction for Hand Gesture Recognition", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 5, pp. 72–81, 2018. doi: 10.25046/aj030510

- Charles Frank, Asmail Habach, Raed Seetan, Abdullah Wahbeh, "Predicting Smoking Status Using Machine Learning Algorithms and Statistical Analysis", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 2, pp. 184–189, 2018. doi: 10.25046/aj030221

- Sehla Loussaief, Afef Abdelkrim, "Machine Learning framework for image classification", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 1, pp. 1–10, 2018. doi: 10.25046/aj030101

- Ruijian Zhang, Deren Li, "Applying Machine Learning and High Performance Computing to Water Quality Assessment and Prediction", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 6, pp. 285–289, 2017. doi: 10.25046/aj020635

- Batoul Haidar, Maroun Chamoun, Ahmed Serhrouchni, "A Multilingual System for Cyberbullying Detection: Arabic Content Detection using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 6, pp. 275–284, 2017. doi: 10.25046/aj020634

- Yuksel Arslan, Abdussamet Tanıs, Huseyin Canbolat, "A Relational Database Model and Tools for Environmental Sound Recognition", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 6, pp. 145–150, 2017. doi: 10.25046/aj020618

- Loretta Henderson Cheeks, Ashraf Gaffar, Mable Johnson Moore, "Modeling Double Subjectivity for Gaining Programmable Insights: Framing the Case of Uber", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1677–1692, 2017. doi: 10.25046/aj0203209

- Moses Ekpenyong, Daniel Asuquo, Samuel Robinson, Imeh Umoren, Etebong Isong, "Soft Handoff Evaluation and Efficient Access Network Selection in Next Generation Cellular Systems", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1616–1625, 2017. doi: 10.25046/aj0203201

- Rogerio Gomes Lopes, Marcelo Ladeira, Rommel Novaes Carvalho, "Use of machine learning techniques in the prediction of credit recovery", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1432–1442, 2017. doi: 10.25046/aj0203179

- Daniel Fraunholz, Marc Zimmermann, Hans Dieter Schotten, "Towards Deployment Strategies for Deception Systems", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1272–1279, 2017. doi: 10.25046/aj0203161

- Arsim Susuri, Mentor Hamiti, Agni Dika, "Detection of Vandalism in Wikipedia using Metadata Features – Implementation in Simple English and Albanian sections", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 4, pp. 1–7, 2017. doi: 10.25046/aj020401

- Adewale Opeoluwa Ogunde, Ajibola Rasaq Olanbo, "A Web-Based Decision Support System for Evaluating Soil Suitability for Cassava Cultivation", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 1, pp. 42–50, 2017. doi: 10.25046/aj020105

- Arsim Susuri, Mentor Hamiti, Agni Dika, "The Class Imbalance Problem in the Machine Learning Based Detection of Vandalism in Wikipedia across Languages", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 1, pp. 16–22, 2016. doi: 10.25046/aj020103