Driver Fatigue Tracking and Detection Method Based on OpenMV

Volume 6, Issue 3, Page No 296-302, 2021

Author’s Name: Shiwei Zhou1, Jiayuan Gong1,2,3,4,a), Leipeng Qie1, Zhuofei Xia1, Haiying Zhou1,5, Xin Jin6


1Institute of Automotive Engineers, Hubei University of Automotive Technology, Shiyan City, 442000, China

2Acoustic Science and Technology Laboratory, Harbin Engineering University, Harbin 150001, China

3Key Laboratory of Marine Information Acquisition and Security (Harbin Engineering University), Ministry of Industry and Information Technology, Harbin 150001, China

4College of Underwater Acoustic Engineering, Harbin Engineering University, Harbin 150001, China

5Intelligent Power System Co., LTD, Wuhan 430000, China

6Shanghai Link-E Information and Technology Co. Ltd, Shanghai 200000, China

a)Author to whom correspondence should be addressed. E-mail: rorypeck@126.com

Adv. Sci. Technol. Eng. Syst. J. 6(3), 296-302 (2021); DOI: 10.25046/aj060333


Keywords: OpenMV, Driver fatigue detection, Cradle head tracking


To address the problem of fatigue driving, this paper proposes a driver fatigue tracking and detection method based on OpenMV. OpenMV is used for image acquisition, and the Dlib feature point model is used to locate the driver’s face. The eye aspect ratio is calculated to judge whether the eyes are open or closed, and fatigue is then detected by PERCLOS (Percentage of Eyelid Closure over the Pupil). A cradle head system is added so that the camera tracks the driver during detection. The results show that the dynamic tracking system improves detection accuracy to 92.10% in the relaxed state and 85.20% in the fatigue state. The system can therefore be applied effectively to the monitoring of fatigue driving.

Received: 26 December 2020, Accepted: 15 May 2021, Published Online: 10 June 2021

1. Introduction

This paper is an extension of work originally presented at a conference as OpenMV Based Cradle Head Mount Tracking System [1]. With the improvement of people’s living standards, cars have entered thousands of households and become an irreplaceable means of transportation. At the same time, traffic accidents have become a serious social problem faced by countries all over the world, and are recognized as a leading public hazard to human life. According to the statistics of China’s Ministry of Transport, 48% of traffic accidents in China are caused by fatigue driving, with direct economic losses amounting to hundreds of thousands of dollars [2, 3]. Research on fatigue driving detection systems is therefore both necessary and urgent.

Fatigue driving detection techniques are divided into two categories: subjective evaluation methods and objective evaluation methods. In the subjective evaluation method, drivers record changes in how they feel and fill in scales such as the FS-14 Fatigue Scale, the Karolinska Sleep Scale, the Pearson Fatigue Scale, and the Stanford Sleep Scale. However, the many subjective factors involved are likely to make the results inaccurate. The objective evaluation methods are divided into three categories: detection based on driver physiological parameters [4–8], detection based on vehicle behavior [9,10], and detection based on computer vision [11–20]. Detection based on physiological parameters, including electroencephalogram (EEG), electrocardiogram (ECG), electromyography (EMG), electrooculogram (EOG), and other signals, reflects the driver’s physiological state. These methods have high accuracy, but the equipment is expensive and may interfere with normal driving. Vehicle behavior-based detection monitors the driver’s state by judging the vehicle’s trajectory, the steering wheel’s operating speed, and similar signals. Its accuracy is moderate, and it is easily affected by the weather and other external environmental factors. Fatigue detection based on computer vision uses a camera to extract features closely related to the fatigue state, such as blinking. As a non-contact method, it does not distract the driver. The driver’s facial features and head posture also reflect the driver’s mental state more precisely and directly. The method has good practicability and accuracy, so it is better suited to detecting driver fatigue.

This paper proposes an OpenMV-based fatigue driving tracking and detection method. OpenMV is used as the camera to collect images, and the images are sent to a PC through serial communication. The Dlib feature point model is used to locate the driver’s facial features, and the aspect ratio of the eyes is used to identify whether the driver’s eyes are open. Fatigue detection is carried out from the blinking frequency, and it is combined with the cradle head system to perform driver fatigue tracking and detection.

2. Materials and Method

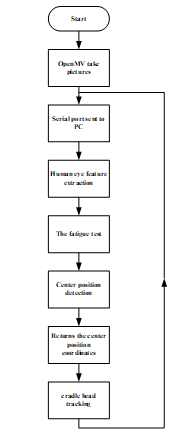

The fatigue driving cradle head tracking and detection system is mainly composed of an image acquisition and transmission module, a cradle head face tracking module, and a fatigue detection module. The system flow chart is shown in Figure 1.

2.1. OpenMV Introduction

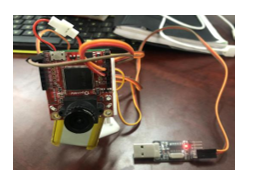

OpenMV is an open-source, low-cost and powerful machine vision module, as shown in Figure 2.

It takes the STM32F427 CPU as its core and integrates the OV7725 camera chip. On this small hardware module, the core machine vision algorithms are implemented efficiently in C, and a Python programming interface is provided. Its programming language is MicroPython, an implementation of Python whose syntax is as easy and practical as that of Python, so users can program their own projects with it. OpenMV has built-in color recognition, shape recognition, face recognition, and eye recognition modules. Users can apply the machine vision functions provided by OpenMV to their own products and inventions.

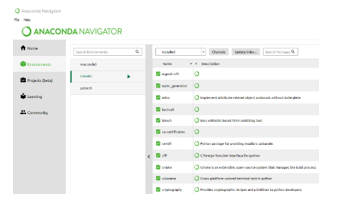

2.2. Anaconda Introduction

Anaconda is a distribution that contains 180+ scientific packages and their dependencies, including Conda, NumPy, and SciPy. Anaconda is open-source and simple to install. It supports the Python and R languages with high performance and has free community support. When performing driver fatigue detection, the corresponding libraries can be imported directly, as shown in Figure 3.

2.3. Image Acquisition and Transmission

The fatigue tracking and detection system is mainly composed of OpenMV and PC.

First, image collection is carried out at the OpenMV end. UART is used to transmit image information to the Anaconda end, so the serial port is initialized. Then the sensor and camera are reset, the picture size is set to 240×160, and a series of preprocessing steps, such as grayscale conversion, is applied to the picture. When OpenMV is installed, the camera is placed upside down to keep it stable, so a flip function is added to flip the picture. The OpenMV connection diagram is shown in Figure 4.

Figure 4: OpenMV Connection Diagram


After initialization, the OpenMV end uses the read function to read four bytes from the serial port; if they are “snap”, one frame of the image is sent.

On the Anaconda end, the serial port is first initialized and the input and output buffers are reset. The string “snap” is then sent to request an image, and the size and data of the image are read.
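The request and response exchange described above can be sketched from the PC side as follows. This is a minimal illustration rather than the authors' code: it assumes the image size arrives as a 4-byte little-endian unsigned integer ahead of the image bytes, and `FakePort` is a hypothetical in-memory stand-in for a real serial port object so that the sketch runs without hardware.

```python
import struct


class FakePort:
    """Hypothetical in-memory stand-in for a serial port (illustration only)."""

    def __init__(self, image_bytes):
        self._image = image_bytes
        self._outgoing = b""

    def write(self, data):
        # Camera side: answer a 4-byte "snap" request with size + image data.
        if data == b"snap":
            self._outgoing += struct.pack("<L", len(self._image)) + self._image
        return len(data)

    def read(self, n):
        # Hand back at most n bytes of whatever the camera side has queued.
        chunk, self._outgoing = self._outgoing[:n], self._outgoing[n:]
        return chunk


def request_frame(port):
    """Send "snap", then read the size header and the image payload."""
    port.write(b"snap")
    header = port.read(4)
    if len(header) < 4:
        raise IOError("no size header received")
    (size,) = struct.unpack("<L", header)  # assumed header layout
    data = b""
    while len(data) < size:
        chunk = port.read(size - len(data))
        if not chunk:
            raise IOError("image data ended early")
        data += chunk
    return data


frame = request_frame(FakePort(b"example image bytes"))
```

With a real serial port object in place of `FakePort`, the same `request_frame` function would apply as long as the camera side uses the assumed header layout.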

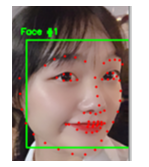

2.4. Feature Extraction and Fatigue Detection of Human Eyes

This paper is based primarily on the 68-point feature detection model of the Dlib library, and a feature point recognition test is carried out first. The Dlib model is a face alignment algorithm based on regression trees. First, the dependent libraries, such as OpenCV, Dlib, and NumPy, are imported on the Anaconda side, together with the shape_predictor_68_face_landmarks model. The image is then read, the rectangular face frame is obtained, and the 68 feature points are drawn as circles in a loop, as shown in Figure 5.

Figure 5: Feature Point Printing


The feature points used in this paper are those of both eyes, namely points 36 to 47 in zero-based indexing (the index range 36:48). Whether the driver is tired can be judged through the eye aspect ratio (EAR) [21–23]. Each eye has 6 feature points, P1, P2, P3, P4, P5 and P6, which are the points corresponding to that eye among the facial feature points, as shown in Figure 6.

When the eyes change from open to closed, the aspect ratio changes. The formula of EAR is as follows:

$$\mathrm{EAR} = \frac{\lVert P_2 - P_6 \rVert + \lVert P_3 - P_5 \rVert}{2\,\lVert P_1 - P_4 \rVert}$$

In the formula, the numerator calculates the distance between the feature points of the eye in the vertical direction, and the denominator calculates the distance between the feature points of the eye in the horizontal direction. Since there is only one set of horizontal points and two sets of vertical points, the denominator is multiplied by 2 to ensure that both sets of feature points have the same weight.
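The EAR computation described above can be sketched in a few lines of Python. The pairing of points is an assumption taken from the commonly used EAR definition: P2 with P6 and P3 with P5 are treated as the vertical pairs and P1 with P4 as the horizontal pair. The function name `eye_aspect_ratio` and the sample coordinates are illustrative only.

```python
from math import dist


def eye_aspect_ratio(eye):
    """Return the EAR for six (x, y) eye landmarks ordered P1..P6."""
    p1, p2, p3, p4, p5, p6 = eye
    # Two vertical distances (assumed pairs: P2-P6 and P3-P5).
    vertical = dist(p2, p6) + dist(p3, p5)
    # One horizontal distance (assumed pair: P1-P4), weighted by 2.
    horizontal = dist(p1, p4)
    return vertical / (2.0 * horizontal)


# Made-up coordinates for a wide-open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]
ear_open = eye_aspect_ratio(open_eye)
ear_closed = eye_aspect_ratio(closed_eye)
```

For these sample points the open eye gives a much larger ratio than the nearly closed one, which is the property the threshold test relies on.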

Because eye size varies from person to person, and the visible eye area also changes dynamically with the scene and with head movement, the degree of eye opening should be judged relative to the individual’s fully open state. Detection results obtained with the fixed threshold of 0.2 commonly used in general algorithms [24–26] are very inaccurate. Therefore, an averaging method is adopted for fatigue detection in this paper: 30 EAR samples are collected first, and their average is taken as the threshold, as shown in Table 1. Determining the driver’s threshold in advance in this way significantly improves the reliability and accuracy of the algorithm.

Table 1: EAR Average

| Number | EAR |
|---|---|
| 1 | 0.23 |
| 2 | 0.26 |
| 3 | 0.27 |
| 4 | 0.30 |
| 5 | 0.32 |
| 6 | 0.33 |
| Average | 0.28 |

By calculating the average aspect ratio of the eyes, the eye fatigue threshold is set to 0.28. If the EAR is greater than the threshold, the eyes are considered to be open. If the EAR is smaller than the threshold, the eyes are considered to be closed. See Figure 7.

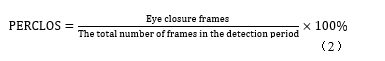
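The per-driver calibration described above can be sketched as follows. The function names are hypothetical, and treating an EAR exactly equal to the threshold as closed is an assumption, since the text only covers the greater and smaller cases.

```python
from statistics import mean


def calibrate_threshold(ear_samples):
    """Average the calibration samples to get a per-driver EAR threshold."""
    if not ear_samples:
        raise ValueError("at least one EAR sample is required")
    return mean(ear_samples)


def eye_is_open(ear, threshold):
    """Open if EAR is greater than the threshold (equality counts as closed)."""
    return ear > threshold


# The six values listed in Table 1, used here as calibration samples.
table_1 = [0.23, 0.26, 0.27, 0.30, 0.32, 0.33]
threshold = calibrate_threshold(table_1)
```

With the six values listed in Table 1 the average comes out at about 0.285, in line with the 0.28 threshold used in the text.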

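PERCLOS (Percentage of Eyelid Closure over the Pupil) is defined as the percentage of a unit time (generally 1 minute or 30 seconds) during which the eyes are closed beyond a certain degree (70% or 80%). The calculation formula of PERCLOS is as follows:

$$\mathrm{PERCLOS} = \frac{t_{\mathrm{closed}}}{t_{\mathrm{unit}}} \times 100\%$$

where $t_{\mathrm{closed}}$ is the time during which the eyes are closed and $t_{\mathrm{unit}}$ is the unit time. The driver is considered fatigued if the following condition is met:

$$\mathrm{PERCLOS} \geq 20\%$$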

According to the relevant literature, a normal blink lasts around 0.25 seconds and people blink about 15 times per minute, so the normal PERCLOS value is about 15 × 0.25 / 60 = 6.25%. According to the literature [27, 28], a PERCLOS of 20% is generally taken as the fatigue limit. Applying this limit in the eye fatigue test achieves the goal of driver fatigue detection.


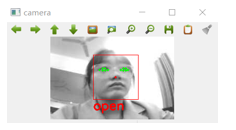

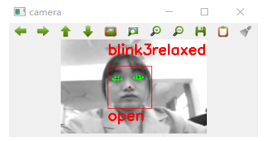

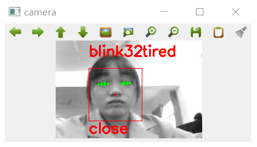

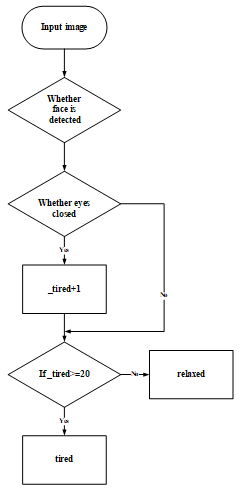

We define 100 frames of images as one cycle. If 20 frames with closed eyes are detected within the 100 frames, the driver is considered fatigued and “tired” is output; otherwise, “relaxed” is output, as shown in Figure 8 and Figure 9. Figure 10 shows the case in which nothing is detected.

The fatigue detection flow chart is shown in Figure 11.
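The frame-based decision rule above can be sketched as follows: frames are grouped into cycles of 100, and a cycle is labelled tired when at least 20% of its frames show closed eyes. The function names and the handling of incomplete cycles (they are ignored) are assumptions made for illustration, as is counting an EAR equal to the threshold as closed.

```python
def perclos(closed_flags):
    """Fraction of frames in which the eyes are closed."""
    flags = list(closed_flags)
    if not flags:
        raise ValueError("need at least one frame")
    return sum(flags) / len(flags)


def classify_cycles(ear_values, threshold, cycle=100, limit=0.20):
    """Return 'tired' or 'relaxed' for each complete cycle of frames."""
    results = []
    # Step through the EAR sequence one full cycle at a time.
    for start in range(0, len(ear_values) - cycle + 1, cycle):
        window = ear_values[start:start + cycle]
        closed = [ear <= threshold for ear in window]
        results.append("tired" if perclos(closed) >= limit else "relaxed")
    return results


# Made-up EAR sequences: 20 and 19 closed frames out of 100.
tired_cycle = [0.35] * 80 + [0.10] * 20
relaxed_cycle = [0.35] * 81 + [0.10] * 19
labels = classify_cycles(tired_cycle + relaxed_cycle, threshold=0.28)
```

Here `labels` holds one entry per complete 100-frame cycle, so trailing frames that do not fill a cycle produce no output.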

2.5. Cradle Head Tracking System

The cradle head system is composed of OpenMV, 3D-printed parts, a PCB fixing plate, two micro steering gears (servos), and a lithium battery. The cradle head system is connected to OpenMV by soldered pins. The tracking head first obtains the x and y coordinates of the center of the face and sends this coordinate information to OpenMV through the serial port. It then controls the movement of the two steering gears of the head by calculating the deviation between the coordinates of the face center and the center of the picture, which completes the tracking and detection of the driver’s face.

PID control is proportional-integral-derivative control, a correction method defined in automatic control theory. The PID and Servo control classes are imported, in which Servo(1) and Servo(2) are the upper and lower servos, respectively. First, the two controllers are initialized with the PID parameters pan_pid = PID(p=0.25, i=0, imax=90) and tilt_pid = PID(p=0.25, i=0, imax=90). When adjusting parameters, the values of i and imax are kept unchanged and the value of p is tuned manually. Face tracking is realized by controlling the steering gear arms. The procedure consists of the following steps:

Figure 11: Fatigue Detection Flow Chart

- OpenMV for image acquisition.

- Obtain the coordinates of the center point of the face.

- Calculate the deviation between the face center coordinates and the picture center coordinates.

- The steering gear moves to realize cradle head tracking.
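The steps above can be sketched as a single control update per frame. This is a hedged illustration, not the OpenMV implementation: `SimplePID` is a hypothetical minimal controller written for this example, the sign of each correction depends on how the servos are mounted, and the ±90 degree angle limits are assumed.

```python
class SimplePID:
    """Hypothetical minimal PI controller; not the OpenMV PID class."""

    def __init__(self, p=0.25, i=0.0, imax=90.0):
        self.p, self.i, self.imax = p, i, imax
        self._integral = 0.0

    def update(self, error, dt=1.0):
        self._integral += error * dt
        # Clamp the accumulated error so the integral term cannot wind up.
        self._integral = max(-self.imax, min(self.imax, self._integral))
        return self.p * error + self.i * self._integral


def clamp(value, low, high):
    return max(low, min(high, value))


def track_step(face_center, angles, pan_pid, tilt_pid, size=(240, 160)):
    """Return new (pan, tilt) servo angles in degrees for one frame."""
    # Deviation between the face center and the picture center, in pixels.
    pan_error = face_center[0] - size[0] / 2
    tilt_error = face_center[1] - size[1] / 2
    # Correction signs are an assumption about the servo mounting.
    pan = clamp(angles[0] - pan_pid.update(pan_error), -90, 90)
    tilt = clamp(angles[1] + tilt_pid.update(tilt_error), -90, 90)
    return pan, tilt


pan_pid = SimplePID(p=0.25, i=0, imax=90)
tilt_pid = SimplePID(p=0.25, i=0, imax=90)
# Face 40 pixels to the right of the picture center.
new_angles = track_step((160, 80), (0, 0), pan_pid, tilt_pid)
```

With i set to 0, as in the parameters quoted above, the controller reduces to a pure proportional correction, so only p needs tuning.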

3. Experiment and Results analysis

3.1. Experiment

To test the effectiveness of the driver fatigue tracking and detection algorithm in this paper, and the influence of the dynamic tracking system with the cradle head on the detection results, we conducted experiments on subjects. The driver fatigue detection system was tested both without and with the cradle head, in the relaxed and fatigue states of the subjects. Figure 12 shows the driver fatigue detection system without the cradle head.

Figure 12: Face Tracking and Fatigue Detection without Cradle Head


Figure 13 shows the tracking process of the fatigue detection system after adding the cradle head.

Figure 13: Face Tracking and Fatigue Detection with Cradle Head


The experiments show that the driver fatigue detection system with the cradle head can move up, down, left, and right with the driver’s face, so that the face stays recognizable and the driver’s fatigue state can be monitored and distinguished in real time.

3.2. Experimental Results

The driver fatigue detection system was tested without the cradle head and with the cradle head, in both the relaxed and fatigue states of the subjects. In each state, 4055 frames of images were identified and detected, as shown in Table 2 and Table 3. With the same number of tests, the accuracy of the two systems can be compared directly.

Table 2: Fatigue Test Results without Cradle Head

| State | Number of tests | Number of errors | Accuracy (%) |
|---|---|---|---|
| relaxation | 4055 | 405 | 90.01 |
| fatigue | 4055 | 790 | 80.52 |

Table 3: Fatigue Test Results with Cradle Head

| State | Number of tests | Number of errors | Accuracy (%) |
|---|---|---|---|
| relaxation | 4055 | 320 | 92.10 |
| fatigue | 4055 | 600 | 85.20 |

3.3. Analysis of Experimental Results

The test results of driver fatigue detection without the cradle head system are listed in Table 2. In the experiment, the relaxed and fatigue states were detected separately; the accuracy reached 90.01% in the relaxed state but only 80.52% in the fatigue state. These results show that the static fatigue detection system still has problems.

An image from a detection error is shown in Figure 14. Because the driver’s head position changes during driving, the face cannot be recognized, so the fatigue state of the driver cannot be detected, which lowers the accuracy of the fatigue detection results.

The driver fatigue detection test results after adding the cradle head system are listed in Table 3. The detection accuracy reached 92.1% in the relaxed state and 85.2% in the fatigue state. Compared with the results without the cradle head, accuracy improved by 2.09 percentage points in the relaxed state and 4.68 percentage points in the fatigue state. The accuracy of fatigue detection and identification is clearly improved, and the dynamic tracking works well.
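As a check on the arithmetic, the accuracies in Table 2 and Table 3 can be recomputed from the frame counts. The sketch below assumes that accuracy is the share of correctly classified frames; the function and variable names are illustrative.

```python
def accuracy(total, wrong):
    """Percentage of frames classified correctly."""
    return 100.0 * (total - wrong) / total


# (number of tests, number of errors) taken from Table 2 and Table 3.
without_head = {"relaxation": (4055, 405), "fatigue": (4055, 790)}
with_head = {"relaxation": (4055, 320), "fatigue": (4055, 600)}

# Improvement in percentage points after adding the cradle head.
gains = {
    state: accuracy(*with_head[state]) - accuracy(*without_head[state])
    for state in without_head
}
```

The recomputed accuracies and gains agree with the reported figures to within 0.01 percentage points, the small differences being due to rounding.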

4. Conclusion

In this paper, OpenMV is used as a camera to collect driver images and transmit them to the PC through the serial port, and the driver’s face position is tracked by the cradle head system. A face key point detection algorithm based on an ensemble of regression trees is adopted to locate 68 feature points within the face area, and the locations of the eye feature points are extracted from these key points. The open and closed states of the eyes are determined by EAR, and the blink frequency is used to judge driver fatigue. A method of detecting open and closed eyes that accounts for differences in eye size between drivers is also proposed. The center point of the face is then transmitted back to the cradle head so that the system can continuously track the face.

The experiments show that the cradle head system tracks the face well. The accuracy of the driver fatigue detection system with dynamic tracking reaches 92.1% in the relaxed state and 85.2% in the fatigue state, which effectively reduces the detection errors caused by deviation of the driver’s face position and improves the accuracy of driver fatigue detection.

The driver fatigue detection system can detect an abnormal driver state at an early stage and thereby help to avoid traffic accidents.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

This work was supported by China Software Industry Association & Intel IoT Group under AEEE2020JG001, and in part by Industrial Internet innovation and development project of MIIT under TC200H033 & TC200H01F, and in part by Doctor’s Research Fund of HUAT under BK201604, and in part by Hubei Provincial Science & Technology International Cooperation Research Program under 2019AHB060, and in part by the State Leading Local Science and Technology Development Special Fund under 2019ZYYD014, and in part by Research Project of Provincial Department of Education under Q20181804.

References

- S. Zhou, J. Gong, H. Zhou, Z. Zhu, C. He, K. Zhou, OpenMV Based Cradle Head Mount Tracking System, 2020, doi:10.1109/DSA.2019.00085.

- S. Moslem, D. Farooq, O. Ghorbanzadeh, T. Blaschke, “Application of the AHP-BWM model for evaluating driver behavior factors related to road safety: A case study for Budapest,” Symmetry, 12(2), 2020, doi:10.3390/sym12020243.

- J. Cech, T. Soukupova, “Real-Time Eye Blink Detection using Facial Landmarks,” Center for Machine Perception, Department of Cybernetics Faculty of Electrical Engineering, Czech Technical University in Prague, 1–8, 2016.

- F. Zhou, A. Alsaid, M. Blommer, R. Curry, R. Swaminathan, D. Kochhar, W. Talamonti, L. Tijerina, B. Lei, “Driver fatigue transition prediction in highly automated driving using physiological features,” Expert Systems with Applications, 147, 113204, 2020, doi:10.1016/j.eswa.2020.113204.

- Individual Differential Driving Fatigue Detection Based on Non-Intrusive Measurement Index, 2016, doi:10.19721/j.cnki.1001-7372.2016.10.011.

- P. Shangguan, T. Qiu, T. Liu, S. Zou, Z. Liu, S. Zhang, “Feature extraction of EEG signals based on functional data analysis and its application to recognition of driver fatigue state,” Physiological Measurement, 41(12), 125004, 2021, doi:10.1088/1361-6579/abc66e.

- J. Bai, L. Shen, H. Sun, B. Shen, “Physiological Informatics: Collection and Analyses of Data from Wearable Sensors and Smartphone for Healthcare,” in Healthcare and Big Data Management, Springer Singapore, Singapore: 17–37, 2017, doi:10.1007/978-981-10-6041-0_2.

- D. de Waard, V. Studiecentrum, The Measurement of Drivers ’ Mental Workload, 1996.

- Z. Chen, “Study on Driver Fatigue Monitoring Based on BP Neural Network,” 2005.

- D. Feng, M.Q. Feng, “Computer vision for SHM of civil infrastructure: From dynamic response measurement to damage detection – A review,” Engineering Structures, 156, 105–117, 2018, doi:10.1016/j.engstruct.2017.11.018.

- X. Liang, Y. Shi, X. Zhan, Fatigue Driving Detection Based on Facial Features, 2018, doi:10.1145/3301551.3301555.

- J.I.A. Xiaoyun, Z. Lingyu, R.E.N. Jiang, Z. Nan, “Research on Fatigue Driving Detection Method of Facial Features Fusion AdaBoost,” 2–7, 2016.

- S. Lee, M. Kim, H. Jung, D. Kwon, S. Choi, H. You, “Effects of a Motion Seat System on Driver’s Passive Task-Related Fatigue: An On-Road Driving Study,” Sensors, 20, 2688, 2020, doi:10.3390/s20092688.

- C. Zheng, B. Xiaojuan, W. Yu, “Fatigue driving detection based on Haar feature and extreme learning machine,” The Journal of China Universities of Posts and Telecommunications, 23, 91–100, 2016, doi:10.1016/S1005-8885(16)60050-X.

- S. Computing, “An Improved Resnest Driver Head State Classification Algorithm,” 2021.

- Q. Abbas, A. Alsheddy, Driver Fatigue Detection Systems Using Multi-Sensors, Smartphone, and Cloud-Based Computing Platforms: A Comparative Analysis, Sensors (Basel, Switzerland), 21(1), 2020, doi:10.3390/s21010056.

- F. You, Y. Gong, H. Tu, J. Liang, H. Wang, “A Fatigue Driving Detection Algorithm Based on Facial Motion Information Entropy,” Journal of Advanced Transportation, 2020, 8851485, 2020, doi:10.1155/2020/8851485.

- H. Iridiastadi, “Fatigue in the Indonesian rail industry: A study examining passenger train drivers,” Applied Ergonomics, 92, 103332, 2021, doi:10.1016/j.apergo.2020.103332.

- T. Zhang, Research on Multi-feature Fatigue Detection Based on Machine Vision.

- M. Zhang, Fatigue Driving Detection Based on Steering Wheel Grip Force.

- Z. Boulkenafet, Z. Akhtar, X. Feng, A. Hadid, “Face Anti-spoofing in Biometric Systems,” in Biometric Security and Privacy: Opportunities & Challenges in The Big Data Era, Springer International Publishing, Cham: 299–321, 2017, doi:10.1007/978-3-319-47301-7_13.

- W.H. Tian, K.M. Zeng, Z.Q. Mo, B.Q. Lin, “Recognition of Unsafe Driving Behaviors Based on Convolutional Neural Network,” Dianzi Keji Daxue Xuebao/Journal of the University of Electronic Science and Technology of China, 48(3), 381–387, 2019, doi:10.3969/j.issn.1001-0548.2019.03.012.

- F. Yang, J. Huang, X. Yu, X. Cui, D. Metaxas, “University of Texas at Arlington,” 561–564, 2012.

- K.S.C. Kumar, B. Bhowmick, “An Application for Driver Drowsiness Identification based on Pupil Detection using IR Camera,” in: Tiwary, U. S., Siddiqui, T. J., Radhakrishna, M., and Tiwari, M. D., eds., Proceedings of the First International Conference on Intelligent Human Computer Interaction, Springer India, New Delhi: 73–82, 2009.

- V. Kazemi, J. Sullivan, “One millisecond face alignment with an ensemble of regression trees,” Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 1867–1874, 2014, doi:10.1109/CVPR.2014.241.

- X. Wang, J. Wu, H. Zun, “Design of vision detection and early warning system for fatigue driving based on DSP,” 68–70, 1377.

- F. Li, C.-H. Chen, G. Xu, L.P. Khoo, “Hierarchical Eye-Tracking Data Analytics for Human Fatigue Detection at a Traffic Control Center.,” IEEE Trans. Hum. Mach. Syst., 50(5), 465–474, 2020, doi:10.1109/THMS.2020.3016088.

- J. Ma, J. Zhang, Z. Gong, Y. Du, Study on Fatigue Driving Detection Model Based on Steering Operation Features and Eye Movement Features, 2018, doi:10.1109/CCSSE.2018.8724836.
