Efficient 2D Detection and Positioning of Complex Objects for Robotic Manipulation Using Fully Convolutional Neural Network

Volume 6, Issue 2, Page No 915–920, 2021

Adv. Sci. Technol. Eng. Syst. J. 6(2), 915–920 (2021)

DOI: 10.25046/aj0602104

Keywords: Machine Vision, Fully Convolutional Neural Net, U-Net, Machine Learning, Pick and Place

Programming industrial robots in real-life environments is a significant challenge for modern facilities. The “pick and place” task is undeniably one of the most common robot programming problems that need to be solved. At the beginning of a “pick and place” task, the objects to be picked must be detected and their positions determined precisely. In this paper, an advanced approach to the detection and positioning of various objects is introduced. The approach is based on two consecutive steps. First, the captured scene containing the objects of interest is transformed using a segmentation neural network. The output of the segmentation process is a schematic image in which the types and positions of objects are represented by gradient circles of various colors. Second, the positions of these circles are determined by finding the local maxima in the schematic image. The proposed approach is tested on a complex detection and positioning problem by evaluating its total accuracy.

1. Introduction

Automated systems have been developing rapidly for decades and have increasingly helped to improve reliability and productivity in all domains of industry. Machine vision, the family of methods that provide imaging-based and image-processing-based inspection and analysis, is an indispensable element of automation. Machine vision approaches are implemented in process control [1], automatic quality control [2], and especially in industrial robot programming and guidance [3].

Considering robot programming and guidance in an industrial environment, increasingly intelligent machines are being utilized to deal with various applications. These days, static and unchanging production environments are often replaced by dynamically adapting production plans and conditions. Assembly lines are therefore adjusted on a daily basis, and consequently, automated systems and industrial robots need to be capable of dealing with more general tasks (general-purpose robotics). Although most robotic applications are still developed analytically or based on expert knowledge of the application [4], some industrial robotics producers have begun to implement deep learning methods in their products, such as Keyence with its IV2 Vision Sensor. It is becoming generally recognized that deep learning methods can play a significant role, especially in general-purpose robotics [5].

Deep learning is a family of machine learning methods aimed at solving tasks that come naturally to human beings. Deep learning models are trained directly on the available task-specific data in order to learn a heuristic relation between the input data and the expected output. Various deep learning approaches have already been applied successfully to classification and detection tasks [6, 7] and are also utilized in other domains.

In general-purpose robotics, the “pick and place” task is the key problem to deal with. Generally, a “pick and place” task involves a robotic manipulator (or a group of manipulators) that picks a particular object of interest and places it in a specific location with a defined orientation.

In this contribution, the initial part of the “pick and place” problem is examined. More specifically, we deal with the grasp point or grasping pose, which defines how the end-effector of a robotic arm should be positioned in order to pick up the object efficiently. There is a broad group of grasp point detection techniques, which can be categorized either by the type of perception sensor or by the procedure used for object and grasp point detection and positioning. Current methods are described in the survey [8], and deep learning approaches to the detection of robotic grasping poses are summarized in the review [4]. In this contribution, we propose a rapid, efficient, and accurate system for finding the centers of multiple objects on flat and moving surfaces.

The key contributions of this article are as follows:

- Proposal of an efficient grasp point detection method for a robotic arm, capable of handling multiple types of objects. The method uses a conventional industrial camera as the input data source.

- As an important part of the method, proposal of a deep learning-based approach that transforms an RGB image of the scene of interest into a schematic image. In this image, the types of objects and the feasible positions of the grasp points are coded into gradient shapes of various colors. To the authors’ knowledge, this is the first application of this approach to grasp point detection.

- The proposed grasp point detection method is insensitive to changing light conditions and highly variable surroundings. In addition, it is efficient enough to run in real time on edge computing devices such as the NVIDIA Jetson Nano, Google Coral, or Intel Movidius.

The structure of the article is as follows. The aim of the work is formulated and the goals are defined in the next section. Then, a solution based on a deep learning approach is proposed. After that, a case study demonstrating the main features of the proposed solution is presented. Finally, the results are summarized and the article closes with conclusions. This paper is an extension of work originally presented at the 24th International Conference on System Theory, Control and Computing (ICSTCC) [9].

2. Problem Formulation

As stated in the preceding section, we deal with an essential task of industrial robotics called the “pick and place” problem. More specifically, we are interested in the first challenge of this problem, i.e., the detection and positioning of objects. As a highly relevant problem, the detection and positioning of objects of interest has been examined from many points of view [10, 11]. Due to the growing use of laser scanner technology (e.g., the Photoneo PhoXi 3D Laser Scanner or the Faro Focus 3D Laser Scanner), point clouds are now often used for object detection and positioning [12, 13]. Laser scanners combined with robust 3D object registration algorithms provide a strong tool for “pick and place” problems. Nevertheless, solutions based on point clouds are generally costly, and for some materials (shiny metal, glass, etc.) their accuracy decreases. In addition, many laser scanners provide framerates too low to be used with moving objects. Therefore, we propose an alternative solution based on a conventional monocular RGB industrial camera as the image acquisition sensor. Furthermore, to analyze the signal from the industrial camera, we propose a novel approach that combines a fully convolutional neural network with a classical image processing routine.

The proposed solution is intended to meet the requirements of the industrial sector, i.e., stable performance, insensitivity to light conditions, quick response, and reasonable cost. To demonstrate that these requirements are met, we perform a case study, which is summarized at the end of this article. The case study should fulfill the following conditions:

- Objects – up to four different complex objects should be detected and located.

- Conditions – Detection and positioning accuracy should not be affected by background surface change.

- Framerate – In order to be able to register moving objects, the framerate should exceed 10 frames per second.

- Hardware – The detection and positioning system should be based on hardware suitable for industrial applications. Moreover, its total cost should not exceed $500 for the system to be economically viable.
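The framerate condition corresponds to a time budget of 1000 / 10 = 100 ms per frame for the whole chain (image acquisition, network inference, and localization). A minimal sketch of how throughput could be measured against this budget is shown below; `process_frame` is a hypothetical callable standing in for the complete pipeline, and the warm-up count is an assumption.

```python
import time
from typing import Callable, Sequence

REQUIRED_FPS = 10.0  # framerate condition from the case-study requirements

def measure_fps(process_frame: Callable[[object], object],
                frames: Sequence[object], warmup: int = 2) -> float:
    """Average number of frames processed per second.

    The first `warmup` frames are processed but not timed, because the
    first inferences on an accelerator are often slower than the rest.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        process_frame(frame)
    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        process_frame(frame)
    elapsed = max(time.perf_counter() - start, 1e-12)  # guard against a zero interval
    return len(timed) / elapsed

def meets_framerate(fps: float, required: float = REQUIRED_FPS) -> bool:
    """The requirement states that the framerate should exceed the limit."""
    return fps > required

if __name__ == "__main__":
    # Hypothetical stand-in for acquisition + network inference + locator.
    def dummy_pipeline(frame):
        time.sleep(0.005)  # about 5 ms of simulated work per frame
        return frame

    fps = measure_fps(dummy_pipeline, list(range(22)))
    print(f"{fps:.1f} fps, requirement met: {meets_framerate(fps)}")
    print(f"frame budget: {1000 / REQUIRED_FPS:.0f} ms")
```

Timing on the target device itself (rather than a development PC) matters here, since the budget applies to the embedded processing unit.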

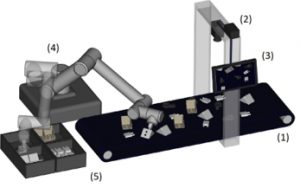

The layout of the task is depicted in Figure 1.

Figure 1: The arrangement of the task – the conveyor belt (1) brings the objects, the industrial camera (2) captures images of the area, the detection and positioning system (3) determines the grasp points for manipulation, and the robotic arm (4) places the objects in the desired positions (5)

3. Proposed Approach

The proposed approach to object detection and positioning consists of two parts. The first part scans the area and provides the visual data to be processed by the second part. The second part processes the data and outputs the detected objects and their positions. Using this information, a higher-level control system should be able to manipulate the objects in order to place them in the desired positions.

3.1. Camera sensor

In order to achieve a sufficient framerate, we propose to use an ordinary industrial monocular RGB sensor, equipped with a corresponding lens, as the source of the input image. Naturally, the camera and lens should be chosen according to the specific task (the size of the scanned scene, the lighting, the distance between the camera and the objects, etc.). Guidance on selecting a suitable camera and lens combination is available from machine vision vendors, e.g., the Basler Lens Selector provided by Basler AG.

3.2. Detection and positioning system

Various studies published in recent years show that convolutional neural network (CNN) topologies outperform classical image processing methods in object detection and classification tasks benchmarked on various datasets [14, 15]. Motivated by this, we propose a detection and positioning system that uses a CNN to transform the original RGB image of the monitored area into a specific schematic image. The main purpose of this operation is to create a graphic representation in which the positions of the detected objects are highlighted as gradient circles in defined colors, while the rest of the image is black. Specifically, each pixel of the output image is labeled with a real number in the range [0, 1], where 1 denotes the optimal and 0 the least suitable grasp point position. These labels are placed in the R, G, or B channel, or in all three channels of the CNN output, according to the type of object. Hence, the positions of the gradient circles represent not only the positions of the detected objects but also the exact points on the object bodies that are optimal for manipulation by a robotic arm (grasp points). The proposed approach is illustrated in Figure 2.

Figure 2: The proposed approach – the original RGB image of the scanned area is transformed with a convolutional neural network into the schematic image, where the optimal grasp point positions for manipulation are highlighted by gradient circles of various colors, each particular color then represents the type of the object
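To make the encoding concrete, the sketch below renders such a schematic image with NumPy. Each grasp point becomes a radial gradient disc whose value is 1 at the grasp point and falls linearly to 0 at the circle radius; the disc is written into one channel (red, green, or blue) or into all three (white), depending on the object type. The linear profile, the radius of 4 px, the 72 × 72 px map size, and the assignment of object types to colors are illustrative assumptions, not details given in the article.

```python
import numpy as np

# Hypothetical mapping of object type -> output channels (R=0, G=1, B=2).
# A white circle is encoded by writing the same gradient into all channels.
CLASS_CHANNELS = {
    "Obj1": (0,),        # red
    "Obj2": (1,),        # green
    "Obj3": (2,),        # blue
    "Obj4": (0, 1, 2),   # white
}

def draw_gradient_circle(image: np.ndarray, row: float, col: float,
                         radius: float, channels) -> None:
    """Draw a radial gradient disc into `image` in place.

    The value is 1 at (row, col) and decreases linearly to 0 at `radius`;
    where circles overlap, the larger value is kept.
    """
    h, w, _ = image.shape
    rows, cols = np.mgrid[0:h, 0:w]
    dist = np.hypot(rows - row, cols - col)
    gradient = np.clip(1.0 - dist / radius, 0.0, 1.0)
    for ch in channels:
        image[:, :, ch] = np.maximum(image[:, :, ch], gradient)

def render_schematic(grasp_points, size: int = 72, radius: float = 4.0) -> np.ndarray:
    """grasp_points: iterable of (object type, row, col) in output-map pixels."""
    image = np.zeros((size, size, 3), dtype=np.float32)  # black background
    for obj_type, row, col in grasp_points:
        draw_gradient_circle(image, row, col, radius, CLASS_CHANNELS[obj_type])
    return image

target = render_schematic([("Obj1", 10, 20), ("Obj4", 40, 50)])
print(target[10, 20], target[40, 50], target[0, 0])
```

Keeping the maximum where circles overlap preserves each peak, so two nearby objects of the same type still produce two separate maxima as long as their centers are not too close.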

The first step of the proposed procedure, i.e., the transformation of the RGB image into a graphical representation of the object positions, is the key element of our approach. We propose to implement a fully convolutional neural network arranged as an encoder-decoder. Such a network encodes the original input into a compact representation and then restores it using the decoder. If the process is successful, the decoder outputs a correctly transformed image. We believe that a correctly designed fully convolutional neural network is able to draw gradient circles of defined colors at the exact positions of the grasp points on the object bodies.

Therefore, in the next subsection, we introduce a specific fully convolutional neural network that transforms an original RGB image into a graphical representation in which object grasp points are represented as radial gradients of defined colors.

3.3. Fully convolutional neural network for image transformation

Many different convolutional neural network topologies, such as ResNet [16], SegNet [17], or PSPNet [18], have been proposed to deal with various image processing tasks. From this wide family of topologies, we select U-Net as the starting point of development.

Figure 3: The development of the proposed topology of a fully convolutional neural network – layers removed from the original U-Net are crossed

U-Net is a fully convolutional neural network initially proposed for image segmentation tasks in the biological and medical fields; it was later adapted to many other applications across different fields. The topology follows a typical encoder-decoder scheme with a bottleneck. In addition, it contains direct connections between corresponding parts of the encoder and the decoder, which allow the network to propagate contextual information to higher-resolution layers. The U-Net topology is adopted from [19].

We streamline U-Net to fit our task more efficiently. The original U-Net produces output data of the same spatial dimensions as the input data. Such a detailed output is not required for a “pick and place” problem in industrial applications. Hence, we reduce the decoder part of the U-Net topology. In our case, the output resolution is one quarter of the input resolution along each axis (72 × 72 px instead of 288 × 288 px), i.e., the output contains 16 times fewer pixels. This still provides sufficient accuracy for object detection and positioning, and the topology itself is less computationally demanding. The changes applied to the U-Net topology are shown in detail in Figure 3.
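The resolution arithmetic can be traced with a short sketch. It assumes four 2 × 2 max-pooling steps in the encoder, as in the original U-Net, but unlike the original it assumes "same"-padded convolutions, so that only pooling and upsampling change the spatial size. Under these assumptions, a 288 × 288 px input shrinks to 18 × 18 px at the bottleneck, and keeping two of the four 2× upsampling stages yields the 72 × 72 px output. The number of retained decoder stages is inferred from the stated input and output sizes, not read from Figure 3.

```python
def encoder_decoder_sizes(input_size: int, pool_steps: int, up_steps: int) -> list:
    """Trace the spatial size (in px) through a U-Net-like encoder-decoder.

    Assumes 'same'-padded convolutions, so only the 2x2 poolings (halving)
    and the 2x upsamplings (doubling) change the spatial size.
    """
    size = input_size
    sizes = [size]
    for _ in range(pool_steps):
        if size % 2:
            raise ValueError(f"size {size} cannot be halved exactly")
        size //= 2
        sizes.append(size)
    for _ in range(up_steps):
        size *= 2
        sizes.append(size)
    return sizes

full = encoder_decoder_sizes(288, pool_steps=4, up_steps=4)     # full decoder
reduced = encoder_decoder_sizes(288, pool_steps=4, up_steps=2)  # shortened decoder
print(full)     # [288, 144, 72, 36, 18, 36, 72, 144, 288]
print(reduced)  # [288, 144, 72, 36, 18, 36, 72]
print((288 * 288) / reduced[-1] ** 2)  # 16.0
```

The divisibility check is worth keeping: an input size that is not a multiple of 2^4 = 16 cannot pass through four exact halvings, which is one reason inputs are usually resized to such sizes.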

3.4. Locator for positioning of grasp points

Positioning of the gradient circles in the schematic image (the last step in Figure 2) amounts to finding the local maxima of each color used. The positions of these maxima directly represent the grasp points for manipulation by a robotic arm.

In general, local maxima in an array can be found in several ways. The most obvious solution is to find the indices of the values that are greater than all their neighbors. However, this approach is very sensitive to noise and small errors in the input data. Hence, it is more appropriate to use a maximum filter, which dilates the original array and merges neighboring local maxima that are closer than the size of the dilation. Coordinates where the original array equals the dilated array are returned as local maxima. The size of the dilation must be chosen; in the proposed locator, a suitable choice is the radius of the gradient circles.
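A minimal NumPy sketch of such a locator is given below. The maximum filter is implemented with `sliding_window_view` to avoid extra dependencies; `scipy.ndimage.maximum_filter` performs the same dilation. Several details are assumptions not stated in the article: the odd 5 px window (an odd size keeps the window centred), the threshold of 0.5 that suppresses the flat black background (every background pixel equals its own dilation), and the separation of the output into four color maps, where white is the per-pixel minimum of R, G, and B so that a white circle is not also reported as red, green, and blue.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def maximum_filter_2d(array: np.ndarray, size: int) -> np.ndarray:
    """Grey-scale dilation with a square size x size window (size must be odd)."""
    if size < 1 or size % 2 == 0:
        raise ValueError("size must be a positive odd integer")
    pad = size // 2
    padded = np.pad(np.asarray(array, dtype=float), pad,
                    mode="constant", constant_values=-np.inf)
    return sliding_window_view(padded, (size, size)).max(axis=(-2, -1))

def find_local_maxima(channel: np.ndarray, size: int = 5, threshold: float = 0.5):
    """(row, col) of pixels that equal their dilated value and exceed threshold.

    Note that a plateau of equal values yields several adjacent maxima.
    """
    channel = np.asarray(channel, dtype=float)
    mask = (channel == maximum_filter_2d(channel, size)) & (channel > threshold)
    return [(int(r), int(c)) for r, c in np.argwhere(mask)]

def split_color_maps(schematic: np.ndarray) -> dict:
    """Separate an H x W x 3 schematic image into red, green, blue and white maps."""
    white = schematic.min(axis=2)
    maps = {name: schematic[:, :, i] - white
            for i, name in enumerate(("red", "green", "blue"))}
    maps["white"] = white
    return maps

def locate_grasp_points(schematic: np.ndarray, size: int = 5,
                        threshold: float = 0.5) -> dict:
    """Local maxima of every color map, keyed by color name."""
    return {name: find_local_maxima(m, size, threshold)
            for name, m in split_color_maps(schematic).items()}

demo = np.zeros((20, 20, 3))
demo[5, 5, 0], demo[5, 6, 0] = 1.0, 0.8   # red peak at (5, 5)
demo[14, 12, :] = 0.9                      # white peak at (14, 12)
print(locate_grasp_points(demo))
# {'red': [(5, 5)], 'green': [], 'blue': [], 'white': [(14, 12)]}
```

Padding with negative infinity ensures that pixels at the image border are compared only with in-bounds neighbors.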

4. Case Study

The aim of this section is to demonstrate the features of our detection and positioning system by solving a particular task. The task is defined in the next subsection. Subsequently, we propose a hardware implementation of the system, and, as the final step, a fully convolutional neural network is trained to transform the original RGB images into a graphical representation of object types and positions.

4.1. Object detection and positioning task

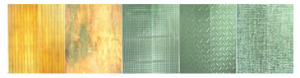

In this case study, the system has to detect and locate several different objects. Four types of objects, placed in various combinations on five different types of surfaces, are used. The objects of interest are shown in Figure 4 and the surfaces in Figure 5. The objects are of similar size; three of them are metallic and one is made of black plastic.

4.2. Hardware implementation

The system is composed of a camera sensor and a processing unit, which processes the data acquired by the RGB sensor in order to determine the types and positions of the objects of interest. In this case study, we use a Basler acA2500-14uc industrial RGB camera as the data acquisition device. This sensor provides up to 14 RGB frames per second at a resolution of 5 MPx. The camera is equipped with a Computar M3514-MP lens and monitors the 300 × 420 mm scan area from above at a distance of 500 mm.

Figure 4: Objects of interest – objects are labelled as Obj1 to Obj4

Figure 5: Various types of surfaces used in case study

The processing unit must be capable of processing images in real time, as mentioned above. For this case study, the NVIDIA Jetson Nano single-board computer is used. It combines a quad-core ARM Cortex-A57 CPU with a 128-core NVIDIA Maxwell GPU and 4 GB of RAM. Furthermore, it provides wide communication possibilities (USB 3.0, Gigabit Ethernet, GPIO). The total price of the components used for the hardware implementation is around $500.
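Because the grasp points are reported on the 72 × 72 px output map (see Figure 6), the achievable positioning resolution in the scene is bounded by the size of one map cell. The worked sketch below assumes that the camera image covers exactly the 300 × 420 mm scan area, that resizing to 288 × 288 px stretches both axes independently, and that the 420 mm side runs along the image columns; none of these details is stated in the article.

```python
from typing import Tuple

SCAN_X_MM = 420.0   # assumed to run along the image columns
SCAN_Y_MM = 300.0   # assumed to run along the image rows
MAP_SIZE = 72       # output map is MAP_SIZE x MAP_SIZE cells

CELL_X_MM = SCAN_X_MM / MAP_SIZE   # about 5.83 mm per cell
CELL_Y_MM = SCAN_Y_MM / MAP_SIZE   # about 4.17 mm per cell

def cell_to_mm(row: int, col: int) -> Tuple[float, float]:
    """Centre of an output-map cell as (x, y) in mm, measured from the
    corner of the scan area that corresponds to cell (0, 0).

    Ignores lens distortion and perspective; a calibrated camera model
    would be needed for precise robot coordinates.
    """
    if not (0 <= row < MAP_SIZE and 0 <= col < MAP_SIZE):
        raise ValueError("cell lies outside the output map")
    return (col + 0.5) * CELL_X_MM, (row + 0.5) * CELL_Y_MM

print(f"cell size: {CELL_X_MM:.2f} x {CELL_Y_MM:.2f} mm")  # cell size: 5.83 x 4.17 mm
print(cell_to_mm(0, 0))
```

Under these assumptions, a grasp point is quantized to within about ±2.9 mm along x and ±2.1 mm along y of the cell centre.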

4.3. Datasets

In this case study, we prepare 1021 original RGB images using various combinations of objects (Figure 4) and surfaces (Figure 5). To match the input layer of the CNN (Figure 3), we resize the images to 288 × 288 px. Then, we randomly divide the images into a training set (815 samples) and a testing set (206 samples).
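A reproducible random split of this kind can be sketched as follows. The file names and the fixed seed are hypothetical, since the article does not state how the split was drawn; 206 of 1021 samples is about 20 % of the data.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

def split_dataset(items: Sequence[T], n_test: int,
                  seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of `items` and return (training set, testing set)
    with exactly `n_test` testing samples."""
    if not 0 <= n_test <= len(items):
        raise ValueError("n_test must be between 0 and len(items)")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible split
    return shuffled[n_test:], shuffled[:n_test]

images = [f"img_{i:04d}.png" for i in range(1021)]  # hypothetical file names
train, test = split_dataset(images, n_test=206)
print(len(train), len(test))  # 815 206
```

Using a dedicated `random.Random` instance keeps the split independent of any other use of the global random generator.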

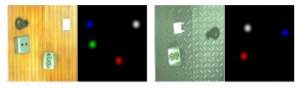

The most demanding part of the development follows: the target images for the training set (the graphical representations of the object types and positions) must be prepared manually. For each RGB image, we construct an artificial target image in which the optimal grasp point of each individual object in the original image is highlighted by a colored gradient circle. Four colors (red, green, blue, and white) are used, since we consider four types of objects in this case study. We developed a custom labeling application for preparing the target images. In this application, each input image is displayed and a human user labels all feasible grasp points using a computer mouse. The application then generates the target images. Examples of input-target pairs are shown in Figure 6.

Figure 6: Two input-target pairs in training set – input image resolution is 288×288 px, target image resolution is 72×72 px
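The labeling application is not described in detail. Because grasp points are clicked on the 288 × 288 px input images while the targets are 72 × 72 px, each clicked position must be scaled by 72/288 = 1/4 before a gradient circle is drawn around it. A minimal sketch of that step follows; the click record `(object type, x, y)` in input pixels and the mapping of a click to the containing target cell by truncation are assumptions.

```python
from typing import Dict, List, Tuple

INPUT_SIZE = 288                   # px, resized input image
TARGET_SIZE = 72                   # px, target (output) map
SCALE = TARGET_SIZE / INPUT_SIZE   # 0.25

Click = Tuple[str, float, float]   # hypothetical record: (object type, x, y) in input px

def clicks_to_target_cells(clicks: List[Click]) -> Dict[str, List[Tuple[int, int]]]:
    """Group clicked grasp points by object type and convert each one to the
    (row, col) cell of the target map that contains it (x = column, y = row)."""
    cells: Dict[str, List[Tuple[int, int]]] = {}
    for obj_type, x, y in clicks:
        if not (0 <= x < INPUT_SIZE and 0 <= y < INPUT_SIZE):
            raise ValueError(f"click ({x}, {y}) lies outside the input image")
        # x, y < 288 guarantees that the truncated cell index is at most 71
        cells.setdefault(obj_type, []).append((int(y * SCALE), int(x * SCALE)))
    return cells

print(clicks_to_target_cells([("Obj1", 100.0, 40.0), ("Obj3", 287.9, 0.0)]))
# {'Obj1': [(10, 25)], 'Obj3': [(0, 71)]}
```

Grouping by object type matches the color coding of the targets, where each type is drawn into its own channel or channels.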

4.4. Fully convolutional neural network training

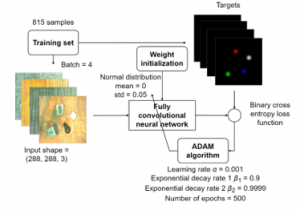

As the last step of the development, we train the network topology depicted in Figure 3.

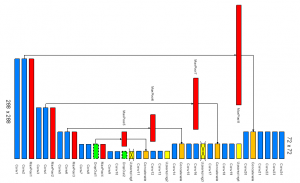

We use the Adam algorithm to optimize the network weights and biases, as it is generally considered to perform well in most cases [20]. The weights are initialized randomly from a Gaussian distribution. Training is repeated fifty times to reduce the influence of the stochastic nature of training, and the best instance is then evaluated. The training process and its parameters are depicted in Figure 7.

Figure 7: Training process
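The training protocol (Adam updates, Gaussian weight initialization, repeated independent runs, and selection of the best run) can be illustrated on a toy problem. The sketch below implements the standard Adam update with its usual default moment coefficients and applies it to a small quadratic loss instead of the network; the loss, the learning rate of 0.05, the 500 steps, and the use of the final loss as the selection criterion are illustrative assumptions. Deep learning frameworks provide Adam as a built-in optimizer (e.g., `torch.optim.Adam` in PyTorch).

```python
import math
import random
from typing import List, Tuple

def adam_step(w: List[float], g: List[float], m: List[float], v: List[float],
              t: int, lr: float = 1e-3, beta1: float = 0.9,
              beta2: float = 0.999, eps: float = 1e-8):
    """One Adam update at (1-based) step t; returns the new (w, m, v)."""
    m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]       # 1st moment
    v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]  # 2nd moment
    m_hat = [mi / (1 - beta1 ** t) for mi in m]                       # bias correction
    v_hat = [vi / (1 - beta2 ** t) for vi in v]
    w = [wi - lr * mh / (math.sqrt(vh) + eps)
         for wi, mh, vh in zip(w, m_hat, v_hat)]
    return w, m, v

TARGET = [1.0, -2.0, 0.5]  # minimizer of the toy loss (stands in for the network)

def loss_and_grad(w: List[float]) -> Tuple[float, List[float]]:
    """Toy quadratic loss sum((w - TARGET)^2) and its gradient."""
    diff = [wi - ti for wi, ti in zip(w, TARGET)]
    return sum(d * d for d in diff), [2.0 * d for d in diff]

def train_once(seed: int, steps: int = 500, lr: float = 0.05):
    """One training run from a Gaussian initialization; returns (final loss, weights)."""
    rng = random.Random(seed)
    w = [rng.gauss(0.0, 1.0) for _ in TARGET]  # Gaussian initialization
    m, v = [0.0] * len(w), [0.0] * len(w)
    for t in range(1, steps + 1):
        _, g = loss_and_grad(w)
        w, m, v = adam_step(w, g, m, v, t, lr=lr)
    return loss_and_grad(w)[0], w

def best_of_runs(n_runs: int = 50):
    """Repeat training with different seeds and keep the run with the lowest final loss."""
    return min((train_once(seed) for seed in range(n_runs)), key=lambda r: r[0])

best_loss, best_w = best_of_runs()
print(f"best final loss over 50 runs: {best_loss:.2e}")
```

For a real network, the selection would normally be based on a held-out validation loss rather than the training loss, so that the chosen run is not simply the one that overfits most.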

4.5. Results

We use a confusion matrix to evaluate the performance of the detection and positioning system. A prediction of the object type and grasp point position is counted as correct if the local maximum of the gradient circle of the corresponding color coincides with the labeled position of the particular object in the 72 × 72 px map, i.e., the map defined by the target image.
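One way to turn this criterion into the counts of a confusion matrix is sketched below. Each labeled grasp point is matched greedily to the nearest still-unmatched predicted maximum within `tol` cells (Chebyshev distance); the predicted class of the match fills the corresponding cell, unmatched labels count as predicted free space (missed objects), and leftover predictions count as actual free space (false detections). The greedy matching and the `tol` parameter are assumptions; `tol=0` corresponds to requiring each maximum to coincide exactly with its label.

```python
from typing import Dict, List, Tuple

CLASSES = ["Obj1", "Obj2", "Obj3", "Obj4"]
FREE = "Free space"
Point = Tuple[str, int, int]  # (object type, row, col) on the 72 x 72 map

def confusion_matrix(truth: List[Point], preds: List[Point],
                     tol: int = 0) -> Dict[str, Dict[str, int]]:
    """cm[actual][predicted] counts, including the free-space row and column."""
    labels = CLASSES + [FREE]
    cm = {a: {p: 0 for p in labels} for a in labels}
    unused = list(preds)
    for t_cls, t_r, t_c in truth:
        # nearest still-unmatched prediction within `tol` cells (Chebyshev distance)
        candidates = [(max(abs(r - t_r), abs(c - t_c)), i)
                      for i, (_, r, c) in enumerate(unused)
                      if max(abs(r - t_r), abs(c - t_c)) <= tol]
        if candidates:
            _, idx = min(candidates)
            cm[t_cls][unused.pop(idx)[0]] += 1
        else:
            cm[t_cls][FREE] += 1        # missed object
    for p_cls, _, _ in unused:
        cm[FREE][p_cls] += 1            # detection on free space
    return cm

truth = [("Obj1", 10, 10), ("Obj2", 30, 30)]
preds = [("Obj1", 10, 10), ("Obj3", 30, 30), ("Obj4", 60, 5)]
cm = confusion_matrix(truth, preds)
print(cm["Obj1"]["Obj1"], cm["Obj2"]["Obj3"], cm[FREE]["Obj4"])
```

Greedy matching is adequate when objects are well separated relative to `tol`; for densely packed objects, an optimal assignment (e.g., the Hungarian algorithm) would avoid order-dependent matches.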

The confusion matrix of the best network trained as described in the previous subsection, evaluated on the testing set, is summarized in Table 1. Note that the number of correctly predicted free spaces in the image is not given, because free space is essentially a black surface that cannot be explicitly evaluated. As seen in the table, the detection and positioning system achieves 100 % accuracy on the testing set. In addition, running on the Jetson Nano described above, the detection and positioning system processes 13 frames per second, which exceeds the 10 frames per second required in Section 2.

Table 1: Confusion matrix (206 images, 611 objects in total)

| Actual \ Predicted | Obj1 | Obj2 | Obj3 | Obj4 | Free space |
|---|---|---|---|---|---|
| Obj1 | 149 | 0 | 0 | 0 | 0 |
| Obj2 | 0 | 153 | 0 | 0 | 0 |
| Obj3 | 0 | 0 | 158 | 0 | 0 |
| Obj4 | 0 | 0 | 0 | 151 | 0 |
| Free space | 0 | 0 | 0 | 0 | Not evaluated |
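The totals in Table 1 can be cross-checked with a few lines of code. The sketch counts an object as correct only on the diagonal, treats missed objects (last column) and false detections on free space (last row) as errors, and excludes the unevaluated free-space/free-space cell; defining accuracy this way is an assumption, chosen so that both misses and false alarms would lower the score.

```python
# Rows: actual class; columns: predicted Obj1..Obj4, Free space (values from Table 1).
TABLE_1 = {
    "Obj1": [149, 0, 0, 0, 0],
    "Obj2": [0, 153, 0, 0, 0],
    "Obj3": [0, 0, 158, 0, 0],
    "Obj4": [0, 0, 0, 151, 0],
}
FREE_SPACE_ROW = [0, 0, 0, 0]  # false detections; the free/free cell is not evaluated

def summarize(table: dict, free_row: list):
    """Return (objects in total, correct predictions, accuracy, per-class recall)."""
    total = sum(sum(row) for row in table.values())
    correct = sum(row[i] for i, row in enumerate(table.values()))  # diagonal
    false_detections = sum(free_row)
    recall = {name: row[i] / sum(row) for i, (name, row) in enumerate(table.items())}
    accuracy = correct / (total + false_detections)
    return total, correct, accuracy, recall

total, correct, acc, recall = summarize(TABLE_1, FREE_SPACE_ROW)
print(total, correct, f"{acc:.1%}")  # 611 611 100.0%
```

The total of 611 objects agrees with the caption of Table 1.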

5. Conclusion

In this contribution, we introduced a novel engineering approach to object grasp point detection and positioning for “pick and place” applications. The approach is based on two consecutive steps. First, a fully convolutional neural network transforms the original input image of the monitored scene into a graphical representation of the types and positions of the objects present in the scene. Second, a locator analyzes this graphical representation to extract explicit information on the object types and positions.

We also performed a case study to demonstrate the proposed approach. In this study, the proposed system provided accurate grasp point positions for all four considered object types.

In future work, we will try to enhance the system in several ways. Most importantly, the approach should provide not only the positions of the grasp points but also the required pose of the robotic arm. This feature will be advantageous especially for clamp grippers. Apart from that, we will try to optimize the processing unit from both the hardware and the software point of view, in order to find a close-to-optimal topology of the fully convolutional neural network and hardware suitable for it.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

The work was supported by ERDF/ESF “Cooperation in Applied Research between the University of Pardubice and companies, in the Field of Positioning, Detection and Simulation Technology for Transport Systems (PosiTrans)” (No. CZ.02.1.01/0.0/0.0/17_049/0008394).

[1] Y. Cheng, M.A. Jafari, “Vision-Based Online Process Control in Manufacturing Applications,” IEEE Transactions on Automation Science and Engineering, 5(1), 140-153, 2008, doi:10.1109/TASE.2007.912058.

[2] M. Bahaghighat, L. Akbari, Q. Xin, “A Machine Learning-Based Approach for Counting Blister Cards Within Drug Packages,” IEEE Access, 7, 83785-83796, 2019, doi:10.1109/ACCESS.2019.2924445.

[3] H. Sheng, S. Wei, X. Yu, L. Tang, “Research on Binocular Visual System of Robotic Arm Based on Improved SURF Algorithm,” IEEE Sensors Journal, 20(20), 11849-11855, 2020, doi:10.1109/JSEN.2019.2951601.

[4] S. Caldera, A. Rassau, D. Chai, “Review of Deep Learning Methods in Robotic Grasp Detection,” Multimodal Technologies and Interaction, 2(3), 2018, doi:10.3390/mti2030057.

[5] R. Miyajima, “Deep Learning Triggers a New Era in Industrial Robotics,” IEEE MultiMedia, 24(4), 91-96, 2017, doi:10.1109/MMUL.2017.4031311.

[6] S. Li, W. Song, L. Fang, Y. Chen, P. Ghamisi, J.A. Benediktsson, “Deep Learning for Hyperspectral Image Classification: An Overview,” IEEE Transactions on Geoscience and Remote Sensing, 57(9), 6690-6709, 2019, doi:10.1109/TGRS.2019.2907932.

[7] F. Xing, Y. Xie, H. Su, F. Liu, L. Yang, “Deep Learning in Microscopy Image Analysis: A Survey,” IEEE Transactions on Neural Networks and Learning Systems, 29(10), 4550-4568, 2018, doi:10.1109/TNNLS.2017.2766168.

[8] A. Björnsson, M. Jonsson, K. Johansen, “Automated material handling in composite manufacturing using pick-and-place systems – a review,” Robotics and Computer-Integrated Manufacturing, 51, 222-229, 2018, doi:10.1016/j.rcim.2017.12.003.

[9] P. Dolezel, D. Stursa, D. Honc, “Rapid 2D Positioning of Multiple Complex Objects for Pick and Place Application Using Convolutional Neural Network,” in 2020 24th International Conference on System Theory, Control and Computing (ICSTCC), 213-217, 2020, doi:10.1109/ICSTCC50638.2020.9259696.

[10] C. Papaioannidis, V. Mygdalis, I. Pitas, “Domain-Translated 3D Object Pose Estimation,” IEEE Transactions on Image Processing, 29, 9279-9291, 2020, doi:10.1109/TIP.2020.3025447.

[11] J. Pyo, J. Cho, S. Kang, K. Kim, “Precise pose estimation using landmark feature extraction and blob analysis for bin picking,” in 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), 494-496, 2017, doi:10.1109/URAI.2017.7992786.

[12] J. Kim, H. Kim, J.-I. Park, “An Analysis of Factors Affecting Point Cloud Registration for Bin Picking,” in 2020 International Conference on Electronics, Information, and Communication (ICEIC), 1-4, 2020, doi:10.1109/ICEIC49074.2020.9051361.

[13] P. Dolezel, J. Pidanic, T. Zalabsky, M. Dvorak, “Bin Picking Success Rate Depending on Sensor Sensitivity,” in 2019 20th International Carpathian Control Conference (ICCC), 1-6, 2019, doi:10.1109/CarpathianCC.2019.8766009.

[14] S. Krebs, B. Duraisamy, F. Flohr, “A survey on leveraging deep neural networks for object tracking,” in 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), 411-418, 2017, doi:10.1109/ITSC.2017.8317904.

[15] Y. Xu, X. Zhou, S. Chen, F. Li, “Deep learning for multiple object tracking: a survey,” IET Computer Vision, 13(4), 355-368, 2019, doi:10.1049/iet-cvi.2018.5598.

[16] K. He, X. Zhang, S. Ren, J. Sun, “Deep Residual Learning for Image Recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770-778, 2016, doi:10.1109/CVPR.2016.90.

[17] V. Badrinarayanan, A. Kendall, R. Cipolla, “SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(12), 2481-2495, 2017, doi:10.1109/TPAMI.2016.2644615.

[18] H. Zhao, J. Shi, X. Qi, X. Wang, J. Jia, “Pyramid Scene Parsing Network,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 6230-6239, 2017, doi:10.1109/CVPR.2017.660.

[19] O. Ronneberger, P. Fischer, T. Brox, “U-Net: Convolutional Networks for Biomedical Image Segmentation,” in Medical Image Computing and Computer-Assisted Intervention (MICCAI), 234-241, 2015, doi:10.1007/978-3-319-24574-4_28.

[20] E.M. Dogo, O.J. Afolabi, N.I. Nwulu, B. Twala, C.O. Aigbavboa, “A Comparative Analysis of Gradient Descent-Based Optimization Algorithms on Convolutional Neural Networks,” in 2018 International Conference on Computational Techniques, Electronics and Mechanical Systems (CTEMS), 92-99, 2018, doi:10.1109/CTEMS.2018.8769211.


- Radwan Qasrawi, Stephanny VicunaPolo, Diala Abu Al-Halawa, Sameh Hallaq, Ziad Abdeen, "Predicting School Children Academic Performance Using Machine Learning Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 08–15, 2021. doi: 10.25046/aj060502

- Zhiyuan Chen, Howe Seng Goh, Kai Ling Sin, Kelly Lim, Nicole Ka Hei Chung, Xin Yu Liew, "Automated Agriculture Commodity Price Prediction System with Machine Learning Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 4, pp. 376–384, 2021. doi: 10.25046/aj060442

- Hathairat Ketmaneechairat, Maleerat Maliyaem, Chalermpong Intarat, "Kamphaeng Saen Beef Cattle Identification Approach using Muzzle Print Image", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 4, pp. 110–122, 2021. doi: 10.25046/aj060413

- Md Mahmudul Hasan, Nafiul Hasan, Dil Afroz, Ferdaus Anam Jibon, Md. Arman Hossen, Md. Shahrier Parvage, Jakaria Sulaiman Aongkon, "Electroencephalogram Based Medical Biometrics using Machine Learning: Assessment of Different Color Stimuli", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 3, pp. 27–34, 2021. doi: 10.25046/aj060304

- Md Mahmudul Hasan, Nafiul Hasan, Mohammed Saud A Alsubaie, "Development of an EEG Controlled Wheelchair Using Color Stimuli: A Machine Learning Based Approach", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 754–762, 2021. doi: 10.25046/aj060287

- Antoni Wibowo, Inten Yasmina, Antoni Wibowo, "Food Price Prediction Using Time Series Linear Ridge Regression with The Best Damping Factor", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 694–698, 2021. doi: 10.25046/aj060280

- Javier E. Sánchez-Galán, Fatima Rangel Barranco, Jorge Serrano Reyes, Evelyn I. Quirós-McIntire, José Ulises Jiménez, José R. Fábrega, "Using Supervised Classification Methods for the Analysis of Multi-spectral Signatures of Rice Varieties in Panama", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 552–558, 2021. doi: 10.25046/aj060262

- Phillip Blunt, Bertram Haskins, "A Model for the Application of Automatic Speech Recognition for Generating Lesson Summaries", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 526–540, 2021. doi: 10.25046/aj060260

- Sebastianus Bara Primananda, Sani Muhamad Isa, "Forecasting Gold Price in Rupiah using Multivariate Analysis with LSTM and GRU Neural Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 245–253, 2021. doi: 10.25046/aj060227

- Byeongwoo Kim, Jongkyu Lee, "Fault Diagnosis and Noise Robustness Comparison of Rotating Machinery using CWT and CNN", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1279–1285, 2021. doi: 10.25046/aj0601146

- Md Mahmudul Hasan, Nafiul Hasan, Mohammed Saud A Alsubaie, Md Mostafizur Rahman Komol, "Diagnosis of Tobacco Addiction using Medical Signal: An EEG-based Time-Frequency Domain Analysis Using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 842–849, 2021. doi: 10.25046/aj060193

- Reem Bayari, Ameur Bensefia, "Text Mining Techniques for Cyberbullying Detection: State of the Art", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 783–790, 2021. doi: 10.25046/aj060187

- Inna Valieva, Iurii Voitenko, Mats Björkman, Johan Åkerberg, Mikael Ekström, "Multiple Machine Learning Algorithms Comparison for Modulation Type Classification Based on Instantaneous Values of the Time Domain Signal and Time Series Statistics Derived from Wavelet Transform", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 658–671, 2021. doi: 10.25046/aj060172

- Carlos López-Bermeo, Mauricio González-Palacio, Lina Sepúlveda-Cano, Rubén Montoya-Ramírez, César Hidalgo-Montoya, "Comparison of Machine Learning Parametric and Non-Parametric Techniques for Determining Soil Moisture: Case Study at Las Palmas Andean Basin", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 636–650, 2021. doi: 10.25046/aj060170

- Ndiatenda Ndou, Ritesh Ajoodha, Ashwini Jadhav, "A Case Study to Enhance Student Support Initiatives Through Forecasting Student Success in Higher-Education", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 230–241, 2021. doi: 10.25046/aj060126

- Lonia Masangu, Ashwini Jadhav, Ritesh Ajoodha, "Predicting Student Academic Performance Using Data Mining Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 153–163, 2021. doi: 10.25046/aj060117

- Sara Ftaimi, Tomader Mazri, "Handling Priority Data in Smart Transportation System by using Support Vector Machine Algorithm", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1422–1427, 2020. doi: 10.25046/aj0506172

- Othmane Rahmaoui, Kamal Souali, Mohammed Ouzzif, "Towards a Documents Processing Tool using Traceability Information Retrieval and Content Recognition Through Machine Learning in a Big Data Context", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1267–1277, 2020. doi: 10.25046/aj0506151

- Puttakul Sakul-Ung, Amornvit Vatcharaphrueksadee, Pitiporn Ruchanawet, Kanin Kearpimy, Hathairat Ketmaneechairat, Maleerat Maliyaem, "Overmind: A Collaborative Decentralized Machine Learning Framework", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 280–289, 2020. doi: 10.25046/aj050634

- Pamela Zontone, Antonio Affanni, Riccardo Bernardini, Leonida Del Linz, Alessandro Piras, Roberto Rinaldo, "Supervised Learning Techniques for Stress Detection in Car Drivers", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 22–29, 2020. doi: 10.25046/aj050603

- Kodai Kitagawa, Koji Matsumoto, Kensuke Iwanaga, Siti Anom Ahmad, Takayuki Nagasaki, Sota Nakano, Mitsumasa Hida, Shogo Okamatsu, Chikamune Wada, "Posture Recognition Method for Caregivers during Postural Change of a Patient on a Bed using Wearable Sensors", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 1093–1098, 2020. doi: 10.25046/aj0505133

- Khalid A. AlAfandy, Hicham Omara, Mohamed Lazaar, Mohammed Al Achhab, "Using Classic Networks for Classifying Remote Sensing Images: Comparative Study", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 770–780, 2020. doi: 10.25046/aj050594

- Khalid A. AlAfandy, Hicham, Mohamed Lazaar, Mohammed Al Achhab, "Investment of Classic Deep CNNs and SVM for Classifying Remote Sensing Images", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 652–659, 2020. doi: 10.25046/aj050580

- Rajesh Kumar, Geetha S, "Malware Classification Using XGboost-Gradient Boosted Decision Tree", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 536–549, 2020. doi: 10.25046/aj050566

- Nghia Duong-Trung, Nga Quynh Thi Tang, Xuan Son Ha, "Interpretation of Machine Learning Models for Medical Diagnosis", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 469–477, 2020. doi: 10.25046/aj050558

- Oumaima Terrada, Soufiane Hamida, Bouchaib Cherradi, Abdelhadi Raihani, Omar Bouattane, "Supervised Machine Learning Based Medical Diagnosis Support System for Prediction of Patients with Heart Disease", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 269–277, 2020. doi: 10.25046/aj050533

- Haytham Azmi, "FPGA Acceleration of Tree-based Learning Algorithms", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 237–244, 2020. doi: 10.25046/aj050529

- Hicham Moujahid, Bouchaib Cherradi, Oussama El Gannour, Lhoussain Bahatti, Oumaima Terrada, Soufiane Hamida, "Convolutional Neural Network Based Classification of Patients with Pneumonia using X-ray Lung Images", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 167–175, 2020. doi: 10.25046/aj050522

- Young-Jin Park, Hui-Sup Cho, "A Method for Detecting Human Presence and Movement Using Impulse Radar", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 770–775, 2020. doi: 10.25046/aj050491

- Anouar Bachar, Noureddine El Makhfi, Omar EL Bannay, "Machine Learning for Network Intrusion Detection Based on SVM Binary Classification Model", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 638–644, 2020. doi: 10.25046/aj050476

- Adonis Santos, Patricia Angela Abu, Carlos Oppus, Rosula Reyes, "Real-Time Traffic Sign Detection and Recognition System for Assistive Driving", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 600–611, 2020. doi: 10.25046/aj050471

- Amar Choudhary, Deependra Pandey, Saurabh Bhardwaj, "Overview of Solar Radiation Estimation Techniques with Development of Solar Radiation Model Using Artificial Neural Network", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 589–593, 2020. doi: 10.25046/aj050469

- Maroua Abdellaoui, Dounia Daghouj, Mohammed Fattah, Younes Balboul, Said Mazer, Moulhime El Bekkali, "Artificial Intelligence Approach for Target Classification: A State of the Art", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 445–456, 2020. doi: 10.25046/aj050453

- Shahab Pasha, Jan Lundgren, Christian Ritz, Yuexian Zou, "Distributed Microphone Arrays, Emerging Speech and Audio Signal Processing Platforms: A Review", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 331–343, 2020. doi: 10.25046/aj050439

- Ilias Kalathas, Michail Papoutsidakis, Chistos Drosos, "Optimization of the Procedures for Checking the Functionality of the Greek Railways: Data Mining and Machine Learning Approach to Predict Passenger Train Immobilization", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 287–295, 2020. doi: 10.25046/aj050435

- Yosaphat Catur Widiyono, Sani Muhamad Isa, "Utilization of Data Mining to Predict Non-Performing Loan", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 252–256, 2020. doi: 10.25046/aj050431

- Hai Thanh Nguyen, Nhi Yen Kim Phan, Huong Hoang Luong, Trung Phuoc Le, Nghi Cong Tran, "Efficient Discretization Approaches for Machine Learning Techniques to Improve Disease Classification on Gut Microbiome Composition Data", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 547–556, 2020. doi: 10.25046/aj050368

- Ruba Obiedat, "Risk Management: The Case of Intrusion Detection using Data Mining Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 529–535, 2020. doi: 10.25046/aj050365

- Krina B. Gabani, Mayuri A. Mehta, Stephanie Noronha, "Racial Categorization Methods: A Survey", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 388–401, 2020. doi: 10.25046/aj050350

- Dennis Luqman, Sani Muhamad Isa, "Machine Learning Model to Identify the Optimum Database Query Execution Platform on GPU Assisted Database", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 214–225, 2020. doi: 10.25046/aj050328

- Gillala Rekha, Shaveta Malik, Amit Kumar Tyagi, Meghna Manoj Nair, "Intrusion Detection in Cyber Security: Role of Machine Learning and Data Mining in Cyber Security", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 72–81, 2020. doi: 10.25046/aj050310

- Ahmed EL Orche, Mohamed Bahaj, "Approach to Combine an Ontology-Based on Payment System with Neural Network for Transaction Fraud Detection", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 551–560, 2020. doi: 10.25046/aj050269

- Bokyoon Na, Geoffrey C Fox, "Object Classifications by Image Super-Resolution Preprocessing for Convolutional Neural Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 476–483, 2020. doi: 10.25046/aj050261

- Johannes Linden, Xutao Wang, Stefan Forsstrom, Tingting Zhang, "Productify News Article Classification Model with Sagemaker", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 13–18, 2020. doi: 10.25046/aj050202

- Michael Wenceslaus Putong, Suharjito, "Classification Model of Contact Center Customers Emails Using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 1, pp. 174–182, 2020. doi: 10.25046/aj050123

- Rehan Ullah Khan, Ali Mustafa Qamar, Mohammed Hadwan, "Quranic Reciter Recognition: A Machine Learning Approach", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 173–176, 2019. doi: 10.25046/aj040621

- Mehdi Guessous, Lahbib Zenkouar, "An ML-optimized dRRM Solution for IEEE 802.11 Enterprise Wlan Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 19–31, 2019. doi: 10.25046/aj040603

- Toshiyasu Kato, Yuki Terawaki, Yasushi Kodama, Teruhiko Unoki, Yasushi Kambayashi, "Estimating Academic results from Trainees’ Activities in Programming Exercises Using Four Types of Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 5, pp. 321–326, 2019. doi: 10.25046/aj040541

- Nindhia Hutagaol, Suharjito, "Predictive Modelling of Student Dropout Using Ensemble Classifier Method in Higher Education", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 206–211, 2019. doi: 10.25046/aj040425

- Fernando Hernández, Roberto Vega, Freddy Tapia, Derlin Morocho, Walter Fuertes, "Early Detection of Alzheimer’s Using Digital Image Processing Through Iridology, An Alternative Method", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 3, pp. 126–137, 2019. doi: 10.25046/aj040317

- Abba Suganda Girsang, Andi Setiadi Manalu, Ko-Wei Huang, "Feature Selection for Musical Genre Classification Using a Genetic Algorithm", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 2, pp. 162–169, 2019. doi: 10.25046/aj040221

- Konstantin Mironov, Ruslan Gayanov, Dmiriy Kurennov, "Observing and Forecasting the Trajectory of the Thrown Body with use of Genetic Programming", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 1, pp. 248–257, 2019. doi: 10.25046/aj040124

- Bok Gyu Han, Hyeon Seok Yang, Ho Gyeong Lee, Young Shik Moon, "Low Contrast Image Enhancement Using Convolutional Neural Network with Simple Reflection Model", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 1, pp. 159–164, 2019. doi: 10.25046/aj040115

- Zheng Xie, Chaitanya Gadepalli, Farideh Jalalinajafabadi, Barry M.G. Cheetham, Jarrod J. Homer, "Machine Learning Applied to GRBAS Voice Quality Assessment", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 6, pp. 329–338, 2018. doi: 10.25046/aj030641

- Richard Osei Agjei, Emmanuel Awuni Kolog, Daniel Dei, Juliet Yayra Tengey, "Emotional Impact of Suicide on Active Witnesses: Predicting with Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 5, pp. 501–509, 2018. doi: 10.25046/aj030557

- Sudipta Saha, Aninda Saha, Zubayr Khalid, Pritam Paul, Shuvam Biswas, "A Machine Learning Framework Using Distinctive Feature Extraction for Hand Gesture Recognition", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 5, pp. 72–81, 2018. doi: 10.25046/aj030510

- Charles Frank, Asmail Habach, Raed Seetan, Abdullah Wahbeh, "Predicting Smoking Status Using Machine Learning Algorithms and Statistical Analysis", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 2, pp. 184–189, 2018. doi: 10.25046/aj030221

- Sehla Loussaief, Afef Abdelkrim, "Machine Learning framework for image classification", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 1, pp. 1–10, 2018. doi: 10.25046/aj030101

- Ruijian Zhang, Deren Li, "Applying Machine Learning and High Performance Computing to Water Quality Assessment and Prediction", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 6, pp. 285–289, 2017. doi: 10.25046/aj020635

- Batoul Haidar, Maroun Chamoun, Ahmed Serhrouchni, "A Multilingual System for Cyberbullying Detection: Arabic Content Detection using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 6, pp. 275–284, 2017. doi: 10.25046/aj020634

- Yuksel Arslan, Abdussamet Tanıs, Huseyin Canbolat, "A Relational Database Model and Tools for Environmental Sound Recognition", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 6, pp. 145–150, 2017. doi: 10.25046/aj020618

- Loretta Henderson Cheeks, Ashraf Gaffar, Mable Johnson Moore, "Modeling Double Subjectivity for Gaining Programmable Insights: Framing the Case of Uber", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1677–1692, 2017. doi: 10.25046/aj0203209

- Moses Ekpenyong, Daniel Asuquo, Samuel Robinson, Imeh Umoren, Etebong Isong, "Soft Handoff Evaluation and Efficient Access Network Selection in Next Generation Cellular Systems", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1616–1625, 2017. doi: 10.25046/aj0203201

- Rogerio Gomes Lopes, Marcelo Ladeira, Rommel Novaes Carvalho, "Use of machine learning techniques in the prediction of credit recovery", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1432–1442, 2017. doi: 10.25046/aj0203179

- Daniel Fraunholz, Marc Zimmermann, Hans Dieter Schotten, "Towards Deployment Strategies for Deception Systems", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 3, pp. 1272–1279, 2017. doi: 10.25046/aj0203161

- Arsim Susuri, Mentor Hamiti, Agni Dika, "Detection of Vandalism in Wikipedia using Metadata Features – Implementation in Simple English and Albanian sections", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 4, pp. 1–7, 2017. doi: 10.25046/aj020401

- Adewale Opeoluwa Ogunde, Ajibola Rasaq Olanbo, "A Web-Based Decision Support System for Evaluating Soil Suitability for Cassava Cultivation", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 1, pp. 42–50, 2017. doi: 10.25046/aj020105

- Arsim Susuri, Mentor Hamiti, Agni Dika, "The Class Imbalance Problem in the Machine Learning Based Detection of Vandalism in Wikipedia across Languages", Advances in Science, Technology and Engineering Systems Journal, vol. 2, no. 1, pp. 16–22, 2016. doi: 10.25046/aj020103