Super Resolution Based Underwater Image Enhancement by Illumination Adjustment and Color Correction with Fusion Technique

Volume 6, Issue 2, Page No 36-42, 2021

Author’s Name: Md. Ashfaqul Islam1,a), Maisha Hasnin2, Nayeem Iftakhar3, Md. Mushfiqur Rahman4

1Department of Electrical and Electronic Engineering, Bangladesh Army University of Engineering and Technology (BAUET), Natore, 6431, Bangladesh

2Department of Information and Communication Technology, Bangladesh University of Professionals (BUP), Dhaka, 1216, Bangladesh

3Department of Computer Science and Engineering, Bangladesh Army University of Engineering and Technology (BAUET), Natore, 6431, Bangladesh

4Department of Computer Science and Engineering, Rajshahi University of Engineering and Technology (RUET), Rajshahi, 6204, Bangladesh

a)Author to whom correspondence should be addressed. E-mail: niloy064@gmail.com

Adv. Sci. Technol. Eng. Syst. J. 6(2), 36-42 (2021); DOI: 10.25046/aj060205

Keywords: Underwater Image, Illumination Adjustment, Color Correction, Fusion, Neural Network, Super Resolution

Underwater photographs tend to be of low quality, mainly because the propagated light is attenuated by absorption and scattering. Absorption significantly reduces the light energy, while scattering changes the light propagation path. Together they produce a foggy appearance and reduced contrast, so that distant objects look misty. An enhancement technique is therefore needed to obtain useful results from such images. We propose an efficient technique that enhances images captured underwater by combining a fusion-based method with super-resolution. The enhancement has two steps: illumination adjustment and color correction. The fusion technique then takes the outputs of illumination adjustment and color correction as its two inputs and combines them using their maximum coefficient values. Finally, super-resolution is applied to the fused output image. In the super-resolution procedure, low-resolution and high-resolution images are used, followed by a bi-cubic interpolation algorithm and a VDSR (very-deep super-resolution) neural network, to obtain the most effective result from an obscure underwater image. The result is a new, efficient, high-quality enhancement method for obscure underwater images.

Received: 08 November 2020, Accepted: 17 February 2021, Published Online: 10 March 2021

1. Introduction

Underwater photographs have been widely used in studies of marine life in recent years. Underwater photography is also a primary concern in several branches of science and technology, such as inspection of underwater infrastructure and cables, object tracking, underwater vessel control, marine biology research, and archeology [1]. Underwater images are characterized mainly by poor visibility, because light is attenuated exponentially as it travels through water [2]. In ordinary seawater images, objects more than ten meters away are almost indistinguishable and colors are faded [1]. Water absorbs light and reduces the energy of the light rays, which results in under-exposed images [3]. The color cast arises because different wavelengths of light are absorbed at different rates [4]. Underwater image processing therefore faces three key challenges: color cast, fuzz, and under-exposure. These must be addressed to recover relatively true color and a natural appearance, making the captured photographs more suitable for observation [5]. This can be done by either image restoration or image enhancement [6]. To enhance underwater images, it is important to develop algorithms such as illumination adjustment, color correction, fusion, and restoration that give an effective result from a non-uniform image. We apply super-resolution to improve the visual appearance of the image by generating higher-resolution images from low-resolution ones [4].

2. Previous Research Work

A great deal of work has already been done on improving photographs taken in underwater settings. Three major problems occur in underwater photography: color cast, under-exposure, and fuzz. In [1], the authors propose a method with two components: color correction and illumination adjustment. To overcome the color cast they use a color enhancement technique, and then follow the Retinex model to adjust the illumination by successively applying gamma correction to, and removing, the illumination map. The approach of [7] removes haze from underwater photographs without requiring specialized hardware or knowledge of the underwater conditions or scene structure. It relies on the fusion of multiple inputs, but the two inputs to be merged are derived by contrast-correcting and sharpening a white-balanced version of a single native input image [7]. White balancing removes the color cast to give the underwater pictures a realistic appearance, and the multi-scale implementation of the fusion gives an artifact-free blending. To markedly improve the color of underwater images, the authors of [8] used contrast stretching and the HSI color space. The authors of [1] also implemented a fusion approach that used a global min-max windowing strategy to maximize contrast. In [6], the authors used histogram stretching and CLAHE for color correction and contrast enhancement of low-contrast images. The authors of [9] applied an unsupervised color correction method to increase the resolution of a low-quality picture.

We aim to improve the accuracy of underwater images because they have been used in many research areas in recent years. The previous works reviewed above applied these techniques separately. In our work, we combine illumination adjustment and color correction with a fusion technique and super resolution to obtain better output than those works.

In this article, we work through the procedures for enhancing an underwater image. We first apply illumination adjustment and color correction. The fusion technique is then applied to the images obtained by adjusting the illumination and correcting the color, and finally the super-resolution process is applied to the fused output image to get the most effective result from the input image.

3. Proposed Architecture

The proposed architecture has four parts: illumination adjustment (Section 3.1), color correction (Section 3.2), the fusion technique (Section 3.3), and a brief description of the super-resolution process (Section 3.4).

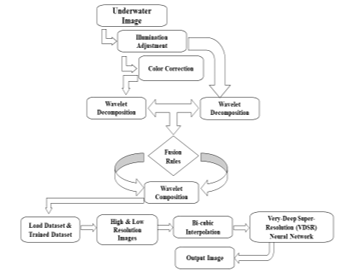

Figure 1: Proposed System Architecture.

3.1. Illumination Adjustment

Illumination adjustment compensates for the light lost in water, so that objects in the scene are lit well enough to be visible. An M×N underwater image can be described in terms of the illumination of the scene by an illuminant [6].

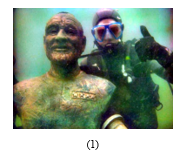

This is input image 1, which is used in the steps below.

In the illumination adjustment process for removing the illumination, we first use a grayscale algorithm to convert the original image into a grayscale image.

We then apply Adaptive Histogram Equalization to the grayscale image. It adjusts the local contrast of each pixel based on its neighboring area and is essentially used to increase the image contrast.

To correct the image luminance, we subtract the background from the adaptive-histogram-equalized image and apply the gamma correction algorithm to the result. We then apply a median filter, which is generally used to remove noise (unwanted pixels) from an image. The whole sequence, from background subtraction to median filtering, is repeated twice more (three times in total) in order to convert back to the image's RGB color space.

After that, the three filtered results are combined with the illumination-adjusted grayscale image to produce the illumination-adjusted RGB image.
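
The grayscale, gamma correction, and median filtering steps above can be sketched in plain Python. This is only an illustrative sketch, not the authors' implementation: the luminance weights, the gamma value of 0.5, the 3x3 window, and the tiny test image are all assumptions, and the Adaptive Histogram Equalization and background subtraction steps are omitted because the text does not specify how they are computed.

```python
def to_gray(rgb):
    """Convert an RGB image (rows of (r, g, b) tuples, 0-255) to grayscale."""
    # Assumed luminance weights (ITU-R BT.601); the paper does not state them.
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def gamma_correct(gray, gamma):
    """Apply out = 255 * (in / 255) ** gamma to every pixel."""
    return [[255.0 * (p / 255.0) ** gamma for p in row] for row in gray]


def median3x3(gray):
    """3x3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = sorted(
                gray[j][i]
                for j in range(max(0, y - 1), min(h, y + 2))
                for i in range(max(0, x - 1), min(w, x + 2))
            )
            # Middle element (upper median when the window size is even).
            row.append(window[len(window) // 2])
        out.append(row)
    return out


# Hypothetical 3x3 dark image with one isolated bright outlier in the centre.
rgb = [[(10, 20, 30)] * 3 for _ in range(3)]
rgb[1][1] = (255, 255, 255)

gray = to_gray(rgb)
bright = gamma_correct(gray, 0.5)   # gamma < 1 brightens dark pixels (assumed value)
clean = median3x3(bright)           # the outlier is replaced by its neighbourhood median
```

With a gamma below 1 the dark background is lifted, and the median filter removes the single outlier without blurring the flat region around it.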

The same procedure is shown for a second input image for better understanding.

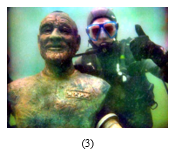

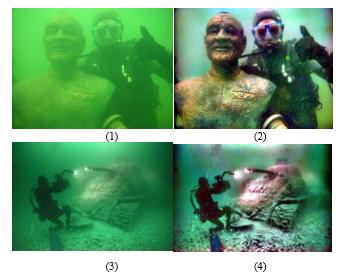

Figure 2: (1) Input image 1, (2) Gray scale conversion of input image 1, (3) Adaptive Histogram Equalized image 1, (4) Illumination adjusted gray scale image 1, (5) Illumination adjusted output image 1, (6) Input image 2, (7) Gray scale conversion of input image 2, (8) Adaptive Histogram Equalized image 2, (9) Illumination adjusted gray scale image 2 (10) Illumination adjusted output image 2

3.2. Color Correction

In the color correction procedure, the output of the illumination adjustment is used as the input image.

Underwater images suffer from low contrast because of scattering in the water. First, the RGB color space of the illumination-adjusted image is converted into HSV (Hue, Saturation, Value).

The HSV image is then processed to bring out the most visible portion of the output at maximum luminance. Histogram Equalization is then applied to the processed image; it is a useful technique for increasing contrast by balancing the image intensities.

However, Histogram Equalization sometimes degrades parts of the image and gives a non-uniform result. For this reason, we apply Adaptive Histogram Equalization to the histogram-equalized image. Adaptive Histogram Equalization is an improved form of Histogram Equalization.

Adaptive Histogram Equalization considers only a small region at a time and enhances the contrast of that region based on the CDF of its surroundings. Histogram Equalization, by contrast, measures the intensity distribution of the image as a whole, and in particular changes the color of each RGB channel.
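
As a rough illustration of the CDF-based mapping described above, the sketch below converts pixels to HSV with Python's standard `colorsys` module, equalizes the V channel globally, and converts back to RGB. Equalizing only the V channel and the four sample pixels are assumptions made for the example. The adaptive variant would apply the same kind of mapping per local region rather than once for the whole image, and is not implemented here.

```python
import colorsys


def equalize(values, levels=256):
    """Histogram-equalize a flat list of integer intensities in [0, levels - 1]."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    # Cumulative distribution function (CDF) of the intensities.
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(values)
    if n == cdf_min:  # constant image: nothing to stretch
        return list(values)
    return [round((cdf[v] - cdf_min) / (n - cdf_min) * (levels - 1)) for v in values]


def equalize_value_channel(rgb_pixels):
    """RGB -> HSV, equalize V, HSV -> RGB. Pixels are (r, g, b) ints in 0-255."""
    hsv = [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in rgb_pixels]
    v_eq = equalize([round(v * 255) for _, _, v in hsv])
    out = []
    for (h, s, _), v in zip(hsv, v_eq):
        r, g, b = colorsys.hsv_to_rgb(h, s, v / 255)
        out.append((round(r * 255), round(g * 255), round(b * 255)))
    return out


# Hypothetical dark, bluish pixels whose V values span only 50..110.
pixels = [(10, 40, 50), (20, 60, 70), (30, 80, 90), (40, 100, 110)]
out = equalize_value_channel(pixels)  # V is stretched over the full 0..255 range
```

Hue and saturation are left untouched, so only the brightness distribution changes in this sketch.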

The complete color correction process is also shown for input image 2.

Figure 3: (1) Contrast enhancement (Illumination adjusted image) Input image 1, (2) HSV image for image 1, (3) Histogram equalized image for image 1, (4) AHE image for image 1, (5) Contrast enhanced image for image 1, (6) Input image 2 for contrast enhancement, (7) HSV image for image 2, (8) Histogram equalized image for image 2, (9) AHE image for image 2, (10) Contrast enhanced image for image 2.

3.3. Fusion Procedure

The fusion procedure is essentially a method of combining the information from two or more images into a single image, so that the output image carries more information than any single input image. In the fusion method, wavelet transforms are essentially an extension of the idea of high-pass filtering. Applying the Discrete Wavelet Transform (DWT) can be described as passing the signal through a bank of filters. At each level of decomposition, the signal is split into high-frequency and low-frequency components, and the low-frequency part can be decomposed further until the desired resolution is reached. The wavelet transform is designed to give good frequency resolution for low-frequency components and high temporal resolution for high-frequency components.
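
The filter-bank view can be illustrated with a minimal 1D sketch. The Haar wavelet is used here only because it is the simplest case; the text does not say which wavelet the authors used, and the sample signal is hypothetical. The signal length is assumed to be divisible by 2 raised to the number of levels.

```python
import math


def haar_step(signal):
    """One analysis level: low-pass and high-pass outputs, each downsampled by 2."""
    s = 1 / math.sqrt(2)
    low = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    high = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return low, high


def haar_decompose(signal, levels):
    """Repeatedly split the low-frequency part.

    Returns (approximation, [detail coefficients for level 1, level 2, ...]).
    """
    details = []
    approx = list(signal)
    for _ in range(levels):
        approx, high = haar_step(approx)
        details.append(high)
    return approx, details


# Hypothetical 8-sample signal decomposed over two levels.
approx, details = haar_decompose([4, 6, 10, 12, 8, 8, 0, 2], levels=2)
```

Each level halves the length of the low-frequency part, so two levels leave a two-sample approximation plus detail bands of four and two samples.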

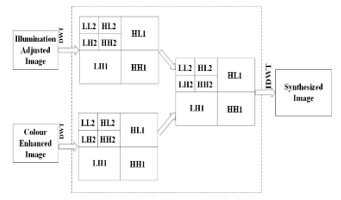

Two input images are taken: the illumination-adjusted output and the color-corrected image. We then separate the high-frequency and low-frequency components of each image, using the wavelet transform in both dimensions. The high-pass and low-pass filters are applied first to the rows and then to the columns. Applying the low-pass filter to the rows gives the horizontal approximation, and applying the high-pass filter gives the horizontal details. Each sub-signal has a highest frequency equal to half that of the original. Low-pass and high-pass filters are then applied again to the columns of the horizontal approximation and the horizontal details, yielding four sub-images [10]: the approximation image, horizontal detail, vertical detail, and diagonal detail. Figure 4 shows the one-level decomposition of the input image [1].
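
A minimal sketch of the rows-then-columns decomposition follows, again assuming Haar filters (here with simple averaging normalization) and a hypothetical 4x4 image. The sub-band names follow the common convention in which the horizontal detail is low-pass along the rows and high-pass along the columns; the paper does not spell out its convention, so this naming is an assumption.

```python
def split_rows(image):
    """Low-pass and high-pass each row with the Haar pair, keeping every second sample."""
    low = [[(r[i] + r[i + 1]) / 2 for i in range(0, len(r), 2)] for r in image]
    high = [[(r[i] - r[i + 1]) / 2 for i in range(0, len(r), 2)] for r in image]
    return low, high


def transpose(image):
    return [list(col) for col in zip(*image)]


def dwt2_one_level(image):
    """Filter the rows first, then the columns, giving four quarter-size sub-images."""
    row_low, row_high = split_rows(image)
    # Filtering the columns is the same as filtering the rows of the transposed image.
    ll, lh = (transpose(b) for b in split_rows(transpose(row_low)))
    hl, hh = (transpose(b) for b in split_rows(transpose(row_high)))
    return {"approximation": ll, "horizontal": lh, "vertical": hl, "diagonal": hh}


# Hypothetical 4x4 image made of alternating dark and bright rows.
image = [
    [10, 10, 10, 10],
    [50, 50, 50, 50],
    [10, 10, 10, 10],
    [50, 50, 50, 50],
]
bands = dwt2_one_level(image)
```

Because this test image varies only from row to row, all of its detail energy ends up in the horizontal band, while the vertical and diagonal bands are zero.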

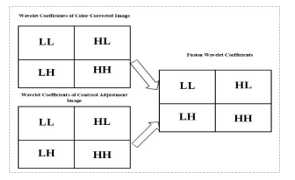

Figure 4: 2D wavelet-based image decomposition of level two.

The two images are taken as inputs, decomposed into their coefficients, and composed again by the inverse transform. The wavelet transform is applied to each input separately, and the modified image is obtained as the final image. Downsampling completes the wavelet decomposition, and upsampling is used for the inverse composition.

Figure 5: 2D decomposition of level two, fusion of coefficients and Output Image

In figure 5, the illumination-adjusted image and the color-enhanced image are used as the inputs for DWT decomposition. The decomposition coefficients are then fused using the maximum values of the coefficients, and the inverse transform is applied to the fused coefficients.
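
The maximum-coefficient fusion rule can be sketched as follows. This is one possible reading, under stated assumptions: a one-level Haar transform computed per 2x2 block, and "maximum" interpreted as the coefficient with the larger magnitude. The text only says that the maximum coefficient values are used, so both the wavelet and the magnitude rule are assumptions, and the two 2x2 inputs are hypothetical.

```python
def haar2(image):
    """One-level 2D Haar transform computed per 2x2 block; returns four sub-bands."""
    bands = {"LL": [], "LH": [], "HL": [], "HH": []}
    for y in range(0, len(image), 2):
        rows = {k: [] for k in bands}
        for x in range(0, len(image[0]), 2):
            a, b = image[y][x], image[y][x + 1]
            c, d = image[y + 1][x], image[y + 1][x + 1]
            rows["LL"].append((a + b + c + d) / 2)
            rows["LH"].append((a + b - c - d) / 2)
            rows["HL"].append((a - b + c - d) / 2)
            rows["HH"].append((a - b - c + d) / 2)
        for k in bands:
            bands[k].append(rows[k])
    return bands


def inverse_haar2(bands):
    """Exact inverse of haar2."""
    h, w = len(bands["LL"]), len(bands["LL"][0])
    image = [[0.0] * (2 * w) for _ in range(2 * h)]
    for y in range(h):
        for x in range(w):
            ll, lh = bands["LL"][y][x], bands["LH"][y][x]
            hl, hh = bands["HL"][y][x], bands["HH"][y][x]
            image[2 * y][2 * x] = (ll + lh + hl + hh) / 2
            image[2 * y][2 * x + 1] = (ll + lh - hl - hh) / 2
            image[2 * y + 1][2 * x] = (ll - lh + hl - hh) / 2
            image[2 * y + 1][2 * x + 1] = (ll - lh - hl + hh) / 2
    return image


def fuse(image_a, image_b):
    """Keep, per position and sub-band, the coefficient with the larger magnitude."""
    ca, cb = haar2(image_a), haar2(image_b)
    fused = {
        k: [[p if abs(p) >= abs(q) else q for p, q in zip(ra, rb)]
            for ra, rb in zip(ca[k], cb[k])]
        for k in ca
    }
    return inverse_haar2(fused)


# Hypothetical inputs: one image with a sharp edge, one that is brighter but flat.
edge_image = [[0, 20], [0, 20]]
bright_image = [[30, 30], [30, 30]]
fused_image = fuse(edge_image, bright_image)
```

In this example the fused result takes its overall brightness from the flat image and its edge from the other, which is the behaviour the maximum rule is meant to give.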

Figure 6: 2D decomposition of level two, fusion of coefficients and synthesized image (1) & (2) of the proposed method

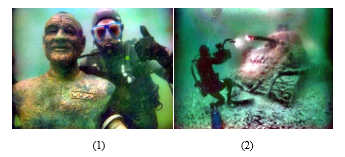

Figure 7: (1) Fusion Output 1 (2) Fusion Output 2

3.4. Super Resolution

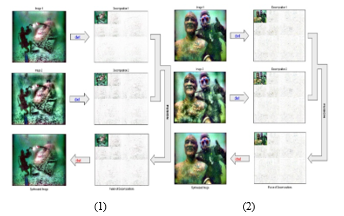

Super-resolution is an imaging technique that improves the resolution of an imaging system; it can also be a process of combining a sequence of low-resolution images to produce a higher-resolution image or sequence. To demonstrate the performance of this method, it was applied to the loaded image dataset and compared with other methods.

After the bi-cubic interpolation procedure, the VDSR (Very-Deep Super Resolution) method is applied to the image. VDSR is a convolutional neural network architecture designed to perform single image super-resolution (SISR) [11]. The VDSR network learns the mapping between low-resolution and high-resolution images. This mapping is possible because low-resolution and high-resolution images have similar image content and differ mainly in high-frequency details. VDSR uses a residual learning strategy, meaning that the network learns to estimate a residual image. In the context of super-resolution, a residual image is the difference between a high-resolution reference image and a low-resolution image that has been upscaled using bi-cubic interpolation to match the size of the reference image. A residual image contains information about the high-frequency details of an image. The VDSR network estimates the residual image from the luminance of a color image. VDSR is trained using only the luminance channel because human perception is more sensitive to changes in brightness than to changes in color. The scale factor is the ratio of the size of the reference image to the size of the low-resolution image. As the scale factor increases, SISR becomes more ill-posed because the low-resolution image loses more information about the high-frequency image content. VDSR addresses this problem by using a large receptive field. This model trains a VDSR network with multiple scale factors using scale augmentation. Scale augmentation improves the results at larger scale factors because the network can exploit the image context from smaller scale factors. In addition, the VDSR network can generalize to accept images with non-integer scale factors.
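
The relationship between the upscaled image, the residual, and the high-resolution result can be sketched in one dimension. The code below uses the Keys cubic convolution kernel (with a = -0.5), which is the 1D basis of bi-cubic interpolation; a full bi-cubic resize would apply it along rows and then columns. No network is trained here: the residual is computed directly from a hypothetical reference row, standing in for what a trained VDSR network would predict. All sample values are assumptions.

```python
import math


def cubic_kernel(t, a=-0.5):
    """Keys cubic convolution kernel, the 1D basis of bicubic interpolation."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0


def upscale_row(row, scale):
    """Cubic interpolation of one row; edges are handled by clamping indices."""
    out = []
    for j in range(len(row) * scale):
        pos = (j + 0.5) / scale - 0.5          # source coordinate of output sample j
        base = math.floor(pos)
        value = 0.0
        for k in range(base - 1, base + 3):    # four nearest source samples
            sample = row[min(max(k, 0), len(row) - 1)]
            value += sample * cubic_kernel(pos - k)
        out.append(value)
    return out


# Hypothetical luminance rows; the scale factor is len(reference) / len(low_res) = 2.
low_res = [10.0, 20.0, 60.0, 30.0]
reference = [8.0, 12.0, 15.0, 26.0, 55.0, 66.0, 38.0, 27.0]

upscaled = upscale_row(low_res, scale=2)
# Residual = high-resolution reference minus the upscaled low-resolution row.
residual = [r - u for r, u in zip(reference, upscaled)]
# Adding the residual back to the upscaled row recovers the high-resolution row.
restored = [u + d for u, d in zip(upscaled, residual)]
```

In a real pipeline the residual is not available at test time, which is why the network has to learn to estimate it from the upscaled luminance channel.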

4. Result and Discussion

The results are shown below:

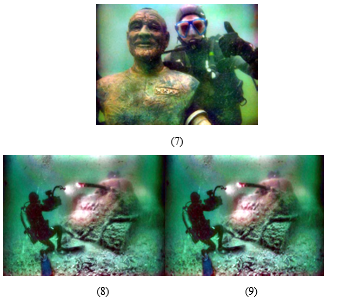

Figure 8: (1) Fusion output of input image 1, (2) Low Resolution input image 1 (3) Bi-Cubic Interpolation of input image 1, (4) Residual Image from VDSR of input image 1 (5) High-Resolution Image of input image 1 Obtained Using VDSR (6) High-Resolution Results of input image 1 using Bicubic Interpolation (7) Final Output of input image 1, (8) Fusion output of input image 2, (9) Low Resolution of input image 2 (10) Bi-Cubic Interpolation of input image 2, (11) Residual Image from VDSR of input image 2 (12) High-Resolution of input image 2 Obtained Using VDSR (13) High-Resolution Result of input image 2 using Bicubic Interpolation (14) Final Output of input image 2

Figure 9: (1) Input image 1, (2) Output for input image 1, (3) Input image 2, (4) Output for input image 2, obtained by applying the super-resolution process.

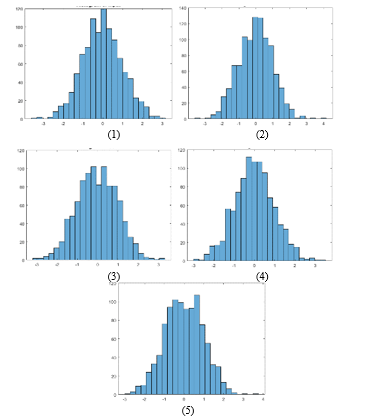

An image histogram is a chart that can be used to assess the quality of an image by comparing its values before and after enhancement [11]. The histogram of the underwater input image is concentrated at darker values, while the histogram of the enhanced output image has brighter pixel values. The fused image is the combination of the illumination-adjusted image and the color-corrected image. After super resolution is applied, the resulting image again has brighter pixel values.
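
A before-and-after histogram comparison of this kind can be sketched as below. The pixel values are hypothetical and chosen only to show a dark input against a brighter output; the bin count of 8 is also an arbitrary choice for the example.

```python
def histogram(pixels, bins=8, levels=256):
    """Count pixels per intensity bin for a flat list of 0-255 values."""
    counts = [0] * bins
    width = levels // bins
    for p in pixels:
        counts[min(p // width, bins - 1)] += 1
    return counts


def mean_intensity(pixels):
    return sum(pixels) / len(pixels)


# Hypothetical pixel values before and after enhancement.
before = [12, 30, 25, 40, 18, 60, 33, 22]
after = [90, 140, 120, 180, 100, 230, 150, 110]

hist_before = histogram(before)   # mass concentrated in the darkest bins
hist_after = histogram(after)     # mass shifted towards brighter bins
```

Comparing the two lists bin by bin, or simply comparing `mean_intensity(before)` with `mean_intensity(after)`, shows the shift towards brighter values that the text describes.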

Figure 10: Histogram Curve of (1) Input Image, (2) Illumination Output Image, (3) Color Correction Output Image (4) Fusion Output Image (5) Super-Resolution Output Image.

Table 1: Comparison of results for each stage of the proposed method

| Image | Entropy | PSNR | MSE | RMSE | SSIM |
|---|---|---|---|---|---|
| Input | 7.5844 | 21.9562 | 416.8655 | 98.0544 | 0.9996 |
| Illumination | 7.5812 | 21.9416 | 420.6785 | 106.3258 | 0.9744 |
| Color Correction | 7.9011 | 21.9430 | 413.1758 | 15.7525 | 0.9535 |
| Fusion | 7.5812 | 21.9343 | 423.3366 | 15.8607 | 0.9507 |
| Super Resolution | 7.8738 | 21.8837 | 415.2216 | 15.7598 | 0.9926 |
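
For reference, the standard definitions of four of these metrics can be sketched as follows for 8-bit images stored as flat lists. This is a generic sketch with hypothetical sample data, not the authors' evaluation code; SSIM is left out because it needs local window statistics.

```python
import math


def entropy(pixels, levels=256):
    """Shannon entropy in bits of the intensity distribution."""
    counts = [0] * levels
    for p in pixels:
        counts[p] += 1
    n = len(pixels)
    return -sum(c / n * math.log2(c / n) for c in counts if c)


def mse(reference, test):
    """Mean squared error between two equally sized images."""
    return sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)


def rmse(reference, test):
    """Root mean squared error, the square root of the MSE."""
    return math.sqrt(mse(reference, test))


def psnr(reference, test, peak=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(reference, test)
    return math.inf if error == 0 else 10 * math.log10(peak**2 / error)


# Hypothetical 4-pixel images that differ by 10 grey levels everywhere.
reference = [0, 64, 128, 255]
test = [10, 54, 138, 245]
```

For an 8-bit image the entropy is at most 8 bits, and by these definitions RMSE is always the square root of MSE, which gives a quick consistency check on tabulated values.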

Table 2: Entropy of the output image compared with the methods of [1] and [12]

| Image | [1] | [12] | Proposed |
|---|---|---|---|
| Output | 6.8683 | 7.5112 | 7.8738 |

5. Conclusion

In this article, we presented super-resolution-based underwater image enhancement using illumination adjustment and color correction with a fusion technique, which improves the clarity of blurred underwater images and helps recover them. An image taken underwater suffers from many problems, such as blurring, distortion, and attenuation in water. To address these problems, we proposed a method that corrects them in stages. We first took a hazy underwater image and applied the illumination adjustment and color correction methods to it. We then applied the fusion technique to the two resulting outputs and combined them into a fused image, which improved the clarity of the image. Next, we applied the super-resolution method to the fused image, which increased the clarity further. In addition, blur and noise in the image are reduced and the image is sharpened, giving a high-resolution result. Table 1 shows the Entropy, Peak Signal-to-Noise Ratio (PSNR), Mean Square Error (MSE), Root Mean Square Error (RMSE), and Structural Similarity Index (SSIM) results for the images used in the analysis. Table 2 compares our output image with those of [1] and [12] and shows that our output image has a higher entropy value, which is desirable for a good-quality image. From this we conclude that a clearer and more effective image can be obtained from a hazy image.

6. Future Works

In future, we plan to focus on addressing inhomogeneous scattering and artificial lighting problems in super resolution. We also plan to consider underwater robot inspection and IoT, particularly vision systems for underwater inspection and observation tasks.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

We, the authors from the Department of Electrical and Electronic Engineering, the Department of Computer Science and Engineering, and the Department of Information and Communication Technology at Bangladesh Army University of Engineering and Technology and Bangladesh University of Professionals, are very grateful for the tremendous support and guidance received during this work.

References

- C.O. Ancuti, C. Ancuti, C. De Vleeschouwer, P. Bekaert, “Color Balance and Fusion for Underwater Image Enhancement,” IEEE Transactions on Image Processing, 27(1), 379–393, 2018, doi:10.1109/TIP.2017.2759252.

- C. Li, J. Guo, R. Cong, Y. Pang, B. Wang, “Underwater Image Enhancement by Dehazing With Minimum Information Loss and Histogram Distribution Prior,” IEEE Transactions on Image Processing, 25(12), 5664–5677, 2016, doi:10.1109/TIP.2016.2612882.

- S.Q. Duntley, “Light in the Sea*,” Journal of the Optical Society of America, 53(2), 214, 1963, doi:10.1364/josa.53.000214.

- W. Zhang, G. Li, Z. Ying, “A new underwater image enhancing method via color correction and illumination adjustment,” in 2017 IEEE Visual Communications and Image Processing (VCIP), 1–4, 2017, doi:10.1109/VCIP.2017.8305027.

- R. Khan, Fusion based underwater image restoration system, 2014.

- X. Wang, R. Nian, B. He, B. Zheng, A. Lendasse, “Underwater image super-resolution reconstruction with local self-similarity analysis and wavelet decomposition,” in OCEANS 2017 – Aberdeen, 1–6, 2017, doi:10.1109/OCEANSE.2017.8084745.

- K. Iqbal, R. Abdul Salam, A. Osman, A.Z. Talib, “Underwater Image Enhancement Using an Integrated Colour Model,” IAENG International Journal of Computer Science, 32(2), 239–244, 2007.

- K. Iqbal, M. Odetayo, A. James, R.A. Salam, A.Z.H. Talib, “Enhancing the low quality images using Unsupervised Colour Correction Method,” in 2010 IEEE International Conference on Systems, Man and Cybernetics, 1703–1709, 2010, doi:10.1109/ICSMC.2010.5642311.

- R. SwarnaLakshmi, B. Loganathan, “An Efficient Underwater Image Enhancement Using Color Constancy Deskewing Algorithm,” International Journal of Innovative Research in Computer and Communication Engineering, 3, 7164–7168, 2015.

- R. Singh, M. Biswas, “Contrast and color improvement based haze removal of underwater images using fusion technique,” in 2017 4th International Conference on Signal Processing, Computing and Control (ISPCC), 138–143, 2017, doi:10.1109/ISPCC.2017.8269664.

- J. Huang, A. Singh, N. Ahuja, “Single image super-resolution from transformed self-exemplars,” in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 5197–5206, 2015, doi:10.1109/CVPR.2015.7299156.

- R. Singh, M. Biswas, “Adaptive histogram equalization based fusion technique for hazy underwater image enhancement,” in 2016 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), 1–5, 2016, doi:10.1109/ICCIC.2016.7919711.