Balancing Exploration-Exploitation in the Set Covering Problem Resolution with a Self-adaptive Intelligent Water Drops Algorithm

Volume 6, Issue 1, Page No 134-145, 2021

Author’s Name: Broderick Crawford1,a), Ricardo Soto1, Gino Astorga2, José Lemus-Romani1, Sanjay Misra3, Mauricio Castillo1, Felipe Cisternas-Caneo1, Diego Tapia1, Marcelo Becerra-Rozas1

View Affiliations

1Pontificia Universidad Catolica de Valpara ́ıso, Valpara ́ıso, 2340000, Chile

2Universidad de Valpara ́ıso, Valpara ́ıso, 2340000, Chile

3Covenant University, Ota, 100001, Nigeria

a)Author to whom correspondence should be addressed. E-mail: broderick.crawford@pucv.cl

Adv. Sci. Technol. Eng. Syst. J. 6(1), 134-145 (2021); ![]() DOI: 10.25046/aj060115

DOI: 10.25046/aj060115

Keywords: Metaheuristics, Autonomous Search, Combinatorial Optimization, Set Covering Problem, Intelligent Water Drops

Export Citations

The objective of the metaheuristics, together with obtaining quality results in reasonable time, is to be able to control the exploration and exploitation balance within the iterative processes of these methodologies. Large combinatorial problems present ample search space, so Metaheuristics must efficiently explore this space; and exploits looking in the vicinity of good solutions previously located. The objective of any metaheuristic process is to achieve a ”proper” balance between intensive local exploitation and global exploration. In these processes two extreme situations can occur, on the one hand an imbalance with a bias towards exploration, which produces a distributed search in the search space, but avoiding convergence, so the quality of the solutions will be low, the other case is the bias towards exploitation, which tends to converge prematurely in local optimals, impacting equally on the quality of the solutions. To make a correct balance of exploration and exploitation, it is necessary to be able to control adequately the parameters of the Metaheuristics, in order to infer in the movements taking advantage of the maximum capacity of these. Among the most widely used optimization techniques to solve large problems are metaheuristics, which allow us to obtain quality results in a short period of time. In order to facilitate the use of the tools provided by the metaheuristic optimization techniques, it is necessary to reduce the difficulties in their configuration. For this reason, the automatic control of parameters eliminates the difficult task of obtaining a correct configuration. In this work we implemented an autonomous component to the Intelligent Water Drops algorithm, which allows the control of some parameters dynamically during the execution of the algorithm, achieving a good exploration-exploitation balance of the search process. The correct functioning of the proposal is demonstrated by the Set Covering Problem, which is a classic problem present in the industry, along with this we have made an exhaustive comparison between the standard algorithm and the autonomous version that we propose, using the respective statistical tests. The proposal presents promising results, along with facilitating the implementation of these techniques to industry problems.

Received: 31 August 2020, Accepted: 23 December 2020, Published Online: 15 January 2021

1. Introduction

This paper is an extension of work ”An Adaptive Intelligent Water Drops Algorithm for Set Covering Problem”, originally presented in 19th International Conference on Computational Science and Its Applications (ICCSA) [1].

The post-pandemic economic recovery brings with it a number of challenges for the industry, ranging from rethinking business [2]–[6].

There are various economic sectors where there are problems that need to be optimised such as the airport sector where, given the environmental restrictions, it is becoming increasingly difficult to build new airports and for this reason it is necessary to optimise each of the tasks of the current installations [7]–[9]. Another example is the educational sector, in particular the universities where it is necessary to optimize the use of rooms and the curriculum of students [10, 11]. In summary, we can find a series of problems that can be treated through optimization techniques that have a positive impact on economic recovery.

In this sense the metaheuristics that are supervised heuristics [12], are a real option to give solution to the industrial optimization problems since their attraction is that they allow to deliver a high quality solution that can even be the optimal one in limited computation times. There is a great variety of metaheuristics which have been used to solve NP-Hard problems [13]–[16].

In general, metaheuristics have a great amount of parameters, which allow controlling the high and low level strategies of these, having direct relation with the performance of the techniques in the different problems [17], the optimal configuration of parameters constitutes in itself an optimization problem [18, 19]. The importance of a good parameter assignment to metaheuristic algorithms impacts on the quality of solutions, but on the other hand it is not possible to consider transversal parameters for all the problems to be solved, since the assignment of values depends directly on the problem and the instances to be solved [20].

The values that can assume the parameters can have a great amount of combinations, for that reason we can occupy different strategies to approach the optimal configuration of parameters, that according to the literature are divided in two great groups, off-line configuration and on-line control. The off-line configuration of parameters corresponds to the determination of the metaheuristic algorithm outside the run, that is, before its execution begins [21], while the on-line control dynamically updates the values of the algorithm during the execution [22].

Given the current context of using metaheuristic techniques to solve the problems of the industry, we must facilitate the use of these techniques to non-expert users, so the use of autonomous techniques for the control of parameters, is a great contribution to bring this type of tools to all users of the industry.

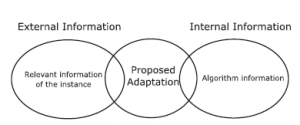

The present work, solves a classic problem of the real world, as it is it Set Convering Problem (SCP) [23], which has diverse applications in the industry, using metaheuristic techniques to solve problems of great dimension. We have used the metaheuristic technique Intelligent Water Drops (IWD), which is inspired by the physical behavior of water droplets in a river bed [24], Along with this, we have incorporated autonomous elements for the on-line control of its parameters, which has two main components, the first is the obtaining of external information on the problems to be solved, and the second is the obtaining of internal information on the behaviour of the algorithm. With this we can better combine the information of each problem to be solved, along with the internal behavior of the algorithm, which generates that our proposal is adapted to the various problems that are solved.

The work is structured in the following form, in Section 2 what has been done regarding parameter tuning is presented, in Section 3 the functioning of IWD is explained, while in Section 4 the adaptive implementation proposed for IWD is detailed, in Section 5 set covering problem is shown, in Section 6 the corresponding experiments and statistical analyses are presented, ending in Section 7 with the conclusions and future work.

2. Parameter Tuning

In this section we will review the various techniques of parameterization of algorithms in order to find a correct configuration.

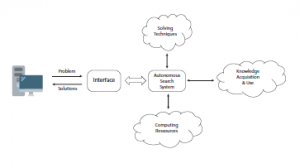

The proper selection of parameter values for the algorithms is not an easy task and has an important impact. Usually a great deal of time is spent, using previous experiences and expert knowledge for the correct assignment of values which also constitutes a not easy task for non-expert users. In consideration of the latter, the ideal condition from the point of view of the non-expert user is that he or she should give general information about the problem and with the least possible interaction receive an adequate response (Fig. 1). This concept, called Autonomous Search, was proposed by [18], using indicators to determine the best strategy to use.

Figure 1: Autonomous Search.

The techniques for finding a good configuration for the parameters were initially grouped into two categories: offline configuration and online control. However, a new proposal is made in [21]: Simple Generate-Evaluate Methods, Iterative Generate-Evaluate Methods and High-Level Generate-Evaluate Methods.

2.1 Offline configuration

Finding a convenient parameter configuration previous to the execution of the algorithm is known as offline configuration.

This is primarily a trial and error process and can consume considerable time in research. The efficiency of this depends mainly on the insight and knowledge of the researcher or author of the algorithm. These processes are typically undocumented and are not reproducible, often driving to an unequal adjustment of different algorithms.

The following techniques exist within this group:

- F-race is an inspired method of racing algorithms in automatic learning, in particular, races in [25, 26]. This algorithm was presented in [27] and studied in detail in Birattari’s PhD thesis [28]. The purpose of these methods is to iteratively evaluate a set of candidate configurations for a given set of instances. A set is removed when there is sufficient statistical evidence and there are survivors within the continuous race. This method is used after each evaluation of the candidate configurations, in which nonparametric Friedman two-way variance analysis [29] determines if there is evidence that at less than one of the configurations is significantly different from the other ones. If the hypothesis is null and there is no difference, it is rejected; Friedman’s subsequent tests are applied to remove these candidate configurations that are significantly worse than the best. This approach had been used by the ACO in [30].

- ParamILS was introduced by [31] as a versatile stochastic local search approach for automatic algorithm configuration. ParalILS includes methods to optimize the performance of an algorithmic, deterministic or stochastic object in a particular class of problems by switching a set of ordinal and/or categorical parameters. This proposal is based on an iterated local search algorithm that uses a combination of configurations chosen, a priori or at random, at initialization. The underlying idea of local search is an iterative ”best first” method, which uses a variety of random movements to disturb the solution and avoid getting into local minima. The method always accepts configurations that improve or match the performance of the best configuration and restarts the search from a random configuration under a certain probability. The local search method moves through the search space by modifying only one parameter each time[32]. This approach has been used to configure ACO to solve transportation planning problems in [33].

- The other approach to optimization, focused on the sequential paradigm, includes improving the parameter initial values by the alternation of experimental design and the parameter recognition.

In this paradigm, statistical significance takes a preponderant role, since each new experiment contributes with information about the performance of the parameters used, which are then referenced to the new stages.

In the case of a parallel method, different experiments are formulated concurrently (all of them taking the same parameter nominal values); experiments are then carried out. The parameters are calculated and their suitability tested using the data obtained in all parallel experiments. If the data obtained from the parallel experiments are inadequate after the parameter estimation the procedure can be replicated [34, 35].

- In [36], the author used the graphic radial technique. The four basic metrics are employed for this method: worst case, best case, average case and average run-time. The region under the radar plot curve is obtained with these four metrics to set the best setting.

- The meta-optimization method was initially studied by [37] and is characterized by using an algorithm to optimize the parameters of another optimization algorithm, that is, there is an algorithm at a higher level that optimizes the parameters of a lower-level algorithm. This method has been used for covering problems in facility locations in [38], stand management in [39], and parameter selection in machine learning in [40].

2.2 Online configuration

One of the areas of research that has taken great strength in recent years is the online configuration, as it gives the algorithms the ability to adapt to the characteristics of a particular instance for better performance. For this online configuration to be useful, the exploration and exploitation phases must be clearly identified because the internal adjustments may be different for each of the phases. Thus, the online configuration can improve the results when the algorithms are used in situations very different from those that were built.

Different techniques exist, and the most simple is to define the parameter variation rules before executing the algorithm. One possibility is the use of parameter adaptation, where the parameter modification scheme is defined for some behavior statistics of the algorithm.

- Absolute evidence: An example is the threshold of the distance between the solutions.

- Relative evidence: Considers that the relative difference in the performance with parameters of different values adapt to those with the best behavior.

2.3 Simple Generate-Evualate Methods

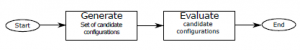

In this method the principle of generating and evaluating is used. Candidate configurations are occupied and then each of the parameters are evaluated to find the best configuration (Figure 2).

Figure 2: Simple Generate-Evualate Methods.

2.4 Iterative Generate-Evualate Methods

Unlike the first method, this one repeatedly performs the generation and evaluation steps. In this method the historical information allows to guide the generation, so that the search space for parameters is better explored and is more efficient in large search spaces (Figure 3).

Figure 3: Iterative Generate-Evaluate Methods.

![]()

Figure 4: High-Level Generate-Evualate Methods.

2.5 High-Level Generate-Evualate Methods

This approach generates candidate configurations to then evaluate them and select the best configuration. The idea is to generate a set of high quality configurations quickly using few computational resources and select the best one. The idea is to greatly reduce the resources used when exploring candidate configurations and use them to thoroughly evaluate these configurations (Figure 4).

3. Intelligent Water Drops Algorithm

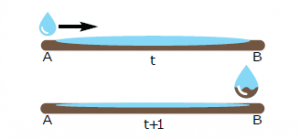

During the year 2007 in [24] he presented the algorithm that is classified as constructive since it builds a solution from 0. Its inspiration is based on nature and corresponds to the displacement that water drops make through the flow of a river and its friction with the earth, considering that when a drop is moved from one point to another it displaces earth from the river bed making the friction less and less which makes the drops increase their speed and in turn incorporate part of the extracted earth.

In the Figure 5, we can see how the soil of the river behaves, on the one hand during the passage of the drop is removed soil from the bottom of the river and on the other hand is incorporated into the drop which directly influences the speed that is acquired, this is demonstrated in that two drops of similar size but with different speeds at the end of its movement are with different size because of the soil that is incorporated. This abstraction of the behavior of water drops in river soil, allows us to build diversified solutions at the beginning, while during the execution of the algorithm, these solutions converge to promising search spaces, which allows to intensify the search.

Figure 5: Water drop removing riverbed soil and adding to its own soil.

This algorithm has been used to solve mainly scheduling problems: Cooperative Search Path Optimization problem [41], Work Flow Scheduling Algorithm for Infrastructure as a Service (IaaS) cloud [42], Capacitated Vehicle Routing Problem [43], Trajectory Planning of Unmanned Combat Aerial Vehicle (UCAV) is a rather complicated Global Optimum Problem in UCAV Mission Planning [44], Method for Optimal Location and Sizing of Distributed Generation (DG) [45].

This algorithm has an initialization phase where both the static parameters number of drops, number of iterations, initial soil, initial speed and constants as, bs, cs, av, bv, cv are initialized and also the dynamic parameters Speed of drop k and soil value of drop k are initialized.

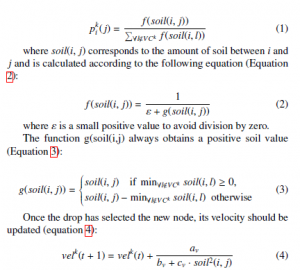

Then there is a construction phase where every drop builds a solution by visiting the nodes. The Eq. 1 is used to determine which node to visit:

where HUD is a heuristic that measures the degree of undesirability of the drop to jump from one node to another.

The Reinforcement phase is responsible for updating the global soil with the best drop of the iteration, and the following equation is used (8):

![]()

where piwd is a small positive value between 0 and 1, and q(TIB) is the fitness value.

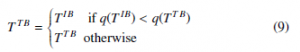

The best solution for each iteration is compared with the best global solution and updated according to the following equation (9):

Finally, the Termination phase is responsible for the completion of the algorithm process when the stop condition is met, which can be the number of iterations.

In 6 the original algorithm of the metaheuristics is shown.

Figure 6: Intelligent Water Drops Algorithm.

4. Adaptative Intelligent Water Drops

The configuration of initial parameters is a costly task, carried out by an expert user and performed prior to the execution of the algorithm. We can reduce the cost of this task based on the concepts from Autonomous Search [18], which obtains information from two different sources, internal, corresponding to the operation of the algorithm and external, corresponding to the problem we are solving. The use of these data sources allows us to compare the quality of the solution in the current iteration (fIB) with the quality of the best found solution (fTB).

The general scheme for obtaining information to guide the adjustment of parameters is presented in Figure 7.

Figure 7: Information required for the proposal.

The internal information source considers the performance of the algorithm. In our proposal, we collect the number of iterations in which no improvement in the quality of the solutions is detected. The external information source comes from the data corresponding to the instance of the problem we are solving. We consider the number of columns and the density of the instance, which allows us to differentiate them. A weight is assigned to the columns of the instance that allows us to assign importance to them, multiplying it by the density, given in the Figure 8).

The algorithm has two initial parameters to which we assign values, these correspond to the Soil and Initial Speed (SoilInitial and VelocityInitial respectively).

In the first case we assign the value considering the number of columns of the instance and its density, which is done as follows:

To determine the first value we consider information of the instance considering on one hand the number of columns available and on the other hand the density that is presented. This value is obtained in the following way:

![]()

Where SoilInitial = 1, nColumn corresponds to the number of columns in the instance group and Density corresponds to the percentage of nonzeros in the matrix.

![]()

For the case of the initial speed VelocityInitial the value is determined by a series of 10 previous executions of the original algorithm.

For the adaptation, in this work, it is considered the quality of the solution for which a weight is calculated that will allow us to update the local velocity, avoiding that the solution remains trapped in an optimal local. This percentage will be calculated by comparing the solution delivered in each iteration with the best solution obtained.

It has been experimentally determined that a low initial drop velocity impacts the convergence of solutions, making it more pronounced. This characteristic is taken into account in the parameter configuration.

Initial soil and velocity parameters update rule is given by the following equation:

![]()

where fIB is the best solution obtained at the current iteration,

and fTB is the best global solution found.

![]()

![]()

where VelocityInitial is the available initial velocity at current

iteration and β is a random number in the interval [0,1].

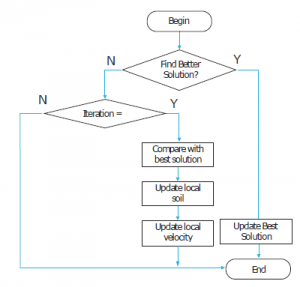

Figure 9 shows the proposed adaptation for the local soil parameter, aiming to improve the metaheuristic performance.

Figure 9: Parameter adaptation proposal.

At each iteration the improvement of the best solution found is evaluated. If an improvement is detected, the previous best solution found is replaced. Otherwise, a counter for not improved iterations is increased. An α value is set for the maximum number of iterations without improvement. If the counter is equal compared with the α parameter, the percentage difference of the current solution compared to the best solution is obtained and used to increase the local soil for the next iteration.

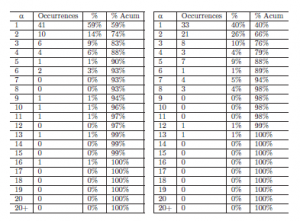

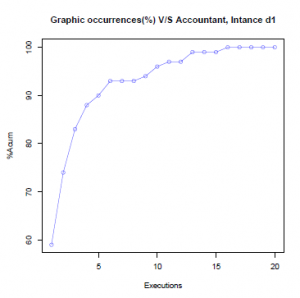

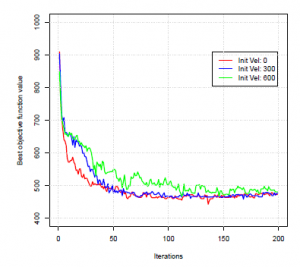

To obtain the value for the α parameter we perform 10 executions of the standard algorithm for each instance. The elapsed iteration number until a fitness improvement was evaluated. The analysis of this number allows us to determine the value for the α parameter to 5. A greater α value causes the fitness improvement probability to decrease, and the computation cost increases. The results of this analysis is shown in Figure 10, the column % represents the occurence percentage for each α, and %Acum column shows the accumulated percentage for each α. Figure 11 shows the fitness change for two instances.

Figure 10: Experiments to determine the alpha value.

Figure 11: Chart of fitness change based on the number of iterations.

After a series of tests, we show the convergence for different initial velocities in Figure 12. When the velocity is low, the convergence shows to be premature. For this case, the initial velocity was adjusted, multiplying it by a β value in range [0,1].

Figure 12: Convergence chart of different velocities.

5. Problem to solve

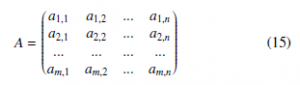

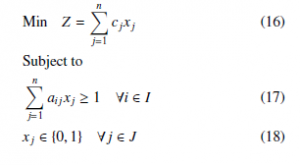

SCP is defined as a binary matrix (A) of size m-rows and n-columns, where ai,j ∈ {0,1} is the value of each cell in the matrix A; i and j are of the size m-rows and n-columns, respectively:

Defining column j satisfies a row i, if aij is equal to 1, which is a contrary case if aij is equal to 0. In addition, an associated cost c ∈ C is incurred, where C = {c1,c2,…cn}, together with i = 1,2,…,m and j = 1,2,…,n, which are the sets of rows and columns, respectively.

The problem has an objective of minimizing the cost of the subset S ⊆ J, with the constraint that all rows i ∈ I are covered by at least one column j ∈ J. When column j is in the subset of solution S, this is equal to 1 and is 0 otherwise.

The SCP can be defined as follows:

6. Experiments

6.1 Benchmark Instances

In order to validate the proposed approach, the 9 sets of the Beasleys library were used, executing 55 instances that are known and that allow a finished experimental evaluation (Figure 8).

The proposed algorithm was built using Java Language and was executed on an Intel(R) Core(TM) i7-6700 CPU with a speed of 3.40 GHz, with 16GB of memory and using a 64-bit Windows 10 operating system.

6.2 Parameter Setting

As far as the configuration of the parameters used by this algorithm is concerned, IWD, as it is generally known, is a very difficult task that requires a great use of resources. Our approach takes care of working two of the most important parameters in IWD operation which are the initial soil and the initial speed, IntSoil and InitVelocity respectively. To verify the impact of these two parameters, a series of experiments with different values for the selected parameters were carried out. The idea is to adapt these two parameters dynamically in order to use the quality of the solution as an indicator of change. The action occurs if the best overall solution does not change after a defined number of iterations.

The parameters used for the different experiments are shown in the following Figure 13.

Figure 13: Experimental parameters.

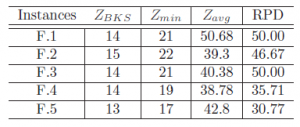

6.3 Experimental results

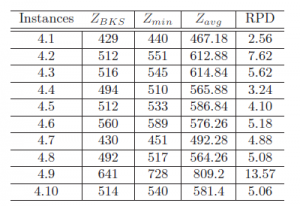

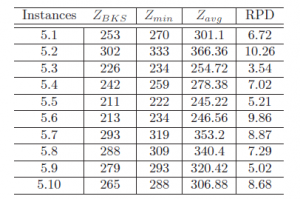

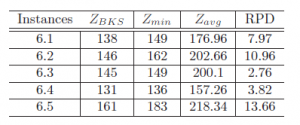

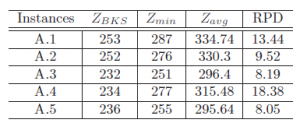

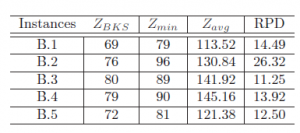

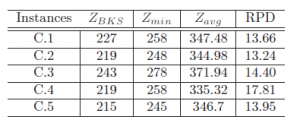

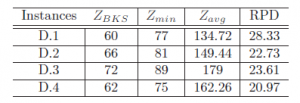

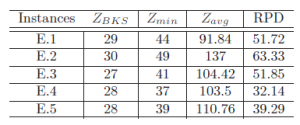

The results obtained with the adaptive test are shown in the tables

14 to 22 and the comparison between the original and adaptive algorithm is shown in the Figure 23.-.

Figure 14: Results of Group 4.

Figure 15: Results of Group 5.

Figure 16: Results of Group 6.

Figure 17: Results of Group A.

Figure 18: Results of Group B.

Figure 19: Results of Group C.

Figure 20: Results of Group D.

Figure 21: Results of Group E.

Figure 22: Results of Group F.

6.4 Statistical Analysis

Figure 24 shows a general scheme of the existing statistical techniques, where in this study the statistical analysis included the different tests:

- Kolmogorov-Smirnov-Lilliefors [46] is used to establish the independence of the samples.

- Wilcoxon’s Signed Rank [47] is used to verify that the IWD algorithm with AD is better than the Standard IWD algorithm.

Both statistical tests considered a significance level of 0.05, so that values lower than 0.05 indicate that the null hypothesis cannot be assumed, i.e., H0 is rejected.

Figure 23: Results IWDSTD AND IWDAD.

Figure 24: Statistical techniques.

For the determination of data independence the following hypothesis is used:

H0 = The data follow a normal distribution.

H1 = The data do not follow a normal distribution.

Given the P values obtained in the tests, the hypothesis is rejected. That is, the data do not follow a normal distribution.

Figure 25: Statistical Analysis Results.

When it is obtained that the data does not follow a normal distribution, the Wilcoxon-Mann-Whitney [47] test is applied. This test is applied to verify that the version with AD is better, where the hypotheses are as follows:

H0 = Standard IWD algorithm ≥ IWD algorithm with AD

H1 = Standard IWD algorithm < IWD algorithm with AD

To obtain the p-values we use the programming language R, where obtaining a p-value < 0.05 implies rejecting H0, and accepting H1. The AD version is statistically better than the standard version, which extends to each instance of the benchmark (Figure 25. In addition, what is indicated by the statistical tests supports what has been obtained through verification by RPD.

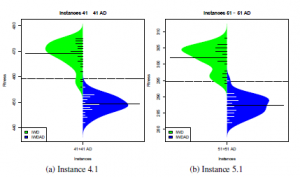

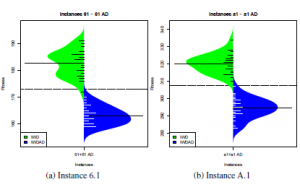

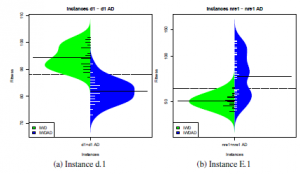

The results of each instance can be seen graphically in the figure 26 to 30. IWD algorithm with AD has better results in all instances except for instances E and F.

Figure 26: Instances 4.1 and 5.1.

Figure 27: Instances 6.1 and A.1.

Figure 28: Instances B.1 and C.1.

Figure 29: Instances D.1 and E.1.

7. Conclusion

In this work, we have presented an adaptive version of the Intelligent Water Drops algorithm, which is a tool that facilitates the use of metaheuristic techniques to non-expert users, reducing the difficulties in the configuration of parameters when implementing a metaheuristic in a real problem, a situation that will become increasingly common in the scenario of recovery from the economic crisis in post-pandemic times.

Figure 30: Instance F.1.

The adaptive element incorporated, obtains the necessary information to make decisions, based on external sources, coming from the problem and internal sources, typical of the normal functioning of the Intelligent Water Drops algorithm. With the correct combination of this information, it was possible to improve the balance of the exploration and exploitation of the search space of most of the solved instances, for Set Covering Problem, where the improvement was in average of 13.04%. In addition, the difference obtained by our adaptive proposal is significant in 77,78% of the compared instances, compared to the corresponding statistical comparisons.

The adaptive configuration is an interesting focus of future work, since there are a large number of static parameters that can be selfadjusting, in order to improve the performance of implementations, in order to decrease the influence of the off-line configuration in the performance when solving diverse instances. Along with this, it is necessary to replicate the implementation developed in this work, for other NP-Hard problems, validating the correct functioning of the incorporated adaptive components. Moreover, in the face of the growing line of research concerning the interaction between metaheuristics and machine learning techniques, a wide range of machine learning possibilities is presented in the decision making of the values to be taken by the adaptive parameters, adding to this, the option of choosing between different ways of calculating parameters according to internal and external information.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

Felipe Cisternas-Caneo and Marcelo Becerra-Rozas are supported by Grant DI Investigacion´ Interdisciplinaria del Pregrado/VRIEA/PUCV/039.324/2020. Broderick Crawford is supported by Grant CONICYT/FONDECYT/REGULAR/1171243. Ricardo Soto is supported by Grant CONICYT/FONDECYT/REGULAR/1190129. Jose Lemus-Romani is supported by National Agency for Research´ and Development (ANID)/Scholarship Program/DOCTORADO NACIONAL/2019-21191692.

- B. Crawford, R. Soto, G. Astorga, J. Lemus-Romani, S. Misra, J.-M. Rubio, “An Adaptive Intelligent Water Drops Algorithm for Set Covering Problem,” in 2019 19th International Conference on Computational Science and Its Applica- tions (ICCSA), 39–45, IEEE, 2019, doi:10.1109/ICCSA.2019.000-6.

- A. Aloi, B. Alonso, J. Benavente, R. Cordera, E. Echa´niz, F. Gonza´lez, C. Ladisa, R. Lezama-Romanelli, A´ . Lo´pez-Parra, V. Mazzei, et al., “Ef- fects of the COVID-19 Lockdown on Urban Mobility: Empirical Evidence from the City of Santander (Spain),” Sustainability, 12(9), 3870, 2020, doi: 10.3390/su12093870.

- J. B. Sobieralski, “COVID-19 and airline employment: Insights from historical uncertainty shocks to the industry,” Transportation Research Interdisciplinary Perspectives, 100123, 2020, doi:10.1016/j.trip.2020.100123.

- M. Sigala, “Tourism and COVID-19: impacts and implications for advanc- ing and resetting industry and research,” Journal of Business Research, 2020, doi:10.1016/j.jbusres.2020.06.015.

- F. Hao, Q. Xiao, K. Chon, “COVID-19 and China’s Hotel Industry: Impacts, a Disaster Management Framework, and Post-Pandemic Agenda,” International Journal of Hospitality Management, 102636, 2020, doi:10.1016/j.ijhm.2020. 102636.

- T. Laing, “The economic impact of the Coronavirus 2019 (Covid-2019): Impli- cations for the mining industry,” The Extractive Industries and Society, 2020, doi:10.1016/j.exis.2020.04.003.

- D. Wang, X. Zhao, L. Shen, Z. Yang, “Industry choice for an airport economic zone by multi-objective optimization,” Journal of Air Transport Management, 88, 101872, 2020, doi:10.1016/j.jairtraman.2020.101872.

- L. Adacher, M. Flamini, M. Guaita, E. Romano, “A model to optimize the airport terminal departure operations,” Transportation Research Procedia, 27, 53–60, 2017, doi:10.1016/j.trpro.2017.12.151.

- N. A. Ribeiro, A. Jacquillat, A. P. Antunes, A. R. Odoni, J. P. Pita, “An optimization approach for airport slot allocation under IATA guidelines,” Transportation Research Part B: Methodological, 112, 132–156, 2018, doi: 10.1016/j.trb.2018.04.005.

- C. Castro, B. Crawford, E. Monfroy, “A quantitative approach for the design of academic curricula,” in Symposium on Human Interface and the Management of Information, 279–288, Springer, 2007, doi:10.1007/978-3-540-73354-6 31.

- C. Castro, B. Crawford, E. Monfroy, “A genetic local search algorithm for the multiple optimisation of the balanced academic curriculum problem,” in Inter- national Conference on Multiple Criteria Decision Making, 824–832, Springer, 2009, doi:10.1007/978-3-642-02298-2 119.

- C. Blum, A. Roli, “Metaheuristics in combinatorial optimization: Overview and conceptual comparison,” ACM computing surveys (CSUR), 35(3), 268–308, 2003, doi:10.1145/937503.937505.

- C. Va´squez, B. Crawford, R. Soto, J. Lemus-Romani, G. Astorga, S. Misra, Salas-Ferna´ndez, J.-M. Rubio, “Galactic Swarm Optimization Applied to Reinforcement of Bridges by Conversion in Cable-Stayed Arch,” in Interna- tional Conference on Computational Science and Its Applications, 108–119, Springer, 2019, doi:10.1007/978-3-030-24308-1 10.

- B. Crawford, R. Soto, G. Astorga, J. Lemus, A. Salas-Fernandez, “Self- configuring Intelligent Water Drops Algorithm for Software Project Scheduling Problem,” in International Conference on Information Technology & Systems, 274–283, Springer, 2019, doi:10.1007/978-3-030-11890-7 27.

- R. Soto, B. Crawford, F. Gonza´lez, E. Vega, C. Castro, F. Paredes, “Solving the manufacturing cell design problem using human behavior-based algorithm supported by autonomous search,” IEEE Access, 7, 132228–132239, 2019, doi:10.1109/ACCESS.2019.2940012.

- S. Valdivia, R. Soto, B. Crawford, N. Caselli, F. Paredes, C. Castro, R. Oli- vares, “Clustering-Based Binarization Methods Applied to the Crow Search Algorithm for 0/1 Combinatorial Problems,” Mathematics, 8(7), 1070, 2020, doi:10.3390/math8071070.

- M. Birattari, J. Kacprzyk, Tuning metaheuristics: a machine learning perspec- tive, volume 197, Springer, 2009, doi:10.1007/978-3-642-00483-4.

- Y. Hamadi, E. Monfroy, F. Saubion, “What is autonomous search?” in Hybrid Optimization, 357–391, Springer, 2011, doi:10.1007/978-1-4419-1644-0 11.

- M. Lo´pez-Iba´n˜ez, J. Dubois-Lacoste, L. P. Ca´ceres, M. Birattari, T. Stu¨ tzle, “The irace package: Iterated racing for automatic algorithm configuration,” Op- erations Research Perspectives, 3, 43–58, 2016, doi:10.1016/j.orp.2016.09.002.

- F. Hutter, H. H. Hoos, K. Leyton-Brown, “Automated configuration of mixed in- teger programming solvers,” in International Conference on Integration of Arti- ficial Intelligence (AI) and Operations Research (OR) Techniques in Constraint Programming, 186–202, Springer, 2010, doi:10.1007/978-3-642-13520-0 23.

- C. Huang, Y. Li, X. Yao, “A survey of automatic parameter tuning methods for metaheuristics,” IEEE Transactions on Evolutionary Computation, 24(2), 201–216, 2019, doi:10.1109/TEVC.2019.2921598.

- R. Battiti, M. Brunato, F. Mascia, Reactive search and intelligent optimiza- tion, volume 45, Springer Science & Business Media, 2008, doi:10.1007/ 978-0-387-09624-7.

- J. E. Beasley, “An algorithm for set covering problem,” European Journal of Op- erational Research, 31(1), 85–93, 1987, doi:10.1016/0377-2217(87)90141-X.

- H. Shah-Hosseini, “Intelligent water drops algorithm: A new optimiza- tion method for solving the multiple knapsack problem,” International Jour- nal of Intelligent Computing and Cybernetics, 1(2), 193–212, 2008, doi: 10.1108/17563780810874717.

- O. Maron, A. W. Moore, “Hoeffding races: Accelerating model selection search for classification and function approximation,” in Advances in neural information processing systems, 59–66, 1994.

- O. Maron, A. W. Moore, “The racing algorithm: Model selection for lazy learners,” in Lazy learning, 193–225, Springer, 1997, doi:10.1023/A: 1006556606079.

- M. Birattari, T. Stu¨ tzle, L. Paquete, K. Varrentrapp, “A racing algorithm for configuring metaheuristics,” in Proceedings of the 4th Annual Conference on Genetic and Evolutionary Computation, 11–18, Morgan Kaufmann Publishers Inc., 2002, doi:10.5555/2955491.2955494.

- M. Birattari, M. Dorigo, “The problem of tuning metaheuristics as seen from a machine learning perspective,” 2004, doi:10.1007/978-3-642-00483-4.

- W. Conover, “Statistics of the Kolmogorov-Smirnov type,” Practical nonpara- metric statistics, 428–473, 1999.

- T. Liao, T. Stu¨tzle, M. A. M. de Oca, M. Dorigo, “A unified ant colony optimiza- tion algorithm for continuous optimization,” European Journal of Operational Research, 234(3), 597–609, 2014, doi:10.1016/j.ejor.2013.10.024.

- F. Hutter, H. H. Hoos, T. Stu¨ tzle, “Automatic algorithm configuration based on local search,” in Aaai, volume 7, 1152–1157, 2007, doi:10.5555/1619797. 1619831.

- F. Hutter, H. H. Hoos, K. Leyton-Brown, T. Stu¨tzle, “ParamILS: an automatic algorithm configuration framework,” Journal of Artificial Intelligence Research, 36, 267–306, 2009, doi:10.1613/jair.2861.

- P. Lin, J. Zhang, M. A. Contreras, “Automatically configuring ACO using mul- tilevel ParamILS to solve transportation planning problems with underlying weighted networks,” Swarm and Evolutionary Computation, 20, 48–57, 2015, doi:10.1016/j.swevo.2014.10.006.

- G. Franceschini, S. Macchietto, “Model-based design of experiments for pa- rameter precision: State of the art,” Chemical Engineering Science, 63(19), 4846–4872, 2008, doi:10.1016/j.ces.2007.11.034.

- F. Hutter, H. H. Hoos, K. Leyton-Brown, “Sequential model-based opti- mization for general algorithm configuration,” in International Conference on Learning and Intelligent Optimization, 507–523, Springer, 2011, doi: 10.1007/978-3-642-25566-3 40.

- J. Garc´ia, B. Crawford, R. Soto, C. Castro, F. Paredes, “A k-means bina- rization framework applied to multidimensional knapsack problem,” Applied Intelligence, 48(2), 357–380, 2018, doi:10.1007/s10489-017-0972-6.

- R. E. Mercer, J. Sampson, “Adaptive search using a reproductive meta-plan,” Kybernetes, 7(3), 215–228, 1978, doi:10.1108/eb005486.

- B. Crawford, R. Soto, E. Monfroy, G. Astorga, J. Garc´ia, E. Cortes, “A meta- optimization approach for covering problems in facility location,” in Work- shop on Engineering Applications, 565–578, Springer, 2017, doi:10.1007/ 978-3-319-66963-2 50.

- X. Jin, T. Pukkala, F. Li, “Meta optimization of stand management with population-based methods,” Canadian Journal of Forest Research, 48(6), 697– 708, 2018, doi:10.1139/cjfr-2017-0404.

- M. Camilleri, F. Neri, M. Papoutsidakis, “An algorithmic approach to parameter selection in machine learning using meta-optimization techniques,” WSEAS Transactions on systems, 13(2014), 202–213, 2014.

- X. Sun, C. Cai, S. Pan, Z. Zhang, Q. Li, “A cooperative target search method based on intelligent water drops algorithm,” Computers & Electrical Engineer- ing, 80, 106494, 2019, doi:10.1016/j.compeleceng.2019.106494.

- M. Adhikari, T. Amgoth, “An intelligent water drops-based workflow schedul- ing for IaaS cloud,” Applied Soft Computing, 77, 547–566, 2019, doi: 10.1016/j.asoc.2019.02.004.

- E. Teymourian, V. Kayvanfar, G. M. Komaki, M. Zandieh, “Enhanced intel- ligent water drops and cuckoo search algorithms for solving the capacitated vehicle routing problem,” Information Sciences, 334, 354–378, 2016, doi: 10.1016/j.ins.2015.11.036.

- H. Duan, S. Liu, J. Wu, “Novel intelligent water drops optimization approach to single UCAV smooth trajectory planning,” Aerospace science and technology, 13(8), 442–449, 2009, doi:10.1016/j.ast.2009.07.002.

- D. R. Prabha, T. Jayabarathi, R. Umamageswari, S. Saranya, “Optimal lo- cation and sizing of distributed generation unit using intelligent water drop algorithm,” Sustainable Energy Technologies and Assessments, 11, 106–113, 2015, doi:10.1016/j.seta.2015.07.003.

- S. S. Shapiro, M. B. Wilk, “An analysis of variance test for normality (complete samples),” Biometrika, 52(3/4), 591–611, 1965, doi:10.2307/2333709.

- H. B. Mann, D. R. Whitney, “On a test of whether one of two random variables is stochastically larger than the other,” The annals of mathematical statistics, 50–60, 1947, doi:10.1214/aoms/1177730491.