SH-CNN: Shearlet Convolutional Neural Network for Gender Classification

Volume 5, Issue 6, Page No 1328-1334, 2020

Author’s Name: Chaymae Ziani a), Abdelalim Sadiq

MISC Laboratory, Faculty of Sciences, Ibn Tofail University, Kenitra, 14000, Morocco

a)Author to whom correspondence should be addressed. E-mail: chema.zin@gmail.com, chaymae.ziani@uit.ac.ma

Adv. Sci. Technol. Eng. Syst. J. 5(6), 1328-1334 (2020); DOI: 10.25046/aj0506158

Keywords: CNN, Shearlet transform, Gender prediction, Features extraction

Gender detection and age estimation have become an active and important research area, with applications including biometrics, social networks, targeted advertising, access control, human-computer interaction, electronic customers, etc. The need to further improve the recognition or classification rate keeps growing. In this paper, we explore how deep learning techniques can help classify gender from human face images and raise the recognition rate. We propose an approach called SH-CNN, based on the Discrete Shearlet Transform (DST) as a first feature extraction layer and a Deep Convolutional Neural Network (DCNN) as a second, automatic feature extraction layer that also performs the classification. The idea behind our contribution is to generate through the DST several features of an image (at different decomposition levels and orientations). These features are fed to the DCNN together with each image to enrich the training step and thus improve the recognition rate. The obtained results show that the proposed approach brings a significant enhancement.

Received: 04 September 2020, Accepted: 01 December 2020, Published Online: 16 December 2020

1. Introduction

This paper is an extension of work originally presented in The Third International Conference on Intelligent Computing in Data Sciences ICDS2019 [1].

Automatic age and gender classification of humans from their face images is a fundamental task in computer vision. It has been an important research focus in recent years and plays a key role in a large variety of applications. Over the same period, the Convolutional Neural Network (CNN) model has shown a high classification capacity, mainly for face images taken in real-world conditions. The performance of this model results from the high-level feature extraction and classification ensured by its layers.

The Feature Extraction Function (FEF) is a key factor that determines the efficiency of a face recognition system and is considered one of its most important parts. Several feature extraction functions have been proposed for face feature extraction, such as wavelets [2], SIFT [3], and others [4], [5]. The need for more efficient feature extraction functions keeps increasing, given the use of face gender recognition systems in sensitive and important areas. The Shearlet transform, used as a feature extraction function, generates more sensitive and significant features [6]-[8].

The field of machine learning has undergone a revolution with the appearance of CNNs, because a CNN can extract features of different complexities. Unlike traditional learning techniques, convolutional neural networks learn the features of each image automatically, and that is where their strength lies. Today, the most successful models for image classification are those based on CNNs. Since the model is based on learning, we enrich this phase with features extracted by the DST. The Discrete Shearlet Transform (DST) is used in our paper as a first feature extraction layer for our proposed SH-CNN. The idea is to extract more significant features of the input face to further enhance the gender recognition rate.

2. Related work

There is already a rich literature on age and gender classification that attempts to further improve the recognition rate. We briefly review the age and gender classification studies that use neural networks or convolutional neural networks. The authors in [9] present a structure combining a CNN and an Extreme Learning Machine (ELM), where the CNN extracts the features from the input images and the ELM classifies the intermediate results; the two classifiers cooperate to perform age and gender classification. In [10], the authors implement a CNN with 3 convolutional layers and 3 fully connected layers for age and gender estimation, trained exclusively on Adience. For prediction, they take several crops of different sizes around the face, and the final prediction is the average of the predictions over all these crops. They achieve a correct classification rate of 86.8% on the Adience test images. Deep gender classification is performed in [11], based on AdaBoost fusion of isolated facial features and foggy faces. The authors apply an approach based on local features of the face by separating it into several parts (mouth, eyes, nose). They also include the discriminating features surrounding the face while blurring the face itself. These images are then used to train several CNNs, followed by an AdaBoost classifier that combines the classification scores of the CNNs. This method yielded 91.75% on LFW and 83.06% on Adience using Gallagher as training data. In [12], the authors address the estimation problem with a classification-based model that treats each age value as an independent label and a separate class, and propose a deep convolutional neural network model that learns informative age and gender representations from the image pixels. A male/female identification method for very low resolution face images using a neural network was proposed in [13].

In [14], the authors propose a method named the whole-component CNN, which combines whole-face and facial-component networks to perform age/gender classification from facial image data. For age and gender classification, the authors in [15] propose a deep learning method in which the HOG feature extractor is applied to the input image of the network. The network structure used in this study is GoogLeNet. In [16], the authors present a review of advanced research techniques for gender classification, which can be useful for researchers working on this topic. In [17], a convolutional neural network is used to estimate the age and gender of a person from a facial image, and PCA is applied to reduce the dimensionality of the extracted features.

Among the few research works that combine CNNs and the shearlet transform, we find the contribution in [18], where the authors use an algorithm based on a CNN and the Shearlet transform to classify patients with Alzheimer’s disease and early or late mild cognitive impairment using florbetapir PET (positron emission tomography) amyloid imaging data. The authors concatenate shearlet coefficients into the convolution layers of the CNN.

Figure 1: Example of a DCNN architecture for gender prediction

To our knowledge, this is the first time that Shearlets are used as a first preprocessing step to enrich the training phase of the DCNN. The idea is to add a DST as a first treatment and to exploit the features extracted through its decompositions and orientations. These operations generate several feature views from one input image. Based on these generated features, we enrich the training phase by feeding the DCNN with the image and its feature views (the DST decompositions and orientations). Because the training phase is improved, the proposed approach enhances gender recognition.

3. Deep Convolutional neural network and shearlet transform

3.1. DCNN

Convolutional Neural Networks [19] were proposed mainly to handle the variability of 2D shapes. They have been widely used in computer vision, where they have shown the best results and outperformed other techniques. DCNNs have now become a major research direction in computer vision, especially for image classification.

A DCNN is a powerful model, capable of capturing effective information thanks to its architecture (Figure 1).

The DCNN architecture is divided into two steps: the first learns and extracts features, and the second performs classification. A CNN has four types of layers: convolutional, pooling, ReLU correction, and fully connected. These components work together to learn a dense feature representation of an input.

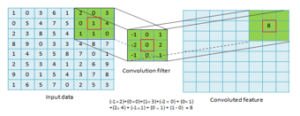

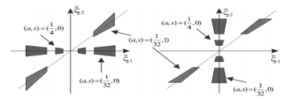

- The convolution layer (Figure 2) is the key component of a CNN and always constitutes its first layer. Its goal is to identify features in the images received as input. To do this, convolution filtering is applied: a window that represents a feature slides over the image, and the convolution product between the feature and each image part is computed. A feature is thus viewed as a filter. An activation map is obtained for each (image, filter) pair; it indicates where the feature is located in the image: the higher the value, the more the corresponding place in the image resembles the feature. These feature maps are then normalized (with an activation function) [20].

- The ReLU correction layer is used as an activation function. It is defined by ReLU(x) = max(0, x) [20] and replaces all negative values with zeros.

Figure 2: Convolution filter applied on input image

- The pooling layer (Figure 3) receives feature maps as input. The principle of pooling is to reduce the image size without losing the essential characteristics. In general, the pooling layer is placed between two convolution layers and aims to improve network learning by reducing unnecessary computation [20].

- The fully-connected layer [20] is the last layer of the network. It takes a vector as input, applies a linear combination and an activation function to it, and produces an output vector. The vector returned by this layer represents the possible output classes of the classification system: each value is the probability that the input image belongs to the corresponding class. The input coming from the previous layer contains activation maps, which represent the positions of features in the image. According to these positions, the fully-connected layer calculates the probability of each output class. In general, for DCNN classification systems, the softmax function is used at the end of the network [21].
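The four layer types listed above can be illustrated on a toy input. The following NumPy sketch is only an illustration under our own assumptions (a 6×6 toy image, a hand-written edge filter, and random fully-connected weights); it is not the implementation used in the experiments.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the activation map."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Sliding-window product (cross-correlation), as computed by CNN layers.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """Replace every negative value with zero."""
    return np.maximum(0, x)


def max_pool(x, size):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h = (x.shape[0] // size) * size
    w = (x.shape[1] // size) * size
    blocks = x[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


def softmax(z):
    """Turn a score vector into probabilities that sum to 1."""
    e = np.exp(z - np.max(z))
    return e / e.sum()


# Toy image (assumption): dark left half, bright right half.
image = np.array([[0, 0, 0, 1, 1, 1]] * 6, dtype=float)
# Toy filter (assumption): responds to dark-to-bright vertical edges.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

activation = relu(conv2d_valid(image, kernel))  # 4x4 activation map
pooled = max_pool(activation, 2)                # 2x2 map after pooling

# Fully-connected layer with random weights (assumption), then softmax over 2 classes.
rng = np.random.default_rng(0)
weights = rng.normal(size=(pooled.size, 2))
probs = softmax(pooled.flatten() @ weights)
```

The activation map is highest where the window straddles the edge, which is the sense in which the map "locates" the feature in the image.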

Figure 3: Example of a max pooling operation [22]

3.2. Shearlet transform

A multidimensional version of the wavelet transform, named the Shearlet transform, was introduced in 2006 [23]; it encodes anisotropic features in multivariate problem classes. The limitation of wavelets is their inability to capture anisotropic features. The Shearlet transform therefore provides optimally sparse representations for images with edges, surpassing wavelets.

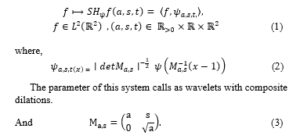

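The successful use of shearlets in many image processing applications, especially face detection, feature extraction and denoising [24]-[26], is due to their properties: parabolic scaling, shearing, and translation. Explicitly, the Continuous Shearlet Transform (CST) [27] of a function $f$ is defined as:

$$SH_\psi f(a,s,t) = \langle f, \psi_{a,s,t} \rangle, \qquad \psi_{a,s,t}(x) = |\det M_{a,s}|^{-1/2}\, \psi\big(M_{a,s}^{-1}(x - t)\big), \qquad M_{a,s} = \begin{pmatrix} a & \sqrt{a}\,s \\ 0 & \sqrt{a} \end{pmatrix}$$

The matrix $M_{a,s}$ is factorized as $M_{a,s} = B_s A_a$, where $B_s = \begin{pmatrix} 1 & s \\ 0 & 1 \end{pmatrix}$ is a shearing matrix and $A_a = \begin{pmatrix} a & 0 \\ 0 & \sqrt{a} \end{pmatrix}$ is a parabolic scaling matrix (or anisotropic dilation matrix).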

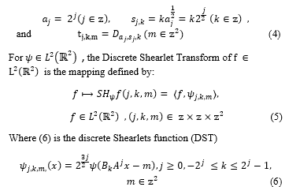

The Shearlet transform function is based on three variables (Figure 4): $a$ is the scale parameter measuring the resolution level, $s$ is the shear parameter measuring the directionality, and $t$ is the translation parameter measuring the position.
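As a small numerical check of how scaling and shearing combine, the sketch below builds a parabolic scaling matrix diag(a, √a) and a shearing matrix [[1, s], [0, 1]] and multiplies them. The sign convention of the shear entry differs between papers, so the matrices and the function names used here are assumptions chosen for illustration.

```python
import numpy as np


def scaling_matrix(a):
    """Parabolic (anisotropic) scaling: a along x, sqrt(a) along y."""
    return np.array([[a, 0.0], [0.0, np.sqrt(a)]])


def shear_matrix(s):
    """Shearing matrix with shear parameter s (sign convention assumed)."""
    return np.array([[1.0, s], [0.0, 1.0]])


def shearlet_matrix(a, s):
    """Combined matrix: shear applied after parabolic scaling."""
    return shear_matrix(s) @ scaling_matrix(a)


a, s = 0.25, 1.0
M = shearlet_matrix(a, s)

# The determinant depends only on the scale: det M = a * sqrt(a) = a^(3/2),
# so the normalization |det M|^(-1/2) equals a^(-3/4).
det_M = np.linalg.det(M)
normalization = abs(det_M) ** -0.5
```

Because the shear matrix has determinant 1, changing the orientation does not change the energy normalization; only the scale does.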

To obtain a frame (or Parseval frame) for $L^2(\mathbb{R}^2)$, the CST is sampled on a suitable discrete set of the three parameters: scale, shear, and translation. Discrete shearlets are generated by sampling those three parameters, with the scaling and shearing matrix discretized accordingly. The discrete transform thus maps a function $f$ to coefficients indexed by the scale $j$, the orientation $k$, and the position $m$.

Figure 4: The horizontal and vertical shearlets Frequency support [28]

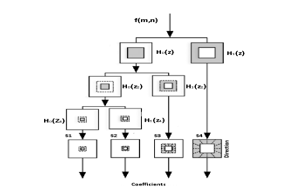

Figure 5: The principle of Shearlet transform (4 scales example) [29].

The shearlet transform is a decomposition function based on a set of scales and directions. As shown in Figure 5, the decomposition is done over 4 scale levels, denoted S1, S2, S3, and S4, with 6 directionally filtered coefficients each. First, the input image is decomposed into a low-pass image and a high-pass image using a two-channel non-subsampled decomposition [30], [31]. The filters used are H0(z) for the low-pass filter and H1(z) for the high-pass filter. The high-pass image is then processed in two steps: first, it is transformed to the frequency domain using the two-dimensional Fourier transform; second, a Cartesian coordinate system with six directions is used to generate six directional subbands from the frequency domain. The two-channel non-subsampled decomposition is then applied again to the low-pass image, and the same steps are repeated. The shearlet coefficients are the result of this transformation [29].
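The loop structure described above (low-pass/high-pass split, Fourier transform of the high-pass image, directional partition, repeat on the low-pass image) can be sketched with NumPy. This is a deliberately simplified stand-in, not a true shearlet transform: the Gaussian low-pass filter, the hard angular wedges, and all function names are assumptions made for illustration.

```python
import numpy as np


def split_low_high(image, sigma):
    """Two-channel split: Gaussian low-pass in the frequency domain, high-pass as residual."""
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    lowpass = np.exp(-(fx ** 2 + fy ** 2) / (2 * sigma ** 2))
    low = np.real(np.fft.ifft2(np.fft.fft2(image) * lowpass))
    return low, image - low


def directional_subbands(high, n_dirs):
    """Cut the spectrum of `high` into n_dirs angular wedges and invert each one."""
    fy = np.fft.fftfreq(high.shape[0])[:, None]
    fx = np.fft.fftfreq(high.shape[1])[None, :]
    # Orientation of each frequency sample, folded into [0, pi).
    angle = np.mod(np.arctan2(fy, fx), np.pi)
    index = np.minimum((angle / np.pi * n_dirs).astype(int), n_dirs - 1)
    spectrum = np.fft.fft2(high)
    return [np.real(np.fft.ifft2(spectrum * (index == d))) for d in range(n_dirs)]


def shearlet_like_decomposition(image, directions=(6, 6, 6, 6), sigma=0.25):
    """Repeat split + directional filtering on the low-pass image, one pass per scale."""
    current, levels = image.astype(float), []
    for n_dirs in directions:
        low, high = split_low_high(current, sigma)
        levels.append(directional_subbands(high, n_dirs))
        current, sigma = low, sigma / 2  # narrower low-pass at the next scale
    return current, levels


rng = np.random.default_rng(0)
image = rng.random((32, 32))
low, levels = shearlet_like_decomposition(image)

# The wedges partition the frequency plane, so nothing is lost:
reconstruction = low + sum(sum(bands) for bands in levels)
```

Each subband keeps the size of the input image, which mirrors the non-subsampled nature of the decomposition described in the text.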

4. Proposed Approach

The motivation for this solution is to enrich the learning phase in order to improve gender recognition. To enrich the DCNN learning input sample, we propose to use a layer based on the DST as the first preprocessing step of the CNN. This layer generates, from each CNN learning input image, several views made up of the features produced by the decompositions and orientations of the DST. In the learning phase, the input is then represented not just by the images but also by their feature views. In this way, we increase the input sample from the same number of starting images. Such a technique is particularly useful when the image sample in the training phase is small.

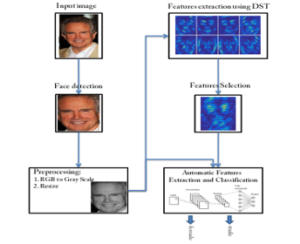

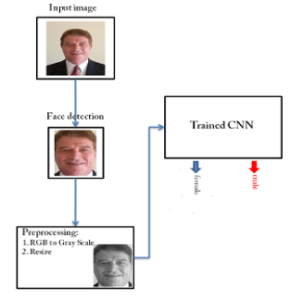

In our proposed approach, the training and testing phases of the system perform the following essential operations, each of which contributes to a high recognition level (Figure 6 and Figure 7):

- Face detection

- Preprocessing

- Features extraction using DST

- Automatic features extraction and classification

Figure 6: Proposed SH-CNN approach (training phase)

4.1. Face detection

In our approach, Haar cascades [32] are used for face detection in order to discard unusable information in the image, so that we focus only on the faces.

4.2. Preprocessing

At this level, two main treatments are applied to each face photo. The first converts the photo from RGB color to gray level. The second reduces the size of the face photo. These steps reduce the computation time of feature extraction and of the CNN layers.
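The two preprocessing treatments can be sketched as follows. The paper does not state which gray-level conversion or resizing method was used, so the luma weights (ITU-R BT.601) and the block-averaging downscale below are assumptions; the 200×200 to 100×100 sizes follow the dataset description in Section 5.1.

```python
import numpy as np


def to_gray(rgb):
    """Convert an (H, W, 3) RGB array to gray levels with BT.601 luma weights (assumed)."""
    return rgb[..., :3] @ np.array([0.299, 0.587, 0.114])


def downscale(gray, factor):
    """Shrink by an integer factor by averaging each factor x factor block (assumed method)."""
    h, w = gray.shape[0] // factor, gray.shape[1] // factor
    blocks = gray[:h * factor, :w * factor].reshape(h, factor, w, factor)
    return blocks.mean(axis=(1, 3))


# Random stand-in for a detected 200x200 RGB face crop.
rng = np.random.default_rng(0)
face = rng.integers(0, 256, size=(200, 200, 3)).astype(float)

small = downscale(to_gray(face), 2)  # 100x100 gray-level image
```

Going from three 200×200 channels to one 100×100 channel divides the number of values per face by 12, which is where the saving in computation time comes from.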

Figure 7: Proposed SH-CNN approach (testing phase)

4.3. Features extraction using DST

The Feature Extraction Function (FEF) is a key factor that determines the efficiency of a gender recognition system. The DST is applied as the FEF to each face image: the image is decomposed along a number of directions and scales to extract important facial features. As a result, for each image we get a set of images in different orientations. This enriches the CNN’s learning step and facilitates the classification phase, which is the goal of combining the DST with the CNN. This operation is used only in the training phase, so it does not increase the processing time in the testing phase.

4.4. Automatic features extraction and classification (CNN)

The idea is very simple. The trainable system consists of a series of modules, each one representing a processing step. Each module is trainable, with adjustable parameters similar to the weights of linear classifiers. The system is trained from end to end: in each instance, all parameters of all modules are adjusted to bring the output produced by the system closer to the desired output.

To enrich the training phase, we feed our CNN with the face and the corresponding coefficients calculated using the DST. This operation allows the CNN to better adapt its weights and strengthens the learning step.
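One way to picture this enrichment is as a data-set construction step in which every feature view inherits the gender label of the face it came from. The sketch below is hypothetical: `enrich_training_set` and `gradient_views` are names introduced here, and a simple image gradient stands in for the DST coefficients, which are not computed in this example.

```python
import numpy as np


def enrich_training_set(faces, labels, feature_views):
    """Return each face followed by its feature views, all sharing the face's label.

    `feature_views` is a hypothetical callable mapping one (H, W) face
    to a list of (H, W) arrays, e.g. selected DST coefficient images.
    """
    images, targets = [], []
    for face, label in zip(faces, labels):
        for view in [face, *feature_views(face)]:
            images.append(view)
            targets.append(label)
    return np.stack(images), np.array(targets)


def gradient_views(face):
    """Placeholder only: a horizontal gradient stands in for one DST coefficient image."""
    _, gx = np.gradient(face)
    return [gx]


rng = np.random.default_rng(0)
faces = rng.random((5, 100, 100))   # five preprocessed 100x100 faces
labels = [0, 1, 1, 0, 1]            # hypothetical gender labels

X, y = enrich_training_set(faces, labels, gradient_views)
```

With one view per face, five starting images become ten training samples. At test time only the face itself would be passed to the network, so this step adds no cost to prediction.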

5. Experiment results

This section presents the experimental results of our proposed approach. Our method is implemented in Python, with the TensorFlow [33] (an open-source machine learning framework) and Keras [34] (a deep learning API) libraries for CNN learning and classification.

5.1. UTKFace dataset

The UTKFace dataset [35] is composed of over 20,000 face images (e.g. Figure 8), which are tagged with age and gender. The images of this dataset present multiple facial expressions and come in both high and low resolution, which allows our system to be tested thoroughly. The UTKFace dataset is frequently used for gender/age classification, face detection, etc.

The image size is 200×200 pixels. We resized all faces to 100×100 pixels.

Figure 8: Faces from the UTKFace dataset

5.2. Operations of extracting features and classification

In the previous section, we presented our approach with successive operations. In this section, we present more details on the proposed SH-CNN approach for automatic gender recognition.

The DST ensures a good representation of images with edges. At the feature extraction step, the preprocessed images were decomposed into 4 levels (Table 1) by varying the shear parameter across the directions of each level and the scale parameter from 0 to 3, which yields shearlet coefficients in many directions (Figure 9). As stated before, these coefficients are later used as input to a CNN. We repeat this procedure for all faces of the training database.

Table 1: DST parameters used.

| Decomposition level | Directions | Shearing filter size |
| --- | --- | --- |
| Level 1 | 4 | 32×32 |
| Level 2 | 8 | 32×32 |
| Level 3 | 16 | 16×16 |
| Level 4 | 16 | 16×16 |
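As a quick arithmetic check on Table 1, the snippet below counts the directional coefficient images available per face. It assumes a non-subsampled transform in which every subband keeps the 100×100 size of the preprocessed face; the variable names are introduced here for illustration.

```python
# Directions per decomposition level, copied from Table 1.
directions_per_level = {1: 4, 2: 8, 3: 16, 4: 16}

# Total number of directional subbands generated for one face.
total_subbands = sum(directions_per_level.values())

# Coefficient values per face if each subband is 100x100 (assumption).
values_per_face = total_subbands * 100 * 100
```

This gives 44 candidate feature views per face, of which the experiments in Section 5.3 use only one.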

In our proposed approach, and in all gender classification experiments, we used a CNN composed of three convolutional layers and one fully-connected layer. Gender classification on the face dataset only requires distinguishing between two classes, which is why we chose a small network.

The input layer is a face image of size 100 × 100 pixels. The three convolutional layers have 96 filters of size 7 × 7, 256 filters of size 5 × 5, and 384 filters of size 3 × 3, respectively. Each convolution layer is followed by a ReLU function and a max pooling layer (taking the maximum over a 3×3 window). A flatten layer then feeds the 512 neurons of the fully connected layer. The next layer is fully connected and maps to the final gender classes. As a last step, a softmax layer assigns a probability to each class. The class with the highest probability is the prediction.
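The layer sizes above can be followed through the network with a little shape arithmetic, and the same stack can be written in Keras. Strides, padding, and the activation of the 512-neuron layer are not given in the text, so the sketch assumes stride-1 convolutions without padding, non-overlapping 3×3 pooling, and ReLU on the dense layer; `build_sh_cnn` is a name introduced here, not the authors' code.

```python
def conv_out(size, kernel):
    """Spatial size after a stride-1 convolution without padding."""
    return size - kernel + 1


def pool_out(size, window):
    """Spatial size after non-overlapping pooling (stride equal to the window)."""
    return (size - window) // window + 1


# Follow the 100x100 input through the three conv + pool stages.
size = 100
for kernel in (7, 5, 3):
    size = pool_out(conv_out(size, kernel), 3)

# Length of the flattened vector fed to the 512-neuron layer.
flattened = size * size * 384


def build_sh_cnn(input_shape=(100, 100, 1), n_classes=2):
    """Keras version of the same stack; needs TensorFlow, imported lazily here."""
    from tensorflow import keras
    from tensorflow.keras import layers

    return keras.Sequential([
        keras.Input(shape=input_shape),
        layers.Conv2D(96, 7, activation="relu"),
        layers.MaxPooling2D(pool_size=3),
        layers.Conv2D(256, 5, activation="relu"),
        layers.MaxPooling2D(pool_size=3),
        layers.Conv2D(384, 3, activation="relu"),
        layers.MaxPooling2D(pool_size=3),
        layers.Flatten(),
        layers.Dense(512, activation="relu"),
        layers.Dense(n_classes, activation="softmax"),
    ])
```

Under these assumptions the spatial size shrinks 100 → 94 → 31 → 27 → 9 → 7 → 2, so the flatten layer produces 2 × 2 × 384 = 1536 values before the 512-neuron layer.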

The settings used in these experiments reflect our needs for both the feature extraction part and the CNN classification part. For the DST feature extraction part, existing works generally recommend approximately 3, 4, or 5 decomposition levels with (4, 8, 16, 32) directions [6]-[8], [26]. In our contribution, we used four levels with (4, 8, 16, 16) directions, a setting with which the results clearly show the added value of our contribution. For the CNN classification part, we used three convolutional layers with kernel sizes of 7×7, 5×5, and 3×3 in order to reduce the training time. For the fully connected layer, we used 512 neurons, since larger values for a 100×100 input image do not improve the results and, on the contrary, increase the computation time.

Figure 9: (a) Detected face photo (b) 1st decomposition level with 4 orientations applied on the detected face (c) 2nd decomposition level with 8 orientations applied on the detected face.

Figure 10: Proposed CNN architecture

5.3. Results and discussion

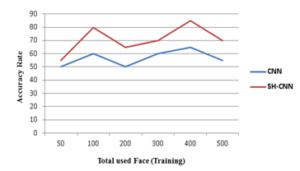

For the comparison phase of the proposed approach (SH-CNN), we carried out a direct comparison with the plain CNN, without considering the other existing CNN-based models proposed to improve gender recognition. Our first objective was to test the added value of SH-CNN compared to the CNN. In addition, the proposed preprocessing layer placed in front of the CNN can equally be placed in front of any CNN-based model, because the added layer acts as an enrichment phase for the training sample of the CNN. For these reasons, the comparison is made with the CNN directly.

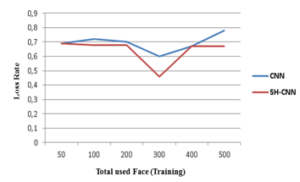

The obtained results in terms of accuracy rate and loss rate (see Figures 11 and 12) clearly show the added value of our proposed approach. The coefficients generated for each face using the DST enrich the training phase as expected and thus increase the recognition rate. In this simulation, we used just one generated DST coefficient as input together with the associated face. Using more coefficients as input to enrich the training phase may yield a more efficient recognition system; this will be our next research work. Also, in our simulation, we used just 3 epochs to show the capacity of our approach to increase the accuracy rate within a few epochs.

Figure 11: The obtained Accuracy Rate (CNN vs SH-CNN)

Figure 12: The obtained Loss Rate (CNN vs SH-CNN)

6. Conclusion

The need to increase the gender recognition rate has pushed us to search for lower-cost solutions (processing time, dataset size, etc.). In this paper, we proposed an approach based on a CNN with the DST as a first layer. The idea behind this solution is to enrich the training phase by using the DST coefficients generated for each image. The obtained results show the clear added value of this approach. The accuracy rate of our system is also related to the capacity of the DST to extract the significant features of each image.

In our future work, we will add age as an output of our proposed system. We will also experiment with higher and lower numbers of epochs, and explore the capacity of our proposal on other databases (FERET, etc.).

References

- C. Ziani, A. Sadiq, “Shearlet Convolutional Neural Network Approach for Age and Gender recognition,” in 2019 Third International Conference on Intelligent Computing in Data Sciences (ICDS), 1-4, 2019, doi: 10.1109/ICDS47004.2019.8942359.

- K. Ramesha, K. B. Raja, “Face Recognition System Using Discrete Wavelet Transform and Fast PCA,” in Information Technology and Mobile Communication, 147, 13-18, 2011, https://doi.org/10.1007/978-3-642-20573-6_3.

- P. G. Ambhore, L. Bijole, “Face Sketch to Photo Matching Using LFDA,” International Journal of Science and Research (IJSR), 3(4), 11-14, 2014.

- J .Qian, J. Yang, “A Novel Feature Extraction Method for Face Recognition under Different Lighting Conditions,” in Chinese Conference on Biometric Recognition (CCBR), 7098, 17-24 , 2011, https://doi.org/10.1007/978-3-642-25449-9_3.

- T. T. Do, T. H. Le, ” Facial Feature Extraction Using Geometric Feature and Independent Component Analysis,” In Knowledge Acquisition: Approaches, Algorithms and Applications, 5465, 231-241, 2009, https://doi.org/10.1007/978-3-642-01715-5_20.

- Z. Zeng, J. Hu, “Face recognition based on shearlets transform and principle component analysis,” in 2013 5th International Conference on Intelligent Networking and Collaborative Systems, 697-701, 2013, doi: 10.1109/INCoS.2013.134.

- A. Danti, K. M. Poornima, “Face Recognition using Shearlets,” in 2012 IEEE 7th International Conference on Industrial and Information Systems, 1-6, 2012, doi: 10.1109/ICIInfS.2012.6304796.

- X. Sun, X. Wu, and Y. Wei, “Face Recognition Based on Shearlet Transform and Fast ICA,” in 2014 Fourth International Conference on Instrumentation and Measurement, Computer, Communication and Control, 832–835, 2014, doi: 10.1109/IMCCC.2014.175.

- M. Duan, K. Li, C. Yang, K. Li, “A hybrid deep learning cnn–elm for age and gender classification” , Neurocomputing, 275, 448–461, 2018, https://doi.org/10.1016/j.neucom.2017.08.062

- G. Levi, T. Hassner, “Age and gender classification using convolutional neural networks,” in 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 34-42, 2015, doi: 10.1109/CVPRW.2015.7301352.

- A. Mahmoud ,A. Abdelhamed, “AFIF4: Deep Gender Classification based on AdaBoost-based Fusion of Isolated Facial Features and Foggy Faces,” Journal of Visual Communication and Image Representation, 62, 77-86, 2019 , https://doi.org/10.1016/j.jvcir.2019.05.001.

- O. Agbo-Ajala, S. Viriri, “ Age Group and Gender Classification of Unconstrained Faces,” In Advances in Visual Computing, 11844, 418-429 , 2019 https://doi.org/10.1007/978-3-030-33720-9_32.

- S. Tamura, H. Kawai, H. Mitsumoto, “Male/female identification from 8 × 6 very low resolution face images by neural network,” Pattern Recognition. 29(2), 331-335, 1996, https://doi.org/10.1016/0031-3203(95)00073-9

- C. Huang, Y. Chen, R. Lin , C. J. Kuo, “Age/gender classification with whole-component convolutional neural networks (WC-CNN),” 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), 1282-1285, 2017, doi: 10.1109/APSIPA.2017.8282221.

- S. Lim. “Estimation of Gender and Age Using CNN-Based Face Recognition Algorithm.” International Journal of Advanced Smart Convergence , 9(2) , 203–211, 2020, doi:10.7236/IJASC.2020.9.2.203.

- C. P. Divate, S. Z. Ali, “Study of Different Bio-Metric Based Gender Classification Systems,” in 2018 International Conference on Inventive Research in Computing Applications (ICIRCA), 347-353, 2018, doi: 10.1109/ICIRCA.2018.8597340.

- B. Agrawal, M. Dixit, “Age Estimation and Gender Prediction Using Convolutional Neural Network,” In: Intelligent Computing Applications for Sustainable Real-World Systems ,Proceedings in Adaptation, Learning and Optimization, 13, 163-175, 2020, https://doi.org/10.1007/978-3-030-44758-8_15.

- E. Jabason, M. O. Ahmad, M. N. S. Swamy, “Shearlet based Stacked Convolutional Network for Multiclass Diagnosis of Alzheimer’s Disease using the Florbetapir PET Amyloid Imaging Data,” in 2018 16th IEEE International New Circuits and Systems Conference (NEWCAS), 344-347, 2018, doi: 10.1109/NEWCAS.2018.8585550.

- Y. Lecun, L. Bottou, Y. Bengio, P. Haffner, “Gradient-based learning applied to document recognition,” in Proceedings of the IEEE, 86(11), 2278-2324, 1998, doi: 10.1109/5.726791.

- R. Waseem , Z. Wang. “Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review,” Neural Computation, 29(9), 2352-2449, 2017, https://doi.org/10.1162/neco_a_00990

- “Machine Learning, Max Pooling With Color Images”, https://dev.to/sandeepbalachandran/machine-learning-max-pooling-with-color-images-2i21, 2020

- A. Krizhevsky, I. Sutskever, G. E. Hinton, ”ImageNet classification with deep convolutional neural networks,” Commun.ACM, 60(6), 84–90, 2017, https://doi.org/10.1145/3065386

- K. Guo, G. Kutyniok, D. Labate, “Sparse multidimensional representations using anisotropic dilation and shear operators,” Wavelets and Splines, Nashboro Press, Nashville, 189–201, 2006.

- G.R. Easley, D. Labate, “Critically sampled wavelets with composite dilations,” IEEE Trans. Image Process, 21(2), 550-561, 2012, 10.1109/TIP.2011.2164415.

- G.R. Easley, D. Labate ,V. Patel, “Directional multiscale processing of images using wavelets with composite dilations,” J. Math. Imag. Vision, 48, 13-44, 2014, https://doi.org/10.1007/s10851-012-0385-4.

- S. Yi, D.Labate, G.R. Easley, H. Krim, “A Shearlet approach to Edge Analysis and Detection,” IEEE Trans. Image Process, 18(5), 929–941, 2009, doi: 10.1109/TIP.2009.2013082.

- G. Kutyniok , D. Labate, “Resolution of the wavefront set using continuous shearlets,” Transactions of the American Mathematical Society, 361(5), 2719-2754, 2009, http://www.jstor.org/stable/40302874.

- C. Liu, D.Wang, J.Sun, T.Wang, “Crossline-direction reconstruction of multi-component seismic data with shearlet sparsity constraint,” Journal of Geophysics and Engineering, 15(5), 1929–1942, 2018, https://doi.org/10.1088/1742-2140/aac097.

- F.Yuan, L. Po , M.Liu, X. Xu, W. Jian., K. Wong, K. Cheung , “Shearlet Based Video Fingerprint for Content-Based Copy Detection,” Journal of Signal and Information Processing, 7, 84-97. doi: 10.4236/jsip.2016.72010.

- J.P.Zhou, A.L. Cunha, M.N. Do, “Nonsubsampled Contourlet Transform Construction and Application Enhancement,” IEEE International Conference on Image Processing, 1-469, 2005, doi: 10.1109/ICIP.2005.1529789.

- G.Easley, D.Labate, W.Q.Lim , ”Sparse Directional Image Representations Using the Discrete Shearlet Transform,” Applied and Computational Harmonic Analysis, 25, 25-46, 2007, http://dx.doi.org/10.1016/j.acha.2007.09.003

- P. Viola, M. J. Jones, “Rapid Object Detection using a Boosted Cascade of Simple Features”, IEEE Computer Society, 1, 511-518, 2001, doi: 10.1109/CVPR.2001.990517.

- “Keras”, https://keras.io/, 2020

- “TensorFlow”, https://www.tensorflow.org/federated, 2020

- “UTKface database”, https://susanqq.github.io/UTKFace/, 2020