Using Classic Networks for Classifying Remote Sensing Images: Comparative Study

Volume 5, Issue 5, Page No 770–780, 2020

Adv. Sci. Technol. Eng. Syst. J. 5(5), 770–780 (2020);

DOI: 10.25046/aj050594

Keywords: Remote Sensing Images, ResNet, VGG, DenseNet, CNN, NASNet, Deep Learning, Machine Learning

This paper presents a comparative study of classic networks for remote sensing image classification. Four deep convolution models are compared: the DenseNet 169, the NASNet Mobile, the VGG 16, and the ResNet 50 models. Each model uses the ImageNet pre-trained weights through transfer learning, followed by an added fully connected layer whose size matches the number of classes in the dataset. Two datasets are used in this comparison: the UC Merced land use dataset and the SIRI-WHU dataset. The comparison is based on inspecting the learning curves, to determine how well each model trains, and on calculating the overall accuracy, which measures the model performance. The comparison shows that the ResNet 50 model has the highest overall accuracy and the NASNet Mobile model has the lowest overall accuracy in this study. The DenseNet 169 model has a slightly higher overall accuracy than the VGG 16 model.

Introduction

With the rapid development of communication sciences, especially satellites and cameras, remote sensing images have become widely available, and with them the importance of processing this type of image. One important image processing task is classification, which is performed using machine learning. Machine learning is a branch of artificial intelligence based on training computers with real data so that they can produce estimations as good as those of a human expert for the same type of data [1]. In 1959, Arthur Samuel defined machine learning as “the field of study that gives computers the ability to learn without being explicitly programmed” [2]. In 1998, Tom Mitchell stated the machine learning problem as “a computer program is said to learn from experience E with respect to some task T and some performance measure P” if its performance on T, as measured by P, improves with experience E [3]. Deep learning is a branch of machine learning that relies on Artificial Neural Networks (ANNs), which can be used for remote sensing image classification [4]. In recent years, the latest satellites and their updated cameras with high spectral and spatial resolution have produced very high resolution (VHR) remote sensing images. The redundant pixels in VHR remote sensing images can cause over-fitting during training when ordinary machine learning or ordinary deep learning is used for classification. Therefore, the ANNs must be optimized, and suitable features must be extracted from the remote sensing images as a preprocessing step before training on the dataset [5-6]. Convolution Neural Networks (CNNs) are derived from the ANNs, but their layers are not fully connected like the ANN layers; they have driven rapid advances in computer vision [7].
CNNs apply blocks of filters to an image to extract convolutional object features, which can be used to solve many computer vision problems, including classification [8]. The need to process the huge amount of data that came with the advent and rapid development of VHR remote sensing images created the need for deep CNN architectures that achieve high accuracy in classification problems. This led to the appearance of the classic networks. Many classic networks have been established by researchers and reported in the literature. However, this paper inspects four well-known classic networks: the DenseNet 169, the NASNet Mobile, the VGG 16, and the ResNet 50 models. These four network models have been used for remote sensing image classification in many research papers. In [9], the authors used the DenseNet to propose a new model that improved the classification accuracy. In [10], the authors used the DenseNet model to build dual-channel CNNs for hyper-spectral image feature extraction. In [11], the authors proposed a convolutional network based on the DenseNet model for remote sensing image classification. They built a small number of convolutional kernels using dense connections to produce a large number of reusable feature maps, which made the network deeper without increasing the number of parameters. In [12], the authors proposed a remote sensing image classification method based on the NASNet model. In [13], the authors used the NASNet model as a feature descriptor, which improved the performance of their trained network. In [14], the authors proposed the RS-VGG classifier, which uses the VGG model, for classifying remote sensing images.
In [15], the authors combined the outputs of several CNN algorithms, including the output of the VGG model, and then constructed a representation of the VHR remote sensing images for VHR remote sensing image understanding. In [16], the authors used the pre-trained VGG model to recognize airplanes in remote sensing images. In [17], the authors built a fully convolutional network based on the VGG model for classifying high spatial resolution remote sensing images. They fine-tuned their model parameters using the ImageNet pre-trained VGG weights. In [18], the authors proposed using the ResNet model to generate ground scene semantic features from VHR remote sensing images, which were then concatenated with low-level features to generate a more accurate model. In [19], the authors proposed a classification method for hyper-spectral remote sensing images that combines the 3-D separable ResNet model with cross-sensor transfer learning. In [20], the authors used the ResNet model to propose a novel method for classifying forest tree species using high resolution RGB color images captured by a simple camera mounted on an unmanned aerial vehicle (UAV) platform. In [21], the authors proposed an aircraft detection method based on the deep ResNet model and super vector coding. In [22], the authors proposed a remote sensing image usability assessment method based on the ResNet model that combines edge and texture maps.

The aim of this paper is to compare the use of the classic deep convolution network models in classifying remote sensing images. The networks used in this comparison are the DenseNet 169, the NASNet Mobile, the VGG 16, and the ResNet 50 models. The comparative study is based on inspecting the learning curves of the training and validation loss and of the training and validation accuracy at each epoch of the training process. This inspection is done to determine the efficiency of the selected model hyper-parameters. The study is also based on calculating the overall accuracy (OA) of these four models in remote sensing image classification to determine the performance of each learning model. Two datasets are used in this comparison: the UC Merced Land use dataset and the SIRI-WHU dataset.

The rest of this paper is organized as follows. Section 2 gives the methods. The experimental setup and results are shown in section 3. Section 4 presents the conclusions, followed by the most relevant references.

The Methods

In this section, the models used in this comparative study are explained along with their structures. The classic networks used in this study are briefly reviewed, ending with how the performance of the learning models is assessed.

The Used Models

Feature extraction from remote sensing images provides an important basis for remote sensing image analysis. So, in this study, the outputs of the deep classic convolution networks are considered the main features extracted from the remote sensing images. In these four networks, we used the ImageNet pre-trained weights because training new CNN models requires a large amount of data. We apply transfer learning, add fully connected (FC) layers whose output layer contains as many neurons as the dataset has classes (21 for the UC Merced land use dataset and 12 for the SIRI-WHU dataset), and then train these FC layers.

In the DenseNet 169 model, we apply transfer learning up to the last hidden layer before the output layer (which has 1664 neurons), take the output of this network with the ImageNet pre-trained weights, and then add an FC layer (the output layer) with softmax activation.

In the NASNet Mobile model, we apply transfer learning up to the last hidden layer before the output layer (which has 1056 neurons), take the output of this network with the ImageNet pre-trained weights, and then add an FC layer (the output layer) with softmax activation.

In the VGG 16 model, we apply transfer learning up to the output of the last max pooling layer in block 5 (which has shape 7, 7, 512), located before the first FC layer, take the output of this network with the ImageNet pre-trained weights, and then add an FC layer (the output layer) with softmax activation.

In the ResNet 50 model, we apply transfer learning up to the last hidden layer before the output layer (which has 2048 neurons), take the output of this network with the ImageNet pre-trained weights, and then add an FC layer (the output layer) with softmax activation.
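The four descriptions above share one pattern: a frozen ImageNet backbone cut at a given layer, followed by a single trainable softmax FC layer. The following is a minimal sketch of that pattern, assuming the `tf.keras.applications` constructors. The paper states that Keras was used but does not list the exact calls, so the function name `build_classifier` and the table `FEATURE_SHAPES` are hypothetical, and the additional activation and dropout layers mentioned later in the experimental setup are left out.

```python
# Feature shapes stated in the text for the layer where each backbone is cut.
FEATURE_SHAPES = {
    "DenseNet169": (1664,),
    "NASNetMobile": (1056,),
    "VGG16": (7, 7, 512),
    "ResNet50": (2048,),
}


def build_classifier(backbone_name, num_classes, weights="imagenet"):
    """Frozen pre-trained backbone followed by one trainable softmax FC layer."""
    # Imported lazily so the table above can be inspected without TensorFlow.
    import tensorflow as tf

    constructors = {
        "DenseNet169": tf.keras.applications.DenseNet169,
        "NASNetMobile": tf.keras.applications.NASNetMobile,
        "VGG16": tf.keras.applications.VGG16,
        "ResNet50": tf.keras.applications.ResNet50,
    }
    # VGG 16 is cut at the block 5 max pooling output; the other three
    # backbones are cut after global average pooling.
    pooling = None if backbone_name == "VGG16" else "avg"
    backbone = constructors[backbone_name](
        include_top=False,
        weights=weights,
        input_shape=(224, 224, 3),
        pooling=pooling,
    )
    backbone.trainable = False  # keep the ImageNet weights fixed

    features = backbone.output
    if backbone_name == "VGG16":
        # Flatten the (7, 7, 512) feature map before the FC layer.
        features = tf.keras.layers.Flatten()(features)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(features)
    return tf.keras.Model(inputs=backbone.input, outputs=outputs)
```

Under these assumptions, `build_classifier("ResNet50", 21)` would correspond to the UC Merced land use dataset and `build_classifier("ResNet50", 12)` to the SIRI-WHU dataset.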

The Convolution Neural Networks (CNNs)

The CNNs are derived from the ANNs, except that their layers are not fully connected. The CNNs are the leading solution for computer vision; they use a set of filters to reduce the image height and width while increasing the number of channels, and then process the output with fully connected (FC) neural network layers. This reduces the number of input layer neurons, reduces the training time, and increases the performance of the trained model [23-25]. The filter values are initialized using random functions and can then be optimized.

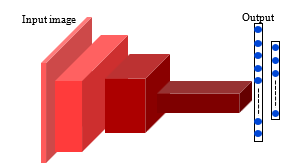

Figure 1: An example of a CNN model structure [25]

The filter design relies on intuition, as do the ANN structure design and the choice of the learning hyper-parameters, which increases the difficulty of reaching the best solution for a learning problem [25]. Figure 1 shows an example of a CNN model structure [24].

The DenseNet Model

In 2017, DenseNets were proposed at the CVPR 2017 conference (Best Paper Award) [26]. The authors set out to build a deeper network based on the idea that a convolution network can be more accurate and more efficient to train if it contains shorter connections between the layers close to the input and those close to the output. Unlike the ResNet model, which adds a skip connection that bypasses the nonlinear transformation, the DenseNet adds a direct connection from any layer to all subsequent layers. So the $l$-th layer receives the feature maps of all preceding layers, $x_0$ to $x_{l-1}$, as in (1) [26].

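$$x_l = H_l([x_0, x_1, \ldots, x_{l-1}]) \qquad (1)$$

where $H_l(\cdot)$ is the non-linear transformation of the $l$-th layer and $[x_0, x_1, \ldots, x_{l-1}]$ refers to the concatenation of the feature maps produced in layers $0, 1, 2, \ldots, l-1$.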

Figure 2 shows a 5-layer dense block architecture, and Table 1 shows the DenseNet 169 architecture for ImageNet [26].

Table 1: The DenseNet 169 model architecture for ImageNet [26]

| Layers | Output Size | DenseNet 169 |
|---|---|---|
| Convolution | 112 × 112 | 7 × 7 conv, stride 2 |
| Pooling | 56 × 56 | 3 × 3 max pool, stride 2 |
| Dense Block (1) | 56 × 56 | |
| Transition Layer (1) | 56 × 56 | 1 × 1 conv |
| | 28 × 28 | 2 × 2 average pool, stride 2 |
| Dense Block (2) | 28 × 28 | |
| Transition Layer (2) | 28 × 28 | 1 × 1 conv |
| | 14 × 14 | 2 × 2 average pool, stride 2 |
| Dense Block (3) | 14 × 14 | |
| Transition Layer (3) | 14 × 14 | 1 × 1 conv |
| | 7 × 7 | 2 × 2 average pool, stride 2 |
| Dense Block (4) | 7 × 7 | |
| Classification Layer | 1 × 1 | 7 × 7 global average pool |
| | 1000 | 1000D fully-connected, softmax |
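Because every layer in a dense block concatenates its new feature maps onto everything it received, the channel count grows linearly inside each block. The short sketch below traces that growth and arrives at the 1664 features quoted earlier for the DenseNet 169 model. The block sizes (6, 12, 32, 32), the growth rate of 32, the 64 initial channels, and the 0.5 compression in the transition layers are assumptions taken from the DenseNet paper [26]; they are not listed in Table 1 above, and the function name is hypothetical.

```python
def densenet_channels(block_layers, growth_rate=32, init_channels=64, compression=0.5):
    """Return the channel count at the output of each dense block."""
    channels = init_channels
    per_block = []
    for index, num_layers in enumerate(block_layers):
        # Each layer in the block appends `growth_rate` new feature maps.
        channels += num_layers * growth_rate
        per_block.append(channels)
        # A transition layer follows every block except the last one and
        # compresses the channel count with its 1 x 1 convolution.
        if index < len(block_layers) - 1:
            channels = int(channels * compression)
    return per_block


densenet169 = densenet_channels([6, 12, 32, 32])
print(densenet169)  # [256, 512, 1280, 1664]
```

The last value matches the size of the global average pooling output that feeds the added FC layer.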

The NASNet Model

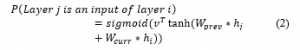

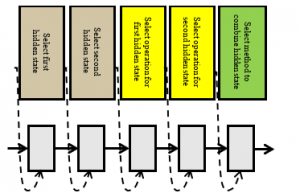

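In 2017, the NASNet (Neural Architecture Search Net) model was proposed at the ICLR 2017 conference [27]. The authors used a recurrent neural network (RNN) as a controller to generate the model descriptions of neural networks and trained this RNN with reinforcement learning to improve the accuracy of the generated architectures on a validation set. To indicate which previous layers are selected to be connected to layer N, the NASNet model adds an anchor point at that layer, which has N-1 content-based sigmoids computed using (2). Figure 3 shows one block of a NASNet convolutional cell [27].

$$P(\text{layer } j \text{ is an input to layer } i) = \mathrm{sigmoid}\left(v^{T} \tanh\left(W_{prev}\, h_j + W_{curr}\, h_i\right)\right) \qquad (2)$$

where $h_j$ refers to the hidden state of the controller at the anchor point for the $j$-th layer, $j = 0, 1, 2, \ldots, N-1$, $h_i$ is the hidden state at the anchor point of the current layer $i$, and $W_{prev}$, $W_{curr}$, and $v$ are trainable parameters.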

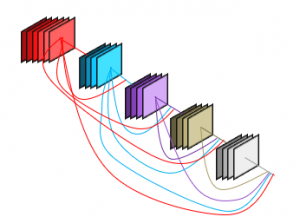

Figure 2: The 5-layer dense block architecture [26]

Figure 3: One block of a NASNet convolutional cell [27]

The VGG Model

In 2015, the VGG network was proposed at the ICLR 2015 conference [28]. The authors investigated the effect of the convolution network depth on its accuracy for large-scale images. By evaluating deeper network architectures that use (3×3) convolution filters, they showed that a significant improvement on the prior-art configurations can be achieved by pushing the depth to 16-19 weight layers. Table 2 shows the VGG 16 model architecture for ImageNet [28].

Table 2: The VGG 16 model architecture for ImageNet [28]

| Block | Layers | Output Size | VGG 16 |
|---|---|---|---|
| Input | | 224 × 224 × 3 | |
| Block 1 | Convolution | 224 × 224 × 64 | 3 × 3 conv 64, stride 1 |
| | Convolution | 224 × 224 × 64 | 3 × 3 conv 64, stride 1 |
| | Pooling | 112 × 112 × 64 | 2 × 2 max pool, stride 2 |
| Block 2 | Convolution | 112 × 112 × 128 | 3 × 3 conv 128, stride 1 |
| | Convolution | 112 × 112 × 128 | 3 × 3 conv 128, stride 1 |
| | Pooling | 56 × 56 × 128 | 2 × 2 max pool, stride 2 |
| Block 3 | Convolution | 56 × 56 × 256 | 3 × 3 conv 256, stride 1 |
| | Convolution | 56 × 56 × 256 | 3 × 3 conv 256, stride 1 |
| | Convolution | 56 × 56 × 256 | 3 × 3 conv 256, stride 1 |
| | Pooling | 28 × 28 × 256 | 2 × 2 max pool, stride 2 |
| Block 4 | Convolution | 28 × 28 × 512 | 3 × 3 conv 512, stride 1 |
| | Convolution | 28 × 28 × 512 | 3 × 3 conv 512, stride 1 |
| | Convolution | 28 × 28 × 512 | 3 × 3 conv 512, stride 1 |
| | Pooling | 14 × 14 × 512 | 2 × 2 max pool, stride 2 |
| Block 5 | Convolution | 14 × 14 × 512 | 3 × 3 conv 512, stride 1 |
| | Convolution | 14 × 14 × 512 | 3 × 3 conv 512, stride 1 |
| | Convolution | 14 × 14 × 512 | 3 × 3 conv 512, stride 1 |
| | Pooling | 7 × 7 × 512 | 2 × 2 max pool, stride 2 |
| FC | | 4096 | |
| FC | | 4096 | |
| Output | | 1000, softmax | |

The VGG network is a deep convolution network trained on the ImageNet dataset. The input image size of this network is (224×224×3). The network consists of five convolution blocks, each containing convolution layers followed by a pooling layer, and ends with two FC layers (each with 4096 neurons) followed by the output layer with softmax activation. Unlike the ResNet, the NASNet, or the DenseNet models, the VGG network does not contain any skip connections or bypasses between layers, yet it gives high classification accuracy on large-scale images [28].
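The output sizes in Table 2 follow from two simple rules, which the sketch below applies block by block: the 3 × 3 convolutions keep the height and width, and each 2 × 2 max pooling with stride 2 halves them. It assumes "same" padding for the convolutions, which the unchanged sizes in Table 2 imply but the text does not state; the names `VGG16_BLOCKS` and `vgg16_feature_shape` are hypothetical.

```python
# (number of 3x3 conv layers, output channels) for the five blocks in Table 2
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def vgg16_feature_shape(height=224, width=224):
    """Return the (height, width, channels) shape after the block 5 pooling."""
    channels = 3
    for _num_convs, out_channels in VGG16_BLOCKS:
        # Stride 1 convolutions with "same" padding keep height and width;
        # only the channel count changes.
        channels = out_channels
        # 2x2 max pooling with stride 2 halves height and width.
        height //= 2
        width //= 2
    return height, width, channels


shape = vgg16_feature_shape()
flattened = shape[0] * shape[1] * shape[2]
weight_layers = sum(num_convs for num_convs, _ in VGG16_BLOCKS) + 3  # 13 conv + 3 FC

print(shape)          # (7, 7, 512)
print(flattened)      # 25088
print(weight_layers)  # 16
```

The flattened size of 25088 is the number of features passed to the FC layer when the network is cut at the block 5 pooling output.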

The ResNet Model

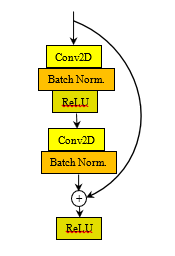

In 2016, the ResNet was proposed at the CVPR 2016 conference [29]. The authors addressed the degradation problem by introducing a deep residual learning framework. Instead of hoping that each few stacked layers directly fit a desired underlying mapping, the framework lets these layers fit a residual mapping. The ResNet is based on skip connections between deep layers. These skip connections can skip one or more layers. The outputs of these connections are added to the outputs of the stacked layers of the network as in (3) [29].

$$H(x) = F(x) + x \qquad (3)$$

where $H(x)$ is the final block output, $x$ is the output of the connected layer, and $F(x)$ is the output of the stacked layers in the same block.
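The addition performed by a skip connection can be illustrated with a few lines of plain Python. This is only a toy sketch on flat lists of numbers: `toy_layers` is a hypothetical stand-in for the stacked convolution layers F, not the layers used in the ResNet 50 model.

```python
def residual_block(x, stacked_layers):
    """Return H(x) = F(x) + x element-wise, where F is `stacked_layers`."""
    fx = stacked_layers(x)
    if len(fx) != len(x):
        raise ValueError("F(x) and x must have the same shape to be added")
    return [f + xi for f, xi in zip(fx, x)]


def toy_layers(x):
    # Hypothetical stand-in for the stacked layers: scale by 0.5, then ReLU.
    return [max(0.0, 0.5 * xi) for xi in x]


print(residual_block([1.0, -2.0, 4.0], toy_layers))  # [1.5, -2.0, 6.0]
```

When the stacked layers output zeros, the block reduces to the identity mapping, which is why adding more such blocks does not have to degrade the result.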

Figure 4 shows one ResNet building block. Table 3 shows the ResNet 50 network architecture for ImageNet [29].

Table 3: The ResNet 50 model architecture for ImageNet [29]

| Layers | Output Size | ResNet 50 |
|---|---|---|
| Conv 1 | 112 × 112 | 7 × 7 conv 64, stride 2 |
| Conv 2_x | 56 × 56 | 3 × 3 max pool, stride 2 |
| Conv 3_x | 28 × 28 | |
| Conv 4_x | 14 × 14 | |
| Conv 5_x | 7 × 7 | |
| Classification Layer | 1 × 1 | 7 × 7 global average pool |
| | 1000 | 1000D fully-connected, softmax |

Figure 4: The ResNet building block [29]

The Performance Assessment

There are many metrics for gauging the performance of learning models. One of these is the OA, which is the main classification accuracy assessment [30]. It measures the percentage ratio between the correctly classified test data objects and all the test data objects in the used dataset. The OA is calculated using (4) [30, 31].

$$\mathrm{OA} = \frac{\text{Number of Correct Estimations}}{\text{Total Number of Test Data Objects}} \times 100 \qquad (4)$$
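As a small illustration of (4), the sketch below computes the OA from a list of true labels and a list of predicted labels. The function name and the five example labels are hypothetical and are not results from this study.

```python
def overall_accuracy(true_labels, predicted_labels):
    """Return the OA in percent: correct estimations / all test objects x 100."""
    if not true_labels or len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return 100.0 * correct / len(true_labels)


# Toy example: 4 of the 5 test objects are classified correctly.
y_true = ["forest", "river", "harbor", "forest", "river"]
y_pred = ["forest", "river", "forest", "forest", "river"]
print(overall_accuracy(y_true, y_pred))  # 80.0
```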

The learning curves for the loss and the accuracy are very important indicators of how well a learning model trains with the chosen hyper-parameters [32]. These curves help determine whether the learning model suffers from a problem such as over-fitting or under-fitting. They plot the loss and the accuracy values of the learning model at each epoch of the training process for both the training and the validation data [32, 33].

Experimental Results and Setup

This comparative study is based on calculating the OA and plotting the learning curves to determine how well the chosen hyper-parameters achieve good learning model performance. The UC Merced Land use dataset and the SIRI-WHU dataset are used in this study. This section introduces these datasets, followed by the details of the experimental setup and the results.

Figure 5: Image examples from the 21 classes in the UC Merced land use dataset [34]

The UC Merced Land Use Dataset

The UC Merced Land use dataset is a collection of remote sensing images prepared in 2010 by the University of California, Merced [34]. It consists of 2100 remote sensing images divided into 21 classes with 100 images for each class. These images were manually extracted from large images in the USGS National Map Urban Area Imagery collection for various urban areas around the USA. Each image in this dataset is a GeoTIFF RGB image of 256×256 pixels with a spatial resolution of 1 square foot (0.0929 square meters) [34]. Figure 5 shows image examples from the 21 classes in the UC Merced land use dataset [34].

The SIRI-WHU Dataset

The SIRI-WHU dataset is a collection of remote sensing images that the authors of [35] used in their classification research in 2016. This dataset consists of two versions that complement each other. In total, it contains 2400 remote sensing images divided into 12 classes with 200 images for each class. These images were extracted from Google Earth (Google Inc.) and mainly cover urban areas in China. Each image in this dataset is a GeoTIFF RGB image of 200×200 pixels with a spatial resolution of 2 square meters (21.528 square feet) [35]. Figure 6 shows image examples from the 12 classes in the SIRI-WHU dataset [35].

Figure 6: Image examples from the 12 classes in the SIRI-WHU dataset [35].

The Experimental Setup

The aim of this paper is to present a comparative study of using the classic networks for classifying remote sensing images. The comparison is based on plotting the learning curves of the loss and the accuracy values, calculated at every epoch of the training process for the training and the validation data, to determine the efficiency of the hyper-parameter values. The comparison is also based on the OA to assess the performance of each model. The datasets used in this study are the UC Merced land use dataset and the SIRI-WHU dataset, whose details are stated in sections 3.1 and 3.2. All tests were performed using Google Colab, a free cloud service hosted by Google Inc. to encourage machine learning and artificial intelligence research [36]. It acts as a virtual machine (VM) with a 2-core Xeon CPU at 2.3 GHz, a Tesla K80 GPU with 12 GB of GPU memory, 13 GB of RAM, and a 33 GB HDD, running Python 3.3.9. The maximum lifetime of this VM is 12 hours, and it becomes idle after a 90-minute timeout [37]. The tests were run by connecting to this VM online through an ADSL internet line with a speed of 4 Mbps, from a machine with an Intel® Core™ i5 CPU M450 @ 2.4 GHz and 6 GB of RAM running the Windows 7 64-bit operating system. This work is limited to using the ImageNet pre-trained weights because training new CNN models needs a huge amount of data and more sophisticated hardware, in addition to the long time that such a training process consumes. Another limitation is that the input image shape must be no smaller than 200×200×3 and no larger than 300×300×3 because of the limitations of the pre-trained classic networks. The preprocessing step required by each network is necessary to get efficient results; it must match the preprocessing applied to the ImageNet dataset before the models were trained to produce the ImageNet pre-trained weights.
The classic networks with ImageNet pre-trained weights used in this paper have an input shape of (224, 224, 3) and an output layer of 1000 nodes, matching the 1000 ImageNet classes [38, 39]. So, the learning algorithms that use these networks must be modified to be compatible with the remote sensing image datasets, as stated in section 2.1. The data in each dataset were divided into a 60% training set, a 20% validation set, and a 20% test set before training the last FC layers of each network model. The model is first trained, using the training set and the validation set, for a chosen number of epochs to determine the number of epochs that achieves the minimum validation loss. Then, the training set and the validation set are merged into a new training set, and the model is retrained on it for the predetermined number of epochs that achieved the minimum validation loss. Finally, the model is tested using the test set. It must be noted that the learning parameter values, such as the learning rate and the batch size, were chosen by intuition, taking into consideration the values used when training these models on the ImageNet dataset to produce the ImageNet pre-trained weights [26-29], whereas the optimizer, the number of epochs, the additional activation and regularization layers, and the dropout regularization rates were determined by iteration and intuition. The classifier models are built using Python 3.3.9, with the TensorFlow library for the preprocessing step and the Keras library for extracting features, training the last FC layers, and testing the models.
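The data handling in this procedure can be sketched in a few lines: split the image indices 60/20/20, pick the epoch with the minimum validation loss, and merge the training and validation indices for the final retraining. The paper does not state how the split was drawn, so the seeded random shuffle is an assumption, and the function names and the validation loss values are hypothetical.

```python
import random


def split_indices(num_images, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle image indices and split them into train, validation, and test."""
    indices = list(range(num_images))
    random.Random(seed).shuffle(indices)  # assumed: a simple random split
    n_train = round(num_images * train_frac)
    n_val = round(num_images * val_frac)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


def best_epoch(val_losses):
    """Return the 1-based epoch that achieved the minimum validation loss."""
    if not val_losses:
        raise ValueError("val_losses must not be empty")
    return min(range(len(val_losses)), key=val_losses.__getitem__) + 1


train, val, test = split_indices(2100)  # UC Merced land use dataset size
print(len(train), len(val), len(test))  # 1260 420 420

val_loss_per_epoch = [0.92, 0.61, 0.48, 0.45, 0.47, 0.52]  # hypothetical values
print(best_epoch(val_loss_per_epoch))  # 4

# The final model is retrained on train + validation (80% of the dataset)
# for the selected number of epochs, then evaluated on the test indices.
retrain = train + val
print(len(retrain))  # 1680
```

For the SIRI-WHU dataset, `split_indices(2400)` gives 1440, 480, and 480 images under the same assumptions.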

In the DenseNet 169 model, the dataset images were resized to the shape (224, 224, 3) to be compatible with the input shape of the pre-trained DenseNet 169 model. Transfer learning is performed up to the 7×7 global average pooling layer that precedes the 1000D FC layer, and then an FC layer with softmax activation, whose number of neurons equals the number of dataset classes, is added. The weights of the last layer were trained with learning rate = 0.001, the Adam optimizer, and batch size = 256 for 100 epochs. The normalization preprocessing must be applied to the dataset images before they are used with the DenseNet 169 model. Figure 7 shows the flow chart of the experimental algorithm that used the DenseNet 169 model.

In the NASNet Mobile model, the dataset images were resized to the shape (224, 224, 3) to be compatible with the input shape of the pre-trained NASNet Mobile model. Transfer learning is performed up to the 7×7 global average pooling layer that precedes the 1000D FC layer, followed by a ReLU activation layer, a dropout regularization layer with rate 0.5, and then an FC layer with softmax activation, whose number of neurons equals the number of dataset classes. The weights of the last layer were trained with learning rate = 0.001, the Adam optimizer, and batch size = 64 for 200 epochs. The normalization preprocessing must be applied to the dataset images before they are used with the NASNet Mobile model. Figure 8 shows the flow chart of the experimental algorithm that used the NASNet Mobile model.

Figure 7: The flow chart of the experimental algorithm that used the DenseNet 169 model

Figure 8: The flow chart of the experimental algorithm that used the NASNet Mobile model

In the VGG 16 model, the dataset images were resized to the shape (224, 224, 3) to be compatible with the input shape of the pre-trained VGG 16 model. Transfer learning is performed up to the 2×2 max pooling layer in block 5, and the pooling layer output is flattened, followed by a ReLU activation layer, a dropout regularization layer with rate 0.77, and then an FC layer with softmax activation, whose number of neurons equals the number of dataset classes. The weights of the last layer were trained with learning rate = 0.001, the Adam optimizer, and batch size = 64 for 100 epochs. The conversion of the images to BGR mode must be applied to the dataset images as preprocessing before they are used with the VGG 16 model. Figure 9 shows the flow chart of the experimental algorithm that used the VGG 16 model.

Figure 9: The flow chart of the experimental algorithm that used the VGG 16 model

In the ResNet 50 model, the dataset images were resized to the shape (224, 224, 3) to be compatible with the input shape of the pre-trained ResNet 50 model. Transfer learning is performed up to the 7×7 global average pooling layer that precedes the 1000D FC layer, and then an FC layer with softmax activation, whose number of neurons equals the number of dataset classes, is added. The weights of the last layer were trained with learning rate = 0.1, the Adam optimizer, and batch size = 64 for 200 epochs. The conversion of the images to BGR mode must be applied to the dataset images as preprocessing before they are used with the ResNet 50 model. Figure 10 shows the flow chart of the experimental algorithm that used the ResNet 50 model.

Figure 10: The flow chart of the experimental algorithm that used the ResNet 50 model
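The two preprocessing styles named in the setup, normalization and conversion to BGR mode, can be illustrated on a single RGB pixel. This is a sketch under assumptions, not the exact pipeline of this study: scaling to [-1, 1] is one common form of normalization, and the per-channel means subtracted after the BGR reordering are the commonly used ImageNet values. In practice, each network should receive the preprocessing its pre-trained weights were produced with. Both function names are hypothetical.

```python
# Assumed ImageNet per-channel means in (B, G, R) order.
IMAGENET_BGR_MEAN = (103.939, 116.779, 123.68)


def scale_to_unit_range(rgb_pixel):
    """Normalization sketch: map channel values from 0..255 to -1..1."""
    return tuple(value / 127.5 - 1.0 for value in rgb_pixel)


def to_bgr_zero_centered(rgb_pixel):
    """Reorder an (R, G, B) pixel to (B, G, R) and subtract the assumed means."""
    red, green, blue = rgb_pixel
    bgr = (blue, green, red)
    return tuple(value - mean for value, mean in zip(bgr, IMAGENET_BGR_MEAN))


print(scale_to_unit_range((0, 127.5, 255)))  # (-1.0, 0.0, 1.0)

bgr_pixel = to_bgr_zero_centered((255, 128, 0))
# The first channel is now blue: 0 minus the blue mean.
print(bgr_pixel[0] < 0)  # True
```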

The Experimental Results

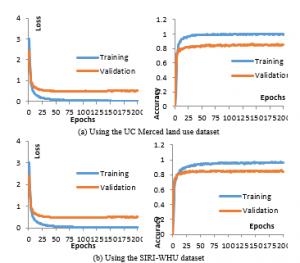

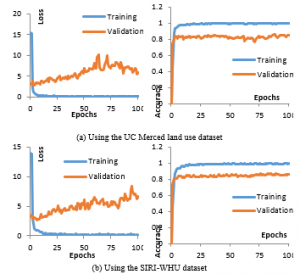

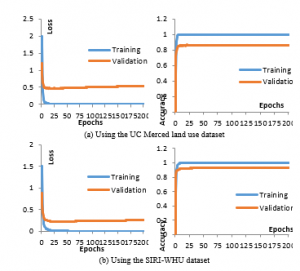

For classifying remote sensing images with the convolution models stated in this study, the training process used the training data (60% of the dataset) and the validation data (20% of the dataset). Figure 11 shows the loss and the accuracy learning curves, respectively, for training the DenseNet 169 model, (a) using the UC Merced land use dataset and (b) using the SIRI-WHU dataset. Figure 12 shows the loss and the accuracy learning curves, respectively, for training the NASNet Mobile model, (a) using the UC Merced land use dataset and (b) using the SIRI-WHU dataset. Figure 13 shows the loss and the accuracy learning curves, respectively, for training the VGG 16 model, (a) using the UC Merced land use dataset and (b) using the SIRI-WHU dataset. Figure 14 shows the loss and the accuracy learning curves, respectively, for training the ResNet 50 model, (a) using the UC Merced land use dataset and (b) using the SIRI-WHU dataset.

Figure 11: The loss and the accuracy learning curves for training the DenseNet 169 model.

Figure 12: The loss and the accuracy learning curves for training the NASNet Mobile model.

Figure 13: The loss and the accuracy learning curves for training the VGG 16 model.

Figure 14: The loss and the accuracy learning curves for training the ResNet 50 model.

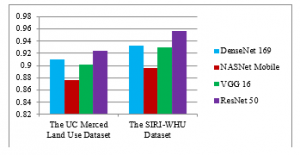

From the learning curves, the number of epochs that achieved the minimum validation loss can be determined for each of the four models discussed in this study. So, we treat the training data and the validation data together, 80% of the dataset, as the new training data. Then, we repeat the same training process for each model with the same hyper-parameter values on both datasets, and calculate the OA using the predictions for the test data, 20% of the dataset, to assess the performance of each model. Table 4 and Figure 15 show the OA for each model using both datasets.

Table 4: The OA for each model using both datasets

| Model | The UC Merced land use Dataset | The SIRI-WHU Dataset |
|---|---|---|
| The DenseNet 169 model | 0.91 | 0.933 |
| The NASNet Mobile model | 0.876 | 0.896 |
| The VGG 16 model | 0.902 | 0.929 |
| The ResNet 50 model | 0.924 | 0.956 |

As these results show, the ResNet 50 model had the highest OA in this comparative study, whereas the NASNet Mobile model had the lowest OA. The DenseNet 169 model had a slightly higher OA than the VGG 16 model. All models achieved a higher OA on the SIRI-WHU dataset than on the UC Merced land use dataset. These results suggest that the OA has an inverse relation with the dataset image resolution and the number of classes in the dataset: the SIRI-WHU dataset, which has 12 classes, an image resolution of 200×200 pixels, and a spatial resolution of 2 square meters, gave a higher OA than the UC Merced land use dataset, which has 21 classes, an image resolution of 256×256 pixels, and a spatial resolution of 0.0929 square meters. Deeper convolution networks give considerable accuracy, but the connections between layers may have an additional influence. The VGG 16 model gave a good OA, so its results were efficient, but its validation curves showed some degradation and its training curves were close to over-fitting. So it may give better results with additional research that adjusts the optimization and regularization hyper-parameters. On the other hand, the ResNet 50 model, which gave the highest OA in this study, had good learning curves with slight over-fitting. So, even with more research and adjustment of the regularization hyper-parameters, it is not easy to achieve better results using this model without major development. The ResNets are based on skip connections between layers, so layer connections can raise the classification accuracy. The DenseNets have more connections, yet the ResNet 50 model still had the highest OA in this comparative study. The NASNet models have layer connections, but fewer than the DenseNet models, and these connections serve only to select the previous layers, so their results are low compared with the other models in this study.
On the other hand, the learning curves of the NASNet Mobile model are good, with no degradation and no over-fitting, but the validation loss may rise as the number of epochs increases, so the epochs and the validation curves must be observed carefully while training this model. Overall, deeper convolution networks may give better accuracies, but deep networks that have connections between layers may give the best accuracies.
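The ordering discussed above can be checked directly from the values reported in Table 4. The sketch below ranks the models on each dataset and computes, for each model, how much higher the OA is on the SIRI-WHU dataset; the variable names are hypothetical.

```python
# OA values from Table 4: (UC Merced land use dataset, SIRI-WHU dataset)
OA_TABLE = {
    "DenseNet 169": (0.91, 0.933),
    "NASNet Mobile": (0.876, 0.896),
    "VGG 16": (0.902, 0.929),
    "ResNet 50": (0.924, 0.956),
}

ranking_ucm = sorted(OA_TABLE, key=lambda model: OA_TABLE[model][0], reverse=True)
ranking_siri = sorted(OA_TABLE, key=lambda model: OA_TABLE[model][1], reverse=True)
print(ranking_ucm)  # ['ResNet 50', 'DenseNet 169', 'VGG 16', 'NASNet Mobile']
print(ranking_ucm == ranking_siri)  # True

# Difference between the SIRI-WHU OA and the UC Merced OA for each model.
gains = {model: round(siri - ucm, 3) for model, (ucm, siri) in OA_TABLE.items()}
print(all(gain > 0 for gain in gains.values()))  # True
```

Both datasets give the same ranking, and every model scores higher on the SIRI-WHU dataset, which is consistent with the discussion.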

Figure 15: The OA for each model using both datasets

Conclusions

This paper presented a comparative study of the use of the classic deep convolution network models in classifying remote sensing images. The comparison showed which classic network was more accurate for classifying VHR remote sensing images. The classic networks used in this study were the DenseNet 169, the NASNet Mobile, the VGG 16, and the ResNet 50 models. Two datasets were used in this study: the UC Merced land use dataset and the SIRI-WHU dataset. The comparison was based on the learning curves, to check the effectiveness of the selected hyper-parameter values, and on the overall accuracy, to assess the classification model performance. The comparison showed that the ResNet 50 model was more accurate than the other models in this study, while the overall accuracy of the DenseNet 169 model was slightly higher than that of the VGG 16 model. The NASNet Mobile model had the lowest OA in this study. The learning curves indicated that adjusting the hyper-parameters of the VGG 16 model can lead to better overall accuracy, whereas it is not easy to achieve better overall accuracy with the ResNet 50, the DenseNet 169, and the NASNet Mobile models without major developments in these models. The overall accuracy had an inverse relation with the remote sensing image resolution (pixel or spatial) and the number of dataset classes.

In future work, the FC layers can be replaced by other classifiers before these models are trained.

Conflict of Interest

The authors declare no conflict of interest.

- M. Bowling et al., “Machine Learning and Games”, Machine Learning, Springer, 63(3), 211-215, 2006. http://doi.org/10.1007/s10994-006-8919-x

- I. ElNaqa and M.J. Murphy, “What is Machine Learning”, Machine Learning in Radiation Oncology, Cham, Springer, 3-11, 2015. https://doi.org/10.1007/978-3-319-18305-3_1

- T.M. Mitchell, “The Discipline of Machine Learning”, Pittsburgh, PA: Carnegie Mellon University, School of Computer Science, Machine Learning Department, 9, 2006.

- K.H. Kim and S.J. Kim, “Neural Spike Sorting Under Nearly 0-dB Signal-to-Noise Ratio Using Nonlinear Energy Operator and Artificial Neural-Network Classifier”, IEEE Transactions on Biomedical Engineering, 47(10), 1406-1411, 2000. http://doi.org/10.1109/10.871415

- T. Blaschke, “Object Based Image Analysis for Remote Sensing”, ISPRS Journal of Photogrammetry and Remote Sensing, Elsevier, 65(1), 2-16, 2010. https://doi.org/10.1016/j.isprsjprs.2009.06.004

- S.M. Nair and J.S. Bindhu, “Supervised Techniques and Approaches for Satellite Image Classification”, International Journal of Computer Applications (IJCA), 134(16), 1-6, 2016. https://doi.org/10.5120/ijca2016908202

- W. Rawat, and Z. Wang, “Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review”, Neural computation, 29(9), 2352-2449, 2017. http://doi.org/10.1162/NECO_a_00990

- K.A. Al-Afandy et al., “Artificial Neural Networks Optimization and Convolution Neural Networks to Classifying Images in Remote Sensing: A Review”, in The 4th International Conference on Big Data and Internet of Things (BDIoT’19), 23-24 Oct, Rabat, Morocco, 2019. http://doi.org/10.1145/3372938.3372945

- Y. Tao, M. Xu, Z. Lu, and Y. Zhong, “DenseNet-Based Depth-Width Double Reinforced Deep Learning Neural Network for High-Resolution Remote Sensing Images Per-Pixel Classification”, Remote Sensing, 10(5), 779-805, 2018. http://doi.org/10.3390/rs10050779

- G. Yang, U. B. Gewali, E. Ientilucci, M. Gartley, and S.T. Monteiro, “Dual-Channel DenseNet for Hyperspectral Image Classification”, in IGARSS 2018 – 2018 IEEE International Geoscience and Remote Sensing Symposium, 05 Nov, Valencia, Spain, 2595-2598, 2018. http://doi.org/10.1109/IGARSS.2018.8517520

- J. Zhang et al., “A Full Convolutional Network based on DenseNet for Remote Sensing Scene Classification”, Mathematical Biosciences and Engineering, 6(5), 3345-3367, 2019. http://doi.org/10.3934/mbe.2019167

- L. Li, T. Tian, and H. Li, “Classification of Remote Sensing Scenes Based on Neural Architecture Search Network”, in 2019 IEEE 4th International Conference on Signal and Image Processing (ICSIP), 19-21 Jul, Wuxi, China, 176-180, 2019. http://doi.org/10.1109/SIPROCESS.2019.8868439

- A. Bahri et al., “Remote Sensing Image Classification via Improved Cross-Entropy Loss and Transfer Learning Strategy Based on Deep Convolutional Neural Networks”, IEEE Geoscience and Remote Sensing Letters, 17(6), 1087-1091, 2019. http://doi.org/10.1109/LGRS.2019.2937872

- X. Liu et al., “Classifying High Resolution Remote Sensing Images by Fine-Tuned VGG Deep Networks”, in IGARSS 2018 – 2018 IEEE International Geoscience and Remote Sensing Symposium, 22-27 Jul, Valencia, Spain, 7137-7140, 2018. http://doi.org/10.1109/IGARSS.2018.8518078

- S. Chaib et al., “Deep Feature Fusion for VHR Remote Sensing Scene Classification”, IEEE Transactions on Geoscience and Remote Sensing, 55(8), 4775-4784, 2017. http://doi.org/10.1109/TGRS.2017.2700322

- Z. Chen, T. Zhang, and C. Ouyang, “End-to-End Airplane Detection Using Transfer Learning in Remote Sensing Images”, Remote Sensing, 10(1), 139-153, 2018. http://doi.org/10.3390/rs10010139

- G. Fu, C. Liu, R. Zhou, T. Sun, and Q. Zhang, “Classification for High Resolution Remote Sensing Imagery Using a Fully Convolutional Network”, Remote Sensing, 9(5), 498-518, 2017. http://doi.org/10.3390/rs9050498

- M. Wang et al., “Scene Classification of High-Resolution Remotely Sensed Image Based on ResNet”, Journal of Geovisualization and Spatial Analysis, Springer, 3(2), 16-25, 2019. http://doi.org/10.1007/s41651-019-0039-9

- Y. Jiang et al., “Hyperspectral image classification based on 3-D separable ResNet and transfer learning”, IEEE Geoscience and Remote Sensing Letters, 16(12), 1949-1953, 2019. http://doi.org/10.1109/LGRS.2019.2913011

- S. Natesan, C. Armenakis, and U. Vepakomma, “ResNet-Based Tree Species Classification Using UAV Images”, International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, XLII, 2019. http://doi.org/10.5194/isprs-archives-XLII-2-W13-475-2019

- J. Yang et al., “Aircraft Detection in Remote Sensing Images Based on a Deep Residual Network and Super-Vector Coding”, Remote Sensing Letters, Taylor & Francis, 9(3), 229-237, 2018. http://doi.org/10.1080/2150704X.2017.1415474

- L. Xu and Q. Chen, “Remote-Sensing Image Usability Assessment Based on ResNet by Combining Edge and Texture Maps”, IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 12(6), 1825-1834, 2019. http://doi.org/10.1109/JSTARS.2019.2914715

- A.F. Gad, “Practical Computer Vision Applications Using Deep Learning with CNNs”, Apress, Springer, Berkeley, CA, 2018. https://doi.org/10.1007/978-1-4842-4167-7

- Y. Chen et al., “Deep Feature Extraction and Classification of Hyperspectral Images Based on Convolutional Neural Networks”, IEEE Transactions on Geoscience and Remote Sensing, 54(10), 6232-6251, 2016. http://doi.org/10.1109/TGRS.2016.2584107

- E. Maggiori et al., “Convolutional Neural Networks for Large-Scale Remote-Sensing Image Classification”, IEEE Transactions on Geoscience and Remote Sensing, 55(2), 645-657, 2017. http://doi.org/10.1109/TGRS.2016.2612821

- G. Huang et al., “Densely Connected Convolutional Networks”, in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 21-26 Jul, Honolulu, HI, USA, 2261-2269, 2017. http://doi.org/10.1109/CVPR.2017.243

- B. Zoph and Q.V. Le, “Neural architecture search with reinforcement learning”, in International Conference on Learning Representations (ICLR 2017), 24-26 April, Toulon, France, 2017. https://arxiv.org/abs/1611.01578

- K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition”, in International Conference on Learning Representations (ICLR 2015), 7-9 May, San Diego, USA, 2015. https://arxiv.org/abs/1409.1556

- K. He, X. Zhang, S. Ren, and J. Sun, “Deep Residual Learning for Image Recognition”, in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 27-30 Jun, Las Vegas, NV, USA, 770-778, 2016. http://doi.org/10.1109/CVPR.2016.90

- G. Banko, “A Review of Assessing the Accuracy of Classifications of Remotely Sensed Data and of Methods Including Remote Sensing Data in Forest Inventory”, in International Institution for Applied Systems Analysis (IIASA), Laxenburg, Austria, IR-98-081, 1998. http://pure.iiasa.ac.at/id/eprint/5570/

- W. Li et al., “Deep Learning Based Oil Palm Tree Detection and Counting for High-Resolution Remote Sensing Images”, Remote Sensing, 9(1), 22-34, 2017. http://doi.org/10.3390/rs9010022

- J.N. Rijn et al., “Fast Algorithm Selection Using Learning Curves”, in International symposium on intelligent data analysis, 9385, Springer, Cham, 22-24 Oct, Saint Etienne, France, 298-309, 2015. http://doi.org/10.1007/978-3-319-24465-5_26

- M. Wistuba and T. Pedapati, “Learning to Rank Learning Curves”, arXiv:2006.03361v1, 2020. https://arxiv.org/abs/2006.03361

- Y. Yang and S. Newsam, “Bag-Of-Visual-Words and Spatial Extensions for Land-Use Classification”, in the 18th ACM SIGSPATIAL international conference on advances in geographic information systems, 2-5 Nov, San Jose California, USA, 270-279, 2010. http://doi.org/10.1145/1869790.1869829

- B. Zhao et al., “Dirichlet-Derived Multiple Topic Scene Classification Model for High Spatial Resolution Remote Sensing Imagery”, IEEE Transactions on Geoscience and Remote Sensing, 54(4), 2108-2123, 2016. http://doi.org/10.1109/TGRS.2015.2496185

- E. Bisong, “Building Machine Learning and Deep Learning Models on Google Cloud Platform: A Comprehensive Guide for Beginners”, Apress, Berkeley, CA, 2019.

- T. Carneiro et al., “Performance Analysis of Google Colaboratory as a Tool for Accelerating Deep Learning Applications”, IEEE Access, 6, 61677-61685, 2018. http://doi.org/10.1109/ACCESS.2018.2874767

- O. Russakovsky and L. Fei-Fei, “Attribute Learning in Large-Scale Datasets”, in European Conference on Computer Vision, 6553, Springer, Berlin, Heidelberg, 10-11 Sep, Heraklion Crete, Greece, 1-14, 2010. http://doi.org/10.1007/978-3-642-35749-7_1

- J. Deng et al., “What Does Classifying More Than 10,000 Image Categories Tell Us?”, in The 11th European conference on computer vision, 6315, Springer, Berlin, Heidelberg, 5-11 Sep, Heraklion Crete, Greece, 71-84, 2010. http://doi.org/10.1007/978-3-642-15555-0_6


- Gede Putra Kusuma, Jonathan, Andreas Pangestu Lim, "Emotion Recognition on FER-2013 Face Images Using Fine-Tuned VGG-16", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 315–322, 2020. doi: 10.25046/aj050638

- Lubna Abdelkareim Gabralla, "Dense Deep Neural Network Architecture for Keystroke Dynamics Authentication in Mobile Phone", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 307–314, 2020. doi: 10.25046/aj050637

- Puttakul Sakul-Ung, Amornvit Vatcharaphrueksadee, Pitiporn Ruchanawet, Kanin Kearpimy, Hathairat Ketmaneechairat, Maleerat Maliyaem, "Overmind: A Collaborative Decentralized Machine Learning Framework", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 280–289, 2020. doi: 10.25046/aj050634

- Helen Kottarathil Joy, Manjunath Ramachandra Kounte, "A Comprehensive Review of Traditional Video Processing", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 274–279, 2020. doi: 10.25046/aj050633

- Andrea Generosi, Silvia Ceccacci, Samuele Faggiano, Luca Giraldi, Maura Mengoni, "A Toolkit for the Automatic Analysis of Human Behavior in HCI Applications in the Wild", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 185–192, 2020. doi: 10.25046/aj050622

- Fei Gao, Jiangjiang Liu, "Effective Segmented Face Recognition (SFR) for IoT", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 36–44, 2020. doi: 10.25046/aj050605

- Pamela Zontone, Antonio Affanni, Riccardo Bernardini, Leonida Del Linz, Alessandro Piras, Roberto Rinaldo, "Supervised Learning Techniques for Stress Detection in Car Drivers", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 22–29, 2020. doi: 10.25046/aj050603

- Kodai Kitagawa, Koji Matsumoto, Kensuke Iwanaga, Siti Anom Ahmad, Takayuki Nagasaki, Sota Nakano, Mitsumasa Hida, Shogo Okamatsu, Chikamune Wada, "Posture Recognition Method for Caregivers during Postural Change of a Patient on a Bed using Wearable Sensors", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 1093–1098, 2020. doi: 10.25046/aj0505133

- Daniyar Nurseitov, Kairat Bostanbekov, Maksat Kanatov, Anel Alimova, Abdelrahman Abdallah, Galymzhan Abdimanap, "Classification of Handwritten Names of Cities and Handwritten Text Recognition using Various Deep Learning Models", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 934–943, 2020. doi: 10.25046/aj0505114

- Khalid A. AlAfandy, Hicham, Mohamed Lazaar, Mohammed Al Achhab, "Investment of Classic Deep CNNs and SVM for Classifying Remote Sensing Images", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 652–659, 2020. doi: 10.25046/aj050580

- Chigozie Enyinna Nwankpa, "Advances in Optimisation Algorithms and Techniques for Deep Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 563–577, 2020. doi: 10.25046/aj050570

- Rajesh Kumar, Geetha S, "Malware Classification Using XGboost-Gradient Boosted Decision Tree", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 536–549, 2020. doi: 10.25046/aj050566

- Alami Hamza, Noureddine En-Nahnahi, Said El Alaoui Ouatik, "Contextual Word Representation and Deep Neural Networks-based Method for Arabic Question Classification", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 478–484, 2020. doi: 10.25046/aj050559