Posture Recognition Method for Caregivers during Postural Change of a Patient on a Bed using Wearable Sensors

Volume 5, Issue 5, Page No 1093-1098, 2020

Author’s Name: Kodai Kitagawa1,a), Koji Matsumoto1, Kensuke Iwanaga1, Siti Anom Ahmad2, Takayuki Nagasaki3, Sota Nakano4, Mitsumasa Hida1,5, Shogo Okamatsu1,6, Chikamune Wada1


1Graduate School of Life Science and System Engineering, Kyushu Institute of Technology, Kitakyushu, 8080196, Japan

2Malaysian Research Institute of Ageing (MyAgeing™), Universiti Putra Malaysia, Serdang, Selangor, 43400, Malaysia

3Department of Rehabilitation, Tohoku Bunka Gakuen University, Sendai, 9818550, Japan

4Department of Rehabilitation, Kyushu University of Nursing and Social Welfare, Tamana, 8650062, Japan

5Department of Physical Therapy, Osaka Kawasaki Rehabilitation University, Kaizuka, 5970104, Japan

6Department of Physical Therapy, Kitakyushu Rehabilitation College, Kanda, 8000343, Japan

a)Author to whom correspondence should be addressed. E-mail: kitagawakitagawa156@gmail.com

Adv. Sci. Technol. Eng. Syst. J. 5(5), 1093-1098 (2020); DOI: 10.25046/aj0505133


Keywords: Posture recognition, Caregiver, Patient handling, Lower back pain, Wearable sensor, Machine learning


Caregivers experience lower back pain due to their awkward postures while handling patients. Therefore, a monitoring system to supervise caregivers’ postures using wearable sensors is being developed. This study proposed a postural recognition method for caregivers during postural change while handling a patient on a bed. The proposed method recognizes foot positions and arm movements by a machine learning algorithm using inertial data on the trunk and foot pressure data obtained from wearable sensors. An experiment was conducted to evaluate whether the proposed method could recognize three foot positions and three arm movements. Participants provided postural change for a simulated patient on a bed (patient: supine to lateral recumbent) under nine conditions, including different combinations of the three foot positions and three arm movements; the experiment was repeated ten times for each condition. Experimental results showed that the proposed method using an artificial neural network with all features obtained from an inertial measurement unit and insole pressure sensors could recognize arm movements and foot positions with an accuracy of approximately 0.75 and 0.97, respectively. These results suggest that the proposed method can be used in a monitoring system tracking a caregiver’s posture.

Received: 09 September 2020, Accepted: 10 October 2020, Published Online: 20 October 2020

1. Introduction

Recently, many caregivers have experienced lower back pain owing to frequent patient handling [1–2]. Previous studies have suggested that caregivers should maintain an appropriate posture based on body mechanics during patient handling [3–4]. Accordingly, previous studies have developed monitoring systems to supervise the postures of caregivers to prevent lower back pain [5–8].

Lin et al. developed a robot patient comprising an inertial measurement unit (IMU), angular position sensors, and servo motor encoders and found that the robot could classify correct and incorrect transfer methods [5]. Huang et al. developed an automatic evaluation method for patient transfer skills using two Kinect RGB-D sensors [6, 7]. Their study showed that this method could improve the patient transfer skills of students [7]. Itami et al. developed a monitoring system using electromyography, a goniometer, and an inclination sensor [8]. This system could monitor the skills and lumbar loads involved in patient handling [8]. These systems are useful for monitoring caregivers’ postures; however, they have limitations in terms of workspace and usability because they require vision-based devices, multiple specialized sensors, or robots. A previous study reported that assistive devices for caregivers should be time-efficient, cost-effective, and suitable for various workspaces [9]. Hence, there is a need to develop simple, wearable monitoring systems for caregivers’ postures.

PostureCoach is a simple wearable system for preventing lower back pain among caregivers [10]. This system can provide real-time biofeedback using the trunk angle obtained from only two IMUs [10]. Previous investigations have shown that PostureCoach can reduce lumbar spine flexion in beginners who handle patients [10]. However, this system cannot tell caregivers how to adjust to an appropriate posture because it measures only the trunk angle. Several factors, such as the foot position and arm movement, are useful for achieving suitable postures that prevent lower back pain during patient handling [11–13]. Therefore, a simple wearable system that monitors the trunk angle, foot position, and arm movement is being developed [14].

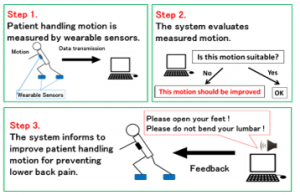

Figure 1 shows the flow of the proposed monitoring system for caregivers’ postures. The proposed system monitors a caregiver’s posture using wearable sensors mounted on the caregiver’s trunk and shoes (Step 1). The proposed method evaluates several factors such as trunk angle, foot positions, and arm movements using sensor data obtained from the wearable sensors (Step 2). If the evaluated factors are unsuitable, the proposed system informs the caregiver to adjust their posture (Step 3).

Our previous study proposed a postural recognition method for sit-to-stand assistive motions but did not consider other patient-handling tasks or various foot positions and arm movements [14]. Several patient-handling tasks, such as turning a patient on a bed, impose a lumbar load on caregivers [12, 15]. This type of patient handling causes lower back pain among caregivers because patients are frequently repositioned on a bed [15]. As explained above, arm movements and foot positions are important factors in patient handling [11–13]. Thus, this study proposes a recognition method for various foot positions and arm movements of caregivers during postural change for a patient on a bed.

Figure 1: Monitoring system for patient-handling motion.

This section has discussed the background and previous studies. The remainder of this paper is organized as follows. Section 2 states the objective and contributions of this paper. Section 3 explains the proposed method for postural recognition during postural change while handling a patient on a bed. Section 4 describes the experiments conducted to evaluate whether the proposed method can recognize a caregiver’s posture during patient handling. Section 5 presents the experimental results and discussion. Finally, Section 6 presents the conclusions.

2. Objective and Contribution

The objective of this study is to propose a novel posture recognition method for a wearable monitoring system to prevent lower back pain among caregivers. Furthermore, this study explores appropriate combinations of wearable sensors and machine learning algorithms for the proposed method.

The contributions of this study are as follows. Because the proposed method recognizes both arm movements and foot positions, it can be applied to suggest an appropriate posture to caregivers. This study presents a novel posture recognition method using sensor fusion of an IMU and insole pressure sensors. As a scientific contribution, this sensor fusion of wearable sensors has the potential to be applied to posture recognition in occupational health. In addition, the appropriate combination of wearable sensors and machine learning algorithms shown in this study will be useful in the research areas of computer science and wearable systems.

3. Proposed Method

3.1. Overview

Figure 2 shows a block diagram of the proposed postural recognition method. The proposed method measures postural information using wearable sensors. A machine learning algorithm uses features obtained from the wearable sensors to generate a function for postural recognition. The caregiver’s posture, namely the arm movement and foot position, is then recognized by the generated function. The details of each component are described below.

3.2. Input (wearable sensors)

An IMU attached to the trunk and insole pressure sensors measure postural information during patient handling. The IMU (Logical Product Co., Japan) measures the three-axis acceleration and angular velocity of the trunk as features for the machine learning algorithm. The insole pressure sensors are fabricated by arranging eight FlexiForce sensors (Tekscan, USA) on each foot. Because the FlexiForce sensors are thin and flexible, they are suitable for measuring forces between various surfaces, such as an insole [16]. Furthermore, the FlexiForce sensors can be used in real-time applications owing to their low nonlinearity, non-repeatability, and hysteresis [17]. Front and rear forces on each foot are measured using the insole pressure sensors as features for the machine learning algorithm. All sensor data were measured at a sampling rate of 1 kHz.

3.3. Machine learning-based recognition

Seven features (mean, maximum, minimum, standard deviation, root mean square, kurtosis, and skewness) were extracted for the machine learning algorithm. These features were extracted from each sensor signal using a sliding window technique with a 1.0-s window size and 50% overlap. The features, window size, and overlap were selected based on previous research on posture recognition using wearable sensors [18–19].
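The windowing and feature extraction described above can be sketched as follows. This is a minimal illustration and not the authors' implementation: the function names are hypothetical, the standard deviation is taken as the population form, and kurtosis is the non-excess (Pearson) form. The paper does not state which conventions were used.

```python
import math

FS_HZ = 1000      # sampling rate stated in the text (1 kHz)
WINDOW_S = 1.0    # window size stated in the text
OVERLAP = 0.5     # 50% overlap stated in the text


def window_features(x):
    """Return the seven statistical features for one window of samples."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n      # population variance (assumption)
    std = math.sqrt(var)
    rms = math.sqrt(sum(v * v for v in x) / n)
    if var > 0:
        skew = sum((v - mean) ** 3 for v in x) / n / var ** 1.5
        kurt = sum((v - mean) ** 4 for v in x) / n / var ** 2  # non-excess kurtosis
    else:
        skew, kurt = 0.0, 0.0                      # convention for a constant window
    return {"mean": mean, "max": max(x), "min": min(x), "std": std,
            "rms": rms, "kurtosis": kurt, "skewness": skew}


def sliding_windows(signal, fs=FS_HZ, window_s=WINDOW_S, overlap=OVERLAP):
    """Yield consecutive windows; incomplete trailing samples are dropped."""
    size = int(round(fs * window_s))
    step = max(1, int(round(size * (1.0 - overlap))))
    for start in range(0, len(signal) - size + 1, step):
        yield signal[start:start + size]


def extract_features(signal):
    """Compute the seven features for every window of one sensor channel."""
    return [window_features(w) for w in sliding_windows(signal)]
```

With these settings a window holds 1000 samples and advances by 500 samples, so a 3-s recording of one channel yields five feature vectors.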

The machine learning algorithm recognized three arm movements and three foot positions during postural change while handling a patient on a bed. In this study, an artificial neural network, a decision tree, and a support vector machine were selected as the machine learning algorithms for the proposed method because these three algorithms have been used for wearable sensor-based posture recognition [19–20]. Details of the recognition models are described below.

An artificial neural network can model complex relationships between input and output through a nonlinear and flexible decision boundary [19–21]. The artificial neural network-based model consists of an input layer, a hidden layer, and an output layer [19–20]. In the proposed method, the features obtained from the wearable sensors are fed to the input layer, and the recognized posture is produced by the output layer. The hidden layer learns the relationship between the features obtained from the wearable sensors and the postures. The specifications and parameters of the artificial neural network in the proposed method are shown in Table 1.
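To make the input, hidden, and output layers concrete, the following sketch runs a forward pass through a network with one hidden layer. It is only an illustration: the sigmoid and softmax activations, the layer sizes, the class labels, and the toy weights are assumptions, since the actual settings in Table 1 are not reproduced here, and training is omitted.

```python
import math

# Hypothetical class labels for illustration only.
LABELS = ["arm movement 1", "arm movement 2", "arm movement 3"]


def dense(inputs, weights, biases):
    """Affine layer: one weight row and one bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def sigmoid(z):
    return [1.0 / (1.0 + math.exp(-v)) for v in z]


def softmax(z):
    m = max(z)                                  # subtract max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def forward(features, w_hidden, b_hidden, w_out, b_out):
    """Input layer -> hidden layer (sigmoid) -> output layer (softmax)."""
    hidden = sigmoid(dense(features, w_hidden, b_hidden))
    return softmax(dense(hidden, w_out, b_out))


def predict(features, params, labels=LABELS):
    """Return the label whose output unit has the highest probability."""
    probs = forward(features, *params)
    return labels[probs.index(max(probs))]


# Toy weights (not trained, not from the paper): 2 inputs, 2 hidden units, 3 outputs.
TOY_PARAMS = (
    [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0],
    [[2.0, 0.0], [0.0, 2.0], [-2.0, -2.0]], [0.0, 0.0, 0.0],
)
```

In practice the weights would be learned from the labeled feature windows, and the input layer would have one unit per extracted feature.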

A decision tree provides unambiguous if-then rules [22–24]. The if-then rules generated by a decision tree consist of root, internal, and leaf nodes [22]. The root and internal nodes hold feature thresholds used for recognition. The positions and features of these nodes are determined by comparing the entropy of all features [23]. Leaf nodes define the recognition output reached via the root and internal nodes. When the nodes are too numerous and complex, the recognition model suffers from problems such as overfitting [24]. Thus, a decision tree has several parameters for adjusting the number of nodes [24]. The specifications and parameters of the decision tree in the proposed method are shown in Table 2.
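The entropy comparison used to place a threshold at a node can be illustrated with a small sketch. It assumes the common information-gain criterion with midpoints between sorted feature values as candidate thresholds; the paper does not state the exact splitting rule, and the function names are hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, threshold):
    """Entropy reduction obtained by splitting one feature at `threshold`."""
    left = [y for x, y in zip(values, labels) if x <= threshold]
    right = [y for x, y in zip(values, labels) if x > threshold]
    if not left or not right:
        return 0.0
    n = len(labels)
    children = len(left) / n * entropy(left) + len(right) / n * entropy(right)
    return entropy(labels) - children


def best_threshold(values, labels):
    """Return the midpoint threshold with the highest gain, or None if no split exists."""
    xs = sorted(set(values))
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    if not candidates:
        return None
    return max(candidates, key=lambda t: information_gain(values, labels, t))
```

A tree builder would repeat this search over all features at each node and stop according to parameters such as a maximum depth or a minimum number of samples per leaf, which is how the number of nodes is limited.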

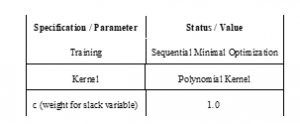

A support vector machine can be applied to nonlinear data without overfitting by means of large-margin separation and a kernel function [25]. The kernel function provides efficient calculation in nonlinear feature spaces [25]. Large-margin separation is required for the generalization performance of the support vector machine [25]. The specifications and parameters of the support vector machine in the proposed method are shown in Table 3.
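As an illustration of how a kernel function enters the decision rule, the sketch below evaluates a two-class support vector machine with a radial basis function (RBF) kernel. The kernel type, the value of `gamma`, and the support vectors are assumptions chosen for the example, because the settings in Table 3 are not reproduced here. Training, which finds the support vectors and coefficients by maximizing the margin, is omitted.

```python
import math


def rbf_kernel(x, z, gamma=0.5):
    """RBF kernel: exp(-gamma * squared Euclidean distance)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def decision_value(x, support_vectors, dual_coefs, bias, gamma=0.5):
    """Signed score of a trained two-class SVM; the sign gives the class."""
    return sum(c * rbf_kernel(sv, x, gamma)
               for sv, c in zip(support_vectors, dual_coefs)) + bias


# Toy model (not from the paper): one support vector per class.
TOY_SUPPORT_VECTORS = [[0.0, 0.0], [2.0, 2.0]]
TOY_DUAL_COEFS = [1.0, -1.0]   # label times Lagrange multiplier for each support vector
TOY_BIAS = 0.0
```

Recognizing three arm movements or three foot positions needs a multi-class extension such as one-vs-one or one-vs-rest; the text does not say which scheme was used.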

A comparison of these machine learning algorithms was necessary because the appropriate algorithm depends on the target postures [19–20]. In this study, the three algorithms were compared to investigate which could best recognize patient-handling motion using these wearable sensors.

Table 1: Specifications and parameters for artificial neural network of the proposed method.

3.4. Output (recognized posture)

In the proposed method, arm movements and foot positions are recognized as postural factors. The monitoring system described in Section 1 will suggest an appropriate posture to the caregiver based on the recognition results.

Figure 2: Proposed postural recognition method.

Table 2: Specifications and parameters for decision tree of the proposed method.

Table 3: Specifications and parameters for support vector machine of the proposed method.

4. Experiment

Four young healthy males (24.75 ± 0.83 years, 1.72 ± 0.05 m, 67.00 ± 10.79 kg) participated as caregivers. One young healthy male (25.00 years, 1.69 m, 70.00 kg) participated as a simulated patient. All participants provided verbal informed consent to the experiment.

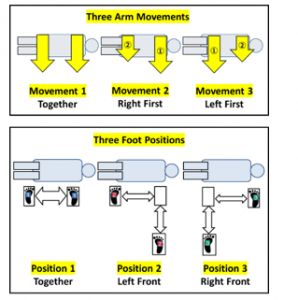

The four participants (caregivers) were asked to perform a postural change for the simulated patient on a bed under nine postural conditions. The nine conditions were a combination of the three arm movements and three foot positions shown in Figure 3. Each participant (caregiver) repeated this motion ten times for each condition. Data for the proposed method were measured by wearable sensors (IMU and insole pressure sensors) at 1-kHz sampling frequency for each trial.
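The experimental design can be enumerated to check the number of trials. In this sketch the numeric labels 1 to 3 for arm movements and foot positions, and the dictionary keys, are placeholders; the actual postures are those shown in Figure 3.

```python
from itertools import product

ARM_MOVEMENTS = (1, 2, 3)     # placeholder labels for the three arm movements
FOOT_POSITIONS = (1, 2, 3)    # placeholder labels for the three foot positions
N_CAREGIVERS = 4              # participants acting as caregivers
N_REPETITIONS = 10            # repetitions per condition

# Nine conditions: every combination of arm movement and foot position.
conditions = list(product(ARM_MOVEMENTS, FOOT_POSITIONS))

# One record per trial, labeled with both target classes.
trials = [
    {"caregiver": p, "arm_movement": a, "foot_position": f, "repetition": r}
    for p in range(1, N_CAREGIVERS + 1)
    for a, f in conditions
    for r in range(1, N_REPETITIONS + 1)
]
```

This gives 9 conditions and 4 × 9 × 10 = 360 trials in total, with 120 trials for each arm movement and 120 for each foot position.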

The proposed method recognized the three arm movements and three foot positions by processing data obtained from the sensors using the machine learning algorithm. This experiment compared the recognition performance of three machine learning algorithms to determine the appropriate algorithm for the proposed method. Furthermore, the recognition performances of three feature patterns (“IMU,” “Insole Sensor,” and “All”) were compared to verify the effectiveness of the sensors and features.
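The three feature patterns can be thought of as column selections over one feature table. The sketch below assumes that the seven features are computed for each of six IMU channels (three-axis acceleration and angular velocity) and four insole channels (front and rear force on each foot). The channel names and resulting feature counts are inferred from the description in Section 3 and are not stated explicitly in the paper.

```python
FEATURES = ("mean", "max", "min", "std", "rms", "kurtosis", "skewness")

# Hypothetical channel names for illustration.
IMU_CHANNELS = tuple(f"{sensor}_{axis}" for sensor in ("acc", "gyro") for axis in "xyz")
INSOLE_CHANNELS = tuple(f"{side}_{part}"
                        for side in ("left", "right") for part in ("front", "rear"))


def columns(channels):
    """Feature column names for the given channels, e.g. 'acc_x_mean'."""
    return [f"{ch}_{ft}" for ch in channels for ft in FEATURES]


FEATURE_PATTERNS = {
    "IMU": columns(IMU_CHANNELS),
    "Insole Sensor": columns(INSOLE_CHANNELS),
    "All": columns(IMU_CHANNELS + INSOLE_CHANNELS),
}


def select(row, pattern):
    """Pick the feature values of one window (a dict) for the chosen pattern."""
    return [row[name] for name in FEATURE_PATTERNS[pattern]]
```

Under these assumptions the "IMU" pattern has 42 features, "Insole Sensor" has 28, and "All" has 70 per window.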

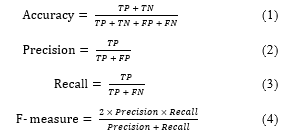

The metrics of accuracy and F-measure were used to evaluate the recognition performance of the algorithms. These evaluation metrics were calculated according to

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

$$\text{F-measure} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where TP denotes true positive and represents the number of positive samples correctly recognized, TN denotes true negative and represents the number of negative samples correctly recognized, FP denotes false positive and represents the number of actual negative samples incorrectly recognized as positive, and FN denotes false negative and represents the number of actual positive samples incorrectly recognized as negative. The accuracy of the proposed method was evaluated based on the recognition performance of each algorithm using the feature patterns. The F-measure of each arm movement and foot position was calculated as the harmonic mean of precision and recall to represent the overall performance. These metrics were calculated by ten-fold cross validation using data obtained from all trials and participants.
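For a three-class problem, these quantities can be computed from a confusion matrix by treating one class at a time as positive. The sketch below assumes rows are actual classes and columns are recognized classes, and it computes overall accuracy as the fraction of correctly recognized samples. The example matrix is made up for illustration and is not taken from the results of this study.

```python
def accuracy(cm):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def f_measure(cm, k):
    """Harmonic mean of precision and recall with class k as the positive class."""
    tp = cm[k][k]
    fp = sum(cm[i][k] for i in range(len(cm))) - tp   # recognized as k, actually other
    fn = sum(cm[k]) - tp                              # actually k, recognized as other
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Made-up confusion matrix (rows: actual class, columns: recognized class).
EXAMPLE_CM = [
    [8, 0, 2],
    [1, 9, 0],
    [3, 0, 7],
]
```

For the example matrix, `accuracy(EXAMPLE_CM)` is 24/30 = 0.8 and `f_measure(EXAMPLE_CM, 0)` is 16/22, or about 0.73. In ten-fold cross validation, such a matrix would be accumulated over the ten held-out folds before computing the metrics.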

Figure 3: Postural conditions in the experiment.

5. Results and Discussion

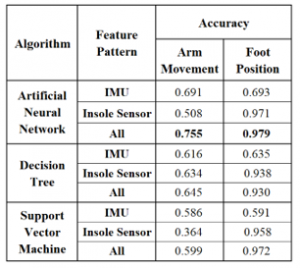

Tables 4 and 5 show the accuracy and F-measure of the proposed method. The results show that the combination of artificial neural network and all features obtained from both IMU and insole pressure sensors achieved the best performance for postural recognition. In this combination, the proposed method could recognize arm movements and foot positions with an accuracy of approximately 0.75 and 0.97, respectively. These results suggest the possibility of using the proposed recognition method in monitoring systems to prevent lower back pain.

A comparison between the machine learning algorithms showed that the artificial neural network exhibited the best performance in both accuracy and F-measure. Therefore, the artificial neural network was deemed the most suitable machine learning algorithm for the proposed method. Its performance was attributed to its nonlinear and flexible decision boundary, which was effective for wearable sensor data [19–21].

A comparison between feature patterns showed that the proposed method yielded the best accuracy and F-measure when all features obtained from both the IMU and insole pressure sensors were used. These results indicate that both the IMU and insole pressure sensors were effective and necessary for the proposed method. Moreover, in foot position recognition, the proposed method performed well using only the insole pressure sensors. This finding indicates that the insole pressure sensors could detect the force distribution that depends on the foot position. However, the proposed method could not recognize arm movements using only the insole pressure sensors. Thus, the IMU was also considered necessary for the proposed method. Table 6 shows the confusion matrices of the proposed method using the artificial neural network and all features for postural recognition. These results show that the proposed method achieved good performance for foot position recognition. The reason for this performance, as explained earlier, is that the insole pressure sensors in the shoes could detect features related to foot positions. However, the proposed method confused arm movements 1 and 3 because there was no sensor on the upper limbs. Placing additional sensors on the upper limbs could obstruct care activities. Thus, the optimal position of the IMU on the trunk for detecting arm movement without additional sensors should be explored.

The limitation of this study is that the proposed method was not evaluated in an actual workspace and under various aspects of patient handling, such as assisting transfer. Moreover, the proposed method should be tested for female participants, patients of different ages, and caregivers. Furthermore, other feature selection patterns and algorithms should be examined to improve the performance of the proposed postural recognition method. Following further tests and improvements on the proposed method, the postural monitoring system to prevent lower back pain will be implemented based on the proposed postural recognition method.

Table 4: Accuracy of the proposed method.

Table 5: F-measure of the proposed method.

In future work, the optimal placement of sensors will be explored to improve the accuracy of postural recognition in the proposed method. Moreover, other machine learning algorithms will be tested to determine a more suitable algorithm for the proposed method. Furthermore, a modified version of the proposed recognition method should be evaluated under various conditions. Finally, a monitoring system will be implemented based on the modified postural recognition method to prevent lower back pain due to patient handling.

Table 6: Confusion matrices of posture recognition (using an artificial neural network with all features).

6. Conclusion

In this study, a posture recognition method for caregivers during postural change for a patient on a bed was proposed. The experimental results showed that the proposed method could recognize a caregiver’s posture. The proposed method, which uses novel sensor fusion with machine learning, will also be useful for posture recognition in various other applications.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

The first author is grateful for a scholarship from the Nakatani Foundation for Advancement of Measuring Technologies in Biomedical Engineering.

References

- S. Kai, “Consideration of low back pain in health and welfare workers,” Journal of Physical Therapy Science, 13(2), 149–152, 2001, doi:10.1589/jpts.13.149.

- A. Holtermann, T. Clausen, M.B. Jørgensen, A. Burdorf, L.L. Andersen, “Patient handling and risk for developing persistent low-back pain among female healthcare workers,” Scandinavian Journal of Work, Environment & Health, 39(2), 164–169, 2013, doi:10.5271/sjweh.3329.

- R. Ibrahim, O.E.A.E. Elsaay, “The effect of body mechanics training program for intensive care nurses in reducing low back pain,” IOSR Journal of Nursing and Health Science, 4(5), 81–96, 2015, doi:10.9790/1959-04548196.

- A. Karahan, N. Bayraktar, “Determination of the usage of body mechanics in clinical settings and the occurrence of low back pain in nurses,” International Journal of Nursing Studies, 41(1), 67–75, 2004, doi:10.1016/s0020-7489(03)00083-x.

- C. Lin, T. Ogata, Z. Zhong, M. Kanai-Pak, J. Maeda, Y. Kitajima, M. Nakamura, N. Kuwahara, J. Ota, “Development and Validation of Robot Patient Equipped with an Inertial Measurement Unit and Angular Position Sensors to Evaluate Transfer Skills of Nurses,” International Journal of Social Robotics, 1–19, 2020, doi:10.1007/s12369-020-00673-6.

- Z. Huang, A. Nagata, M. Kanai-Pak, J. Maeda, Y. Kitajima, M. Nakamura, K. Aida, N. Kuwahara, T. Ogata, J. Ota, “Automatic evaluation of trainee nurses’ patient transfer skills using multiple kinect sensors,” IEICE Transactions on Information and Systems, 97(1), 107–118, 2014, doi:10.1587/transinf.E97.D.107.

- Z. Huang, A. Nagata, M. Kanai-Pak, J. Maeda, Y. Kitajima, M. Nakamura, K. Aida, N. Kuwahara, T. Ogata, J. Ota, “Self-help training system for nursing students to learn patient transfer skills,” IEEE Transactions on Learning Technologies, 7(4), 319–332, 2014, doi:10.1109/TLT.2014.2331252.

- K. Itami, T. Yasuda, Y. Otsuki, M. Ishibashi, T. Maesako, “Development of a checking system for body mechanics focusing on the angle of forward leaning during bed making,” Educational Technology Research, 33(1–2), 63–71, 2010, doi: 10.15077/etr.KJ00006713267.

- S. Sivakanthan, E. Blaauw, M. Greenhalgh, A.M. Koontz, R. Vegter, R.A. Cooper, “Person transfer assist systems: a literature review,” Disability and Rehabilitation: Assistive Technology, 1–10, 2019, doi:10.1080/17483107.2019.1673833.

- M. Owlia, M. Kamachi, T. Dutta, “Reducing lumbar spine flexion using real-time biofeedback during patient handling tasks,” Work, 66(1), 41–51, 2020, doi: 10.3233/WOR-203149.

- K. Kitagawa, T. Nagasaki, S. Nakano, M. Hida, S. Okamatsu, C. Wada, “Optimal foot-position of caregiver based on muscle activity of lower back and lower limb while providing sit-to-stand support,” Journal of Physical Therapy Science, 32(8), 534–540, 2020, doi:10.1589/jpts.32.534.

- B. Schibye, A.F. Hansen, C.T. Hye-Knudsen, M. Essendrop, M. Böcher, J. Skotte, “Biomechanical analysis of the effect of changing patient-handling technique,” Applied Ergonomics, 34(2), 115–123, 2003, doi: 10.1016/S0003-6870(03)00003-6.

- Q. An, J. Nakagawa, J. Yasuda, W. Wen, H. Yamakawa, A. Yamashita, H. Asama, “Skill Extraction from Nursing Care Service Using Sliding Sheet,” International Journal of Automation Technology, 12(4), 533–541, 2018, doi:10.20965/ijat.2018.p0533.

- K. Kitagawa, T. Uezono, T. Nagasaki, S. Nakano, C. Wada, “Classification Method of Assistance Motions for Standing-up with Different Foot Anteroposterior Positions using Wearable Sensors,” in 2018 International Conference on Information and Communication Technology Robotics (ICT-ROBOT2018), 1–3, 2018, doi:10.1109/ICT-ROBOT.2018.8549912.

- H. Wardell, “Reduction of injuries associated with patient handling,” Aaohn Journal, 55(10), 407–412, 2007, doi:10.1177/216507990705501003.

- A.M. Almassri, W.Z. Wan Hasan, S.A. Ahmad, A.J. Ishak, A.M. Ghazali, D.N. Talib, C. Wada, “Pressure sensor: state of the art, design, and application for robotic hand,” Journal of Sensors, 2015, 846487, 2015, doi:10.1155/2015/846487.

- A.M. Almassri, W.Z. Wan Hasan, C. Wada, “Evaluation of a Commercial Force Sensor for Real Time Applications,” ICIC Express Letters, Part B: Applications, 11(5), 421–426, 2020, doi:10.24507/icicelb.11.05.421.

- S. Pirttikangas, K. Fujinami, T. Nakajima, “Feature selection and activity recognition from wearable sensors,” in 2006 International Symposium on Ubiquitous Computing Systems (UCS2006), 516–527, doi:10.1007/11890348_39.

- M.F. Antwi-Afari, H. Li, Y. Yu, L. Kong, “Wearable insole pressure system for automated detection and classification of awkward working postures in construction workers,” Automation in Construction, 96, 433–441, 2018, doi:10.1016/j.autcon.2018.10.004.

- M.F. Antwi-Afari, H. Li, J. Seo, A.Y.L. Wong, “Automated detection and classification of construction workers’ loss of balance events using wearable insole pressure sensors,” Automation in Construction, 96, 189–199, 2018, doi:10.1016/j.autcon.2018.09.010.

- S. Dreiseitl, L. Ohno-Machado, “Logistic regression and artificial neural network classification models: a methodology review,” Journal of Biomedical Informatics, 35(5–6), 352–359, 2002, doi:10.1016/S1532-0464(03)00034-0.

- Y.Y. Song, L.U. Ying, “Decision tree methods: applications for classification and prediction,” Shanghai Archives of Psychiatry, 27(2), 130–135, 2015, doi:10.11919/j.issn.1002-0829.215044.

- Y. Zhang, S. Lu, X. Zhou, M. Yang, L. Wu, B. Liu, P. Phillips, D. Wang, “Comparison of machine learning methods for stationary wavelet entropy-based multiple sclerosis detection: decision tree, k-nearest neighbors, and support vector machine,” Simulation, 92(9), 861–871, 2016, doi:10.1177/0037549716666962.

- T. Wang, Z. Qin, Z. Jin, S. Zhang, “Handling over-fitting in test cost-sensitive decision tree learning by feature selection, smoothing, and pruning,” Journal of Systems and Software, 83(7), 1137–1147, 2010, doi:10.1016/j.jss.2010.01.002.

- A. Ben-Hur, C.S. Ong, S. Sonnenburg, B. Schölkopf, G. Rätsch, “Support vector machines and kernels for computational biology,” PLoS Comput Biol, 4(10), p.e1000173, 2008, doi: 10.1371/journal.pcbi.1000173.