Deaf Chat: A Speech-to-Text Communication Aid for Hearing Deficiency

Volume 5, Issue 5, Page No 826-833, 2020

Author’s Name: Mandlenkosi Shezi a), Abejide Ade-Ibijola


Formal Structures, Algorithms and Industrial Applications Research Cluster, Department of Applied Information Systems, University of Johannesburg, Bunting Road Campus, Johannesburg, 2006, South Africa

a) Author to whom correspondence should be addressed. E-mail: mandlashezifbi@gmail.com

Adv. Sci. Technol. Eng. Syst. J. 5(5), 826-833 (2020); DOI: 10.25046/aj0505100


Keywords: Speech-to-Text, Hearing Aids, Communication Aids, Multiple-Speaker Classification, AI for Social Good


Hearing impairments have a negative impact on the lives of the individuals living with them and of those around them. Different applications and technological tools have been developed to help reduce this impact. Most mobile applications that use Speech-to-Text technology are limited: they are not inclusive of all types of hearing-impaired individuals, only work in specific predefined environments, and do not support conversations with multiple participants. This makes the present tools less effective and makes hearing-impaired participants feel that they are not completely part of the conversation. This paper presents a model that aims to address this by introducing Multiple-Speaker Classification technology into the design of mobile applications for hearing-impaired people. Furthermore, we present a prototype of a mobile application called Deaf Chat that uses the newly designed model. A survey was conducted to evaluate the potential of this application to address the needs of hearing-impaired people. The evaluation showed good user acceptance and indicated that a platform like Deaf Chat could be useful for the greater good of those who have hearing impairments.

Received: 31 July 2020, Accepted: 15 September 2020, Published Online: 12 October 2020

1. Introduction

Hearing is a very important ability that many people take for granted until it weakens or is lost [1]. Communication impairments have repeatedly been shown to have a negative impact on many aspects of an individual’s life, and most communication-impaired individuals experience mental and emotional challenges [2]–[5].

Hearing disorders have negative effects on the social and professional lives of older adults [6, 7]. Hearing impairment has a substantial effect on the performance of individuals irrespective of their age group. Children with any form of hearing impairment have been found to experience persistent behavioral problems and difficulties with language development [8]. A study of hearing-impaired children found that those who have hearing aids perform considerably better than those who do not [9]. Using hearing aids reduces listening effort and the likelihood of mental fatigue, since less effort is needed to process speech [10]. This illustrates the role that technology-based hearing aids can play in the lives of hearing-impaired individuals.

In many instances, hearing-impaired individuals must depend on interpreters (mostly family members) to help them communicate with other people [11, 12]. Some use sign language, and others rely on lip reading [13, 14]. Despite these efforts, the failure to accommodate the hearing-impaired and the use of insufficient and ineffective communication methods further contribute to depression, loneliness and social anxiety [15]. Alternatively, some people use SMS messaging to communicate with hearing-impaired individuals [16], but this is effective mainly when both parties are in the same location: when they are apart, the sender cannot tell whether the other party has received or read the message [17].

Understanding the problems that hearing-impaired individuals of different ages face motivated us to create a platform that can help reduce them. With advances in Artificial Intelligence (AI), there has been a movement to create more AI tools for social good. Here, we introduce a model and a tool, named Deaf Chat, for communicating with hearing-impaired individuals.

- AI for Social Good can be loosely described as AI that aims to improve people’s lives in meaningful ways [18]. Hence, an AI-powered software tool that supports people with hearing impairments can be considered AI for Social Good [19].

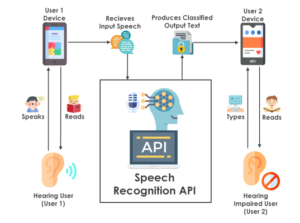

Figure 1: Graphical representation of messaging using the Deaf Chat application

Figure 1 gives a high-level overview of how Deaf Chat facilitates communication between hearing and hearing-impaired users. Deaf Chat supports both one-to-one and group chats; in both modes, speech is analysed and classified to determine whether more than one voice is present in a voice note. The process is as follows. A hearing user, or a pair or group of hearing users, records their speech, and the speaker recognition API processes the speech and converts it into text classified by speaker. The output text is then sent to the hearing-impaired user in place of the voice note. Depending on the type of hearing impairment and the user’s preference, the hearing-impaired user responds using either speech or text.
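The reply rule described above can be modelled compactly. The following Java sketch (Java being the prototype's back-end language) is our own illustration, not code from Deaf Chat: the names `HearingGroup`, `ReplyMode` and `chooseReply`, and the fallback-to-text rule, are assumptions based on the three groups described in Section 2.2. Incoming voice notes are always delivered as text, so only the reply mode varies.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model: the three groups of Section 2.2 and the reply modes
// each group can use. Names are illustrative, not taken from Deaf Chat.
class ReplyModes {

    enum ReplyMode { SPEECH, TEXT }

    enum HearingGroup {
        HARD_OF_HEARING(true), // partial hearing loss; can speak
        DEAF(true),            // cannot hear; can speak
        DEAF_MUTE(false);      // can neither hear nor speak

        final boolean canSpeak;

        HearingGroup(boolean canSpeak) {
            this.canSpeak = canSpeak;
        }
    }

    // Replies the user is able to give: speech only if the user can speak.
    static Set<ReplyMode> allowedReplies(HearingGroup group) {
        return group.canSpeak
                ? EnumSet.of(ReplyMode.SPEECH, ReplyMode.TEXT)
                : EnumSet.of(ReplyMode.TEXT);
    }

    // Honour the user's preference when possible; otherwise fall back to text.
    static ReplyMode chooseReply(HearingGroup group, ReplyMode preferred) {
        return allowedReplies(group).contains(preferred) ? preferred : ReplyMode.TEXT;
    }

    public static void main(String[] args) {
        System.out.println(chooseReply(HearingGroup.DEAF, ReplyMode.SPEECH));      // SPEECH
        System.out.println(chooseReply(HearingGroup.DEAF_MUTE, ReplyMode.SPEECH)); // TEXT
    }
}
```

Text is used as the fallback because it is the one mode every group can use.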

The following are the contributions of this paper. We have:

- reviewed the literature on previous hearing aids and communication tools,

- developed a prototype of a mobile-based messaging application called Deaf Chat that uses Multiple-Speaker Recognition technology to serve as a communication aid for people with hearing deficiency, and

- evaluated Deaf Chat using a survey in order to analyse its potential impact.

This paper is organized as follows. Section 2 presents the background, and Section 3 reviews related work. Section 4 presents the design of the Deaf Chat application, Section 5 presents its implementation and results, and Section 6 presents the evaluation. Lastly, Section 7 concludes the paper with recommendations and directions for future work.

2. Background

This section provides background on hearing impairment and on the technologies relevant to Deaf Chat. The first subsection states our problem and explains why the development of this solution is necessary.

2.1. Problem Statement

Mobile applications developed to help hearing-impaired individuals communicate with each other and with people without disabilities are not inclusive of all categories of hearing-impaired individuals; most of them are scenario-based, and none of them currently support multiple speakers. To address this problem, we consider the following questions:

- How has technology been used in the design of hearing aids and tools previously?

- How can Multiple-Speaker Recognition software be incorporated in the design of a new tool to enhance communication with hearing deficient individuals?

- Can we evaluate this new tool, and show that it can be helpful for hearing impaired individuals?

The following subsections give background on the groups of hearing-impaired individuals and on the tools and technologies that inform the design of this new tool.

2.2. Classifying Hearing Impaired Individuals

Hearing impairments are among the most common types of impairment around the world. According to the World Health Organization (WHO) [20], about 6.1% of the world’s population live with hearing loss. There are different types of hearing impairment, distinguished by the degree of hearing loss an individual experiences [21]. Firstly, some people experience partial hearing loss; an individual living with this condition is described as “hard of hearing” [22]. Secondly, some hearing-impaired individuals cannot hear at all but can speak; an individual in this group is called “deaf” [23]. Thirdly, some hearing-impaired individuals can neither hear nor speak, and they are called “deaf mute” or “deaf and mute” [24]. Most people use the term “deaf” to describe all three groups, but the groups are not the same.

This distinction should be considered in the design of mobile applications for the hearing-impaired. The following subsection discusses the two types of hearing aids, which are normally the primary aid that hearing-impaired individuals use.

2.3. Types of Hearing Aids

The most common hearing aids are of two types, namely analog and digital hearing aids. Analog hearing aids are less expensive, but they amplify sound without filtering noise. Digital hearing aids, on the other hand, can remove unwanted signals, which reduces noise [25]. Digital hearing aids have evolved rapidly, and newer versions have given rise to “smart hearing aid systems” [1]. Smart hearing aid systems combine digital hearing aids with mobile application software that provides more flexibility and better performance. They allow users to specify their environment through the mobile application, which enables the digital hearing aids to filter noise more appropriately [26, 27].

Technology continues to evolve. However, hearing aids are best suited to people experiencing partial hearing loss, which stresses the need for assistive technologies, as they are more inclusive. The following subsection discusses these technologies.

2.4. Assistive Technologies

Assistive technologies can aid people with different special needs, and they have created new possibilities for people living with limiting conditions [27]. These possibilities include help with day-to-day activities, rehabilitative services and more. As a result, these technologies make it possible, or easier, for people with disabilities to complete tasks that were previously difficult or close to impossible. For hearing-impaired individuals, assistive technologies are better suited than hearing aids because they can cater to all three groups.

Some assistive technologies have been developed for educational purposes. GameOhm [28] is an Android-based serious game developed for electronic engineering students with hearing impairments. A serious game is a game developed to contribute to teaching and learning. Serious games have been applied in numerous fields such as STEM (Science, Technology, Engineering and Mathematics), education and business. Since most students are young, such tools mainly target young people. However, assistive technologies are not designed solely for young people.

Elderly people must also be included [26]. Because elderly people are likely to have more than one disability, the design of assistive mobile applications for them requires particular care. Many elderly people are reluctant to adopt new technology, and applications that are not user-friendly would make this worse. When developing mobile applications for the hearing-impaired, developers should follow a user-centered approach and keep designs similar to those of familiar applications [26, 29]. This simplifies user training, which also increases motivation and user acceptance [30].

When collecting requirements for assistive technologies, it is important to consider both hardware and software capabilities in accordance with the needs of the targeted users [31]. Different types of software, such as Haptic Feedback software and Speech and Language Processing software, can serve the different groups of hearing-impaired users. Haptic technology uses touch to communicate with users; the most common implementations are vibration responses produced by a device in response to user inputs. The following subsection discusses Speech and Language Processing.

2.5. Overview of Speech and Language Processing

In this context, Speech and Language Processing is concerned with the conversion of Speech-to-Text and Text-to-Speech. Text-to-Speech software converts written natural language into audio output; in simple terms, it uses Natural Language Processing (NLP) technology to read written text aloud. Speech-to-Text software performs the reverse process: it recognizes spoken language and transcribes it into written natural language. Such software is often incorporated into voice assistants, automatic speech recognition (ASR) engines and speech analytics tools [31].

Applications that use Speech and Language Processing technology must convert and transfer messages in a timely manner. Latency, message transfer and real-time presentation of written text are among the major challenges of real-time speech-to-text conversion [32]. Instant speech-to-text conversion aims to transcribe spoken language into written text almost as soon as it is spoken. This is important because it enables people living with hearing impairments to take part in conversations as they would if they had no hearing problems. It also makes conversations more engaging, since they do not feel that they slow others down by taking too long to process statements.
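One common way to present text almost as soon as it is spoken is to distinguish interim hypotheses, which a streaming recogniser may still revise, from final ones. The Java sketch below assumes such a recogniser (the callback `onHypothesis` is hypothetical, not part of any particular API) and shows how a caption view could combine committed final text with the latest interim guess.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch (assumption): a streaming recogniser reports interim hypotheses, which
// may still change, and final hypotheses, which will not. The caption shows all
// final text followed by the most recent interim guess.
class LiveCaption {
    private final List<String> finals = new ArrayList<>();
    private String interim = "";

    // Hypothetical callback invoked for every hypothesis the recogniser emits.
    void onHypothesis(String text, boolean isFinal) {
        if (isFinal) {
            finals.add(text);  // commit the final version of this phrase
            interim = "";      // and discard the interim guess it replaces
        } else {
            interim = text;    // a newer interim guess overwrites the older one
        }
    }

    // Text to show on screen right now.
    String display() {
        List<String> parts = new ArrayList<>(finals);
        if (!interim.isEmpty()) {
            parts.add(interim);
        }
        return String.join(" ", parts);
    }

    public static void main(String[] args) {
        LiveCaption caption = new LiveCaption();
        caption.onHypothesis("hello", false);
        caption.onHypothesis("hello there", false);
        System.out.println(caption.display()); // hello there
        caption.onHypothesis("hello there", true);
        caption.onHypothesis("how are", false);
        System.out.println(caption.display()); // hello there how are
    }
}
```

Showing interim text lets the reader follow along with minimal delay, at the cost of occasional on-screen corrections when the final hypothesis differs.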

Some challenges might arise when converting speech to text in real time [33]. They are often caused by the way Automatic Speech Recognition (ASR) based tools are built. ASR software typically requires the user to train the speech recognition engine [34, 35]. It is advisable to ensure a minimum signal-to-noise ratio, as noisy environments degrade recognition. The engine’s performance generally improves with the amount of time spent training it. However, this training requirement does not apply in the context of this research. ASR can be used for various purposes, including speaker identification [36], speaker classification [37] and speaker verification. This research is mainly concerned with speaker classification.
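The signal-to-noise ratio (SNR) mentioned above is usually expressed in decibels as SNR_dB = 10 log10(P_signal / P_noise), where P is the mean power of a segment. The Java sketch below estimates it from a speech segment and a noise-only segment of the same recording. The sample format and the minimum-SNR gate `meetsMinimum` are illustrative assumptions, and treating the speech segment’s power as signal power is a simplification, since that segment also contains noise.

```java
// Sketch (assumption): estimate the signal-to-noise ratio in decibels from a
// speech segment and a noise-only segment of the same recording, both given
// as PCM samples scaled to [-1, 1]. Inputs must be non-empty, and the noise
// segment must not be pure silence (its power would be zero).
class Snr {

    // Mean power of a segment: the average of its squared samples.
    static double meanPower(double[] samples) {
        double sum = 0.0;
        for (double s : samples) {
            sum += s * s;
        }
        return sum / samples.length;
    }

    // SNR in dB = 10 * log10(signal power / noise power).
    static double snrDb(double[] speech, double[] noise) {
        return 10.0 * Math.log10(meanPower(speech) / meanPower(noise));
    }

    // Hypothetical gate: accept a recording only if it reaches a minimum SNR.
    static boolean meetsMinimum(double[] speech, double[] noise, double minDb) {
        return snrDb(speech, noise) >= minDb;
    }

    public static void main(String[] args) {
        double[] speech = {1.0, -1.0, 1.0, -1.0};
        double[] noise = {0.1, -0.1, 0.1, -0.1};
        System.out.printf("%.1f dB%n", snrDb(speech, noise)); // 20.0 dB
        System.out.println(meetsMinimum(speech, noise, 15.0)); // true
    }
}
```

A factor of 10 between signal and noise amplitude corresponds to a factor of 100 in power, hence the 20 dB in the example.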

Speaker classification separates audio input according to the voices of different speakers. It is not necessary to train a speech recognition engine for speaker classification, because speaker classification aims to separate speech from different voices rather than to identify or verify predefined speakers. All speakers’ voices are treated alike and are not compared against stored voice profiles: they are treated as new each time a voice input is processed by a newly created speech client. To our knowledge, no literature currently exists on applying speaker classification techniques to the design of mobile applications for hearing-impaired individuals, but the following section discusses some similar work.
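To illustrate why no enrolment or training is needed, the toy Java sketch below assigns each speech segment to a speaker on the fly. It assumes each segment has already been converted into a fixed-length voice embedding (a hypothetical input; services such as the Watson API perform this step internally) and uses cosine similarity with a threshold. This simple online clustering scheme is chosen for illustration, not taken from any particular service. A segment that is not similar enough to any speaker heard so far in the same recording becomes a new speaker, mirroring the idea that voices are treated as new for each speech client.

```java
import java.util.ArrayList;
import java.util.List;

// Toy sketch (assumptions): each speech segment arrives as a non-zero voice
// embedding of fixed length; no speaker is enrolled in advance.
class OnlineSpeakerClustering {
    private final double threshold; // minimum cosine similarity to reuse a speaker
    private final List<double[]> centroids = new ArrayList<>();
    private final List<Integer> counts = new ArrayList<>();

    OnlineSpeakerClustering(double threshold) {
        this.threshold = threshold;
    }

    static double cosine(double[] a, double[] b) {
        double dot = 0.0, normA = 0.0, normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Returns a speaker label (0, 1, 2, ...) for the segment.
    int assign(double[] embedding) {
        int best = -1;
        double bestSimilarity = threshold;
        for (int k = 0; k < centroids.size(); k++) {
            double similarity = cosine(embedding, centroids.get(k));
            if (similarity >= bestSimilarity) {
                best = k;
                bestSimilarity = similarity;
            }
        }
        if (best == -1) {
            // Not similar enough to anyone heard so far: a new speaker.
            centroids.add(embedding.clone());
            counts.add(1);
            return centroids.size() - 1;
        }
        // Known speaker: move its centroid towards the new segment (running mean).
        double[] centroid = centroids.get(best);
        int n = counts.get(best) + 1;
        for (int i = 0; i < centroid.length; i++) {
            centroid[i] += (embedding[i] - centroid[i]) / n;
        }
        counts.set(best, n);
        return best;
    }

    int speakerCount() {
        return centroids.size();
    }

    public static void main(String[] args) {
        OnlineSpeakerClustering clustering = new OnlineSpeakerClustering(0.8);
        System.out.println(clustering.assign(new double[] {1.0, 0.0})); // 0
        System.out.println(clustering.assign(new double[] {0.0, 1.0})); // 1
        System.out.println(clustering.assign(new double[] {0.9, 0.1})); // 0
    }
}
```

The threshold trades off splitting one voice into several speakers (set too high) against merging different voices into one (set too low).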

3. Related Work

In this section, we present an overview of current tools that are similar to Deaf Chat and explain how our tool differs.

3.1. Overview of Current Tools

The most common tools on the market are mobile applications that use Automatic Speech Recognition software to convert speech to text [17]. The requirements for these tools need further study to improve their usability and usefulness [38]. Assistive technologies for the hearing-impaired should be designed to suit all users and be more widely commercialised, as many are designed only for research purposes [39, 27, 40]. Many existing solutions are limited in scope: they either work for a single group of hearing-deficient individuals (deaf, hard of hearing, or mute) or work only in specific predefined situations. This is evident in tools like Sign Support [41], which works only as an aid in pharmacies, and LifeKey [42], which works only in emergencies.

3.2. Examples of Existing Tools

VoIPText is a mobile application developed to assist deaf and hard of hearing people. It combined Voice over IP (VoIP), speech-to-text software and real-time Automatic Speech Recognition (ASR) to include hearing-deficient individuals in VoIP-based communication [17]. This tool was online (dependent on internet connectivity), and its development demonstrated the potential of VoIP technology to assist hearing-impaired people.

BridgeApp is a mobile application designed to assist deaf and mute users of Filipino Sign Language and American Sign Language in the Philippines; its user acceptance was positive, and the tool was considered useful [31]. This tool uses Text-to-Speech, Speech-to-Text, Text-to-Image and Haptic Feedback technology, and it works only offline. The tool applies only to predefined scenarios, such as being at church or at the office. Another tool, called Ubider, was developed for Cypriot deaf people and can convert natural language into Cypriot Sign Language [21]. This tool depends on internet connectivity. The following subsection shows the gap that exists in current tools.

3.3. The Gap

Many tools have been developed to assist hearing-impaired people, and they commonly share the following characteristics:

- they work either only online or only offline,

- they are dedicated to a specific audience (deaf, mute, hard of hearing, or a combination of two of these three categories), and

- they make use of speech-to-text, text-to-speech, and automatic speech recognition software.

A model that is inclusive of all three categories of hearing-impaired individuals (deaf, mute or hard of hearing) would make a greater contribution to their lives. In this paper, we present such a model in the form of Deaf Chat.

4. Design of Deaf Chat

What most distinguishes Deaf Chat are the algorithm behind it and the technologies it uses. The following subsection briefly explains the algorithm that represents the app flow in Deaf Chat.

4.1. The Deaf Chat Algorithm

Deaf Chat has a unique design compared to other existing messaging applications. It uses speaker diarisation techniques to recognize and classify speakers before sending speech converted to text from one user to another. Speaker diarisation refers to the process of separating different voices in a voice input. The term is also spelled “diarization”, and this paper uses both spellings interchangeably. Algorithms 1-3 give a high-level overview of how Deaf Chat was designed and implemented. Algorithm 1 shows how a message is sent from one user to another and how the dialogue works in a Chat Activity.

Algorithm 1: Chat Activity
Data: Text Message
Result: Delivery Notification
initialisation;
message = MakeInput();
SEND message;
if User 2 responds then
    MakeInput();
else
    do nothing;
end
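One possible Java reading of Algorithm 1 is sketched below. `MakeInput()` is passed in as a `Supplier`, whether User 2 responds is a `BooleanSupplier`, and `SEND` appends to an in-memory outbox; all three are stand-ins assumed for illustration, not the prototype’s actual classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;
import java.util.function.Supplier;

// Sketch of Algorithm 1 under stated assumptions: MakeInput() is a Supplier,
// "User 2 responds" is a BooleanSupplier, and SEND appends the message to an
// in-memory outbox instead of calling a real messaging service.
class ChatActivity {
    final List<String> outbox = new ArrayList<>();

    // SEND: deliver the message and return a delivery notification (the Result).
    String send(String message) {
        outbox.add(message);
        return "Delivered: " + message;
    }

    // Data: a text message from MakeInput(). Result: delivery notification(s).
    List<String> run(Supplier<String> makeInput, BooleanSupplier user2Responds) {
        List<String> notifications = new ArrayList<>();
        notifications.add(send(makeInput.get()));
        if (user2Responds.getAsBoolean()) {
            // Our reading: a reply from User 2 prompts the next input, which is sent too.
            notifications.add(send(makeInput.get()));
        }
        // else: do nothing
        return notifications;
    }
}
```

Passing the input step as a function keeps this loop independent of whether the message was typed or transcribed, which is exactly the split between Algorithms 1 and 2.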

Algorithm 2 allows two input types: text or voice. It is a function that takes an input and returns the text message used in Algorithm 1. If the user types text, the input is sent as is; if the user records a voice note, the speech is passed to the ProcessSpeech function.

Algorithm 2: Make Input Function
Function MakeInput():
begin
    Data: txt input or voice input
    Result: text message
    if (input == txt input) then
        text message = txt input;
    else
        text message = ProcessSpeech(voice input);
    end
    return text message
end
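Algorithm 2 maps naturally onto a small type hierarchy in Java. In this sketch (Java 17, for sealed interfaces, records and pattern matching with `instanceof`), `TextInput` and `VoiceInput` are hypothetical types, and ProcessSpeech is injected as a function so that any speech service can be plugged in.

```java
import java.util.function.Function;

// Sketch of Algorithm 2 (hypothetical types, not the prototype's classes).
class MakeInputSketch {

    // The two input types a user can produce.
    sealed interface Input permits TextInput, VoiceInput {}

    record TextInput(String text) implements Input {}

    record VoiceInput(byte[] audio) implements Input {}

    // Typed text is sent as is; a voice note is passed to ProcessSpeech,
    // which returns the transcribed (speaker-classified) text.
    static String makeInput(Input input, Function<byte[], String> processSpeech) {
        if (input instanceof TextInput t) {
            return t.text();
        } else if (input instanceof VoiceInput v) {
            return processSpeech.apply(v.audio());
        }
        throw new IllegalArgumentException("Unknown input type: " + input);
    }
}
```

Either branch yields a plain text message, so the receiving side never has to handle audio.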

Algorithm 3, called from Algorithm 2 (the MakeInput function), shows how Deaf Chat interacts with the API that facilitates the classification of speakers. It sends the voice input received from MakeInput to the API, which transcribes the speech and returns a string of words. This continues until the user either stops the recording with the stop button or simply sends the currently transcribed text.

Algorithm 3: Process Speech Function
Function ProcessSpeech(voice input):
begin
    Data: voice input
    Result: text
    text = "";
    while text = "" do
        SEND request (voice input) to API;
        text = RECEIVED response from API;
    end
    return text
end
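A Java sketch of Algorithm 3 follows. `SpeechApi` is a hypothetical stand-in for the speech-to-text service call. Unlike the pseudocode, the loop is bounded by `maxAttempts`, a design choice we add so that a service that keeps returning empty text (or failing) cannot stall the chat activity indefinitely.

```java
// Sketch of Algorithm 3 (assumptions: SpeechApi is a hypothetical wrapper
// around the speech-to-text service; maxAttempts is our addition).
class ProcessSpeechSketch {

    // Hypothetical stand-in for the speech-to-text service call.
    interface SpeechApi {
        String transcribe(byte[] audio) throws Exception;
    }

    // Request a transcript until the API returns non-empty text,
    // giving up after maxAttempts so the UI never hangs.
    static String processSpeech(byte[] voiceInput, SpeechApi api, int maxAttempts) {
        String text = "";
        for (int attempt = 0; attempt < maxAttempts && text.isBlank(); attempt++) {
            try {
                String response = api.transcribe(voiceInput);
                text = response == null ? "" : response.trim();
            } catch (Exception e) {
                // Treat a failed request like an empty response and retry.
                text = "";
            }
        }
        return text; // may still be empty if every attempt failed
    }
}
```

Returning an empty string after the last attempt lets the caller decide whether to show an error or let the user retype the message.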

The following subsection explains Deaf Chat’s operation flow.

4.2. Deaf Chat’s Operation Flow

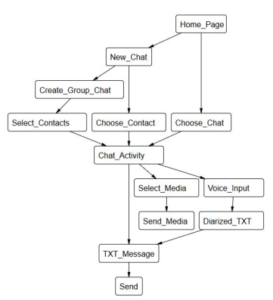

Figure 2 shows how users can interact with each other through all of Deaf Chat’s functionalities. Users can send a text message or media, or use the diarisation functionality by recording a voice note. To save development time, our prototype showcases the core functionality of the application: the separation of speakers in a voice input. The following section describes the technologies that were used and how they were implemented.

Figure 2: A graph diagram showing the operation flow of Deaf Chat

5. Implementation and Results

As briefly mentioned in the previous section, our prototype showcases the core functionality of the Deaf Chat tool. Focusing on the core functionality improved the quality of our results, because incorporating an online chat service would have increased the application’s response time. The following subsections discuss the two core technologies that we used to implement Deaf Chat.

5.1. Android Studio

We used the Android Studio IDE to develop our tool. We used the Java programming language to create all our back-end functions and XML (Extensible Markup Language) to define the front-end layouts of our activities. Figure 3 shows the chat activity after a voice input has been made and processed. The activity has an easy-to-use interface that enhances the user experience. To make an input, a user or group of users simply clicks the record button and starts talking. There is no limit on how long the user can speak. Furthermore, a user can edit the generated text before sending it to the next user.

5.2. IBM Watson API

The IBM Watson API has a number of features that can be used to build different applications, including chatbots, Text-to-Speech engines and Speech-to-Text engines. To implement our tool, we used the IBM Watson Speech to Text API, which converts speech from voice notes into text classified by speaker.

Figure 3: A Chat Activity showing processed voices of four distinct speakers.

The IBM Watson Speech to Text API achieves this with Deep Neural Network (DNN) models, which identify distinct voices and convert speech into text. The API also filters noise by excluding unwanted sounds from the transcribed text. The IBM API supports only about 19 language models, including English (US, Australian and UK), Mandarin, Dutch and French. We used the US English model. To identify different speakers, we enabled diarization and wrote methods that format the output so that it is easy for different users to understand.
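As an illustration of the kind of formatting method mentioned above, the Java sketch below turns a diarized result into one line per speaker turn, as in Figure 3. In the Watson Speech to Text API, diarization is requested with the `speaker_labels` parameter, and the result includes word timestamps together with a list of speaker labels, each with a start time (`from`) and a speaker number. The `Word` and `SpeakerLabel` records here are simplified, hypothetical mirrors of those fields, and the code is our own illustration rather than the authors’ implementation.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch, not the authors' code: simplified, hypothetical mirrors
// of a word timestamp and a speaker label; a label is matched to the word
// that starts at the label's "from" time.
class DiarizedTranscript {

    record Word(String text, double start) {}

    record SpeakerLabel(double from, int speaker) {}

    // Finds the speaker whose label starts where the word starts (-1 if none).
    static int speakerAt(double start, List<SpeakerLabel> labels) {
        for (SpeakerLabel label : labels) {
            if (Math.abs(label.from() - start) < 1e-6) {
                return label.speaker();
            }
        }
        return -1;
    }

    // Groups consecutive words from the same speaker into one line per turn.
    static List<String> format(List<Word> words, List<SpeakerLabel> labels) {
        List<String> lines = new ArrayList<>();
        StringBuilder turn = null;
        int turnSpeaker = 0;
        for (Word word : words) {
            int speaker = speakerAt(word.start(), labels);
            if (turn == null || speaker != turnSpeaker) {
                if (turn != null) {
                    lines.add(turn.toString());
                }
                String name = speaker >= 0 ? "Speaker " + speaker : "Unknown speaker";
                turn = new StringBuilder(name).append(':');
                turnSpeaker = speaker;
            }
            turn.append(' ').append(word.text());
        }
        if (turn != null) {
            lines.add(turn.toString());
        }
        return lines;
    }

    public static void main(String[] args) {
        List<Word> words = List.of(new Word("hello", 0.1), new Word("there", 0.5), new Word("hi", 1.2));
        List<SpeakerLabel> labels = List.of(
                new SpeakerLabel(0.1, 0), new SpeakerLabel(0.5, 0), new SpeakerLabel(1.2, 1));
        format(words, labels).forEach(System.out::println);
        // Speaker 0: hello there
        // Speaker 1: hi
    }
}
```

Merging consecutive words by speaker keeps the output readable when several people speak in one voice note.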

6. Evaluation

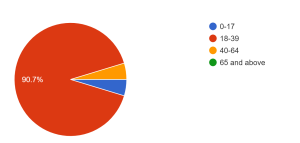

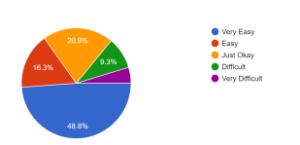

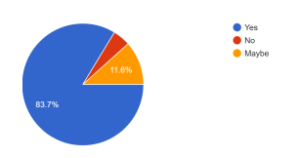

This section presents the results obtained from the individuals who tested the Deaf Chat prototype. We published the application’s installation file online together with a link to an online survey of 7 questions, which respondents completed after testing the app. The results are shown in Figures 4-10. Most respondents were aged 18-39; they found the application useful, and about 83.7% said that they would recommend it to a hearing-impaired person. Only 14% of respondents found the app hard to navigate, which suggests that it is user-friendly.

According to the survey, 86% of participants rated the Speech-to-Text functionality, and 88.5% rated the speaker identification functionality, 5 or above (Figures 6 and 7); these percentages were obtained by counting all participants who gave a rating of 5 or above. Some respondents who experienced challenges with speech processing suggested that this might be due to their African accents. Some respondents also recommended support for local languages. A small percentage of respondents reported that the response time is slow. Overall, the results suggest that Deaf Chat is useful and that it performed better than we had anticipated.
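As we read the aggregation described above, each reported figure is the proportion of respondents whose rating for a given functionality was 5 or above. With r_i the rating given by respondent i and N the number of respondents to that question (symbols introduced here for illustration; the maximum of the rating scale is not stated), this is:

$$
p = \frac{\left|\{\, i \in \{1,\dots,N\} : r_i \ge 5 \,\}\right|}{N} \times 100\%
$$

This measures user satisfaction with each functionality, not the measured transcription or diarization accuracy of the underlying API.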

7. Conclusion and Future Work

7.1. Conclusion and Recommendations

In this paper, we have presented a new model and a software prototype for facilitating communication with hearing-impaired individuals. Our tool can be used to communicate with all three types of hearing-impaired individuals and aims to contribute to improving the lives of the targeted users. We evaluated our tool using a survey whose participants included people who are not hearing impaired. Most participants found the tool very useful and made recommendations on how to make it more useful. Some participants had a poor experience because of their accents, so we recommend a speech recognition engine that responds faster and supports more accents, especially African accents.

People in South Africa, and in Africa as a whole, hold many perceptions about AI, partly because it has seldom been used for social good in South Africa. According to Stats SA [43], about 7.5% of South Africa’s population live with a disability. Furthermore, the South African National Deaf Association (SANDA) [44] states that it represents about four million deaf and hard of hearing people in South Africa, which shows the need for more AI tools for Social Good. Deaf Chat is one of the first South African AI tools developed for Social Good, and we expect that it will open more opportunities for innovative research and development in this area.

7.2. Future Work

We plan to implement our model and design in iOS and web-based versions of Deaf Chat. We will also add features such as quick access to emergency services for hearing-impaired users.

Acknowledgment

The authors would like to give special thanks to Nikita Patel for the rich illustration in this contribution and to the whole Formal Structures Lab team for their support during this study.

Figure 4: Age group of participants

Figure 5: Ease of navigation

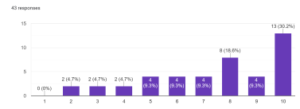

Figure 6: Rating of Speech-to-Text functionality

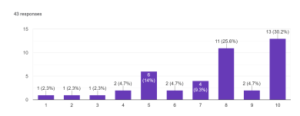

Figure 7: Rating of Speaker Diarization functionality

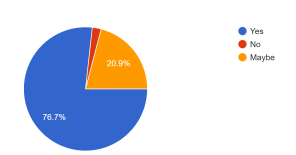

Figure 8: Usefulness of tool to hearing impaired people

Figure 9: Likelihood of recommending the app to a hearing-impaired person

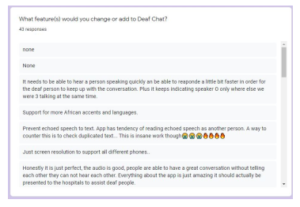

Figure 10: Recommendations

References

- W. Shehieb, M. O. Nasri, N. Mohammed, O. Debsi, K. Arshad, “Intelligent Hearing System using Assistive Technology for Hearing-Impaired Patients,” in 2018 IEEE 9th Annual Information Technology, Electronics and Mobile Communication Conference, IEMCON 2018, 2018, doi:10.1109/IEMCON.2018.8615021.

- D. G. Blazer, D. L. Tucci, “Hearing loss and psychiatric disorders: A review,” Psychological Medicine, 2019, doi:10.1017/S0033291718003409.

- A. D. Palmer, P. C. Carder, D. L. White, G. Saunders, H. Woo, D. J. Graville, J. T. Newsom, “The impact of communication impairments on the social relationships of older adults: Pathways to psychological well-being,” Journal of Speech, Language, and Hearing Research, 2019, doi:10.1044/2018_JSLHR-S-17-0495.

- N. Shoham, G. Lewis, G. Favarato, C. Cooper, “Prevalence of anxiety disorders and symptoms in people with hearing impairment: a systematic review,” Social Psychiatry and Psychiatric Epidemiology, 2019, doi:10.1007/s00127-018-1638-3.

- M. I. Wallhagen, W. J. Strawbridge, G. A. Kaplan, “6-year impact of hearing impairment on psychosocial and physiologic functioning,” 1996.

- A. D. Palmer, J. T. Newsom, K. S. Rook, “How does difficulty communicating affect the social relationships of older adults? An exploration using data from a national survey,” Journal of Communication Disorders, 2016, doi:10.1016/j.jcomdis.2016.06.002.

- L. C. Gellerstedt, B. Danermark, “Hearing impairment, working life conditions, and gender,” Scandinavian Journal of Disability Research, 2004, doi:10.1080/15017410409512654.

- J. Stevenson, D. McCann, P. Watkin, S. Worsfold, C. Kennedy, “The relationship between language development and behaviour problems in children with hearing loss,” Journal of Child Psychology and Psychiatry and Allied Disciplines, 2010, doi:10.1111/j.1469-7610.2009.02124.x.

- T. Most, “The effects of degree and type of hearing loss on children’s performance in class,” Deafness and Education International, 2004, doi:10.1179/146431504790560528.

- B. W. Hornsby, “The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands,” Ear and Hearing, 2013, doi:10.1097/AUD.0b013e31828003d8.

- M. A. Harvey, “Family Therapy with Deaf Persons: The Systemic Utilization of an Interpreter,” Family Process, 1984, doi:10.1111/j.1545-5300.1984.00205.x.

- P. Kumar, S. Kaur, “Sign Language Generation System Based on Indian Sign Language Grammar,” ACM Transactions on Asian and Low-Resource Language Information Processing (TALLIP), 19(4), 1–26, 2020.

- C. N. Asoegwu, L. U. Ogban, C. C. Nwawolo, “A preliminary report of the audiological profile of hearing impaired pupils in inclusive schools in Lagos state, Nigeria,” Disability, CBR and Inclusive Development, 2019, doi:10.5463/dcid.v30i2.821.

- R. Conrad, “Lip-reading by deaf and hearing children,” British Journal of Educational Psychology, 47(1), 60–65, 1977.

- J. F. Knutson, C. R. Lansing, “The relationship between communication problems and psychological difficulties in persons with profound acquired hearing loss,” Journal of Speech and Hearing Disorders, 1990, doi:10.1044/jshd.5504.656.

- F. Bakken, “SMS Use Among Deaf Teens and Young Adults in Norway,” in The Inside Text, 2005, doi:10.1007/1-4020-3060-6_9.

- B. Shirley, J. Thomas, P. Roche, “VoIPText: Voice chat for deaf and hard of hearing people,” in IEEE International Conference on Consumer Electronics – Berlin, ICCE-Berlin, 2012, doi:10.1109/ICCE-Berlin.2012.6336486.

- Google, “AI for Social Good– Google AI,” 2020.

- J. Cowls, T. King, M. Taddeo, L. Floridi, “Designing AI for Social Good: Seven Essential Factors,” SSRN Electronic Journal, 2019, doi:10.2139/ssrn.3388669.

- WHO, “Estimates,” 2018.

- K. Pieri, S. V. G. Cobb, “Mobile app communication aid for Cypriot deaf people,” Journal of Enabling Technologies, 2019, doi:10.1108/JET-12-2018-0058.

- A. Stratiy, “The Real Meaning of ‘Hearing Impaired’,” Deaf World: A Historical Reader and Primary Sourcebook, 203, 2001.

- T. K. Holcomb, “Deaf epistemology: The Deaf way of knowing,” American Annals of the Deaf, 2010, doi:10.1353/aad.0.0116.

- A. Ballin, The deaf mute howls, volume 1, Gallaudet University Press, 1998.

- P. V. Praveen Sundar, D. Ranjith, T. Karthikeyan, V. Vinoth Kumar, B. Jeyakumar, “Low power area efficient adaptive FIR filter for hearing aids using distributed arithmetic architecture,” International Journal of Speech Technology, 2020, doi:10.1007/s10772-020-09686-y.

- A. Holzinger, G. Searle, A. N. Witzer, “On some aspects of improving mobile applications for the elderly,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2007, doi:10.1007/978-3-540-73279-2_103.

- G. Kbar, A. Bhatia, M. H. Abidi, I. Alsharawy, “Assistive technologies for hearing, and speaking impaired people: a survey,” 2017, doi:10.3109/17483107.2015.1129456.

- A. Jaramillo-Alcazar, C. Guaita, J. L. Rosero, S. Lujan-Mora, “Towards an accessible mobile serious game for electronic engineering students with hearing impairments,” in EDUNINE 2018 – 2nd IEEE World Engineering Education Conference: The Role of Professional Associations in Contemporaneous Engineer Careers, Proceedings, 2018, doi:10.1109/EDUNINE.2018.8450948.

- C. Siebra, T. B. Gouveia, J. Macedo, F. Q. Da Silva, A. L. Santos, W. Correia, M. Penha, F. Florentin, M. Anjos, “Toward accessibility with usability: Understanding the requirements of impaired uses in the mobile context,” in Proceedings of the 11th International Conference on Ubiquitous Information Management and Communication, IMCOM 2017, 2017, doi:10.1145/3022227.3022233.

- B. Adipat, D. Zhang, “Interface design for mobile applications,” 2005.

- M. J. C. Samonte, R. A. Gazmin, J. D. S. Soriano, M. N. O. Valencia, “BridgeApp: An Assistive Mobile Communication Application for the Deaf and Mute,” in ICTC 2019 – 10th International Conference on ICT Convergence: ICT Convergence Leading the Autonomous Future, 2019, doi:10.1109/ICTC46691.2019.8939866.

- S. Wagner, “Intralingual Speech-to-text conversion in real-time: Challenges and Opportunities,” in Challenges of Multidimensional Translation Conference Proceedings, 2005.

- S. Rossignol, O. Pietquin, “Single-speaker/multi-speaker co-channel speech classification,” in Eleventh Annual Conference of the International Speech Communication Association, 2010.

- J. P. Campbell, “Speaker recognition: A tutorial,” Proceedings of the IEEE, 85(9), 1437–1462, 1997.

- H. Beigi, “Speaker recognition: Advancements and challenges,” New trends and developments in biometrics, 3–29, 2012.

- H. E. Secker-Walker, B. Liu, F. V. Weber, “Automatic speaker identification using speech recognition features,” 2017, US Patent 9,558,749.

- P. Matejka, O. Glembek, O. Novotny, O. Plchot, F. Grezl, L. Burget, J. H. Cernocky, “Analysis of DNN approaches to speaker identification,” in ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing – Proceedings, 2016, doi:10.1109/ICASSP.2016.7472649.

- S. S. Nathan, A. Hussain, N. L. Hashim, “Studies on deaf mobile application: Need for functionalities and requirements,” Journal of Telecommunication, Electronic and Computer Engineering, 2016.

- S. Hermawati, K. Pieri, “Assistive technologies for severe and profound hearing loss: Beyond hearing aids and implants,” Assistive Technology, 2019, doi:10.1080/10400435.2018.1522524.

- M. C. Offiah, S. Rosenthal, M. Borschbach, “Assessing the utility of mobile applications with support for or as replacement of hearing aids,” in Procedia Computer Science, 2014, doi:10.1016/j.procs.2014.07.079.

- M. B. Motlhabi, M. Glaser, M. Parker, W. D. Tucker, “SignSupport: A Limited Communication Domain Mobile Aid for a Deaf patient at the Pharmacy,” in Southern African Telecommunication Networks & Applications Conference, 2013.

- L. Slyper, M. K. Kim, Y. Ko, I. Sobek, “LifeKey: Emergency communication tool for the deaf,” in Conference on Human Factors in Computing Systems – Proceedings, 2016, doi:10.1145/2851581.2890629.

- Statistics South Africa (Stats SA), “Stats SA profiles persons with disabilities,” 2020.

- SANDA, “Homepage,” 2018.