Customer Satisfaction Recognition Based on Facial Expression and Machine Learning Techniques

Volume 5, Issue 4, Page No 594–599, 2020

Adv. Sci. Technol. Eng. Syst. J. 5(4), 594–599 (2020);

DOI: 10.25046/aj050470


Keywords: Facial expression, Customer emotion, Emotion recognition, Machine learning

Nowadays, customer satisfaction is important for businesses and organizations. Manual methods exist for measuring it, namely surveys and questionnaires distributed to customers. However, marketers and businesses are looking for quick ways to obtain effective and efficient feedback from their potential customers. In this paper, we propose a new method for facial emotion detection that recognizes customers' satisfaction using machine learning techniques. Using facial landmark points, we extract geometric features from customers' emotional faces in the form of distances between landmark points. In particular, these distances capture the difference between a neutral face and a face showing negative or positive feedback. We then classify these distances with different classifiers, namely Support Vector Machine (SVM), KNN, Random Forest, AdaBoost, and Decision Tree. We evaluated our method on the JAFFE dataset. The best-performing classifier, SVM, reaches an accuracy of 98.66%.

1. Introduction

This paper is an extension of work originally presented at the World Conference on Complex Systems (WCCS'19) [1]. We decided to develop this work because, over the last decade, we have observed that business success depends heavily on how customers perceive products and services [2]. Customer satisfaction can be measured with manual methods such as satisfaction surveys, interviews, and focus groups. These methods are inefficient in terms of cost and time, and the data they produce is not always reliable. Facial expressions are a means of non-verbal communication and a particular way of expressing our emotions and appreciation. In the context of customer satisfaction, a negative emotion is often related to a lower perceived quality of service. Facial expressions contribute 55% of the message when communicating with speech, and 70-95% of negative feedback can be understood verbally [3]. Companies have long been interested in understanding consumers' purchasing decision-making process [4]. In this work, we aim to detect customers' positive or negative emotions by analyzing their facial expressions. This information is useful in practice: for example, it can be used to compute statistics about products and to decide whether the way they are displayed should be changed. Well-received products can be brought to the fore, while poorly received products can be replaced or otherwise reconsidered. For this reason, we propose a new method for facial emotion detection that recognizes customer satisfaction using facial expressions and machine learning techniques.

The remainder of this paper is structured as follows. First, we outline related work. Second, we introduce our approach, a system for recognizing customer satisfaction using facial expressions and machine learning techniques, followed by the experimental results. In the last section, we summarize our work.

2. Related work

Currently, in the field of marketing and advertising, facial recognition is used to study consumers' emotions in two forms: positive and negative. Charles Darwin was the first to provide a robust basis for the study of emotions, describing their significance, their usefulness, and their role in communication. Many facial expressions of emotion have an adaptive significance and serve to communicate something, and most emotions are expressed alike on the human face regardless of culture or race [5]; his work "The Expression of the Emotions in Man and Animals" remains a reference for many scientists. In [6], the authors proposed a method that classifies muscle movements to code facial expressions. Facial movements are described by action units (AUs), each corresponding to the action of related muscles. This method, widely used to classify facial movements, is named the Facial Action Coding System (FACS).

Six universal basic emotions can be defined: happiness, sadness, anger, surprise, fear, and disgust. Various researchers have supported the universality of emotional expression: in reaction to similar stimuli, people generally produce similar expressions, with local variations [7]. Two families of methods are commonly used: motion-based and deformation-based. Motion-based methods take into account the change of the face over time [8, 9], whereas deformation-based methods compare a neutral image with another image [10, 11]. In [10], the authors use FACS features to classify emotions. Their evaluation counts the images classified correctly, weighted by the system. They found that 2% of the images (6 images) failed completely in tracking, and they reported an average correct recognition rate of 91%. In [11], a model that also uses speech content is developed for Human-Computer Interaction (HCI). To test it, the authors used data from 38 subjects covering 11 HCI-related affective states, and their bimodal fusion achieved an average recognition accuracy of 90%.

The review in [12] divides feature extraction into geometric and appearance-based approaches. Geometric features describe facial components such as the eyes, mouth, nose, and eyebrows, whereas appearance-based features are computed over specific regions of the face. The authors also compare SVM, ANN, KNN, HMM, Bayesian network, and sparse representation-based classifiers, and report that sparse representation-based classification performs best.

Deep learning algorithms are also used to extract patterns from facial expressions. In particular, a convolutional neural network (CNN) can be trained to detect features in faces and to classify these features into different emotions. Such a network contains two parts. The first part consists of convolutional layers, which apply the mathematical convolution operation; its output is a set of features extracted from the face. The second part is a feed-forward network that classifies the extracted features into facial expressions. Results based on this approach are reported in [13, 14]. For example, the authors of [13] apply KNN to classify emotions and obtain accuracies of 76.7442% on JAFFE and 80.303% on CK+, which illustrates the viability of their model. In [14], the authors also employ Decision Tree and Multi-Layer Perceptron classifiers, but find that the Convolutional Neural Network gives the highest recognition accuracy. Affdex by Affectiva [15] is one of the most widely used tools for visual emotion analysis: it gives the emotional trend of a subject through emotion detection [16]. Another is Microsoft Cognitive Services, based on the Azure platform. Both tools can perceive age, sex, and ethnicity, and rely on convolutional and/or recurrent neural networks [17]. In addition, the authors of [17] find that their proposed model improves facial expression recognition performance on the CK+ dataset. A multimodal affect recognition system was developed to determine whether a customer exhibits negative affect (being unhappy, disgusted, frustrated, or angry) or positive affect (being happy, satisfied, or content) toward the product being offered [18]. Its authors apply a Support Vector Machine and an emotion template to evaluate their algorithm.
They also test real-time performance, assessing the feedback to measure the accuracy and viability of multimodal recognition. In [19], customer satisfaction is assessed from specific facial emotions, namely "happy", "surprised", and "neutral". The authors evaluate their model on the Radboud Faces and Cohn-Kanade (CK+) datasets and report high recognition accuracy using Action Unit (AU) features with Support Vector Machine (SVM) and K-Nearest Neighbors (KNN) classifiers. In recent years, eye-tracking systems have been developed to analyze customers' visual search [20]. Companies invest heavily in developing and advertising products, and must justify the return on investment (ROI). Hence the interest in observing precisely how much a product attracts customers during its promotion, and in using this to improve promotion campaigns. Eye tracking is very promising for navigation advertising. In [21], the authors propose a computerized sales assistant that relies mainly on emotion detection. Finally, [22] presents a study of how product features affect customers' emotions, using 21 emotional categories of products.

3. Our Approach

In this section, we present an algorithm that recognizes customers' emotions toward a given product. To analyze a client's facial expressions and derive their satisfaction with the product offered, our algorithm relies on three essential steps. The first step acquires the image of the face, and the second extracts the expression in the form of geometric features. To obtain these features, our algorithm transforms the input image into geometric primitives such as points and curves. These primitives are located using distinctive facial components such as the eyes, mouth, nose, and chin, by measuring their relative positions, their widths, and other parameters such as the distances between the nose, eyes, and mouth. Our contribution is therefore a method for selecting the optimal distances, i.e. those that best discriminate between the facial expressions linked to emotion and customer satisfaction. In the last step, these distances are used to classify a client's emotions toward a product into three types: positive, negative, or neutral appreciation. The results can then be analyzed and provided to the company to understand how customers perceive the product.

The pipeline of our algorithm begins by detecting the face in an input image. We then locate the key points of the face by applying a mask, and compute the distances between these key points. The aim is to measure the variation of the face's shape in order to classify a customer's appreciation of a product. Finally, we employ a set of classifiers to evaluate our algorithm.
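The four stages can be sketched as a single function that chains them. This is a minimal illustration, not the authors' code: the stage functions (`detect_face`, `locate_landmarks`, `extract_features`, `classify`) and the `(left, top, right, bottom)` box format are hypothetical placeholders supplied by the caller. In practice, face detection and 68-point landmarking could come from a library such as dlib, but the paper does not state which tool was used.

```python
from typing import Callable, Optional, Sequence, Tuple

Point = Tuple[float, float]
Box = Tuple[int, int, int, int]  # hypothetical (left, top, right, bottom) face box


def classify_customer_face(
    image: object,
    detect_face: Callable[[object], Optional[Box]],
    locate_landmarks: Callable[[object, Box], Sequence[Point]],
    extract_features: Callable[[Sequence[Point]], Sequence[float]],
    classify: Callable[[Sequence[float]], str],
) -> Optional[str]:
    """Run face detection -> landmarks -> distances -> classifier.

    All four stages are hypothetical callables injected by the caller.
    Returns None when no face is found in the image.
    """
    box = detect_face(image)
    if box is None:
        return None
    landmarks = locate_landmarks(image, box)  # e.g. 68 (x, y) key points
    features = extract_features(landmarks)    # e.g. the 18 distances of Table 1
    return classify(features)                 # e.g. "satisfied", "neutral", "non-satisfied"
```

Injecting the stages keeps the control flow independent of any particular detector or classifier, so each stage can be swapped (for example, comparing SVM with KNN) without touching the pipeline.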

3.1. Features extraction (Geometric features)

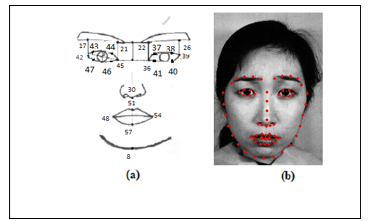

To represent the emotions of a face, this step locates the facial landmarks (for example, on the mouth, eyes, eyebrows, nose, and chin). The model shown in Figure 1 allows us to locate these landmarks. With this model, the positions of the landmarks deform as the facial expression changes.

Figure 1: (a) Facial model showing the positions of the chosen landmark points, (b) landmark points on the test subject's face


Table 1: Definitions of geometric features

| Distance | Description | Formula |
|---|---|---|
| D1 | Mouth width | d(X48, X54) |
| D2 | Mouth height | d(X51, X57) |
| D3 | Chin to mouth distance | d(X8, X57) |
| D4 | Chin to nose distance | d(X8, X30) |
| D5 | Left eyebrow width | d(X17, X21) |
| D6 | Right eyebrow width | d(X22, X26) |
| D7 | Left eye width | d(X42, X45) |
| D8 | Right eye width | d(X36, X39) |
| D9 | Left eye height | d(X43, X47) |
| D10 | Left eye height | d(X44, X46) |
| D11 | Right eye height | d(X37, X41) |
| D12 | Right eye height | d(X38, X40) |
| D13 | Outer left eyebrow distance to eye | d(X17, X42) |
| D14 | Outer right eyebrow distance to eye | d(X26, X39) |
| D15 | Inner right eyebrow distance to eye | d(X22, X36) |
| D16 | Inner left eyebrow distance to eye | d(X21, X45) |
| D17 | Nose distance to left eye | d(X45, X30) |
| D18 | Nose distance to right eye | d(X36, X30) |

In total, we use twenty-two facial landmark points to calculate eighteen distances, so each face is represented by a vector of eighteen values. The selected distances are:

- Eyes (six features): left eye width, right eye width, two left eye heights, two right eye heights
- Mouth (two features): mouth width, mouth height
- Chin (two features): chin to nose distance, chin to mouth distance
- Eyebrows (six features): left eyebrow width, right eyebrow width, outer left eyebrow distance to eye, inner left eyebrow distance to eye, outer right eyebrow distance to eye, inner right eyebrow distance to eye
- Nose (two features): nose distance to left eye, nose distance to right eye
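The feature extraction of Table 1 can be sketched as follows. This assumes that the indices X0 to X67 are 0-based and follow the common 68-point landmark numbering (consistent with the 68-element vectors used in the experiments), and that d(·,·) is the Euclidean distance in pixels; the paper does not say whether distances are normalized, for example by face size, so none is applied here.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Landmark index pairs for D1..D18, copied from Table 1
# (assumed 0-based, 68-point numbering).
DISTANCE_PAIRS = [
    (48, 54), (51, 57), (8, 57), (8, 30),    # D1-D4: mouth and chin
    (17, 21), (22, 26), (42, 45), (36, 39),  # D5-D8: eyebrow and eye widths
    (43, 47), (44, 46), (37, 41), (38, 40),  # D9-D12: eye heights
    (17, 42), (26, 39), (22, 36), (21, 45),  # D13-D16: eyebrow-to-eye distances
    (45, 30), (36, 30),                      # D17-D18: nose-to-eye distances
]


def geometric_features(landmarks: Sequence[Point]) -> List[float]:
    """Return the 18 Euclidean distances D1..D18 for one face (raw, unnormalized)."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmark points")
    return [math.dist(landmarks[a], landmarks[b]) for a, b in DISTANCE_PAIRS]
```

Keeping the pairs in one list makes the feature definition auditable against Table 1 and easy to change when experimenting with other distance subsets.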

3.2. Training and classification

The geometric features presented in the previous section are classified into three classes according to customer satisfaction, using widely used machine learning classifiers: SVM, Random Forest, Decision Tree, KNN, and AdaBoost.

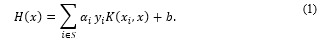

SUPPORT VECTOR MACHINE (SVM): SVM is a discriminative algorithm used to build supervised models for classification or regression [23]. Here, we aim to find a separator that distinguishes the neutral, positive, and negative emotion classes; this separator is called a hyperplane. To find a well-performing hyperplane, we tested several kernels and found that the RBF kernel works best for our model. The RBF kernel between an input vector $x$ and a support vector $x_i$ uses a positive real parameter $\sigma$ specified a priori: $K(x, x_i) = \exp\left(-\frac{\lVert x - x_i \rVert^2}{2\sigma^2}\right)$. The hyperplane equation is then computed from the set $S$ of support vectors $x_i$, the bias $b$, and the Lagrange multiplier $\alpha_i$ associated with each support vector:

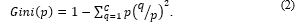
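For illustration, the sketch below evaluates a trained kernel SVM in its standard dual form, $f(x) = \sum_{i \in S} \alpha_i y_i K(x_i, x) + b$, where $y_i \in \{-1, +1\}$ is the label of support vector $x_i$, and predicts the side of the hyperplane on which $x$ falls. It assumes the RBF form $\exp(-\lVert x - x_i\rVert^2 / (2\sigma^2))$; some texts write the kernel with $\sigma^2$ or a $\gamma$ parameter instead. Only the binary case is shown: the support vectors, multipliers, and bias in the usage lines are hypothetical values, training (solving the dual problem) is omitted, and the three satisfaction classes would need a multi-class scheme such as one-vs-one on top.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], xi: Sequence[float], sigma: float) -> float:
    """K(x, xi) = exp(-||x - xi||^2 / (2 sigma^2)), with sigma > 0 fixed a priori."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, xi))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def svm_decision(x, support_vectors, alphas, labels, b, sigma) -> float:
    """Signed score sum_{i in S} alpha_i * y_i * K(x_i, x) + b."""
    return sum(a * y * rbf_kernel(x, xi, sigma)
               for xi, a, y in zip(support_vectors, alphas, labels)) + b


def svm_predict(x, support_vectors, alphas, labels, b, sigma) -> int:
    """Binary prediction in {-1, +1}: which side of the hyperplane x lies on."""
    score = svm_decision(x, support_vectors, alphas, labels, b, sigma)
    return 1 if score >= 0 else -1


# Hypothetical toy model with two support vectors (not trained on JAFFE).
sv = [[0.0, 0.0], [2.0, 2.0]]
print(svm_predict([0.1, 0.0], sv, [1.0, 1.0], [1, -1], 0.0, 1.0))  # 1
print(svm_predict([2.0, 2.1], sv, [1.0, 1.0], [1, -1], 0.0, 1.0))  # -1
```

Each prediction costs one kernel evaluation per support vector, which is why a compact support set matters for real-time use.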

RANDOM FOREST: Random Forest [24] is an ensemble algorithm that combines many decision trees and classifies by majority vote. When building a decision tree, either the Gini index or the gain ratio can be used to choose the splits; in Random Forest, we use only the Gini index to select the most discriminative attributes [25]. The Gini index of a node is computed from the probability $p_k$ that a case selected at that node belongs to class $k$: $\text{Gini} = 1 - \sum_{k} p_k^2$.

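The Gini index $1 - \sum_k p_k^2$ is the quantity each tree uses to choose its splits. The plain-Python sketch below computes it for a node and picks the threshold on one feature that minimizes the size-weighted Gini impurity of the two child nodes. It illustrates the split criterion only: a full random forest would repeat this over random feature subsets of bootstrap samples, grow many trees, and take a majority vote. The feature values in the usage lines are made up.

```python
from collections import Counter
from typing import Hashable, Sequence


def gini_index(labels: Sequence[Hashable]) -> float:
    """Gini impurity 1 - sum_k p_k^2 of a node holding these class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_gini(values: Sequence[float], labels: Sequence[Hashable], threshold: float) -> float:
    """Size-weighted Gini impurity after splitting one feature at `threshold`."""
    left = [y for v, y in zip(values, labels) if v <= threshold]
    right = [y for v, y in zip(values, labels) if v > threshold]
    n = len(labels)
    return len(left) / n * gini_index(left) + len(right) / n * gini_index(right)


def best_threshold(values: Sequence[float], labels: Sequence[Hashable]) -> float:
    """Observed value whose split gives the lowest weighted Gini impurity."""
    return min(sorted(set(values)), key=lambda t: split_gini(values, labels, t))


# Hypothetical mouth-width values (D1) for four faces:
values = [40.0, 42.0, 55.0, 57.0]
labels = ["neutral", "neutral", "satisfied", "satisfied"]
print(best_threshold(values, labels))  # 42.0
```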

K NEAREST NEIGHBORS (KNN): KNN [26] classifies an example using its k closest neighbors. It can be used for classification or regression. The example is assigned the class held by the majority of its k nearest neighbors: we compute the distances between the example and the training examples, and keep the k examples with the smallest distances.
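A minimal KNN classifier matching this description is sketched below, assuming Euclidean distance between feature vectors (the paper does not name the metric) and a hypothetical toy training set. Vote ties are broken by the order in which labels first appear among the nearest neighbors.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def knn_predict(train_X: Sequence[Sequence[float]], train_y: Sequence[Hashable],
                x: Sequence[float], k: int = 3) -> Hashable:
    """Majority label among the k training vectors closest (Euclidean) to x."""
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training examples")
    # Sort (distance, label) pairs so the nearest examples come first.
    dists = sorted((math.dist(xi, x), yi) for xi, yi in zip(train_X, train_y))
    votes = Counter(y for _, y in dists[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D toy data standing in for 18-D distance vectors.
train_X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]]
train_y = ["neutral"] * 3 + ["satisfied"] * 3
print(knn_predict(train_X, train_y, [0.2, 0.3], k=3))  # neutral
```

Because KNN relies directly on distances, features on very different scales (for example, mouth width versus eye height) may dominate the vote unless they are standardized first.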

ADABOOST (ADAPTIVE BOOSTING): AdaBoost is a boosting classifier: it iteratively combines multiple classifiers, building a strong classifier from several weak (poorly performing) ones. Any classifier that accepts weighted examples can be used as the base classifier of AdaBoost. AdaBoost must satisfy two conditions:

- Training must be done on weighted examples.

- At each iteration, the algorithm fits these weighted examples as well as possible by minimizing the weighted error.
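The two conditions can be seen in the sketch below of binary AdaBoost with decision stumps as weak learners. The stump base learner is our choice for illustration (the paper does not name its base classifier), labels are assumed to be $-1/+1$, and the three satisfaction classes would require a multi-class variant (for example SAMME) or a one-vs-rest wrapper. Each round trains a stump on the current example weights, gives it a vote $\alpha = \frac{1}{2}\ln\frac{1 - \varepsilon}{\varepsilon}$ from its weighted error $\varepsilon$, and then increases the weights of misclassified examples.

```python
import math


def train_stump(X, y, w):
    """Decision stump minimizing weighted error: (error, feature, threshold, polarity)."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            for polarity in (1, -1):  # label predicted when row[j] <= t
                err = sum(wi for wi, row, yi in zip(w, X, y)
                          if (polarity if row[j] <= t else -polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, polarity)
    return best


def stump_predict(stump, row):
    _, j, t, polarity = stump
    return polarity if row[j] <= t else -polarity


def adaboost(X, y, n_rounds=10):
    """Binary AdaBoost (labels -1/+1) with decision stumps as weak learners."""
    n = len(X)
    w = [1.0 / n] * n  # condition 1: training on weighted examples, uniform at first
    ensemble = []
    for _ in range(n_rounds):
        stump = train_stump(X, y, w)  # condition 2: minimize the weighted error
        err = stump[0]
        if err >= 0.5:  # weak learner no better than chance: stop boosting
            break
        alpha = 0.5 * math.log((1 - err) / max(err, 1e-10))
        ensemble.append((alpha, stump))
        if err == 0:  # perfect weak learner: nothing left to correct
            break
        # Raise weights of misclassified examples, lower the others, renormalize.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for wi, yi, row in zip(w, y, X)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def adaboost_predict(ensemble, row):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


# Hypothetical one-feature toy data.
X = [[1.0], [2.0], [3.0], [4.0]]
y = [-1, -1, 1, 1]
model = adaboost(X, y)
print([adaboost_predict(model, row) for row in ([1.5], [3.5])])  # [-1, 1]
```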

3.3. Experimental Results

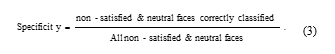

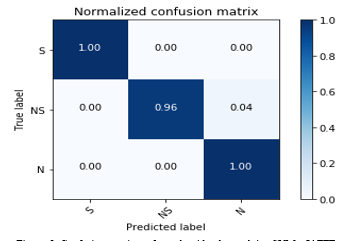

In this work, the JAFFE dataset is used to test the performance of our method. The database contains 213 images of 7 facial expressions (6 basic facial expressions + 1 neutral) posed by 10 Japanese female models, and each image was rated on 6 emotion adjectives by 60 Japanese subjects. The database was planned and assembled by Michael Lyons, Miyuki Kamachi, and Jiro Gyoba. To use this dataset with our algorithm, we grouped its images into three classes: satisfied, non-satisfied, and neutral persons. Each face is represented by a vector of 68 elements, each element being the coordinates of a key point computed with a mask. After applying these operations to all JAFFE images, we calculate the distances defined in Table 1, so the input to each classifier (SVM, Random Forest, KNN, Decision Tree, and AdaBoost) is a vector of eighteen distances. We then computed the confusion matrix of each classifier, together with its accuracy, sensitivity, and specificity, defined as follows:

- Specificity is the proportion of non-satisfied and neutral faces that are not classified as satisfied: $\text{Specificity} = \frac{TN}{TN + FP}$.

- Sensitivity is the proportion of satisfied faces classified correctly, out of all satisfied faces (those classified correctly, those classified as neutral, and those classified as non-satisfied): $\text{Sensitivity} = \frac{TP}{TP + FN}$.

- Accuracy is the proportion of all faces (satisfied, neutral, and non-satisfied) that are classified correctly: $\text{Accuracy} = \frac{\text{correctly classified faces}}{\text{all faces}}$.

Here a satisfied face is the positive case: TP counts satisfied faces classified as satisfied, FN satisfied faces classified as neutral or non-satisfied, FP neutral or non-satisfied faces classified as satisfied, and TN neutral or non-satisfied faces not classified as satisfied.
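These three measures can all be read off a 3×3 confusion matrix. The sketch below assumes rows are true classes and columns predicted classes in the order satisfied, neutral, non-satisfied, with "satisfied" as the positive class; the matrix in the usage lines is hypothetical and does not reproduce the paper's results.

```python
from typing import Dict, Sequence

CLASSES = ("satisfied", "neutral", "non-satisfied")  # assumed row/column order


def satisfaction_metrics(cm: Sequence[Sequence[int]], positive: int = 0) -> Dict[str, float]:
    """Accuracy, plus sensitivity/specificity of class `positive` versus the rest.

    cm[i][j] counts faces of true class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    tp = cm[positive][positive]
    fn = sum(cm[positive]) - tp                         # satisfied faces sent elsewhere
    fp = sum(cm[i][positive] for i in range(k)) - tp    # other faces predicted satisfied
    tn = total - tp - fn - fp                           # other faces not predicted satisfied
    return {
        "accuracy": correct / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


# Hypothetical counts (not the paper's data).
cm = [[9, 1, 0],
      [0, 10, 0],
      [1, 0, 9]]
print(satisfaction_metrics(cm))  # accuracy 28/30, sensitivity 9/10, specificity 19/20
```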

Figure 2: Confusion matrix of our algorithm using SVM on the JAFFE dataset


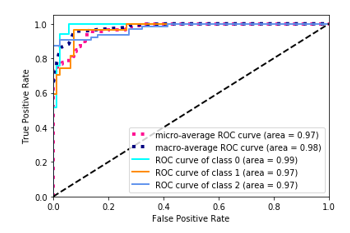

We also computed the ROC (Receiver Operating Characteristic) curve, which shows how well our algorithm distinguishes between classes, i.e. how well it separates satisfied, neutral, and non-satisfied faces.
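A one-vs-rest ROC curve for the "satisfied" class can be computed as sketched below: faces are ranked by a classifier score (for an SVM, for example, its decision value), the threshold is lowered one distinct score at a time, and the resulting false positive rate and true positive rate are recorded; the area under the curve is taken with the trapezoidal rule. The scores in the usage line are hypothetical, and the paper does not state how its ROC curve was produced, so this is only one standard construction.

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float], is_positive: Sequence[bool]) -> List[Tuple[float, float]]:
    """One-vs-rest ROC curve as (false positive rate, true positive rate) pairs.

    scores: classifier score that each face is 'satisfied' (higher = more confident).
    is_positive: True where the face really is 'satisfied'.
    """
    pos = sum(1 for p in is_positive if p)
    neg = len(is_positive) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative face")
    ranked = sorted(zip(scores, is_positive), key=lambda p: p[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Lower the threshold to this score: tied faces switch to "positive" together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the ROC curve with the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


print(auc(roc_points([0.9, 0.8, 0.3, 0.1], [True, True, False, False])))  # 1.0
```

An AUC of 1.0 means every satisfied face scores above every other face, while 0.5 corresponds to chance-level ranking.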

Table 2: Accuracy, sensitivity, and specificity of our algorithm using SVM, KNN, Decision Tree, Random Forest, and AdaBoost on the JAFFE dataset

| Dataset | Classifier | Accuracy | Sensitivity | Specificity |
|---|---|---|---|---|
| JAFFE | SVM (RBF kernel) | 98.66% | 100% | 98% |
| JAFFE | KNN | 88.87% | 90% | 75.75% |
| JAFFE | Decision Tree | 98.66% | 100% | 98% |
| JAFFE | Random Forest | 77.76% | 97% | 62.77% |
| JAFFE | AdaBoost | 98.66% | 100% | 98% |

We found that our algorithm correctly classified 97% of satisfied faces, 91% of non-satisfied faces, and 100% of neutral faces.

After computing the confusion matrices, we computed the sensitivity, specificity, and accuracy of our algorithm for every classifier: SVM with an RBF kernel, KNN, Decision Tree, Random Forest, and AdaBoost. Table 2 shows the results of each classifier on the JAFFE dataset. SVM with an RBF kernel reaches the highest accuracy, 98.66%, a score matched by Decision Tree and AdaBoost.

Our results show that geometric features provide an effective model for measuring customer satisfaction. Our algorithm measures the variation of the face's shape by computing distances between facial landmarks, and the classification relies on how these distances differ between satisfied, neutral, and non-satisfied faces. This is why the algorithm detects customer satisfaction well from customers' facial expressions, reaching up to 98.66% accuracy on JAFFE.

Figure 3: ROC curve obtained by applying our algorithm with SVM on the JAFFE dataset


4. Conclusion

One of the greatest challenges in marketing is ensuring customer satisfaction. We have proposed a new method that assesses customer satisfaction by classifying facial expressions with different classifiers: SVM, Random Forest, KNN, Decision Tree, and AdaBoost. The geometric features are distances between facial landmark points, so a vector of eighteen values represents a customer's facial expression. We tested our method on the JAFFE database and obtained good results, with up to 98.66% accuracy. In future work, we will develop a multimodal algorithm that includes speech and motion recognition.

- M.S. Bouzakraoui, S. Abdelalim, Y.A. Abdssamed, “Appreciation of Customer Satisfaction Through Analysis Facial Expressions and Emotions Recognition”, in 2019 4th Edition of World Conference on Complex Systems, Ouarzazate, Morocco, 2019. https://doi.org/10.1109/ICoCS.2019.8930761

- F. Conejo, “The Hidden Wealth of Customers: Realizing the Untapped Value of your Most Important Asset”, Journal of Consumer Marketing, 30(5), 463-464, 2013. https://doi.org/10.1108/JCM-05-2013-0570.

- A. Mehrabian, “Communication without words”, Psychology Today, 1968.

- S. M. C. Loureiro, “Consumer-Brand Relationship: Foundation and State-of-the-Art”, Customer-Centric Marketing Strategies: Tools for Building Organizational Performance, 21, 2013. https://doi.org/10.4018/978-1-4666-2524-2.ch020

- C. Darwin, The expression of the emotions in man and animals, D. Appleton and Company, London, 1913

- J. Hamm, C.G. Kohler, R.C. Gur, R. Verma, “Automated Facial Action Coding System for dynamic analysis of facial expressions in neuropsychiatric disorders”, Journal of neuroscience methods, 200(2), 237-256, 2011. https://doi.org/10.1016/j.jneumeth.2011.06.023

- A. A. Marsh, H. A. Elfenbein, N. Ambady, “Nonverbal ‘accents’: cultural differences in facial expressions of emotion”, Psychological Science, 14(4), 373-376, 2003. https://doi.org/10.1111/1467-9280.24461

- M. Nishiyama, H. Kawashima, T. Hirayama, “Facial Expression representation based on timing structures in faces”, in 2005 workshop on Analysis Modeling of faces and Gestures, Beijing, China, 2005. https://doi.org/10.1007/11564386_12

- K.K. Lee, Human expression and intention via motion analysis: Learning, recognition and system implementation, The Chinese University of Hong Kong, 2004.

- M. Pantic, L.J.M. Rothkrantz, “An expert system for multiple emotional classification of facial expressions” in 1999 IEEE International Conference on Tools with Artificial Intelligence, Chicago, IL, USA, 1999. https://doi.org/10.1109/TAI.1999.809775

- Z. Zeng, J.T., M. Liu, T. Zhang, N. Rizzoto, Z. Zhang, “Bimodal HCI-related Affect Recognition” in 2004 International Conference Multimodal Interfaces, State College, PA, USA, 2004. https://doi.org/10.1145/1027933.1027958

- X. Zhao, S. Zhang, “A review on facial expression recognition: feature extraction and classification” IETE Technical Review, 33(5), 505-517, 2016. https://doi.org/10.1080/02564602.2015.1117403

- K. Shan, J. Guo, W. You, D. Lu, R. Bie, “Automatic Facial Expression Recognition Based on a Deep Convolutional-Neural Network Structure” in 2017 IEEE 15th Int. Conf. Softw. Eng. Res. Manag. Appl. 123–128, London, United Kingdom, 2017. https://doi.org/10.1109/SERA.2017.7965717

- T.A. Rashid, “Convolutional neural networks based method for improving facial expression recognition” in 2016 Intelligent Systems Technologies and Applications, Jaipur, India, 2016. https://doi.org/10.1007/978-3-319-47952-1

- S. Ceccacci, A. Generosi, L. Giraldi, M. Mengoni, “Tool to Make Shopping Experience Responsive to Customer Emotions”, International Journal of Automation Technology, 12(3), 319-326, 2018. https://doi.org/10.20965/ijat.2018.p0319

- D. Matsumoto, M. Assar, “The effects of language on judgments of universal facial expressions of emotion” Journal of Nonverbal Behavior, 16, 85-99, 1992. https://doi.org/10.1007/BF00990324

- R. Cui, M. Liu, M. Liu, “Facial expression recognition based on ensemble of multiple CNNs” in 2016 Chinese Conf. of Biometric Recognition, Chengdu, China, 2016. https://doi.org/10.1007/978-3-319-46654-5_56

- L. Huang, T. Chuan-Hoo, W. Ke, K. Wei, “Comprehension and Assessment of Product Reviews: A Review-Product Congruity Proposition” Journal of Management Information Systems, 30(3), 311-343, 2014. https://doi.org/10.2753/MIS0742-1222300311

- M. Slim, R. Kachouri, A. Atitallah, “Customer satisfaction measuring based on the most significant facial emotion”, in 2018 15th IEEE International Multi-Conference on Systems, Signals & Devices, Hammamet, Tunisia, 2018. https://doi.org/10.1109/SSD.2018.8570636

- M. Wedel, R. Pieters, Review of Marketing Research, 2008.

- G. S. Shergill, A. Sarrafzadeh, O. Diegel, A. Shekar, “Computerized sales assistants: the application of computer technology to measure consumer interest – a conceptual framework”, Journal of Electronic Commerce Research, 9(2), 176 – 191, 2008. DOI:10.1.1.491.5130

- P. M. A. Desmet, P. Hekkert, “The basis of product emotions”, Pleasure with products: Beyond usability. ed. WS Green; PW Jordan, 61-67, 2002. DOI:10.1201/9780203302279.ch4

- C. Cortes, V. Vapnik, “Support-Vector Networks”, Machine Learning, 20, 273-297, 1995. https://doi.org/10.1023/A:1022627411411

- L. Breiman, “Random Forests”, Machine Learning, 45, 5-32, 2001. https://doi.org/10.1023/A:1010933404324

- L. E. Raileanu, K. Stoffel, “Theoretical Comparison between the Gini Index and Information Gain Criteria”, Annals of Mathematics and Artificial Intelligence, 41, 77-93, 2004. https://doi.org/10.1023/B:AMAI.0000018580.96245.c6

- P. J. García-Laencina, J.-L. Sancho-Gómez, A. R. Figueiras-Vidal, M. Verleysen, “K nearest neighbours with mutual information for simultaneous classification and missing data imputation”, Neurocomputing, 72(7-9), 1483-1493, 2009. https://doi.org/10.1016/j.neucom.2008.11.026
