Artificial Intelligence Approach for Target Classification: A State of the Art

Volume 5, Issue 4, Page No 445-456, 2020

Author’s Name: Maroua Abdellaoui1,a), Dounia Daghouj1, Mohammed Fattah2, Younes Balboul1, Said Mazer1, Moulhime El Bekkali1


1LIASSE-ENSA, Sidi Mohamed Ben Abdellah University, Fes, 30050, Morocco

2EST, Moulay Ismail University, Meknes, 50050, Morocco

a)Author to whom correspondence should be addressed. E-mail: Abdellaoui.marwa@gmail.com

Adv. Sci. Technol. Eng. Syst. J. 5(4), 445-456 (2020); DOI: 10.25046/aj050453

Keywords: Artificial Intelligence, Machine Learning, Deep Learning, Feature Extraction, SVD, SVM, RPCA, Sparse Coding


The classification of static or mobile objects, from a signal or an image containing information about their structure or form, is a constant concern of specialists in the electronics field. The remarkable progress made in recent years, particularly in the development of neural networks and artificial intelligence systems, has further accentuated this trend. The fields of application and potential uses of artificial intelligence are increasingly diverse: natural language understanding, visual recognition, robotics, autonomous systems, machine learning, etc.

This paper is a state of the art on the classification of radar signals. It focuses on the contribution of artificial intelligence to this classification, without forgetting target tracking, by reviewing the different feature extractors, classifiers and existing deep learning identification algorithms. We also detail the process by which this classification is carried out.

Received: 15 May 2020, Accepted: 17 July 2020, Published Online: 09 August 2020

1. Introduction

The desire to organize in order to simplify has progressively evolved towards the ambition of classifying in order to understand and, why not, to predict. This development has led to several new techniques capable of satisfying this need. This is how artificial intelligence and the study of its different techniques have become a trend that attracts the interest of researchers in different fields.

Neural networks consist of artificial neurons or nodes that are analogous to biological neurons [1]-[3]. They are the result of an attempt to design a very simplified mathematical model of the human brain based on the way we learn and correct our mistakes. Machine learning allows us to obtain computers capable of self-improvement through experience [4].

Machine learning is today one of the most developed technical fields, bringing together computer science and statistics and driving the development of artificial intelligence and data science [5]. Owing to the explosion in the amount of information available online at low cost, machine learning has made tremendous progress, resulting in the continuous development of new algorithms [6]. Data-intensive machine learning methods are now used in all fields of technology and science [1], [5], [6].

Many companies and researchers today claim to use artificial intelligence when, in fact, the term does not apply to the technologies they use. In the same vein, there is some confusion between artificial intelligence and the concept of Machine Learning, not to mention Deep Learning. This paper sheds light on these different concepts by detailing each of them, and then focuses on the different algorithms employed to extract features and classify detected objects.

This work complements the various state-of-the-art studies already carried out in the AI domain [1]-[3] and is mainly concerned with the contribution of AI to road safety through radar target classification (pedestrians, cyclists…).

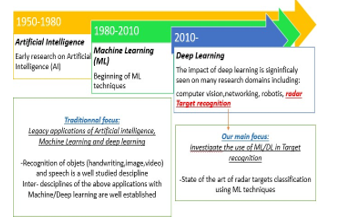

Figure 1: The evolution of artificial intelligence and the value of our work.


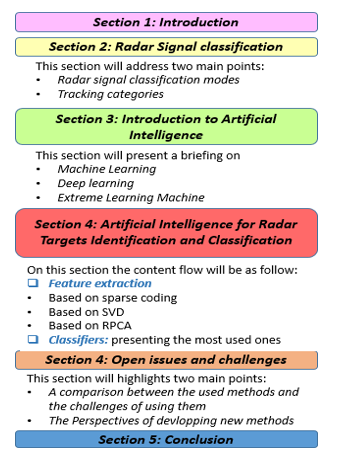

To this end, section 2 presents a study of the classification of radar signals and targets, without forgetting tracking. Section 3 then focuses on artificial intelligence, detailing its different concepts in order to eliminate any confusion. We also study the feature extractors and the classification algorithms generally used in the overall classification process. A summary bringing together the results of research work in the literature in this field is given before concluding, thus opening the way to many perspectives.

Figure 2: Content flow diagram


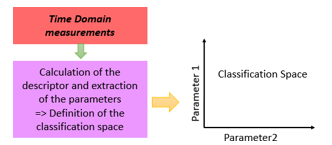

2. Radar Signal Classification

Classification can be defined as the search for a distribution of a set of elements into several categories [7]. Each category, called a class, groups together individuals who share similar characteristics. The objective is to obtain the most homogeneous and distinct classes possible. Identifying categories requires careful definition of a space in which the classification problem must be resolved [7, 8]. Such a space is often represented by vectors of parameters extracted from the elements to be classified, as shown in Figure 3, and the classification is carried out by adopting a probabilistic, discriminative, neuronal or even stochastic approach.

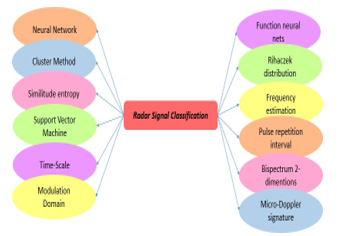

The method of classification of radar signals is presented in Figure 4, which includes the neural network procedures. The continuous development and improvement of neural networks have made it possible to clearly understand the potential and the limits of this technique in several fields, among them remote sensing, signal processing, and the identification and characterization of targets [1, 9].

Among these modes of classification we find the clustering method, which seeks to partition a data set so that the data in the same group exhibit common properties or characteristics that distinguish them from the data in the other groups [10, 11]. As such, clustering (or regrouping) is a research subject in learning that stems from a more general problem, namely classification. A distinction is made between supervised and unsupervised classification. In the first case, the aim is to learn to assign a new individual to one of a set of predefined classes, from training data given as (individual, class) pairs. Unsupervised classification, derived from statistics and more specifically from data analysis, consists, as its name suggests, of learning without a supervisor. From a population, the aim is to extract classes or groups of individuals with common characteristics, the number and definition of the classes not being given a priori. Clustering methods are used in many application domains, ranging from biology (classification of proteins or genome sequences) to document analysis (texts, images, videos) and the analysis of usage traces. Many unsupervised classification methods have been published in the literature, and it is therefore hard to give an exhaustive list, despite the numerous articles that have attempted to structure this very rich field, which has been constantly evolving for more than 40 years [12-18].
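As an illustration of unsupervised classification in a two-parameter space, the following is a minimal k-means sketch. The choice of k-means, the synthetic data and the names `kmeans`, `group_a` and `group_b` are assumptions made for this example; the surveyed works may rely on other clustering methods.

```python
import numpy as np

def kmeans(points, k, n_iter=50, seed=0):
    """Plain k-means on an (n_samples, n_features) array; returns (centroids, labels)."""
    rng = np.random.default_rng(seed)
    # Start from k distinct data points chosen at random.
    centroids = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign every point to its nearest centroid.
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        new_centroids = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return centroids, labels

# Hypothetical data: two well-separated groups described by two parameters each.
rng = np.random.default_rng(1)
group_a = rng.normal(loc=[0.0, 0.0], scale=0.3, size=(50, 2))
group_b = rng.normal(loc=[5.0, 5.0], scale=0.3, size=(50, 2))
data = np.vstack([group_a, group_b])
centroids, labels = kmeans(data, k=2)
```

No class labels are supplied here: the number of groups is the only prior information, which is what distinguishes this setting from supervised classification.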

Figure 3: Illustration of the classification approach if two parameters are used to define the space in which the classification problem must be solved.


Entropy of similarity is a method derived from Shannon’s entropy. Shannon gave the name “entropy” to his definition of the amount of information. Following Shannon, we therefore speak interchangeably of the quantity of information generated by a message and of the entropy of the message source. The entropy-based classification method then makes it possible to answer several questions encountered in all sociological or scientific surveys, namely: the measurement of the correlation between characters and their selectivity, the homogeneity of groups, and the formation of new homogeneous classes [19].
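For reference, Shannon's entropy of a discrete source can be computed as in the short sketch below. The function name `shannon_entropy` and the toy messages are assumptions for illustration; the entropy-of-similarity method of [19] builds on this quantity but is not reproduced here.

```python
import math
from collections import Counter

def shannon_entropy(symbols):
    """Shannon entropy, in bits per symbol, of the empirical distribution of `symbols`."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

uniform_bits = shannon_entropy("abcd")   # four equally likely symbols
constant_bits = shannon_entropy("aaaa")  # a single repeated symbol carries no information
```

A perfectly homogeneous group has zero entropy, which is why entropy can serve as a measure of class homogeneity.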

Entropy methods are dedicated to the analysis of irregular and complex signals [20]. Support vector machines (SVM) [7, 8], or large-margin separators, are a set of supervised learning techniques intended to solve discrimination and regression problems. They are an extension of linear classifiers. They are used because they can handle large amounts of information, have a small number of hyperparameters, and offer good performance and reliability. SVMs have been used in many fields [21-23].

According to the data, the performance of support vector machines is of the same order as, or even better than, that of a neural network or of a Gaussian mixture model. Other approaches rely on timescale characteristics [24], the modulation domain [25], basis function neural networks [26], the Rihaczek distribution and the Hough transform [27], frequency estimation [28], the pulse repetition interval [31], the two-dimensional bispectrum [32], etc. The Hough transform is a pattern recognition technique invented in 1959 by Paul Hough, subject to a patent, and used in the processing of digital images. Its simplest application detects lines present in an image, but the technique can be modified to detect other geometric shapes; this extended form of the Hough transform was developed by Richard Duda and Peter Hart in 1972 [28-30].

These classification methods are the subject of research in several disciplines [22, 30, 33-35]. To operate properly in complex signal environments with many radar transmitters, signal classification should be able to handle undetermined, corrupted and ambiguous measurements reliably.

For radar-based classification of vehicle type and computationally efficient speed determination, Cho and Tseng [35] proposed an improved algorithm intended for real-time smart transport applications, with eight mode settings for the categorization of radar signals.

In general, during a transmission the scattered waves depend on the distance to the target, so to measure these waves we need to measure this distance. In the literature, the scattered waves received are processed by algorithms to detect the presence, the distance and the type of the target [36-40]. Among the target classification methods are the AALF, AALP and ABP methods [24].
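The line-detection case of the Hough transform can be sketched as follows. This is a minimal illustration using the usual (rho, theta) parameterisation; the function name `hough_lines`, the synthetic image and the one-degree angular resolution are assumptions for the example.

```python
import numpy as np

def hough_lines(binary_image, n_theta=180):
    """Accumulate votes in (rho, theta) space for every non-zero pixel."""
    rows, cols = binary_image.shape
    diag = int(np.ceil(np.hypot(rows, cols)))          # largest possible |rho|
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)
    accumulator = np.zeros((2 * diag + 1, n_theta), dtype=int)
    ys, xs = np.nonzero(binary_image)
    for x, y in zip(xs, ys):
        # Each pixel votes for all lines rho = x cos(theta) + y sin(theta) through it.
        rhos = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        accumulator[rhos + diag, np.arange(n_theta)] += 1
    return accumulator, thetas, diag

# Hypothetical test image containing a single horizontal line at y = 30.
image = np.zeros((100, 100), dtype=int)
image[30, :] = 1
accumulator, thetas, diag = hough_lines(image)
rho_idx, theta_idx = np.unravel_index(accumulator.argmax(), accumulator.shape)
best_rho = rho_idx - diag
best_theta_deg = np.rad2deg(thetas[theta_idx])
```

The accumulator cell with the most votes identifies the dominant line; here it corresponds to a distance of 30 pixels from the origin along the 90 degree normal direction.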

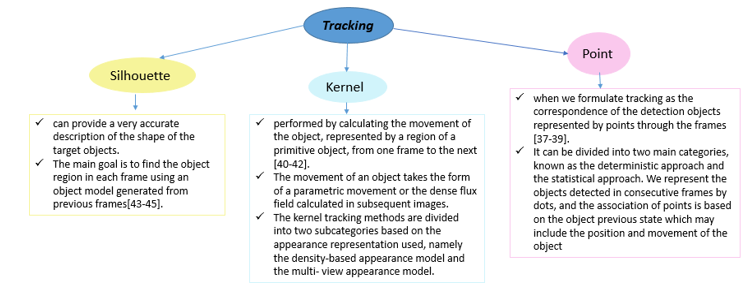

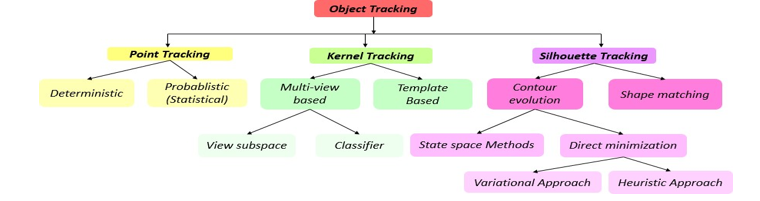

Tracking is a very important element in this process. There are different tracking methods summarized in Figure 5 and Figure 6.

3. Introduction to Artificial Intelligence

Artificial intelligence (AI) has come to the fore in recent years. It is used in several applications across various disciplines [41-54]. AI as we know it is weak AI, as opposed to strong AI, which does not exist yet. Today, machines are capable of reproducing human behavior, but without consciousness. Later, their capacities could grow to the point of turning them into machines endowed with consciousness and sensitivity.

AI has evolved a great deal thanks to the emergence of Cloud Computing and Big Data, which provide inexpensive computing power and access to large amounts of data. Thus, machines are no longer programmed; they learn instead [53, 54].

The following subsections present machine learning, deep learning and extreme learning machines respectively, in order to eliminate any confusion between these concepts.

Figure 4: Radar signal classification mode


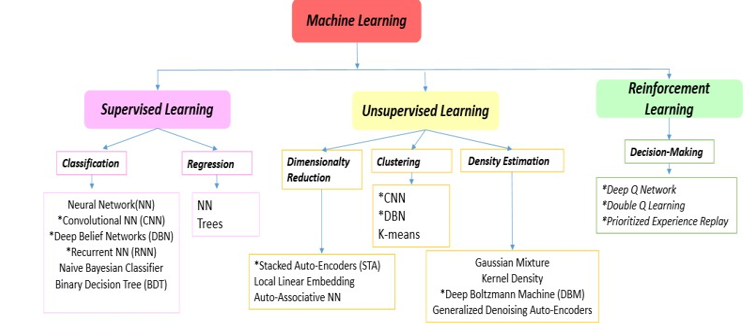

3.1. Machine Learning

Machine Learning is a sub-branch of artificial intelligence that consists of creating algorithms capable of improving automatically with experience [45-50]. We also speak in this case of self-learning systems. Machine Learning, or automatic learning, can reproduce a behavior thanks to algorithms that are themselves fed with large amounts of information. Faced with many situations, the algorithm learns which behavior to follow and which decision to take, thereby creating a model. The machine then automates tasks depending on the situation [54-58].

Figure 5: Tracking methods types and definition


Figure 6: Representation of the different tracking categories


Figure 7: Representation of ML types


There are three main types of Machine Learning [57]-[62], represented in Figure 7. In supervised learning, the algorithms are trained on already categorized datasets in order to understand the criteria used for classification and to reproduce them [63]-[66]. In unsupervised learning, algorithms are trained on raw data, from which they try to extract patterns [67]-[70]. Finally, in reinforcement learning, the algorithm functions as an autonomous agent that observes its environment and learns as it interacts with it [67], [69].

Machine learning is a broad field that includes many algorithms. Among the best known are regressions (linear, multivariate, polynomial, regularized, logistic, etc.), which fit curves that approximate the data, and the Naïve Bayes algorithm, which gives the probability of a prediction given previous events [71-76]. Clustering also relies on mathematics: the data are grouped into packets so that, within each packet, the data are as close as possible to each other [77-79].

There are also more sophisticated algorithms based on several statistical techniques, such as Random Forest (a forest of voting decision trees), Gradient Boosting and Support Vector Machine [21], [23]. The learning techniques marked with (*) in Figure 7 have emerged recently, and their use is mainly limited to object recognition, including the classification of radar targets in urban areas.

Machine learning is a very important approach for classification. For instance, classifying a single target, e.g. a pedestrian or a cyclist, is relatively simple because the micro-Doppler signatures of the pedestrian and the cyclist are different. The problem arises when classifying overlapping targets, e.g. pedestrians and cyclists together. Classification is then much more difficult and requires deep learning techniques.
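As a small illustration of regression as a curve that approximates the data, the sketch below fits a straight line by least squares. The data points are invented for the example, and `numpy.polyfit` is only one of several ways to perform such a fit.

```python
import numpy as np

# Hypothetical noise-free measurements lying on the line y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * x + 1.0

# Degree-1 least-squares fit; coefficients are returned highest degree first.
slope, intercept = np.polyfit(x, y, deg=1)
prediction = slope * 5.0 + intercept   # extrapolate to a new input
```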

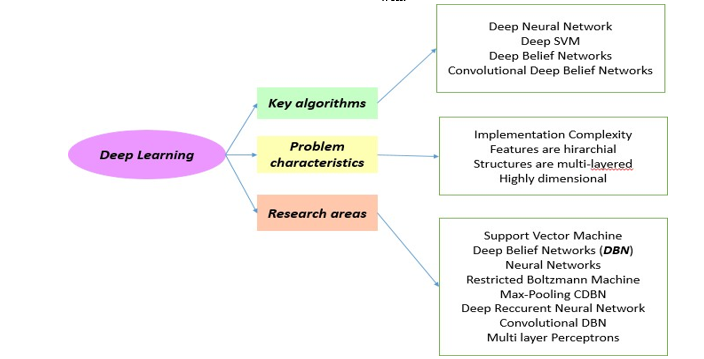

3.2. Deep Learning

Deep Learning aims to understand concepts in a more precise way. In a neural network, successive layers are combined to learn the concepts. The simplest networks have only two layers, an input and an output, and each layer can have hundreds, thousands or even millions of neurons. Among the most widely used deep learning algorithms, we have:

- Artificial neural networks (ANN): these are the simplest and are often used alongside other networks because they sort information well

- Convolutional neural networks (CNN): these apply filters to the collected information in order to obtain new data (for example, bringing out the contours in an image can help to find where a face is)

- Recurrent neural networks (RNN): the best known are LSTMs, which can retain information and reuse it shortly afterwards. They are used for text analysis (NLP), since each word depends on the few words before it (so that the grammar is correct)

There are also more advanced variants, such as auto-encoders [80], Boltzmann machines, self-organizing maps (SOM), etc. Figure 8 shows the key deep learning algorithms and the research fields that are interested in them.
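The filtering operation at the heart of a convolutional layer can be sketched as below. This is only an illustration with a fixed, hand-picked Sobel kernel; in an actual CNN the kernel values are learned during training. The function name `filter2d` and the toy image are assumptions.

```python
import numpy as np

def filter2d(image, kernel):
    """Valid-mode 2-D cross-correlation, the operation applied by a convolutional layer."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hypothetical image: dark left half, bright right half (one vertical contour).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Horizontal-gradient Sobel kernel, used here to bring out vertical contours.
sobel_x = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
edges = filter2d(image, sobel_x)
```

The response is non-zero only in the output columns whose window straddles the brightness step, which is how a filter "brings out" a contour.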

3.3. Extreme learning machine

Extreme Learning Machine (ELM) is usually used for pattern classification. It can be considered as a learning algorithm for single-hidden-layer feedforward neural networks. It overcomes the slow training speed and overfitting problems of conventional neural network learning algorithms. ELM is based on empirical risk minimization theory, and its learning process requires only one iteration, so the algorithm avoids multiple iterations and local minima. ELM is useful in many fields and applications thanks to its robustness, controllability, good generalization capacity and fast learning rate.

Researchers have proposed several modifications to improve ELM. The work in [80-85] proposes the fully complex ELM (C-ELM), which extends the ELM algorithm from the real domain to the complex domain. Given the significant time consumed when the model is updated by retraining on the old data together with newly received information, an online sequential ELM (OS-ELM) is proposed in [81-86]; it can learn the training data one by one or block by block and discard the data on which training has already been carried out. A new adaptive ensemble model of ELM (Ada-ELM) is proposed in [82, 87]; it automatically adjusts the ensemble weights and achieves better prediction performance.

ELM performance is affected by the number of hidden layer nodes, which is difficult to determine. The incremental ELM (I_ELM) [84, 89], the pruned ELM (P_ELM) [84, 89] and the self-adaptive ELM (SaELM) [85, 90] have been proposed in other works to address this. ELM achieves good results and shortens training times from the several days required by deep learning to a few minutes.

Such performance is difficult to achieve with conventional learning techniques. Examples of datasets are shown in Table 1.
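The single-pass nature of ELM training can be sketched as follows: the hidden-layer weights are drawn at random and left untouched, and only the output weights are obtained, in one least-squares solve. The names `train_elm` and `predict_elm`, the tanh activation, the hidden-layer size and the XOR toy problem are assumptions chosen for this illustration.

```python
import numpy as np

def train_elm(X, T, n_hidden=20, seed=0):
    """Basic ELM: random hidden layer, output weights from a single pseudo-inverse."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_hidden))   # random input weights, never updated
    b = rng.normal(size=n_hidden)                 # random hidden biases, never updated
    H = np.tanh(X @ W + b)                        # hidden-layer output matrix
    beta = np.linalg.pinv(H) @ T                  # least-squares output weights
    return W, b, beta

def predict_elm(X, model):
    W, b, beta = model
    return np.tanh(X @ W + b) @ beta

# Hypothetical toy problem: XOR, which a purely linear classifier cannot solve.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
T = np.array([[0.0], [1.0], [1.0], [0.0]])
model = train_elm(X, T)
pred = predict_elm(X, model)
```

No gradient descent or repeated epochs are involved, which is where the short training times reported for ELM come from.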

Artificial intelligence is widely used for the classification of radar targets. The following section will focus on the classification procedure and the different feature extractors and classifiers used in machine learning. The most famous and most used algorithms in our field (road safety) will be mentioned as well.

Figure 8: Algorithms and characteristic problems linked to deep learning and the research fields, which are interested in it.


Table 1: Examples of Datasets and their learning methods and training time

| Datasets | Learning Methods | Testing Accuracy | Training time |
| --- | --- | --- | --- |
| MNIST OCR | ELM (multi hidden layers, unpublished) | 99.6% | |
| MNIST OCR | ELM (multi hidden layers, ELM auto encoder) | 99.14% | |
| MNIST OCR | Deep Belief Networks (DBN) | 98.87% | |
| MNIST OCR | Deep Boltzmann Machines (DBM) | 99.05% | |
| MNIST OCR | Stacked Auto Encoders (SAE) | 98.6% | |
| MNIST OCR | Stacked Denoising Auto Encoders (SDAE) | 98.72% | |
| 3D Shape Classification | ELM (multi hidden layers, local receptive fields) | 81.39% | |
| 3D Shape Classification | Convolutional Deep Belief Network (CDBN) | 77.32% | |
| Traffic sign recognition (GTSRB dataset) | HOG+ELM | 99.56% | 209 s (CPU) |
| Traffic sign recognition (GTSRB dataset) | CNN+ELM (convolutional neural networks as feature extractors, ELM as classifier) | 99.48% | 5 hours (CPU) |
| Traffic sign recognition (GTSRB dataset) | Multi-column deep neural network (MCDNN) | 99.46% | >37 hours (GPU) |

4. Artificial Intelligence for Radar Target’s Identification and Classification

Many characteristics for target identification using micro-Doppler signatures and ML algorithms have been studied in other research, and interesting results have been presented [47, 48]. Many public datasets for target classification are introduced in [93-100].

Singular value decomposition (SVD) lays the groundwork for disentangling data into independent components [85]. Principal component analysis (PCA) ignores the less important components [85]. SVD can be used to obtain the PCA by truncating the less important basis vectors in the original SVD matrices.

Most of the research carried out concerns supervised learning, and very little of it uses unsupervised machine learning. The latter, based on sparse coding, turns out to be one of the latest trends and an added value in recent work [8, 49].
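The link between SVD and PCA can be sketched as below: the data are centred, decomposed, and only the leading right singular vectors are kept. The function name `pca_via_svd` and the synthetic data lying on a single direction are assumptions for the example.

```python
import numpy as np

def pca_via_svd(X, n_components):
    """PCA of the rows of X obtained by truncating the SVD of the centred data."""
    Xc = X - X.mean(axis=0)
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]                 # principal directions (rows)
    scores = Xc @ components.T                     # data projected on those directions
    explained_var = s[:n_components] ** 2 / (len(X) - 1)
    return scores, components, explained_var

# Hypothetical 2-D data lying exactly along the unit direction (0.6, 0.8).
t = np.linspace(-1.0, 1.0, 11)
X = np.outer(t, [0.6, 0.8])
scores, components, explained_var = pca_via_svd(X, n_components=1)
```

Because the toy data vary along one direction only, a single component reconstructs them exactly; with real signatures the truncation discards the low-variance directions.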

4.1. Feature Extraction

4.1.1. Feature Extraction based on sparse coding (sparse)

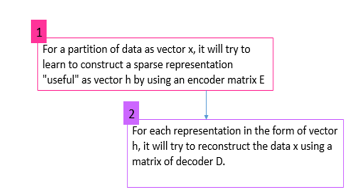

Sparse coding is a technique based on algorithms that aim to learn a useful sparse representation of the data [99-106]. Each datum is then encoded in the form of a sparse code. The algorithm uses only information from the input to learn the sparse representation, so it can be applied to any type of information; this is why we speak of unsupervised learning. It finds the representation without losing any part or aspect of the data [106]. To do this, sparse coding algorithms try to satisfy two main constraints, which are described in Figure 9:

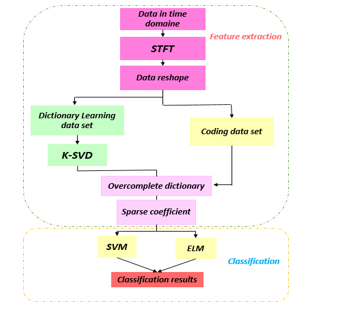

In practice, the number of dictionary elements is greater than, or sometimes equal to, the number of dimensions in which the original data is encoded. Figure 10 describes the target identification system based on sparse coding. Radar data in the time domain must be processed before sparse coding. This is done using the short-time Fourier transform (STFT), a time-frequency analysis method used for micro-Doppler signatures by other researchers.

Figure 9: Procedure using sparse coding


Figure 10: Functional diagram of the proposed functionality extractor based on sparse coding


We use the Hamming window to extract the micro-Doppler signatures of the targets. After the STFT comes the construction of the complete dictionary, for which each spectrogram must be reshaped into a matrix. The micro-Doppler signature is converted into a matrix of dimension 2U × M by concatenating its real and imaginary parts. We generate the training dataset using N given samples, and then gather the data received from the targets at all angles. The dimension of the data set X^k for each angle is 2U × MN.

The resulting training data set is made of signals from all target types combined (pedestrians and cyclists, for example). The dictionary D and the coefficient matrix W are derived from the training data by solving the optimization problem in equation (1).
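The preprocessing chain described above (Hamming-windowed STFT, then stacking of the real and imaginary parts) can be sketched as follows. The window length, hop size, sampling rate and the synthetic single-tone signal are assumptions for illustration; U is taken here to be the number of frequency bins and M the number of time frames.

```python
import numpy as np

def stft(signal, window_len=64, hop=16):
    """Short-time Fourier transform with a Hamming window; returns a (U, M) complex array."""
    window = np.hamming(window_len)
    n_frames = 1 + (len(signal) - window_len) // hop
    frames = np.stack(
        [signal[i * hop:i * hop + window_len] * window for i in range(n_frames)],
        axis=1,
    )
    return np.fft.fft(frames, axis=0)

def stack_real_imag(spectrogram):
    """Concatenate real and imaginary parts into a real 2U x M matrix."""
    return np.vstack([spectrogram.real, spectrogram.imag])

# Hypothetical radar return: a complex tone with a constant 125 Hz Doppler shift.
fs = 1000.0
t = np.arange(1024) / fs
signal = np.exp(2j * np.pi * 125.0 * t)

S = stft(signal)                 # U = 64 frequency bins, M = 61 time frames
features = stack_real_imag(S)    # real matrix of shape (2U, M)
peak_bin = int(np.abs(S[:, 0]).argmax())
```

With these settings the 125 Hz tone falls exactly on frequency bin 8 (125 × 64 / 1000), so the spectrogram energy concentrates there in every frame.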

Reading equation (1) from left to right, the last term represents the reconstruction error between the original data and its representation based on the dictionary D. Minimizing this term gives a better approximation of the original data. The task is to adjust D and W jointly so as to solve the optimization problem.

Among the methods adopted for learning the complete dictionary is the K-SVD method. In a first step, we search for W without touching D; in a second step, we update both D and W while keeping the positions of the non-zero elements in W fixed.
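The first step, finding a sparse W for a fixed dictionary D, can be illustrated with orthogonal matching pursuit (OMP), which is commonly paired with K-SVD. The sketch below covers this sparse coding step only, not the dictionary update; the function name `omp` and the small 4 × 8 dictionary (identity atoms plus normalised Hadamard atoms) are assumptions for the example.

```python
import numpy as np

def omp(D, x, n_nonzero):
    """Orthogonal matching pursuit: find a sparse w such that D @ w approximates x."""
    residual = x.copy()
    support = []
    coeffs = np.zeros(0)
    for _ in range(n_nonzero):
        # Pick the atom most correlated with the current residual.
        correlations = np.abs(D.T @ residual)
        if support:
            correlations[support] = 0.0        # never select the same atom twice
        support.append(int(correlations.argmax()))
        # Re-fit all selected atoms jointly by least squares.
        coeffs, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ coeffs
    w = np.zeros(D.shape[1])
    w[support] = coeffs
    return w

# Hypothetical dictionary with 8 unit-norm atoms in a 4-dimensional space.
hadamard = np.array([[1, 1, 1, 1],
                     [1, -1, 1, -1],
                     [1, 1, -1, -1],
                     [1, -1, -1, 1]], dtype=float).T / 2.0
D = np.hstack([np.eye(4), hadamard])

# Signal built from two atoms only: 2 * atom 0 - 3 * atom 5.
x = 2.0 * D[:, 0] - 3.0 * D[:, 5]
w = omp(D, x, n_nonzero=2)
```

For this signal the two contributing atoms and their coefficients are recovered exactly; in K-SVD this coding step would then alternate with an update of the atoms of D.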

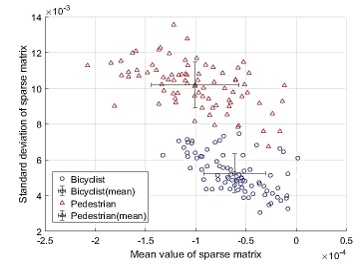

Figure 11: Reduction of sparse coding characteristics (for pedestrians and cyclists’ case) [8]


The SVD step ensures that the atoms of the dictionary remain normalized. K-SVD alternates between coding the data with the current dictionary and updating the dictionary in order to obtain a better fit.

Feature extraction based on a sparse representation can be performed once the dictionary D has been created. At this stage, the set of sparse matrices can be used directly as classification features. It should be noted that the feature dimension is still very large and requires reduction. For this purpose, numerical characteristics such as the mean value and the standard deviation can be calculated from the sparse matrix.

Figure 11 illustrates an example of the reduced sparse coding features at an angle of 30° (for a pedestrian and a cyclist).

Numerical characteristics can be used in the classification, even though some information is discarded by the calculation. The sparse matrix and its reduced characteristics are used in [8] to carry out the classification. Five numerical characteristics of sparse matrices are used for the classification, namely: mean values, standard deviations, maximum values, minimum values, and the difference between the maximum and minimum values.
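The five reduced characteristics can be computed as in the sketch below. Whether [8] computes them over the whole sparse matrix or per row or column is not stated here, so taking them over all entries is an assumption, as are the function name `reduced_features` and the toy matrix.

```python
import numpy as np

def reduced_features(W):
    """Five summary statistics of a sparse coefficient matrix, taken over all entries."""
    return np.array([
        W.mean(),            # mean value
        W.std(),             # standard deviation
        W.max(),             # maximum value
        W.min(),             # minimum value
        W.max() - W.min(),   # difference between maximum and minimum
    ])

# Hypothetical sparse coefficient matrix with two non-zero entries.
W = np.array([[0.0, 0.0, 2.0],
              [0.0, -1.0, 0.0]])
features = reduced_features(W)
```

A matrix of any size is thus reduced to a five-element feature vector, at the cost of discarding the positions of the non-zero coefficients.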

4.1.2. SVD-based feature extraction

SVD makes it possible to build an empirical model, without an underlying theory, that becomes more precise as more terms are included in it [92]. The effectiveness of the method depends in particular on the way in which the information is presented to it. The SVD decomposition is described by equation (2).

$$F = U S V^{T} \qquad (2)$$

Here F is the spectrogram and S is a diagonal matrix of singular values. The components of S represent only scaling factors and therefore carry no information about the structure of the spectrogram. The matrices U and V contain the left and right singular vectors of F, respectively. Together they represent the information of both the time and the Doppler domains of a micro-Doppler (MD) signature. The singular vectors can therefore be used as features for classification.
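Extracting singular vectors as features can be sketched as follows. The orientation of the spectrogram (rows as Doppler bins, columns as time frames), the number of vectors kept and the rank-one toy spectrogram are assumptions for this example.

```python
import numpy as np

def svd_features(F, n_vectors=1):
    """Return the leading left and right singular vectors of a spectrogram F."""
    U, s, Vt = np.linalg.svd(F, full_matrices=False)
    return U[:, :n_vectors], Vt[:n_vectors].T

# Hypothetical rank-one spectrogram: a Doppler profile modulated by a time profile.
doppler_profile = np.array([0.0, 1.0, 3.0, 1.0, 0.0])
time_profile = np.array([1.0, 2.0, 1.0, 2.0])
F = np.outer(doppler_profile, time_profile)

u, v = svd_features(F)
# Up to sign and scale, u follows the Doppler profile and v follows the time profile.
doppler_match = abs(u[:, 0] @ doppler_profile) / np.linalg.norm(doppler_profile)
time_match = abs(v[:, 0] @ time_profile) / np.linalg.norm(time_profile)
```

The singular vectors have unit norm, so the scaling carried by S is removed and only the shape of the profiles is retained as a feature.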

4.1.3. Robust principal component analysis RPCA

The mean frequency profile (MFP) can be calculated as the average along the time axis of the absolute value of the MD signature in the frequency domain, as expressed in equation (3).

An improvement of the MFP using PCA and the minimum covariance determinant estimator is discussed in several works; the method is described by equation (4).
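The basic mean frequency profile, before any robust refinement, can be sketched as below. The spectrogram orientation (rows as frequency bins, columns as time frames) and the function name `mean_frequency_profile` are assumptions; the robust PCA and minimum covariance determinant steps are not reproduced.

```python
import numpy as np

def mean_frequency_profile(spectrogram):
    """Average of the spectrogram magnitude along the time axis (one value per frequency bin)."""
    return np.abs(spectrogram).mean(axis=1)

# Hypothetical complex spectrogram with 2 frequency bins and 2 time frames.
spectrogram = np.array([[1.0 + 0.0j, -1.0 + 0.0j],
                        [0.0 + 3.0j, 4.0 + 0.0j]])
mfp = mean_frequency_profile(spectrogram)
```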

4.2. Classifiers


The objective of [50] is to study how classification performance changes with the signal processing parameters and the feature extraction procedures applicable to backscattered UWB radar signals [51-54]. In the literature, many algorithms have been used for classification. In [41], the following classifiers were used: MDC [107-122], NB [111-115], k-NN [113] and SVM [21, 23, 116].

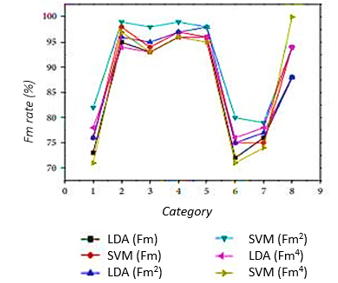

A comparison of the recognition rates for FM signals using linear discriminant analysis (LDA) and the support vector machine (SVM) is presented in Figure 12. It suggests that the SVM approach is an efficient method for classifying radar signals, with a high recognition rate. SVM has the highest recognition rate (≤ 97%), while that of LDA is lower (≤ 94%) and tends to vary.

These classification procedures are applied to the processed data using either cross-validation or the leave-one-out method. The performance of each classifier is estimated using tests that are independent of the training set, thus minimizing the generalization error.
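Leave-one-out evaluation can be sketched as follows with a simple nearest-centroid rule. Reading MDC as a minimum-distance (nearest-centroid) classifier is an assumption, as are the function names and the toy feature vectors.

```python
import numpy as np

def mdc_predict(train_X, train_y, x):
    """Minimum-distance classifier: assign x to the class with the nearest centroid."""
    classes = np.unique(train_y)
    centroids = np.array([train_X[train_y == c].mean(axis=0) for c in classes])
    return classes[np.linalg.norm(centroids - x, axis=1).argmin()]

def leave_one_out_accuracy(X, y):
    """Hold out each sample in turn, train on the rest, and test on the held-out sample."""
    hits = 0
    for i in range(len(X)):
        mask = np.arange(len(X)) != i
        hits += int(mdc_predict(X[mask], y[mask], X[i]) == y[i])
    return hits / len(X)

# Hypothetical two-class feature vectors (e.g. class 0 and class 1 targets).
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
              [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]])
y = np.array([0, 0, 0, 1, 1, 1])
accuracy = leave_one_out_accuracy(X, y)
```

Because each test sample is excluded from the training set of its own round, the resulting accuracy estimates how the classifier generalises rather than how well it memorises.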

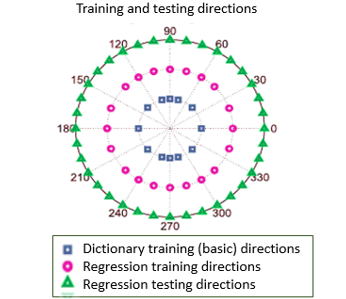

In [7], the author used supervised learning to classify specific objects. The process has two main phases, the training phase and the test phase. Supervised regression methods are also generally used to approximate the mapping between the directions of movement and the micro-Doppler signatures of the targets; among them are support vector regression (SVR) and the multilayer perceptron (MLP), both used in [7].

Figure 12: Recognition rate comparison adapted from [35]


Figure 13: Regression Model [7]


The regression training data set is used to approximate the correspondence between the feature vectors and the direction of motion. The regression model is a mapping function, described in Figure 13. Micro-Doppler signatures of complex surface targets with moving parts are used to estimate the direction of movement. Supervised regression algorithms are then applied to solve the problem of estimating the direction of movement [119]-[131].

The next section presents a comparison between the methods used and presents the advantages of one method compared to the others. It highlights the usefulness of the algorithms according to the desired application. The challenge here is to choose the method to be used according to the constraints presented by the studied system. The section also discusses the prospects for proposing a new procedure combining the advantages of the methods presented previously and adaptable to several uses.

5. Open Issues and Challenges

Learning approaches can be categorized into supervised learning and unsupervised learning. In the former, the classifier is designed by exploiting information that is already known, because a labeled training data set is available beforehand. This is not the case in the latter, because the training information, known as class labels, does not exist. We then take a set of feature vectors and divide it into subsets called clusters, so that data with similar features are assigned to the same subset.

Table 2: Comparison between methods [8]

| 2sd signal | SVM Pedestrian | SVM Bicyclist | ELM Pedestrian | ELM Bicyclist |
| --- | --- | --- | --- | --- |
| RPCA | Mean: 2.57, Max: 94.67, Min: 80.14 | Mean: 2.21, Max: 95.71, Min: 81.55 | Mean: 2.51, Max: 95.34, Min: 81.55 | Mean: 2.73, Max: 96.14, Min: 83.43 |
| SVD | Mean: 2.17, Max: 96.15, Min: 85.13 | Mean: 2.11, Max: 97.33, Min: 89.85 | Mean: 2.44, Max: 96.75, Min: 86.66 | Mean: 2.01, Max: 97.15, Min: 896.08 |
| Sparse coding | Mean: 1.85, Max: 99.97, Min: 85.13 | Mean: 1.79, Max: 100, Min: 91.31 | Mean: 1.99, Max: 100, Min: 91.55 | Mean: 2.26, Max: 100, Min: 91.11 |
| Reduced sparse coding | Mean: 2.34, Max: 99.89, Min: 91.35 | Mean: 2.23, Max: 100, Min: 90.02 | Mean: 1.92, Max: 99.84, Min: 91.35 | Mean: 2.18, Max: 99.94, Min: 89.66 |

There is an increasing convergence towards the use of unsupervised ML on unstructured input data in several areas, such as traffic engineering, identification of network anomalies, categorization of objects, optimization of road traffic and many others. Table 2 and Table 3 show a comparison between methods. On the one hand, the results in [7], [8] show that classification with features based on sparse coding achieves the highest accuracy (> 96%) and that the SVD and RPCA methods are very efficient. On the other hand, the computation time of the procedure using the sparse coding feature extractor and the support vector machine classifier is too long, even though it offers the best classification performance.

Table 3: Method’s performances comparison [8]

| Extractor and classifiers | SVM | ELM |
| --- | --- | --- |
| SVD | 0.26 s | 0.22 s |
| RPCA | 0.13 s | 0.11 s |
| Sparse Coding | 1.12 s | 0.98 s |
| Reduced sparse coding | 0.87 s | 0.86 s |

In addition, when the feature dimension is small, the total time for SVM and ELM is similar. As the feature dimension increases, identification via ELM becomes faster than via SVM.

We can also add that a good estimate of the direction of motion (with an error below 5°) can be obtained with the SVR-based method. The estimation performance improves for directions of movement towards the radar and degrades for directions of movement perpendicular to the radar line of sight (for a radar target detection example).

This allows us to deduce that certain methods can be effective for certain applications and not for others. The choice of method therefore depends heavily on the application itself. It also prompts us to question whether a single method can perform well at all levels and for all possible applications, including road safety [132-136]. The current trend is converging towards the development of new methods that combine the properties of older algorithms with the expectations of new applications and that are adaptable to different uses.

6. Conclusion

The main goal of a classification approach is to assign data to the appropriate category based on common features. Classification makes it possible to determine the type or group to which unknown data belong. Different classification methods may produce different results. Artificial intelligence and its various techniques and algorithms help to solve these issues. They are the subject of several research studies, including in the field of road safety. This paper is a state of the art of target classification and of the contribution of machine learning technologies to it. The study and comparison of the different extraction methods and classification algorithms allowed us to deduce that an algorithm that is efficient for one application may not be as efficient for another; its performance depends strongly on the target application and its requirements. These findings open up perspectives for the development of new approaches, adaptable to any kind of application, with algorithms optimized in terms of computation time and processing effectiveness.

Conflict of Interest

The authors declare no conflict of interest.

- M. Fadlullah et al., “State-of-the-Art Deep Learning: Evolving Machine Intelligence Toward Tomorrow’s Intelligent Network Traffic Control Systems,” in IEEE Communications Surveys & Tutorials, 19(4), 2432-2455, Fourthquarter 2017. DOI: 10.1109/COMST.2017.2707140

- Usama et al., “Unsupervised Machine Learning for Networking: Techniques, Applications and Research Challenges,” in IEEE Access, 7, 65579-65615, 2019. DOI: 10.1109/ACCESS.2019.2916648

- Xin et al., “Machine Learning and Deep Learning Methods for Cybersecurity,” in IEEE Access, 6, 35365-35381, 2018. DOI: 10.1109/ACCESS.2018.2836950

- Louridas and C. Ebert, “Machine Learning,” in IEEE Software, 33(5), 110-115, Sept.-Oct. 2016.

- I. Jordan, T. M. Mitchell, “Machine learning : Trends perspectives and prospects”, Science, 349(6245), 255-260, 2015. DOI: 10.1126/science.aaa8415

- LeCun, Y. Bengio, G. Hinton, “Deep learning”, Nature, 521, 436-444, May 2015. DOI: 10.1038/nature14539

- Khomchuk, I. Stainvas, I. Bilik. Pedestrian motion direction estimation using simulated automotive MIMO radar. IEEE Trans. Aerosp. Electron. Syst. 2016. DOI: 10.1109/TAES.2016.140682

- Rui Du, Yangyu Fan, Jianshu Wang. Pedestrian and Bicyclist Identification through Micro Doppler Signature with Different Approaching Aspect Angles. IEEE Sensors Journal, 2018. DOI: 10.1109/JSEN.2018.2816594

- A. Anderson, M. T. Gately, P. A. Penz, and D. R. Collins, “Radar signal categorization using a neural network,” Proceedings of the IEEE, 78(10), 1646–1657, 1991. DOI: 10.1109/5.58358

- Hosni Ghedira, Utilisation des réseaux de neurones pour la cartographie des milieux humides à partir d’une série temporelle d’images RADARSAT-1. 2002

- Vittorio Capecchi, Une méthode de classification fondée sur l’entropie. Revue française de sociologie, 1964. DOI: 10.2307/3319578

- James Bezdek, James Keller, Raghu Krishnapuram, and Nikhil R. Pal. Fuzzy Models and Algorithms for Pattern Recognition and Image Processing. The Handbook of Fuzzy Sets Series, Didier Dubois and Henri Prade, eds., 1999. DOI: 10.1007/978-0-387-24579-9

- A. Hartigan. Clustering algorithms. John Wiley & Sons Inc., 1975.

- K. Jain and R.C. Dubes. Algorithms for clustering Data. Prentice Hall Advanced Reference series, 1988.

- K. Jain, M.N. Murty, and P.J. Flynn. Data clustering: A review. ACM Computing Surveys, 31(3):264–323, 1999. DOI: 10.1145/331499.331504

- Anil K. Jain. Data clustering: 50 years beyond k-means. Pattern Recognition Letters, 31(8):651–666, 2010. DOI: 10.1016/j.patrec.2009.09.011

- Rui Xu and Donald Wunsch II. Survey of clustering algorithms. IEEE Transactions on Neural Networks, 16(3):645–678, May 2005. DOI: 10.1109/TNN.2005.845141

- R. Zaiane, C.H. Lee, A. Foss, and W. Wang. On data clustering analysis: Scalability, constraints and validation. In Proc. of the Sixth Pacific-Asia Conference on Knowledge Discovery and Data Mining (PAKDD). Lecture Notes in Artificial Intelligence 2336, Advances in Knowledge Discovery and Data Mining, Springer-Verlag, 2002. DOI: 10.1007/3-540-47887-6_4

- Feng et al., “Deep Multi-Modal Object Detection and Semantic Segmentation for Autonomous Driving: Datasets, Methods, and Challenges,” in IEEE Transactions on Intelligent Transportation Systems, 2020. DOI: 10.1109/TITS.2020.2972974.

- Li, A. Sun, J. Han and C. Li, “A Survey on Deep Learning for Named Entity Recognition,” in IEEE Transactions on Knowledge and Data Engineering, 2020. DOI: 10.1109/TKDE.2020.2981314.

- A. Osia et al., “A Hybrid Deep Learning Architecture for Privacy-Preserving Mobile Analytics,” in IEEE Internet of Things Journal, 7(5), 4505-4518, May 2020, DOI: 10.1109/JIOT.2020.2967734.

- Karim, S. Majumdar and H. Darabi, “Adversarial Attacks on Time Series,” in IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020. DOI: 10.1109/TPAMI.2020.2986319.

- Y. B. Lim et al., “Federated Learning in Mobile Edge Networks: A Comprehensive Survey,” in IEEE Communications Surveys & Tutorials, 2020. DOI: 10.1109/COMST.2020.2986024.

- Mei, R. Jiang, X. Li and Q. Du, “Spatial and Spectral Joint Super-Resolution Using Convolutional Neural Network,” in IEEE Transactions on Geoscience and Remote Sensing, 2020. DOI: 10.1109/TGRS.2020.2964288.

- Gholami, P. Sahu, O. Rudovic, K. Bousmalis and V. Pavlovic, “Unsupervised Multi-Target Domain Adaptation: An Information Theoretic Approach,” in IEEE Transactions on Image Processing, 29, 3993-4002, 2020. DOI: 10.1109/TIP.2019.2963389

- McConaghy, H. Leung, E. Bosse, and V. Varadan, “Classification of audio radar signals using radial basis function neural networks,” IEEE Transactions on Instrumentation & Measurement, 52(6), 1771–1779, 2003. DOI: 10.1109/TIM.2003.820450

- Zeng, X. Zeng, H. Cheng, and B. Tang, “Automatic modulation classification of radar signals using the Rihaczek distribution and Hough transform,” IET Radar Sonar & Navigation, 6(5), 322–331, 2012. DOI: 10.1049/iet-rsn.2011.0338

- Gini, M. Montanari, and L. Verrazzani, “Maximum likelihood, ESPRIT, and periodogram frequency estimation of radar signals in K-distributed clutter,” Signal Processing, 80(6), 1115–1126, 2000. ISSN: 0165-1684

- Jean-Christophe CEXUS, THT et Transformation de Hough pour la détection de modulations linéaires de fréquence. 2012.

- Zeng, Automatic modulation classification of radar signals using the Rihaczek distribution and Hough transform. June 2012, IET Radar Sonar Navigation, 2012. DOI: 10.1049/iet-rsn.2017.0265

- P. Kauppi, K. Martikainen, and U. Ruotsalainen, “Hierarchical classification of dynamically varying radar pulse repetition interval modulation patterns,” Neural Networks, 23(10), 1226–1237, 2010.

- Han, M.-H. He, Y.-Q. Zhu, and J. Wang, “Sorting radar signal based on the resemblance coefficient of bispectrum two dimensions characteristic,” Chinese Journal of Radio Science, 24(5), 286–294, 2009.

- Zhu, N. Lin, H. Leung, R. Leung and S. Theodoidis, “Target Classification From SAR Imagery Based on the Pixel Grayscale Decline by Graph Convolutional Neural Network,” in IEEE Sensors Letters, 4(6), 1-4, June 2020, DOI: 10.1109/LSENS.2020.2995060

- J. Zhang, F. H. Fan, and Y. Tan, “Application of cluster method to radar signal sorting,” Radar Science and Technology, 4, 219–223, 2004. https://doi.org/10.1007/s11460-007-0062-3

- “IEEE Colloquium on ‘The Application of Artificial Intelligence Techniques to Signal Processing’ (Digest No.42),” IEEE Colloquium on Application of Artificial Intelligence Techniques to Signal Processing, London, UK, 1989.

- S. Ahmad, “Brain inspired cognitive artificial intelligence for knowledge extraction and intelligent instrumentation system,” 2017 International Symposium on Electronics and Smart Devices (ISESD), Yogyakarta, 2017. DOI: 10.5772/intechopen.72764

- Veenman, C. J., Hendriks, E. A., & Reinders, M. J. T. (n.d.). A fast and robust point tracking algorithm. Proceedings 1998 International Conference on Image Processing (ICIP98), 2010. DOI: 10.1109/icip.1998.999051

- Bhagya Hettige, Hansika Hewamalage, Chathuranga Rajapaksha, Nuwan Wajirasena, Akila Pemasiri and Indika Perera. Evaluation of feature-based object identification for augmented reality applications on mobile devices, Conference: 2015 IEEE 10th International Conference on Industrial and Information Systems (ICIIS), 2015. DOI: 10.1109/ICIINFS.2015.7399005

- Nan Luo, Quansen Sun, Qiang Chen, Zexuan Ji, Deshen Xia. A Novel Tracking Algorithm via Feature Points Matching, 2016. https://doi.org/10.1371/journal.pone.0116315

- Jiangxiong Fang, Efficient and robust fragments-based multiple kernels tracking. AEU – International Journal of Electronics and Communications. https://doi.org/10.1016/j.aeue.2011.02.013

- Comaniciu, V. Ramesh and P. Meer, “Kernel-based object tracking,” in IEEE Transactions on Pattern Analysis and Machine Intelligence, 25(5), 564-577, May 2003, DOI: 10.1109/TPAMI.2003.1195991.

- Haihong Zhang, Zhiyong Huang, Weimin Huang and Liyuan Li, “Kernel-based method for tracking objects with rotation and translation,” Proceedings of the 17th International Conference on Pattern Recognition, 2004. ICPR 2004., Cambridge, 2004. DOI: 10.1109/ICPR.2004.1334362.

- Mondal, S. Ghosh and A. Ghosh, “Efficient silhouette based contour tracking,” 2013 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Mysore, 2013. DOI: 10.1109/ICACCI.2013.6637451.

- R. Howe, “Silhouette Lookup for Automatic Pose Tracking,” 2004 Conference on Computer Vision and Pattern Recognition Workshop, Washington, DC, USA, 2004. DOI: 10.1109/CVPR.2004.438.

- Boudoukh, I. Leichter and E. Rivlin, “Visual tracking of object silhouettes,” 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, 2009. DOI: 10.1109/ICIP.2009.5414280.

- V. Makarenko, Deep learning algorithms for signal recognition in long perimeter monitoring distributed fiber optic sensors, 26th International Workshop on Machine Learning for Signal Processing (MLSP). IEEE 2016. DOI: 10.1109/MLSP.2016.7738863

- Fioranelli, M. Ritchie, H. Griffiths. Classification unarmed/armed personnel using the NetRAD multistatic radar for micro-Doppler and singular value decomposition features. IEEE Geosci. Remote Sens. Lett. 2015. DOI:10.1109/LGRS.2018.2806940

- Zabalza, C. Clemente, G. Di Caterina, J. Ren, J. J. Soraghan, S. Marshall. Robust PCA micro-Doppler classification using SVM on embedded systems. IEEE Trans. Aerosp. Electron. Syst. 2014. https://doi.org/10.1109/TAES.2014.130082.

- Ruhela, “Thematic Correlation of Human Cognition and Artificial Intelligence,” 2019 Amity International Conference on Artificial Intelligence (AICAI), Dubai, United Arab Emirates, 2019.

- Doris Hooi-Ten Wong and S. Manickam, “Intelligent Expertise Classification approach: An innovative artificial intelligence approach to accelerate network data visualization,” 2010 3rd International Conference on Advanced Computer Theory and Engineering (ICACTE), Chengdu, 2010. DOI: 10.1109/ICACTE.2010.5579790

- Mestoui et al. “Performance analysis of CE-OFDM-CPM Modulation using MIMO system over wireless channels,” J Ambient Intell Human Comput 2019. https://doi.org/10.1007/s12652-019-01628-0

- Maroua and F. Mohammed. Characterization of Ultra Wide Band indoor propagation. 2019 7th Mediterranean Congress of Telecommunications (CMT), Fès, Morocco, 2019. DOI: 10.1109/CMT.2019.8931367

- DAGHOUJ, et al. “UWB Coherent Receiver Performance in a Vehicular Channel”, International Journal of Advanced Trends in Computer Science and Engineering, 9, No 2, 2020.

- Abdellaoui et al. “Study and design of a see-through wall imaging radar system,” in Embedded Systems and Artificial Intelligence. Advances in Intelligent Systems and Computing, 1076. Springer, Singapore, 2020. https://doi.org/10.1007/978-981-15-0947-6_15

- Geng, S. Huang and S. Chen, “Recent Advances in Open Set Recognition: A Survey,” in IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020. DOI: 10.1109/TPAMI.2020.2981604.

- Cruciani, F., Vafeiadis, A., Nugent, C. et al. Feature learning for Human Activity Recognition using Convolutional Neural Networks. CCF Trans. Pervasive Comp. Interact. 2, 18–32 (2020). https://doi.org/10.1007/s42486-020-00026-2

- Lin, W. Kuo, Y. Huang, T. Jong, A. Hsu and W. Hsu, “Using Convolutional Neural Networks to Measure the Physiological Age of Caenorhabditis elegans,” in IEEE/ACM Transactions on Computational Biology and Bioinformatics, 2020. DOI: 10.1109/TCBB.2020.2971992.

- Hussain, R. Hussain, S. A. Hassan and E. Hossain, “Machine Learning in IoT Security: Current Solutions and Future Challenges,” in IEEE Communications Surveys & Tutorials, 2020. DOI: 10.1109/COMST.2020.2986444

- Samat, P. Du, S. Liu, J. Li and L. Cheng, “$\mathrm{E}^{2}\mathrm{LMs}$: Ensemble Extreme Learning Machines for Hyperspectral Image Classification,” in IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 7(4), 1060-1069, April 2014. DOI: 10.1109/JSTARS.2014.2301775

- Bruzzone and M. Marconcini, “Domain Adaptation Problems: A DASVM Classification Technique and a Circular Validation Strategy,” in IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(5), 770-787, May 2010. DOI: 10.1109/TPAMI.2009.57

- ES-SAQY et al., “Very Low Phase Noise Voltage Controlled Oscillator for 5G mm-wave Communication Systems,” 2020 1st International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET), Meknes, Morocco, 2020. DOI: 10.1109/IRASET48871.2020.9092005

- Ubik and P. Žejdl, “Evaluating Application-Layer Classification Using a Machine Learning Technique over Different High Speed Networks,” 2010 Fifth International Conference on Systems and Networks Communications, Nice, 2010. DOI: 10.1109/ICSNC.2010.66

- I. Bulbul and Ö. Unsal, “Comparison of Classification Techniques used in Machine Learning as Applied on Vocational Guidance Data,” 2011 10th International Conference on Machine Learning and Applications and Workshops, Honolulu, HI, 2011. DOI: 10.1109/ICMLA.2011.49

- Bruzzone and C. Persello, “Recent trends in classification of remote sensing data: active and semisupervised machine learning paradigms,” 2010 IEEE International Geoscience and Remote Sensing Symposium, Honolulu, HI, 2010. DOI: 10.1109/IGARSS.2010.5651236

- Miao, P. Zhang, L. Jin and H. Wu, “Chinese News Text Classification Based on Machine Learning Algorithm,” 2018 10th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, 2018. DOI: 10.1109/IHMSC.2018.10117

- Ross, C. A. Graves, J. W. Campbell and J. H. Kim, “Using Support Vector Machines to Classify Student Attentiveness for the Development of Personalized Learning Systems,” 2013 12th International Conference on Machine Learning and Applications, Miami, FL, 2013. DOI: 10.1109/ICMLA.2013.66

- Guo, W. Wang and C. Men, “A novel learning model-Kernel Granular Support Vector Machine,” 2009 International Conference on Machine Learning and Cybernetics, Hebei, 2009. DOI: 10.1109/ICMLC.2009.5212413

- Kun, T. Ying-jie and D. Nai-yang, “Unsupervised and Semi-Supervised Two-class Support Vector Machines,” Sixth IEEE International Conference on Data Mining – Workshops (ICDMW’06), Hong Kong, 2006, 813-817. DOI: 10.1109/ICDMW.2006.164

- Dharani and M. Sivachitra, “Motor imagery signal classification using semi supervised and unsupervised extreme learning machines,” 2017 International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, 2017. DOI: 10.1109/ICIIECS.2017.8276131

- Zhao, Y. Liu and N. Deng, “Unsupervised and Semi-supervised Lagrangian Support Vector Machines with Polyhedral Perturbations,” 2009 Third International Symposium on Intelligent Information Technology Application, Shanghai, 2009. DOI: 10.1109/IITA.2009.200

- D. Bui, D. K. Nguyen and T. D. Ngo, “Supervising an Unsupervised Neural Network,” 2009 First Asian Conference on Intelligent Information and Database Systems, Dong Hoi, 2009. DOI: 10.1109/ACIIDS.2009.92

- Hussein, P. Kandel, C. W. Bolan, M. B. Wallace and U. Bagci, “Lung and Pancreatic Tumor Characterization in the Deep Learning Era: Novel Supervised and Unsupervised Learning Approaches,” in IEEE Transactions on Medical Imaging, 38(8), 1777-1787, Aug. 2019. DOI: 10.1109/TMI.2019.2894349

- Lee and H. Yang, “Implementation of Unsupervised and Supervised Learning Systems for Multilingual Text Categorization,” Fourth International Conference on Information Technology (ITNG’07), Las Vegas, NV, 2007. DOI: 10.1109/ITNG.2007.107

- Kong, G. Huang, K. Wu, Q. Tang and S. Ye, “Comparison of Internet Traffic Identification on Machine Learning Methods,” 2018 International Conference on Big Data and Artificial Intelligence (BDAI), Beijing, 2018. DOI: 10.1109/BDAI.2018.8546682

- Nijhawan, I. Srivastava and P. Shukla, “Land cover classification using supervised and unsupervised learning techniques,” 2017 International Conference on Computational Intelligence in Data Science (ICCIDS), Chennai, 2017. DOI: 10.1109/ICCIDS.2017.8272630

- Ji, S. Yu and Y. Zhang, “A novel Naive Bayes model: Packaged Hidden Naive Bayes,” 2011 6th IEEE Joint International Information Technology and Artificial Intelligence Conference, Chongqing, 2011. DOI: 10.1109/ITAIC.2011.6030379

- H. Jahromi and M. Taheri, “A non-parametric mixture of Gaussian naive Bayes classifiers based on local independent features,” 2017 Artificial Intelligence and Signal Processing Conference (AISP), Shiraz, 2017. DOI: 10.1109/AISP.2017.8324083

- Chen, Z. Dai, J. Duan, H. Matzinger and I. Popescu, “Naive Bayes with Correlation Factor for Text Classification Problem,” 2019 18th IEEE International Conference On Machine Learning And Applications (ICMLA), Boca Raton, FL, USA, 2019. DOI: 10.1109/ICMLA.2019.00177

- Tripathi, S. Yadav and R. Rajan, “Naive Bayes Classification Model for the Student Performance Prediction,” 2019 2nd International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kannur, Kerala, India, 2019. DOI: 10.1109/ICICICT46008.2019.8993237

- Feature Selection for Musical Genre Classification Using a Genetic Algorithm. Volume 4, Issue 2, Page No 162-169, 2019. DOI: 10.25046/aj040221

- W. Putong et al., “Classification Model of Contact Center Customers Emails Using Machine Learning”, in ASTESJ, 5(1), 174-182, 2020. DOI: 10.25046/aj050123

- K. Ibrahim, “Survey on Semantic Similarity Based on Document Clustering”, in ASTESJ, 4(5), 115-122, 2019. DOI: 10.25046/aj040515

- Mehmood, A Comparison of Mean Models and Clustering Techniques for Vertebra Detection and Region Separation from C-Spine X-Rays. Volume 2, Issue 3, Page No 1758-1770, 2017. DOI: 10.25046/aj0203215

- Bowala, “A novel model for Time-Series Data Clustering Based on piecewise SVD and BIRCH for Stock Data Analysis on Hadoop Platform”, in ASTESJ, 2(3), 855-864, 2017. DOI: 10.25046/aj0203106

- Kapala, Emotional state recognition in speech signal, in ASTESJ, 2(3), 1654-1659, 2017. DOI: 10.25046/aj0203205

- M. Vadakara, Aggrandized Random Forest to Detect the Credit Card Frauds, in ASTESJ, 4(4), 121-127, 2019. DOI: 10.25046/aj040414

- Wu, S. Pan, F. Chen, G. Long, C. Zhang and P. S. Yu, “A Comprehensive Survey on Graph Neural Networks,” in IEEE Transactions on Neural Networks and Learning Systems, 2020. DOI: 10.1109/TNNLS.2020.2978386.

- Zhang, P. Cui and W. Zhu, “Deep Learning on Graphs: A Survey,” in IEEE Transactions on Knowledge and Data Engineering, 2020. DOI: 10.1109/TKDE.2020.2981333.

- Khamparia, G. Saini, D. Gupta, “Seasonal Crops Disease Prediction and Classification Using Deep Convolutional Encoder Network,” Circuits Syst Signal Process 39, 818–836 (2020). https://doi.org/10.1007/s00034-019-01041-0

- Li and W. Deng, “Deep Facial Expression Recognition: A Survey,” in IEEE Transactions on Affective Computing, 2020. DOI: 10.1109/TAFFC.2020.2981446

- Goularas and S. Kamis, “Evaluation of Deep Learning Techniques in Sentiment Analysis from Twitter Data,” 2019 International Conference on Deep Learning and Machine Learning in Emerging Applications (Deep-ML), Istanbul, Turkey, 2019. DOI: 10.1109/Deep-ML.2019.00011

- -B. Li, G.-B. Huang, P. Saratchandran, and N. Sundararajan, “Fully complex extreme learning machine,” Neurocomputing, 68(1-4), 306–314, 2005.

- Liang, G. Huang, P. Saratchandran, and N. Sundararajan, “A fast and accurate online sequential learning algorithm for feedforward networks,” IEEE Transactions on Neural Networks and Learning Systems, 17(6), 1411–1423, 2006.

- Wang, W. Fan, F. Sun, and X. Qian, “An adaptive ensemble model of extreme learning machine for time series prediction,” in Proceedings of the 12th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), 80–85, Chengdu, China, December 2015.

- Huang, L. Chen, and C. Siew, “Universal approximation using incremental constructive feedforward networks with random hidden nodes,” IEEE Transactions on Neural Networks and Learning Systems, 17(4), 879–892, 2006.

- -J. Rong, Y.-S. Ong, A.-H. Tan, and Z. Zhu, “A fast pruned-extreme learning machine for classification problem,” Neurocomputing, 72(1–3), 359–366, 2008.

- G. Wang, M. Lu, Y. Q. Dong, and X. J. Zhao, “Self-adaptive extreme learning machine,” Neural Comput Applic, 27, 291–303, 2016.

- Cityscape website. [Online]. Available: https://www.cityscapes-dataset.com [Accessed: 08.06.2020]

- ImageNet website. [Online]. Available: http://image-net.org [Accessed: 08.06.2020]

- CIFAR-10 website. [Online]. Available: https://www.cs.toronto.edu/~kriz/cifar.html [Accessed: 08.06.2020]

- [Online]. Available: http://www.vision.caltech.edu/Image_Datasets/Caltech101 [Accessed: 08.06.2020]

- website: https://sites.google.com/view/ihsen-alouani/datasets?authuser=0 [Accessed: 08.06.2020]

- website: https://catalog.data.gov/dataset/traffic-and-pedestrian-signals [Accessed: 06.06.2020]

- website: http://coding-guru.com/popular-pedestrian-detection-datasets/

- website: https://www.mathworks.com/supportfiles/SPT/data/PedBicCarData.zip

- Aksu and M. Ali Aydin, “Detecting Port Scan Attempts with Comparative Analysis of Deep Learning and Support Vector Machine Algorithms,” 2018 International Congress on Big Data, Deep Learning and Fighting Cyber Terrorism (IBIGDELFT), ANKARA, Turkey, 2018. DOI: 10.1109/IBIGDELFT.2018.8625370

- Wang, J. Chen and S. C. H. Hoi, “Deep Learning for Image Super-resolution: A Survey,” in IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020. DOI: 10.1109/TPAMI.2020.2982166.

- Zhu, D. Liu, Y. Du, C. You, J. Zhang and K. Huang, “Toward an Intelligent Edge: Wireless Communication Meets Machine Learning,” in IEEE Communications Magazine, 58(1), 19-25, January 2020, DOI: 10.1109/MCOM.001.1900103

- Rehman, S. Naz, M.I. Razzak, “A Deep Learning-Based Framework for Automatic Brain Tumors Classification Using Transfer Learning”, Circuits Syst Signal Process 39, 757–775 (2020). https://doi.org/10.1007/s00034-019-01246-3

- S.Z. Rizvi, “Learn Image Classification on 3 Datasets using Convolutional Neural Networks (CNN),” February 18, 2020.

- M. A. Bhuiyan and J. F. Khan, “A Simple Deep Learning Network for Target Classification,” 2019 SoutheastCon, Huntsville, AL, USA, 2019. DOI: 10.1109/SoutheastCon42311.2019.9020517

- Wu, S. Pan, F. Chen, G. Long, C. Zhang and P. S. Yu, “A Comprehensive Survey on Graph Neural Networks,” in IEEE Transactions on Neural Networks and Learning Systems, 2020. DOI: 10.1109/TNNLS.2020.2978386.

- Elly C. Knight, Sergio Poo Hernandez, Erin M. Bayne, Vadim Bulitko & Benjamin V. Tucker (2020) Pre-processing spectrogram parameters improve the accuracy of bioacoustic classification using convolutional neural networks, Bioacoustics, 29:3, 337-355, 2020. DOI: 10.1080/09524622.2019.1606734

- Marwa Farouk Ibrahim Ibrahim, Auto-Encoder based Deep Learning for Surface Electromyography Signal Processing. Volume 3, Issue 1, Page No 94-102, 2018. DOI: 10.25046/aj030111

- Ibgtc Bowala, A novel model for Time-Series Data Clustering Based on piecewise SVD and BIRCH for Stock Data Analysis on Hadoop Platform. Volume 2, Issue 3, Page No 855-864, 2017. DOI: 10.25046/aj0203106

- Kountchev and R. Kountcheva, “Truncated Hierarchical SVD for image sequences, represented as third order tensor,” 2017 8th International Conference on Information Technology (ICIT), Amman, 2017. DOI: 10.1109/ICITECH.2017.8079995

- Lei, I. Y. Soon and E. Tan, “Robust SVD-Based Audio Watermarking Scheme With Differential Evolution Optimization,” in IEEE Transactions on Audio, Speech, and Language Processing, 21(11), 2368-2378, Nov. 2013. DOI: 10.1109/TASL.2013.2277929

- Ibrahim, J. Alirezaie and P. Babyn, “Pixel level jointed sparse representation with RPCA image fusion algorithm,” 2015 38th International Conference on Telecommunications and Signal Processing (TSP), Prague, 2015. DOI: 10.1109/TSP.2015.7296332

- Dong Yang, Guisheng Liao, Shengqi Zhu and Xi Yang, “RPCA based moving target detection in strong clutter background,” 2015 IEEE Radar Conference (RadarCon), Arlington, VA, 2015. DOI: 10.1109/RADAR.2015.7131231

- Guo, G. Liao, J. Li and T. Gu, “An Improved Moving Target Detection Method Based on RPCA for SAR Systems,” IGARSS 2019 – 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 2019. DOI: 10.1109/IGARSS.2019.8900206

- Manju Parkavi, Recent Trends in ELM and MLELM: A review, Volume 2, Issue 1, Page No 69-75, 2017. DOI: 10.25046/aj020108

- Sehat and P. Pahlevani, “An Analytical Model for Rank Distribution in Sparse Network Coding,” in IEEE Communications Letters, 23(4), 556-559, April 2019. DOI: 10.1109/LCOMM.2019.2896626

- Zhang and C. Ma, “Low-rank, sparse matrix decomposition and group sparse coding for image classification,” 2012 19th IEEE International Conference on Image Processing, Orlando, FL, 2012. DOI: 10.1109/ICIP.2012.6466948.

- J. C. MacKay, “Good error-correcting codes based on very sparse matrices,” in IEEE Transactions on Information Theory, 45(2), 399-431, March 1999. DOI: 10.1109/18.748992

- Wang and W. Wang, “MRI Brain Image Classification Based on Improved Topographic Sparse Coding,” 2018 IEEE 9th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 2018. DOI: 10.1109/ICSESS.2018.8663765

- Zhong, T. Yan, W. Yang and G. Xia, “A supervised classification approach for PolSAR images based on covariance matrix sparse coding,” 2016 IEEE 13th International Conference on Signal Processing (ICSP), Chengdu, 2016. DOI: 10.1109/ICSP.2016.7877826

- S. Packianather and B. Kapoor, “A wrapper-based feature selection approach using Bees Algorithm for a wood defect classification system,” 2015 10th System of Systems Engineering Conference (SoSE), San Antonio, TX, 2015, 498-503. DOI: 10.1109/SYSOSE.2015.7151902

- Chen, R.A. Goubran, T. Mussivand, “Multi-Dimension Combining (MDC) in abstract Level and Hierarchical MDC (HMDC) to Improve the Classification Accuracy of Enoses,” 2005 IEEE Instrumentation and Measurement Technology Conference Proceedings, Ottawa, Ont., 2005. DOI: 10.1109/IMTC.2005.1604204

- Chen, R.A. Goubran, T. Mussivand, “Improving the classification accuracy in electronic noses using multi-dimensional combining (MDC),” SENSORS, 2004 IEEE, Vienna, 2004. DOI: 10.1109/ICSENS.2004.1426233

- Wang, Y. Wan and S. Shen, “Classifications of remote sensing images using fuzzy multi-classifiers,” 2009 IEEE International Conference on Intelligent Computing and Intelligent Systems, Shanghai, 2009. DOI: 10.1109/ICICISYS.2009.5357646

- Yadav and A. Swetapadma, “Combined DWT and Naive Bayes based fault classifier for protection of double circuit transmission line,” International Conference on Recent Advances and Innovations in Engineering (ICRAIE-2014), Jaipur, 2014. DOI: 10.1109/ICRAIE.2014.6909179

- Sharmila and P. Geethanjali, “DWT Based Detection of Epileptic Seizure From EEG Signals Using Naive Bayes and k-NN Classifiers,” in IEEE Access, 4, 7716-7727, 2016. DOI: 10.1109/ACCESS.2016.2585661

- Li, Y. Zhang, W. Chen, S. K. Bose, M. Zukerman and G. Shen, “Naïve Bayes Classifier-Assisted Least Loaded Routing for Circuit-Switched Networks,” in IEEE Access, 7, 11854-11867, 2019. DOI: 10.1109/ACCESS.2019.2892063

- Harender and R. K. Sharma, “DWT based epileptic seizure detection from EEG signal using k-NN classifier,” 2017 International Conference on Trends in Electronics and Informatics (ICEI), Tirunelveli, 2017. DOI: 10.1109/ICOEI.2017.8300806

- Sangeetha and B. N. Keshavamurthy, “Conditional Mutual Information based Attribute Weighting on General Bayesian Network Classifier,” 2018 International Conference on Advances in Computing, Communication Control and Networking (ICACCCN), Greater Noida (UP), India, 2018. DOI: 10.1109/ICACCCN.2018.8748481

- H. Bei Hui, Y. W. Yue Wu, L. J. Lin Ji and J. C. Jia Chen, “A NB-based approach to anti-spam application: DLB Classification Model,” 2006 Semantics, Knowledge and Grid, Second International Conference on, Guilin, 2006. DOI: 10.1109/SKG.2006.10

- Wu, B. Zhang, Y. Zhu, W. Zhao and Y. Zhou, “Transformer Fault Portfolio Diagnosis Based on the Combination of the Multiple Baye SVM,” 2009 International Conference on Electronic Computer Technology, Macau, 2009. DOI: 10.1109/ICECT.2009.103

- S. Acharya, A. Armaan and A. S. Antony, “A Comparison of Regression Models for Prediction of Graduate Admissions,” 2019 International Conference on Computational Intelligence in Data Science (ICCIDS), Chennai, India, 2019. DOI: 10.1109/ICCIDS.2019.8862140

- Yang, K. Wu and J. Hsieh, “Mountain C-Regressions in Comparing Fuzzy C-Regressions,” 2007 IEEE International Fuzzy Systems Conference, London, 2007. DOI: 10.1109/FUZZY.2007.4295366

- Y. Goulermas, P. Liatsis, X. Zeng and P. Cook, “Density-Driven Generalized Regression Neural Networks (DD-GRNN) for Function Approximation,” in IEEE Transactions on Neural Networks, 18(6), 1683-1696, Nov. 2007. DOI: 10.1109/TNN.2007.902730

- Kavitha, S. Varuna, R. Ramya, “A comparative analysis on linear regression and support vector regression,” 2016 Online International Conference on Green Engineering and Technologies (IC-GET), Coimbatore, 2016. DOI: 10.1109/GET.2016.7916627

- Qunzhu, Z. Rui, Y. Yufei, Z. Chengyao and L. Zhijun, “Improvement of Random Forest Cascade Regression Algorithm and Its Application in Fatigue Detection,” 2019 IEEE 2nd International Conference on Electronics Technology (ICET), Chengdu, China, 2019. DOI: 10.1109/ELTECH.2019.8839317

- Khodjet-Kesba, “Automatic target classification based on radar backscattered ultra wide band signals,” Other. Université Blaise Pascal – Clermont-Ferrand II, 2014.

- Fattah, et al. “Multi Band OFDM Alliance Power Line Communication System”, Procedia Computer Science, Volume 151, 2019, https://doi.org/10.1016/j.procs.2019.04.146.

- Kumar X. Wu and coll. Top 10 algorithms in data mining. Knowledge and Information Systems, 14:137, 2007.

- Li, P. Niu, Y. Ma, H. Wang, W. Zhang, Tuning extreme learning machine by an improved artificial bee colony to model and optimize the boiler efficiency, Knowl.-Based Syst. 67, 2014.