Bilateral Communication Device for Deaf-Mute and Normal People

Volume 5, Issue 4, Page No 363-373, 2020

Author’s Name: Raven Carlos Tabiongan a)


College of Engineering, Samar State University, Catbalogan, 6700, Philippines

a) Author to whom correspondence should be addressed. E-mail: raven.tabiongan@ssu.edu.ph

Adv. Sci. Technol. Eng. Syst. J. 5(4), 363-373 (2020); DOI: 10.25046/aj050442


Keywords: Two-way, Communication device, Deaf-mute, Full-duplex, RX-TX


Communication is a bilateral process, and being understood by the person you are talking to is a must. Without the ability to talk or hear, a person endures a severe handicap. Because hearing and speech are missing, many have sought to open new communication methods for such people through sign language. This bilateral communication device can be used by non-sign-language users and Deaf-mute users together in a single system. Shaped as an 8 in × 8 in box with a multi-touch capable display on each end, the device contains several microcontrollers and touch boards. Each touch board has twelve interactive capacitive touch and proximity electrode pads that react when tapped, producing quick-response phrases audible via speaker or earphone. The touch boards are equipped with an MP3 decoder, a MIDI synthesizer, a 3.5mm audio jack, and a 128MB microSD card. The touch screen modules mounted on top of the microcontrollers transfer data to and from each other in real time via the receiver-transmitter (RX-TX) full-duplex UART serial communication protocol. The device is lightweight, weighing about 3 lbs. The prototype was piloted in an academic institution of special education for Deaf-mute students. Participants were 75 normal and 75 Deaf-mute people aged between 18 and 30 years. The experimental results show an overall rating of 90.6% for the device. The device is designed to promote face-to-face socialization between Deaf-mute and normal users. Several third-party applications were used to validate the accuracy and reliability of the device through metrics of consistency, timing and delay, data transmission, touch response, and screen refresh rate.

Received: 07 May 2020, Accepted: 09 July 2020, Published Online: 28 July 2020

1. Introduction

This paper is an extension of work originally presented at the 2019 8th International Symposium on Next Generation Electronics (ISNE) [1]. Talking and hearing play a very important part in our daily lives. They help us finish tasks, from the simplest to the most complex. When deprived of hearing or speech, humans naturally rely on their other senses. Most people with these disabilities learn sign language out of the urge to communicate independently. Unfortunately, acquiring this skill has its own drawbacks, such as the inability to converse with hearing and speaking people who do not know sign language [2]. Communication is a bilateral process, and being understood by the person one is talking to is a must [3]. Without the ability to talk or hear, a person is handicapped both socially and emotionally. People with both conditions are commonly termed Deaf-mute. In the deaf community, the word deaf is spelled in two ways. The lowercase “d” deaf describes a person’s level of hearing in audiological terms and implies no association with other members of the deaf community. The capital “D” Deaf refers to culturally Deaf people who use sign language to communicate [4]. Research [5] indicates that Deaf people, especially Deaf children, have high rates of behavioral and emotional issues related to the communication methods available to them. Most people with such disabilities become introverted and resist social connection and face-to-face socialization. Being unable to express one’s thoughts can lead to depression, discomfort, and recurring frustration.

People rely on words and sounds from their environment to grasp what is happening. Another way to remedy this language barrier is to hire a sign language interpreter, but in practice this is not the optimal solution because an interpreter cannot be available 24/7. Not everyone around a Deaf-mute person can understand what they are saying or intending to express. Most Deaf-mute people limit themselves to simple tasks to avoid irritating others and themselves, and they normally struggle with typical day-to-day tasks. Given that hearing and speech are missing, many have tried to open new communication media for them, such as sign language.

These problems can be addressed by a bilateral communication device capable of sending and receiving text and audio responses in real time via a full-duplex serial communication protocol. The device is also equipped with an operating system that converts sign language into text, images, and audio for better communication between Deaf-mute and normal users [6], [7].

The main purpose of this research is to provide a setting in which Deaf-mute and normal users can communicate and chat face to face, in close proximity. A further purpose is to provide a simple and cost-effective solution that Deaf-mute and non-sign-language users can use together, simultaneously, in a single system.

2. Related Works

The Deaf community is not a monolithic group. It comprises diverse groups, as follows [8, 9]: (1) Hard-of-hearing people: they are neither fully deaf nor fully hearing and are also known as culturally marginal people [10]. They can obtain some useful linguistic information from speech. (2) Culturally deaf people: they might belong to deaf families and use sign language as their primary means of communication. Their speech clarity may be impaired. (3) Congenital or prelingual deaf people: they are deaf from birth or became deaf before learning to talk, and are not affiliated with Deaf culture. They might or might not use sign language. (4) Orally educated or postlingual deaf people: they were deafened in childhood but developed speaking skills. (5) Late-deafened adults: they have had the opportunity to adjust their communication techniques as their hearing loss progressed.

2.1 Sensor Module Technology Approach.

Sensors and touch screen technology can be integrated into a system to bridge the communication gap between Deaf-mute people and normal people, whether or not the latter know sign language. Sharma et al. used wearable sensor gloves to detect the hand gestures of sign language [11].

2.2 Visual Module Technology Approach.

Many vision-based interventions are used to recognize the sign languages of Deaf people. For example, Soltani et al. developed a gesture-based game for Deaf-mutes using Microsoft Kinect. The game recognizes gesture commands and converts them into text so that players can enjoy the interactive environment [12]. The Voice for the Mute (VOM) system takes fingerspelling as input and converts it into the corresponding speech [13]. Images of fingerspelling signs are captured by a camera. After noise removal and image processing, the signs are matched against a trained dataset and linked to the appropriate text, which is then converted into speech. Nagori and Malode [14] proposed a communication platform that extracts images from video and converts them into the corresponding speech. Sood and Mishra [15] presented a system that takes images of sign language as input and produces speech as output. The features used in vision-based approaches for speech processing are also used in various object recognition applications [16]-[22].

2.3 Product Design and Development Approach.

The device aims to improve the way Deaf-mute people communicate with normal people, and vice versa. To this end, a bilateral communication device intended to promote proximity and face-to-face socialization between the parties involved was developed [1]. Its objectives are:

- To design and develop a portable device that enables Deaf-mute and normal people to communicate via multiple modes, in a contraption designed for proximity and face-to-face communication.
- To establish a stable RX/TX full-duplex UART serial communication protocol between the microcontrollers and modules within the device.
- To construct sturdy packaging for the device, suitable for portability and use both indoors and outdoors.
- To test the system and device using third-party software (Terminal Monitor, Balabolka (TTS), Arduino IDE) for:
  - Accuracy in transmitting data
  - Consistency in transmitting data
  - Speed (Timing and Delay)
  - Screen Refresh Rates
  - Touch Responses
- To test the system and device using a five-point scale questionnaire given to 150 respondents (75 Deaf-mute and 75 normal people), covering:
  - Value-Added
  - Aesthetic Design
  - Hardware Components
  - Built-in Features
  - User-Friendliness
  - Reliability
  - Accuracy

2.4 Mobile Application Technology Approach.

Many new smartphones are furnished with advanced sensors, high-performance processors, and high-resolution cameras [23]. A real-time emergency assistant, “iHelp” [24], was proposed so that Deaf-mute people can report any kind of emergency. The user’s current location is obtained from the smartphone’s built-in GPS. Information about the emergency is sent to the management via SMS and then passed on to the closest suitable rescue units, so the user can be rescued. MonoVoix [25] is an Android application that acts as a sign language interpreter. It captures signs with the mobile phone camera and converts them into the corresponding speech. Sahaaya [26] is an Android application that lets Deaf-mute people communicate with normal people through sign language, using speech-to-sign and sign-to-speech technology. When a hearing person interacts with a Deaf-mute person, the app takes the speech signal as input and plays a corresponding sign language video that the Deaf-mute person can easily understand. Bragg et al. [27] proposed a sound detector app that detects alert sounds and notifies the Deaf-mute person by vibrating and showing a pop-up notification.

Health care access among Deaf people can be improved by providing sign-language-supported visual communication and by implementing communication technologies for healthcare professionals. Several technology-based approaches have been implemented to provide Deaf-mutes with easy-to-use services.

3. Materials and Methods

The Arduino Mega 2560 is a microcontroller board based on the ATmega2560. It has the following features:

- 54 digital input/output pins, of which 15 can be used as PWM outputs
- 16 analog inputs
- 4 UARTs (hardware serial ports)
- a 16MHz crystal oscillator
- a USB connection, a power jack, an ICSP header, and a reset button

It contains everything needed to support the microcontroller. To use it, connect it to a computer with a USB cable or power it with an AC-to-DC adapter or battery. The Mega 2560 board is compatible with most shields designed for the Arduino Uno.

Figure 1: Arduino Mega 2560 Microcontroller


Table 1: Arduino Mega 2560 Product Specification

| Parameter | Value |
|---|---|
| Microcontroller | ATmega2560 |
| Input Voltage (recommended) | 7-12V |
| Input Voltage (limit) | 6-20V |
| Digital I/O Pins | 54 (of which 15 provide PWM output) |
| Analog Input Pins | 16 |
| DC Current per I/O Pin | 20 mA |
| DC Current for 3.3V Pin | 50 mA |
| Flash Memory | 256 KB, of which 8 KB used by bootloader |
| SRAM | 8 KB |
| EEPROM | 4 KB |
| Clock Speed | 16 MHz |
| LED_BUILTIN | 13 |
| Length | 101.52 mm |
| Width | 53.3 mm |
| Weight | 37 g |

The Touch Board is a microcontroller board with dedicated capacitive touch and MP3 decoder ICs. It has a headphone socket, a micro SD card holder for file storage, and 12 capacitive touch electrodes. It is based on the ATmega32U4 and runs at 16MHz from 5V. It has a micro USB connector, a JST connector for an external lithium polymer (LiPo) cell, a power switch, and a reset button. It is similar to the Arduino Leonardo and can be programmed using the Arduino IDE. The ATmega32U4 can appear to a connected computer as a mouse or keyboard (HID), a serial port (CDC), or a USB MIDI device.
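The pad-to-phrase behavior can be illustrated with a host-side C++ sketch. It rests on two assumptions made for illustration only. First, the touch IC is taken to report a 12-bit status mask with one bit per electrode (E0-E11). Second, the twelve phrase tracks are taken to be named TRACK000.MP3 to TRACK011.MP3. The naming scheme and the function names `trackNameFor` and `newlyTouched` are hypothetical and are not the Touch Board library's API. Playback is triggered only on the rising edge of a touch, so holding a pad does not replay its phrase.

```cpp
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// The Touch Board exposes twelve capacitive electrodes, E0-E11.
constexpr int kNumElectrodes = 12;

// Hypothetical naming scheme (assumption): electrode i plays "TRACK%03d.MP3"
// from the microSD card, e.g. E0 -> TRACK000.MP3, E11 -> TRACK011.MP3.
std::string trackNameFor(int electrode) {
    char name[32];
    std::snprintf(name, sizeof(name), "TRACK%03d.MP3", electrode);
    return std::string(name);
}

// Assumed input: 12-bit touch-status masks where bit i set means electrode
// Ei is currently touched. Returns the electrodes that went from untouched
// to touched, so a pad held down triggers its phrase only once.
std::vector<int> newlyTouched(uint16_t previous, uint16_t current) {
    std::vector<int> pads;
    const unsigned rising = current & ~previous;  // bits set now but not before
    for (int i = 0; i < kNumElectrodes; ++i) {
        if (rising & (1u << i)) {
            pads.push_back(i);
        }
    }
    return pads;
}
```

On the device, each index returned by `newlyTouched` would be passed to whatever routine starts MP3 playback of `trackNameFor(index)`.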

Figure 2: Bare Conductive Touch Board


Table 2: Bare Conductive Touch Board Product Specification

| Parameter | Value |
|---|---|
| Microcontroller | Microchip ATmega32U4 |
| Touch IC | Resurgent Semiconductor MPR121 |
| MP3 Decoder IC | VLSI Solution VS1053b |
| Audio Output | 15mW into 32Ω via 3.5mm stereo socket |
| Removable Storage | Up to 32GB via micro SD card |
| Input Voltage | 3.0V DC to 5.5V DC |
| Operating Voltage | 5V DC |
| Max. Output Current (5V Rail) | 400mA (100mA at startup) |
| Max. Output Current (3.3V Rail) | 300mA |
| LiPo Cell Connector | 2-way JST PH series, pin 1 +ve, pin 2 -ve |
| LiPo Charge Current | 200mA |
| Capacitive Touch Electrodes | 12 (of which 8 can be configured as digital I/O) |
| Digital I/O Pins | 20 (of which 3 are used for the MPR121 and 5 are used for the VS1053b; the latter can be unlinked via solder blobs) |
| PWM Channels | 7 (shared with digital I/O pins) |
| Analogue Input Channels | 12 (shared with digital I/O pins) |
| Flash Memory | 32kB (ATmega32U4), of which 4kB used by bootloader |
| SRAM | 2.5kB (ATmega32U4) |
| EEPROM | 1kB (ATmega32U4) |
| Clock Speed | 16MHz (ATmega32U4), 12.288MHz (VS1053b) |
| DC Current per I/O Pin | 40mA sink and source (ATmega32U4), 12mA source / 1.2mA sink (MPR121) |
| Analogue Input Resistance | 100MΩ typical (ATmega32U4) |

Table 3: Bare Conductive Touch Board Input and Output Pins

| Pin(s) | Function |
|---|---|
| Touch Electrodes (E0-E11) | Connect to the MPR121 and provide capacitive touch or proximity sensing. |
| Serial (pins 0 (RX) and 1 (TX)) | Used to receive (RX) and transmit (TX) TTL serial data using the ATmega32U4 UART. |
| TWI (I2C) (pins 2 (SDA) and 3 (SCL)) | Data and clock pins used to communicate with the MPR121. |
| IRQ (pin 4) | Used to detect interrupt events from the MPR121. |
| SD-CS (pin 5) | Used to select the micro SD card on the SPI bus. |
| D-CS (pin 6) | Used to select data input on the VS1053b. |
| DREQ | Used to detect data request events from the VS1053b. |
| MP3-RST | Used to reset the VS1053b. |
| MP3-CS | Used to select the instruction input on the VS1053b. |
| MIDI IN (pin 10) | Used to pass MIDI data to the VS1053b and have it behave as a MIDI synthesizer. |
| Headphone Output (AGND, R, L) | Provide the headphone output from the VS1053b on 0.1” / 2.54mm pitch pads as an alternative to the 3.5mm socket. |
| External Interrupts (pins 0, 1, 2, 3, 7) | Configured to trigger an interrupt on a low value, a rising or falling edge, or a change in value. |

The TFT display has the following characteristics:

- a 2.8″ diagonal with a bright four-white-LED backlight
- 18-bit color (262,144 different shades)
- 240×320 pixels under individual pixel control, which is more resolution than a black-and-white 128×64 display

A resistive touchscreen is already attached and can detect finger presses anywhere on the screen. The shield includes an SPI touchscreen controller, so only one additional pin is needed for high-quality touch sensing. It also has a display controller with built-in RAM buffering, so almost no work is done by the microcontroller.

The shield needs fewer pins than the earlier v1 shield, which leaves pins free for more sensors, buttons, and LEDs. The pins it does use are:

- five SPI pins for the display
- one or two more for the touchscreen controller
- one for the microSD card from which images are read
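The sign images are read from the microSD card as .bmp files, so each pixel must be converted to the display's color format. A minimal sketch follows. It assumes uncompressed 24-bit BMP files, which are stored bottom-up in blue-green-red byte order with each row padded to a multiple of 4 bytes. It also assumes a display driver that accepts 16-bit RGB565 colors, as common Arduino TFT libraries do. The function names are hypothetical and are not taken from the device's firmware.

```cpp
#include <cstdint>

// Pack 8-bit-per-channel color into 16-bit RGB565
// (5 bits red, 6 bits green, 5 bits blue), dropping the low bits.
uint16_t toRgb565(uint8_t r, uint8_t g, uint8_t b) {
    return static_cast<uint16_t>(((r & 0xF8) << 8) | ((g & 0xFC) << 3) | (b >> 3));
}

// Bytes per pixel row in an uncompressed 24-bit BMP:
// 3 bytes per pixel, rounded up to a multiple of 4.
uint32_t bmpRowStride(uint32_t width) {
    return (width * 3 + 3) & ~3u;
}

// Byte offset of pixel (x, y) in a bottom-up 24-bit BMP, where y = 0 is the
// top row on screen and dataOffset is the start of the pixel array.
// Requires x < width and y < height. The three bytes at this offset are
// blue, green, red, in that order.
uint32_t bmpPixelOffset(uint32_t dataOffset, uint32_t width, uint32_t height,
                        uint32_t x, uint32_t y) {
    return dataOffset + (height - 1 - y) * bmpRowStride(width) + x * 3;
}
```

For a full 240×320 image, each row then occupies 720 bytes with no padding, because 240 × 3 is already a multiple of 4.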

Figure 3: TFT Touch Screen Display (2.8” diagonal)


Table 4: TFT Screen Pins

| Pin | Function |
|---|---|
| Digital #13 or ICSP SCLK | This pin is used for the TFT, microSD and resistive touch screen data clock. |
| Digital #12 or ICSP MISO | This pin is used for the TFT, microSD and resistive touch screen data. |
| Digital #11 or ICSP MOSI | This pin is used for the TFT, microSD and resistive touch screen data. |
| Digital #10 | This is the TFT CS (chip select) pin. It is used by the Arduino to tell the TFT that it wants to send/receive data from the TFT only. |
| Digital #9 | This is the TFT DC (data/command select) pin. It is used by the Arduino to tell the TFT whether it wants to send data or commands. |

Table 5: Resistive Touch Controller Pins

| Pin | Function |
|---|---|
| Digital #13 or ICSP SCLK | This pin is used for the TFT, microSD and resistive touch screen data clock. |
| Digital #12 or ICSP MISO | This pin is used for the TFT, microSD and resistive touch screen data. |
| Digital #11 or ICSP MOSI | This pin is used for the TFT, microSD and resistive touch screen data. |
| Digital #8 | This is the STMPE610 resistive touch CS (chip select) pin. It is used by the Arduino to tell the resistive controller that it wants to send/receive data from the STMPE610 only. |

Table 6: Capacitive Touch Pins

| Pin | Function |
|---|---|
| SDA | This is the I2C data pin used by the FT6206 capacitive touch controller chip. It can be shared with other I2C devices. On the Arduino Uno this pin is also known as Analog 4. |
| SCL | This is the I2C clock pin used by the FT6206 capacitive touch controller chip. It can be shared with other I2C devices. On the Arduino Uno this pin is also known as Analog 5. |

Table 7: MicroSD Card Pins

| Pin | Function |
|---|---|
| Digital #13 or ICSP SCLK | This pin is used for the TFT, microSD and resistive touch screen data clock. |
| Digital #12 or ICSP MISO | This pin is used for the TFT, microSD and resistive touch screen data. |
| Digital #11 or ICSP MOSI | This pin is used for the TFT, microSD and resistive touch screen data. |
| Digital #4 | This is the microSD CS (chip select) pin. It is used by the Arduino to tell the microSD card that it wants to send/receive data from the microSD card only. |

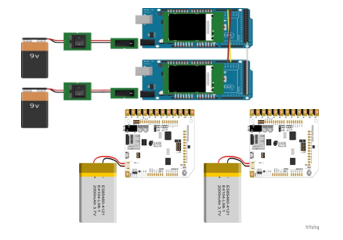

3.1 Power Supply Design

The two microcontrollers used in the study are the Bare Conductive Touch Board and the Arduino Mega 2560 Rev3. As shown in Figure 4, both are powered directly. The Arduino Mega 2560 Rev3 is powered by a 9V battery with a power snap for easy insertion. The Touch Board runs on a 3.7V 3000mAh lithium polymer (LiPo) battery inserted directly into the board’s LiPo socket. Both batteries are rechargeable.

Each microcontroller has its own designated power supply so that each receives sufficient power independently of the other.
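The paper does not report the current drawn by each board, so battery runtime can only be estimated. A first-order estimate divides battery capacity by average load current. The sketch below adds an optional derating factor for capacity that is not usable in practice. Any load values passed to it are hypothetical inputs, not measurements from the device, and the function name is illustrative.

```cpp
// First-order battery runtime estimate in hours:
//   runtime = capacity (mAh) * usableFraction / average load (mA).
// usableFraction = 1.0 models an ideal battery; lower values are an assumed
// derating for capacity lost to cut-off voltage, ageing, temperature, etc.
// Returns a negative value to flag an invalid (non-positive) load.
double estimatedRuntimeHours(double capacityMah, double averageLoadMa,
                             double usableFraction = 1.0) {
    if (averageLoadMa <= 0.0) {
        return -1.0;
    }
    return capacityMah * usableFraction / averageLoadMa;
}
```

For example, `estimatedRuntimeHours(3000.0, 300.0)` gives 10 hours for the 3000mAh LiPo cell under an assumed 300mA average load. Real runtime will be lower once regulator losses and peak currents are taken into account.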

Figure 4: The LCD touch shield mounted on the Arduino Mega 2560 Rev3 with a 9V power supply (top), and the Bare Conductive Touch Board powered through a 3.7V, 3000mAh LiPo battery (bottom).


3.2 Software Application – Terminal Emulation Program

The Terminal Emulation Program is a simple serial port (COM) monitor and a very useful tool for debugging serial communication applications.

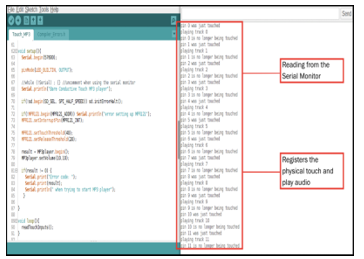

3.3 Software Application – Arduino Integrated Development Environment (IDE)

Figure 6 shows the real-time process happening inside the touch boards. Readings in the Arduino IDE 1.6.7 serial monitor indicate an accurate response with no noticeable delay. As soon as a board registers a physical touch, the pre-programmed audio response is played. The Arduino Integrated Development Environment is a cross-platform application for writing programs in C and C++. It is used to write and upload programs to Arduino-compatible boards and, with the help of third-party cores, to other vendors’ development boards. It contains a text editor for writing code, a message area, a text console, a toolbar with buttons for common functions, and a series of menus.

3.4 Hardware Design

The connections indicated in Figure 7 are the I/O pins that line up when a touch screen is mounted on its board. These are the exact pinouts through which the touch screens must be connected to the microcontrollers to produce the desired output. Basic RX-TX communication was used to link the two pairs, as shown in Figure 7.
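The time budget of the RX-TX link can be reasoned about from the UART frame format. The paper does not state the baud rate or frame format, so the sketch below assumes the common 8N1 format: 1 start bit, 8 data bits, no parity, and 1 stop bit, giving 10 bits per byte. At an assumed 9600 baud, one byte then takes about 1.04 ms on the wire. The function names are hypothetical.

```cpp
#include <cstddef>

// Bits on the wire for one UART character: 1 start bit + data bits +
// optional parity bit + stop bits. The 8N1 default gives 10 bits per byte.
unsigned bitsPerCharacter(unsigned dataBits = 8, bool parity = false,
                          unsigned stopBits = 1) {
    return 1 + dataBits + (parity ? 1 : 0) + stopBits;
}

// Minimum time (ms) to shift numBytes out of one TX pin at the given baud
// rate, assuming back-to-back characters and no software latency.
double transmitTimeMs(std::size_t numBytes, unsigned long baud,
                      unsigned dataBits = 8, bool parity = false,
                      unsigned stopBits = 1) {
    const double bits = static_cast<double>(numBytes) *
                        bitsPerCharacter(dataBits, parity, stopBits);
    return bits * 1000.0 / static_cast<double>(baud);
}
```

In a full-duplex link each direction has its own TX line, so this time applies to each direction independently. Processing time on either board is not included.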

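Arduino programs are written in the Arduino IDE in a C++-based language, which is neither plain C nor conventional embedded C. Before compiling, the IDE performs light preprocessing of the sketch, such as adding the Arduino.h include and generating function prototypes. It then passes the code to the avr-g++ C/C++ compiler.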

Figure 5: Actual data acquired using the Terminal Emulation Program, shown in hexadecimal and binary format in real time. The program was used to verify the accuracy and reliability of data transmitted from one shield to the other and vice versa.


Figure 6: Each tap on an electrode pad plays the corresponding preloaded phrase track. There are twelve tracks in total, one for each pad.


3.5 Software Application – Balabolka Text-to-Speech (TTS)

One of the twelve preloaded phrase tracks is “Hello! How are you? I am a Bilateral Communication Device for Hearing and speech Impaired. A communication device that pertains to people suffering from hearing and speaking disabilities most referred to as deaf, mute, and deaf-mute individuals. I am capable of being used both indoor and outdoor environment. I am dedicated to bridge the language gap between the impaired subjects to those of the normal, talking, non-sign language people. Have a good day.”

Figure 7: Schematic diagram of the touch screens mounted on top of the microcontrollers. A separate microSD card is inserted in each screen’s slot to store the .bmp image files.


Figure 8: Actual data seen in the third-party program Balabolka. Shown is the raw text that is exported in .mp3 format to the micro SD card, which is then inserted into the Touch Board’s compartment.


4. Scope and Delimitation

The system is composed of two microcontrollers and two touch boards. It can receive and send sign language images in .bmp format via the touch screens through the microcontrollers’ RX-TX serial communication. The system comprises four microcontrollers, one electric (conductive) paint, two earphones, two rechargeable 9V batteries, two rechargeable 3.7V 3000mAh lithium-ion batteries, and a twin-barrel battery charger. It provides (1) a surface with twelve common words and/or phrases selected from the 1000 most commonly used phrases, and (2) two touch screens preloaded with the standard English alphabet and phrases for non-sign-language users. Deaf-mute users have two options. They can tap the signed image of their choice on the surface, whereupon the Touch Board reads the message aloud via the built-in speaker. Alternatively, they can communicate using the touch screens, as in texting.

The bilateral communication device comprises the following processes and components: (1) two microcontrollers and two touch boards with a built-in Li-Po and Li-ion battery charger, a standard audio jack for speakers and earphones, and twelve electrode solder pads that produce audio when touched or tapped; (2) Innovative User Interface: two microcontrollers with two mounted screen display modules, plus the touch boards’ solder pads; (3) Stable UART Communication: an RX-TX serial connection is used to send and receive data from one touch screen to the other; (4) On-Top Guide: a surface with twelve hand sign images and their corresponding translations; (5) Text-to-Speech Capable: a third-party text-to-speech (TTS) program called Balabolka converts phrases to audio, which is exported in .mp3 format to the Touch Board’s SD card; (6) Lightweight and Sturdy Casing: lightweight packaging made from stainless alloy forms a rigid box for indoor and outdoor use; (7) Integrated Accessories: two earphones, one attached to each touch board for the audio feature, and one twin-barrel battery charger; (8) Long-lasting Batteries: two rechargeable 9V 220mAh batteries for the microcontrollers and two rechargeable 3.7V 3000mAh Li-ion batteries; (9) Convenient On/Off Controls: two separate rocker switches for the touch screens and two separate built-in on/off switches on the touch boards; (10) Not Water-Resistant: exposure to water could damage the device.

Figure 9: External components (top) and internal components (bottom) of the device, arranged to balance weight distribution and keep circuit paths as short as possible.


5. Perspective Renderings of the Device

The device has an 8 × 8 inch aluminum casing with four compartments of different sizes. The two 2.8-inch touch shields occupy thirty percent (30%) of the space, the two touch boards ten percent (10%), and the display of hand sign images with English phrase translations sixty percent (60%). There are also four (4) separate power switches, one for each microcontroller and touch board. The device weighs approximately 2.8 to 3.0 lbs.

6. Proposed Methodology

This project aims to resolve the distress caused by a verbal barrier that prevents Deaf-mute people from communicating freely and easily with people who do not know sign language, and vice versa. The communication device consists of two major parts:

(1) a surface with two Bare Conductive Touch Boards preloaded with twelve phrases and words, displayed alongside the corresponding hand sign images and their English translations; and
(2) two TFT touch shields, each mounted on an Arduino Mega 2560 Rev3 and preloaded with the standard English alphabet and American Sign Language symbols.

Deaf-mute users have two options: to tap the desired electrode pads on the touch board, or to communicate using the TFT touch shield like a multi-touch screen. To this end, we proposed and developed a system that converts American Sign Language (ASL) hand-sign images into their English translations and vice versa. The system can also produce speech or audio responses via interactive capacitive touch and proximity electrode pads. The device promotes two-way communication between Deaf-mute and normal people in close proximity. Here, a normal person is one who has no hearing or vocal impairment or disability. The main features of the prototype’s operating system are listed below.

Figure 10: Actual set-up showing how the Normal User and the Deaf-mute User communicate with one another using the device and its peripherals.

6.1 Normal to Deaf-Mute Person Communication.

This module takes the normal person’s text message as input and outputs a .bmp image file that displays sign language images for the Deaf-mute person. Each image file is tagged and indexed. The steps of normal to Deaf-mute person communication are as follows:

- The application takes the normal person’s text as input.

- The application converts the text message into an image using the text-to-image conversion program.

- The program matches the text against the image tags and index associated with each file and displays the corresponding sign for the Deaf-mute person.
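The tag-and-index lookup in the steps above can be sketched as a dictionary from normalized words to image files. The paper does not specify how the tags are stored. The index contents, file names such as HELLO.BMP, and the function names are therefore hypothetical. Words without a matching tag are simply skipped in this sketch.

```cpp
#include <algorithm>
#include <cctype>
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical index: lowercase word tag -> .bmp file on the display's microSD card.
using SignIndex = std::map<std::string, std::string>;

// Lowercase a word so that "Hello" and "hello" match the same tag.
std::string normalizeWord(std::string word) {
    std::transform(word.begin(), word.end(), word.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return word;
}

// Look up one word; returns an empty string when no tag matches.
std::string findSignImage(const SignIndex& index, const std::string& word) {
    auto it = index.find(normalizeWord(word));
    return it == index.end() ? std::string() : it->second;
}

// Split a typed message on whitespace and collect, in message order, the
// image file for each word that has a tag. Unmatched words are skipped.
std::vector<std::string> messageToImages(const SignIndex& index, const std::string& message) {
    std::vector<std::string> files;
    std::istringstream words(message);
    std::string word;
    while (words >> word) {
        std::string file = findSignImage(index, word);
        if (!file.empty()) {
            files.push_back(file);
        }
    }
    return files;
}
```

Punctuation attached to a word (for example "you!") would prevent a match here. A fuller version would strip it before the lookup.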

6.2 Deaf-Mute to Normal Person Communication.

Not everyone knows sign language. Deaf-mute users therefore have two options. They can tap the desired electrode pads on the touch board, which are preloaded with the most commonly used English words and phrases. Alternatively, they can use the TFT touch shield like a multi-touch screen, swiping and tapping through hand-sign and illustrative images of English words that are listed in alphabetical order by default. A search option is also integrated into the system to find images on the screen faster.
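The search option is not specified in detail. Because the images are listed in alphabetical order by default, one plausible implementation works in two steps. A binary search first finds the first label not less than the typed prefix. A scan then continues while the labels still share that prefix. The sketch below assumes lowercase labels kept in sorted order, and the function name is hypothetical.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Return every label that starts with `prefix`. `sortedLabels` must be in
// ascending (alphabetical) order, matching the default screen ordering;
// all labels sharing the prefix are then contiguous.
std::vector<std::string> searchByPrefix(const std::vector<std::string>& sortedLabels,
                                        const std::string& prefix) {
    std::vector<std::string> matches;
    // First label that is not less than the prefix (binary search).
    auto it = std::lower_bound(sortedLabels.begin(), sortedLabels.end(), prefix);
    for (; it != sortedLabels.end(); ++it) {
        // Stop at the first label whose leading characters differ from the prefix.
        if (it->compare(0, prefix.size(), prefix) != 0) {
            break;
        }
        matches.push_back(*it);
    }
    return matches;
}
```

The binary search keeps each lookup fast even as more images are added to the card. Case-insensitive search would require the labels and the typed prefix to be normalized in the same way.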

6.3 System Flowchart of the Operating System.

The device is a straightforward bilateral communication device whose simple yet sharp responses are made possible by the layered process it follows. As shown in Figure 11, the flow proceeds in four steps:

1. tapping (choosing the words);
2. chatting (forming syntax and semantics);
3. sending or receiving (decision-making);
4. choosing between two alternatives: to ignore (no) or to reply (yes).

Ignoring ends the system flow, while replying restarts the entire process, creating a loop from tapping to responding until the user ends it. The reply stage involves the sending and receiving process. This process converts a text message into a picture message (normal user to Deaf-mute) or a picture message into a text message (Deaf-mute to normal user).
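The loop in the flowchart can be expressed as a small state machine. The stage names below are our own labels for the flowchart steps (tap, chat, send/receive, decide). The scripted list of replies stands in for the user's yes/no choice. This is an illustration, not the device's firmware.

```cpp
#include <cstddef>
#include <vector>

// Stages of the flowchart (labels chosen for this sketch).
enum class Stage { Tap, Chat, SendReceive, Decide, End };

// Advance one step. `wantsReply` is only consulted at the Decide stage:
// replying loops back to tapping, ignoring ends the session.
Stage nextStage(Stage current, bool wantsReply) {
    switch (current) {
        case Stage::Tap:         return Stage::Chat;
        case Stage::Chat:        return Stage::SendReceive;
        case Stage::SendReceive: return Stage::Decide;
        case Stage::Decide:      return wantsReply ? Stage::Tap : Stage::End;
        case Stage::End:         return Stage::End;
    }
    return Stage::End;
}

// Run the loop with a scripted list of reply decisions and return how many
// tap-to-decision rounds were completed. A missing decision counts as "ignore".
int runSession(const std::vector<bool>& replies) {
    Stage stage = Stage::Tap;
    int rounds = 0;
    std::size_t next = 0;
    while (stage != Stage::End) {
        bool reply = false;
        if (stage == Stage::Decide) {
            ++rounds;
            reply = next < replies.size() && replies[next++];
        }
        stage = nextStage(stage, reply);
    }
    return rounds;
}
```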

Figure 11: System flowchart showing the internal logic of the entire system and providing the backbone of how the project works, from start to end.


6.4 Framing.

The framing process is used to split the pre-emphasized audio files into short segments. The Bare Conductive Touch Boards have capacitive and proximity electrodes that are responsive to touch, and each board stores its audio files on a microSD card. Each audio file is represented by frames of N samples, with an interframe distance (frameshift) of M samples (M < N). In the program, the frame size is N = 256 samples and the frameshift is M = 100 samples. The frame size and frameshift in milliseconds are calculated as follows:

$$T_{\text{frame}} = \frac{N}{f_s} \times 1000, \qquad T_{\text{shift}} = \frac{M}{f_s} \times 1000$$

where $f_s$ is the sampling rate of the audio in Hz.
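The sampling rate of the stored audio is not given, so an assumed 8 kHz rate is used here purely for illustration. At that rate, N = 256 corresponds to 32 ms and M = 100 to 12.5 ms. The sketch computes these durations and the overlapping frame boundaries for a signal of a given length. It drops any trailing partial frame, although zero-padding the last frame is an equally common alternative. The function names are hypothetical.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Duration in milliseconds of `samples` samples at sampling rate `fs` (Hz).
double samplesToMs(std::size_t samples, double fs) {
    return static_cast<double>(samples) * 1000.0 / fs;
}

// Split a signal of `length` samples into frames of N samples whose starts
// are M samples apart (M < N, so consecutive frames overlap by N - M).
// Returns [start, end) index pairs; a trailing partial frame is dropped.
std::vector<std::pair<std::size_t, std::size_t>> frameBounds(std::size_t length,
                                                             std::size_t N,
                                                             std::size_t M) {
    std::vector<std::pair<std::size_t, std::size_t>> frames;
    if (M == 0) {
        return frames;  // a zero frameshift would never advance
    }
    for (std::size_t start = 0; start + N <= length; start += M) {
        frames.emplace_back(start, start + N);
    }
    return frames;
}
```

With N = 256 and M = 100, a 456-sample signal yields three frames starting at samples 0, 100, and 200.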

6.5 Statistical Treatment of Data.


The weighted mean is used to summarize the given data set. It is a kind of average in which some data points contribute more weight to the final mean than others, instead of each data point contributing equally:

$$\bar{x} = \frac{\sum w x}{\sum w}$$

where,

Σ = the sum of

w = the weights

x = the values

If all the weights are equal, the weighted mean equals the arithmetic mean.
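The sketch below applies the weighted-mean formula to five-point-scale data. It treats each scale value x (5 down to 1) as weighted by w, the number of respondents who chose it. The example counts in any call are hypothetical, not the survey's data. The paper does not state how the overall 90.6% rating was obtained. `percentOfMaximum` shows only one possible convention (mean divided by the top score) and is an assumption.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Weighted mean: sum(w * x) / sum(w).
double weightedMean(const std::vector<double>& values, const std::vector<double>& weights) {
    if (values.empty() || values.size() != weights.size()) {
        throw std::invalid_argument("values and weights must be non-empty and equal in length");
    }
    double weightedSum = 0.0;
    double weightTotal = 0.0;
    for (std::size_t i = 0; i < values.size(); ++i) {
        weightedSum += weights[i] * values[i];
        weightTotal += weights[i];
    }
    if (weightTotal == 0.0) {
        throw std::invalid_argument("weights must not sum to zero");
    }
    return weightedSum / weightTotal;
}

// Hypothetical convention: express a mean rating as a percentage of the top score.
double percentOfMaximum(double mean, double maxScore = 5.0) {
    return mean * 100.0 / maxScore;
}
```

Suppose three respondents chose 5, one chose 4, and one chose 1. Then `weightedMean({5, 4, 3, 2, 1}, {3, 1, 0, 0, 1})` is 20 / 5 = 4.0, which this convention would report as 80%.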

7. Experimental Results and Discussion

7.1 Experimental Setup

This communication device consists of two major parts: (1) a surface consisting of two Bare Conductive Touch Boards, each preloaded with twelve phrases and words whose corresponding hand sign images are displayed with their English translations, and (2) two TFT Touch Shields, each mounted on an Arduino Mega 2560 Rev3 and preloaded with the standard English alphabet and American Sign Language symbols. Deaf-mute users have two options: tap the desired electrode pads on the touch board, or communicate through the TFT touch shield as a multi-touch screen. The device is powered by two separate power supplies: a rechargeable 9 V DC supply powers the Arduino microcontrollers, and a separate rechargeable 3.7 V, 3000 mAh LiPo battery powers the touch boards. An adequate power supply is essential for all of the device's functions and features to work.

7.2 Message Conversion and Transmission Phase

Figure 12 shows the working operating system of the bilateral communication device: (a) Write/Text Message Mode allows the normal user to type English words and send them to the Deaf-mute user; (b) Picture Message Mode converts the previously sent text message into its hand-sign image translation; (c) Picture Message Mode allows the Deaf-mute user to select sign language image sets and send them to the normal user; and (d) Write/Text Message Mode converts the previously sent picture message into its English word translation. The operating system indexes each image stored on the memory card of the touch screen displays and calls the corresponding .bmp image file once its matching word is chosen. The same protocol works the other way around, from picture messages to text messages.
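The indexed lookup described above can be sketched as a pair of dictionaries. Everything here is an assumption for illustration: the phrase list, the uppercase .bmp file names, the two-word phrase limit, and the letter-by-letter fallback for words without a dedicated sign image (the TFT shields are preloaded with the English alphabet and ASL symbols, but this section does not say how unknown words are handled).

```python
# Hypothetical index of sign images stored on the display's memory card.
PHRASE_IMAGES = {
    "hello": "HELLO.BMP",
    "all done": "ALLDONE.BMP",
    "thank you": "THANKYOU.BMP",
}
# Hypothetical fallback: one image per letter of the English alphabet.
LETTER_IMAGES = {ch: ch.upper() + ".BMP" for ch in "abcdefghijklmnopqrstuvwxyz"}
# Reverse index used for picture -> text conversion.
IMAGE_LABELS = {f: label for label, f in {**PHRASE_IMAGES, **LETTER_IMAGES}.items()}


def text_to_pictures(message, max_phrase_words=2):
    """Convert a typed message into the list of .bmp files to display."""
    words = [w for w in (t.strip(".,!?") for t in message.lower().split()) if w]
    files = []
    i = 0
    while i < len(words):
        # Prefer the longest indexed phrase that starts at word i.
        for size in range(min(max_phrase_words, len(words) - i), 0, -1):
            phrase = " ".join(words[i:i + size])
            if phrase in PHRASE_IMAGES:
                files.append(PHRASE_IMAGES[phrase])
                i += size
                break
        else:
            # No sign image for this word: spell it with letter images.
            files.extend(LETTER_IMAGES[ch] for ch in words[i] if ch in LETTER_IMAGES)
            i += 1
    return files


def pictures_to_text(files):
    """Convert a sequence of selected .bmp files back into English text."""
    return " ".join(IMAGE_LABELS[f] for f in files)
```

For example, `text_to_pictures("Hello all done")` returns `["HELLO.BMP", "ALLDONE.BMP"]`, and `pictures_to_text(["ALLDONE.BMP"])` returns `"all done"`, mirroring the “hello” and “all done” examples in Section 7.4.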

7.3 Qualitative Feedback

The researchers formalized a questionnaire survey to evaluate the overall performance of the device against several criteria. The respondents comprised fifty percent (50%) non-sign-language users and fifty percent (50%) Deaf-mute, all aged 18 to 30 years. The researcher divided the population into strata based on these participant groups: there are two strata, one for non-sign-language users and one for Deaf-mute. Using this information, a disproportionate stratified sample was then drawn. The ratings are based on the following parameters: (1) Value Added: the device is advantageous to speech- and hearing-impaired people; (2) Aesthetic Design: the packaging of the device proves to be stable and sturdy; (3) Hardware Components: the device's hardware is properly placed and well organized; (4) Built-in Features: the device has innovative user interfaces, such as the touch shield, that make it more interesting; (5) User-Friendliness: the device is easy to use, very convenient, and innovative; (6) Reliability: the data sent and received by the device are consistent and correct; and (7) Accuracy: the data sent and received by the device are exact and true to what was intended.
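Disproportionate stratified sampling draws a fixed number of participants from each stratum regardless of how large that stratum is. A minimal sketch using Python's standard `random` module; the function name, the sampling-frame lists, and their sizes are hypothetical, and only the 75-per-group sample size comes from the study.

```python
import random


def stratified_sample(strata, per_stratum, seed=None):
    """Draw per_stratum[name] members at random, without replacement, from each stratum.

    The per-stratum sizes are fixed by the caller rather than proportional to
    stratum size, which makes the design disproportionate when strata differ in size.
    """
    rng = random.Random(seed)
    return {name: rng.sample(members, per_stratum[name])
            for name, members in strata.items()}


# Hypothetical sampling frames; only the 75-per-group sample size comes from the study.
population = {
    "non-sign-language users": [f"N{i:03d}" for i in range(300)],
    "deaf-mute": [f"D{i:03d}" for i in range(120)],
}
sample = stratified_sample(
    population, {"non-sign-language users": 75, "deaf-mute": 75}, seed=2020
)
print({group: len(members) for group, members in sample.items()})
```

Sampling equal numbers from unequal groups makes the pairing of one normal user with one Deaf-mute user straightforward, at the cost of over-representing the smaller group relative to its population share.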

7.4 Application Interface

At initial bootup, the screens on the two ends of the device differ from each other, and normal users and Deaf-mute users each have a designated position. The Deaf-mute user's display contains a picture message tab showing sign language images in grid view, each with its English translation at the bottom. These images can be a word, a letter, or a phrase. They are arranged alphabetically, and a search bar is provided for faster image searches. Meanwhile, the normal user's display contains a standard QWERTY keyboard layout. Both screens have a Send button, which initiates the conversion and transmission of data; the process completes once the data is received at the other end.
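Because the sign images are kept in alphabetical order, the search bar can find every label that starts with the typed text by binary search on the sorted list. The `SignCatalog` class and its labels below are a hypothetical sketch, not the device's firmware, and prefix matching is an assumed search behavior.

```python
import bisect


class SignCatalog:
    """Alphabetically sorted sign-image labels with prefix search."""

    def __init__(self, labels):
        # Sort case-insensitively, matching the alphabetical grid view.
        self.labels = sorted(labels, key=str.lower)
        self.keys = [label.lower() for label in self.labels]

    def search(self, query):
        """Return every label that starts with `query` (case-insensitive)."""
        q = query.lower()
        # All keys sharing the prefix q form one contiguous run starting here.
        start = bisect.bisect_left(self.keys, q)
        end = start
        while end < len(self.keys) and self.keys[end].startswith(q):
            end += 1
        return self.labels[start:end]


# Hypothetical labels; the device's own image set lives on the display's memory card.
catalog = SignCatalog(["hello", "all done", "Thank you", "help", "home", "A", "B"])
print(catalog.search("he"))  # → ['hello', 'help']
```

Finding the start of the matching run costs O(log n) comparisons, which keeps the search responsive as the image set grows.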

In example (a), the message contains the word “hello”. The normal user taps “Send Message”, and the message is sent and converted into the corresponding hand sign images on the second TFT Touch Shield for the Deaf-mute user. The previously sent word “hello” is thus displayed as hand sign images, as shown in (b).

In example (c), the Deaf-mute user taps the image labeled “all done”; this is how the Deaf-mute user communicates with the normal user. The “all done” image is then received on the normal user's TFT Touch Shield in English text form, as shown in (d).

7.5 Questionnaire Design

The questionnaire uses the rating scale in Table 8, whose weighted-mean ranges are interpreted as follows: Excellent (4.51-5.00); Very Good (3.51-4.50); Average (2.51-3.50); Fair (1.51-2.50); Poor (1.00-1.50); and Very Poor (0.00-0.99). Likert surveys are quick, efficient, and inexpensive methods of data collection; they are highly versatile and can be sent out fast. In answering the questionnaire, the respondents were grouped in pairs of one normal user and one Deaf-mute user, for a total of 75 pairs.

The respondents rated the device based on the following criteria:

a. Value Added

b. Aesthetic Design

c. Hardware Components

d. Built-in Features

e. User-Friendliness

f. Reliability

g. Accuracy

The test cases involved:

a. Accuracy in transmitting data (sending and receiving back and forth 10 times)

b. Consistency in transmitting data (sending and receiving back and forth 10 times)

c. Speed (timing and delay in seconds)

d. Screen Refresh and Transition Rates (min. 2 seconds)

e. Touch Responsiveness (min. 2 seconds)

- User Experience (easy-to-use)

- User Interface (easy-to-learn)
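The accuracy, consistency, and speed test cases can be scripted as repeated round trips. This sketch assumes a hypothetical `transmit` callable that delivers a message to the other end and returns what arrived; on the real device that role would be played by the UART link between the two Arduino boards. Accuracy is taken here as the fraction of round trips that return the original message unchanged, and consistency as every trial of a message producing the same received value; both definitions are assumptions.

```python
import time


def round_trip_trials(transmit, messages, trials=10):
    """Send each message to the other end and back `trials` times.

    `transmit(message)` delivers a message across the link and returns what
    the other end received. Returns (accuracy, consistent, mean_delay_s).
    """
    passed = 0
    runs = 0
    delays = []
    received_values = {}
    for message in messages:
        for _ in range(trials):
            start = time.perf_counter()
            received = transmit(message)    # normal user -> Deaf-mute side
            returned = transmit(received)   # Deaf-mute side -> normal user
            delays.append(time.perf_counter() - start)
            runs += 1
            if received == message and returned == message:
                passed += 1
            received_values.setdefault(message, set()).add(received)
    accuracy = passed / runs if runs else 0.0
    # Consistent when every trial of a message produced the same received value.
    consistent = all(len(values) == 1 for values in received_values.values())
    mean_delay = sum(delays) / len(delays) if delays else 0.0
    return accuracy, consistent, mean_delay


# Hypothetical in-memory link that delivers every message unchanged.
accuracy, consistent, delay = round_trip_trials(lambda m: m, ["hello", "all done"])
print(accuracy, consistent)  # → 1.0 True
```

With an in-memory link the delay figure measures only Python overhead, so meaningful timing and delay values require running the same loop over the physical link.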

Table 8: Respondents’ Scale

| Scale | Weighted Mean Range | Interpretation |
|---|---|---|
| 5 | 4.51-5.00 | Excellent |
| 4 | 3.51-4.50 | Very Good |
| 3 | 2.51-3.50 | Average |
| 2 | 1.51-2.50 | Fair |
| 1 | 1.00-1.50 | Poor |
| 0 | 0.00-0.99 | Very Poor |
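The ranges in Table 8 can be applied mechanically to any weighted mean. A minimal sketch; the `interpret` function is hypothetical and rounds the mean to two decimals first so that every value falls into exactly one interval.

```python
# Lower bound of each weighted-mean interval in Table 8, highest first.
SCALE = [
    (4.51, "Excellent"),
    (3.51, "Very Good"),
    (2.51, "Average"),
    (1.51, "Fair"),
    (1.00, "Poor"),
    (0.00, "Very Poor"),
]


def interpret(weighted_mean):
    """Return the Table 8 interpretation of a weighted mean between 0 and 5."""
    if not 0.0 <= weighted_mean <= 5.0:
        raise ValueError("weighted mean must lie between 0.00 and 5.00")
    value = round(weighted_mean, 2)  # the table's ranges use two decimals
    for lower_bound, label in SCALE:
        if value >= lower_bound:
            return label
    return SCALE[-1][1]


for mean in (4.80, 4.33, 4.53):
    print(mean, interpret(mean))
```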

Table 9: Tallied Results based on the Respondents' Scale (users' feedback: number of respondents giving each rating)

| Metrics | 5 | 4 | 3 | 2 | 1 | 0 | Weighted Mean | Interpretation |
|---|---|---|---|---|---|---|---|---|
| Value Added | 130 | 10 | 10 | 0 | 0 | 0 | 4.80 | Excellent |
| Aesthetic Design | 50 | 100 | 0 | 0 | 0 | 0 | 4.33 | Very Good |
| Hardware Components | 70 | 80 | 0 | 0 | 0 | 0 | 4.47 | Very Good |
| Built-in Features | 90 | 40 | 20 | 0 | 0 | 0 | 4.47 | Very Good |
| User-Friendliness | 100 | 40 | 0 | 10 | 0 | 0 | 4.53 | Excellent |
| Reliability | 100 | 30 | 20 | 0 | 0 | 0 | 4.53 | Excellent |
| Accuracy | 110 | 20 | 20 | 0 | 0 | 0 | 4.60 | Excellent |
| Overall Rating | | | | | | | 4.53 | Excellent |
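The weighted means in Table 9 can be reproduced from the tallies. This sketch assigns each metric's respondent counts to ratings 5 down to 0 (for example, Value Added: 130 fives, 10 fours, 10 threes), the assignment that reproduces the reported means, and rounds each mean to two decimals as the table does. The names `TALLIES` and `weighted_mean` are illustrative.

```python
# Respondent counts per rating (5, 4, 3, 2, 1, 0) for each metric, from Table 9.
TALLIES = {
    "Value Added":         [130, 10, 10, 0, 0, 0],
    "Aesthetic Design":    [50, 100, 0, 0, 0, 0],
    "Hardware Components": [70, 80, 0, 0, 0, 0],
    "Built-in Features":   [90, 40, 20, 0, 0, 0],
    "User-Friendliness":   [100, 40, 0, 10, 0, 0],
    "Reliability":         [100, 30, 20, 0, 0, 0],
    "Accuracy":            [110, 20, 20, 0, 0, 0],
}
RATINGS = [5, 4, 3, 2, 1, 0]


def weighted_mean(counts, ratings=RATINGS):
    """Weighted mean with respondent counts as weights and ratings as values."""
    return sum(w * x for w, x in zip(counts, ratings)) / sum(counts)


means = {metric: round(weighted_mean(c), 2) for metric, c in TALLIES.items()}
overall = round(sum(means.values()) / len(means), 2)
percent = overall / 5 * 100

for metric, value in means.items():
    print(f"{metric}: {value:.2f}")
print(f"Overall: {overall:.2f} ({percent:.1f}%)")  # → Overall: 4.53 (90.6%)
```

The reported 90.6% corresponds to the rounded overall mean 4.53 divided by the maximum rating of 5. Averaging the unrounded per-metric means instead gives 4760/1050 ≈ 4.533, or about 90.7%.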

Figure 12: The working operating system of the bilateral communication device. (a) Write/Text Message Mode allows the normal user to type English words and send them to the Deaf-mute user. (b) Picture Message Mode converts the previously sent text message into its hand-sign image translation. (c) Picture Message Mode allows the Deaf-mute user to select sign language image sets and send them to the normal user. (d) Write/Text Message Mode converts the previously sent picture message into its English word translation.

8. Conclusion

Communication is a bilateral process, and being understood by the person you are talking to is a must. Without the ability to speak or hear, a person endures a serious handicap. Given that hearing and speech are missing, many have ventured to open new communication methods for them through sign language. This bilateral communication device can be used by both non-sign-language users and Deaf-mute together in a single system. Shaped as a box (8 in x 8 in) with a multi-touch capable display on each end, the device contains several microcontrollers and touch boards. Each touch board has twelve interactive capacitive touch and proximity electrode pads that react when tapped, producing quick-response phrases audible via speaker or earphone. These touch boards are equipped with an MP3 decoder, a MIDI synthesizer, a 3.5 mm audio jack, and a 128 MB microSD card. The touch screen modules mounted on top of the microcontrollers transfer data to and from each other in real time via full-duplex receiver-transmitter (RX-TX) UART serial communication. The device is lightweight, weighing about 3 lb. The prototype was piloted in an academic institution of special education for deaf-mute students, with 75 normal and 75 Deaf-mute participants aged between 18 and 30 years. The experimental results show an overall rating of 90.6% for the device. The device is designed to promote face-to-face socialization between Deaf-mute users and normal users in both directions. Several third-party applications were used to validate the accuracy and reliability of the device through metrics of consistency, timing and delay, data transmission, touch response, and screen refresh rates.

Conflict of Interest

The author declares no conflict of interest.

Acknowledgment

The proponent would like to acknowledge Samar State University, particularly the College of Engineering and the Center for Engineering, Science and Technology Innovation, for the continuous support, resources, and unwavering encouragement in achieving the milestones and deliverables set for this research undertaking.
