Racial Categorization Methods: A Survey

Volume 5, Issue 3, Page No 388-401, 2020

Author’s Name: Krina B. Gabani a), Mayuri A. Mehta, Stephanie Noronha


Department of Computer Engineering, Sarvajanik College of Engineering and Technology, Surat, 395001, Gujarat, India

a)Author to whom correspondence should be addressed. E-mail: krinagabani007@gmail.com

Adv. Sci. Technol. Eng. Syst. J. 5(3), 388-401 (2020); DOI: 10.25046/aj050350


Keywords: Ethnicity, Ethnic group, Race, Racial classification, Anthropometry, Facial features, Race identification, Face recognition, Machine learning


The face provides a direct and quick way to evaluate human soft biometric information such as race, age and gender. A race is a group of human beings who differ from human beings of other races with respect to physical or social attributes. Race identification plays a significant role in applications such as criminal judgment and forensic art, human computer interface, and psychology based applications, as it provides crucial information about the person. However, categorizing a person into the respective race category is a challenging task because human faces comprise complex and uncertain facial features. Several racial categorization methods are available in the literature to identify race groups of humans. In this paper, we present a comprehensive and comparative review of these racial categorization methods. Our review covers a survey of the important concepts, a comparative analysis of single model as well as multi model racial categorization methods, applications, and challenges in racial categorization. Our review provides state-of-the-art technical information concerning racial categorization and hence will be useful to the research community for the development of efficient and robust racial categorization methods.

Received: 30 January 2020, Accepted: 25 May 2020, Published Online: 11 June 2020

1. Introduction

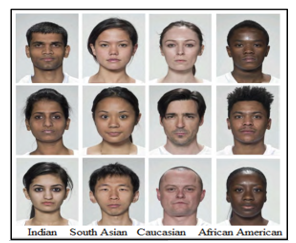

The human face expresses social information that is highly useful in automated systems. It provides soft biometric information about a person such as race, gender, age, identity and emotions [1-7]. This information is significant in interdisciplinary research areas such as psychology, computer vision, neuroscience and anthropology, as well as in social security and forensic art departments. Amongst the various types of biometric information, race information is crucial and is required for a wide range of applications. A race is a group of human beings differentiated based on physical or social attributes. Race conveys social and cultural traits of different communities. Facial features such as the eyes, eyebrows, ears, nose, cheeks, mouth, chin, forehead area and jaw differ from human to human and are highly dependent on racial category [8-14]. Figure 1 shows the difference in facial features of different racial groups.

Race analysis is essential in contemporary applications such as criminal judgment and forensic art [15-20], aesthetic surgery [21], healthcare [22-26], medico legal [27-29], video security surveillance and public safety [30], human computer interface [31-33] and face recognition [34]. In such applications, race analysis is required for identification of individuals. Several racial categorization methods have been proposed in the literature. They are either single model racial categorization methods or multi model racial categorization methods. A single model racial categorization method uses facial features to recognize race [35-45]. Conversely, a multi model racial categorization method considers fusion of physical characteristics such as gait pattern and audio cues in addition to facial features [5, 7, 46-50]. The majority of practical applications involve single model racial categorization because facial data is available in large quantities compared to gait pattern and audio cues. However, there are applications that consider gait pattern and audio cues in the absence of a facial image. The single model racial categorization methods mainly differ from each other with respect to the classification approach, such as Support Vector Machine (SVM) [51-54], Convolutional Neural Network (CNN) [38, 44, 55-59], Artificial Neural Network (ANN) [60], Local Binary Pattern (LBP) [61] and Local Circular Pattern (LCP) [62]. Participant based racial categorization methods such as the diffusion model [63] and implicit racial attitude [64] are also available in the literature. The multi model racial categorization methods involve classification approaches such as SVM [65-67], logistic regression [66], Adaboost [66], random forest [66], CNN [68] and Haar-LBP histogram [69].

Figure 1: Illustrative facial characteristic variance of human races. (Figure source: http://www.faceresearch.org/)

In this paper, we present a thorough and extensive study of various racial categorization methods. First, we present a taxonomy of available racial categorization methods. Then we describe several single model and multi model racial categorization methods. Based on our study, we identify several parameters to evaluate them. Subsequently, we present parametric evaluation of single model racial categorization methods and multi model racial categorization methods separately based on identified parameters. Next, we illustrate applications of racial categorization and future research direction in the field of racial categorization. Our comprehensive and comparative survey will serve as a catalogue to researchers in this area.

The rest of the paper is structured as follows: In section 2, we describe the taxonomy of racial categorization methods and the features considered by different racial categorization methods. In section 3, we illustrate various single model racial categorization methods and their parametric evaluation. In section 4, we illustrate various multi model racial categorization methods and their parametric evaluation. Section 5 describes the major applications of racial categorization. In section 6, we list key challenges in the field of racial categorization. Finally, section 7 presents the conclusion and future scope in the field of racial categorization.

2. Classification of Racial Categorization Methods

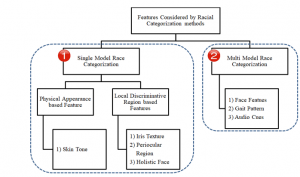

Racial categorization methods are broadly divided into two categories: single model and multi model. As shown in Figure 2, single model methods classify humans using holistic face features, local discriminative region based features, or a combination of both. Amongst the discriminative region based features, iris texture, periocular region and/or holistic face are used for racial categorization [70-77]. Multi model racial categorization takes into consideration face features, gait pattern and audio cues to classify humans into various race categories. Gait pattern is also useful to recognize the biometric information of humans [6, 78-80].

At this juncture, we clarify that, at the top level, humans are categorized by race and by ethnicity, on the basis of physical appearance and social appearance respectively. However, some researchers use the words race and ethnicity interchangeably [24, 35, 81].

Figure 2: Features considered for racial categorization

2.1. Features Considered by Single Model Racial Categorization

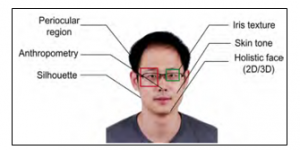

Figure 3 shows the facial features such as periocular region, anthropometry distance, silhouette, iris texture, skin tone and holistic face.

Figure 3: Facial features considered by single model racial categorization [30]

Skin tone differs mainly due to geographical location of humans. African-American, South-Asian, East-Asian, Caucasian, Indian and Arabian have different skin tones. Skin tone plays a minor role in identification of racial groups because skin color may also differ due to varying lighting conditions during the image capturing process [56, 64].

Like fingerprints, iris texture is a significant biometric characteristic of humans because it is unique for every human being [82]. It is highly useful for racial categorization because different race groups such as American, Indian and so on have different iris texture [59, 74-76, 82-84]. The key limitation of this feature is that it cannot be considered if race is to be identified from video because video may be of low quality and hence, may not give precise iris texture information [85-86].

The periocular region is defined as the region surrounding the eye. It covers the eyebrows, eyelids, eyelashes and canthus [52]. It gives richer texture and biometric information than iris texture [30]. Some facial features are influenced by different facial poses and expressions. However, the periocular region is not affected by facial poses and expressions. Hence, it is considered the most reliable feature for racial categorization [52, 62, 77].

Holistic face provides the texture information of various facial features such as eyes, nose, mouth, cheek, chin, skin color and jaw line [43, 51, 63, 87-89]. Extra frontal face features such as hairline and hair color in combination with cropped aligned face features ease racial categorization process [54].

2.2. Features Considered by Multi Model Racial Categorization

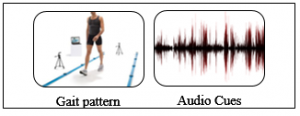

Multi model racial categorization improves accuracy of racial categorization via fusion of facial features with other human features such as gait pattern and audio cues [90] (Figure 4). Below we discuss the features considered by multi model racial categorization.

Gait pattern, also known as the walking pattern, is a prominent biometric feature that varies from human to human and is used for identification of a person [91-92]. Advanced racial categorization methods use gait pattern fused with facial features for overall effective racial categorization [92-97]. For videos in which humans at near to moderate distance have been captured, facial features are sufficient to identify the race. However, for videos in which humans at far distance have been captured, gait pattern is highly useful to identify the race of human because facial features of humans at far distance are not clearly visible. Thus, fusion of gait pattern with facial features improves overall racial categorization accuracy [65].

Figure 4: Features considered by multi model racial categorization

Audio pattern differs from race to race [98]. It is useful to identify race in case a video sequence or image of a person is not available. For instance, it is useful to identify race from phone calls.

3. Single Model Racial Categorization Methods

Race depends on physical and social characteristics of humans. As geographic distance increases, variation in facial features between races becomes visible. As facial data is easily available compared to gait pattern, the majority of racial categorization applications use a single model racial categorization method. Moreover, it has been reported in the literature that facial features are more prominent for race categorization [99-100]. Below we discuss various single model racial categorization methods available in the literature.

3.1. Multi Ethnical Categorization using Manifold Learning

In [51], authors have proposed a method for intra-racial categorization based on facial landmarking. This method classifies eight intra-races residing in China based on facial landmarking concept. It includes Active Shape Model (ASM) to locate 77 facial landmarks. The landmarks are used to calculate three types of geometric facial features: distance, angle, and ratio. These features are provided to different classifiers such as Bayesian Net, Naive Bayesian, SMO, J4.8, RBF Network and LibSVM to identify the race category. The dimensionality reduction process carried out by manifold learning approach is useful to reduce the complexity. Though this method is efficient, it is not useful to identify race from a person’s profile face images.
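The three kinds of geometric features mentioned above (distance, angle and ratio) can be illustrated with a minimal sketch. The landmark names and coordinates below are hypothetical and are not taken from [51]; the sketch only shows how such measurements might be derived from 2D landmark positions.

```python
import math

def distance(p, q):
    """Euclidean distance between two 2D landmarks."""
    return math.hypot(q[0] - p[0], q[1] - p[1])

def angle_at(vertex, a, b):
    """Angle in degrees at `vertex` between the rays towards `a` and `b`."""
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

def ratio(p, q, r, s):
    """Ratio of the distance |pq| to the distance |rs| (scale invariant)."""
    return distance(p, q) / distance(r, s)

# Hypothetical landmark coordinates in pixels, for illustration only.
landmarks = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "nose_tip": (50.0, 70.0),
    "chin": (50.0, 110.0),
}

features = [
    distance(landmarks["left_eye"], landmarks["right_eye"]),
    angle_at(landmarks["nose_tip"], landmarks["left_eye"], landmarks["right_eye"]),
    ratio(landmarks["left_eye"], landmarks["right_eye"],
          landmarks["nose_tip"], landmarks["chin"]),
]
```

Ratios and angles are unaffected by uniform image scaling, which is one plausible reason to use them alongside raw distances.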

3.2. GWT and Retina Sampling based Ethnicity Categorization

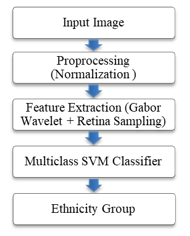

Multiclass SVM based ethnicity categorization method is proposed in [52]. Figure 5 shows the key steps involved in this method.

As shown in Figure 5, the image is first normalized by applying a rotation operation and changing its resolution. The resolution is changed so that the distance between the two inner corners of the eyes is 28 pixels. Subsequently, eye and mouth features are extracted by fusing the Gabor Wavelet Transform (GWT) and retina sampling for efficient categorization. GWT is used to extract the orientation and frequency of facial features accurately. The retina sampling method is used to set facial feature points. The features are fed to a multiclass SVM classifier for ethnicity identification. Typically, the eye is considered the most prominent feature for racial categorization due to its pose invariant characteristic. On the other hand, the mouth region introduces uncertainty due to its pose variant characteristic. The disadvantage of this method is that GWT provides erroneous features when there are hollows around the eyes. Moreover, Gabor features reflect error due to variation in frequency of eyelashes.
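The normalization step can be sketched as follows. Only the 28 pixel target comes from the text; the function name, the input coordinates and the choice to level the eye line by rotation are assumptions made for illustration, not the procedure of [52].

```python
import math

TARGET_INNER_EYE_DISTANCE = 28.0  # pixels, as stated in the text

def alignment_parameters(left_inner, right_inner,
                         target=TARGET_INNER_EYE_DISTANCE):
    """Return (rotation_degrees, scale) that level the eye line and
    bring the inner eye corner distance to `target` pixels."""
    dx = right_inner[0] - left_inner[0]
    dy = right_inner[1] - left_inner[1]
    current = math.hypot(dx, dy)
    if current == 0:
        raise ValueError("eye corners coincide")
    # Tilt of the eye line; rotating by -tilt makes it horizontal.
    tilt = math.degrees(math.atan2(dy, dx))
    return -tilt, target / current

# Hypothetical inner eye corner positions, 56 pixels apart and level.
rotation, scale = alignment_parameters((100.0, 120.0), (156.0, 120.0))
```

For these inputs the eye line is already horizontal, so no rotation is needed and the image would be resized by a factor of 0.5.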

Figure 5: Steps for ethnicity categorization using GWT and retina sampling

3.3. Real Time Racial Categorization

A new method for racial categorization by fusion of Principal Component Analysis (PCA) and Independent Component Analysis (ICA) is introduced in [53]. It consists of two major steps: feature extraction and classification. During the feature extraction step, facial features are extracted using PCA. Subsequently, ICA is used to map the facial features generated by PCA into new facial features, which are more suitable for efficient racial categorization. During the classification step, an SVM classifier is applied in conjunction with the ‘321’ algorithm to classify races. The ‘321’ algorithm is inspired by the bootstrap approach for real time racial categorization from video streams. The categorization accuracy of this method can be further enhanced by including a pre-processing step to reduce image noise and align the face.

3.4. Binary Tree based SVM for Ethnicity Detection

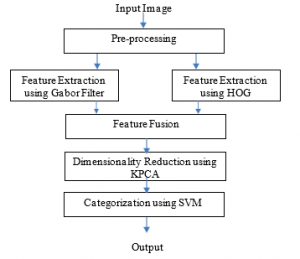

In this method, fusion of texture and shape facial features is considered for better ethnicity categorization [54]. Figure 6 shows the functioning of this method. The first step, pre-processing, involves operations such as image resizing, image enhancement and image conversion. Then texture features are extracted using a Gabor filter and shape features are extracted using Histogram of Oriented Gradients (HOG). Subsequently, texture features and shape features are fused together. The fused feature vector is large and requires more computational time. Hence, the Kernel Principal Component Analysis (KPCA) algorithm is applied to reduce dimensionality and complexity. The fused facial features are given as input to a binary tree based SVM for ethnicity detection.
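A common way to fuse two feature vectors at the feature level is to normalize each one and concatenate them. The sketch below assumes this concatenation scheme; the source does not state how [54] performs the fusion, and the short vectors are stand-ins for real Gabor and HOG outputs.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors are copied)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def fuse(*feature_vectors):
    """Feature-level fusion: normalize each vector, then concatenate."""
    fused = []
    for vec in feature_vectors:
        fused.extend(l2_normalize(vec))
    return fused

texture = [3.0, 4.0]       # stand-in for Gabor filter responses
shape = [1.0, 0.0, 0.0]    # stand-in for HOG bins
fused = fuse(texture, shape)
```

The fused length is the sum of the input lengths, which is why a dimensionality reduction step such as KPCA becomes attractive when the real descriptors have thousands of entries.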

Figure 6: Steps for ethnicity detection using binary tree based SVM [54]

3.5. Racial Categorization using CNN

In [55], a hybrid supervised deep learning based racial categorization method has been proposed. It uses the VGG-16 convolutional neural network for facial feature extraction and categorization. A 224 × 224 face image is given as input to the VGG-16 network for race prediction. Training a CNN from scratch typically requires millions of images, which is a critical constraint in the medical domain. Hence, to overcome the issue of a small dataset, the authors have used a hybrid approach that fuses VGG-16 with an image ranking engine to improve race prediction. It has been shown that image ranking engines work efficiently with CNN based classifiers even for small datasets. The fused feature information extracted by the CNN and the image ranking engine is used by an SVM to learn racial class labels. This hybrid method provides better categorization accuracy.

Figure 7: Steps involved in racial categorization using ANN

3.6. Neural Network based Racial Categorization

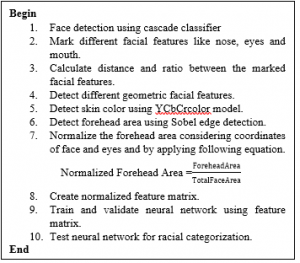

In [56], skin color, forehead area, sobel edge and geometric features are fused for efficient race estimation. Authors have proposed two methods: 1) using Artificial Neural Network (ANN) and 2) using convolution neural network. The steps involved in racial categorization using ANN are shown in Figure 7.

CNN based racial categorization method uses pre-trained VGGNet for racial categorization. It has been observed by authors that CNN based method gives more accurate racial prediction for the given image as compared to ANN based method.

3.7. Local Circular Pattern for Race Identification

The local circular pattern (LCP) method for race identification [61] works on texture and shape features extracted from the 2D face and 3D face respectively. LCP is an advanced version of the local binary pattern (LBP) used for feature extraction. It improves on the widely used LBP and its variants by replacing binary quantization with a clustering approach. As compared to LBP, LCP provides higher accuracy even for noisy data. Moreover, the AdaBoost algorithm is used to select better features and thereby improve the categorization accuracy. Experimental results have revealed that this method is time efficient and memory efficient.
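For context, the binary quantization that LCP replaces can be seen in the basic 8-neighbour LBP operator. The sketch below implements plain LBP only, not LCP; the neighbour ordering and the small test patch are arbitrary choices made for illustration.

```python
def lbp_code(image, r, c):
    """8-neighbour LBP code for the pixel at (r, c) of a 2D list."""
    center = image[r][c]
    # Neighbours visited clockwise, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        # Binary quantization: 1 if the neighbour is >= the center.
        if image[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code

def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist

patch = [
    [5, 9, 1],
    [4, 6, 7],
    [2, 3, 8],
]
code = lbp_code(patch, 1, 1)
hist = lbp_histogram(patch)
```

Each neighbour contributes a single bit, so small intensity changes near the center value can flip the code; this sensitivity is the kind of weakness that a clustering based quantization aims to reduce.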

3.8. Biometric based Machine Learning Method

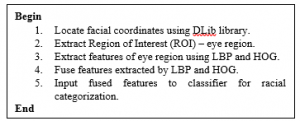

In [62], authors have proposed a method that focuses on eye region features for racial categorization. The method comprises five major steps, shown in Figure 8. First, facial coordinates are located using the DLib library. Subsequently, the region of interest (eye region) is extracted. Then features extracted by LBP and HOG are integrated for efficient racial categorization. LBP and HOG are both individually useful to extract features for categorization. However, fusion of LBP features with HOG features gives higher accuracy compared to other feature fusion approaches. The performance is tested using different classifiers such as SVM, Multi-Layer Perceptron (MLP) and Quadratic Discriminant Analysis (QDA).

Figure 8: Steps of biometric based machine learning method for racial categorization

3.9. Diffusion Model and Implicit Racial Attitude for Racial Group Identification

In [63-64, 101], a manual racial categorization method is proposed. Race is identified by performing several tasks with participants. A diffusion model is used to identify response time boundaries of different participants. This method takes into consideration how participants perceive their own race and other races. Skin color is a less effective feature for automated racial categorization methods due to lighting conditions. However, it is a prominent feature for manual race prediction.

3.10. Performance Evaluation of Single Model Racial Categorization Methods

The race categorization methods discussed above are automated, except the last one, which is a manual race categorization method. With advancing technology, manual race categorization is less effective and less useful than automated racial categorization. Amongst the various automated racial categorization methods, CNN based methods produce more accurate results [55-56] as they consider deep facial features for racial categorization. We observed that intra-race categorization has received little attention from researchers.

Based on our study of the aforementioned single model racial categorization methods, we have identified the following parameters to compare them: dataset used, racial/ethnic class considered, region of interest, feature extraction operator/s and classifiers used. Dataset refers to the source of data. It is either available online in the form of a standard dataset or it is self-generated. HOIP Database, FERET (Facial Recognition Technology), FRGC v2.0 and BU-3DFE are examples of standard datasets. A self-generated dataset is created by researchers if their predefined requirements are not satisfied by a standard dataset. Racial/ethnic class represents the racial group targeted for study, such as Indian, Chinese, Asian, European, African, African American, Caucasian, Bangladeshi, Mongolian, Negro, Hispanic and Pacific Islander. Region of interest specifies the area of the face considered for racial categorization. Different face areas include the eyes, eyebrows, ears, nose, cheeks, mouth, chin, forehead area and jaw line. Some methods also consider skin color for race prediction. Feature extraction operator/s extract facial features from the image. GWT, ICA, PCA, HOG, LBP and LCP are the feature extraction operators used by different methods. Classifier specifies the classification algorithm used by the racial categorization method. Different classifiers such as SVM, CNN, ANN, kernel PCA, MLP, LDA, QDA and Kernel based Neural Network (KNN) have been used in different racial categorization methods. Table 1 presents the assessment of the aforementioned single model racial categorization methods based on these identified parameters.

4. Multi Model Racial Categorization Methods

Multi model racial categorization is highly useful when we do not have human’s facial image information. It has been shown in literature that gait pattern and audio cues are amongst the prominent features for biometric information identification. Hence, multi model racial categorization methods use gait pattern, audio cues or fusion of facial features with gait pattern/audio cures to identify race. However, a smaller number of multi model racial categorization methods are available in literature because race data that includes gait pattern or audio cues is not available easily.

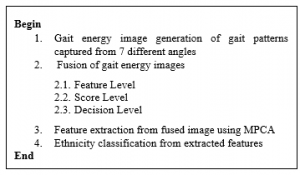

4.1. Multi-view Fused Gait based Ethnicity Classification

In [102], authors have proposed a method that identifies ethnicity from seven gait patterns captured from seven different

Table 1: Parametric Evaluation of Single Model Racial Categorization Methods

| Method | Dataset used | Racial/ethnic class considered | Region of interest | Feature extraction Operator/s | Classifier/s used |

| Multi Ethnical Categorization using Manifold Learning [51] | Self-Generated | Chinese (8-Subgroups) | Face | – | Bayesian Net, Naive Bayesian, J4.8, RBF (Radial Basis Function) Network, LibSVM |

| GWT and Ratina Sampling based Ethnicity Categorization [52] | HOIP Database + Self- Generated | Asian, European, African | Eyes, Mouth | GWT, Ratina Sampling | SVM |

| Real Time Race Categorization [53] | FERET | Asian, Non-Asian | Face | ICA, PCA | SVM |

| Binary Tree based SVM for Ethnicity Detection [54] | FERET | Caucasian, African, Asian | Face | Gabor Filter, HOG | SVM, Kernel PCA |

| Racial Categorization using CNN [55] | Self- Generated | Bangladeshi, Chinese, Indian | Face | – | SVM |

| Neural Network based Racial Categorization [56] | FERET | Mongolian, Caucasian, Negro | Skin Colour, Forehead Area | – | ANN, CNN |

| Local Circular Pattern for Race Identification [61] | FRGC v2.0 and BU-3DFE | Whites and East-Asians | Face | LCP | Adaboost |

| Biometric based Machine Learning Method [62] | FERET | Asian, White, Black or African American, Hispanic, Pacific Islander, Native American | Eyes, Eyebrows, Periocular Region | LBP, HOG | SVM, MLP, LDA, QDA |

| Diffusion Model (Binary Classifier) [63] | Race Morph Sequence | Asian, Caucasian | Face | Manually | Manually |

| Implicit Racial Attitude [64] | Facial stimuli used in current research | African American, Caucasian | Skin Colour, Facial Physiognomy | Manually | Manually |

angles. Figure 9 shows the key steps involved in the ethnicity identification process. First, all seven gait patterns are converted into corresponding Gait Energy Image (GEI). Next, seven GEIs are fused using three different fusion methods: score fusion, feature fusion and decision fusion. The goal of using three fusion methods is to accurately identify the ethnicity (race) of the person. Subsequently, features are extracted from the fused image using Multi-linear Principal Component Analysis (MPCA) and fed to classifier for ethnicity classification.
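A Gait Energy Image is commonly computed as the pixel-wise average of aligned binary silhouettes over a gait cycle. The sketch below follows that common definition; the tiny 2 × 2 frames are hypothetical, and silhouette extraction and alignment are assumed to have been done already.

```python
def gait_energy_image(silhouettes):
    """Pixel-wise mean of aligned binary silhouettes (2D lists of 0/1)."""
    if not silhouettes:
        raise ValueError("need at least one silhouette frame")
    rows, cols = len(silhouettes[0]), len(silhouettes[0][0])
    totals = [[0] * cols for _ in range(rows)]
    for frame in silhouettes:
        for r in range(rows):
            for c in range(cols):
                totals[r][c] += frame[r][c]
    n = len(silhouettes)
    # Values near 1 mark mostly static body parts, lower values mark motion.
    return [[totals[r][c] / n for c in range(cols)] for r in range(rows)]

# Two hypothetical 2x2 silhouette frames from one gait cycle.
frames = [
    [[0, 1], [1, 1]],
    [[0, 1], [0, 1]],
]
gei = gait_energy_image(frames)
```

In a multi-view setting, one such image would be produced per camera angle before any fusion step is applied.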

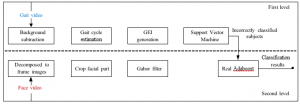

4.2. Hierarchical Fusion for Ethnicity Identification

In this method [65], gait pattern and facial features are fused for better ethnicity categorization. Figure 10 shows the two level processing involved in this method. The first level involves gait pattern evolution. It takes gait video as input. It includes intermediate steps such as gait cycle estimation and GEI generation. The first level also includes an SVM for classification. The second level takes face video as input. It comprises three major steps: frame extraction from video, face detection and feature extraction using a Gabor filter. Features extracted in the first and second levels are fused together to get accurate classification. The fused features are given to an SVM and then to Adaboost to identify ethnicity.

Figure 9: Steps involved in multi-view fused gait based ethnicity classification

Figure 10: Steps of ethnicity categorization using hierarchical fusion system [65]

Figure 11: Block diagram of ethnicity classification system [67]

4.3. Dialogue based Biometric Information Classification

In [66], a method for biometric information classification and deception detection has been proposed. It identifies gender, personality and ethnicity from the audio (dialogue). Lexical and acoustic-prosodic features are extracted from the dialogue. Lexical features are extracted using Linguistic Inquiry and Word Count (LIWC). Acoustic-prosodic features are extracted using Praat. Both types of features are given to different machine learning classifiers such as SVM, logistic regression, Adaboost and random forest for classification.

4.4. Cross-Modal Biometric Matching

A new method for cross-modal biometric matching by fusion of voice and facial image is introduced in [68]. The cross-modal approach is used for inferring two types of information: 1) voice from human face and 2) human face from voice. This method involves two key steps: feature extraction and classification. The features are extracted from the image as well as the voice. The extracted features are fused and given as input to a CNN for biometric matching and classification.

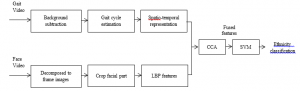

4.5. Gait and Face Fusion for Ethnicity Classification

An ethnicity classification system is proposed in [67]. It considers fusion of facial features and gait pattern for better classification. As shown in Figure 11, the inputs to this system are gait video and facial video. Both videos are processed in parallel. During gait video processing, the background is first subtracted from the video and subsequently each gait cycle pattern is estimated. Next, all gait cycle patterns are represented using a spatio-temporal representation for gait pattern characterization. During face video processing, frames are extracted from the face video and subsequently the facial part is cropped from each face image. Facial features are extracted from each frame using LBP. Features extracted from the gait and face videos are fused together using Canonical Correlation Analysis (CCA). The fused features are given as input to an SVM for ethnicity identification.

4.6. Performance Evaluation of Multi Model Racial Categorization Methods

As illustrated in Table 2, we identified the same set of parameters for comparison of multi model racial categorization methods as we identified for single model racial categorization methods. As defined and discussed previously in section 3, they are dataset used, biometric information considered for classification, region of interest, feature extraction operator/s and classifiers used in the method.

Table 2: Parametric Evaluation of Multi Model based Racial Categorization Methods

| Method | Dataset used | Biometric information considered | Region of interest | Feature extraction operator/s | Classifier/s used |
| --- | --- | --- | --- | --- | --- |
| Multi-view Fused Gait based Ethnicity Classification [102] | Self-Generated | Ethnicity (East-Asian and South-American) | Gait Pattern | Multi-linear Principal Component Analysis | – |
| Hierarchical Fusion for Ethnicity Identification [65] | Self-Generated | Ethnicity (East-Asian and South-American) | Gait Pattern and Face | Gabor Filter | SVM |
| Dialogue based Classification [66] | NEO-FFI | Gender, Ethnicity and Personality | Dialogue (Audio) | LIWC and Praat | SVM, Logistic Regression, AdaBoost and Random Forest |
| Cross-Modal Biometric Matching [68] | VGGFace (Face data) and VoxCeleb (Audio Data) | Gender, Age, Ethnicity and Identity | Audio and Face | – | CNN |
| Gait and Face Fusion for Ethnicity Classification [67] | Self-Generated | Ethnicity | Gait Pattern and Face | LBP | SVM |

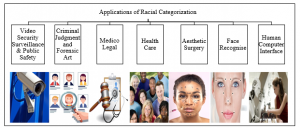

Figure 12: Application areas of racial categorization

5. Application of Racial Categorization

Racial categorization has a high impact on our social life. Race describes common physical characteristics shared by a group of humans. Physical characteristics of humans of different races differ from each other. Racial categorization is significant for several applications. As shown in Figure 12, the major application areas are video security surveillance and public safety, criminal judgment and forensic art, medico legal, healthcare, aesthetic surgery, face recognition, and human computer interface.

5.1. Video Security Surveillance and Public Safety

Race identification from the subject’s face plays a crucial role in video security surveillance. A video security surveillance system assists in identifying criminals by comparing the detected subject’s image with the existing criminal database. An automated race identification system fused with a video security surveillance system provides quick information about the subject [53]. Such a fused system is already in use at several airports and public places. Moreover, it has proven useful in settings such as maritime, aviation, mass transportation, government office buildings, recreational centers, stadiums and large retail malls.

5.2. Criminal Judgment and Forensic Art

Crime related investigation requires crucial information related to criminals, including cross-country evidence (if any) [103-108]. The race/ethnicity of criminals provides such crucial information. The face is typically considered for criminal investigation because it conveys important information. In particular, it conveys age, race and gender, which are needed for criminal investigation. This information makes it easier for the government to find the right criminal [104, 109-111]. Moreover, such information is useful to protect innocent people and to provide justice to minority community groups.

Normally the forensic department has the subject’s image captured using a public camera. However, it is difficult for the forensic department to manually extract the crucial information from the image. Conversely, racial categorization method can be used to identify the race from the image which assists in further investigation targeting a particular race community [106].

5.3. Medico Legal

Medico legal case is defined as a case of suffering or injury in which examination by the police is essential to determine the cause of suffering or injury. Suffering or injury may be due to several unnatural conditions such as accidents, burning and death. Race provides patient’s information that is useful to law enforcing agency for further investigation [112]. By evaluating the race information of the medico legal case, law enforcing agency can obtain history of medico legal cases in that particular racial group [113-115]. Such information eases the investigation process and assists medico legal department in decision making.

5.4. Healthcare

Diseases and healthcare issues differ across geographical areas due to weather conditions, lifestyle and food. Healthcare treatment differs for different racial groups [116]. Thus, racial categorization is useful to solve healthcare issues and to provide quick treatment [117-121]. Moreover, ethnic information is useful to provide the appropriate services and special benefits that the government defines for minority and economically disadvantaged ethnic groups [122].

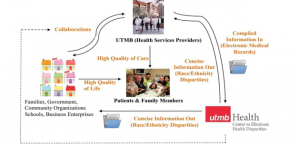

The Center to Eliminate Health Disparities (CEHD) of the University of Texas Medical Branch (UTMB) has implemented an information system for the health of the people served by UTMB in order to reduce disparities in health. The information system is also known as REAL (race, ethnicity and language) [122]. Figure 13 illustrates the role of CEHD in the health system of UTMB and Galveston County as a whole. UTMB is a university health center that welcomes patients from diverse backgrounds. It provides services to different racial groups whose income level is below the poverty line. The main objectives of the REAL project are (1) to improve UTMB’s health information system for better diagnostics and quality measures stratified by race, ethnicity, language and status, and (2) to develop and disseminate contingency plans to address disparities through effective partnerships with relevant stakeholders.

5.5. Aesthetic Surgery

Aesthetic surgery is facial plastic surgery performed either to beautify the face or to create an attractive face [123]. An anthropometric measurement is the distance between two facial points. It has been shown in literature that anthropometric measurements such as ratios, geometric distances and Euclidean distances differ across racial groups [124-126]. Geometric and Euclidean distances are the distances between primary or secondary facial landmarks on the frontal or profile face. Depending on the race of the patient, anthropometric measurements are derived and used in aesthetic surgery [127-129]. For example, aesthetic surgery for Chinese people and for Indian people differs because the two groups have different facial features and thereby different anthropometric measurements.
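
To make the notion of an anthropometric measurement concrete, the following minimal Python sketch computes a Euclidean distance between two facial landmarks and a ratio of two such distances. The landmark names, the pixel coordinates and the helper functions `euclidean_distance` and `measurement_ratio` are illustrative assumptions; they are not taken from any of the surveyed methods.

```python
import math

# Hypothetical 2D landmark coordinates (in pixels) on a frontal face image.
# Names and values are illustrative assumptions, not data from the survey.
landmarks = {
    "left_eye_outer": (120.0, 150.0),
    "right_eye_outer": (220.0, 150.0),
    "nasion": (170.0, 150.0),
    "chin": (170.0, 300.0),
}


def euclidean_distance(p, q):
    """Euclidean distance between two 2D points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def measurement_ratio(a, b, c, d, points):
    """Ratio of the distance between landmarks a, b to that between c, d."""
    denominator = euclidean_distance(points[c], points[d])
    if denominator == 0:
        raise ValueError("reference distance must be non-zero")
    return euclidean_distance(points[a], points[b]) / denominator


# Distance between the outer eye corners.
eye_width = euclidean_distance(
    landmarks["left_eye_outer"], landmarks["right_eye_outer"]
)
# Distance from nasion to chin.
face_height = euclidean_distance(landmarks["nasion"], landmarks["chin"])
# A scale-independent ratio of the two distances.
ratio = measurement_ratio(
    "left_eye_outer", "right_eye_outer", "nasion", "chin", landmarks
)
print(eye_width, face_height, round(ratio, 3))
```

Ratios are often preferred over raw distances in such sketches because they do not change when the image is uniformly rescaled.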

5.6. Face Recognition

Racial differences among humans are useful for biometric illustration and human identification [130]. Race cues and race-wise anthropometric measurements make it easier for face recognition systems to recognize a person [131-134]. Moreover, integrating race information into a face recognition system makes it more intelligent, faster and more accurate [135-136].

Figure 13: Role of the health center system to eliminate health disparities for different ethnicity [122]

5.7. Human Computer Interface

Nowadays, several systems are automated using robots [137-140]. Consumers of such robotic systems need to interact with the robots frequently. In human-robot communication, racial cues play an important role [141-145]. Specifically, by recognizing the race of a human from the face, behavior and expression, robots can deliver relevant services to humans. Such robotic systems are useful in an open service environment where robots work as humans do [146]. In particular, they are useful in hospitals, malls, stadiums, hotels, gaming zones and intelligent HCI organizations for easy communication with humans.

6. Challenges in Racial Categorization

Several challenges must be addressed to achieve accurate racial categorization. Below we describe the major ones. These challenges create opportunities for further research in this field: by overcoming them, an efficient racial categorization method with higher classification accuracy can be developed.

6.1. Intra-race Categorization

To the best of our knowledge, intra-race categorization has not received much attention in literature. 85% of the worldwide population is divided into 7 major racial groups, namely African-American, South-Asian, East-Asian, Caucasian, Indian, Arabian and Latino [30]. Intra-race categorization within these groups is challenging because humans belonging to a particular group are highly similar in facial features and physical appearance [147-148]. It is therefore difficult to infer distinguishing cues for intra-race categorization.

6.2. Anthropometry Measurements

Facial landmarking is used to obtain anthropometric measurements. It has been shown in literature that the accuracy of anthropometric measurements, and thereby the accuracy of a racial categorization method, varies with the number of facial landmarks [21, 51, 56, 62, 147-148]. Thus, existing landmarking methods can be improved by increasing the number of landmarks. Moreover, landmarks on the forehead area, hairline and earlobe can additionally be considered to increase accuracy further [56]. In addition, anatomists have revealed that ear pinna and Iannarelli’s measures differ across racial groups. Like a fingerprint, the ear pinna is unique to each individual.
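
One simple way to see why the number of landmarks matters is to count how many pairwise distances a landmark set can yield: n landmarks give n(n-1)/2 distinct pairs. The Python sketch below illustrates this growth. The landmark counts used in the loop and the helper names `num_pairwise` and `pairwise_distances` are assumptions chosen for illustration only; the surveyed methods may select measurements quite differently.

```python
import math
from itertools import combinations


def num_pairwise(n):
    """Number of distinct landmark pairs for n landmarks: n(n-1)/2."""
    return n * (n - 1) // 2


def pairwise_distances(points):
    """All pairwise Euclidean distances between the given landmark points."""
    return [math.dist(p, q) for p, q in combinations(points, 2)]


# Illustrative landmark counts (assumed values, not taken from the survey).
for n in (5, 17, 68):
    print(n, "landmarks ->", num_pairwise(n), "pairwise distances")

# Three hypothetical landmark points yield three distances.
example_points = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
print(pairwise_distances(example_points))
```

The count grows quadratically, so adding landmarks enlarges the pool of candidate measurements quickly; whether the extra measurements improve accuracy depends on the method and the data.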

6.3. Real-Time Data

Real-time video streams need to be processed at public places such as airports, hospitals, health care centers, malls and stadiums for public safety and security systems. However, existing racial categorization methods are neither applicable nor reliable for processing real-time video streams [53]. They are applicable only to off-line image datasets.

6.4. Manual Racial Categorization

The aforementioned issues relate to automated racial categorization methods. The issues faced by manual racial categorization methods are different. The major issue in manual racial categorization, which involves participants, is that the number of stimulus levels for race prediction is limited. Stimulus level is defined as the number of tasks performed by participants for race prediction [56, 61].

7. Conclusion

In this paper, we have presented an in-depth review of various single model and multi model racial categorization methods. Moreover, a parametric evaluation of racial categorization methods based on an identified set of parameters is presented. It has been observed that fusion of facial features and physical appearance provides accurate race categorization. It has also been observed that CNN based racial categorization models give substantially higher accuracy because they extract deep features. Our review will provide researchers with the state-of-the-art advancements in racial categorization methods. Furthermore, the applications and challenges discussed herein will help researchers to develop efficient and competent racial categorization methods.

Conflict of Interest

The authors declare no conflict of interest.

References

- S. Gutta, H. Wechsler and P. Phillips, “Gender and ethnic classification of face images”, Proceedings Third IEEE International Conference on Automatic Face and Gesture Recognition. https://doi.org/10.1109/afgr.1998.670948.

- B. Xia, B. Ben Amor and M. Daoudi, “Joint gender, ethnicity and age estimation from 3D faces”, Image and Vision Computing, vol. 64, pp. 90-102, 2017. https://doi.org/10.1016/j.imavis.2017.06.004.

- S. Azzakhnini, L. Ballihi and D. Aboutajdine, “Combining Facial Parts For Learning Gender, Ethnicity, and Emotional State Based on RGB-D Information”, ACM Transactions on Multimedia Computing, Communications, and Applications, vol. 14, no. 1, pp. 1-14, 2018. https://doi.org/10.1145/3152125.

- P. Mukudi and P. Hills, “The combined influence of the own-age, -gender, and -ethnicity biases on face recognition”, Acta Psychologica, vol. 194, pp. 1-6, 2019. https://doi.org/10.1016/j.actpsy.2019.01.009.

- Xiaoli Zhou and B. Bhanu, “Integrating Face and Gait for Human Recognition”, 2006 Conference on Computer Vision and Pattern Recognition Workshop (CVPRW’06). https://doi.org/10.1109/CVPRW.2006.103.

- Yoo, D. Hwang and M. Nixon, “Gender Classification in Human Gait Using Support Vector Machine”, Advanced Concepts for Intelligent Vision Systems, pp. 138-145, 2005. https://doi.org/10.1007/11558484_18.

- Ju Han and B. Bhanu, “Statistical feature fusion for gait-based human recognition”, Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2004. CVPR 2004. https://doi.org/10.1109/CVPR.2004.1315252.

- O. Çeliktutan, S. Ulukaya and B. Sankur, “A comparative study of face landmarking methods,” EURASIP Journal on Image and Video Processing, vol. 2013, no. 1, 2013. https://doi.org/10.1186/1687-5281-2013-13.

- Y. Wu and Q. Ji, “Facial Landmark Detection: A Literature Survey,” International Journal of Computer Vision, 2018. https://doi.org/10.1007/s11263-018-1097-z.

- H. Akakin and B. Sankur, “Analysis of Head and Facial Gestures Using Facial Landmark Trajectories,” Biometric ID Management and Multimodal Communication, pp. 105-113, 2009. https://doi.org/10.1007/978-3-642-04391-8_14.

- L. Farkas, M. Katic and C. Forrest, “International Anthropometric Study of Facial Morphology in Various Ethnic Groups/Races,” Journal of Craniofacial Surgery, vol. 16, no. 4, pp. 615-646, 2005. https://doi.org/10.1097/01.scs.0000171847.58031.9e.

- H. Dibeklioglu, A. Salah and T. Gevers, “A Statistical Method for 2-D Facial Landmarking,” IEEE Transactions on Image Processing, vol. 21, no. 2, pp. 844-858, 2012. https://doi.org/10.1109/TIP.2011.2163162.

- B. Martinez, M. Valstar, X. Binefa and M. Pantic, “Local Evidence Aggregation for Regression-Based Facial Point Detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 5, pp. 1149-1163, 2013. https://doi.org/10.1109/TPAMI.2012.205.

- M. Taner Eskil and K. Benli, “Facial expression recognition based on anatomy,” Computer Vision and Image Understanding, vol. 119, pp. 1-14, 2014. https://doi.org/10.1016/j.cviu.2013.11.002.

- E. Lloyd, K. Hugenberg, A. McConnell, J. Kunstman and J. Deska, “Black and White Lies: Race-Based Biases in Deception Judgments”, Psychological Science, vol. 28, no. 8, pp. 1125-1136, 2017. https://doi.org/10.1177/0956797617705399.

- Breetzke, “The concentration of urban crime in space by race: evidence from South Africa”, Urban Geography, vol. 39, no. 8, pp. 1195-1220, 2018. https://doi.org/10.1080/02723638.2018.1440127.

- Threadcraft-Walker and H. Henderson, “Reflections on race, personality, and crime”, Journal of Criminal Justice, vol. 59, pp. 38-41, 2018. https://doi.org/10.1016/j.jcrimjus.2018.05.005.

- Lehman, T. Hammond and G. Agyemang, “Accounting for crime in the US: Race, class and the spectacle of fear”, Critical Perspectives on Accounting, vol. 56, pp. 63-75, 2018. https://doi.org/10.1016/j.cpa.2018.01.002.

- Jefferson, “Predictable Policing: Predictive Crime Mapping and Geographies of Policing and Race”, Annals of the American Association of Geographers, vol. 108, no. 1, pp. 1-16, 2017. https://doi.org/10.1080/24694452.2017.1293500.

- Petsko and G. Bodenhausen, “Race–Crime Congruency Effects Revisited: Do We Take Defendants’ Sexual Orientation into Account,” Social Psychological and Personality Science, vol. 10, no. 1, pp. 73-81, 2017. https://doi.org/10.1177/1948550617736111.

- Wai et al., “Nasofacial Anthropometric Study among University Students of Three Races in Malaysia”, 2019. https://doi.org/10.1155/2015/780756.

- Rata and C. Zubaran, “Ethnic Classification in the New Zealand Health Care System”, Journal of Medicine and Philosophy, vol. 41, no. 2, pp. 192-209, 2016. https://doi.org/10.1093/jmp/jhv065.

- Reid, D. Cormack and M. Crowe, “The significance of socially-assigned ethnicity for self-identified Māori accessing and engaging with primary healthcare in New Zealand”, Health: An Interdisciplinary Journal for the Social Study of Health, Illness and Medicine, vol. 20, no. 2, pp. 143-160, 2015. https://doi.org/10.1177/1363459315568918.

- Mehta et al., “Race/Ethnicity and Adoption of a Population Health Management Approach to Colorectal Cancer Screening in a Community-Based Healthcare System”, Journal of General Internal Medicine, vol. 31, no. 11, pp. 1323-1330, 2016. https://doi.org/10.1007/s11606-016-3792-1.

- L. Culley, “Transcending transculturalism? Race, ethnicity and health-care”, Nursing Inquiry, vol. 13, no. 2, pp. 144-153, 2006. https://doi.org/10.1111/j.1440-1800.2006.00311.x.

- Gibbons, “Use of Health Information Technology among Racial and Ethnic Underserved Communities,” Perspect Health Inf Manag, vol. 8, 2011.

- Memarian, S. Mostafavi, E. Zarei, S. Esfahani and B. Ghorbanzadeh, “The Credibility of Cephalogram Parameters in Gender Identification From Medico-Legal Relevance Among the Iranian Population,” International Journal of Medical Toxicology and Forensic Medicine, vol. 9, 2019.

- Ibrahim, A. Khalifa, H. Hassan, H. Tamam and A. Hagras, “Estimation of stature from hand dimensions in North Saudi population, medicolegal view,” The Saudi Journal of Forensic Medicine and Sciences, vol. 1, pp. 19-27, 2018. https://doi.org/10.4103/sjfms.sjfms_10_17.

- Bukovitz, J. Meiman, H. Anderson and E. Brooks, “Silicosis: Diagnosis and Medicolegal Implications”, Journal of Forensic Sciences, 2019. https://doi.org/10.1111/1556-4029.14048.

- Fu, H. He and Z. Hou, “Learning Race from Face: A Survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 36, no. 12, pp. 2483-2509, 2014. https://doi.org/10.1109/TPAMI.2014.2321570.

- Carroll, “Evaluation, Description and Invention: Paradigms for Human-Computer Interaction”, Advances in Computers, pp. 47-77, 1989. https://doi.org/10.1016/S0065-2458(08)60532-X.

- Mackal, “The effects of ethnicity and message content on affective outcomes in a computer-based learning environment,” 2009.

- Evers, “Human – Computer Interfaces: Designing for Culture,” 1997.

- Marsh, K. Pezdek and D. Ozery, “The cross-race effect in face recognition memory by bicultural individuals”, Acta Psychologica, vol. 169, pp. 38-44, 2016. https://doi.org/10.1016/j.actpsy.2016.05.003.

- Farkas, M. Katic and C. Forrest, “International Anthropometric Study of Facial Morphology in Various Ethnic Groups/Races”, Journal of Craniofacial Surgery, vol. 16, no. 4, pp. 615-646, 2005. https://doi.org/10.1097/01.scs.0000171847.58031.9e.

- Ozdemir, D. Sigirli, I. Ercan and N. Cankur, “Photographic Facial Soft Tissue Analysis of Healthy Turkish Young Adults: Anthropometric Measurements”, Aesthetic Plastic Surgery, vol. 33, no. 2, pp. 175-184, 2008. https://doi.org/10.1007/s00266-008-9274-z.

- Boutellaa, A. Hadid, M. Bengherabi and S. Ait-Aoudia, “On the use of Kinect depth data for identity, gender and ethnicity classification from facial images”, Pattern Recognition Letters, vol. 68, pp. 270-277, 2015. https://doi.org/10.1016/j.patrec.2015.06.027.

- Narang and T. Bourlai, “Gender and ethnicity classification using deep learning in heterogeneous face recognition”, 2016 International Conference on Biometrics (ICB), 2016. https://doi.org/10.1109/ICB.2016.7550082.

- Wang, X. Duan, X. Liu, C. Wang and Z. Li, “Semantic description method for face features of larger Chinese ethnic groups based on improved WM method”, Neurocomputing, vol. 175, pp. 515-528, 2016. https://doi.org/10.1016/j.neucom.2015.10.089.

- Zhao and S. Bentin, “Own- and other-race categorization of faces by race, gender, and age”, Psychonomic Bulletin & Review, vol. 15, no. 6, pp. 1093-1099, 2008. https://doi.org/10.3758/PBR.15.6.1093.

- Lu, H. Chen and A. Jain, “Multimodal Facial Gender and Ethnicity Identification”, Advances in Biometrics, pp. 554-561, 2005. https://doi.org/10.1007/11608288_74.

- Guo and G. Mu, “A framework for joint estimation of age, gender and ethnicity on a large database”, Image and Vision Computing, vol. 32, no. 10, pp. 761-770, 2014. https://doi.org/10.1016/j.imavis.2014.04.011.

- da Silva, L. Magri, L. Andrade and M. da Silva, “Three-dimensional analysis of facial morphology in Brazilian population with Caucasian, Asian, and Black ethnicity”, Journal of Oral Research and Review, vol. 9, no. 1, p. 1, 2017. http://dx.doi.org/10.1590/0103-6440201802027.

- Vo, T. Nguyen and C. Le, “Race Recognition Using Deep Convolutional Neural Networks”, Symmetry, vol. 10, no. 11, p. 564, 2018. https://doi.org/10.3390/sym10110564.

- Bobeldyk and A. Ross, “Analyzing Covariate Influence on Gender and Race Prediction From Near-Infrared Ocular Images”, IEEE Access, vol. 7, pp. 7905-7919, 2019. https://doi.org/10.1109/ACCESS.2018.2886275.

- Samangooei and M. Nixon, “Performing content-based retrieval of humans using gait biometrics”, Multimedia Tools and Applications, vol. 49, no. 1, pp. 195-212, 2009. https://doi.org/10.1007/s11042-009-0391-8.

- Kale, A. Roychowdhury and R. Chellappa, “Fusion of gait and face for human identification”, 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing. https://doi.org/10.1109/ICASSP.2004.1327257.

- Sarkar, P. Phillips, Z. Liu, I. Vega, P. Grother and K. Bowyer, “The humanID gait challenge problem: data sets, performance, and analysis”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 27, no. 2, pp. 162-177, 2005. https://doi.org/10.1109/TPAMI.2005.39.

- Ju Han and Bir Bhanu, “Individual recognition using gait energy image”, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 28, no. 2, pp. 316-322, 2006. https://doi.org/10.1109/TPAMI.2006.38.

- Gomez-Ezeiza, J. Torres-Unda, N. Tam, J. Irazusta, C. Granados and J. Santos-Concejero, “Race walking gait and its influence on race walking economy in world-class race walkers”, Journal of Sports Sciences, vol. 36, no. 19, pp. 2235-2241, 2018. https://doi.org/10.1080/02640414.2018.1449086.

- Wang, Q. Zhang, X. Duan and J. Gan, “Multi-ethnical Chinese facial characterization and analysis,” Multimedia Tools and Applications, 2018. https://doi.org/10.1007/s11042-018-6018-1.

- Hosoi, E. Takikawa and M. Kawade, “Ethnicity estimation with facial images,” Sixth IEEE International Conference on Automatic Face and Gesture Recognition, 2004. https://doi.org/10.1109/AFGR.2004.1301530.

- Yongsheng, W. Xinyu, Q. Huihuan and Yangsheng Xu, “A real time race categorization system,” IEEE International Conference on Information Acquisition, 2005.

- Uddin and S. Chowdhury, “An integrated approach to classify gender and ethnicity,” 2016 International Conference on Innovations in Science, Engineering and Technology (ICISET), 2016. https://doi.org/10.1109/ICISET.2016.7856480.

- Heng, M. Dipu and K. Yap, “Hybrid Supervised Deep Learning for Ethnicity Categorization using Face Images,” 2018 IEEE International Symposium on Circuits and Systems (ISCAS), 2018.

- Masood, S. Gupta, A. Wajid, S. Gupta and M. Ahmed, “Prediction of Human Ethnicity from Facial Images Using Neural Networks,” Advances in Intelligent Systems and Computing, pp. 217-226, 2017. https://doi.org/10.1007/978-981-10-3223-3_20.

- Das, A. Dantcheva and F. Bremond, “Mitigating Bias in Gender, Age and Ethnicity Classification: a Multi-Task Convolution Neural Network Approach,” ECCV, 2018. https://link.springer.com/conference/eccv.

- Srinivas, H. Atwal, D. Rose, G. Mahalingam, K. Ricanek and D. Bolme, “Age, Gender, and Fine-Grained Ethnicity Prediction Using Convolutional Neural Networks for the East Asian Face Dataset”, 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), 2017. https://doi.org/10.1109/FG.2017.118.

- Mohammad and J. Al-Ani, “Convolutional Neural Network for Ethnicity Classification using Ocular Region in Mobile Environment”, 2018 10th Computer Science and Electronic Engineering (CEEC), 2018. https://doi.org/10.1109/CEEC.2018.8674194.

- Bagchi, D. Geraldine Bessie Amali and M. Dinakaran, “Accurate Facial Ethnicity Classification Using Artificial Neural Networks Trained with Galactic Swarm Optimization Algorithm”, Advances in Intelligent Systems and Computing, pp. 123-132, 2018. https://doi.org/10.1007/978-981-13-3329-3_12.

- Huang, H. Ding, C. Wang, Y. Wang, G. Zhang and L. Chen, “Local circular patterns for multi-modal facial gender and ethnicity categorization,” 2018. https://doi.org/10.1016/j.imavis.2014.06.009.

- Mohammad and J. Al-Ani, “Towards ethnicity detection using learning based classifiers,” 2017 9th Computer Science and Electronic Engineering (CEEC), 2017. https://doi.org/10.1109/CEEC.2017.8101628.

- Benton and A. Skinner, “Deciding on race: A diffusion model analysis of race-categorisation,” 2018. https://doi.org/10.1016/j.cognition.2015.02.011.

- Stepanova and M. Strube, “The role of skin color and facial physiognomy in racial categorization: Moderation by implicit racial attitudes,” Journal of Experimental Social Psychology, vol. 48, no. 4, pp. 867-878, 2012. https://doi.org/10.1016/j.jesp.2012.02.019.

- Zhang, Y. Wang and Z. Zhang, “Ethnicity Classification Based on a Hierarchical Fusion”, Biometric Recognition, pp. 300-307, 2012. https://doi.org/10.1007/978-3-642-35136-5_36.

- Levitan, Y. Levitan, G. An, M. Levine, R. Levitan, A. Rosenberg and J. Hirschberg, “Identifying Individual Differences in Gender, Ethnicity, and Personality from Dialogue for Deception Detection,” NAACL-HLT, pp. 40-44, 2016.

- Zhang, Y. Wang, Z. Zhang and M. Hu, “Ethnicity classification based on fusion of face and gait”, 2012 5th IAPR International Conference on Biometrics (ICB), 2012. https://doi.org/10.1109/ICB.2012.6199781.

- Nagrani, S. Albanie and A. Zisserman, “Seeing Voices and Hearing Faces: Cross-Modal Biometric Matching,” IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- Hengliang Tang, Yanfeng Sun, Baocai Yin and Yun Ge, “Face recognition based on Haar LBP histogram”, 2010 3rd International Conference on Advanced Computer Theory and Engineering(ICACTE), 2010. https://doi.org/10.1109/ICACTE.2010.5579370.

- Sáenz and M. Morales, “6 Demography of Race and Ethnicity”, Handbooks of Sociology and Social Research, pp. 163-207, 2019. https://doi.org/10.1007/978-3-030-10910-3_7.

- Qiu, S. Fang and K. Song, “Method and apparatus for face classification,” Google Patents, 2018.

- Lv, Z. Wu, D. Zhang, X. Wang and M. Zhou, “3D Nose shape net for human gender and ethnicity classification”, Pattern Recognition Letters, 2018. https://doi.org/10.1016/j.patrec.2018.11.010.

- Singh, S. Nagpal, M. Vatsa, R. Singh, A. Noore and A. Majumdar, “Gender and ethnicity classification of Iris images using deep class-encoder”, 2017 IEEE International Joint Conference on Biometrics (IJCB), 2017.

- Jang, J. Yoon, Y. Kim and Y. Park, “Classification of Iris Colors and Patterns in Koreans”, Healthcare Informatics Research, vol. 24, no. 3, p. 227, 2018. https://doi.org/10.4258/hir.2018.24.3.227.

- Mabuza-Hocquet, F. Nelwamondo and T. Marwala, “Ethnicity Distinctiveness Through Iris Texture Features Using Gabor Filters”, Intelligent Information and Database Systems, pp. 551-560, 2017. https://doi.org/10.1007/978-3-319-54430-4_53.

- Bobeldyk and A. Ross, “Analyzing Covariate Influence on Gender and Race Prediction From Near-Infrared Ocular Images”, IEEE Access, vol. 7, pp. 7905-7919, 2019. https://doi.org/10.1109/ACCESS.2018.2886275.

- Chen, M. Gao, K. Ricanek, W. Xu and B. Fang, “A Novel Race Classification Method Based on Periocular Features Fusion”, International Journal of Pattern Recognition and Artificial Intelligence, vol. 31, no. 08, p. 1750026, 2017. https://doi.org/10.1142/S0218001417500264.

- Arseev, A. Konushin and V. Liutov, “Human Recognition by Appearance and Gait”, Programming and Computer Software, vol. 44, no. 4, pp. 258-265, 2018. https://doi.org/10.1134/S0361768818040035.

- Chi, J. Wang and M. Meng, “A Gait Recognition Method for Human Following in Service Robots”, IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 48, no. 9, pp. 1429-1440, 2018. https://doi.org/10.1109/TSMC.2017.2660547.

- Rida, A. Bouridane, G. Marcialis and P. Tuveri, “Improved Human Gait Recognition”, Image Analysis and Processing ICIAP 2015, pp. 119-129, 2015. https://doi.org/10.1007/978-3-319-23234-8_12.

- Shah and N. Davis, “Comparing Three Methods of Measuring Race/Ethnicity”, The Journal of Race, Ethnicity, and Politics, vol. 2, no. 1, pp. 124-139, 2017. https://doi.org/10.1017/rep.2016.27.

- Lee, B. Chon, S. Lin, M. He and S. Lin, “Association of Ocular Conditions With Narrow Angles in Different Ethnicities”, American Journal of Ophthalmology, vol. 160, no. 3, pp. 506-515.e1, 2015. https://doi.org/10.1016/j.ajo.2015.06.002.

- Latinwo, A. Falohun, E. Omidiora and B. Makinde, “Iris Texture Analysis for Ethnicity Classification Using Self-Organizing Feature Maps”, Journal of Advances in Mathematics and Computer Science, vol. 25, no. 6, pp. 1-10, 2018. https://doi.org/10.9734/JAMCS/2017/29634.

- Mabuza-Hocquet, F. Nelwamondo and T. Marwala, “Ethnicity Prediction and Classification from Iris Texture Patterns: A Survey on Recent Advances”, 2016 International Conference on Computational Science and Computational Intelligence (CSCI), 2016. https://doi.org/10.1109/CSCI.2016.0159.

- Singh, S. Nagpal, M. Vatsa, R. Singh, A. Noore and A. Majumdar, “Gender and ethnicity categorization of Iris images using deep class-encoder,” 2017 IEEE International Joint Conference on Biometrics (IJCB), 2017.

- Lagree and K. Bowyer, “Predicting ethnicity and gender from iris texture,” 2011 IEEE International Conference on Technologies for Homeland Security (HST), 2011. https://doi.org/10.1109/THS.2011.6107909.

- Huri, E. (Omid) David and N. Netanyahu, “DeepEthnic: Multi-label Ethnic Classification from Face Images”, Artificial Neural Networks and Machine Learning – ICANN 2018, pp. 604-612, 2018. https://doi.org/10.1007/978-3-030-01424-7_59.

- Wang, Q. Zhang, W. Liu, Y. Liu and L. Miao, “Facial feature discovery for ethnicity recognition”, Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, vol. 9, no. 1, p. e1278, 2018. https://doi.org/10.1002/widm.1278.

- Sajid et al., “Facial Asymmetry-Based Anthropometric Differences between Gender and Ethnicity”, Symmetry, vol. 10, no. 7, p. 232, 2018. https://doi.org/10.3390/sym10070232.

- Shakhnarovich, L. Lee and T. Darrell, “Integrated face and gait recognition from multiple views”, Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. CVPR 2001. https://doi.org/10.1109/CVPR.2001.990508.

- Zhang, Y. Wang and Z. Zhang, “Ethnicity Categorization Based on a Hierarchical Fusion,” Biometric Recognition, pp. 300-307, 2012. https://doi.org/10.1007/978-3-642-35136-5_36.

- Mecca, “Impact of Gait Stabilization: A Study on How to Exploit it for User Recognition”, 2018 14th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), 2018. https://doi.org/10.1109/SITIS.2018.00090.

- Liu, K. Lu, S. Yan, M. Sun, D. Lester and K. Zhang, “Gait phase varies over velocities”, Gait & Posture, vol. 39, no. 2, pp. 756-760, 2014. https://doi.org/10.1016/j.gaitpost.2013.10.009.

- Wang, S. Yu, Y. Wang and T. Tan, “Gait Recognition Based on Fusion of Multi-view Gait Sequences”, Advances in Biometrics, pp. 605-611, 2005. https://doi.org/10.1007/11608288_80.

- Xiaxi Huang and N. Boulgouris, “Gait Recognition using Multiple Views”, 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, 2008. https://doi.org/10.1109/ICASSP.2008.4517957.

- Ross and A. Jain, “Information fusion in biometrics”, Pattern Recognition Letters, vol. 24, no. 13, pp. 2115-2125, 2003. https://doi.org/10.1016/S0167-8655(03)00079-5.

- Deng and C. Wang, “Human gait recognition based on deterministic learning and data stream of Microsoft Kinect”, IEEE Transactions on Circuits and Systems for Video Technology, pp. 1-1, 2018. https://doi.org/10.1109/TCSVT.2018.2883449.

- Sturm, K. Donahue, M. Kasting, A. Kulkarni, N. Brewer and G. Zimet, “Pediatrician-Parent Conversations About Human Papillomavirus Vaccination: An Analysis of Audio Recordings,” Journal of Adolescent Health, vol. 61, no. 2, pp. 246-251, 2017. https://doi.org/10.1016/j.jadohealth.2017.02.006.

- Rehman, G. Khan, A. Siddiqi, A. Khan and U. Khan, “Modified Texture Features from Histogram and Gray Level Co-occurence Matrix of Facial Data for Ethnicity Detection”, 2018 5th International Multi-Topic ICT Conference (IMTIC), 2018. https://doi.org/10.1109/IMTIC.2018.8467231.

- Loo, T. Lim, L. Ong and C. Lim, “The influence of ethnicity in facial gender estimation”, 2018 IEEE 14th International Colloquium on Signal Processing & Its Applications (CSPA), 2018. https://doi.org/10.1109/CSPA.2018.8368710.

- Young, D., Sanchez, D. and Wilton, L. (2019). Biracial perception in black and white: How Black and White perceivers respond to phenotype and racial identity cues. https://doi.org/10.1037/cdp0000103.

- Zhang, Y. Wang and B. Bhanu, “Ethnicity classification based on gait using multi-view fusion”, 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition – Workshops, 2010. https://doi.org/10.1109/CVPRW.2010.5544614.

- Krivo, M. Vélez, C. Lyons, J. Phillips and E. Sabbath, “Race, Crime, And The Changing Fortunes of Urban Neighborhoods, 1999–2013”, Du Bois Review: Social Science Research on Race, vol. 15, no. 1, pp. 47-68, 2018. https://doi.org/10.1017/S1742058X18000103.

- Billy Huang, “Immigrant crime in Taiwan: perspectives from Eastern Asia”, Forensic Research & Criminology International Journal, vol. 6, no. 3, 2018. https://doi.org/10.15406/frcij.2018.06.00210.

- Hollis and R. Martínez, “Theoretical Approaches to the Study of Race, Ethnicity, Crime, and Criminal Justice”, The Handbook of Race, Ethnicity, Crime, and Justice, pp. 203-207, 2018. https://doi.org/10.1002/9781119113799.part2.

- Gabbidon and H. Greene, “Race and Crime,” SAGE Publication, 2019.

- Awaworyi Churchill and E. Laryea, “Crime and Ethnic Diversity: Cross-Country Evidence”, Crime & Delinquency, vol. 65, no. 2, pp. 239-269, 2017. https://doi.org/10.1177/0011128717732036.

- Martínez, M. Hollis and J. Stowell, “The Handbook of Race, Ethnicity, Crime, and Justice”, 2018. https://doi.org/10.1002/9781119113799.part1.

- Fernandes and R. Crutchfield, “Race, Crime, and Criminal Justice”, Criminology & Public Policy, vol. 17, no. 2, pp. 397-417, 2018. https://doi.org/10.1111/1745-9133.12361.

- Martínez and M. Hollis, “An Overview of Race, Ethnicity, Crime, and Justice”, The Handbook of Race, Ethnicity, Crime, and Justice, pp. 11-16, 2018. https://doi.org/10.1002/9781119113799.part1.

- Unnever, “Ethnicity and Crime in the Netherlands”, International Criminal Justice Review, vol. 29, no. 2, pp. 187-204, 2018. https://doi.org/10.1177/1057567717752218.

- A. LaVeist, “Beyond dummy variables and sample selection: what health services researchers ought to know about race as a variable,” Health Serv Res, pp. 1-16, 1994.

- Stewart, “Medico-legal aspects of the skeleton. I. Age, sex, race and stature,” American Journal of Physical Anthropology, vol. 6, no. 3, pp. 315-322, 1948. https://doi.org/10.1002/ajpa.1330060306.

- Dubey, S. Roy and S. Verma, “Significance of Sacral Index in Sex Determination and Its Comparative Study in Different Races”, 2019. https://doi.org/10.16965/ijar.2016.153.

- Kumar, “Forensic odontology: A medico legal guide for police personnel”, International Journal of Forensic Odontology, vol. 2, no. 2, p. 80, 2017. https://doi.org/10.4103/ijfo.ijfo_13_17.

- Betancourt, “Defining Cultural Competence: A Practical Framework for Addressing Racial/Ethnic Disparities in Health and Health Care”, Public Health Reports, vol. 118, no. 4, pp. 293-302, 2003. https://doi.org/10.1093/phr/118.4.293.

- Williams, R. Walker and L. Egede, “Achieving Equity in an Evolving Healthcare System: Opportunities and Challenges”, The American Journal of the Medical Sciences, vol. 351, no. 1, pp. 33-43, 2016. https://doi.org/10.1016/j.amjms.2015.10.012.

- Jones, B. Truman, L. Elam-Evans, “Using Socially Assigned Race to Probe White Advantages in Health Status,” Race, Ethnicity, and Health: A Public Health Reader, 2013.

- Harris, D. Cormack and J. Stanley, “The relationship between socially-assigned ethnicity, health and experience of racial discrimination for Māori: analysis of the 2006/07 New Zealand Health Survey”, BMC Public Health, vol. 13, no. 1, 2013. https://doi.org/10.1186/1471-2458-13-844.

- Cormack, R. Harris and J. Stanley, “Investigating the Relationship between Socially-Assigned Ethnicity, Racial Discrimination and Health Advantage in New Zealand”, PLoS ONE, vol. 8, no. 12, p. e84039, 2013. https://doi.org/10.1371/journal.pone.0084039.

- Harris, D. Cormack, J. Stanley and R. Rameka, “Investigating the Relationship between Ethnic Consciousness, Racial Discrimination and Self-Rated Health in New Zealand”, PLOS ONE, vol. 10, no. 2, p. e0117343, 2015. https://doi.org/10.1371/journal.pone.0117343.

- Lee, S. Veeranki, H. Serag, K. Eschbach and K. Smith, “Improving the Collection of Race, Ethnicity, and Language Data to Reduce Healthcare Disparities: A Case Study from an Academic Medical Center,” Perspect Health Inf Manag, vol. 13, 2016.

- Holliday, “Aesthetic surgery as false beauty”, Feminist Theory, vol. 7, no. 2, pp. 179-195, 2006. https://doi.org/10.1177/1464700106064418.

- A. Elsamny, A. N. Rabie, A. N. Abdelhamid and E. A. Sobhi, “Anthropometric Analysis of the External Nose of the Egyptian Males,” Aesthetic Plastic Surgery, vol. 42, no. 5, pp. 1343–1356, 2018. https://doi.org/10.1007/s00266-018-1197-8.

- Mohammed, T. Mokhtari, S. Ijaz, A. Omotosho, A. Ngaski, M. Milanifard and G. Hassanzadeh, “Anthropometric study of nasal index in Hausa ethnic population of northwestern Nigeria,” Journal of contemporary medical science, vol. 4, 2018.

- Sa, O. B, A. Gt, F. Op and I. Ag, “Anthropometric Characterization of Nasal Parameters in Adults Oyemekun Ethnic Group in Akure Southwest Nigeria”, International Journal of Anatomy and Research, vol. 6, no. 22, pp. 5272-5279, 2018. https://dx.doi.org/10.16965/ijar.2018.178.

- Ozdemir, D. Sigirli, I. Ercan and N. Cankur, “Photographic Facial Soft Tissue Analysis of Healthy Turkish Young Adults: Anthropometric Measurements,” Aesthetic Plastic Surgery, vol. 33, no. 2, pp. 175-184, 2008. https://doi.org/10.1007/s00266-008-9274-z.

- Plemons, “Gender, Ethnicity, and Transgender Embodiment: Interrogating Classification in Facial Feminization Surgery”, Body & Society, vol. 25, no. 1, pp. 3-28, 2018. https://doi.org/10.1177/1357034X18812942.

- Elsamny, A. Rabie, A. Abdelhamid and E. Sobhi, “Anthropometric Analysis of the External Nose of the Egyptian Males”, Aesthetic Plastic Surgery, vol. 42, no. 5, pp. 1343-1356, 2018. https://doi.org/10.1007/s00266-018-1197-8.

- Marsh, K. Pezdek and D. Ozery, “The cross-race effect in face recognition memory by bicultural individuals,” Acta Psychologica, vol. 169, pp. 38-44, 2016. https://doi.org/10.1016/j.actpsy.2016.05.003.

- Tong, T. Key, J. Sobiecki and K. Bradbury, “Anthropometric and physiologic characteristics in white and British Indian vegetarians and nonvegetarians in the UK Biobank”, The American Journal of Clinical Nutrition, vol. 107, no. 6, pp. 909-920, 2018. https://doi.org/10.1093/ajcn/nqy042.

- Mehta and R. Srivastava, “The Indian nose: An anthropometric analysis”, Journal of Plastic, Reconstructive & Aesthetic Surgery, vol. 70, no. 10, pp. 1472-1482, 2017. https://doi.org/10.1016/j.bjps.2017.05.042.

- Ozsahın, E. Kızılkanat, N. Boyan, R. Soames and O. Oguz, “Evaluation of Face Shape in Turkish Individuals,” Int. J. Morphol., 2016. https://scielo.conicyt.cl/pdf/ijmorphol/v34n3/art15.pdf.

- Chettri, D. Sinha, D. Chakraborty and D. Jain, “Naso-facial anthropometric study of Female Sikkimese University Students”, IOSR Journal of Dental and Medical Sciences, vol. 16, no. 03, pp. 49-54, 2017. https://doi.org/10.9790/0853-1603074954.

- Ho and K. Pezdek, “Postencoding cognitive processes in the cross-race effect: Categorization and individuation during face recognition”, Psychonomic Bulletin & Review, vol. 23, no. 3, pp. 771-780, 2015. https://doi.org/10.3758/s13423-015-0945-x.

- Cavazos, E. Noyes and A. O’Toole, “Learning context and the other-race effect: Strategies for improving face recognition”, Vision Research, 2018. https://doi.org/10.1016/j.visres.2018.03.003.

- Anacleto and A. Carvalho, “Improving Human-Computer Interaction by Developing Culture-sensitive Applications based on Common Sense Knowledge,” Human-Computer Interaction.

- Cowell and K. Stanney, “Manipulation of non-verbal interaction style and demographic embodiment to increase anthropomorphic computer character credibility”, International Journal of Human-Computer Studies, vol. 62, no. 2, pp. 281-306, 2005. https://doi.org/10.1016/j.ijhcs.2004.11.008.

- Schlesinger, W. Edwards and R. Grinter, “Intersectional HCI”, Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems – CHI ’17, 2017. https://doi.org/10.1145/3025453.3025766.

- Munteanu, H. Molyneaux, W. Moncur, M. Romero, S. O’Donnell and J. Vines, “Situational Ethics”, Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems – CHI ’15, 2015. https://doi.org/10.1145/2702123.2702481.

- Schlesinger, W. Edwards and R. Grinter, “Intersectional HCI,” Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems – CHI ’17, 2017. https://doi.org/10.1145/3025453.3025766.

- Bellini et al., “Feminist HCI,” Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems – CHI ’18, 2018. https://doi.org/10.1145/3170427.3185370.

- Breslin and B. Wadhwa, “Towards a Gender HCI Curriculum,” Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems – CHI EA ’15, 2015. https://doi.org/10.1145/2702613.2732923.

- Yardi and A. Bruckman, “Income, race, and class,” Proceedings of the 2012 ACM annual conference on Human Factors in Computing Systems – CHI ’12, 2012. https://doi.org/10.1145/2212776.

- M. Allison and L. Kendrick, “Towards an Expressive Embodied Conversational Agent Utilizing Multi-Ethnicity to Augment Solution Focused Therapy,” International Florida Artificial Intelligence Research Society Conference, pp. 332-337, 2013.

- J. Waycott et al., “Ethical Encounters in Human-Computer Interaction”, Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems – CHI EA ’16, 2016. https://doi.org/10.1145/2851581.2856498.

- P. Kumar, M. Bashour and V. Packiriswamy, “Anthropometric and Anthroposcopic Analysis of Periorbital Features in Malaysian Population: An Inter-racial Study”, Facial Plastic Surgery, vol. 34, no. 04, pp. 400-406, 2018. https://doi.org/10.1055/s-0038-1648224.

- S. Jilani, H. Ugail and A. Logan, “Inter-Ethnic and Demic-Group Variations in Craniofacial Anthropometry: A Review,” PSM Biological Research, vol. 1, 2019.