Automated Abaca Fiber Grade Classification Using Convolution Neural Network (CNN)

Volume 5, Issue 3, Page No 207-213, 2020

Authors: Neptali Montañez, Jomari Joseph Barrera a)


Department of Computer Science and Technology, Visayas State University, Baybay City, Leyte, 6521, Philippines

a)Author to whom correspondence should be addressed. E-mail: jomarijoseph.barrera@vsu.edu.ph

Adv. Sci. Technol. Eng. Syst. J. 5(3), 207-213 (2020); DOI: 10.25046/aj050327


Keywords: Artificial intelligence, Deep learning, Convolutional neural network, Abaca fiber grade, VGGNet-16, CNN classifier


This paper presents a solution that automates Abaca fiber grading, which would help speed up the time-consuming baling of Abaca fiber produce. The study introduces an objective instrument paired with a system that automates the grade classification of Abaca fiber using a Convolutional Neural Network (CNN). A total of 140 sample images of abaca fiber were used, divided into two sets of 70 images (10 per grade), one for training and one for testing. The input images were scaled to 112×112 pixels, and the training-set images were used to train a customized version of the VGGNet-16 CNN architecture. Finally, the performance of the classifier was evaluated by computing the overall accuracy of the system and its Cohen kappa value. The classifier achieved 83% accuracy in correctly classifying the Abaca fiber grade of a sample image and obtained a Cohen kappa value of 0.52, corresponding to a weak level of agreement. Implementing this system would help Abaca producers and traders ensure that their Abaca fiber is graded fairly and efficiently, maximizing their profit.

Received: 02 April 2020, Accepted: 06 May 2020, Published Online: 19 May 2020

1. Introduction

Abaca (Musa textilis), known in international trade as Manila hemp, is indigenous to the Philippines. The Philippines is the world’s largest producer of abaca fiber, accounting for about 85% of global production. Abaca is cultivated on 130 thousand hectares across the country by over 90 thousand farmers. It is one of the country’s major export products, together with banana, coconut oil, and pineapple. The agricultural sector to which these export crops belong is very important to the Philippine economy, contributing about 16% of Gross Domestic Product (GDP). The abaca industry continues to hold a strong position in domestic and international markets, with an average annual baling of 424,212 bales (125 kg per bale) from 2012 to 2017 [1].

The Philippine Fiber Industry Development Authority (PhilFIDA) is the government agency tasked with grading Abaca fibers, whose grades differ partly because of the different serrations of stripping knives and the use of spindle or machine strippers. Thirteen fiber classifications are currently used in the market, based on texture, color, length and strength: eight normal grades (see Table 1), four residual grades and one wide-strip grade [2]. Many manufacturing industries, such as those producing pulp, paper products, and automotive components, require large quantities of fiber of different classifications.

Owing to the lack of instruments for objectively measuring fiber quality, grading and classification must be done by visual inspection, which is time-consuming and costly. Addressing this gap is the purpose of this study.

2. Related Work

Abaca fiber is considered one of the strongest natural fibers, about three times stronger than sisal [3]. It can be propagated from disease-free tissue-cultured plantlets, from corms cut into four pieces with one eye each (seed pieces), and from suckers (used for replanting missing hills) [4]. It is mostly grown in upland areas on light-textured soils under the shade of coconut trees. Abaca is harvested 18–24 months after planting by cutting the stem with a sharp machete. The fibers are then stripped by hand or by spindle stripping, sun-dried, and sold on an ‘all in’ basis [5]. Local traders then deliver the Abaca fiber bundles to a Grading Baling Establishment (GBE), where they undergo grading and classification.

In a study by [6], the grade of an Abaca fiber bundle image was predicted by two neural-network learning algorithms, a Self-Organizing Map (SOM) and a Back-Propagation Neural Network (BPNN), using only the color of the eight normal grades. SOM achieved an accuracy of 46%, while BPNN achieved 88%, making BPNN the more effective alternative for identifying Abaca fiber grades from the color classification provided by PhilFIDA. However, that study did not determine the grade of the Abaca fiber from its texture, which is the primary basis for grading its quality.

Table 1: Normal grades of hand-stripped abaca fiber [6]

| Grade name | Alphanumeric code | Fiber strand size (mm) | Color | Stripping | Texture |
|---|---|---|---|---|---|
| Mid current | EF | 0.20 – 0.50 | Light ivory to a hue of very light brown to very light ochre | Excellent | Soft |
| Streaky Two | S2 | 0.20 – 0.50 | Ivory white, slightly tinged with very light brown to red or purple streak | Excellent | Soft |
| Streaky Three | S3 | 0.20 – 0.50 | Predominant color: light to dark red or purple or a shade of dull to dark brown | Excellent | Soft |
| Current | I | 0.51 – 0.99 | Very light brown to light brown | Good | Medium soft |
| Soft seconds | G | 0.51 – 0.99 | Dingy white, light green and dull brown | Good | |
| Soft brown | H | 0.51 – 0.99 | Dark brown | Good | |
| Seconds | JK | 0.51 – 0.99 | Dull brown to dingy light brown or dingy light yellow, frequently streaked with light green | Fair | |
| Medium brown | M1 | 0.51 – 0.99 | Dark brown to almost black | Fair | |

In [7], combining hand-crafted (color and texture) features with convolutional neural network (CNN) features greatly improved the classification of images acquired under different lighting conditions. The same study also found that the VGGNet-16 CNN architecture outperformed the other CNN architectures it evaluated.

Furthermore, CNNs have achieved human-like performance in several recognition tasks, such as handwritten character recognition, face recognition, scene labeling, object detection, and image classification, among others [8–16].

3. Material and Method

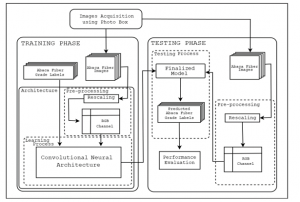

Figure 1 illustrates the system architecture applied in this study. The Abaca fiber grade classifier consists of two phases, training and testing. Each phase starts with image acquisition, followed by sorting the samples into training and testing folders. Each image is then rescaled from 2976×2976 pixels to 112×112 pixels and converted to RGB.
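The rescaling and RGB conversion steps can be sketched as follows. The paper does not say which library or interpolation method was used, so this minimal, dependency-free sketch assumes nearest-neighbour sampling and represents an image as nested lists of pixel tuples; the names `to_rgb`, `resize_nearest` and `preprocess` are hypothetical. In practice an imaging library (for example Pillow's `Image.convert("RGB")` and `Image.resize`) would normally do this work.

```python
from typing import List, Tuple

Pixel = Tuple[int, ...]     # (gray,), (r, g, b) or (r, g, b, a)
Image = List[List[Pixel]]   # row-major grid of pixels


def to_rgb(pixel: Pixel) -> Tuple[int, int, int]:
    """Convert a grayscale, RGB or RGBA pixel to a 3-channel RGB pixel."""
    if len(pixel) == 1:                 # grayscale: replicate the single channel
        return (pixel[0], pixel[0], pixel[0])
    if len(pixel) in (3, 4):            # RGB or RGBA: keep R, G, B and drop alpha
        return (pixel[0], pixel[1], pixel[2])
    raise ValueError(f"unsupported pixel with {len(pixel)} channels")


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize (assumed method): each output pixel copies
    the input pixel its coordinates map onto after scaling."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[(y * in_h) // out_h][(x * in_w) // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def preprocess(img: Image, size: int = 112) -> Image:
    """Rescale a square capture (e.g. 2976x2976) to size x size, then force RGB."""
    small = resize_nearest(img, size, size)
    return [[to_rgb(p) for p in row] for row in small]


if __name__ == "__main__":
    # Hypothetical 224x224 grayscale capture, kept small for the demo
    demo = [[(x % 256,) for x in range(224)] for _ in range(224)]
    out = preprocess(demo)
    print(len(out), len(out[0]), out[0][1])   # 112 112 (2, 2, 2)
```

Nearest-neighbour sampling keeps exactly one source pixel per output pixel; an area-averaging filter would reduce aliasing when shrinking by a factor of about 26.6 (2976/112), which is one reason the interpolation choice is worth recording alongside the target size.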

3.1. Image Acquisition and Pre-processing

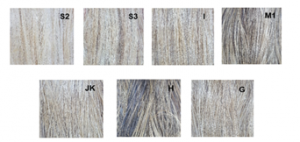

The abaca fiber samples (see Figure 2) were requested from Ching Bee Trading Corporation in Brgy. Hilapnitan, Baybay City, Leyte, and the actual grading was done by a certified PhilFIDA inspector. Only seven grades (S2, S3, I, G, H, JK, M1) were segregated for image acquisition: EF-grade fiber was not available during the sampling season because of the ongoing El Niño phenomenon and other climate change factors.

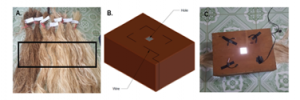

The authors of [7] further explain that differences in the viewpoint and illumination of the acquired digital image strongly affect the classification accuracy of the system. Therefore, Abaca fiber sample images were acquired in a controlled environment using an objective instrument: a customized photo box and a 16.0-megapixel Samsung S6 camera positioned 19 cm above the sample, photographing only the middle part of the fiber, as shown in Figure 3.

3.2. Training Phase

The CNN architecture implemented in this study is shown in Figure 4. It consists of five convolutional layers, each followed by a rectified linear unit (ReLU) activation function, and three pooling layers. The output of the last pooling layer is flattened into a single vector, and the resulting 1024 values are fed as inputs to a fully connected network trained with the back-propagation algorithm. Stochastic gradient descent (SGD) was used as the optimizer to find the weights and biases between neurons that minimize the loss function. All weights and biases are initialized randomly. Dropout and batch normalization were also applied during training.
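Figure 4 is not reproduced in the text, so the sketch below does not attempt to rebuild the customized VGGNet-16. It only illustrates, on a toy single-channel input, what one convolution, ReLU, max-pooling and flattening pass computes. The kernel is hand-picked for illustration (in the actual network the kernels are learned by back-propagation with SGD), 2×2 stride-2 pooling is an assumption because the paper does not state the pool size, and dropout and batch normalization are omitted. All function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(x: Matrix, k: Matrix, bias: float = 0.0) -> Matrix:
    """Single-channel 2-D convolution (cross-correlation, as in CNN libraries)
    with stride 1 and no padding."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [
            sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw)) + bias
            for j in range(ow)
        ]
        for i in range(oh)
    ]


def relu(x: Matrix) -> Matrix:
    """Rectified linear unit applied element-wise."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool2x2(x: Matrix) -> Matrix:
    """2x2 max pooling with stride 2; an odd trailing row/column is dropped."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


def flatten(x: Matrix) -> List[float]:
    """Turn a 2-D feature map into the 1-D vector fed to the dense layers."""
    return [v for row in x for v in row]


if __name__ == "__main__":
    x = [[1, 2, 0, 1, 3],
         [0, 1, 3, 2, 1],
         [2, 0, 1, 0, 2],
         [1, 3, 0, 1, 0],
         [0, 1, 2, 3, 1]]
    k = [[1, -1], [-1, 1]]               # toy diagonal-edge detector
    features = flatten(max_pool2x2(relu(conv2d_valid(x, k))))
    print(features)                       # [4.0, 3.0, 4.0, 2.0]
```

If, as is common in VGG-style networks, the convolutions used "same" padding and each pooling layer halved the resolution, the three pooling layers would reduce a 112×112 input to 14×14 per channel before flattening; the paper does not state these settings, so this is only an illustration of how the spatial size shrinks.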

3.3. Testing Phase and Performance Evaluation

The model and label files generated in the training phase are used in the testing phase. Ten abaca fiber samples per grade were used as testing data to determine the performance of the system. The results are tabulated in a confusion matrix, from which the classifier’s accuracy is computed using (1) and its Cohen kappa value using (2). The classifier’s level of agreement is then evaluated from the Cohen kappa value (see Table 2).
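The balanced split used here (10 images per grade for training and 10 for testing, as stated in the abstract) can be sketched as below. The paper does not say how individual images were assigned to each set; this sketch assumes a seeded random shuffle within each grade folder, and the function name and file names are hypothetical.

```python
import random
from typing import Dict, List, Tuple


def split_per_grade(
    images_by_grade: Dict[str, List[str]],
    n_train: int = 10,
    n_test: int = 10,
    seed: int = 0,
) -> Tuple[Dict[str, List[str]], Dict[str, List[str]]]:
    """Shuffle each grade's images and take n_train for training and the next
    n_test for testing, so both sets are balanced across grades."""
    rng = random.Random(seed)            # fixed seed gives a reproducible split
    train: Dict[str, List[str]] = {}
    test: Dict[str, List[str]] = {}
    for grade, files in images_by_grade.items():
        if len(files) < n_train + n_test:
            raise ValueError(f"grade {grade} has only {len(files)} images")
        shuffled = files[:]              # copy so the caller's list is untouched
        rng.shuffle(shuffled)
        train[grade] = shuffled[:n_train]
        test[grade] = shuffled[n_train:n_train + n_test]
    return train, test


if __name__ == "__main__":
    grades = ["S2", "S3", "I", "G", "H", "JK", "M1"]
    # Hypothetical file names: 20 captures per grade (140 in total)
    data = {g: [f"{g}_{i:02d}.jpg" for i in range(20)] for g in grades}
    train, test = split_per_grade(data)
    print(sum(map(len, train.values())), sum(map(len, test.values())))  # 70 70
```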


Figure 1: System architecture of the Abaca fiber grade classifier.

Figure 2: Sample images of abaca fiber with their corresponding grade.

Figure 3: (A) Location of the Abaca fiber, (B) CAD design of photo box, and (C) acquisition set-up of abaca fiber image.

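$$\text{Accuracy} = \frac{\text{number of correctly classified samples}}{\text{total number of samples}} \times 100\% \qquad (1)$$

$$\kappa = \frac{p_o - p_e}{1 - p_e} \qquad (2)$$

where

$p_o$ = the relative observed agreement among raters, and

$p_e$ = the hypothetical probability of chance agreement.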

4. Experimental Results

After training on the training-set abaca fiber images (see Table 3), the parameters of the model instantiated from the architecture in Figure 4 were adjusted accordingly. The trained model is then used in the testing phase as the Abaca grade classifier.

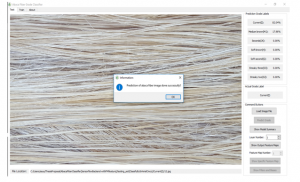

In the testing phase, the user loads an Abaca fiber image captured with the objective instrument and clicks the “Predict Grade” button, which generates a matching percentage for each grade class (see Figure 5). The model was tested on the testing dataset of 70 images (10 per grade); sample results are shown in Table 4, and the confusion matrix in Table 5 was derived from them.
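The per-grade matching percentages shown in Figure 5 are most likely the class probabilities of the network's output layer. Assuming a softmax output over the seven grades (typical for CNN classifiers, though not stated in the paper), the conversion and the predicted grade can be sketched as follows; the function names and the logits in the example are made up for illustration.

```python
import math
from typing import Dict, List

GRADES = ["S2", "S3", "I", "G", "H", "JK", "M1"]


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)                               # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def grade_percentages(logits: List[float]) -> Dict[str, float]:
    """Map the (assumed) softmax output to a matching percentage per grade."""
    return {g: 100.0 * p for g, p in zip(GRADES, softmax(logits))}


def predict_grade(logits: List[float]) -> str:
    """The predicted grade is the one with the highest matching percentage."""
    pct = grade_percentages(logits)
    return max(pct, key=pct.get)


if __name__ == "__main__":
    logits = [0.1, 0.2, 2.5, 0.3, 0.0, -1.0, 1.0]   # hypothetical network output
    for grade, p in grade_percentages(logits).items():
        print(f"{grade}: {p:.1f}%")
    print("Predicted:", predict_grade(logits))       # Predicted: I
```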

Based on the results, the classifier achieved an 83% overall accuracy in correctly classifying the Abaca fiber grade of a sample image and attained a Cohen kappa value of 0.52.
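Equations (1) and (2) can be checked against the counts in Table 5. The sketch below assumes, as the table's labels indicate, that rows are system-predicted grades and columns are actual grades (Cohen's kappa gives the same value if the two are swapped); the function names are hypothetical. For these counts p_o = 58/70 and, because every actual grade has exactly 10 test samples, p_e = 1/7, so the accuracy is about 82.9% (reported as 83%) and κ = (58/70 − 1/7)/(1 − 1/7) = 0.80. This recomputed kappa is higher than the 0.52 reported in the text.

```python
from typing import List

GRADES = ["S2", "S3", "I", "G", "H", "JK", "M1"]

# Table 5: rows = system-predicted grade, columns = actual grade (order as GRADES)
CONFUSION: List[List[int]] = [
    [10, 0, 0, 0, 0, 0, 0],    # predicted S2
    [0, 9, 0, 0, 0, 0, 0],     # predicted S3
    [0, 0, 10, 2, 0, 0, 0],    # predicted I
    [0, 0, 0, 0, 0, 0, 0],     # predicted G
    [0, 0, 0, 0, 10, 1, 0],    # predicted H
    [0, 0, 0, 0, 0, 9, 0],     # predicted JK
    [0, 1, 0, 8, 0, 0, 10],    # predicted M1
]


def overall_accuracy(cm: List[List[int]]) -> float:
    """Equation (1): correctly classified samples / all samples."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def cohen_kappa(cm: List[List[int]]) -> float:
    """Equation (2): kappa = (p_o - p_e) / (1 - p_e)."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    p_o = sum(cm[i][i] for i in range(k)) / total                      # observed agreement
    row_totals = [sum(cm[i]) for i in range(k)]                         # per predicted grade
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]    # per actual grade
    # chance agreement: both "raters" independently pick the same grade
    p_e = sum(row_totals[i] * col_totals[i] for i in range(k)) / total ** 2
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    print(f"accuracy = {overall_accuracy(CONFUSION):.4f}")   # accuracy = 0.8286
    print(f"kappa    = {cohen_kappa(CONFUSION):.2f}")        # kappa    = 0.80
```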

The overall accuracy of the system indicates that the objective instrument, paired with a customized VGGNet-16 convolutional neural network, is sufficient to support accurate classification of the Abaca fiber grade from an Abaca fiber sample image.

No grade G Abaca fibers were correctly identified; instead, they were misclassified as either grade M1 or grade I. Grade G was the only grade with 0% recall (see Table 6), while every other grade achieved at least 90%. This means that the CNN did not draw a distinct difference between the features extracted from grade G fibers and those extracted from grade M1 or I fibers. This is consistent with grades M1 and I both having 100% recall: the classifier readily assigned these two labels, including to every grade G sample.
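The recall values in Table 6 follow from Table 5 by dividing each diagonal entry by the total number of test samples of that actual grade (its column sum). The sketch below recomputes them and lists which grades the grade G samples were assigned to; the helper names are hypothetical, and rows are taken as predicted grades and columns as actual grades, as in Table 5.

```python
from typing import Dict, List

GRADES = ["S2", "S3", "I", "G", "H", "JK", "M1"]

# Table 5: rows = system-predicted grade, columns = actual grade
CONFUSION: List[List[int]] = [
    [10, 0, 0, 0, 0, 0, 0],
    [0, 9, 0, 0, 0, 0, 0],
    [0, 0, 10, 2, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 10, 1, 0],
    [0, 0, 0, 0, 0, 9, 0],
    [0, 1, 0, 8, 0, 0, 10],
]


def recall_per_grade(cm: List[List[int]]) -> Dict[str, float]:
    """Recall = correctly predicted samples of a grade / actual samples of it."""
    k = len(cm)
    recalls: Dict[str, float] = {}
    for j, grade in enumerate(GRADES):
        actual_total = sum(cm[i][j] for i in range(k))   # column sum
        recalls[grade] = cm[j][j] / actual_total if actual_total else 0.0
    return recalls


def misclassified_as(cm: List[List[int]], grade: str) -> Dict[str, int]:
    """Where the samples of an actual grade ended up, excluding correct hits."""
    j = GRADES.index(grade)
    return {GRADES[i]: cm[i][j] for i in range(len(cm)) if i != j and cm[i][j] > 0}


if __name__ == "__main__":
    for g, r in recall_per_grade(CONFUSION).items():
        print(f"{g}: {r:.0%}")
    print(misclassified_as(CONFUSION, "G"))   # {'I': 2, 'M1': 8}
```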

According to Table 2, the classifier’s Cohen kappa value implies that only about 15%–35% of the data are reliable. The low Cohen’s kappa value stems from the classifier never correctly classifying grade G, owing to the ambiguity, or lack of distinct features, between grade G Abaca fibers and grade M1 and I Abaca fibers in two-dimensional texture processing.

Table 2: Cohen’s Kappa Value – Level of Agreement Equivalency

| Value of Kappa | Level of Agreement | Percentage of Data that are Reliable |
|---|---|---|
| 0–0.20 | None | 0–4% |
| 0.21–0.39 | Minimal | 4–15% |
| 0.40–0.59 | Weak | 15–35% |
| 0.60–0.79 | Moderate | 35–63% |
| 0.80–0.90 | Strong | 64–81% |
| Above 0.90 | Almost Perfect | 82–100% |

Figure 4: Customized VGGNet-16 CNN Architecture.

Table 3: Sample Abaca Fiber Training Set Images per Grade

Figure 5: Prediction results of the Abaca fiber image.

Table 4: Prediction Results of Testing Dataset Abaca fiber images

| Sample No. | Actual Grade | Predicted Grade | Remarks |
|---|---|---|---|
| 1 | Current (I) | Current (I) | Correct |
| 2 | Current (I) | Current (I) | Correct |
| 3 | Current (I) | Current (I) | Correct |
| 4 | Current (I) | Current (I) | Correct |
| 5 | Current (I) | Current (I) | Correct |
| 6 | Current (I) | Current (I) | Correct |
| 7 | Current (I) | Current (I) | Correct |
| 8 | Current (I) | Current (I) | Correct |
| 9 | Current (I) | Current (I) | Correct |
| 10 | Current (I) | Current (I) | Correct |
| 11 | Medium Brown (M1) | Medium Brown (M1) | Correct |
| 12 | Medium Brown (M1) | Medium Brown (M1) | Correct |
| 13 | Medium Brown (M1) | Medium Brown (M1) | Correct |
| 14 | Medium Brown (M1) | Medium Brown (M1) | Correct |
| 15 | Medium Brown (M1) | Medium Brown (M1) | Correct |
| 16 | Medium Brown (M1) | Medium Brown (M1) | Correct |
| ⋮ | ⋮ | ⋮ | ⋮ |
| 70 | Streaky Two (S2) | Streaky Two (S2) | Correct |

Table 5: Confusion matrix based on Table 4.

| System predicted (rows) / Actual (columns) | S2 | S3 | I | G | H | JK | M1 |
|---|---|---|---|---|---|---|---|
| S2 | 10 | 0 | 0 | 0 | 0 | 0 | 0 |
| S3 | 0 | 9 | 0 | 0 | 0 | 0 | 0 |
| I | 0 | 0 | 10 | 2 | 0 | 0 | 0 |
| G | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| H | 0 | 0 | 0 | 0 | 10 | 1 | 0 |
| JK | 0 | 0 | 0 | 0 | 0 | 9 | 0 |
| M1 | 0 | 1 | 0 | 8 | 0 | 0 | 10 |

Table 6: Recall for each Abaca fiber grade from Table 5.

| Grade | Recall |
|---|---|
| S2 | 100% |
| S3 | 90% |
| I | 100% |
| G | 0% |
| H | 100% |
| JK | 90% |
| M1 | 100% |

5. Conclusion and Future Work

This study addresses the lack of an objective instrument for acquiring unbiased datasets of Abaca fiber images. The implemented system uses a CNN as the main classifier, and its performance was evaluated. Although the system correctly classified most Abaca fiber grades, it failed to classify any grade G Abaca fibers. We therefore recommend adding features beyond those extracted from the image, or trying other AI techniques as the classifier.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

We would like to thank our consultant, Mr. Ariel C. Cinco, a certified PhilFIDA inspector assigned to Ching Bee Trading Corporation in Brgy. Hilapnitan, Baybay City, Leyte at the time of conducting the study, for lending his expertise on Abaca fiber trading.

- PhilFIDA, “Fiber Statistics,” PhilFIDA, 2018. [Online]. Available: http://www.philfida.da.gov.ph/index.php/2016-11-10-03-32-59/2016-11-11-07-56-39. [Accessed 28 October 2018].

- PhilFIDA, “Abaca fiber: Grading and Classification – Hand-stripped and Spindle/Machine-stripped,” Philippine National Standard, Bureau of Agriculture and Fisheries Standards, Diliman, Quezon City, 2016.

- F. Göltenboth and W. Mühlbauer, “Abacá – Cultivation, Extraction and Processing,” in Industrial Applications of Natural Fibres: Structure, Properties and Technical Applications, John Wiley & Sons, Ltd, 2010, pp. 163-179. https://doi.org/10.1002/9780470660324.ch7

- P. P. Milan and F. Göltenboth, Abaca and Rainforestation Farming: A Guide to Sustainable Farm Management, Baybay, Leyte: Leyte State University, 2005.

- R. Armecin, F. Sinon and L. Moreno, “Abaca Fiber: A Renewable Bio-resource for Industrial Uses and Other Applications,” in Biomass and Bioenergy: Applications, Springer International Publishing, 2014, pp. 107-118. https://doi.org/10.1007/978-3-319-07578-5_6

- B. Sinon, Development of an Abaca Fiber Grade Recognition System using Neural Networks, Visayas State University (VSU), Baybay City, Leyte: Undergraduate Thesis (Department of Computer Science and Technology), 2013.

- C. Cusano, P. Napoletano and R. Schettini, “Combining multiple features for color texture classification,” Journal of Electronic Imaging 25(6), p. 10, 2016. https://doi.org/10.1117/1.JEI.25.6.061410

- L. Tobias, A. Ducournau, F. Rousseau, G. Mercier and R. Fablet, “Convolutional Neural Networks for Object Recognition on Mobile Devices: A Case Study,” in 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 2016. https://doi.org/10.1109/ICPR.2016.7900181

- F. Chollet, Deep Learning with Python, San Diego, USA: Manning Publications, 2017.

- M. Ji, L. Liu and M. Buchroithner, “Identifying Collapsed Buildings Using Post-Earthquake Satellite Imagery and Convolutional Neural Networks,” Remote Sensing, vol. 10, no. 11, 1689, 2018. https://doi.org/10.3390/rs10111689

- A. Rosebrock, Deep Learning for Computer Vision with Python, California, USA: Pyimagesearch, 2017.

- K. Simonyan and A. Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition,” in International Conference on Learning Representations (ICLR), 2015. https://arxiv.org/abs/1409.1556

- F. Bianconi, “Theoretical and experimental comparison of different approaches for color texture classification,” Journal of Electronic Imaging, p. 20, 2011. https://doi.org/10.1117/1.3651210

- Y. LeCun, L. Bottou, Y. Bengio and P. Haffner, “Gradient-Based Learning Applied to Document Recognition,” Proceedings of the IEEE, vol. 86, no. 11, pp. 2278-2324, 1998. https://doi.org/10.1109/5.726791

- J. Li, G. Liao, Z. Ou and J. Jin, “Rapeseed Seeds Classification by Machine Vision,” in Workshop on Intelligent Information Technology Application (IITA 2007), Zhangjiajie, China, 2007. https://doi.org/10.1109/IITA.2007.56

- T. Mäenpää and M. Pietikäinen, “Classification with color and texture: jointly or separately?,” Pattern Recognition, vol. 37, no. 8, pp. 1629-1640, 2004. https://doi.org/10.1016/j.patcog.2003.11.011
