A Psychovisual Optimization of Wavelet Foveation-Based Image Coding and Quality Assessment Based on Human Quality Criterions

Volume 5, Issue 2, Page No 225-234, 2020

Author’s Name: Abderrahim Bajit1,a), Mohammed Nahid2, Ahmed Tamtaoui3, Mohammed Benbrahim1

1Ibn Toufail University, GERST Electrical Engineering Department, National School of Applied Sciences, Kénitra, Morocco

2Mohammed V University, SC Department, INPT Institute, Rabat, Rabat, Morocco

3Hassan II University, Electrical Engineering Department, Faculty of Sciences and Technologies, Mohammedia, Morocco

a)Author to whom correspondence should be addressed. E-mail: abderrahim.bajit@gmail.com

Adv. Sci. Technol. Eng. Syst. J. 5(2), 225-234 (2020); DOI: 10.25046/aj050229

Keywords: Discrete Wavelet Transform DWT, Psycho-visual Quality Criteria, Wavelet Foveation Filter FOV, Just Noticeable Difference JND, Contrast Threshold Elevation, Embedded Image Coder SPIHT, Objective Quality Metric FWVDP, Subjective Quality Metric, Mean Opinion Score MOS

In this article, we introduce a visually optimized embedded foveation image coder (VOEFIC), its modified optimized version (MOEFIC), and a related coding quality metric, the foveation wavelet visible difference predictor (FWVDP). The coders rely on a visually optimized foveal weighting mask that shapes the wavelet image spectrum before it is encoded by the SPIHT encoder. The aim is to reach a target compression rate with a significant quality improvement for a given bit budget, viewing distance, and foveal region locating the object in the region of interest (ROI). The coder combines two masking effects built on human psycho-visual quality criteria. It applies the foveal model to weight the source wavelet coefficients, reshaping the spectrum content, discarding or reducing redundancy, and thereby enhancing the visual quality. The foveal weighting mask is computed within the wavelet sub-bands as follows. First, it applies the foveal wavelet filter, centered on the fixation point, to remove or at least attenuate the imperceptible frequencies around the region of interest. Next, it adjusts the image contrast according to wavelet JND thresholds, accounting for luminance adaptation and raising the contrast above the just-noticeable distortion. The weighted wavelet spectrum is then embedded-coded with the standard SPIHT coder to reach the desired bit budget. The manuscript also proposes a foveation-based objective quality metric that embodies psycho-visual quality criteria of the visual cortex. This metric provides a foveal score, FPS, able to detect probable errors and to measure compression quality objectively. Note that the foveal coder VOEFIC and its visually upgraded variant MOEFIC have a complexity similar to that of their reference, SPIHT. 
Meanwhile, the experimental results highlight the visual coding improvement and the quality boost ratio it achieves.

Received: 29 December 2019, Accepted: 20 February 2020, Published Online: 20 March 2020

1. Introduction

In multi-channel wavelet image coding [1], psycho-visual studies show that spatial resolution, or sampling density, is highest at the fovea and decreases rapidly away from the fixation point as the viewing angle (eccentricity) increases. Therefore, when an observer looks at a region of a natural image, the area surrounding the point of fixation is projected onto the fovea. It is then sampled with the highest density and thus perceived with the highest contrast sensitivity. Overall, sampling density and contrast sensitivity decrease significantly as the eccentricity with respect to the fixation point increases. The motivation behind foveated compression is that there is significant high-frequency information redundancy in the peripheral regions.

Consequently, an image can be represented efficiently by discarding or reducing this redundancy, taking into account the foveation point(s) and the viewing distance. Foveal masking filters a uniform-resolution image so that, when the observer looks at the region of interest, he cannot distinguish the source image from its foveated version around that region. As illustrated in Figure 1, we apply the foveal mask to the BARBARA test image and subjectively compare its quality with that of the original. When we focus on the fixated region, both images look alike; in the peripheral regions, however, the differences are significant. On the other hand, the foveated version reaches acceptable quality while its reference remains distorted. In addition, the foveal coder is configured with three key parameters: the viewing distance (V = 4), the bit rate (0.15 bpp), and the fixated region (the center of the face), which specifies the region of interest (ROI).

In the literature, various strategies approximate the ideal foveation filter. In [2], a pyramid architecture is proposed to foveate images. In [2-5], the foveation filter consists of a bank of low-pass filters with different cutoff frequencies. In [3], the design of the foveal mask relies on a Laplacian pyramid. In [2-5], the foveal masking strategy implements a non-uniform sampling scheme [6-9] that does not integrate psycho-visual properties. Recently, great success has been achieved by several ROI-based wavelet image coders, such as those implemented in [4-9], their psycho-visual variants detailed in [10-12], and the JPEG2000 standard cited in [14].

These solutions do not incorporate Watson's psycho-visual compression approach, which relies on psychophysical experiments with the 9/7 wavelet filter [13-14] to measure the visibility thresholds of coefficient distortions [14-16], then quantizes the coefficients visually, and finally provides improved visually lossless compression. Nor do these schemes integrate psycho-visual effects such as luminance and contrast masking, or threshold elevation [17-20], whose role is to spatially refine these image features and to adapt every masked frequency to the observer's visual cortex. Exploiting this fact, we adjust the image characteristics (luminance adaptation and contrast masking) to fine-tune the masked frequencies according to the JND thresholds [15] while still quantizing the image spectrum efficiently within the ROI.

Once an image is coded, its quality needs to be evaluated. To this end, the literature offers a series of image fidelity assessors (IFA) [21-24]. Based on the Visible Difference Predictor (VDP) [25] and its wavelet-based variant WVDP [26-35], we propose a psycho-visual metric that integrates the quality criteria of the Human Visual System (HVS) [31-33]. Our foveal metric, hereafter named FWVDP, adapts the original wavelet coefficients and provides a foveal score FPS that predicts the probability of detecting the errors introduced by the coder.

The manuscript is organized as follows. Section 2 presents the flow diagram of the foveal coder. Section 3 explains how to implement the wavelet-based foveal masking. Section 4 shows how to compute and implement the visual threshold elevation model based on the wavelet "Just Noticeable Difference" (JND) thresholds, which improves the content of the weighted wavelet spectrum and thus optimizes coding. Section 5 details how to evaluate the foveal coding quality with the foveation wavelet-based visible difference predictor, which we use to assess our visual coders. Finally, Sections 6 and 7 discuss, objectively and subjectively, the results obtained as a function of the viewing distance, the bit rate, and the fixated region that specifies the ROI.

Figure. 1. Barbara original test image (left) and its foveated version (right) for a viewing distance V = 4 and a target bit rate of 0.125 bpp.

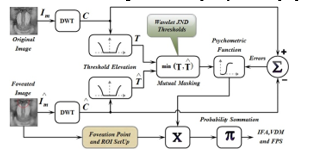

2. Foveation Wavelet-Based Visual Image Coding Diagram

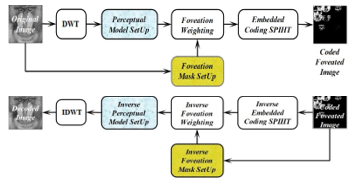

Figures 2 and 3 present the flow of the VOEFIC coder, the “Visually Optimized Embedded Foveation Image Coder” [12], and of its upgraded variant MOEFIC, the “Modified Optimization Embedded Foveation Image Coder”. Both coders comprise five successive stages, detailed as follows. In the first stage, the source image is transformed with a discrete wavelet transform (DWT) that performs a cortical-like decomposition [1] using the 9/7 biorthogonal filter [13-14], which, owing to its mathematical properties [15], guarantees perfect reconstruction, as adopted by the JPEG2000 standard [12-13].
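The perfect-reconstruction property of the 9/7 filter can be checked with a small sketch. The code below is an illustrative 1-D, single-level version, assuming the standard lifting factorization of the CDF 9/7 filter (the constants listed in the code) and whole-sample symmetric border extension; it is not the authors' implementation, and the function names `fwd97` and `inv97` are hypothetical.

```python
# Minimal 1-D CDF 9/7 lifting transform (illustrative sketch).
# Assumptions: standard lifting factorization of the 9/7 filter,
# whole-sample symmetric extension at both borders, even-length input.

ALPHA = -1.586134342   # first predict step
BETA = -0.05298011854  # first update step
GAMMA = 0.8829110762   # second predict step
DELTA = 0.4435068522   # second update step
K = 1.149604398        # final scaling factor


def _predict(d, s, c):
    """d[i] += c * (s[i] + s[i+1]), mirroring s at the right border."""
    m = len(s)
    return [d[i] + c * (s[i] + s[min(i + 1, m - 1)]) for i in range(m)]


def _update(s, d, c):
    """s[i] += c * (d[i-1] + d[i]), mirroring d at the left border."""
    return [s[i] + c * (d[max(i - 1, 0)] + d[i]) for i in range(len(s))]


def fwd97(x):
    """One analysis level: returns (approximation, detail) coefficients."""
    if len(x) < 2 or len(x) % 2:
        raise ValueError("input length must be even and at least 2")
    s = [float(v) for v in x[0::2]]  # even samples
    d = [float(v) for v in x[1::2]]  # odd samples
    d = _predict(d, s, ALPHA)
    s = _update(s, d, BETA)
    d = _predict(d, s, GAMMA)
    s = _update(s, d, DELTA)
    return [v * K for v in s], [v / K for v in d]


def inv97(s, d):
    """Undo fwd97 step by step in reverse order (perfect reconstruction)."""
    s = [v / K for v in s]
    d = [v * K for v in d]
    s = _update(s, d, -DELTA)
    d = _predict(d, s, -GAMMA)
    s = _update(s, d, -BETA)
    d = _predict(d, s, -ALPHA)
    out = []
    for a, b in zip(s, d):
        out.extend([a, b])  # re-interleave even and odd samples
    return out
```

A 2-D, multi-level decomposition would apply the same step to rows, then columns, and recurse on the approximation band; that part is omitted here.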

Figure 2: Foveation Wavelet-Based Visual Embedded Image Coding and Decoding Flow Diagrams Based on Psycho-Visual Upgrading Tools.

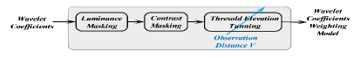

In the second stage, we compute the wavelet-based foveation filter [2-5] from the fixation point and its viewing distance. Its main aim is to eliminate or attenuate invisible frequencies in the periphery of the ROI (Section 3). In the third stage, we compute the contrast masking, also known as threshold elevation [14-16], from the wavelet just-noticeable-difference thresholds. This step first computes the luminance masking associated with the foveated wavelet coefficients, then uses the perceptual JND thresholds [15] required for contrast correction. This operation masks invisible contrast components and raises every visible one above the JND thresholds according to the level of the wavelet thresholds (Section 4).

Figure 3: Visually Optimized Wavelet Weighting Process

The main advantage of our upgraded visual masking layout, provided exclusively in the MOEFIC coder (the upgraded variant of VOEFIC) [10-12], is its ability to regulate the image spectral content nonlinearly according to the viewing conditions. As the viewing distance increases from lower to higher values, the spectral shape of the mask is designed to cover the significant frequencies. For short distances, the resulting filter spans significantly lower spectral content. Conversely, for larger distances, the mask removes substantially higher image frequencies.

In the last stage, a progressive coder scalably encodes the visually weighted wavelet coefficients at different compression rates until the target bit budget is reached. For this purpose, we use the SPIHT progressive encoder, an advanced member of the family of "Embedded Zerotree Wavelet" (EZW) coders introduced by Shapiro [36] and subsequently improved by A. Said and W. A. Pearlman [37-39].
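SPIHT's set-partitioning sorting is too long to reproduce here, but the property that makes it embedded can be sketched. The hypothetical function below applies the successive-approximation (bit-plane) quantization shared by EZW and SPIHT: starting from the threshold T0 = 2^floor(log2 max|c|), each additional plane halves the threshold, so truncating the bitstream after any plane still yields a reconstruction whose error is bounded by the last threshold. It does not model SPIHT's zerotree lists or actual bit counts.

```python
import math


def bitplane_reconstruct(coeffs, n_planes):
    """Reconstruct coefficients from their first n_planes magnitude bit-planes.

    Sketch of successive-approximation quantization (not full SPIHT):
    T0 = 2**floor(log2(max|c|)); after n planes the threshold is
    T = T0 / 2**(n - 1). A coefficient with q = floor(|c| / T) > 0 is known to
    lie in [q*T, (q+1)*T) and is reconstructed at the interval centre.
    Returns (reconstruction, T).
    """
    peak = max(abs(c) for c in coeffs)
    if peak == 0:
        return [0.0] * len(coeffs), 0.0
    t0 = 2.0 ** math.floor(math.log2(peak))
    t = t0 / 2 ** (n_planes - 1)
    out = []
    for c in coeffs:
        q = math.floor(abs(c) / t)
        mag = (q + 0.5) * t if q > 0 else 0.0  # insignificant coefficients -> 0
        out.append(math.copysign(mag, c))
    return out, t
```

Each extra plane halves T, so a decoder can stop at any point of the embedded stream and still obtain a reconstruction whose error is at most the current threshold.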

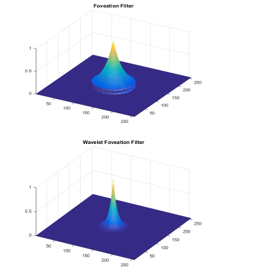

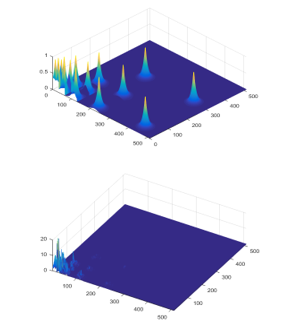

3. Designing and Configuring a Wavelet Foveation Filter

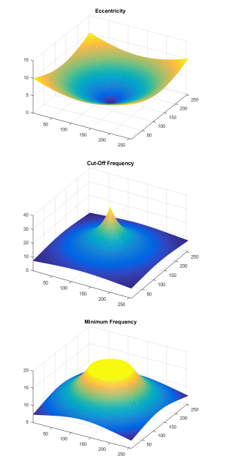

The HVS is highly space-variant in sampling, coding, processing, and understanding. Because the spatial resolution of the visual cortex is highest around the fixated region and decreases rapidly with increasing eccentricity, we compute the foveation filter [2-5]. By exploiting this property, it is possible to reduce or remove considerable high-frequency and unnecessary information from the peripheral regions while still reconstructing a decoded image of excellent perceptual quality. To this end, we apply the process shown in Figure 5. First, we locate the fixation point and compute the distance of every pixel from this point. Then, we convert these distances into eccentricities (in degrees). Next, we compute the cutoff frequency (cycles/degree) beyond which all higher frequencies become invisible (eq. 1); it limits the visible frequencies so that no aliasing appears in the human visual cortex. Moreover, based on half the display resolution (eq. 2), i.e., the display Nyquist frequency (cycles/degree), we obtain the minimum visible frequency (eq. 3), the smaller of the cutoff and Nyquist frequencies, which determines the visible spectrum.
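Since equations 1 to 3 are not reproduced in this text, the sketch below assumes the foveation model commonly used in the foveated-coding literature: the Geisler-Perry contrast threshold CT(f, e) = CT0 exp(α f (e + e2) / e2), with the assumed constants α = 0.106, e2 = 2.3 and CT0 = 1/64. For one wavelet coefficient, it computes the eccentricity, the cutoff frequency where CT reaches 1, the display Nyquist frequency (half the display resolution), and a foveation weight that is zero above the smaller of the two. The viewing distance v is assumed to be expressed in image widths, and a sub-band at level λ is assumed to carry the frequency r·2^-λ; all function names are hypothetical.

```python
import math

# Assumed Geisler-Perry constants: CT(f, e) = CT0 * exp(ALPHA * f * (e + E2) / E2)
ALPHA = 0.106    # spatial-frequency decay constant
E2 = 2.3         # half-resolution eccentricity (degrees)
CT0 = 1.0 / 64   # minimal contrast threshold


def eccentricity_deg(dist_px, image_width_px, v):
    """Eccentricity (degrees) of a pixel dist_px pixels from the fixation point,
    for a viewing distance of v image widths."""
    return math.degrees(math.atan(dist_px / (v * image_width_px)))


def cutoff_frequency(e):
    """Frequency (cycles/degree) at which CT(f, e) reaches 1 (invisible above)."""
    return E2 * math.log(1.0 / CT0) / (ALPHA * (e + E2))


def display_nyquist(image_width_px, v):
    """Half the display resolution, in cycles/degree (small-angle approximation)."""
    pixels_per_degree = math.pi * v * image_width_px / 180.0
    return pixels_per_degree / 2.0


def foveation_weight(level, dist_px, image_width_px, v):
    """Weight in [0, 1] for a coefficient at wavelet level `level` (1 = finest)."""
    r = math.pi * v * image_width_px / 180.0   # display resolution, pixels/degree
    f = r * 2.0 ** (-level)                     # assumed sub-band frequency
    e = eccentricity_deg(dist_px, image_width_px, v)
    f_max = min(cutoff_frequency(e), display_nyquist(image_width_px, v))
    if f > f_max:
        return 0.0                              # frequency invisible here: discard
    return math.exp(-ALPHA * f * (e + E2) / E2)  # normalized sensitivity CT0 / CT
```

With these assumptions, increasing v pushes the finest sub-bands above the visible limit, in line with the trend described for Figure 4: for example, `foveation_weight(1, 0, 512, 1)` is positive while `foveation_weight(1, 0, 512, 10)` is 0.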

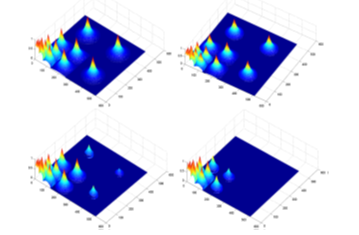

The foveation filter shapes the image spectrum according to the viewing distance. As the viewing distance increases, the filter progressively removes higher frequencies, because the observer becomes less and less able to perceive them [2-5] (Figure 4).

Figure. 4. Foveation wavelet-based models designed for the viewing distances V = 1, 3, 6 and 10. As the viewing distance increases, the shaded regions grow and the foveal filter sensitivity decreases.

Figure. 5. Implementation stages of the foveation wavelet-based visual weighting filter, computed for one wavelet sub-band. We plot successively: the eccentricity angle, the cutoff frequency that avoids cortical aliasing, the minimum visible frequency given half the display resolution (Nyquist frequency), the contrast sensitivity function, and the foveation wavelet filter.

4. Contrast Adjustment Based on Elevation to JND Thresholds

The key step adopted in our coding strategy is its contrast masking layout, which we introduce here. We apply this operation to the original wavelet spectrum. Its design relies on three psycho-visual criteria that successively build and configure the weighting mask: first, the perceptual JND thresholds measured by Watson [14-16]; then luminance adaptation [17-20] (also called luminance masking); and finally contrast correction [17-20], known as threshold elevation. Once computed, the model is applied to the wavelet coefficients obtained from a cortical-like decomposition [1], and then compared with the actual model given by the human visual cortical decomposition, which confirms their close correspondence.

To this end, we first compute the JND thresholds [8-9] using a detection model defined on the wavelet sub-bands. These thresholds were obtained from psychophysical experiments conducted by Watson. The resulting threshold values apply only to the Daubechies linear-phase (9/7) wavelet filter [13-14]. Detection thresholds in image compression depend on the mean luminance over a selected region of the image. To compute the contrast sensitivity, we account for this variation through a luminance-masking correction factor. In this manuscript, we model this masking with a power function whose exponent is set to 0.649, as adopted in the JPEG2000 image coder [13-14].
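A minimal sketch of this threshold computation follows. It assumes Watson et al.'s wavelet threshold model Y = a·10^(k·(log10(2^λ f0 gθ / r))^2), with the parameter values listed in the code as recalled from that study (they should be checked against the original table), and a luminance-masking correction (local mean / global mean)^0.649 using the exponent quoted above. The full model also divides Y by the basis-function amplitudes of the 9/7 filter, which are omitted here; the function names are hypothetical.

```python
import math

# Assumed parameters of Watson et al.'s threshold model for the linear-phase
# 9/7 wavelet (recalled values, to be verified against the original study).
A_PARAM = 0.495
K_PARAM = 0.466
F0 = 0.401
G = {"LL": 1.501, "LH": 1.0, "HL": 1.0, "HH": 0.534}
A_T = 0.649  # luminance-masking exponent quoted in the text


def base_threshold(level, orient, r):
    """Visual threshold Y for sub-band (level, orient); r in pixels/degree.

    The threshold is smallest (Y = A_PARAM) when 2**level * F0 * G / r == 1 and
    grows quadratically in log-frequency away from that point.
    """
    x = math.log10(2.0 ** level * F0 * G[orient] / r)
    return A_PARAM * 10.0 ** (K_PARAM * x * x)


def luminance_masked(t_base, local_mean, global_mean):
    """Scale a threshold by (local mean / global mean luminance) ** A_T."""
    return t_base * (local_mean / global_mean) ** A_T
```

Brighter-than-average regions thus receive larger thresholds, i.e. they tolerate more distortion before it becomes visible.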

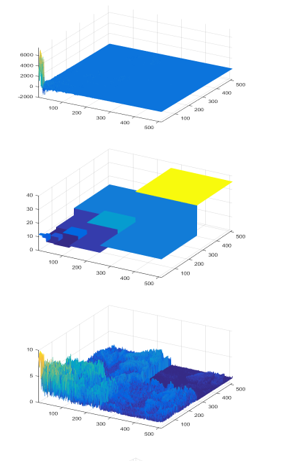

Likewise, contrast correction, or contrast threshold elevation, is a second factor that significantly affects the detection threshold. It reflects the fact that the visibility of one image pattern component changes considerably in the presence of a masker component [17-20]. Contrast masking describes how the detection threshold of a pattern component varies as a function of the masker content. The masking effect is expressed as the target threshold contrast as a function of the masker contrast. Here, the wavelet coefficients of the input image to be visually coded represent the masker signal, while the quantization distortion represents the target signal [17-20]. In Figure 6, we compute the foveation-based, visually adapted wavelet masking layout, showing step by step the visual masking effect on the image content: first the original wavelet coefficients, then the JND thresholds [15], next the contrast masking effect, then the foveation filter, and finally its effect on the visually weighted version.
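The threshold elevation described above can be sketched with the max-based contrast-masking rule used in Watson's perceptual models, m = max(t, |c|^w · t^(1-w)), where c is the masker coefficient, t its luminance-corrected threshold, and w an assumed masking exponent (0.7 here). The text does not give the exact formula used by the coders, so this is an illustrative choice, and `elevated_threshold` and `jnd_units` are hypothetical names.

```python
def elevated_threshold(coeff, t_lum, w=0.7):
    """Contrast-masked threshold: max(t, |c|**w * t**(1 - w)).

    Equivalent to t * max(1, (|c| / t)**w): small maskers leave the threshold
    unchanged, large maskers raise it sub-linearly (w is an assumed exponent).
    """
    return max(t_lum, abs(coeff) ** w * t_lum ** (1.0 - w))


def jnd_units(coeffs, thresholds, w=0.7):
    """Express each coefficient in units of its elevated threshold."""
    return [c / elevated_threshold(c, t, w) for c, t in zip(coeffs, thresholds)]
```

Dividing coefficients by their elevated thresholds expresses them in JND units; under this sketch, magnitudes below 1 would be predicted invisible.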

First, the procedure decomposes the original image into wavelet coefficients (step 1). It then computes their corresponding perceptual JND thresholds (step 2), which depend on the Daubechies linear-phase filter. Next, it computes the foveal filter FOV to foveate the wavelet regions of interest and reshape their spectrum [2-10] (step 3). After that, it adapts to the corresponding image luminance [17-20] and raises the contrast according to the perceptual thresholds [14-16] (step 4). Finally (step 5), it applies the designed filter to weight the wavelet coefficients and scalably encodes them following the SPIHT coding logic [36-37].

Figure 6 shows the masking process designed for the LENA test image at a viewing distance V = 4. It illustrates how our visual weights reshape the image wavelet spectrum and how the filter affects the distribution of wavelet coefficients across sub-bands. It significantly reshapes the medium and low frequencies, which usually carry the essential image content. Note that the shape can be adapted to any viewing distance.

Figure. 6. Visually optimized foveation-based weighting model designed for the LENA test image, with her face as the foveal region and a viewing distance V = 4. Its successive stages are: the wavelet frequency spectrum, the limit of visible distortions (JND), contrast elevation, the foveal filter FOV, and the visually adapted foveal image spectrum to be progressively coded by the standard SPIHT coder.

5. Foveation-based Objective Quality Metric

To quantify image coding quality, objective measures are usually compared with subjective mean opinion scores (MOS). Mathematical metrics such as the mean squared error (MSE) and the derived peak signal-to-noise ratio (PSNR) are simple and computed in the spatial domain. However, these metrics correlate poorly with MOS, which depends on viewing conditions that reflect cortical quality criteria [33].
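For reference, the two spatial-domain metrics mentioned above can be computed as follows; the sketch assumes 8-bit images flattened into equal-length lists of pixel values, with a peak value of 255.

```python
import math


def mse(ref, test):
    """Mean squared error between two equal-length pixel sequences."""
    if len(ref) != len(test) or not ref:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(ref, test)) / len(ref)


def psnr(ref, test, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    e = mse(ref, test)
    return math.inf if e == 0 else 10.0 * math.log10(peak ** 2 / e)
```

Both are pixel-wise averages, which is precisely why they ignore where the observer looks and how visible each error is.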

Figure 7: Foveation Wavelet-based Visible Difference Predictor.

Recently, cortical-like metrics based on psycho-visual properties have been used to improve the correlation with MOS. They assume that errors embedded in an image may be naturally invisible to the human observer [21-24]. The VDP metric [25], in contrast, relies on a cortical transform that captures a significant level of visual errors depending on the image components. Indeed, wavelet-based metrics are effective in image coding because of their closeness to the cortical decomposition. Despite the limitations of its filters, the wavelet-based metric yields an accurate quality estimate and contributes to improving image compression schemes.

In this work, we have developed a new cortical-like image quality metric, the “Foveation Wavelet-based Visible Difference Predictor” (FWVDP), shown in Figure 7. It is a foveated form of its original variant WVDP [25-30]. It sums visible errors using Minkowski aggregation to obtain the visible difference map and yields, as in [25-30], the foveal score FPS through a psychometric function [23]. The foveal metric weights both the source and the degraded image with the weighting mask presented in Sections 3 and 4. In this way, it discards all imperceptible information and compares only the significant components. It then passes these errors to the Minkowski summation [23] stage to obtain the visible distortion map and its foveal score FPS through the psychometric function. It therefore serves psycho-visual coders that aim to improve their performance. The metric expresses the ability to see visible errors within the wavelet channels; its detection probability is given by:

Here, one term denotes the degradation at a given location and another the visibility threshold at that location; α and β are fitting constants, and P is the error-detection probability. Inspired by Minkowski summation and applied within the image wavelet spectrum, probability summation yields the target score as follows:

Note that as the FPS score approaches 1, the degraded image approaches excellent quality; conversely, as it decreases, the degraded image tends toward poor quality.
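Because the detection-probability and FPS equations are not reproduced in this text, the following sketch assumes a common form: a Weibull psychometric function P = 1 - exp(-|e / (α t)|^β) for each error e with visibility threshold t, and probability summation FPS = Π(1 - P), the probability that no error is detected. This equals exp(-Σ|e / (α t)|^β), a Minkowski sum with exponent β, and tends to 1 when all errors are invisible, consistent with the interpretation of FPS given above. The defaults α = 1 and β = 4 and the function names are assumptions.

```python
import math


def detection_probability(error, threshold, alpha=1.0, beta=4.0):
    """Assumed Weibull psychometric function: P = 1 - exp(-|e / (alpha*t)|**beta)."""
    return 1.0 - math.exp(-abs(error / (alpha * threshold)) ** beta)


def foveal_score(errors, thresholds, alpha=1.0, beta=4.0):
    """Assumed probability summation: FPS = prod(1 - P_i).

    Computed as exp(-sum |e_i / (alpha*t_i)|**beta), which is the same product
    written as a Minkowski-style sum; 1.0 means no visible difference.
    """
    s = sum(abs(e / (alpha * t)) ** beta for e, t in zip(errors, thresholds))
    return math.exp(-s)
```

An error exactly at threshold (e = t, α = 1) is detected with probability 1 - 1/e ≈ 0.63, and each additional visible error multiplies FPS by a factor below 1.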

6. Gathered Results Analysis

In practice, every coder depends on a quality metric that, ideally, correlates well with its subjective reference, the mean opinion score (MOS). Here, we tested the foveal coder MOEFIC and its original variant VOEFIC on 8-bit grayscale images. We assessed their quality with the FWVDP foveal wavelet metric for the former and its simplified variant FVDP for the latter. We compare each coder's quality with that of its reference: MOEFIC with VOEFIC, and VOEFIC with SPIHT. To this end, we used three assessment approaches (objective, subjective, and quantitative) as a function of the bit budget and the viewing conditions.

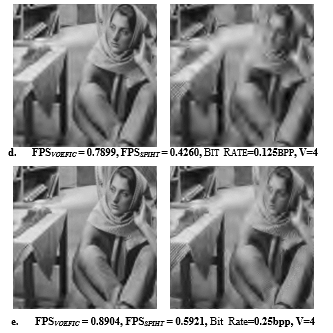

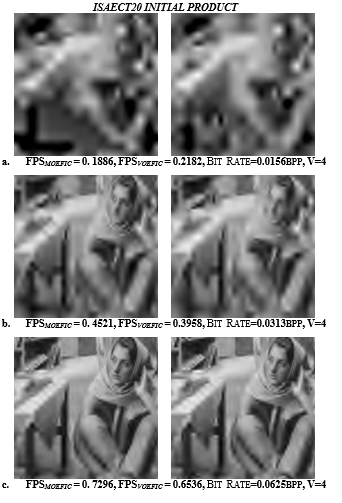

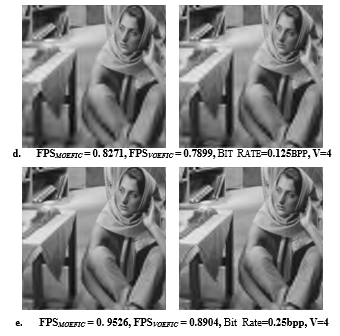

The first (objective) approach relies entirely on the FPS scores obtained when assessing the coding quality of SPIHT and its visually enhanced variants VOEFIC and MOEFIC on standard grayscale test images. As shown in Figures 8, 9, 10, 11 and 12, the results are obtained for bit rates increasing from 0.0039 bpp to 0.5 bpp (compression ratios from about 2048:1 down to 16:1 for 8-bit images) and for a viewing distance taking its value in the set {1, 3, 6, 10}. All results confirm that at very low bit budgets (below 0.0625 bpp), many spatial errors are visible in the SPIHT images, whereas the visual coders MOEFIC and VOEFIC preserve considerably more relevant information in the regions of interest. Similarly, at intermediate bit rates (below 0.125 bpp), the SPIHT coder still yields blurred images, while the foveal coders show substantially higher quality over the whole image. At higher bit rates (above 0.25 bpp), the quality of the foveal coders remains consistently better than that of their reference. Finally, at high bit rates, all the coders converge to a uniform appearance and their images become indistinguishable over the entire image.
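The link between bit rate and compression ratio is simple arithmetic: an 8-bit grayscale image coded at R bpp has a compression ratio of 8 / R. The hypothetical helpers below make this explicit, together with the coded size for an image of a given size (512 × 512 is only an example).

```python
def compression_ratio(bpp, bit_depth=8):
    """Compression ratio of an image coded at `bpp` relative to its raw bit depth."""
    if bpp <= 0:
        raise ValueError("bit rate must be positive")
    return bit_depth / bpp


def bit_budget_bytes(bpp, width, height):
    """Total coded size in bytes for a target rate in bits per pixel."""
    return bpp * width * height / 8
```

For instance, 0.5 bpp corresponds to 16:1 and 0.0625 bpp to 128:1, and a 512 × 512 image coded at 0.125 bpp occupies 4096 bytes.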

The qualitative approach compares the scores of the proposed coders with those of their reference. As shown in Figures 8 and 9, we visually compare the MOEFIC, VOEFIC, and SPIHT coded images. We examine the approach on the “BARBARA” test image for varying bit rates and a fixed viewing distance V. This experiment confirms that the subjective quality agrees with the objective values given by the corresponding quality score FPS. It shows that, at low bit rates, the candidate coders MOEFIC and VOEFIC maintain significant quality over the entire image. Similarly, at intermediate bit budgets, the observed image regions are barely distinguishable in the reference coder images, whereas they are clearly recognizable in the visual coders' images, which preserve the whole image content much better. At higher bit rates and viewing distances, the visual coders perform very well over the whole analyzed image, and the images produced by the SPIHT encoder also become of acceptable quality.

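In the quantitative approach, we compute the quality boost ratio of VOEFIC over its reference SPIHT, and of MOEFIC over VOEFIC, as follows:

$$\mathrm{Boost}_{\mathrm{VOEFIC/SPIHT}} = 100 \times \frac{FPS_{\mathrm{VOEFIC}} - FPS_{\mathrm{SPIHT}}}{FPS_{\mathrm{SPIHT}}}$$

$$\mathrm{Boost}_{\mathrm{MOEFIC/VOEFIC}} = 100 \times \frac{FPS_{\mathrm{MOEFIC}} - FPS_{\mathrm{VOEFIC}}}{FPS_{\mathrm{VOEFIC}}}$$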
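The boost ratio is a relative difference of FPS scores; a direct transcription follows. The function name is hypothetical, and the FPS values in the usage note are made-up inputs for illustration only, not results from the tables.

```python
def boost_ratio(fps_candidate, fps_reference):
    """Relative quality gain (%) of a candidate coder over its reference coder."""
    if fps_reference <= 0:
        raise ValueError("reference score must be positive")
    return 100.0 * (fps_candidate - fps_reference) / fps_reference
```

For example, `boost_ratio(0.6, 0.5)` gives 20% (up to floating-point rounding); a negative value would mean the candidate scores below its reference.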

As reported in Tables 1, 2 and 3, the quality gain of VOEFIC over SPIHT grows steadily with the bit budget (Table 1) and is generally largest at short viewing distances (Table 2), while the gain of MOEFIC over VOEFIC varies with the image and the viewing distance (Table 3), reaching 77.9% for BARBARA at V = 3. These results support the improvement targeted by our coders and metric.

Figure 8: The visually optimized foveal coder VOEFIC (left column) compared with its reference SPIHT coder (right column), with the FPS values given by the FWVDP quality metric, for the BARBARA test image at varying bit budgets and a fixed viewing distance. The bit budget takes the values a. 0.0156 bpp, b. 0.0313 bpp, c. 0.0625 bpp, d. 0.125 bpp, and e. 0.25 bpp; the viewing distance is V = 4.

Figure 9: The visually optimized foveal coder MOEFIC (left column) compared with its legacy version VOEFIC (right column), with the FPS values given by the FWVDP quality metric, for the BARBARA test image at varying bit budgets and a fixed viewing distance. The bit budget takes the values a. 0.0156 bpp, b. 0.0313 bpp, c. 0.0625 bpp, d. 0.125 bpp, and e. 0.25 bpp; the viewing distance is V = 4.

Figure 10: Foveal coder VOEFIC compared with the standard SPIHT coder, with the FPS scores given by the FVDP metric, for the BARBARA and GOLDHIL test images, bit rates in the set {a. 0.0156 bpp, b. 0.0313 bpp, c. 0.0625 bpp, d. 0.125 bpp, e. 0.25 bpp}, and a fixed viewing distance taken from the set {1, 3, 6, 10}.

Figure 11: Foveal coder MOEFIC compared with the foveal coder VOEFIC, with the FPS scores given by the FWVDP metric, for the BARBARA and MANDRILL test images, bit rates in the set {a. 0.0156 bpp, b. 0.0313 bpp, c. 0.0625 bpp, d. 0.125 bpp, e. 0.25 bpp}, and a fixed viewing distance taken from the set {1, 3, 6, 10}.

Figure 12: Foveal coder MOEFIC compared with the foveal coder VOEFIC, with the FPS scores given by the FWVDP metric, for the LENA and BOAT test images, bit rates in the set {a. 0.0156 bpp, b. 0.0313 bpp, c. 0.0625 bpp, d. 0.125 bpp, e. 0.25 bpp}, and a fixed viewing distance taken from the set {1, 3, 6, 10}.

Table 1: VOEFIC vs SPIHT quality boost ratio based on the FVDP quality metric for the test images BOAT, MANDRILL, BARBARA, and LENA, for varying bit budgets at a fixed viewing distance.

| Target bit budget | LENA (%) | BARBARA (%) | MANDRILL (%) | BOAT (%) |
|---|---|---|---|---|
| 0.0625 bpp | 8.9022 | 4.4205 | 5.1243 | 6.9512 |
| 0.25 bpp | 26.3367 | 34.5819 | 22.4297 | 16.6942 |
| 1 bpp | 94.2398 | 74.7420 | 53.1172 | 58.8734 |

ISAECT’20 INITIAL PRODUCT

Table 2: VOEFIC vs SPIHT quality boost ratio based on the FVDP quality metric for the test images BOAT, MANDRILL, BARBARA, and LENA, for varying viewing distances at a fixed bit budget.

| Viewing distance | LENA (%) | BARBARA (%) | MANDRILL (%) | BOAT (%) |
|---|---|---|---|---|
| V = 1 | 29.8790 | 50.2645 | 91.4849 | 64.2214 |
| V = 3 | 22.6332 | 35.6187 | 64.7933 | 45.1057 |
| V = 6 | 13.6161 | 22.5175 | 39.6148 | 38.8212 |
| V = 10 | 16.5556 | 23.0739 | 26.2814 | 26.3661 |

ISAECT’20 INITIAL PRODUCT

Table 3: MOEFIC vs VOEFIC quality boost ratio based on the FWVDP metric for the test images BOAT, MANDRILL, BARBARA, and LENA, for varying viewing distances at a fixed bit budget.

| Viewing distance | LENA (%) | BARBARA (%) | MANDRILL (%) | BOAT (%) |
|---|---|---|---|---|
| V = 1 | 22.8005 | 47.6810 | 14.0676 | 11.6146 |
| V = 3 | 28.3076 | 77.9034 | 3.2422 | 39.7098 |
| V = 6 | 26.8725 | 66.7592 | 3.7920 | 3.3685 |
| V = 10 | 5.1945 | 0.9735 | 14.0159 | 6.6470 |

ASTESJ’20 LIMITED PRODUCT

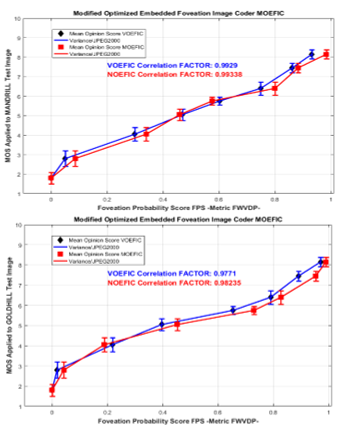

7. Subjective and Objective Quality Correlation Factor

We now present the subjective quality assessment. This approach computes a quality scale named MOS (Mean Opinion Score), which averages the subjective ratings given to images coded by our coders at various compression rates. First, the ratings are collected in an experiment conducted with a group of observers of different categories, ages, and sexes, on a subjective scale ranging from very poor to excellent quality. Then, a MOS factor is computed to validate the use of the MOEFIC and VOEFIC visual coders over the standard SPIHT coder and to validate our FWVDP foveal metric.

Table 4: Necessary conditions to experience Subjective Evaluation

| Condition | Setting |
|---|---|
| Test pictures | Standard pictures |
| Environment | Normal desk environment |
| Viewing distance V | Chosen by the observer |
| Viewing time | Unlimited |
| Number of observers | Between 15 and 39 |
| Score range | Between 0 and 1 |

7.1. Requirements for a Subjective Quality Assessment

The subjective quality assessment is standardized according to the CCIR recommendations [10, 31-33], originally intended for television pictures. We aim to assess the perceived differences between a candidate picture and its reference picture, using subjective measures. We adopt the Fränti conditions [40-41] required for this assessment experiment, as suggested in the CCIR recommendation detailed in [42-43] and summarized in Table 4. Provided that these conditions are respected, we normalize the assessment range to avoid additional, environment-dependent errors.
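A minimal sketch of such a normalization maps every rating onto the 0 to 1 score range of Table 4. It assumes a five-level category scale whose end labels ("very poor" and "excellent") come from the text, while the three middle labels are hypothetical; `rescale` does the same for numeric ratings given on any other bounded scale. Both function names are illustrative.

```python
# Hypothetical five-level category scale: only the end points are named in
# the text; "poor", "fair" and "good" are assumed intermediate labels.
CATEGORIES = ["very poor", "poor", "fair", "good", "excellent"]

def normalize_label(label: str) -> float:
    """Map a category label linearly onto the [0, 1] score range."""
    idx = CATEGORIES.index(label.strip().lower())  # ValueError if unknown
    return idx / (len(CATEGORIES) - 1)

def rescale(value: float, low: float, high: float) -> float:
    """Min-max rescale a numeric rating from [low, high] onto [0, 1]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return (value - low) / (high - low)

print(normalize_label("Fair"))  # 0.5
print(rescale(4, 1, 5))         # 0.75
```

A linear mapping keeps equal spacing between adjacent categories, which is the usual simplification when averaging category ratings into a MOS.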

7.2. MOS Quality Factor Experimentation

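To measure the relationship between the objective measures (vector X) and the subjective measures (vector Y), we compute the correlation coefficient as follows:

$$ r = \frac{\frac{1}{n}\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sigma_x\,\sigma_y} $$

where $x_i$ and $y_i$, $i = 1, \dots, n$, denote the elements of X and Y respectively, and $n$ is the number of elements. $\bar{x}$ and $\bar{y}$ denote the means of the X and Y values, according to the following equation:

$$ \bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad \bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i $$

and $\sigma_x$ and $\sigma_y$ denote the standard deviations, as follows:

$$ \sigma_x = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(x_i-\bar{x})^2}, \qquad \sigma_y = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i-\bar{y})^2} $$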
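The correlation factor of Section 7.2 translates directly into code. The sketch below uses the 1/n normalization for both the covariance and the standard deviations (the factors cancel, so the result equals the usual Pearson coefficient). The function name and the FPS/MOS values in the usage lines are invented for illustration and are not results from this study.

```python
import math

def correlation(x, y):
    """Correlation factor r between objective scores x (e.g. FPS) and
    subjective scores y (e.g. MOS), using 1/n normalization throughout."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    cov = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / n
    sigma_x = math.sqrt(sum((xi - x_bar) ** 2 for xi in x) / n)
    sigma_y = math.sqrt(sum((yi - y_bar) ** 2 for yi in y) / n)
    if sigma_x == 0 or sigma_y == 0:
        raise ValueError("a constant score vector has no defined correlation")
    return cov / (sigma_x * sigma_y)

# Illustrative (invented) FPS/MOS pairs for five coded versions of one picture.
fps = [0.42, 0.55, 0.63, 0.78, 0.90]
mos = [0.35, 0.50, 0.60, 0.80, 0.85]
print(round(correlation(fps, mos), 3))
```

A value of r close to 1 indicates that the objective metric ranks the coded pictures the way the observers do; a value near 0 indicates no linear agreement.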

7.3. Results of Subjective Quality Assessment

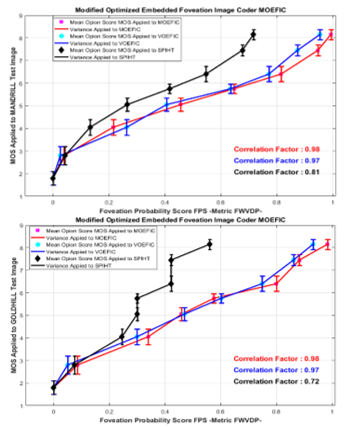

For the MOS computation and the conditions to be respected before launching the subjective assessment, refer to [10, 31-33]. As shown in Figure 13, the subjective MOS scores are compared with the objective FPS scores provided by the FWVDP assessor, for highly textured test images distorted at different bit rates by the JPEG2000 coder. The aim is to demonstrate a strong correlation between the objective and the subjective measures. This correlation is confirmed for the visual coders MOEFIC and VOEFIC (with a significant improvement for the former), but not for the SPIHT coder.

Figure 13: Averaged opinion scores (MOS) vs. FPS scores of the FWVDP foveal metric for the “GOLDHILL” and “MANDRILL” images under the MOEFIC/VOEFIC/SPIHT coders, with the following setup: 512×512 degraded pictures, JPEG2000 reference coding, 24 observers, 24 degraded versions per picture, viewing distance V = 4.

8. Conclusion

In this manuscript, we proposed a novel picture coder, MOEFIC, named “Modified Optimization Embedded Foveation Image Coding”. It builds on its adapted variant VOEFIC, named “Visually Optimized Embedded Foveation Image Coder” and explained in detail in [10]. We designed these coders on the basis of their predecessors EVIC [11], MEVIC [12], and POEZIC [38], as well as their foveal variant POEFIC, elaborated in [39]. We also presented the quality evaluator FWVDP, named “Foveation Wavelet Visible Difference Predictor” [32-33]. Both frameworks model specific cortical properties by integrating human psychophysical weighting models. They progressively combine the foveation filter (FOV), the wavelet visible distortion thresholds (JND), and the contrast elevation above those JND thresholds based on luminance adaptation and contrast correction.
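The contrast elevation above the JND thresholds can be illustrated with a Watson-style masking rule, in which the visibility threshold of a wavelet coefficient rises with the coefficient's own magnitude. This sketch is an assumption for illustration, not the exact MOEFIC/VOEFIC formula: the exponent `w = 0.7` is a value commonly used in Watson's masking models, the `jnd` argument stands for whatever per-sub-band threshold the coder computes, and the function names are hypothetical.

```python
def elevated_threshold(coeff: float, jnd: float, w: float = 0.7) -> float:
    """Masked visibility threshold of a wavelet coefficient.

    Below the JND the threshold stays at the JND; above it, the threshold
    grows as |c|^w * jnd^(1 - w) (Watson-style contrast masking, assumed).
    """
    if jnd <= 0:
        raise ValueError("jnd must be positive")
    return max(jnd, abs(coeff) ** w * jnd ** (1.0 - w))

def is_visible(error: float, coeff: float, jnd: float, w: float = 0.7) -> bool:
    """True if a coding error on this coefficient exceeds its masked threshold."""
    return abs(error) > elevated_threshold(coeff, jnd, w)

# The same error of 5 units is visible on a flat coefficient but masked on a
# strong one (hypothetical JND of 2 for the sub-band).
print(is_visible(5.0, 0.0, 2.0), is_visible(5.0, 100.0, 2.0))  # True False
```

The practical effect is that strong, textured coefficients tolerate larger quantization errors, which frees bits for perceptually sensitive regions.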

When applied to the wavelet-based picture spectrum, the final weighting model reshapes its content. It therefore keeps the significant data located in the observed region of interest and discards all imperceptible and redundant data. We thus encoded and assessed the useful data, as determined by the fixation point, for a given bit budget (compression rate) and viewing distance. Consequently, we achieved a noticeably better picture quality than the reference version, which directly processes the original wavelet-based picture spectrum. Together, the foveal coder and its foveal assessor apply a psychovisual-like transform that, despite the constraints of its frequency sub-bands, improves the picture transform, limits the most perceptually relevant errors, reaches the target bit rate, and improves the visual quality compared with that provided by the reference SPIHT coder [37]. It also directly refines the shape of the picture spectrum depending on the coding quality and the viewing conditions. Indeed, as the viewing distance increases from its lowest to its highest values, the adapted spectrum spreads over the significant wavelet channels which, naturally, are the zones of interest located in the low- and medium-frequency bands.
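The dependence of the retained sub-bands on the fixation point and the viewing distance can be sketched with the foveation model of Geisler and Perry [3], also used in the embedded foveation coder of Wang and Bovik [4]. The sketch assumes a hard (keep/discard) decision per coefficient, a viewing distance measured in image widths, and a level-λ wavelet frequency of r·2^-λ, with r the display resolution in pixels per degree; the actual FOV filter of VOEFIC/MOEFIC may be smoother and use other constants. All function names are hypothetical.

```python
import math

# Contrast-threshold constants from Geisler and Perry's foveation model, as
# also used by Wang and Bovik; the values in VOEFIC/MOEFIC may differ.
ALPHA = 0.106    # spatial-frequency decay constant
E2 = 2.3         # half-resolution eccentricity (degrees)
CT0 = 1.0 / 64   # minimal contrast threshold

def eccentricity_deg(d_pixels, width_pixels, viewing_dist):
    """Eccentricity (degrees) of a point d_pixels away from the fixation point.
    viewing_dist is assumed to be expressed in image widths."""
    return math.degrees(math.atan(d_pixels / (width_pixels * viewing_dist)))

def cutoff_cpd(e_deg):
    """Foveal cutoff frequency (cycles/degree) at eccentricity e_deg."""
    return E2 * math.log(1.0 / CT0) / (ALPHA * (e_deg + E2))

def display_limit_cpd(width_pixels, viewing_dist):
    """Display Nyquist limit (cycles/degree): half the pixels per degree."""
    return math.pi * width_pixels * viewing_dist / 360.0

def keep_coefficient(level, d_pixels, width_pixels=512, viewing_dist=4.0):
    """Binary foveation decision for a wavelet detail coefficient at
    decomposition level `level` (1 = finest), d_pixels from the fixation."""
    nyquist = display_limit_cpd(width_pixels, viewing_dist)
    # Level 1 is assigned the Nyquist frequency, each coarser level half of it.
    f_level = 2.0 * nyquist * 2.0 ** (-level)
    e = eccentricity_deg(d_pixels, width_pixels, viewing_dist)
    return f_level <= min(cutoff_cpd(e), nyquist)

print(keep_coefficient(1, 0), keep_coefficient(1, 256), keep_coefficient(2, 256))
# True False True
```

For a 512×512 picture viewed at V = 4, the finest detail band is kept at the fixation point but discarded at the image border, where the next coarser band still survives.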

In addition, we presented in this manuscript three evaluation approaches to validate the proposed coders: the objective validation, based on the foveal score FPS provided by the FWVDP assessor; the subjective validation, based on the observers' averaged scores (MOS); and the quantitative and qualitative approaches, based respectively on the boost ratio and on the correlation factor between the objective scores and the subjective scores. This validation process was applied to the visually optimized coder MOEFIC, to its adapted variant VOEFIC, and to their reference coder SPIHT. As discussed above, all the results obtained confirm the advantage of our perceptual coders in terms of quality improvement and bit budget optimization.
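The quantitative validation relies on the quality boost ratio reported in Tables 2 and 3. Its exact formula is not restated in this section, so the sketch below simply assumes it is the relative gain, in percent, of a proposed coder's quality score over the reference coder's score, averaged over paired measurements such as several bit rates at one viewing distance. Both function names are hypothetical.

```python
def boost_ratio(q_proposed: float, q_reference: float) -> float:
    """Relative quality gain in percent (ASSUMED definition)."""
    if q_reference == 0:
        raise ValueError("reference quality must be non-zero")
    return 100.0 * (q_proposed - q_reference) / abs(q_reference)

def mean_boost(proposed, reference) -> float:
    """Average boost ratio over paired scores, e.g. the same picture coded
    at several bit rates and seen at one viewing distance."""
    if len(proposed) != len(reference) or not proposed:
        raise ValueError("need two non-empty score lists of equal length")
    ratios = [boost_ratio(p, r) for p, r in zip(proposed, reference)]
    return sum(ratios) / len(ratios)

print(mean_boost([2.0, 3.0], [1.0, 3.0]))  # 50.0
```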

Finally, note that we gathered, discussed, and compared the results obtained with different assessment techniques, namely objective, subjective, qualitative, and quantitative approaches, to support and validate our work.

- S. G. Mallat, “Multi-frequency channel decomposition of images and wavelet models”, IEEE Trans. Acoust., Speech, Signal Processing, Vol. 37, No. 12, 2091-2110, Dec. 1989.

- P. Kortum and W. S. Geisler, “Implementation of a foveated image coding system for image bandwidth reduction”, in Proc. SPIE: Human Vision and Electronic Imaging, Vol. 2657, 350-360, 1996.

- W. S. Geisler and J. S. Perry, “A real-time foveated multiresolution system for low-bandwidth video communication”, Proc. SPIE, Vol. 3299, 1998.

- Zhou Wang, Alan Conrad Bovik, “Embedded Foveation Image Coding”, IEEE Transactions on Image Processing, Vol. 10, Oct. 2001.

- S. Lee, M. S. Pattichis, and A. C. Bovik, “Foveated video compression with optimal rate control”, IEEE Trans. Image Processing, Vol. 10, pp. 977-992, July 2001.

- Andrew Floren, Alan C. Bovik, “Foveated Image and Video Processing and Search”, Academic Press Library in Signal Processing, Volume 4, Chapter 14, Pages 349-401, 2014.

- J. C. Galan-Hernandez, V. Alarcon-Aquino, O. Starostenko, J. M. Ramirez-Cortes, Pilar Gomez-Gil, “Wavelet-based frame video coding algorithms using fovea and SPECK”, Engineering Applications of Artificial Intelligence, Volume 69, March 2018, Pages 127-136.

- Rafael Beserra Gomes, Bruno Motta de Carvalho, Luiz Marcos Garcia Gonçalves, “Visual attention guided features selection with foveated images”, Neurocomputing, Volume 120, 23 November 2013, Pages 34-44.

- J. C. Galan-Hernandez, V. Alarcon-Aquino, O. Starostenko, J. M. Ramirez-Cortes, Pilar Gomez-Gil, “Wavelet-based frame video coding algorithms using fovea and SPECK”, Engineering Applications of Artificial Intelligence, Volume 69, March 2018, Pages 127-136.

- Bajit, A., Benbrahim, M., Tamtaoui, A., Rachid, E., “A Perceptually Optimized Wavelet Foveation Based Embedded Image Coder and Quality Assessor Based Both on Human Visual System Tools”, ISAECT’2019 - IEEE International Symposium on Advanced Electrical and Communication Technologies, Rome, 2020.

- Bajit, A., Nahid, M., Tamtaoui, A., Lassoui, A., “A Perceptually Optimized Embedded Image Coder and Quality Assessor Based on Visual Tools”, ISAECT 2018 - IEEE International Symposium on Advanced Electrical and Communication Technologies, 2018.

- Bajit, A., Nahid, M., Tamtaoui, A., Lassoui, A., “A Perceptually Optimized Embedded Image Coder and Quality Assessor Based on Visual Tools”, ASTESJ’19 - Special Issue on Advancement in Engineering and Computer Science, Volume 4, Issue 4, Page No 230-238, 2019.

- Michael Unser and Thierry Blu, “Mathematical Properties of the JPEG2000 Wavelet Filters”, IEEE Transactions on Image Processing, Vol. 12, No. 9, Sep. 2003.

- Z. Liu, L. J. Karam and A. B. Watson, “JPEG2000 Encoding With Perceptual Distortion Control”, IEEE Transactions on Image Processing, Vol. 15, No. 7, July 2006.

- A. B. Watson, G. Y. Yang, J. A. Solomon, and J. Villasenor, “Visibility of Wavelet Quantization Noise”, IEEE Trans. Image Processing, Vol. 6, No. 8, 1164-1175, 1997.

- Y. Jia, W. Lin and A. A. Kassim, “Estimating Just-Noticeable Distortion for Video”, IEEE Transactions on Circuits and Systems for Video Technology, Vol. 16, 820-829, July 2006.

- A. B. Watson and J. A. Solomon, “Model of visual contrast gain control and pattern masking”, J. Opt. Soc. Am. A, Vol. 14, No. 9, 2379-2391, 1997.

- J. M. Foley, “Human luminance pattern-vision mechanisms: masking experiments require a new model”, J. Opt. Soc. Am. A, Vol. 11, No. 6, 1710-1719, 1994.

- S. Daly, W. Zeng, J. Li, S. Lei, “Visual masking in wavelet compression for JPEG 2000”, in Proceedings of IS&T/SPIE Conference on Image and Video Communications and Processing, San Jose, CA, Vol. 3974, 2000.

- D. M. Chandler and S. S. Hemami, “Dynamic contrast-based quantization for lossy wavelet image compression”, IEEE Trans. Image Process. 14(4), 397-410, 2005.

- Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image Quality Assessment: From Error Visibility to Structural Similarity”, IEEE Transactions on Image Processing, Vol. 13, No. 4, April 2004.

- H. R. Sheikh, A. C. Bovik, and G. de Veciana, “A Visual Information Fidelity Measure for Image Quality Assessment”, IEEE Trans. on Image Processing, Vol. 14, No. 12, pp. 2117-2128, Dec. 2005.

- J. G. Robson and N. Graham, “Probability summation and regional variation in contrast sensitivity across the visual field”, Vision Res., Vol. 21, 409-418, 1981.

- S. J. P. Westen, R. L. Lagendijk and J. Biemond, “Perceptual Image Quality based on a Multiple Channel HVS Model”, Proceedings of ICASSP, 2351-2354, 1993.

- S. Daly, “The visible differences predictor: An algorithm for the assessment of image fidelity”, in Digital Images and Human Vision, Cambridge, MA: MIT Press, 179-206, 1993.

- Andrew P. Bradley, “A Wavelet Visible Difference Predictor”, IEEE Transactions on Image Processing, Vol. 8, No. 5, May 1999.

- E. C. Larson and Damon M. Chandler, “Most apparent distortion: full-reference image quality assessment and the role of strategy”, Journal of Electronic Imaging, 19(1), 011006, Jan.-Mar. 2010.

- C. Yim and A. C. Bovik, “Quality assessment of deblocked images”, IEEE Trans. on Image Processing, Vol. 20, No. 1, pp. 88-98, 2011.

- Zhou Wang, Qiang Li, “Information Content Weighting for Perceptual Image Quality Assessment”, IEEE Transactions on Image Processing, Vol. 20, No. 5, May 2011.

- M. Oszust, “Full-Reference Image Quality Assessment with Linear Combination of Genetically Selected Quality Measures”, PLOS ONE, Jun 24, 2016.

- M. Nahid, A. Bajit, A. Tamtaoui, El. Bouyakhf, “Wavelet visible difference measurement based on human visual system criteria for image quality assessment”, Journal of Theoretical and Applied Information Technology, Vol. 66, No. 3, pp. 736-774, 2014.

- M. Nahid, A. Bajit, A. Tamtaoui, El. Bouyakhf, “Wavelet Image Coding Measurement based on the Human Visual System Properties”, Applied Mathematical Sciences, Vol. 4, No. 49, 2417-2429, 2010.

- Nahid, M., Bajit, A., Baghdad, A., “Perceptual quality metric applied to wavelet-based 2D region of interest image coding”, SITA - 11th International Conference on Intelligent Systems, 2016.

- Mario Vranješ, Snježana Rimac-Drlje, Krešimir Grgić, “Review of objective video quality metrics and performance comparison using different databases”, Signal Processing: Image Communication, Volume 28, Issue 1, January 2013, Pages 1-19.

- Xikui Miao, Hairong Chu, Hui Liu, Yao Yang, Xiaolong Li, “Quality assessment of images with multiple distortions based on phase congruency and gradient magnitude”, Signal Processing: Image Communication, Volume 79, November 2019, Pages 54-62

- J. M. Shapiro, “Embedded image coding using Zero-Trees of wavelet coefficients”, IEEE Trans. Signal Processing, Vol. 41, 3445-3462, 1993.

- A. Said and W. A. Pearlman, “A new fast and efficient image codec based on set partitioning in hierarchical trees”, IEEE Trans. Circuits and Systems for Video Technology, Vol. 6, 243-250, June 1996.

- A. Bajit, A. Tamtaoui & al, “A Perceptually Optimized Foveation Based Wavelet Embedded ZeroTree Image Coding”, Int. Journal of Computer, Elecl, Aut, Control and Information Eng, Vol. 1, No. 8, 2007.

- A. Bajit, A. Tamtaoui & al, “A Perceptually Optimized Wavelet Embedded ZeroTree Image Coder” International Journal of Computer and Information Engineering Vol:1, No:6, 2007

- Pasi Fränti, “Blockwise distortion measure for statistical and structural errors in digital images”, Signal Processing: Image Communication, Volume 13, Issue 2, August 1998, Pages 89-98.

- C. Deng, Z. Li, W. Wang, S. Wang, L. Tang, A. C. Bovik, “Cloud Detection in Satellite Images Based on Natural Scene Statistics and Gabor Features”, IEEE Geoscience and Remote Sensing Letters, 2018.

- L. Stenger, “Digital coding of television signals – CCIR activities for standardization”, Signal Processing: Image Communication, Volume 1, Issue 1, June 1989, Pages 29-43.

- Erika Gularte, Daniel D. Carpintero, Juliana Jaen, “Upgrading CCIR’s foF2 maps using available ionosondes and genetic algorithms”, Advances in Space Research, Volume 61, Issue 7, 1 April 2018, Pages 1790-1802.
