Multi-Stage Enhancement Approach for Image Dehazing

Volume 4, Issue 6, Page No 343-352, 2019

Author’s Name: Madallah Alruwaili a)

College of Computer and Information Sciences, Department of Computer Engineering and Networks, Jouf University, 42421, KSA

a) Author to whom correspondence should be addressed. E-mail: madallah@ju.edu.sa

Adv. Sci. Technol. Eng. Syst. J. 4(6), 343-352 (2019); DOI: 10.25046/aj040644

Keywords: Haze images, Wiener filter, Image restoration, Image enhancement

Over the past decades, considerable effort has been devoted to image enhancement under uncontrolled conditions such as fog and haze. This work proposes a multi-stage de-hazing approach for improving the quality of hazy images. Four main stages are introduced to achieve automated, efficient and robust de-hazing. The first two stages reduce the blurring noise and enhance the contrast using a Wiener filter and contrast scattering in the RGB color model, respectively. The last two stages enhance luminance and quality using luminance spreading and color correction. The experimental results show that the proposed approach significantly improves the visibility of hazy images and outperforms conventional methods such as multi-scale fusion and histogram equalization. In addition, the approach exhibits low complexity compared to existing works.

Received: 06 September 2019, Accepted: 01 December 2019, Published Online: 16 December 2019

1. Introduction

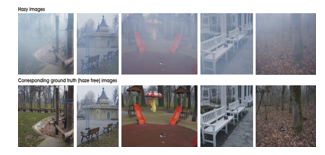

Hazy weather appears more and more frequently in different areas of the world as a result of pollution. Under hazy weather conditions, suspended particles in the atmosphere, for example fog, murk, mist and dust, cause poor visibility and distort the colors of the scene. Images taken in such conditions are a major issue for numerous outdoor vision applications, including surveillance, object recognition and target tracking, which require high-quality images. Figure 1 shows an example of hazy and de-hazed images taken from [1].

Figure 1: Sample Hazy and De-hazy Images

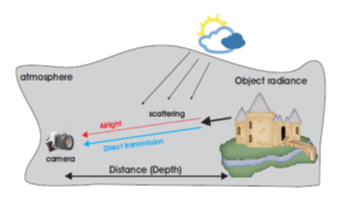

Images captured in hazy weather exhibit very poor quality and appear blurred, with only a small dynamic range of color intensities [2]. A hazy image can be described as a mixture of scene radiance, air-light and transmission [3,4]. The study of haze is related to work on the scattering of light in the atmosphere (see Figure 2). In an imaging system, the radiance captured by the camera along the line of sight is attenuated by the atmosphere and partly replaced by scattered light, which leads to a loss of contrast and color in the captured images.

Figure 2: Optical model against diverse weather situations [5].

In general, the following equation has been widely employed to model hazy images and improve their quality:

$$IM(v) = F(v)\,t(v) + L\,(1 - t(v)) \qquad (1)$$

where IM, F and L represent the hazy image, the haze-free scene and the atmospheric light, respectively; v denotes the pixel location, and t denotes the medium transmission, which describes the portion of light that is not scattered and reaches the camera. Haze removal aims to recover F, L, and t from IM.
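As a minimal sketch of Equation (1), the snippet below synthesizes hazy intensities from a known scene and then inverts the model. It assumes that t and L are already known, which is not the case in practice; the function names and the lower bound `t_min` are illustrative assumptions rather than part of the proposed approach.

```python
def apply_haze(scene, transmission, airlight):
    """Forward model: IM(v) = F(v) * t(v) + L * (1 - t(v)) for each location v."""
    return [f * t + airlight * (1.0 - t) for f, t in zip(scene, transmission)]


def recover_scene(hazy, transmission, airlight, t_min=0.1):
    """Invert the model for F(v).

    t_min is an assumed safeguard that avoids dividing by a transmission
    close to zero; it is not taken from the paper.
    """
    return [(im - airlight) / max(t, t_min) + airlight
            for im, t in zip(hazy, transmission)]


# Hypothetical one-dimensional "image" with three locations.
scene = [30.0, 120.0, 200.0]
transmission = [0.9, 0.5, 0.2]
airlight = 240.0

hazy = apply_haze(scene, transmission, airlight)
restored = recover_scene(hazy, transmission, airlight)
print(hazy)
print(restored)
```

The lower the transmission, the closer the hazy value moves toward the atmospheric light, which is why distant regions of a hazy image look washed out.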

2. Literature Review

In recent years, different types of techniques have been proposed for de-hazing images, which can be classified into two main categories according to the number of images used in the de-hazing process [6,7]. The first category relies on multiple images of the same hazy scene taken from the same viewpoint under different haze densities [8, 9, 10]. This category provides impressive results by assuming that the scene depth can be estimated from multiple images. However, it requires specific inputs (a fixed scene under different weather conditions), which makes this type of method impractical for real-time applications.

To overcome the need for changed weather conditions [11], polarization-based approaches have been introduced to simulate changed weather conditions. This simulation is obtained by applying various filters to different images. However, this approach is still not appropriate for real-time applications, since it is restricted to static scenes captured with polarization filters.

The second category relies on a single image to achieve haze removal [12, 13]. This type of method can effectively improve both the contrast and the visibility of hazy images. However, it may lose some detailed information and increase the computational burden.

He et al. [14] developed the Dark Channel Prior (DCP) method based on statistics of haze-free images. The method uses dark pixels to estimate the haze transmission map. These dark pixels are characterized by a low intensity value in at least one color channel. Meng et al. [15] proposed an enhancement to the dark channel prior for estimating the transmission map. The transmission map was estimated by imposing an inherent boundary constraint combined with a weighted L1-norm contextual regularization. In contrast, Zhu et al. [16] developed a color attenuation prior (CAP) that defines a linear model on local priors, which aims to recover depth information. Other works tried to improve the dark channel by combining it with other methods. For example, Xie et al. (2010) introduced a model that uses the dark channel and Multi-Scale Retinex [17]. This technique is applied to the luminance component in YCbCr space. Wiener filtering has also been combined with the dark channel prior to obtain better enhancement [18].

Over the past decades, Retinex theory [19] has been considered a milestone in many image enhancement and de-hazing methods. This theory is based on lightness and color constancy and has several advantages, including dynamic range compression, color independence, and color and lightness rendition. Therefore, Retinex theory has been extensively employed in image processing tasks. For example, the center/surround Retinex method has gained considerable interest because it offers low computational cost and requires no calibration for scenes.

Based on the Retinex theory, this work proposes a novel multi-stage de-hazing method for improving the quality of hazy images. In the proposed approach, the first two stages reduce the blurring noise and enhance the contrast, while the last two stages enhance luminance and quality. More details about the proposed approach are given in the next section.

3. Proposed Approach

This approach involves four stages to enhance the quality of hazy images. These stages are as follows:

- Wiener filter

- Contrast scattering

- Luminance spreading

- Color correcting

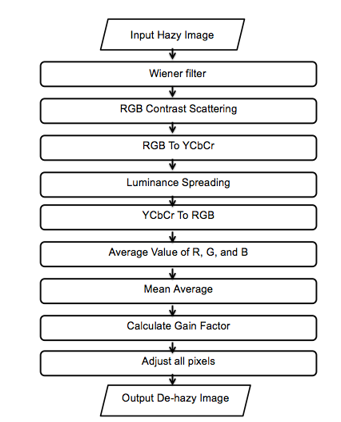

The workflow of the proposed approach is shown in Figure 3, and every stage is explained in the following subsections.

Figure 3: Workflow of the proposed approach.

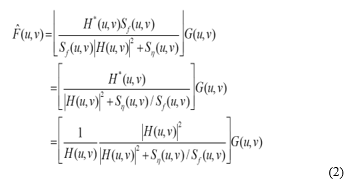

3.1 Wiener filter

Existing camera devices still have problems, specifically with images taken in hazy environments. Haze greatly affects the quality of the image captured by the camera and, as mentioned above, introduces noise into it. To overcome this problem, the Wiener filter, a well-known method for reducing blur caused by linear motion, is used. This filter was proposed by Norbert Wiener during the 1940s and was published in 1949 [20]. It reduces the impact of noise in the frequency domain. The main goal of this filter is to obtain an estimate of the original image from the noisy image that minimizes the mean square error between the two images [21]. The following formula is used to measure the error, where E represents the expected value.

The following three conditions are assumed:

- Either the image or the noise has zero mean.

- The noise and the image are uncorrelated.

- The gray levels in the restored image are linear functions of the gray levels in the noisy image.

Under these conditions, the minimum of the error function in the frequency domain is given by the following formulas, where:

H(u,v) = degradation function,

H*(u,v) = complex conjugate of H(u,v),

|H(u,v)|² = H*(u,v) H(u,v),

Sη(u,v) = power spectrum of the noise,

Sf(u,v) = power spectrum of the undegraded image.

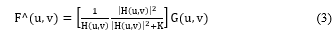

In a hazy environment the original image is typically not available. Thus, the power spectrum ratio is replaced by a parameter K that is determined experimentally. In practice, K is set to 0.00025 to achieve the best visual result. The following formula is used to reduce noise in hazy images.
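For reference, the formulas this subsection refers to can be written in the standard textbook form of the Wiener filter (see, e.g., [20]). This is a sketch in that notation and may differ in layout from the original typesetting. The symbols $f$, $\hat{f}$, $G$ and $\hat{F}$ are assumptions introduced here for the undegraded image, its estimate, the Fourier transform of the degraded image and the Fourier transform of the estimate; $S_\eta$ denotes the power spectrum of the noise.

```latex
% Mean square error between the undegraded image and its estimate
e^2 = E\left\{ (f - \hat{f})^2 \right\}

% Wiener filter in the frequency domain
\hat{F}(u,v) = \left[ \frac{H^*(u,v)}{|H(u,v)|^2 + S_\eta(u,v)/S_f(u,v)} \right] G(u,v)

% Approximation used when the power spectra are unknown, with K = 0.00025
\hat{F}(u,v) = \left[ \frac{H^*(u,v)}{|H(u,v)|^2 + K} \right] G(u,v)
```

The third form follows from the second by replacing the ratio $S_\eta(u,v)/S_f(u,v)$ with the constant $K$. A larger $K$ suppresses more noise but restores less detail.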

3.2 Contrast Scattering

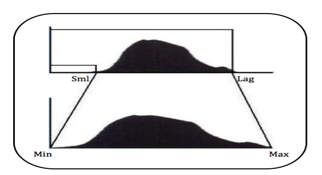

In the second stage, the contrast scattering technique is used to improve the contrast of the hazy images. This technique finds the smallest and highest values of each channel of the RGB color model. Then all the values in each color channel are spread across the range between the smallest and highest values, as shown in Figure 4. The following formula is used for contrast scattering:

Here the formula maps the current pixel value to the new pixel value; its four remaining terms represent the minimum, maximum, lowest, and highest pixel values in each color channel, respectively.
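The contrast scattering step can be sketched with the standard min-max stretching form, new = (current - c) * (b - a) / (d - c) + a. Here a and b are assumed to be the limits of the target range (0 and 255), and c and d the lowest and highest values present in the channel. These symbol names and the function names are illustrative, since the original symbols are not preserved in the text.

```python
def stretch_channel(values, low=0, high=255):
    """Linearly map one channel so its lowest/highest values land on low/high."""
    c, d = min(values), max(values)
    if d == c:  # flat channel: there is nothing to stretch
        return list(values)
    scale = (high - low) / (d - c)
    return [round((p - c) * scale + low) for p in values]


def contrast_scatter(pixels):
    """Stretch R, G and B independently; pixels is a list of (r, g, b) tuples."""
    channels = [stretch_channel(channel) for channel in zip(*pixels)]
    return list(zip(*channels))


# Hypothetical low-contrast pixels, as is typical of a hazy image.
hazy_pixels = [(120, 130, 140), (150, 160, 170), (180, 190, 200)]
stretched_pixels = contrast_scatter(hazy_pixels)
print(stretched_pixels)
```

Because each channel is stretched separately, this step can shift the color balance, which is one reason a later color correction stage is useful.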

3.3 Luminance Spreading

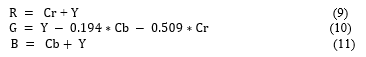

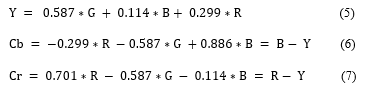

Subsequently, an improvement method is applied to achieve a true luminance. Luminance plays a major role in image quality. The YCbCr color model is used to separate chrominance from luminance: Y denotes the luminance component, while Cb and Cr are the chrominance components. In the RGB color model, by contrast, the three channels jointly determine the luminance. The following formulas are used to convert the RGB color model to the YCbCr color model:

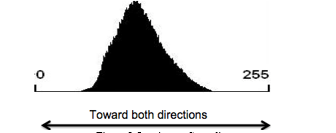

Consequently, the luminance component is adjusted to reach true colors and illumination. The proposed approach stretches the luminance in both directions, toward 0 and 255, as shown in Figure 5. The following formula is used for luminance spreading [22].

where Oo and Oi are the new and current luminance values, respectively, min denotes the minimum luminance value, and max denotes the maximum luminance value. Subsequently, the following formulas are used to convert the image from the YCbCr color model back to the RGB color model.
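The luminance spreading stage can be sketched end to end as follows. The conversion coefficients are an assumption: the snippet uses the full-range ITU-R BT.601 (JFIF) form of YCbCr, whereas other variants, such as the studio-range form, use different offsets and scales. The function names are illustrative.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 conversion (assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse conversion, clipped to [0, 255] and rounded to integers."""
    r = y + 1.402 * (cr - 128.0)
    g = y - 0.344136 * (cb - 128.0) - 0.714136 * (cr - 128.0)
    b = y + 1.772 * (cb - 128.0)
    return tuple(int(round(min(255.0, max(0.0, c)))) for c in (r, g, b))


def spread_luminance(pixels, low=0.0, high=255.0):
    """Stretch only Y toward low/high: Oo = (Oi - min) * (high - low) / (max - min) + low."""
    ycc = [rgb_to_ycbcr(*p) for p in pixels]
    y_min = min(y for y, _, _ in ycc)
    y_max = max(y for y, _, _ in ycc)
    if y_max == y_min:  # uniform luminance: leave the image unchanged
        return [tuple(p) for p in pixels]
    scale = (high - low) / (y_max - y_min)
    return [ycbcr_to_rgb((y - y_min) * scale + low, cb, cr) for y, cb, cr in ycc]


# Hypothetical gray ramp: only the luminance changes, chrominance stays neutral.
gray_ramp = [(90, 90, 90), (120, 120, 120), (180, 180, 180)]
spread = spread_luminance(gray_ramp)
print(spread)
```

Working on Y alone leaves Cb and Cr untouched, so the brightness range widens while the chrominance values are preserved.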

3.4 Color Correcting

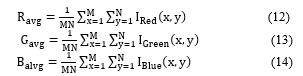

Color correction plays a major role in producing high-quality images, which require balanced pixel values. In this approach, to obtain true colors, the following formulas are used to calculate the average value of each color channel [23]:

The proposed approach uses the mean of the average values of all color channels. This mean value is used to scale the individual channel values so that the overall color is balanced. It also overcomes over-saturation and keeps both the illumination and the color at the same levels [24]. This value is calculated for the de-hazed image using the following formulas:

Then a diagonal model [25] is used to adjust all pixel values of the image. The following formulas are used to restore the quality of the hazy images.

where Red, Green, and Blue are the pixel values in the hazy image, and the corresponding outputs of the diagonal model denote the balanced pixel values.
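A minimal sketch of this stage, under the assumption that the diagonal model scales each channel by the ratio of the overall mean to that channel's average (the usual gray-world form), could look as follows. The function name and the sample pixels are hypothetical.

```python
def gray_world_balance(pixels):
    """Scale R, G and B so that their averages meet at the overall mean."""
    n = len(pixels)
    # Average value of each color channel.
    averages = [sum(p[i] for p in pixels) / n for i in range(3)]
    # Mean of the three channel averages.
    mean = sum(averages) / 3.0
    # Diagonal model: one gain per channel (gain 1.0 if a channel is all zero).
    gains = [mean / a if a > 0 else 1.0 for a in averages]
    return [tuple(min(255, int(round(c * g))) for c, g in zip(p, gains))
            for p in pixels]


# Hypothetical pixels with a blue color cast.
cast_pixels = [(100, 150, 200), (50, 100, 150)]
balanced = gray_world_balance(cast_pixels)
print(balanced)
```

In this example the channel averages are 75, 125 and 175, so red is amplified, green is unchanged and blue is attenuated until all three averages sit near 125.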

4. Experimental Evaluation

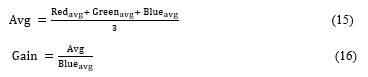

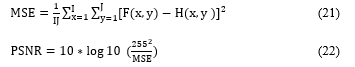

The proposed method was assessed using objective measures: PSNR (peak signal-to-noise ratio), SSIM (structural similarity index), and RMSE (root mean square error). RMSE is a well-known measure for evaluating results. It is the square root of the mean squared error between the de-hazed image and the haze-free image, computed using the following formula:

When the RMSE is very small and close to zero, the de-hazed image is of high quality.

The second measure is PSNR. A high PSNR value indicates a high-quality de-hazed image. To calculate PSNR, the MSE between the haze-free and de-hazed images must first be computed. The following formulas were used to find MSE and PSNR:

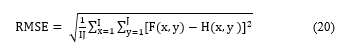

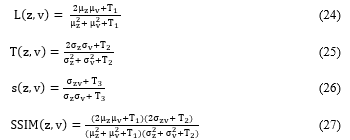
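The MSE, RMSE and PSNR measures described above can be sketched as follows for images flattened to lists of intensities. The peak value of 255 assumes 8-bit images; the function names and sample values are illustrative.

```python
import math


def mse(reference, restored):
    """Mean squared error between two equally sized intensity lists."""
    return sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)


def rmse(reference, restored):
    """Root mean square error; values near zero indicate a close match."""
    return math.sqrt(mse(reference, restored))


def psnr(reference, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB; higher is better."""
    error = mse(reference, restored)
    if error == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / error)


# Hypothetical haze-free and de-hazed intensities.
haze_free = [100, 150, 200, 250]
de_hazed = [102, 148, 203, 247]
print(mse(haze_free, de_hazed), rmse(haze_free, de_hazed), psnr(haze_free, de_hazed))
```

Since PSNR is a decreasing function of MSE, a smaller RMSE and a larger PSNR always point in the same direction for a given pair of images.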

The third measure is the SSIM (structural similarity index). SSIM measures the visual impact of luminance, contrast and structure. The value of SSIM lies in [0, 1]; when the haze-free and de-hazed images are almost identical, the SSIM is close to 1.

where α, β and γ are factors that adjust the relative importance of the components [26]. The following formulas were used to compute SSIM:

where the formulas use the mean, standard deviation, and covariance of images z and v, and L is 255.
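As a rough sketch, the widely used simplified form of SSIM from [26] (with α = β = γ = 1) can be computed over a whole image as shown below. Computing it globally rather than over local windows, and the constants k1 = 0.01 and k2 = 0.03, are assumptions taken from the common default settings; the function name is illustrative.

```python
def ssim_global(z, v, dynamic_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized intensity lists."""
    n = len(z)
    mu_z = sum(z) / n
    mu_v = sum(v) / n
    # Unbiased variance and covariance estimates.
    var_z = sum((a - mu_z) ** 2 for a in z) / (n - 1)
    var_v = sum((b - mu_v) ** 2 for b in v) / (n - 1)
    cov = sum((a - mu_z) * (b - mu_v) for a, b in zip(z, v)) / (n - 1)
    # Small constants that stabilize the division when the means or variances are tiny.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    numerator = (2 * mu_z * mu_v + c1) * (2 * cov + c2)
    denominator = (mu_z ** 2 + mu_v ** 2 + c1) * (var_z + var_v + c2)
    return numerator / denominator


# Hypothetical haze-free and de-hazed intensities.
reference = [10, 50, 90, 130]
estimate = [12, 47, 95, 126]
print(ssim_global(reference, reference))
print(ssim_global(reference, estimate))
```

Practical implementations usually evaluate this expression in small sliding windows and average the results, so a global value like this one is only a coarse indicator.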

5. Experimental Setup

In this work, we employed two open-source datasets, namely I-Haze [2] and a generalized dataset, to validate the performance of the proposed approach. Both datasets are described below.

5.1 Used Datasets

In this study, we used two datasets to show the efficacy of the proposed approach. These datasets are defined below.

Generalized Dataset: Hazy images were downloaded from different free websites such as personal web pages. The collected images served as inputs to the program, which processed them to produce the enhanced images. The generalized dataset consists of a total of 50 images with different haze effects.

I-Haze Dataset: C. O. Ancuti et al. proposed the I-Haze dataset, which includes 35 pairs of hazy and corresponding haze-free indoor images. The hazy and haze-free images were captured under the same illumination conditions, and each scene includes a MacBeth color checker.

5.2 Setup

To validate the effectiveness of the proposed method, the following set of experiments was executed using Matlab.

- The first experiment was conducted on the generalized dataset to show the efficacy of the proposed method on real hazy images against state-of-the-art methods, namely multi-scale fusion [13], dark channel prior [14], gray world, white patch and histogram equalization.

- The second experiment was conducted on the I-Haze dataset to show the performance of the proposed approach against state-of-the-art methods. Here, RMSE, PSNR, and SSIM were calculated to compare the proposed results with those of the state-of-the-art methods.

- Finally, in the third experiment, a comprehensive set of comparisons was performed using the two datasets. In the first set, the proposed technique is compared against well-known methods such as multi-scale fusion [13], dark channel prior [14], gray world, white patch and histogram equalization. In the second set, the comparison is performed using the RMSE, PSNR, and SSIM measures.

6. Results and Discussion

6.1 A First Set of Experiments

As described before, this experiment validates the efficacy of the proposed approach on a real dataset. A few sample images are shown in Figure 6. As observed from Figure 6, images produced using the proposed approach have better clarity and no blur noise compared with the latest methods.

6.2 Second Set of Experiments

As described before, the second experiment was conducted to show the performance of the proposed method on the I-Haze dataset. For this purpose, this study used the best-known methods for image enhancement and restoration. The objective evaluations are reported in Table 1. The proposed approach provided smaller RMSE values and higher PSNR values. In addition, its SSIM values are closer to 1 than those produced by the latest methods. It is clear that the proposed approach provided better results, as shown in Figure 6.

6.3 Third Set of Experiments

In the third set of experiments, a comprehensive comparison was performed on the two datasets. The comparison results are presented in Figures 7 – 11. The proposed method performed significantly better than the existing methods. The proposed approach produces the resulting image without color distortion, whereas histogram equalization creates unwanted colors and some artefacts in images. The results produced by multi-scale fusion and dark channel prior were acceptable, but new colours were created in some images, which changed their appearance. Therefore, the proposed approach accurately and robustly enhances different kinds of images degraded by haze.

7. Conclusions

De-hazing approaches have become a need for many computer applications. This work has proposed a multi-stage approach for image de-hazing. Four main stages are used in our approach. The first stage reduces the blurring noise using a Wiener filter. The second stage enhances the contrast by spreading the values of each RGB color channel between the lowest and highest values. In the next stage, the luminance is adjusted after transforming the resulting images from the RGB model to the YCbCr model. Finally, the last stage removes the color cast by color correction. The proposed approach has shown superior performance under challenging conditions, including distorted images, limited dynamic range, and low contrast. The approach also preserves the properties and characteristics of the images during de-hazing. In addition, unlike other approaches, our approach exhibits low complexity, which makes it applicable to online scenarios.

Conflict of Interest

The authors declare no conflict of interest.

References

- C. Ancuti, C. O. Ancuti, R. Timofte, L. Van Gool, L. Zhang, M.-H. Yang, et al. NTIRE 2018 challenge on image dehazing: Methods and results. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, June 2018.

- C. O. Ancuti, C. Ancuti, R. Timofte, and C. De Vleeschouwer. I-HAZE: a dehazing benchmark with real hazy and haze-free indoor images. In arXiv, 2018.

- He, Renjie; Wang, Zhiyong; Fan, Yangyu; Feng, David Dagan: ‘Combined constraint for single image dehazing’, Electronics Letters, 2015, 51, (22), p. 1776-1778, DOI: 10.1049/el.2015.0707

- Mahdi, Hussein & El abbadi, Nidhal & Mohammed, Hind.’ Single image de-hazing through improved dark channel prior and atmospheric light

- Xu D.; Xiao C. and Yu J. COLOR-PRESERVING DEFOG METHOD FOR FOGGY OR HAZY SCENES .In Proceedings of the Fourth International Conference on Computer Vision Theory and Applications VISAPP, 2009

- Mahdi, Hussein & El abbadi, Nidhal & Mohammed, Hind.’ Single image de-hazing through improved dark channel prior and atmospheric light estimation’. Journal of Theoretical and Applied Information Technology. 2017.

- Wenfei Zhang, Jian Liang, Haijuan Ju, Liyong Ren, Enshi Qu, Zhaoxin Wu, Study of visibility enhancement of hazy images based on dark channel prior in polarimetric imaging, Optik, Volume 130, 2017, Pages 123-130.

- Zhiyuan Xu. “Fog Removal from Video Sequences Using Contrast Limited Adaptive Histogram Equalization”, International Conference on Computational Intelligence and Software Engineering, 2009.

- Ancuti C.O., Ancuti C., Hermans C., Bekaert P. A Fast Semi-inverse Approach to Detect and Remove the Haze from a Single Image. In: Kimmel R., Klette R., Sugimoto A. (eds) Computer Vision – ACCV 2010. ACCV 2010. Lecture Notes in Computer Science, vol 6493. Springer, Berlin, Heidelberg

- S. Narasimhan and S. Nayar, “Contrast Restoration of Weather Degraded Images” in IEEE Transactions on Pattern Analysis & Machine Intelligence, vol. 25, no. 06, pp. 713-724, 2003.

- S. G. Narasimhan and S. K. Nayar, “Shedding light on the weather,” 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2003. Proceedings., Madison, WI, USA, 2003, pp. I-I.

- Makkar, Divya & Malhotra, Mohinder. Single Image Haze Removal Using Dark Channel Prior. International Journal Of Engineering And Computer Science, 2016.

- C. O. Ancuti and C. Ancuti, “Single image dehazing by multiscale fusion,” IEEE Transactions on Image Processing, vol. 22(8), pp. 3271–3282, 2013.

- K. He, J. Sun, and X. Tang, “Single image haze removal using dark channel prior,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 33, no. 12, pp. 2341–2353, Dec. 2011.

- G. Meng, Y. Wang, J. Duan, S. Xiang and C. Pan, “Efficient Image Dehazing with Boundary Constraint and Contextual Regularization,” 2013 IEEE International Conference on Computer Vision, Sydney, NSW, 2013, pp. 617-624.

- Q. Zhu, J. Mai and L. Shao, “A Fast Single Image Haze Removal Algorithm Using Color Attenuation Prior,” in IEEE Transactions on Image Processing, vol. 24, no. 11, pp. 3522-3533, Nov. 2015.

- Xie, Bin, Fan Guo, and Zixing Cai. “Improved single image dehazing using dark channel prior and multi-scale Retinex.”Intelligent System Design and Engineering Application (ISDEA), 2010 International Conference Vol.1.IEEE, 2010

- Shuai, Yanjuan, Rui Liu, and Wenzhang He. “Image Haze Removal of Wiener Filtering Based on Dark Channel Prior.” Computational Intelligence and Security (CIS), 2012 Eighth International Conference on IEEE, 2012

- E. H. Land and J. J. McCann, “Lightness and retinex theory,” Journal of the Optical Society of America, vol. 61, pp. 1–11, 1971.

- R. C. Gonzalez and R. E. Woods, “Digital Image Processing”. New Jersey: Pearson Prentice Hall, 2008.

- Alruwaili, M., & Gupta, L. (2015). A statistical adaptive algorithm for dust image enhancement and restoration. Proceedings of 2015 IEEE International Conference on Electro/Information Technology (pp. 286–289).

- Fisher, R., Perkins, S., Walker, A. & Wolfart, E. Contrast stretching Retrieved 25th August 2019, from Web site: http://homepages.inf.ed.ac.uk/rbf/HIPR2/stretch.htm

- Barnard, K., Cardei, V. & Funt, B. A comparison of computational color constancy algorithms-part I: Experiments with image data, IEEE Transactions on Image Processing, Vol. 11, no. 9, 2002.

- G.D. Finlayson, S.D. Hordley, and P.M. Hubel, “Color by correlation: A simple, unifying framework for color constancy,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 23, no. 11, pp. 1209–1221, November 2001.

- Fairchild, M.D. Colour appearance models, 2nd Edition. Wiley-IS&T, Chichester, UK, ISBN 0-470-01216-1, 2005.

- Z. Wang, A. C. Bovik, H. R. Sheikh and E. P. Simoncelli, “Image quality assessment: From error visibility to structural similarity,” IEEE Transactions on Image Processing, vol. 13, no. 4, pp. 600-612, Apr. 2004.
