Brain-inspired IoT Controlled Walking Robot – Big-Foot

Volume 4, Issue 3, Page No 220-226, 2019

Author’s Name: Anna Lekova1.a), Ivan Chavdarov1,2, Bozhidar Naydenov1, Aleksandar Krastev1, Snezhanka Kostova1


1Bulgarian Academy of Sciences, Institute of Robotics, Str. Acad. G. Bonchev, Blk. 2, 1113, Sofia, Bulgaria

2University of Sofia, “St. Kliment Ohridski”, Faculty of Mathematics and Informatics, 5 James Bourchier Blvd. 116, Sofia, Bulgaria

a)Author to whom correspondence should be addressed. E-mail: alekova.iser@gmail.com

Adv. Sci. Technol. Eng. Syst. J. 4(3), 220-226 (2019); DOI: 10.25046/aj040329

Keywords: Brain-robot interaction, Walking robot, 3D printing


This work presents the development of an original idea for a walking robot with a minimum number of motors, a simple construction and a control system based on the brain’s bioelectrical activity. The geometric and kinematic dependencies related to the robot’s movement are described, as well as a brain-inspired IoT control method. Various aspects of improving the robot’s qualities are discussed, concerning the shape of the robot’s feet and base, in order to overcome various obstacles and maintain static mechanical equilibrium. Improvements in the mechanical design are made to increase reliability and widen the scope of the robot’s applications. A new IoT framework for creating human-robot interaction applications, based on Node-RED “wiring” of an Emotiv Brain-Computer Interface (BCI) and an Arduino-based robot, is designed, developed and tested. An educational application that trains the joint attention of children by a mind control method based on neurofeedback from beta oscillations in the right temporoparietal region is illustrated in a Node-RED flow. The neurofeedback is exposed on the walking robot.

Received: 14 April 2019, Accepted: 04 June 2019, Published Online: 18 June 2019

1. Introduction

Applications of walking robots include rescue operations, work and inspection in harmful and dangerous environments, military purposes, etc. Typically, they move in an environment with obstacles whose positions and dimensions are not known in advance. Their mechanical and control systems are designed to avoid or overcome obstacles. In some cases, the obstacles can change dynamically over time. This leads to more complicated designs of walking robots compared to robots on wheels or tracks: they have more degrees of freedom and are slower. A common problem is the task of climbing and descending stairs [1-5]. Experimental robots are developed with a small number of degrees of freedom and a special shape of their feet that allows them to overcome obstacles while maintaining static stability [7]. For these reasons, alternative solutions are investigated [5], [6] and [7]. In [7], the author presented a low-budget two-legged robot which is able to maintain static equilibrium. Other simple solutions are also investigated, such as passive-dynamic two-legged walking [7] and different variants inspired by nature [1], [2] and [8].

Walking robots are also used for educational purposes. The results in the scientific literature show positive reactions and improvement in the attentional and positive emotional state of children with special educational needs (SEN) [9]. The nonhumanoid walking robot Big-Foot has been successfully used to support the education of such children in two day-care centers for children with SEN in Bulgaria [10]. Some ideas have been discussed with the special educators, such as how the walking robot could become more intelligent and personal in order to act as a mediator for learning and socializing, because these children show deficits in early social communication skills such as Joint Attention (JA), social requesting and referencing. Joint attention is the shared focus of two individuals on an object, and gaze shifting and behavioral response are the most used measures to assess the establishment of JA [11]. However, children with autism spectrum disorder (ASD) avoid eye contact or lose focus on humans quickly. In this context, our hypothesis is that the robot has the potential to establish JA better, because these children trust robots more than humans [10], and we can use this as a pure social consequence of sharing an experience. Furthermore, JA can be made visible on a robot by exploiting a neurophysiological approach instead of gaze tracking as an indicator of the shared focus of two individuals on the same object. By integrating the walking robot with a brain-aware device, we propose an innovative concept for establishing and assessing JA in a more objective way. For example, Big-Foot will climb stairs only if active brain patterns correlated with the attention system of the human brain are observed and evaluated.

The proposed nontraditional brain-inspired robotics intervention should run anytime and anywhere, with remote supervision by the special educator over WiFi, in order to aid children not only in schools but also in the family environment. Therefore, the proposed framework should comply with the concept of human-robot personal communication by augmenting the robot’s intelligence, however not digitally by pushing a button, clicking, dragging or speaking, but biologically and continuously through emotions, mental intentions or even via rewards chemically released by neurons [12].

All this imposes technical challenges concerning robot design, proper neuroscience computing and ubiquitousness. Movement of walking robots is accomplished in two ways: motion with maintaining static stability or use of dynamic gaits. When the number of legs is small, less than 4, maintaining static stability is not an easy task as it is necessary to change the center of gravity, which requires additional degrees of freedom. Overcoming obstacles with few supports is even harder. This article discusses a robot design that has only three supports. In this case the problem of maintaining static stability is overcome by increasing the area of these supports and using suitable shapes and materials.

The technical challenges concerning ubiquitous computing are how to merge people, processes, devices and technologies with sensors and actuators. We exploited the idea behind the Internet of Things (IoT) and the innovative “Cloud Computing” infrastructure [13]. Thus, all sensing, computation, and memory can be integrated into a single standalone Socially-assistive Robotics system. Node-RED [14] is an open source development tool built by IBM, which allows IoT devices to be wired up as nodes in flows. Node-RED is built on Node.js and can run anywhere Node.js can be hosted, such as small single-board computers like the Raspberry Pi, personal laptops or cloud environments such as the IBM Cloud. The Node-RED connectivity allows nodes to collect and exchange data ubiquitously, and its flow-based programming is an ideal solution for wiring up biological brain intelligence to robots anytime and anywhere. Based on the idea behind IoT, that uniquely addressable “things” communicate with each other and transfer data over the existing network protocols, we propose how the information channel between the human brain and external devices can be applied for IoT brain-to-robot control. By analogy with Visual servoing [15] and Tactile servoing [16], we define the term “Brain servoing” (or brain-based robot control), which uses EEG feedback information extracted from a brain EEG sensor to control the motion of a robot. The control tasks translate specific brain activity interaction patterns into robot commands ubiquitously. The control instructions are derived from a continuously decoded JA performance metric, translated into robot commands and sent to the robot actuators via a Node-RED set-up that connects the Emotiv Brain-Computer Interface (BCI) to the Arduino.

In this study we illustrate a non-traditional control method in which the brain’s electrical activity is captured by the EMOTIV brain-listening headset [17] and specific spatial and temporal brain frequencies correlated with JA are translated into commands to control the walking robot Big-Foot. Because the robot Big-Foot has a simple and innovative design, children with specific needs find it attractive, and it does not create feelings of anxiety and discomfort when they interact with it. This helps the robot function as a mediator between the children and the therapist. The 3D model of the robot is cheap and easy for children to control. It is extremely maneuverable and can climb stairs. These features allow it to be used in educational games for children. To the best of our knowledge, we are the first to propose an IoT framework for creating Human Robot Interaction (HRI) applications based on Node-RED “wiring” of the Emotiv BCI [18] and an Arduino-based robot.

2. Mechanical design and improvement of the robot

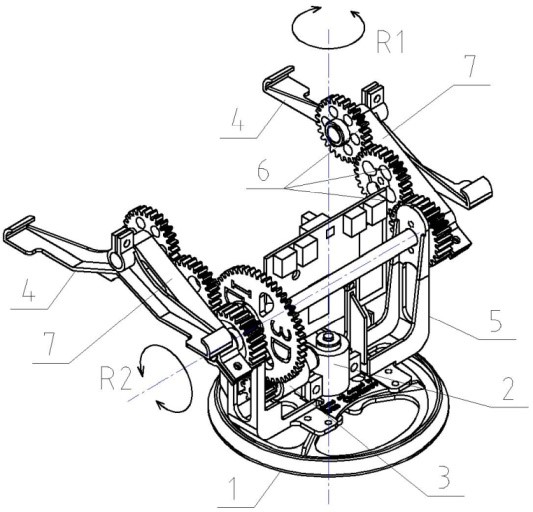

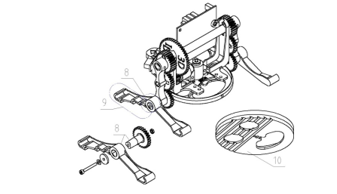

Fig. 1 presents the design of the robot and Table 1 lists its main components. Two motors are mounted in the body 5. The rotor of motor 2 is connected to the circular base 1 and rotates it. This allows the robot to change its orientation and turn when the feet 4 are raised above the ground. The motor 3, via a connecting shaft, drives the arms 7. The feet 4 are mounted at the ends of the arms 7. The feet maintain a constant orientation with respect to the base 1 and the body 5 by means of two gear mechanisms 6 with a gear ratio of i=1. This design allows the robot to rotate more than 360 degrees around axis R1. There is also no limitation on the rotation around axis R2. The two rotations are reversible, making the robot extremely maneuverable.

Figure 1: 3D model of the robot Big-Foot


Table 1. Part list of the main components

| Position | Part description |
|---|---|
| 1 | Circular base |
| 2 | DC motor for rotating the base |
| 3 | DC motor for rotation of the arms |
| 4 | Feet |
| 5 | Body of the robot |
| 6 | Gear transmission mechanisms |
| 7 | Rotating arms |

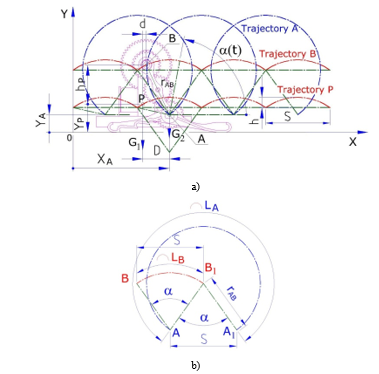

When the robot moves on flat terrain, it passes through two main phases. During the first phase, the feet 4 are stationary on the ground. The arms 7 move, and the body 5, together with the base 1, is moved a distance of one step S (Fig. 2a). All points on the robot’s body move along trajectories that are arcs of circles, for example the trajectories of points B and P in Fig. 2. The radius rAB is determined by the length AB of the arms 7. During this phase, the arms 7 rotate through an angle about point A. When the base 1 reaches the terrain, the robot’s body stops moving and phase 2 begins. During the second phase, the arms 7 and the feet 4 move, and the arms rotate about point B. Similarly to the first phase, the points of the feet move along arcs of circles with radius rAB. The angle of rotation of the arms 7 in this phase is shown in Fig. 2b. During this phase, the robot does not move forward but can rotate around axis R1 (Fig. 1). The second phase ends when the feet touch the ground. The movements during the two phases repeat cyclically.
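The relation between the arm length, the arm rotation angle and the step can be illustrated with elementary circle geometry. The sketch below is not taken from the paper: it assumes that point B starts and ends the first phase at the same height on flat terrain, so that the step S equals the chord of the arc traced by B about A. The function names and the numeric values are hypothetical.

```python
import math


def step_length(arm_length: float, swing_angle_deg: float) -> float:
    """Chord of the circular arc traced by point B about point A.

    Assumes B starts and ends at the same height (flat terrain), so the
    horizontal displacement of the body equals the chord of the arc.
    """
    theta = math.radians(swing_angle_deg)
    return 2.0 * arm_length * math.sin(theta / 2.0)


def peak_rise(arm_length: float, swing_angle_deg: float) -> float:
    """Largest height of the arc above its chord (valid for angles up to 180 degrees)."""
    theta = math.radians(swing_angle_deg)
    return arm_length * (1.0 - math.cos(theta / 2.0))


if __name__ == "__main__":
    # Hypothetical dimensions: 0.10 m arm, 60 degree rotation about A.
    print(step_length(0.10, 60.0), peak_rise(0.10, 60.0))
```

Under these assumptions, a 60 degree rotation gives a step equal to the arm length, and a larger rotation angle gives both a longer step and a higher lift of the moving part.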

Figure 2: Trajectories of different points of the robot during the two phases of motion – a) and b) step S and angles of rotation of arm AB.


During the second phase, the robot’s feet rise to a relatively high height, which helps to overcome high obstacles. The behavior of the robot has been verified through computer simulation and experiments with 3D printed prototypes.

More detailed descriptions of the kinematics and behavior of the robot in the various phases of its movement are given in [8], [19] and [20].

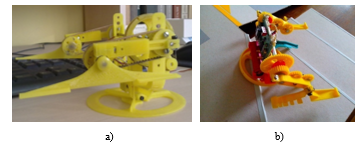

In the original idea described in patent [19], parallelism between the feet and the base is maintained by a belt mechanism. The 3D printed prototype (Fig. 3a) showed that the belt drive is not suitable because it requires considerable tensioning. The drawbacks of this model, described in more detail in [20], led to the need to improve the mechanical design.

Figure 3: a) First 3D printed model, b) model with improved design climbing stairs.


Improvements have been made to the mechanical structure for a more reliable torque transmission. A new type of joint between the shaft and the feet is used (Fig. 4, position 8), which significantly reduces the stress concentration. This joint is realized by a smooth transition from a polygon to a circle, and the 3D printing technology allows its physical implementation and application in our model [20], [29].

Changing the shape of the feet and the round base makes it possible to overcome higher obstacles (Fig. 4, positions 9 and 10).

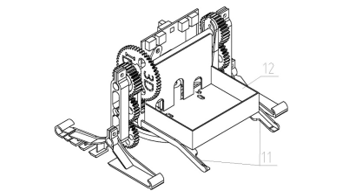

Another approach is used to improve the capabilities of the robot, namely to add additional elements. Two tails have been added (Fig. 5, position 11), which prevent the robot from rolling over when overcoming high obstacles.

Figure 4: Improvements in the design of the Big-Foot robot


A platform has been added (Fig. 5, position 12) so that the robot can be used in games developed for children with specific needs.

Figure 5: Walking robot with tails and a platform.

3. Human mind control method of robot with Node-RED EmotivBCI-to-Arduino interface

The most common way to control a robot is with a joystick or a mobile device. However, for some people joystick or mobile control is difficult or impossible for many reasons, and other (non-traditional) control methods have been developed based on recent innovative sensors and technologies, such as motion sensing devices. In this section, we present a non-traditional control method that relies on “Brain servoing”, which uses feedback information extracted from a brain sensor (EEG feedback) to control the walking robot.

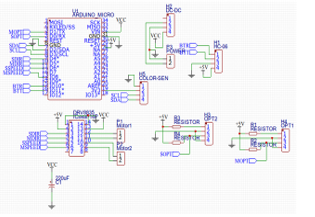

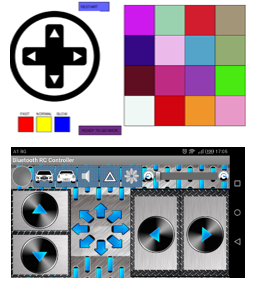

A traditional bi-directional DC control of two motors (operating at a voltage of 2.7-10.8 V) is used. The current is about 1.2 A per motor, and the load on a motor can reach 2 A for a few seconds. An H-bridge DC motor control is used in order to rotate the motors in both directions. The four transistors in the circuit are controlled in pairs and act as switches that set the direction of rotation. Fig. 6 presents the connection scheme and Fig. 7 the code uploaded to the Arduino Micro. The end-user interfaces, via a dialog box on a laptop or from a mobile device, are presented in Fig. 8.
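The pairwise switching of an H-bridge can be summarized as a small lookup table. The following Python sketch is only an illustration of the general principle and is not the Arduino code of Fig. 7. The switch labels Q1 to Q4 are hypothetical: Q1 and Q2 stand for the left and right high-side switches, Q3 and Q4 for the left and right low-side switches.

```python
from enum import Enum


class Direction(Enum):
    STOP = "stop"
    FORWARD = "forward"
    REVERSE = "reverse"


# Hypothetical labels: Q1/Q2 are high-side (left/right), Q3/Q4 are low-side (left/right).
# Diagonal pairs conduct together, which sets the polarity across the motor.
_SWITCH_TABLE = {
    Direction.STOP:    {"Q1": False, "Q2": False, "Q3": False, "Q4": False},
    Direction.FORWARD: {"Q1": True,  "Q2": False, "Q3": False, "Q4": True},
    Direction.REVERSE: {"Q1": False, "Q2": True,  "Q3": True,  "Q4": False},
}


def switch_states(direction: Direction) -> dict:
    """Return which of the four switches are closed for a given direction."""
    return dict(_SWITCH_TABLE[direction])


def has_shoot_through(states: dict) -> bool:
    """True if both switches of one half-bridge are closed (supply short circuit)."""
    return (states["Q1"] and states["Q3"]) or (states["Q2"] and states["Q4"])


if __name__ == "__main__":
    for d in Direction:
        print(d.name, switch_states(d), has_shoot_through(switch_states(d)))
```

Keeping the table explicit makes it easy to check that no commanded state closes both switches on the same side of the bridge.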

Figure 7: The code for ARDUINO MICRO


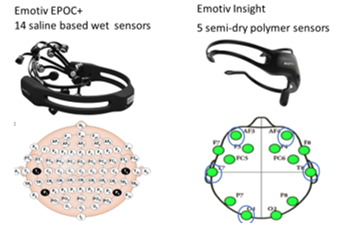

The nontraditional control method translates specific brain activity interaction patterns into robot commands within an IoT framework. Fig. 9 illustrates this framework for brain-robot interaction in general and its deployment using the Node-RED tool. An application of the EmotivBCI-to-Arduino Node-RED flow is explained in Section 4. The commercial EEG devices Emotiv EPOC or Emotiv Insight [17] can be used. The placement of the electrodes is shown in Fig. 10. In this study, EEG data are acquired and recorded with the 14-channel EPOC+ neuroheadset, and the EEG signals are sampled at a rate of 256 Hz. The data are wirelessly transmitted to a host computer through Bluetooth and further processed with the EmotivBCI Node-RED Toolbox [18].

Figure 8: Traditional control methods of walking robot BigFoot


Figure 9: IoT framework for Brain-robot interaction – Node-RED EmotivBCI-to-Arduino Interface.


Figure 10: EMOTIV EEG headsets for EEG-based BCI – listen, record and transmit in real time the electrical activity of the brain


Node-RED uses a visual programming approach in which code blocks are ‘wired together’ into ‘flows’ that carry out tasks. It connects input nodes, processing nodes and output nodes in a browser-based flow editor, using a wide range of nodes from the palette. The EmotivBCI Node-RED Toolbox is a custom library of input nodes for Node-RED that allows EMOTIV technology to be interfaced with other Node-RED nodes, so that a wide variety of BCI integrations can be created. Installation, node descriptions and use are presented in [18]. EmotivBCI Node-RED gets data from Emotiv Cortex (Cortex is built on JSON and WebSockets) for creating BCI applications and integrates data streams from the headset with third-party software or hardware. In a browser-based flow in a Web page, a user can control the rotation and direction of both BigFoot motors using one of the following kinds of brain activity patterns: patterns in the frequency domain, detections correlated with human cognitive, facial and emotional states, or previously trained mental commands. EMOTIV offers the opportunity for the user to create and execute a number of Mental Commands [21], such as: push, pull, lift, drop, left, right, rotate left, rotate right, rotate forwards, rotate backwards, rotate clockwise, rotate anticlockwise, and disappear. The detected facial expressions are blink, left wink, right wink, raised eyebrows (surprise), furrowed brows (frown), smile and clenched teeth. The Emotiv technology currently offers five performance metric detections: Engagement/Boredom, Frustration, Meditation, Instantaneous Excitement, and Long-Term Excitement, which are based on brainwave characteristics that are universal in nature and do not require explicit training of the user [22].

All these correlated frequency patterns or detections are interpreted in the Node-RED function blocks and mapped to commands that control the walking robot by dynamically sending information to the serial port to which the Arduino is connected. There are several ways to interact with an Arduino using Node-RED. As the Arduino appears as a serial device, the Serial in/out nodes can be used to communicate with it after setting the serial port speed (baud rate) to the same value at both ends. We wired the EmotivBCI nodes to the serial port and used the Arduino Software (IDE): we program the Arduino with the IDE, and then send and receive data over the serial port to interact with BigFoot. Two browser-based flows in a Web page, as well as a Node-RED Graphical User Interface (GUI), are designed to allow users to control the BigFoot motors dynamically by their own brain activity patterns in the frequency domain corresponding to joint attention. Every 1 or 2 s, a string for one of the following commands is sent: ‘S’ for stop, ‘F’ for forward, ‘B’ for backward, ‘L’ for left rotation and ‘R’ for right rotation. In addition to the neurofeedback exposed on the BigFoot, the GUI shows the rotation and direction as feedback in the web page.
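The single-character command protocol described above can be sketched outside Node-RED as well. The Python example below is an illustration only: the paper uses Node-RED serial nodes, not Python, and the action names and the `send_command` helper are hypothetical. The function accepts any writable binary stream, so an in-memory buffer stands in for the serial port here; with the third-party pyserial package, an opened `serial.Serial` object for the port in use (for example COM4 at the baud rate configured on the Arduino) could be passed instead.

```python
import io
from typing import BinaryIO

# Command letters as listed in the text; the action names on the left are hypothetical.
COMMANDS = {
    "stop": b"S",
    "forward": b"F",
    "backward": b"B",
    "left": b"L",
    "right": b"R",
}


def send_command(port: BinaryIO, action: str) -> bytes:
    """Write the one-byte command for `action`; unknown actions fall back to stop."""
    code = COMMANDS.get(action, COMMANDS["stop"])
    port.write(code)
    return code


if __name__ == "__main__":
    fake_port = io.BytesIO()  # stands in for a real serial port object
    for action in ("forward", "left", "unknown", "stop"):
        send_command(fake_port, action)
    print(fake_port.getvalue())
```

Falling back to stop for anything unrecognized is a defensive choice made for this sketch, in line with the stop command being the default in the flow.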

4. Application of the IoT framework for brain-robot control method

The walking robot is used in the development of educational play-like activities for children with SEN. Through playing with the robot, the children improve their spatial orientation abilities, and they learn easily and with fun by controlling the robot [11]. The interaction of children with the walking robot engages them to communicate with each other and develop their joint attention (JA). By using non-traditional mind control methods, neurofeedback rehabilitation becomes possible and can be effective for training the attention or emotion self-regulation of the brain function. We place the child’s neurofeedback in the play-with-robot interventions and expose it on the walking robot.

Joint attention precedes the development of children’s mentalization skills [23]. Mentalization is the ability to understand the mental state, of oneself or others, that underlies evident behavior. Brain activity patterns in the time and frequency domains correlated with JA are discussed in many papers [23], [24] and [25]. According to studies [25] and [26], the oscillatory brain activity in the alpha and beta ranges in the right temporoparietal region correlates with the anticipation and prediction of another person’s responses and preferences. The authors of [23] tested whether neuronal activity preceding JA correlates with mentalization in typically developing (TD) children and whether this activity is impaired in children with autistic spectrum disorder (ASD), who evidence deficits in JA and mentalization skills. TD children showed beta rhythm (15-25 Hz) in the temporoparietal region preceding the JA behavior, while ASD children did not show an increase in beta activity. In the study [23], a statistically significant difference in the increase of beta band power was found in the right parietal group of channels, and the data analysis again suggested that the right temporoparietal region and the middle/superior frontal gyrus are the main brain regions contributing to the beta power differences between the two groups. Based on these neuroimaging findings, we designed a game in which a child can make BigFoot climb the stairs through neurofeedback from the oscillatory brain activity in the alpha and beta ranges in the right temporoparietal region. Thus, we try to teach and train children’s joint attention by having them learn to modulate their rhythm power in the beta frequency band [15-25 Hz] in order to move the walking robot. The power spectrum of the right temporoparietal lobe intensity is obtained by the EmotivBCI tool, and the changes in JA are calculated based on functional brain imaging in terms of event-related desynchronization (ERD) or event-related synchronization (ERS) [26].

According to Pfurtscheller [26] sensory and cognitive processing results in changes of the ongoing EEG in form of ERD/ERS that are highly frequency-band specific. For example, oscillations with 10 Hz comprise more synchronized neurons than oscillations with 40 Hz. The ERD is interpreted as a correlate of an activated cortical area with increased excitability and the ERS in the alpha and lower beta bands can be interpreted as a correlate of a deactivated cortical area [26]. Furthermore, the frequency of brain oscillations depends on the percentage of a population of neurons synchronized. With an increasing number of synchronized neurons, the average frequency becomes slower and if only 10% are synchronized, the amplitude is 10-fold the activity of the 90% of not-synchronized neurons.

In terms of information theory, a desynchronized system represents a state of maximal readiness and maximal information capacity [27]. The neuroscience explanation of the ERD is that, in the underlying neural network, small areas of neurons involved in a particular neural computation (a neural ensemble) work in a relatively independent or desynchronized manner.

We use the following expression to obtain the percentage decrease (ERD) or increase (ERS) in the band power during a test (activation) interval compared with a baseline (reference) interval. The ERD/ERS indices for the alpha and beta bands respond to different levels of JA:

$$\mathrm{ERD/ERS}\,\% = \frac{A - R}{R} \times 100$$

where A is the power within the frequency band of interest in the activity period and R is the power in the preceding baseline or reference period. Positive values are obtained for ERS%, reflecting synchronization (a band power increase), and negative values for ERD%, reflecting desynchronization (a band power decrease). We tested our research hypothesis in pilot experiments with five right-handed students (male and female, with an average age of about 18 years) by measuring whether the amount of alpha ERD and the amount of beta ERS increase with a higher level of JA and mentalization skills. The mean threshold of 5% for ERS in the beta rhythm was defined experimentally. We plan to perform the experiment explained in [23] with children with ASD in order to compare the establishment and assessment of JA based on the intervention and on practitioners’ observations of gaze shifting and behavioral response with the establishment and assessment of JA by the brain-inspired robot control and a neurofeedback response.
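As a small worked example of the ERD/ERS percentage, the sketch below assumes the form (A − R) / R × 100, which is consistent with the statement that positive values denote ERS and negative values denote ERD. The function name and the numeric powers are hypothetical and are not measurements from the study.

```python
def erd_ers_percent(activity_power: float, reference_power: float) -> float:
    """Percentage band power change of the activity interval relative to the baseline.

    Positive result: ERS (band power increase).
    Negative result: ERD (band power decrease).
    """
    if reference_power <= 0:
        raise ValueError("reference power must be positive")
    return (activity_power - reference_power) / reference_power * 100.0


if __name__ == "__main__":
    # Hypothetical beta band powers (arbitrary units): baseline 10.0, activity 10.5.
    print(erd_ers_percent(10.5, 10.0))  # an ERS of about 5 %
    # Hypothetical alpha band powers: baseline 10.0, activity 8.0.
    print(erd_ers_percent(8.0, 10.0))   # an ERD of about -20 %
```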

The control commands are derived from a continuously decoded JA performance metric and are sent to the robot actuators via the Node-RED EmotivBCI-to-Arduino interface. For this application, only the right temporal T8 and right parietal P8 electrodes are used for right-handed participants, and their beta and alpha power are analyzed during the neutral or joint attention states. The power spectral density for the beta band is received continually from the EMOTIV Node-RED EEG streaming data. The command ‘S’ is sent to COM4 by default. In a function node, the ERS and ERD are calculated for each electrode, and when alpha power decreases significantly (ERD) and beta power increases (ERS) beyond thresholds (in %, specific to the participant), the corresponding command ‘F’ or ‘B’ is sent to COM4. The results showed that the robot could be successfully navigated using positive ERS values of 5% in the beta rhythm, which correlate with joint attention on the walking robot, in order to complete a stair-climbing navigation task by human intention. In the future we will assign the percentage increase to fuzzy sets in order to define levels of difficulty for climbing stairs.
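The threshold logic of the function node can be sketched as follows. This is a simplified illustration in Python, not the authors' Node-RED function code. It assumes that the ERD/ERS percentages of T8 and P8 are averaged, that the 5% beta ERS threshold from the pilot experiments is used, and that the alpha ERD threshold of -5% is a hypothetical placeholder. How the flow chooses between ‘F’ and ‘B’ is not specified in the text, so only ‘F’ and the default ‘S’ are modeled.

```python
from statistics import fmean

BETA_ERS_THRESHOLD = 5.0    # percent; mean threshold reported in the text
ALPHA_ERD_THRESHOLD = -5.0  # percent; hypothetical, participant specific in practice


def choose_command(alpha_pct: dict, beta_pct: dict,
                   alpha_threshold: float = ALPHA_ERD_THRESHOLD,
                   beta_threshold: float = BETA_ERS_THRESHOLD) -> str:
    """Map ERD/ERS percentages per electrode to a robot command letter.

    alpha_pct and beta_pct hold the percentage change per electrode,
    for example {"T8": -8.0, "P8": -6.0}. Averaging over the electrodes
    is an assumption of this sketch.
    """
    alpha = fmean(alpha_pct.values())
    beta = fmean(beta_pct.values())
    if beta >= beta_threshold and alpha <= alpha_threshold:
        return "F"  # joint attention detected: move forward
    return "S"      # default: stop


if __name__ == "__main__":
    print(choose_command({"T8": -8.0, "P8": -6.0}, {"T8": 6.0, "P8": 7.0}))
    print(choose_command({"T8": -8.0, "P8": -6.0}, {"T8": 1.0, "P8": 2.0}))
```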

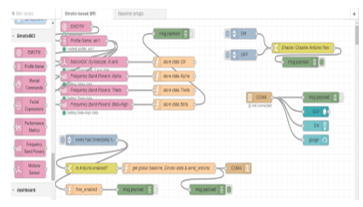

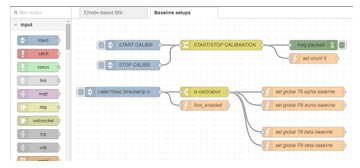

The Node-RED flow for brain-robot interaction based on the EmotivBCI toolbox is shown in Fig. 11. After the raw data are streamed from the Emotiv node in the first Node-RED flow, the powers of the frequency bands of interest are stored in global variables. They are accessed in the second flow (Fig. 12) to set up the baseline in the frequency band of interest over 1 or 2 min. How the robot commands are mapped and sent to the serial port can be seen in the first Node-RED flow.
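One way to picture the baseline set-up is as a running average of the band power over the baseline interval. The text does not state how the baseline value is computed, so the averaging below is an assumption of this sketch, and the class name, the update period and the sample values are hypothetical.

```python
from collections import deque
from statistics import fmean


class BaselineEstimator:
    """Keeps the most recent band power samples covering the baseline interval."""

    def __init__(self, duration_s: float = 60.0, update_period_s: float = 1.0):
        # Number of samples that span the baseline interval.
        self.capacity = max(1, round(duration_s / update_period_s))
        self.samples = deque(maxlen=self.capacity)

    def add(self, band_power: float) -> None:
        """Store one band power sample; the oldest one is dropped when full."""
        self.samples.append(float(band_power))

    @property
    def ready(self) -> bool:
        """True once samples covering the whole interval have been collected."""
        return len(self.samples) == self.capacity

    def reference(self) -> float:
        """Mean band power over the stored samples (the reference power R)."""
        if not self.samples:
            raise ValueError("no baseline samples collected yet")
        return fmean(self.samples)


if __name__ == "__main__":
    baseline = BaselineEstimator(duration_s=3.0, update_period_s=1.0)
    for power in (1.0, 2.0, 3.0):  # hypothetical beta band powers
        baseline.add(power)
    print(baseline.ready, baseline.reference())
```

The resulting reference value plays the role of R when the percentage change of the band power in the activity interval is computed.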

We intend to test the proposed framework with neurofeedback from frontal theta activity for training and remembering orientation in space, and to expose it implicitly on the walking robot. The increase in theta power during successful encoding of new information is discussed from a neurological point of view in [28], in relation to hippocampal theta induced in the cortex.

Figure 11: Node-red flow for EmotivBCI-Arduino interaction


Figure 12: Node-red flow for baseline setting in the frequency band of interest


5. Conclusion

Although the proposed walking robot has a minimum number of mechanical components, it is extremely maneuverable. It has only two motors, which makes it simple to control and suitable for educational or rehabilitation purposes. The robot is inexpensive and easy to manufacture with a 3D printer. Several control methods are proposed, one of which is “Brain servoing”, which uses EEG feedback and brain activity patterns for mind-based robot control. The proposed IoT framework for creating Human Robot Interaction (HRI) applications based on Node-RED “wiring” of the Emotiv BCI and an Arduino-based robot has been applied to neurofeedback training. The results showed that the robot could be successfully navigated by the level of human attention to complete a stair-climbing navigation task.

In the future, the robot’s design and control system will be enhanced by collecting and recording information from more sensors. Since the proposed framework for mind-robot control is sufficiently general, it can easily be applied to other areas of neurons involved in a particular neural computation, as well as to other brain sensors and different humanoid or nonhumanoid robots in the IoT.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

This research is supported by the National Scientific Research Fund, Project N DН17/10. We thank all high school students from the “National Professional High School for Computer Technologies and Systems”, Pravets, Bulgaria, who participated in the experiments and the implementation of the Arduino hardware.

- Ben-Tzvi P., Ito S., Goldenberg A.A. (2009), A mobile robot with autonomous climbing and descending of stairs, Robotica 27(2), pp. 171-188, https://pdfs.semanticscholar.org/0550/5591df73849ecba97e8754d518eda1bead41.pdf

- Poramate Manoonpong, Sakyasingha Dasgupta, Dennis Goldschmidt, Florentin Wörgötter, Reservoir-based online adaptive forward models with neural control for complex locomotion in a hexapod robot, 2014, International Joint Conference on Neural Networks (IJCNN), Pages: 3295 – 3302.

- Liu Juan Xiu, Chen Qing Wei, Dynamics and control study of a stair-climbing walking aid robot, 2010 International Conference on Mechanic Automation and Control Engineering, Pages: 6190 – 6194.

- Chih-Hsing Liu, Meng-Hsien Lin, Ying-Chia Huang, Tzu-Yang Pai, Chiu-Min Wang, The development of a multi-legged robot using eight-bar linkages as leg mechanisms with switchable modes for walking and stair climbing, 2017, 3rd International Conference on Control, Automation and Robotics (ICCAR), Pages: 103 – 108.

- Jeon, Y. Jeong, K. Kwak, S. Yeo, D. Ha, S. Kim (2009), Bio-Mimetic Articulated Mobile Robot overcoming stairs by using a slinky moving mechanism, Proceedings of ICAD2009, The Fifth International Conference on Axiomatic Design, Campus de Caparica, March 25-27, 2009, pp.173-179.

- Fei Sun, He Hua Ju, Ping Yuan Cui, A new 12 DOF biped robot’s mechanical design and kinematic analysis, Proceedings of 2011 International Conference on Electronic & Mechanical Engineering and Information Technology, 2011, Pages: 2396 – 2400.

- E. Nava Rodriguez, G. Carbone, M. Ceccarelli, Design Evolution of low-cost humanoid robot CALUMA, 12th IFToMM World Congress, Besançon (France), June 18-21, 2007, University Cassino, Italy, https://pdfs.semanticscholar.org/bde8/0efbb0328483ff611f82ad035981ab3a133e.pdf

- Chavdarov I., Walking robot realized through 3D printing, Comptes rendus de l’Académie bulgare des Sciences, Tome 69, No 8, 2016, pp. 1069-1076, http://www.proceedings.bas.bg/cgi-bin/mitko/0DOC_abs.pl?2016_8_13

- Kruse T., Pandey A., Alami, Kirsch A. Human-Aware Robot Navigation: A Survey. Robotics and Autonomous Systems, Elsevier, 2013, 61 (12), pp. 1726-1743. hal-01684295

- Weisberg H., Jones E. Individualizing Intervention to Teach Joint Attention, Requesting, and Social Referencing to Children with Autism. Behavior Analysis in Practice (2019) 12:105–123

- Dimitrova, M., Lekova, A., Kostova, S., Roumenin, C., Cherneva, M., Krastev, A., Chavdarov, I. (2016) A multi-domain approach to design of CPS in special education: Issues of Evaluation and Adaptation. Proceedings of the 5th Workshop of the MPM4CPS COST Action, November 24-25, 2016, Malaga, Spain, pp.196-205.

- Lekova A., Pavlov V., Chavdarov I., Krastev A., Datchkinov P., Stoyanov I. Augmented Intelligence for Teaching Robots by Imitation, International Scientific Journal “Industry 4.0”, Web ISSN 2534-997X; Print ISSN 2543-8582, Year II, Issue 5, pp. 201-204 (2017)

- Zhang, L. Cheng, and R. Boutaba, “Cloud computing: State-of-the-art and research challenges,” J. Internet Services Appl., vol. 1, no. 1, pp. 7-18, 2010.

- IBM NodeRED Flow-based programming for the Internet of Things. URL: https://nodered.org/

- Agin, G.J., “Real Time Control of a Robot with a Mobile Camera”. Technical Note 179, SRI International, Feb. 1979

- Li, C. Schürmann, R. Haschke, and H. Ritter, “A control framework for tactile servoing,” in Robotics: Science and Systems, 2013

- Martinez-Leon, J., Cano-Izquierdo, J. & Ibarrola, J. Are low cost Brain Computer Interface headsets ready for motor imagery applications? Expert Syst Appl 49, 136 (2016).

- EmotivBCI Node-RED Toolbox. URL: https://emotiv.gitbook.io/emotivbci-node-red-toolbox/node-descriptions-and-use

- Chavdarov, T. Tanev, V. Pavlov, Patent application 111362, „Walking Robot”, Published summary – Bulletin No. 6, 30.06.2014, page 11, in Bulgarian, http://www.bpo.bg/images/stories/buletini/binder-2014-06.pdf

- Chavdarov, B. Naydenov, S. Kostova, A. Krastev, A. Lekova, “Development and Applications of a 3D Printed Walking Robot – Big-Foot”, 2018 26th International Conference on Software, Telecommunications and Computer Networks (SoftCOM), https://ieeexplore.ieee.org/document/8555843/references#references

- Create and execute a number of Mental Commands in EMOTIV. URL: https://emotiv.zendesk.com/hc/en-us/articles/201216335-Training-Mental-Commands

- Understanding the Performance Metrics Detection Suite. URL: https://emotiv.zendesk.com/hc/en-us/articles/201444095-Understanding-the-Performance-Metrics-Detection-Suite

- Soto-Icaza , Vargas L., Aboitiz F., Billeke P, Beta oscillations precede joint attention and correlate with mentalization in typical development and autism, Cortex, Volume 113, 2019, Pages 210-228

- Billeke, P., Zamorano, F., Cosmelli, D., & Aboitiz, F. (2013). Oscillatory brain activity correlates with risk perception and predicts social decisions. Cerebral Cortex (New York N.Y. 1991), 23(12), pp. 2872-2883

- Park, J., Kim, H., Sohn, J.-W., Choi, J., & Kim, S.-P. EEG beta oscillations in the temporoparietal area related to the accuracy in estimating others’ preference. Frontiers in Human Neuroscience, 12(February). 2018

- Gert Pfurtscheller, F. H. Lopes da Silva. Functional Brain Imaging. Hans Huber Publishers, 1988, 264 pages.

- Thatcher, R. W., McAlaster, R., Lester, M. L., Horst, R. L., & Cantor, D. S. (1983). Hemispheric EEG asymmetries related to cognitive functioning in children. In A. Perecuman, Cognitive Processing in the Right Hemisphere (pp. 125–145). New York: Academic Press.

- Klimesch W, Doppelmayr M, Russegger H, Pachinger T. Theta band power in the human scalp EEG and the encoding of new information. NeuroReport 1996;7:1235-1240.

- Chavdarov, Patent application No 112346, “3D printed joint between a shaft and a link”, Published summary – Bulletin 01.2 31.01.2018., page 13-14, in Bulgarian http://www.bpo.bg/images/stories/buletini/binder-2018-01-31.pdf
