ITSM Software Ranking for Small Medium Enterprises Based on ITIL V3 Quick Win Criteria using Fuzzy SIR Method

Volume 4, Issue 2, Page No 288-298, 2019

Author’s Name: Husni Teja Sukmana1, Luh Kesuma Wardhani1, Sarah Khairunnisa1, Kyung Oh Lee2,a), Risa Wati3


1UIN Syarif Hidayatullah Jakarta, Information Technology, Science and Technology Faculty, 15412, Indonesia

2Sun Moon University, Computer Engineering, 31460, South Korea

3Universitas Bina Sarana Informatika, Jakarta 12450, Indonesia

a)Author to whom correspondence should be addressed. E-mail: leeko2002@naver.com

Adv. Sci. Technol. Eng. Syst. J. 4(2), 288-298 (2019); DOI: 10.25046/aj040237


Keywords: IT Service Management, IT Infrastructure Library, ITIL Quick-Win Criteria, Small Medium Enterprises (SMEs), Fuzzy SIR


A wide range of software, both open source and commercial, supports the implementation of IT Service Management (ITSM) in a company. Companies need guidance in choosing among these products, especially small and medium enterprises (SMEs) with limited human and financial resources. In this study, we evaluate ITSM software (OTRS, ITOP, and SDP) that is suitable for small and medium-sized companies. After reviewing various candidate criteria, we chose the quick-win criteria from ITIL V3, one of the most widely used sets of best practices in ITSM. The ranking method is Fuzzy SIR (Superiority and Inferiority Ranking), applied to quantitative assessment data collected from experts who have used ITSM software and hold certificates in the field of ITSM. The results show that OTRS is the best software with a value of 0.86, SDP is in second place with a value of 0.77, and ITOP is in last place with a value of 0.04.

Received: 11 February 2019, Accepted: 03 April 2019, Published Online: 08 April 2019

1. Introduction

IT Service Management (ITSM) frameworks are widely used to increase the value of the services a company delivers to its stakeholders. ITSM provides a framework for structuring IT operations that enables organizations to provide quality IT services, meet business needs, and adhere to service level agreements (SLAs) [1]. Various ITSM frameworks have been developed to provide guidelines and best practices that help managers improve IT operations. These frameworks fall into two groups. The first consists of vendor frameworks such as MOF 4.1 (Microsoft) and ITUP (IBM). The second consists of frameworks created by organizations and communities, including ITIL, which was pioneered by the ITSM Forum (itSMF), CMMI-SVC by the Software Engineering Institute (SEI), COBIT from ISACA, and ITSM-related standards published by the International Organization for Standardization (ISO), such as ISO 20000-1 [2]. Among these frameworks, ITIL is currently the most influential and popular. In support of this, a 2017 itSMF survey reported in [3] found that, among the surveyed frameworks (ITIL, ISO/IEC 20000, DevOps, Service Integration and Management, and Lean), ITIL was the one most widely adopted by respondents.

To support the implementation of ITSM, many service providers have built applications based on the ITSM framework and ITIL V3 best practices. The ITSM software industry is one of the fastest growing sectors of the computer software industry and now includes hundreds of ITSM software solutions [4]. According to a Gartner report, these include Samanage Service Desk, SolarWinds Web Help Desk, Freshservice, Zendesk, Manage Engine Service Desk Plus, Spiceworks, and JIRA Service Desk. In addition, several companies have developed open source ITSM software, including Combodo ITOP, OTRS, CITSMART, Project Open, OCS Inventory NG, and I-DOIT. Each product has its advantages and disadvantages. A method is therefore needed to determine which application suits a given company, especially a small or medium enterprise (SME), so that the investment in implementing the application is in line with the results obtained.

Research on ranking ITSM software has been carried out in [1], [2], [5], [6]. These studies differ in three respects: the software ranked, the criteria used, and the decision-making method. The methods include AHP [2], Fuzzy TOPSIS [1], and Fuzzy SIR [5]. In terms of software selection, two studies rank open source software [2], [6], and two do not name the software studied [1], [5]. The fundamental difference between these studies lies in the selection of criteria. The studies in [1], [5] used all the criteria from ITIL V.3, while [2] used criteria from the ITIL V.3 service operation phase. The study in [6] considered broader criteria covering organizational, financial, end-user risk, and technical risk aspects.

The choice of criteria is important, particularly in relation to the type of company that will implement the software. Companies whose business is IT services, such as telecommunications companies, should use the full ITSM criteria, while small and medium enterprises can begin implementation with a few important ITIL V.3 processes. Small-Medium Enterprises (SMEs) are businesses whose revenues, assets, or number of employees fall below a certain threshold. SMEs play a significant role in emerging economies, where they are reported to create 80% of new jobs [7], [8]. According to [9], SMEs commonly face three main problems: difficulty in hiring staff, high manpower costs, and increasing business competition. Certain processes therefore need to be chosen so that ITSM implementation matches the capacities of these SMEs.

In this study, we take a different perspective from previous studies on the selection of criteria for small and medium enterprises. We obtained 9 criteria from the quick-win processes of ITIL V.3 and use them as ranking criteria. To rank the software, we used the Fuzzy Superiority and Inferiority Ranking (Fuzzy SIR) method. The SIR method was originally developed by Xiaozhan Xu in 2001 [10].

2. Related Works

2.1. The Evaluation Methods

In this research, we used the Fuzzy SIR (Superiority and Inferiority Ranking) method based on [5]; the underlying SIR method was initially developed by Xiaozhan Xu in 2001 [10]. The research in [12] explains the differences between several MCDM methods. The Analytical Hierarchy Process (AHP) [11] and fuzzy model methods can only handle ordinal data. Neither provides preference structures for decision making, a technique that can mimic the decision makers' mindset in describing the nature of the criteria. ELECTRE III [12] provides only a single preference structure, with no other options for criteria of a different nature.

In contrast, the SIR method [10] can process both cardinal and ordinal data. It also provides six different preference structures and incorporates outranking rationale to deal with the “poor” true-criteria preference structure. Therefore, the SIR method provides more information to represent decision-making preferences for each decision criterion. Table 1 shows a comparison of several MCDM methods.

Table 1. Comparison of MCDM Methods

| Characteristic | AHP | ELECTRE III | Fuzzy Evaluation System | PROMETHEE | SIR METHOD |

| Handle real data? | No | Yes | No | Yes | Yes |

| Different weight between criteria? | Yes | Yes | No | No | Yes |

| Provide multi preferences structure? | No | No | No | Yes | Yes |

| Best choice? | No | No | No | No | Yes |

According to the research of [13], some of the advantages of the SIR method include:

- Provide scientific methods for alternative selection processes.

- Provide a user-friendly and multi-criteria decision-making model for practitioners.

- Improve the alternative selection process where all factors that affect performance will be considered.

- Avoid common traps of other decision-making models in the alternative selection, such as oversimplifying decision criteria.

The SIR technique is a logical model that is easy to understand. It enables all decision makers to reason consistently and can improve accuracy and reliability in the selection process. Overall performance can thus be improved by choosing appropriate software [13]. According to [5], citing [14], one limitation of the traditional Superiority and Inferiority Ranking (SIR) technique is its use of crisp values in the evaluation process. Some criteria are difficult to measure with crisp values, so they are often neglected during evaluation. In many real cases, however, the human preference model is uncertain, and decision makers are unable to assign crisp values to their judgments. Decision makers have difficulty handling real-world uncertainty in the traditional MCDM approach and prefer interval judgments to crisp values. Fuzzy SIR is therefore a suitable method for solving ranking problems [15]. For this reason, this study uses the traditional SIR method extended to uncertain conditions.

2.2. ITSM Selection Criteria

Several studies have compared ITSM software, including [1], [2], [5], [16]–[19]. These studies share the same purpose, namely to evaluate and rank software based on ITSM criteria. They differ in the selection of criteria, the software evaluated, the evaluation method, and the intended users of the evaluation results.

For example, studies [1] and [5] used the full set of criteria from ITIL V.3, covering service strategy, service design, service transition, service operation, and continual service improvement. They also added several non-functional criteria, including portability, platforms, and security. Studies [18], [19] use four main criteria in the form of risk factors. Financial risk has two attributes, new business opportunity and switching costs. Organizational risk covers training, user investment, and top management support. End-user risk covers functionality, usability, quality, and usefulness. Technical risk covers maturity, interoperability, communication support, documentation, security, and technical environment.

In a previous study [2], the authors evaluated several ITSM software products using only service operation criteria, which consist of request fulfillment, incident management, problem management, and event management. The purpose of that study was to help new companies or organizations that want to adopt ITSM decide which software to select from the many open source products on the market. We assume that such companies do not need to apply all the criteria, because they usually fall into the category of small and medium enterprises, which are typically constrained by a shortage of IT experts and funds and some of which do not treat IT as their main business.

More detailed information on the criteria, the software evaluated, and the evaluation methods of previous studies can be seen in Table 2. Our research uses criteria different from those of previous research, which we call the quick-win criteria. These criteria are taken from companies that have only recently begun to implement IT Service Management.

Table 2. Tool Selection Criteria

| Journal | Criteria | Method | Tools |

| [1], [5] | 46 criteria (functional and non-functional) | Fuzzy TOPSIS [1], Fuzzy SIR [5] | ITSM Tools 1-5 |

| [18], [19] | 1. Financial 2. Organization 3. End user 4. Technical | Multiple attribute decision making (MADM) | ITOP, IDOIT, OTRS |

| [17] | 1. Product Functionality 2. Requirements for Free and Open Source Project 3. Specifications 4. User friendliness | Multiple-criteria decision-making (MCDM) | Zenos, Nagios |

| [2] | ITSM Service Operation: 1. Request Fulfillment 2. Incident management 3. Problem Management 4. Event Management | Analytical Hierarchy Process (AHP) | ITOP, IDOIT, OTRS |

| [16] | | Multiple Criteria Decision Analysis (MCDA) | |

3. Research Method

3.1. Research stages

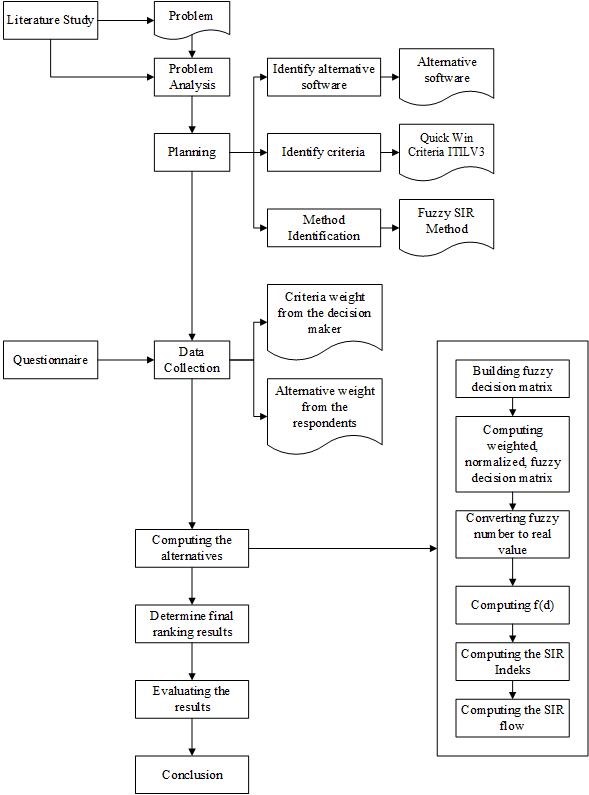

The overall research stages, shown in Figure 1, consist of 5 steps.

- First, we conducted a literature study. This phase analyzes existing problems related to ITSM by comparing similar studies. From the references obtained, we gathered the information needed to prepare the theoretical foundation and determine the method.

- Second, we planned the study by identifying the alternative software, identifying ITSM criteria for SMEs, and choosing the selection method.

- Third, we collected the data using questionnaires to obtain the weights of the criteria and the alternatives.

- Fourth, we carried out the evaluation calculation using the Fuzzy SIR method.

- Fifth, we evaluated and analyzed the results and ended with a summary.

3.2. Research sample and location

We used a quantitative approach, distributing questionnaires for two purposes. The first questionnaire determines the weight of each quick-win criterion. Its respondents (the decision makers) were five ITSM experts who had participated in ITIL V3 training, held ITIL V3 certificates, and worked at companies in Indonesia that had implemented ITIL V3 best practices. The second questionnaire, on the weights of the alternatives, was answered by ten respondents who have worked with ITSM software. We conducted the survey online using Google Forms and distributed the questionnaire link to the appropriate respondents through social media such as WhatsApp and LinkedIn.

3.3. ITSM Software Evaluation Method

To rank the software, we use the Fuzzy SIR method, a modification of the classic SIR method. The classic SIR method uses crisp values in the evaluation process. For ITSM software ranking, the criteria weights were obtained from experts in this area. However, human preferences are sometimes uncertain, so not all expert judgments on ITSM criteria can be measured using crisp values [1]. Fuzzy logic, by its nature, can process criteria with uncertain values. Combining fuzzy logic with SIR is therefore a suitable solution for ITSM software ranking.

The authors of [5] use Triangular Fuzzy Numbers (TFN) for fuzzy SIR calculations because they are easy to compute with. Modelling with triangular fuzzy numbers has also been shown to be an effective way to formulate decision problems when the available information is subjective and inaccurate. In [20]–[22], the triangular membership function shows better steady-state behavior than the Gaussian fuzzy number. In this research, we calculate Fuzzy SIR by following equations (1)-(20) given in [5].

4. Quick Win Selection Criteria

To identify ITSM software selection criteria, we conducted a literature study of several books, research journals, and a global survey from itSMF. The criteria generated in this research correspond to processes that exist in ITIL V3. The studies in [1] and [5] both use 46 criteria to rank ITSM software, grouped into functional and non-functional criteria. The functional criteria are based on ITIL V3 and consist of 5 main criteria, taken from the 5 phases of the ITIL V3 lifecycle: Service Strategy, Service Design, Service Transition, Service Operation, and Continual Service Improvement. The sub-criteria are taken from the functional processes of each phase. The non-functional criteria consist of 4 main criteria: Quality, Technical, Vendor, and Implementation.

In the study in [2], the ranking criteria are based on the ITIL V3 service operation processes: Event Management, Incident Management, Problem Management, and Request Fulfillment. Service operation was chosen as the source of criteria because many new organizations want to implement ITSM and almost all of them start from service operation.

According to the book “ITIL V3: Where to Start & How To Achieve Quick Wins” [23], written by an IT management consultant from Pink Elephant, implementing ITIL is not easy and can sometimes take several years or more. A plan is therefore needed to achieve “quick wins”. Pink Elephant recommends starting with what are called ‘Customer Fulfillment’ processes, such as Incident Management, Service Level Management, and Change Management, which have daily interaction with and visibility to the business. Suggested processes include Incident Management, Service Desk, Change Management, Service Catalog Management, Problem Management, and Service Asset & Configuration Management (SACM).

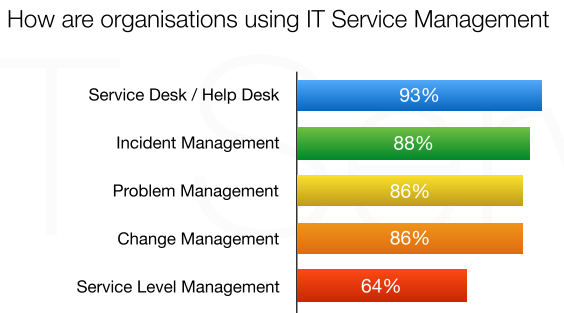

Based on the global survey results cited by [3] in 2016, 5 sub-processes in the ITIL V3 lifecycle are widely used by organizations implementing ITSM: Service Desk, Incident Management, Problem Management, Change Management, and Service Level Management. The survey percentages are shown in Figure 2.

According to the survey results cited by [3], three ITIL V3 lifecycle phases are the most widely used by companies: Service Operation, Service Transition, and Service Design. Based on the literature reviewed above, the criteria used in this study are therefore taken from these three phases as quick-win criteria to be implemented in Small-Medium Enterprises (SMEs). The criteria are summarized in Table 3.

Figure 2 How are organizations using IT Service Management. Source: [3]


Table 3. Quick Win Criteria in ITIL V3

| No | Criteria | Main Phase in ITIL V3 |

| C1 | Service Desk | Service Operation Function |

| C2 | Event Management | Service Operation Process |

| C3 | Incident Management | Service Operation Process |

| C4 | Problem Management | Service Operation Process |

| C5 | Request Fulfilment | Service Operation Process |

| C6 | Change Management | Service Transition Process |

| C7 | Service Catalogue Management | Service Design Process |

| C8 | Service Asset and Configuration Management | Service Transition Process |

| C9 | Service Level Management | Service Design Process |

5. Fuzzy SIR implementation in Quick Win Criteria

This section describes the implementation of experiments conducted by the author, starting from the stage of problem analysis, collecting primary data, determining criteria and weighting criteria, determining alternatives and calculations using the Fuzzy SIR method.

5.1. Problem Analysis

To support the implementation of ITSM in a company, appropriate ITSM software is needed. Various kinds of software are currently available, ranging from free (open source) software to paid software. For large and stable companies, purchasing paid software may not be much of a problem. For companies in which IT is inseparable from their other services, such as those in the banking industry, purchasing paid software can also help transfer risk, both in integrating with existing systems and in handling problems or bugs that occur. However, because paid software is quite expensive, Small Medium Enterprises (SMEs) that want to implement ITSM may be better served by open source software.

The questions are therefore: how can SMEs that want to implement ITSM choose appropriate open source software? What criteria are appropriate for evaluating ITSM software? Which open source ITSM software ranks best? This research was designed to answer these questions.

5.2. Planning

In this phase, we did three things: identified the alternative software, identified the criteria, and identified the method. To identify alternative software, we conducted a literature study on ITSM software that is widely used today. In [1] and [5], the software ranking was applied at a local company in Iran engaged in IT services to analyse 6 software products, namely ITSM I, ITSM II, ITSM III, ITSM IV, ITSM V, and ITSM VI. In [2], 3 open source software products were studied using the AHP method, namely OTRS, ITOP, and IDOIT, chosen because they are the most widely used ITSM software. According to the ITSM daily report, the 6 best ITSM software solutions include ITOP, OTRS, CITSMART, Project Open, OCS Inventory NG, and I-doit. In addition, some of the best commercial alternatives are ManageEngine Service Desk Plus, Freshservice, and Samanage. We therefore made further observations. According to Gartner, Manage Engine Service Desk Plus is one of the best ITSM software products based on user reviews, with a rating of 4.3 out of a maximum of 5. We therefore decided to conduct the ITSM software selection research on 3 software products: OTRS, ITOP, and Manage Engine Service Desk Plus (SDP). The criteria used for software ranking are listed in Table 3. The ranking method is fuzzy SIR, discussed in Section 3.3.

Table 4. Criteria measurement scale

| Linguistic Variable | Description |

| Not Important (NI) | Activities in the process have no effect on the service. |

| Less Important (LI) | Activities in the process have less influence on the service. |

| Enough (E) | Activities in the process have an effect on the service. |

| Important (I) | Activities in the process affect the service. |

| Very Important (VI) | Activities in the process greatly affect the service. |

Table 5. Software measurement scale

| Linguistic Variable | Description |

| Very Bad (VB) | 1. Not able to minimize the adverse effects that will occur. 2. Not able to prevent when an incident occurs. |

| Bad (B) | 1. Does not explain the relationship between events that occur in software infrastructure. 2. Cannot detect incidents or instructions (notifications). |

| Average (A) | 1. Able to provide and examine incidents that occur. 2. Need settings, so that it is rather difficult for users. |

| Good (G) | 1. Good processing, because of the detail and complexity. 2. There is an escalation process. |

| Very Good (VG) | 1. In its use, it is easier for users. 2. The request process can be done by an external or service desk. 3. Able to manage incidents (minimize adverse effects). 4. Able to diagnose the cause of the incident. 5. Able to provide requests for services from users. |

5.3. Data Collection

At this stage, we collect the primary data that will be processed using the chosen method. Before collecting data, we first determine the measurement scale; this study uses a Likert scale. The measurement scales are given in Tables 4 and 5.

- Criteria measurement scale

The criteria weights used in this study were obtained from the decision makers described in Section 3.2. The weighting shows which criteria are most influential in this study. The weight given to each criterion by the decision makers is summarized in Appendix 1.

- Software measurement scale

The weights of the alternative software are used to rank the alternatives based on the quick-win criteria in ITIL V3. The weight given to each alternative software by the respondents is summarized in Appendix 1.

5.4. Computing the Alternatives

To calculate the software ratings, we use the Fuzzy SIR method. Fuzzy logic, because it characterizes a case with multiple values, offers wider and richer resources for covering such cases.

- Building Fuzzy Decision Matrix

At this stage, the weights of the alternative software obtained from the respondents' questionnaires are converted into Triangular Fuzzy Numbers (TFN). TFNs are used because they are simple and useful for representing and processing information.

Before forming the fuzzy decision matrix, we first determine the TFN scale for each rating weight. On the TFN scale, each value has three points, Lower (l), Median (m), and Upper (u), which capture the uncertainty in the assessment given.

- Criteria measurement scale

Table 6 TFN scale for measuring criteria

| Scale | Linguistic Variable | Fuzzy Number | Membership Function |

| 1 | Not Important (NI) | (0.00, 0.00, 0.25) | |

| 2 | Less Important (LI) | (0.00, 0.25, 0.50) | |

| 3 | Enough (E) | (0.25, 0.50, 0.75) | |

| 4 | Important (I) | (0.50, 0.75, 1.00) | |

| 5 | Very Important (VI) | (0.75, 1.00, 1.00) | |

- Software measurement scale

Table 7 TFN scale for software measurement

| Scale | Linguistic Variable | Fuzzy Number | Membership Function |

| 1 | Very Bad (VB) | (0.00, 0.00, 0.25) | |

| 2 | Bad (B) | (0.00, 0.25, 0.50) | |

| 3 | Average (A) | (0.25, 0.50, 0.75) | |

| 4 | Good (G) | (0.50, 0.75, 1.00) | |

| 5 | Very Good (VG) | (0.75, 1.00, 1.00) | |

After determining the fuzzy scale, we convert the linguistic values in the questionnaire (Appendix 1) into triangular fuzzy numbers according to equation (7) of [5], using the fuzzy values (l, m, u) in Tables 6 and 7. Before the next stage, the data must be aggregated; we use equation (8) as the aggregation procedure.
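The conversion and aggregation step can be sketched in Python. This is a minimal illustration, not the authors' implementation: the scale comes from Table 6 and the scores from Appendix 1, but equation (8) of [5] is not reproduced in this paper, so the component-wise arithmetic mean used in `aggregate` is an assumption, and the names `TFN_SCALE`, `to_tfn`, and `aggregate` are hypothetical.

```python
from statistics import mean

# TFN scale from Table 6: Likert score -> (lower, median, upper).
TFN_SCALE = {
    1: (0.00, 0.00, 0.25),
    2: (0.00, 0.25, 0.50),
    3: (0.25, 0.50, 0.75),
    4: (0.50, 0.75, 1.00),
    5: (0.75, 1.00, 1.00),
}


def to_tfn(score):
    """Map a Likert score (1-5) to its triangular fuzzy number."""
    return TFN_SCALE[score]


def aggregate(scores):
    """Aggregate several Likert scores into one TFN.

    ASSUMPTION: component-wise arithmetic mean; the paper's actual
    aggregation rule (equation (8) of [5]) may differ.
    """
    tfns = [to_tfn(s) for s in scores]
    return tuple(mean(component) for component in zip(*tfns))


# Scores given by DM1..DM5 to criterion C5 (Request Fulfilment), Appendix 1.
c5_scores = [5, 5, 3, 3, 2]
c5_weight = aggregate(c5_scores)
print(c5_weight)
```

Under this assumed rule, the five scores for C5 aggregate to approximately (0.40, 0.65, 0.80). Other aggregation rules, such as taking the minimum lower bound and maximum upper bound, would give a wider fuzzy number.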

- Computing Weighted, Normalized Fuzzy Decision Matrix

At this stage, we multiply the triangular fuzzy numbers by the criteria weights determined by the decision makers. The results can be seen in Appendix 2.

- Converting fuzzy numbers to real values

Equation (10) is used for the defuzzification process.

The research in [5] uses the Gaussian criterion, which it regards as the best nonlinear function; nonlinear functions are more universal than linear ones. We therefore use equation (11) to calculate f(d).
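A possible form of these two steps is sketched below. Equations (10) and (11) of [5] are not reproduced here, so both functions are assumptions: `defuzzify` takes the centroid (l + m + u) / 3 of a triangular fuzzy number, and `gaussian_preference` is the Gaussian criterion as commonly defined for outranking methods, f(d) = 1 - exp(-d^2 / (2 sigma^2)) for d > 0 and 0 otherwise. The function names and the value of `sigma` are hypothetical.

```python
import math


def defuzzify(tfn):
    """Convert a TFN (l, m, u) to a crisp value.

    ASSUMPTION: centroid of the triangle; equation (10) of [5] may
    use a different defuzzification formula.
    """
    l, m, u = tfn
    return (l + m + u) / 3.0


def gaussian_preference(d, sigma=0.2):
    """Gaussian criterion f(d) for a performance difference d.

    ASSUMPTION: sigma = 0.2 is an illustrative value, not one taken
    from the paper.
    """
    if d <= 0:
        return 0.0  # no preference when the alternative is not better
    return 1.0 - math.exp(-(d * d) / (2.0 * sigma * sigma))


# Example: compare two defuzzified ratings.
good = defuzzify((0.50, 0.75, 1.00))     # "Good" on the Table 7 scale
average = defuzzify((0.25, 0.50, 0.75))  # "Average" on the Table 7 scale
print(gaussian_preference(good - average))
print(gaussian_preference(average - good))
```

The function returns 0 for non-positive differences and increases smoothly towards 1 as the difference grows, so small differences count for little and large ones saturate.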

- Computing the SIR Index

The SIR index consists of two parts: the superiority index and the inferiority index. Equations (12) and (13) are used to calculate them. The superiority and inferiority indices form two matrices, the superiority matrix and the inferiority matrix.

- Superiority Matrix

- Inferiority Matrix

- Computing the SIR Flow

Calculating the SIR flow requires an aggregation function; equations (16) and (17) are used in this study. The results are shown in Table 8.

Table 8. S-flow and I-flow Matrix

| Software | S-flow | I-flow |

| OTRS | 0.957322829 | 0.151767908 |

| ITOP | 0.065921576 | 1.497607927 |

| SDP | 0.876659551 | 0.250528121 |
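The index and flow computations can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the superiority index of an alternative on a criterion sums the preference f(d) over all alternatives it beats, the inferiority index sums it over all alternatives that beat it, and the flows aggregate the indices by a weighted sum over criteria. The Gaussian preference function, its `sigma`, the function name `sir_flows`, and the scores and weights below are all hypothetical.

```python
import math


def gaussian_preference(d, sigma=0.2):
    """Assumed Gaussian criterion; sigma is a hypothetical parameter."""
    if d <= 0:
        return 0.0
    return 1.0 - math.exp(-(d * d) / (2.0 * sigma * sigma))


def sir_flows(scores, weights, pref=gaussian_preference):
    """Return (S-flow, I-flow) lists.

    scores[i][j] is the crisp score of alternative i on criterion j.
    ASSUMPTION: flows are weighted sums of the per-criterion indices.
    """
    total = sum(weights)
    norm_w = [w / total for w in weights]
    n = len(scores)
    s_flow, i_flow = [], []
    for i in range(n):
        s_total = 0.0
        i_total = 0.0
        for j, w in enumerate(norm_w):
            # Superiority index: how strongly i beats the others on j.
            s_index = sum(pref(scores[i][j] - scores[k][j])
                          for k in range(n) if k != i)
            # Inferiority index: how strongly the others beat i on j.
            i_index = sum(pref(scores[k][j] - scores[i][j])
                          for k in range(n) if k != i)
            s_total += w * s_index
            i_total += w * i_index
        s_flow.append(s_total)
        i_flow.append(i_total)
    return s_flow, i_flow


# Hypothetical crisp scores: three alternatives, two criteria.
scores = [
    [0.70, 0.65],
    [0.40, 0.45],
    [0.60, 0.65],
]
weights = [0.6, 0.4]
s_flow, i_flow = sir_flows(scores, weights)
print(s_flow)
print(i_flow)
```

With a weighted-sum aggregation, the S-flows and the I-flows always add up to the same total, because every pairwise preference is counted once as superiority and once as inferiority. The values in Table 8 satisfy this property (both columns sum to 1.899903956), which is consistent with, though not proof of, this form of aggregation.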

6. ITSM S/W Evaluation Result

The alternative ITSM software products are ranked by their superiority flow and inferiority flow. The superiority flow (S-flow) measures how globally superior an alternative is to all the others, and the inferiority flow (I-flow) measures how globally inferior it is to all the others. Therefore, the higher the S-flow and the lower the I-flow, the better the alternative.

Based on Table 8, we obtain two complete rankings of the alternatives as follows:

Note: The two complete rankings are separate rankings, one from the S-flow and one from the I-flow. They are then combined into a partial ranking.

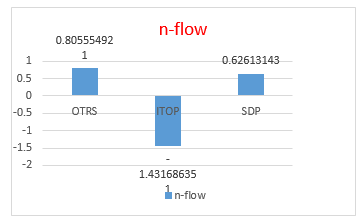

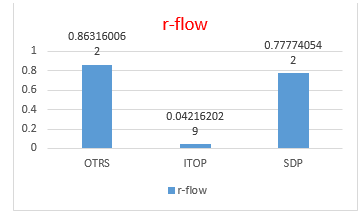

The partial ranking described above cannot yet be used to determine the final ranking of this study. To obtain a complete ranking, several synthesis flows can be used. This study uses the n-flow (like the net flow in the PROMETHEE method) and the r-flow (like the relative flow in the TOPSIS method). Either of the two synthesis flows can be used to determine the final ranking. Equations (19) and (20) are used for the final ranking. The tables below show the calculation of the n-flow and r-flow.

Table 9. Calculation of n-flow

| Software | n-flow | Result |

| OTRS | 0.957322829 – 0.151767908 | 0.805554921 |

| ITOP | 0.065921576 – 1.497607927 | -1.431686351 |

| SDP | 0.876659551 – 0.250528121 | 0.62613143 |

Table 10. Calculation of r-flow

| Software | r-flow | Result |

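| OTRS | 0.957322829 / (0.957322829 + 0.151767908) | 0.863160062 |

| ITOP | 0.065921576 / (0.065921576 + 1.497607927) | 0.042162029 |

| SDP | 0.876659551 / (0.876659551 + 0.250528121) | 0.777740542 |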

As Tables 9 and 10 show, the n-flow can take negative as well as positive values, whereas the r-flow always lies between 0 and 1. The final results of the ITSM software rating are given below.

Table 11 Final results of software ranking

| Software | n-flow | r-flow |

| OTRS | 0.805554921 | 0.863160062 |

| ITOP | -1.431686351 | 0.042162029 |

| SDP | 0.62613143 | 0.777740542 |

From both sets of values, the following can be concluded:

OTRS ranks first in the ITSM software ranking analysis based on the ITIL V3 quick-win process criteria.

The ranking in Table 11 shows that OTRS is superior to ITOP and SDP, with an n-flow of 0.80 and an r-flow of 0.86. This result shows that OTRS is the best software when viewed from the ITIL V3 criteria in the service operation process. SDP is in second position with an n-flow of 0.62 and an r-flow of 0.77. ITOP is ranked last with an n-flow of -1.43 and an r-flow of 0.04.
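The n-flow and r-flow values in Table 11 can be reproduced from the S-flow and I-flow values in Table 8. In the sketch below, the formulas n = S - I and r = S / (S + I) are inferred from the tabulated numbers, since equations (19) and (20) of [5] are not reproduced in this paper; the names `TABLE_8`, `n_flow`, and `r_flow` are hypothetical.

```python
# S-flow and I-flow values copied from Table 8.
TABLE_8 = {
    "OTRS": (0.957322829, 0.151767908),
    "ITOP": (0.065921576, 1.497607927),
    "SDP": (0.876659551, 0.250528121),
}


def n_flow(s, i):
    """Net flow: superiority flow minus inferiority flow."""
    return s - i


def r_flow(s, i):
    """Relative flow: share of the superiority flow in the total flow."""
    return s / (s + i)


results = {name: (n_flow(s, i), r_flow(s, i))
           for name, (s, i) in TABLE_8.items()}

# Rank from best to worst by each synthesis flow.
ranking_by_n = sorted(results, key=lambda name: results[name][0], reverse=True)
ranking_by_r = sorted(results, key=lambda name: results[name][1], reverse=True)

for name in ranking_by_r:
    n, r = results[name]
    print(f"{name}: n-flow = {n:.9f}, r-flow = {r:.9f}")
```

Both synthesis flows give the same order here (OTRS, SDP, ITOP), so the choice between them does not affect the final ranking for this data set.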

7. Conclusion and Future Work

In this research, we used the fuzzy SIR (Superiority and Inferiority Ranking) method to rank ITSM software. The software evaluated in this study was OTRS, ITOP, and Service Desk Plus (SDP). The evaluation used criteria taken from the ITIL V.3 phases that are most widely used by companies: Service Operation, Service Transition, and Service Design. We used 9 quick-win criteria, namely Service Desk, Event Management, Incident Management, Problem Management, Request Fulfilment, Change Management, Service Catalogue Management, Service Asset and Configuration Management, and Service Level Management.

OTRS has a value of 0.86, ITOP has a value of 0.04, and Service Desk Plus (SDP) has a value of 0.77. The final ranking therefore shows that OTRS is the best software that meets the ITIL V3 criteria in the service operation process, SDP is in second place, and ITOP is in last position.

To improve on these results, we suggest adding criteria for ranking the alternative software. The criteria can be adjusted to the needs of the company so that the ranking better meets the needs of users (in this case Small-Medium Enterprises (SMEs)) and helps improve company performance. In addition, this research can be developed into an application that helps decision makers choose ITSM software in accordance with the vision and mission of the company.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

This work was supported by the Research-Based National Publication Program, the Center of Research and Publication, Syarif Hidayatullah State Islamic University Jakarta, Indonesia.

APPENDIX 1 Questionnaire Data

- The weight of the criteria from the decision maker (DM).

| No | DM | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 |

| 1 | DM1 | 5 | 4 | 5 | 4 | 5 | 4 | 5 | 4 | 4 |

| 2 | DM2 | 5 | 5 | 5 | 3 | 5 | 5 | 5 | 5 | 5 |

| 3 | DM3 | 5 | 4 | 4 | 4 | 3 | 5 | 5 | 3 | 5 |

| 4 | DM4 | 5 | 5 | 5 | 5 | 3 | 5 | 4 | 3 | 5 |

| 5 | DM5 | 5 | 4 | 4 | 5 | 2 | 4 | 4 | 4 | 5 |

- The weights of the alternatives from the respondents.

| | Respondent | 1 | | | 2 | | | 3 | | | 4 | | | 5 | | |

| NO | Quick Win Process | OTRS | ITOP | SDP | OTRS | ITOP | SDP | OTRS | ITOP | SDP | OTRS | ITOP | SDP | OTRS | ITOP | SDP |

| 1 | Service Desk | 4 | 2 | 3 | 4 | 3 | 3 | 3 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 3 |

| 2 | Event Management | 3 | 2 | 3 | 4 | 3 | 2 | 3 | 3 | 4 | 4 | 4 | 4 | 4 | 4 | 3 |

| 3 | Incident Management | 4 | 2 | 3 | 4 | 4 | 3 | 3 | 4 | 4 | 3 | 3 | 4 | 4 | 4 | 3 |

| 4 | Problem Management | 4 | 2 | 3 | 5 | 3 | 3 | 3 | 3 | 4 | 3 | 3 | 4 | 4 | 4 | 3 |

| 5 | Request Fulfillment | 3 | 3 | 2 | 3 | 3 | 4 | 3 | 4 | 4 | 3 | 3 | 4 | 4 | 4 | 3 |

| 6 | Change Management | 3 | 3 | 2 | 4 | 4 | 3 | 3 | 3 | 4 | 3 | 3 | 3 | 4 | 4 | 3 |

| 7 | Service Catalog Management | 3 | 3 | 2 | 3 | 3 | 3 | 3 | 4 | 4 | 3 | 4 | 3 | 4 | 4 | 3 |

| 8 | Service Asset and Configuration Management | 2 | 2 | 2 | 5 | 3 | 2 | 3 | 3 | 4 | 4 | 4 | 4 | 4 | 4 | 3 |

| 9 | Service Level Management | 3 | 2 | 3 | 4 | 2 | 3 | 3 | 4 | 4 | 3 | 4 | 4 | 4 | 4 | 3 |

| | USER? | NO | YES | YES | YES | YES | YES | NO | NO | YES | YES | YES | YES | YES | YES | NO |

Continued…

| | Respondent | 6 | | | 7 | | | 8 | | | 9 | | | 10 | | |

| NO | Quick Win Process | OTRS | ITOP | SDP | OTRS | ITOP | SDP | OTRS | ITOP | SDP | OTRS | ITOP | SDP | OTRS | ITOP | SDP |

| 1 | Service Desk | 4 | 3 | 5 | 3 | 2 | 4 | 5 | 3 | 4 | 4 | 4 | 4 | 5 | 4 | 5 |

| 2 | Event Management | 3 | 3 | 3 | 3 | 3 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 5 | 4 |

| 3 | Incident Management | 3 | 3 | 4 | 2 | 3 | 4 | 4 | 4 | 5 | 4 | 4 | 4 | 4 | 5 | 5 |

| 4 | Problem Management | 3 | 2 | 4 | 3 | 2 | 3 | 3 | 3 | 4 | 3 | 3 | 3 | 4 | 4 | 4 |

| 5 | Request Fulfillment | 5 | 3 | 3 | 3 | 3 | 4 | 4 | 5 | 4 | 4 | 4 | 4 | 4 | 5 | 4 |

| 6 | Change Management | 4 | 3 | 2 | 3 | 3 | 4 | 4 | 3 | 4 | 4 | 4 | 4 | 5 | 3 | 5 |

| 7 | Service Catalog Management | 3 | 4 | 3 | 3 | 3 | 4 | 4 | 3 | 4 | 4 | 4 | 3 | 4 | 3 | 5 |

| 8 | Service Asset and Configuration Management | 4 | 3 | 4 | 3 | 3 | 3 | 4 | 4 | 4 | 4 | 4 | 4 | 4 | 3 | 4 |

| 9 | Service Level Management | 4 | 3 | 4 | 3 | 3 | 4 | 4 | 4 | 4 | 3 | 4 | 4 | 5 | 3 | 4 |

| | USER? | NO | NO | YES | NO | NO | YES | YES | YES | YES | YES | YES | NO | YES | NO | YES |

APPENDIX 2 Fuzzy Decision Matrix

- Fuzzy Decision Matrix

| | C1 | | | C2 | | | C3 | | | C4 | | |

| OTRS | l | m | u | l | m | u | l | m | u | l | m | u |

| R1 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| R2 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.75 | 1.00 | 1.00 |

| R3 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| R4 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| R5 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| R6 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| R7 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 | 0.25 | 0.50 | 0.75 |

| R8 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| R9 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| R10 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| ITOP | l | m | u | l | m | u | l | m | u | l | m | u |

| R1 | 0.00 | 0.25 | 0.50 | 0.00 | 0.25 | 0.50 | 0.00 | 0.25 | 0.50 | 0.00 | 0.25 | 0.50 |

| R2 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| R3 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| R4 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| R5 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| R6 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 |

| R7 | 0.00 | 0.25 | 0.50 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 |

| R8 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| R9 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| R10 | 0.50 | 0.75 | 1.00 | 0.75 | 1.00 | 1.00 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 |

| SDP | l | m | u | l | m | u | l | m | u | l | m | u |

| R1 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| R2 | 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| R3 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| R4 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| R5 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| R6 | 0.75 | 1.00 | 1.00 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| R7 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| R8 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 |

| R9 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| R10 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 |

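Continued (criteria C5 to C9; rows follow the same order as above: OTRS R1 to R10, ITOP R1 to R10, SDP R1 to R10)

| C5 | | | C6 | | | C7 | | | C8 | | | C9 | | |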

| l | m | u | l | m | u | l | m | u | l | m | u | l | m | u |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 | 0.25 | 0.50 | 0.75 |

| 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 |

| 0.50 | 0.75 | 1.00 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.75 | 1.00 | 1.00 |

| l | m | u | l | m | u | l | m | u | l | m | u | l | m | u |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 | 0.00 | 0.25 | 0.50 |

| 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 |

| 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| 0.75 | 1.00 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.75 | 1.00 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| l | m | u | l | m | u | l | m | u | l | m | u | l | m | u |

| 0.00 | 0.25 | 0.50 | 0.00 | 0.25 | 0.50 | 0.00 | 0.25 | 0.50 | 0.00 | 0.25 | 0.50 | 0.25 | 0.50 | 0.75 |

| 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 | 0.25 | 0.50 | 0.75 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 | 0.25 | 0.50 | 0.75 |

| 0.25 | 0.50 | 0.75 | 0.00 | 0.25 | 0.50 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 | 0.25 | 0.50 | 0.75 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |

| 0.50 | 0.75 | 1.00 | 0.75 | 1.00 | 1.00 | 0.75 | 1.00 | 1.00 | 0.50 | 0.75 | 1.00 | 0.50 | 0.75 | 1.00 |
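Comparing the questionnaire scores in Appendix 1 with the rows above suggests a fixed mapping from each score to a triangular fuzzy number (l, m, u). The following Python sketch reproduces that mapping for one row. The name `SCALE` and the entry for score 1 are assumptions: score 1 never occurs in the questionnaire data, so its fuzzy number cannot be read off the matrix.

```python
# Questionnaire score -> triangular fuzzy number (l, m, u).
# Entries for scores 2-5 match the rows of the fuzzy decision matrix;
# the entry for score 1 is an assumption (it does not occur in the data).
SCALE = {
    1: (0.00, 0.00, 0.25),  # assumed
    2: (0.00, 0.25, 0.50),
    3: (0.25, 0.50, 0.75),
    4: (0.50, 0.75, 1.00),
    5: (0.75, 1.00, 1.00),
}


def to_tfn(score):
    """Look up the triangular fuzzy number for one questionnaire score."""
    return SCALE[score]


# Respondent 1, OTRS, criteria C1-C4 (scores taken from Appendix 1).
r1_otrs_scores = [4, 3, 4, 4]
r1_otrs_fuzzy = [to_tfn(s) for s in r1_otrs_scores]
print(r1_otrs_fuzzy)
```

The printed values correspond to row R1 of the OTRS block for C1 to C4.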

- Aggregated Fuzzy Decision Matrix

| | C1 | | | C2 | | | C3 | | | C4 | | |

| | l | m | u | l | m | u | l | m | u | l | m | u |

| OTRS | 0.25 | 0.75 | 1.00 | 0.25 | 0.65 | 1.00 | 0.00 | 0.63 | 1.00 | 0.25 | 0.63 | 1.00 |

| ITOP | 0.00 | 0.58 | 1.00 | 0.00 | 0.63 | 1.00 | 0.00 | 0.65 | 1.00 | 0.00 | 0.48 | 1.00 |

| SDP | 0.25 | 0.73 | 1.00 | 0.00 | 0.63 | 1.00 | 0.25 | 0.73 | 1.00 | 0.25 | 0.63 | 1.00 |

Continued…

| | C5 | | | C6 | | | C7 | | | C8 | | | C9 | | |

| | l | m | u | l | m | u | l | m | u | l | m | u | l | m | u |

| OTRS | 0.25 | 0.65 | 1.00 | 0.25 | 0.68 | 1.00 | 0.25 | 0.60 | 1.00 | 0.00 | 0.68 | 1.00 | 0.25 | 0.65 | 1.00 |

| ITOP | 0.25 | 0.68 | 1.00 | 0.25 | 0.58 | 1.00 | 0.25 | 0.63 | 1.00 | 0.00 | 0.58 | 1.00 | 0.00 | 0.58 | 1.00 |

| SDP | 0.00 | 0.65 | 1.00 | 0.00 | 0.60 | 1.00 | 0.00 | 0.60 | 1.00 | 0.00 | 0.60 | 1.00 | 0.25 | 0.68 | 1.00 |
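The aggregated values are consistent with combining the ten respondents' fuzzy ratings per criterion as (minimum of l, mean of m, maximum of u). The sketch below is a reconstruction from the appendix data under that assumption, not a procedure quoted from the study; `SCALE` and `aggregate` are illustrative names, and the entry for score 1 is omitted because that score does not occur in the questionnaire.

```python
# Questionnaire score -> triangular fuzzy number (l, m, u), as read off
# the fuzzy decision matrix (scores 2-5 only; score 1 does not occur).
SCALE = {
    2: (0.00, 0.25, 0.50),
    3: (0.25, 0.50, 0.75),
    4: (0.50, 0.75, 1.00),
    5: (0.75, 1.00, 1.00),
}


def aggregate(scores):
    """Aggregate scores as (min of l, mean of m, max of u)."""
    tfns = [SCALE[s] for s in scores]
    lower = min(t[0] for t in tfns)
    middle = sum(t[1] for t in tfns) / len(tfns)
    upper = max(t[2] for t in tfns)
    return (lower, middle, upper)


# OTRS, criterion C1 (Service Desk), respondents 1-10 from Appendix 1.
otrs_c1_scores = [4, 4, 3, 4, 4, 4, 3, 5, 4, 5]
otrs_c1 = aggregate(otrs_c1_scores)
print(otrs_c1)
```

The result corresponds to the OTRS entry for C1 in the aggregated matrix (0.25, 0.75, 1.00).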

- Weighted, Normalized Fuzzy Decision Matrix

| | C1 | | | C2 | | | C3 | | | C4 | | |

| | l | m | u | l | m | u | l | m | u | l | m | u |

| OTRS | 0.19 | 0.75 | 1.00 | 0.13 | 0.55 | 1.00 | 0.00 | 0.56 | 1.00 | 0.06 | 0.50 | 1.00 |

| ITOP | 0.00 | 0.58 | 1.00 | 0.00 | 0.53 | 1.00 | 0.00 | 0.59 | 1.00 | 0.00 | 0.38 | 1.00 |

| SDP | 0.19 | 0.73 | 1.00 | 0.00 | 0.53 | 1.00 | 0.13 | 0.65 | 1.00 | 0.06 | 0.50 | 1.00 |

Continued…

| | C5 | | | C6 | | | C7 | | | C8 | | | C9 | | |

| | l | m | u | l | m | u | l | m | u | l | m | u | l | m | u |

| OTRS | 0.00 | 0.42 | 1.00 | 0.13 | 0.61 | 1.00 | 0.13 | 0.54 | 1.00 | 0.00 | 0.47 | 1.00 | 0.13 | 0.62 | 1.00 |

| ITOP | 0.00 | 0.44 | 1.00 | 0.13 | 0.52 | 1.00 | 0.13 | 0.56 | 1.00 | 0.00 | 0.40 | 1.00 | 0.00 | 0.55 | 1.00 |

| SDP | 0.00 | 0.42 | 1.00 | 0.00 | 0.54 | 1.00 | 0.00 | 0.54 | 1.00 | 0.00 | 0.42 | 1.00 | 0.13 | 0.64 | 1.00 |
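The weighted values are consistent with multiplying each aggregated rating, component by component, by the aggregated fuzzy weight of its criterion, where the weight is built from the five decision makers' scores in the same (min, mean, max) way. The sketch below checks this for OTRS on C2. It is a reconstruction under that assumption; `aggregate` and `multiply` are illustrative names, and no separate normalization step is shown because the upper bounds are already 1.00.

```python
# Questionnaire score -> triangular fuzzy number (l, m, u), as read off
# the fuzzy decision matrix (scores 2-5 only; score 1 does not occur).
SCALE = {
    2: (0.00, 0.25, 0.50),
    3: (0.25, 0.50, 0.75),
    4: (0.50, 0.75, 1.00),
    5: (0.75, 1.00, 1.00),
}


def aggregate(scores):
    """Aggregate scores as (min of l, mean of m, max of u)."""
    tfns = [SCALE[s] for s in scores]
    return (
        min(t[0] for t in tfns),
        sum(t[1] for t in tfns) / len(tfns),
        max(t[2] for t in tfns),
    )


def multiply(a, b):
    """Component-wise product of two triangular fuzzy numbers."""
    return tuple(x * y for x, y in zip(a, b))


# Weights given to C2 by DM1-DM5 (Appendix 1).
c2_weight = aggregate([4, 5, 4, 5, 4])
# Aggregated OTRS rating for C2 (aggregated fuzzy decision matrix).
otrs_c2_rating = (0.25, 0.65, 1.00)
otrs_c2_weighted = multiply(otrs_c2_rating, c2_weight)
print(c2_weight, otrs_c2_weighted)
```

Rounded to two decimals, the product corresponds to the OTRS entry for C2 in the weighted matrix (0.13, 0.55, 1.00).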

References

- S. Rouhani and A. Z. Ravasan, “A Fuzzy TOPSIS based Approach for ITSM Software Selection,” Int. J. IT/Bus. Alignment Gov., vol. 5, no. 2, pp. 1–26, 2014, https://doi.org/10.4018/ijitbag.2014070101.

- H. T. Sukmana, L. K. Wardhani, R. Argantone, and K. Lee, “The Evaluation of ITSM Open Source Software for Small Medium Organizations Based on ITIL v.3 Criteria using AHP Method,” Int. J. Control Autom., vol. 10, no. 7, pp. 203–216, 2017, https://doi.org/10.14257/ijca.2017.10.7.17.

- J. Wulf, T. J. Winkler, and W. Brenner, “Measuring IT Service Management Capability: Scale Development and Empirical Validation,” in 12th International Conference on Wirtschaftsinformatik, 2015, pp. 630–644.

- E. Hubbert, P. O’Neill, and B. Echols, “Market Overview: IT Service Management Support Tools,” Cambridge, 2010.

- S. Rouhani, “A fuzzy superiority and inferiority ranking based approach for IT service management software selection,” Kybernetes, vol. 46, no. 4, pp. 728–746, 2017, https://doi.org/10.1108/K-05-2016-0116.

- M. Mora, “An Initial Classification of Open Source Software Tools for Supporting the IT Service Management Processes by Using Formal Concept Analysis,” pp. 1–5, 2010.

- D. Yazdanfar and P. Öhman, “Firm-level determinants of job creation by SMEs: Swedish empirical evidence,” J. Small Bus. Enterp. Dev., vol. 22, no. 4, pp. 666–679, 2015.

- I. Mandl and S. Ledermaier, “SMEs and job creation in Europe,” in European Born Globals, Routledge, 2017, pp. 22–35.

- Y. Sudaryo and D. Permatasari, “Strategy Development Micro Small And Medium Business Performance In Small And Medium Enerprises (Smes) Cibaduyut Shoe Bandung,” Int. J. Sci. Technol. Res., vol. 6, no. 3, pp. 102–105, 2017.

- X. Xu, “The SIR method: A superiority and inferiority ranking method for multiple criteria decision making,” Eur. J. Oper. Res., pp. 587–602, 2001.

- R. W. Saaty, “The Analytic Hierarchy Process-What It is and How It is Used,” Math Model., vol. 9, no. 3–5, pp. 161–176, 1987.

- B. Roy, “ELECTRE III: Un algorithme de classements fondé sur une représentation floue des préférences en présence de critères multiples,” Cah. du CERO, vol. 20, no. 1, pp. 3–24, 1978.

- C. M. Tam, T. K. L. Tong, and Y. W. Wong, “Selection of Concrete Pump Using the Superiority and Inferiority Ranking Method,” J. Constr. Eng. Manag., vol. 130, no. 6, pp. 827–834, 2004, https://doi.org/10.1061/(ASCE)0733-9364(2004)130:6(827).

- M. Dağdeviren and İ. Yüksel, “Developing a fuzzy analytic hierarchy process (AHP) model for behavior-based safety management,” Inf. Sci. (Ny)., vol. 178, no. 6, pp. 1717–1733, 2008, https://doi.org/10.1016/j.ins.2007.10.016.

- J. Chai, J. N. K. Liu, and Z. Xu, “A New Rule-Based SIR Approach To Supplier Selection Under Intuitionistic Fuzzy Environments,” Int. J. Uncertainty, Fuzziness Knowledge-Based Syst., vol. 20, no. 03, pp. 451–471, 2012, https://doi.org/10.1142/S0218488512500237.

- N. Encantado Faria and M. Mira da Silva, “Selecting a Software Tool for ITIL using a Multiple Criteria Decision Analysis Approach,” in Designing Digitalization (ISD2018 Proceedings), 2018.

- L. Kralik, R. Senkerik, and R. Jasek, “Comparison of MCDM methods with users’ evaluation,” Iber. Conf. Inf. Syst. Technol. Cist., vol. 2016–July, 2016, https://doi.org/10.1109/CISTI.2016.7521387.

- M. Mora, J. Gomez, R. V. O’Connor, and O. Gelman, “A Risk-based MADM Model for Selecting Free-Libre Open Source Software Tools in the Domain of IT Service Management,” in 16th Symposium on Decision Technology and Intelligent Information Systems, 2016, no. August.

- M. Mora, J. Marx Gómez, R. V. O’Connor, and O. Gelman, “An MADM risk-based evaluation-selection model of free-libre open source software tools,” Int. J. Technol. Policy Manag., vol. 16, no. 4, 2016, https://doi.org/10.1504/IJTPM.2016.081665.

- O. A. M. Ali, A. Y. Ali, and B. S. Sumait, “Comparison between the Effects of Different Types of Membership Functions on Fuzzy Logic Controller Performance,” Int. J. Emerg. Eng. Res. Technol., vol. 3, no. 3, pp. 76–83, 2015.

- P. Harliana and R. Rahim, “Comparative Analysis of Membership Function on Mamdani Fuzzy Inference System for Decision Making,” J. Phys. Conf. Ser., vol. 930, no. 1, 2017, https://doi.org/10.1088/1742-6596/930/1/012029.

- J. Gayathri Monicka, D. N. O. Guna Sekhar, and K. Ramash Kumar, “Performance Evaluation of Membership Functions on Fuzzy Logic Controlled AC Voltage Controller for Speed Control of Induction Motor Drive,” Int. J. Comput. Appl., vol. 13, no. 5, pp. 8–12, 2011, https://doi.org/10.5120/1778-2451.

- G. Case, “ITIL V3: Where To Start & How To Achieve Quick Wins,” Consultant, pp. 1–7, 2007.
