Virtualization in Cloud Environment: Bandwidth Management

Volume 4, Issue 2, Page No 221-227, 2019

Authors’ Names: Isaac Odun-Ayo a), Blessing Idoko, Temidayo Abayomi-Zannu

Department of Computer and Information Sciences, Covenant University, Ota, 112233, Nigeria

a) Author to whom correspondence should be addressed. E-mail: isaac.odunayo@covenantuniversity.edu.ng

Adv. Sci. Technol. Eng. Syst. J. 4(2), 221-227 (2019); DOI: 10.25046/aj040229

Keywords: Cloud computing, Virtualization, Bandwidth Management Applications, Hypervisor, Virtual Machines

Cloud computing recently emerged as an interesting model that enables computing and other related internet activities to take place anywhere, anytime. Cloud service providers centralize servers, networks, and applications so that their users can access them at any time and from any location. Cloud computing uses already existing resources such as servers, CPUs, and storage, but runs on a technology known as virtualization. The core idea of virtualization is to create several virtual versions of a single computing device or resource, which enables many user operating systems to run on a single underlying device. Network bandwidth is one of the critical resources in a cloud environment. Bandwidth management involves the use of techniques, technologies, tools, and policies to avoid network congestion and ensure optimal use of subscribed bandwidth resources, and it is a bedrock of any subscription-based access network. Organizations use bandwidth management to utilize their subscribed bandwidth resources efficiently. Bandwidth management deals with the measurement and control of packets or traffic on a network link so as to avoid overloading the link, which can lead to poor performance and network congestion. In this paper, the latest developments in virtualization in cloud computing are presented. This study reviews papers on cloud computing and relevant literature published in venues such as conferences and journals, and examines current mechanisms that enable cloud service providers to distribute bandwidth more effectively. This paper is therefore a study of virtualization in cloud computing and an identification of bandwidth management mechanisms in the cloud environment, which will benefit prospective cloud providers as well as cloud users.

Received: 22 January 2019, Accepted: 20 March 2019, Published Online: 27 March 2019

1. Introduction

This paper expands upon the findings of research presented at the 2017 International Conference on Next Generation Computing and Information Systems [1], focusing on cloud bandwidth management, its mechanisms, and its challenges. Cloud computing means “almost anything can be accessed” [2]. It allows ubiquitous, convenient, on-demand network access to customizable computing resources (e.g., storage, networks, servers, services, and applications) that service providers can quickly provision with minimal intervention. Cloud computing is profoundly changing the way organizations and enterprises carry out IT-related activities. Features such as elasticity, scalability, multi-tenancy, resource pooling, low initial investment, easy management, faster deployment, location independence, device independence, reliability, and security make it attractive to business owners and IT users [3],[4]. The cloud computing model permits computational resources to be outsourced more effectively. The huge computing power and storage capacity of cloud resources let everyday internet users perform their tasks on pay-as-you-go terms. Cloud data centers will keep expanding as their client base grows.

In general, the cloud's massive network is built using an approach known as virtualization, which permits different applications and operating systems to be installed onto the same physical hardware. Virtualization creates a separate layer for the operating system (OS) to sit on, thereby separating the OS from the physical hardware and the core OS. It is done by installing a hypervisor on the physical hardware; the hypervisor is the common technique for implementing virtualization. A hypervisor permits different operating systems to be installed on the same hardware, and although these operating systems coexist on the same physical hardware, each behaves as though it has its own dedicated resources. Examples of hypervisors include VMware ESX, KVM, Xen, and Hyper-V. Another emerging approach to implementing virtualization is containerization, commonly known as container-based virtualization or operating-system virtualization [5].

Cloud data centers normally have mechanisms for planning how resources such as computing capacity, memory, and bandwidth are released to users. Bandwidth is required to distribute resources across different cloud networks, and it is very important to manage cloud bandwidth so that it keeps up with the increasing demand for cloud resources across data centers. On a cloud network, the bandwidth requested by a customer is usually not guaranteed [2].

Bandwidth management achieves satisfactory performance and fair use of the network by creating and enforcing network policies that ensure sufficient bandwidth is readily available for time-sensitive and mission-critical applications. It also prevents lower-priority traffic from competing with critical applications for limited network capacity. As cloud tenants make greater shared use of cloud networking infrastructure, there is an increasing desire, and a growing concern, about how to arrange, reserve, and monitor bandwidth in the cloud computing environment [6], [7].
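As a minimal illustration of such a policy, the sketch below grants bandwidth on a single link in strict priority order, so that mission-critical traffic is served before lower-priority traffic. The `Demand` type, the priority numbering (0 = most critical), and all figures are hypothetical assumptions rather than details from the cited works; production bandwidth managers typically combine priorities with guaranteed minimums and fairness.

```python
from dataclasses import dataclass


@dataclass
class Demand:
    """A hypothetical bandwidth request from one application."""
    app: str
    priority: int      # assumption: 0 = most critical, larger = less critical
    requested: float   # Mbit/s


def allocate_by_priority(capacity: float, demands: list[Demand]) -> dict[str, float]:
    """Grant requests in priority order until the link capacity runs out.

    Lower-priority traffic only receives whatever capacity remains after
    all more critical requests have been fully served.
    """
    remaining = capacity
    grants: dict[str, float] = {}
    for d in sorted(demands, key=lambda d: d.priority):
        granted = min(d.requested, remaining)
        grants[d.app] = granted
        remaining -= granted
    return grants


if __name__ == "__main__":
    link_capacity = 100.0  # Mbit/s, hypothetical subscribed bandwidth
    requests = [
        Demand("video-streaming", priority=2, requested=60.0),
        Demand("erp", priority=0, requested=30.0),
        Demand("voip", priority=1, requested=20.0),
    ]
    print(allocate_by_priority(link_capacity, requests))
```

With 100 Mbit/s available, the ERP and VoIP traffic receive their full 30 and 20 Mbit/s, and video streaming is held to the remaining 50 Mbit/s.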

Generally, bandwidth management involves the techniques, technologies, tools, and policies that an organization puts together to ensure optimal utilization of the available bandwidth [8]. The purpose of network bandwidth management is to ensure that the right amount of bandwidth is available and assigned to the right users and applications at the right place and time [9]. Without an active bandwidth management plan, the available bandwidth, regardless of its size, can never be sufficient for users' ever-increasing demands [10]. Bandwidth management can also mitigate or prevent Malicious Cloud Bandwidth Consumption (MCBC), a new type of attack that consumes bandwidth maliciously in order to impose a financial burden on the cloud service host [11].

Running different operating systems (OSs) simultaneously on a physical server is known as virtualization and is carried out using a hypervisor or virtual machine manager (VMM). The physical network must have sufficient bandwidth to assign to the virtual machines (VMs), and optimal bandwidth allocation to VMs is critical, especially in today's cloud data centers with massive numbers of networked VMs. Bandwidth management is particularly challenging in a cloud computing environment that migrates VMs for specific aims such as load balancing and fault tolerance: if insufficient bandwidth is available after a VM migration, high latency and a sharp drop in network speed are to be expected. Every data transfer over the internet requires bandwidth; for example, data transmission from a server to VMs over the internet consumes bandwidth, which is one reason network administrators must manage bandwidth accurately.
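The dependence of VM migration on available bandwidth can be made concrete with the iterative pre-copy model of live migration. In the sketch below, which is an illustrative assumption rather than a method from this paper, round 0 copies all of the VM's memory, every later round re-sends the pages dirtied during the previous round, and once the remaining dirty data falls below a threshold the VM is paused and the rest is copied (the downtime). The function name, parameters, and the 64 MB stop threshold are hypothetical, and the model assumes constant bandwidth and a constant dirty rate.

```python
def precopy_migration_time(mem_mb: float, bw_mb_s: float, dirty_mb_s: float,
                           stop_mb: float = 64.0,
                           max_rounds: int = 30) -> tuple[float, float]:
    """Estimate (total migration time, downtime) in seconds.

    Simplified pre-copy model: constant bandwidth and constant dirty rate.
    """
    if bw_mb_s <= 0:
        raise ValueError("bandwidth must be positive")
    total = 0.0
    to_send = mem_mb                       # round 0 copies all memory
    for _ in range(max_rounds):
        if to_send <= stop_mb:             # small enough: switch to stop-and-copy
            break
        round_time = to_send / bw_mb_s
        total += round_time
        to_send = dirty_mb_s * round_time  # pages dirtied while this round ran
    downtime = to_send / bw_mb_s           # VM paused while the rest is copied
    return total + downtime, downtime


if __name__ == "__main__":
    # Hypothetical 4 GB VM dirtying 50 MB/s, at roughly 1 Gbit/s and 10 Gbit/s.
    for bw in (125.0, 1250.0):
        total, down = precopy_migration_time(4096, bw, 50)
        print(f"{bw:7.1f} MB/s -> total {total:6.2f} s, downtime {down:.3f} s")
```

In this model, the number of rounds and the downtime depend on the ratio of dirty rate to bandwidth; when the dirty rate approaches or exceeds the available bandwidth, pre-copy stops converging and only the `max_rounds` cap ends it, which is one reason adequate bandwidth must be available for migration.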

The objective of this study is to analyze bandwidth management in cloud computing and virtualization environments. Various areas of virtualization and cloud computing are discussed, and the research contributes to knowledge of cloud-based bandwidth allocation approaches and management. The remainder of the paper is organized as follows. Section 2 examines related work. Section 3 discusses virtualization in a cloud environment and bandwidth management mechanisms. Section 4 concludes the paper and suggests future work.

2. Related work

In [12], the authors proposed a bandwidth management solution for cloud computing networks that distributes the available bandwidth according to user priorities and the actual demand for data transfer to and from the cloud. They presented three key metrics for monitoring and analyzing network performance: throughput, response time, and utilization. In [13], the authors proposed an approach that provides a requested service only with a guaranteed amount of bandwidth, and discussed how the Linux traffic control utility (Linux TC) can be used to manage the allocation of network bandwidth. In [14], the authors proposed a formal definition for cloud-based free and open-source software (FOSS) applications and built a Cloud Resources Allocation Model for them, named CRAM4FOSS; according to the authors, the proposed solution accurately certifies the stability of cloud resource allocation. In [15], the authors discussed open-source virtualization, addressed several other virtualization concepts, and then analyzed two open-source IaaS systems. [16] used the concept of skewness to improve server utilization. [17] proposed separation at the VMM level instead of separation at the kernel level, since this enables proper separation of cloud VMs. In [18], the authors concentrated on VM migration for cloud providers and reviewed VM placement schemes in data centers along with their advantages and limitations. In [19], the authors focused on virtualization and its application in radiology. In [20], the authors highlighted how virtualization affects information security. In [21], the authors proposed a modular bandwidth manager that can rapidly adapt to unexpected changes induced by the dirty rate of the migrated applications or by network congestion.
The authors’ goal is to optimize bandwidth consumption and latency during live VM migration. [22] proposed a joint algorithm for the optimal allocation of network bandwidth and VMs that minimizes the cost incurred by users; the algorithm decides which network bandwidth and VMs to reserve from specified cloud providers. [23] argued for the importance of stability and fair bandwidth allocation and proposed a method to prevent interference between the underlying hardware and VMs. In [24], the authors proposed an algorithm for dynamic bandwidth shaping that still respects the limits on network resources. In [25], “Towards a Tenant Demand-aware bandwidth allocation strategy in the cloud data center” is discussed; the authors propose a strategy to handle bandwidth over-subscription in cloud data centers, whose main functions are bandwidth prediction, bandwidth pre-allocation, and bandwidth gathering. In [26], the authors developed SpongeNet, a bandwidth allocation proposal made up of three components: the first provides an accurate, flexible, and simple way for users to define their requirements; the second is an algorithm that merges policies to handle multiple goals; and the third guarantees fairness between dedicated and non-dedicated bandwidth users. In [27], the authors proposed a predictive resource management framework to overcome the drawbacks of the reactive cloud resource management approach. In [28], the authors discussed current trends and developments in cloud computing and open-source software and found that open-source software (OSS) such as OpenStack provides the most comprehensive cloud computing infrastructure.

3. Virtualization in Cloud Environment and Bandwidth Management

3.1. Virtual Machine and Container Technology

A. Concept of Virtual Machines

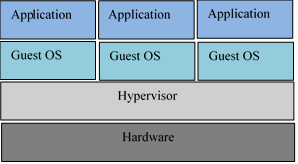

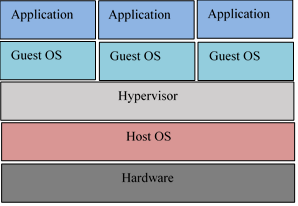

The infrastructure layer, a component of cloud computing, hosts the virtual machines (VMs) within the cloud network. The core concept of virtualization is to separate the OS from the underlying hardware by dividing a physical machine, such as a server, into multiple virtual machines. This makes it possible to run different operating systems, such as Linux, Unix, Mac OS, and Windows, side by side on the same host or physical machine. Each VM can be assigned a bandwidth size that closely matches its actual tasks and needs, which allows maximum utilization of the host machine. Hypervisors, also known as virtual machine managers (VMMs), are used to create and control VMs; a virtual machine is tied to the hypervisor rather than directly to the physical hardware. There are two types of hypervisors. Type-1 hypervisors run directly on the physical hardware without a base OS and act as the operating system on that hardware, removing an extra layer between the underlying host hardware and the virtual machines. Physical hardware without a base OS is known as bare metal. Type-2 hypervisors are hosted hypervisors, installed on top of an existing OS on the physical hardware. They execute as applications, so the host machine does not need to be set up separately for running virtual machines. Figure 2 depicts a Type-1 hypervisor and Figure 3 depicts a Type-2 hypervisor [29].

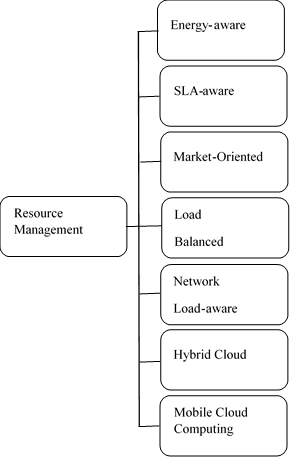

Figure 1: Classification of Resource Management Techniques [32]

Figure 2: Type-1 Hypervisor [29]

Figure 3: Type-2 Hypervisor [29]

B. Concept of Containers

- Containerization is a virtualization technique that operates at the OS level rather than the hardware level. Containers share a single OS kernel while running in isolated environments, and they migrate between platforms and hosts more easily than virtual machines [30]. Isolation boundaries are drawn around applications rather than servers, so an error within a single container affects only that container, not the whole server or VM. Container technology is continuously evolving and promises an easy-to-deploy, streamlined, and secure way of meeting certain infrastructure requirements, while offering an alternative to VMs and mitigating compatibility issues between applications that run on the same OS [31].

- Cloud containers are trending in the IT world and are designed to virtualize just one application. According to [1], Linux was at that time the only server OS supporting cloud containers, and Microsoft had introduced Hyper-V containers, which were to be integrated into Microsoft Azure.

C. Characteristics, Applications, and Benefits of Virtualization

[1] highlights the following characteristics of virtualization and its application areas:

- Partitioning: This key feature of virtualization logically divides a physical hardware resource so that several different operating systems can be installed on it.

- Isolation: Virtual machines coexist on the same physical hardware, but each virtual machine is isolated from the core physical hardware and from the other virtual machines. As a result, if one VM breaks down or suffers downtime, the other active VMs are not affected. In addition, VMs do not share data with one another.

- Encapsulation: An encapsulated process may be a business service, and a VM can be stored as a file and labeled to identify its service type. This prevents applications from hindering one another. Storage, memory, networks, applications, operating systems, and hardware are some of the application areas of virtualization. Here, isolating a piece of software means placing it in a separate virtual machine or in a container so that it does not coexist with other operating systems.

Virtualization of hosts or physical machines provides the following benefits [1]:

- Functional Execution Isolation: The hypervisor is responsible for maintaining protection between VMs and between the applications deployed on them. Users may be given privileges within their own VM without violating the integrity or isolation of the host.

- Enhanced Reliability: Hypervisors provide greater reliability for hosted virtualized applications through their live migration capabilities, which allow these applications to run independently of any single host.

- Customized Environment: Virtualization permits the creation of customized spaces for dedicated users; on request, a customized, dedicated environment can be created and provided to handle a specific need.

- Testing and Debugging Parallel Applications: Testing parallel applications can leverage virtualized environments, since a fully distributed system can be emulated inside a single physical host.

- Easier Management: Custom run-time environments can be migrated, started up, and shut down flexibly, according to the requirements of whoever is responsible for the underlying hardware.

- Coexistence with Legacy Applications: VMs help to preserve binary compatibility in the run-time environment for legacy applications.

3.2. Resource Management Techniques

Cloud resource management involves the procurement and release of cloud resources such as physical hardware, storage space, virtual memory, and network bandwidth. Cloud resources can be physical or virtual components of limited availability, so resource management (RM) in virtualized environments and cloud computing is critical. [32] classified resource management techniques using criteria such as SLA awareness, load balancing, and energy efficiency, as depicted in Figure 1.

- Energy-aware RM techniques: These minimize energy consumption by consolidating workloads onto a small number of physical servers. Virtual machines (VMs) on lightly loaded physical hardware are migrated to other hardware that can accommodate more workload, and the resulting idle hardware is switched off to reduce service providers' power expenses and carbon dioxide (CO2) emissions. [33] presented a centralized control system for allocating and managing all the relevant cloud resources. RM decisions are made hourly to reduce their subsequent effects, and can include shutting down servers, migrating VMs between servers, and powering servers up.

- Load-balanced RM techniques: Load balancing is an important feature of any computing environment. It distributes the workload or traffic requests among many resources and can maximize the use of the available bandwidth. The system examines the usage of each server and, after the load-balancing algorithm has been applied, moves operations among the physical machines so that the load is efficiently balanced across the cloud resources. When a server is overloaded, some of its workload is migrated to an underutilized server; when both servers are underutilized, all the workload is transferred to one server and the other is left unused or on standby. If the workload migration fails, the server itself can be scaled up or down accordingly.

- SLA-aware RM techniques: Cloud service providers (CSPs) must avoid SLA violations by monitoring service provisioning to users regularly. A service level agreement (SLA) is signed between the CSP and the customer; it includes information such as the price, the required level of service, and a penalty clause that is enforced in the event of a violation. The authors in [34] proposed capacity allocation algorithms that aim to guarantee SLAs under fluctuating workloads; these algorithms interface with geographically distributed resource controllers and redirect network traffic whenever congestion is observed. If necessary, an application can also run on several VMs, with its workload allocated equally among them. A workload analyzer predicts future workload requirements as the workload fluctuates, and capacity is adjusted on the basis of these predictions. SLA violations can also be mitigated by keeping response times low during inter-VM communication; if the response time becomes too high, the VM is moved to another physical machine.

- Market-oriented RM techniques: These are market-oriented solutions that benefit cloud service providers. [35] presented an RM technique in which a SaaS provider employs an IaaS provider to serve its clients. Clients pay the SaaS provider for the services received, and a dedicated optimization function, which depends on the satisfaction level shown by the clients, is used to calculate the amount each user has to pay. In the model of [36], each cloud server has a dynamic voltage/frequency scaling (DVFS) module, servers are assumed not to be switched on or off, and VM migration has a common cost.

- Network-load-aware RM: This technique reduces the high network traffic that can degrade overall network performance, and it allows service providers to choose a data center that can fulfill the clients' interests. A user sends a request to the service provider, and resources are assigned using an adaptive resource allocation algorithm. The algorithm selects a data center based either on the distance between the user and the data center or on the distance between data centers. Once the data center is chosen, the work is allocated to a VM on one of its servers.

- RM techniques for the hybrid/federated cloud: In this technique, private cloud users can utilize resources in a public cloud environment whenever their in-house resources are insufficient. To decide when public cloud resources should be used, the authors in [37] presented a rule-based solution for hybrid clouds. User requests are divided into two classes: critical data/task requests, which are granted higher priority, and secondary data/task requests, which are granted lower priority. Critical data/tasks are hosted on the private cloud for security, while low-priority tasks can use the resources of both the private and the public cloud. When the private cloud's resources are exhausted, public cloud resources are used.

- RM techniques for mobile clouds: These techniques offer energy-efficient solutions to the problem of energy consumption, a major challenge in mobile systems. The authors in [38] proposed a technique to reduce total power consumption in which each mobile device's primary job is to offload its workload to one of the available servers, reducing overall energy consumption.
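The consolidation step behind the energy-aware RM techniques above can be sketched as a bin-packing problem. The version below uses first-fit decreasing, a standard packing heuristic chosen here purely for illustration; it is not claimed to be the algorithm of [33]. Loads are integer percentages of one server's CPU, and the 80% ceiling is a hypothetical headroom assumption.

```python
def consolidate(vm_loads: dict[str, int], limit: int = 80) -> list[list[str]]:
    """Pack VMs onto as few servers as possible (first-fit decreasing).

    vm_loads maps a VM name to its CPU demand as a percentage of one server.
    Each server is filled only up to `limit` percent to keep headroom.
    Returns one list of VM names per server that stays powered on; any
    other server can be switched off.
    """
    loads: list[int] = []             # current load of each powered-on server
    placement: list[list[str]] = []   # VMs hosted on each powered-on server
    # Largest VMs first: placing them early tends to leave fewer gaps.
    for vm, load in sorted(vm_loads.items(), key=lambda kv: kv[1], reverse=True):
        if load > limit:
            raise ValueError(f"{vm} needs {load}% and cannot fit under {limit}%")
        for i, used in enumerate(loads):
            if used + load <= limit:  # first server with enough headroom
                loads[i] += load
                placement[i].append(vm)
                break
        else:                         # no server fits: power on another one
            loads.append(load)
            placement.append([vm])
    return placement


if __name__ == "__main__":
    # Hypothetical VMs, each currently running on its own server.
    vms = {"vm1": 50, "vm2": 20, "vm3": 30, "vm4": 10, "vm5": 60}
    active = consolidate(vms)
    print(active)
    print(f"{len(vms) - len(active)} of {len(vms)} servers can be switched off")
```

For these loads the packing is `[['vm5', 'vm2'], ['vm1', 'vm3'], ['vm4']]`, so two of the five servers can be powered down.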
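The load-balancing behaviour described for load-balanced RM, where overloaded servers shed load and underutilized servers are merged so that one can go on standby, can be sketched as a single balancing pass over per-server utilization. The thresholds (80% high, 30% low), the rule of moving excess to the least-loaded server, and the function name are hypothetical choices for illustration, not the algorithm of any cited work; real systems move whole VMs rather than arbitrary fractions of load.

```python
def rebalance(util: dict[str, int], high: int = 80, low: int = 30) -> dict[str, int]:
    """Return per-server utilization (percent) after one balancing pass.

    Assumes 2 * low <= high, so two underutilized servers always fit on one.
    """
    if 2 * low > high:
        raise ValueError("thresholds must satisfy 2 * low <= high")
    util = dict(util)  # work on a copy; the caller's mapping is untouched

    # Step 1: each overloaded server sheds its excess to the least-loaded server.
    for name in sorted(util, key=util.get, reverse=True):
        target = min(util, key=util.get)
        moved = min(util[name] - high, high - util[target])
        if target == name or moved <= 0:
            continue
        util[name] -= moved
        util[target] += moved

    # Step 2: merge the two lightest underutilized servers until at most one
    # remains; each emptied server (utilization 0) can be put on standby.
    while True:
        under = sorted((n for n in util if 0 < util[n] < low), key=util.get)
        if len(under) < 2:
            break
        src, dst = under[0], under[1]
        util[dst] += util[src]
        util[src] = 0
    return util


if __name__ == "__main__":
    print(rebalance({"a": 95, "b": 20, "c": 10, "d": 50}))
```

Here server `a` sheds 15 points to `c`, after which `b` and `c` are both below 30% and are merged, leaving `b` idle: `{'a': 80, 'b': 0, 'c': 45, 'd': 50}`.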
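The predict-then-adjust loop of the SLA-aware RM techniques can be sketched with a sliding-window moving average as the workload predictor. The moving average, the class and parameter names, the per-VM capacity, and the 70% target utilization are all hypothetical assumptions; [34] does not necessarily use this predictor. The `needs_migration` helper mirrors the rule of moving a VM when its response time exceeds what the SLA allows.

```python
import math
from collections import deque


class CapacityPlanner:
    """Size a VM pool from a moving-average forecast of the request rate."""

    def __init__(self, window: int = 3, per_vm_rate: float = 100.0,
                 target_util: float = 0.7) -> None:
        self.history = deque(maxlen=window)  # recent request rates (req/s)
        self.per_vm_rate = per_vm_rate       # requests/s one VM can serve (assumed)
        self.target_util = target_util       # keep predicted utilization below this

    def observe(self, rate: float) -> None:
        self.history.append(rate)            # oldest sample drops out automatically

    def predict(self) -> float:
        if not self.history:
            return 0.0
        return sum(self.history) / len(self.history)

    def vms_needed(self) -> int:
        usable = self.per_vm_rate * self.target_util
        return max(1, math.ceil(self.predict() / usable))


def needs_migration(response_ms: float, sla_ms: float) -> bool:
    """Flag a VM for migration when its response time exceeds the SLA bound."""
    return response_ms > sla_ms


if __name__ == "__main__":
    planner = CapacityPlanner()
    for rate in (120, 150, 210):             # hypothetical observed request rates
        planner.observe(rate)
    print(planner.predict(), planner.vms_needed())
```

With the three samples above, the forecast is 160 requests/s, and with 70 usable requests/s per VM the planner asks for 3 VMs.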
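A distance-based data-center choice of the kind described for network-load-aware RM can be sketched as follows. Distance here is plain Euclidean distance on hypothetical planar coordinates, standing in for whatever network or geographic distance a real provider would measure; the data-center records, capacity units, and the rule of placing work on the server with the most free capacity are illustrative assumptions.

```python
import math


def select_placement(user_xy: tuple[float, float], datacenters: list[dict],
                     demand: float):
    """Return (data center name, server name) for a request, or None.

    Each data center is a dict with a "name", planar coordinates "xy", and
    "servers", a mapping of server name -> free capacity (hypothetical units).
    """
    # A data center is feasible if at least one server can host the request.
    feasible = [dc for dc in datacenters
                if any(free >= demand for free in dc["servers"].values())]
    if not feasible:
        return None
    # Step 1: nearest feasible data center to the user.
    dc = min(feasible, key=lambda d: math.dist(user_xy, d["xy"]))
    # Step 2: server with the most free capacity inside that data center.
    server = max(dc["servers"], key=dc["servers"].get)
    return dc["name"], server


if __name__ == "__main__":
    # Hypothetical data centers on a planar map; capacities in arbitrary units.
    dcs = [
        {"name": "A", "xy": (1, 1), "servers": {"a1": 5}},
        {"name": "B", "xy": (3, 4), "servers": {"b1": 20, "b2": 30}},
        {"name": "C", "xy": (10, 0), "servers": {"c1": 100}},
    ]
    print(select_placement((0, 0), dcs, demand=10))
```

Data center A is closest but has no server with 10 free units, so the request goes to B, the next closest, and lands on its least-loaded server `b2`.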
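The rule-based hybrid-cloud policy attributed to [37] can be sketched as a placement function: critical tasks are considered first and may only use the private cloud, while low-priority tasks spill over to the public cloud once private capacity runs short. The task tuples, capacity units, and the choice to queue a critical task that does not fit (rather than sending it to the public cloud) are hypothetical assumptions for illustration.

```python
def place_tasks(tasks: list[tuple[str, int, bool]],
                private_capacity: int) -> dict[str, str]:
    """Map each task name to "private", "public", or "queued".

    tasks: (name, demand, critical) tuples; demand is in hypothetical units.
    """
    free = private_capacity
    placement: dict[str, str] = {}
    # Critical tasks first: `not True` is False, which sorts before True.
    for name, demand, critical in sorted(tasks, key=lambda t: not t[2]):
        if demand <= free:
            placement[name] = "private"
            free -= demand
        elif critical:
            placement[name] = "queued"   # never burst critical data to public cloud
        else:
            placement[name] = "public"   # private capacity short: use public cloud
    return placement


if __name__ == "__main__":
    jobs = [("backup", 40, False), ("payroll", 50, True),
            ("analytics", 15, False), ("ledger", 30, True)]
    print(place_tasks(jobs, private_capacity=100))
```

Both critical tasks fit privately (80 of 100 units); the 40-unit backup no longer fits and goes to the public cloud, while the small analytics job still fits in the remaining private capacity.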

3.3. Cloud Bandwidth Allocation Mechanisms

According to [2], the mechanisms that cloud providers use to allocate bandwidth resources and ensure load balancing include:

- Fair-Share Bandwidth Allocation: In this approach, a specific time interval is set for the flow of a defined amount of data between the host machine and the virtual machines. Throughput and network weight are used to ensure fairness among the data flows. When the computed fair-share value reaches a randomly drawn acceptance value, the packets are transferred; otherwise, they are dropped.

- Transmission Control Protocol (TCP): Here, data is divided into segments, and each segment is assigned a sequence number that specifies the order of the data. An acknowledgment is expected for every successful transmission within a specific time interval; otherwise, the segment is retransmitted. This approach is connection-oriented and provides end-to-end congestion control.

- Bandwidth Capping: In this approach, a specific bandwidth limit is set for each VM, so that VMs transmitting data simultaneously cannot exceed their limits.

- SecondNet: This approach guarantees network bandwidth between every pair of VMs; the communication patterns between VM pairs can vary over time and with the data received or transferred between them.

- NetShare: NetShare is used in large data centers and functions as a centralized system for allocating bandwidth in a virtualized cloud network, reducing the management tasks in data centers. Each cloud customer is connected to various cloud network resources. The network is divided into slices, and each slice is assigned bandwidth on the link that connects it to the cloud network.

- Approximate Fairness Quantized Congestion Notification (AF-QCN): This is a switch-based congestion control approach for data centers in which network bandwidth is shared among the transmitters' connections to the VMs.
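The fair-share mechanism is described only loosely in [2]. One common concrete reading, shown below as an assumption, combines weighted max-min fair allocation (computed by water-filling) with the probabilistic dropping used in core-stateless fair queueing, where a flow sending faster than its fair share has each packet dropped with probability 1 - share/rate. Flow names, weights, and rates are hypothetical.

```python
import random


def weighted_fair_shares(capacity: float, demands: dict[str, float],
                         weights: dict[str, float]) -> dict[str, float]:
    """Weighted max-min fair allocation of one link (water-filling).

    Flows whose demand is below their weighted share get exactly their demand;
    the capacity they leave unused is redistributed among the remaining flows
    in proportion to their weights. Weights are assumed to be positive.
    """
    shares = {f: 0.0 for f in demands}
    active = set(demands)
    remaining = capacity
    while active:
        level = remaining / sum(weights[f] for f in active)  # capacity per unit weight
        satisfied = {f for f in active if demands[f] <= level * weights[f]}
        if not satisfied:
            for f in active:             # everyone left is bottlenecked
                shares[f] = level * weights[f]
            break
        for f in satisfied:
            shares[f] = demands[f]
            remaining -= demands[f]
        active -= satisfied
    return shares


def forward_packet(arrival_rate: float, fair_share: float, rng=random.random) -> bool:
    """CSFQ-style decision: drop with probability max(0, 1 - share / rate)."""
    drop_p = max(0.0, 1.0 - fair_share / arrival_rate)
    return rng() >= drop_p


if __name__ == "__main__":
    demands = {"vm1": 10.0, "vm2": 60.0, "vm3": 60.0}   # Mbit/s offered
    weights = {"vm1": 1.0, "vm2": 2.0, "vm3": 1.0}      # hypothetical tenant weights
    shares = weighted_fair_shares(100.0, demands, weights)
    print(shares)
    rng = random.Random(42).random
    kept = sum(forward_packet(demands["vm3"], shares["vm3"], rng) for _ in range(10_000))
    print(f"vm3 keeps about {kept / 10_000:.0%} of its packets")
```

Here vm1 receives its full 10 Mbit/s and the remaining 90 Mbit/s is split 2:1 between vm2 and vm3 (60 and 30 Mbit/s); because vm3 offers 60 Mbit/s against a 30 Mbit/s share, roughly half of its packets are dropped.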
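The part of TCP's congestion control that governs how competing connections share bandwidth is additive increase/multiplicative decrease (AIMD), as used in TCP Reno's congestion-avoidance phase: the congestion window grows by about one segment per round-trip time and is halved when a loss is detected. The simplified sketch below ignores slow start, timeouts, and fast recovery; the second function is a toy two-flow model (an assumption, not a TCP implementation) showing why AIMD drives flows sharing a bottleneck toward equal shares.

```python
def aimd_trace(events: list[str], cwnd: float = 1.0, increase: float = 1.0,
               decrease: float = 0.5, min_cwnd: float = 1.0) -> list[float]:
    """Congestion window (in segments) after each per-RTT event."""
    trace = []
    for event in events:
        if event == "ack":               # a full window was acknowledged
            cwnd += increase
        elif event == "loss":            # loss detected: back off multiplicatively
            cwnd = max(min_cwnd, cwnd * decrease)
        else:
            raise ValueError(f"unknown event {event!r}")
        trace.append(cwnd)
    return trace


def two_flows(capacity: float, w1: float, w2: float, rounds: int) -> tuple[float, float]:
    """Toy model: two AIMD flows share one bottleneck of `capacity` segments.

    When their combined window exceeds capacity both see a loss and halve;
    otherwise both add one segment. Halving shrinks the gap between them,
    while additive increase leaves it unchanged, so the windows converge.
    """
    for _ in range(rounds):
        if w1 + w2 > capacity:
            w1, w2 = w1 / 2, w2 / 2
        else:
            w1, w2 = w1 + 1, w2 + 1
    return w1, w2


if __name__ == "__main__":
    print(aimd_trace(["ack"] * 5 + ["loss"] + ["ack"] * 2))
    print(two_flows(capacity=50, w1=1, w2=40, rounds=200))
```

The first call prints `[2.0, 3.0, 4.0, 5.0, 6.0, 3.0, 4.0, 5.0]`. In the second, starting from windows of 1 and 40 segments on a 50-segment bottleneck, the gap halves at every shared loss, so after a few hundred rounds the two windows are nearly equal.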
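Per-VM bandwidth caps are commonly enforced with a token bucket; the Linux traffic control facility mentioned in [13], for instance, provides a token bucket filter (`tbf`) queueing discipline. The Python sketch below is an illustrative model of the algorithm, not of any specific product: tokens (bytes) accrue at the capped rate up to a burst size, and a packet may be sent only if enough tokens are available. Time is passed in explicitly so the behaviour is deterministic; the VM names and numbers are hypothetical.

```python
class TokenBucket:
    """Token-bucket rate limiter: long-run rate <= `rate`, bursts <= `burst`."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float) -> None:
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes        # start with a full bucket
        self.last = 0.0                  # time of the previous update (s)

    def allow(self, size_bytes: float, now: float) -> bool:
        """Return True (and spend tokens) if a packet of this size may be sent."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return True
        return False                     # over the cap: drop or queue the packet


if __name__ == "__main__":
    # Hypothetical caps: vm-a limited to 1 MB/s, vm-b to 250 kB/s.
    caps = {"vm-a": TokenBucket(1_000_000, 15_000),
            "vm-b": TokenBucket(250_000, 15_000)}
    sent = {vm: 0 for vm in caps}
    for step in range(1000):             # each VM offers a 1500-byte packet per ms
        now = step / 1000
        for vm, bucket in caps.items():
            if bucket.allow(1500, now):
                sent[vm] += 1500
    print(sent)
```

Both VMs offer 1.5 MB/s, but each ends up sending only about its capped rate plus one burst over the simulated second.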
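Guaranteeing bandwidth between VM pairs, as SecondNet does, requires admission control: a new pair is accepted only if every link on its path still has enough unreserved capacity. The sketch below shows only that per-link bookkeeping; it is a hypothetical simplification, and SecondNet's actual VM placement and routing algorithms are not reproduced. Link names, paths, and capacities (Mbit/s) are assumptions.

```python
class PairReservations:
    """Per-link bookkeeping for bandwidth guarantees between VM pairs."""

    def __init__(self, link_capacity: dict[str, int]) -> None:
        self.residual = dict(link_capacity)  # unreserved Mbit/s per link
        self.reserved: dict[tuple[str, str], tuple[int, list[str]]] = {}

    def admit(self, vm_a: str, vm_b: str, bw: int, path: list[str]) -> bool:
        """Reserve `bw` on every link of `path`, or reject without side effects."""
        if (vm_a, vm_b) in self.reserved:
            raise ValueError("pair already has a reservation")
        if any(self.residual[link] < bw for link in path):
            return False                     # some link cannot honour the guarantee
        for link in path:
            self.residual[link] -= bw
        self.reserved[(vm_a, vm_b)] = (bw, path)
        return True

    def release(self, vm_a: str, vm_b: str) -> None:
        """Return a pair's bandwidth to every link on its path."""
        bw, path = self.reserved.pop((vm_a, vm_b))
        for link in path:
            self.residual[link] += bw


if __name__ == "__main__":
    net = PairReservations({"tor1-agg": 1000, "agg-tor2": 1000})
    print(net.admit("vm1", "vm2", 600, ["tor1-agg", "agg-tor2"]))  # fits
    print(net.admit("vm3", "vm4", 500, ["tor1-agg"]))              # only 400 left
    print(net.residual)
```

The second request is rejected because only 400 Mbit/s remain unreserved on `tor1-agg`; rejecting up front is what lets already-admitted pairs keep their guarantees.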
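AF-QCN builds on Quantized Congestion Notification (QCN, standardized as IEEE 802.1Qau). In baseline QCN, a switch (the congestion point) samples its queue and computes a feedback value Fb = -(Q_off + w·Q_δ), where Q_off = Q - Q_eq is the queue's excess over its equilibrium set point and Q_δ = Q - Q_old is its growth since the last sample; when Fb is negative, the sender (the reaction point) cuts its rate by the factor (1 - G_d·|Fb|). The sketch below implements only these two baseline formulas, using illustrative values (w = 2, G_d = 1/128, |Fb| capped at 64) that make the largest single cut 50%. AF-QCN's per-flow fairness adjustments and QCN's rate-increase phases are not modelled, and queue units are arbitrary.

```python
def qcn_feedback(q_len: float, q_old: float, q_eq: float, w: float = 2.0) -> float:
    """Congestion-point feedback Fb = -(Q_off + w * Q_delta)."""
    q_off = q_len - q_eq      # how far the queue is above its set point
    q_delta = q_len - q_old   # how fast the queue is growing
    return -(q_off + w * q_delta)


def reaction_point_rate(rate: float, fb: float, gd: float = 1 / 128,
                        fb_cap: float = 64.0) -> float:
    """Sender rate after receiving feedback: cut only when fb is negative."""
    if fb >= 0:
        return rate           # no congestion signalled: rate unchanged
    return rate * (1 - gd * min(abs(fb), fb_cap))


if __name__ == "__main__":
    rate = 1000.0  # Mbit/s, hypothetical sender rate
    for q_len, q_old in [(30, 20), (34, 30), (20, 30)]:  # sampled queue lengths
        fb = qcn_feedback(q_len, q_old, q_eq=26)
        rate = reaction_point_rate(rate, fb)
        print(f"Q={q_len:>2} Fb={fb:+.0f} rate={rate:.1f}")
```

A queue that is both above its set point and growing produces strongly negative feedback and a large rate cut, while a draining queue produces non-negative feedback and leaves the sender's rate alone.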

3.4. Bandwidth Management Benefits

In [39], the author highlighted the following benefits of using the Zscaler Bandwidth Control solution:

- Prioritization of business applications: It limits the possible impact of bandwidth-intensive applications, such as streaming media, file sharing, and social media, on business applications. Bandwidth constraints and policies are checked and reconfigured to meet user requirements.

- Deliver a better user experience: Features such as network traffic segmentation and retransmission provide a faster Internet connection and prevent packet loss during data transmission.

- Reduce costs and simplify IT: It eliminates the need to install and manage additional in-house hardware and prevents bottlenecks by enforcing policies in the cloud.

In [26], the authors highlighted the following benefits:

- Work-conservation

- Bandwidth guarantees

- Practical enforcement

- Fairness between tenants

- Applicability to different contexts

- Easy deployment

- Precise and flexible requirement expression

- Efficient bandwidth saving

In [25], the authors presented the following benefits:

- Provides dynamic network management

- Improves network efficiency

- Easy integration

3.5. Cloud Bandwidth Management Challenges

According to [40], as VMs scale up and use more memory and bandwidth, maintaining optimal performance and health becomes difficult for existing management software. Management tools require detailed information about the features of each VM, the host machine, and the underlying storage and networking infrastructure. Supporting these tools and coordinating the activities of a large number of simultaneous users with management privileges requires secure, real-time connections with a high level of consistency, and the tools must remain backward compatible. Scaling bandwidth for an ever-growing number of VMs, already in the hundreds, means improving the existing code for optimal performance. Since users are growing accustomed to virtualization and are migrating all of their in-house server workloads and applications to virtual environments, the need to scale bandwidth capacity will keep growing for the foreseeable future, and building management software that scales bandwidth to meet users' needs is highly challenging. [9] presented the following challenges in bandwidth utilization, which can also become problems for existing management tools:

- The absence of administrative support

- Uncontrolled users’ downloads

- Virus attacks

- Higher demand compared to available bandwidth

- Unreliable Internet service provider

- Technical breakdowns

- Different user bandwidth requirements

- Too many network users

4. Conclusion

Cloud computing enables many organizations to store, compute, and access their applications from any location over the internet. This has relieved management of the core concern of maintaining in-house hardware resources and has reduced costs. VM migration in cloud computing requires that the right amount of bandwidth be made available on the host or physical machine. For example, when a VM cannot serve its users' requests because of a technical failure on the host machine, it is moved to another physical machine to continue providing the needed services. VM migration enables better management and optimization of data centers and host machines. This paper focused on cloud bandwidth management and analyzed VM migration and bandwidth management approaches. In conclusion, with the increasing number of cloud users and data centers, further research on enhancing bandwidth allocation approaches and management is required, which will further enhance the resource-sharing capability of VMs.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

We acknowledge the support and sponsorship provided by Covenant University through the Centre for Research, Innovation, and Discovery (CUCRID).

References

- I. Odun-Ayo, O. Ajayi, and C. Okereke, “Virtualization in Cloud Computing: Developments and Trends,” in 2017 International Conference on Next Generation Computing and Information Systems (ICNGCIS), 2017, pp. 24–28. https://doi.org/10.1109/ICNGCIS.2017.10

- K. Chandrakanth and S. Gayathri, “Examining Bandwidth Provisioning on Cloud Computing and Resource Sharing Via Web,” Int. J. Eng. Sci. Adv. Technol. [IJESAT], vol. 2, no. 5, pp. 1372–1376, 2012.

- P. Mell and T. Grance, “The NIST Definition of Cloud Computing,” NIST Special Publication 800-145, 2011. https://doi.org/10.6028/NIST.SP.800-145

- M. Ahmed, A. Sina, R. Chowdhury, M. Ahmed, and M. H. Rafee, “An Advanced Survey on Cloud Computing and State-of-the-art Research Issues,” vol. 9, no. 1, pp. 201–207, 2012.

- Y. Al-Dhuraibi, F. Paraiso, N. Djarallah, and P. Merle, “Elasticity in Cloud Computing: State of the Art and Research Challenges,” 2018.

- C. T. Yang, J. C. Liu, R. Ranjan, W. C. Shih, and C. H. Lin, “On construction of heuristic QoS bandwidth management in clouds,” Concurr. Comput. Pract. Exp., vol. 25, no. 18, pp. 2540–2560, Dec. 2013. https://doi.org/10.1002/cpe.3090

- A. Amaro, W. Peng, and S. McClellan, “A survey of cloud bandwidth control mechanisms,” in 2016 International Conference on Information and Communication Technology Convergence (ICTC), 2016, pp. 493–498. https://doi.org/10.1109/ICTC.2016.7763520

- G. Hong, J. Martin, and J. Westall, “Adaptive bandwidth binning for bandwidth management,” Comput. Networks, vol. 150, pp. 150–169, 2019. https://doi.org/10.1016/j.comnet.2018.12.019

- L. Chitanana, “Bandwidth management in universities in Zimbabwe: Towards a responsible user base through effective policy implementation,” vol. 8, no. 2, pp. 62–76, 2012.

- Y. Liu, V. Xhagjika, V. Vlassov, and A. Al Shishtawy, “BwMan: Bandwidth Manager for Elastic Services in the Cloud,” in 2014 IEEE International Symposium on Parallel and Distributed Processing with Applications, 2014, pp. 217–224. https://doi.org/10.1109/ISPA.2014.37

- M. Chidananda, A. Manjunatha, A. Jaiswal, and B. Madhu, “Detecting Malicious Cloud Bandwidth Consumption using Machine Learning,” Int. J. Eng. Technol., vol. 8, no. 5, pp. 2199–2205, Oct. 2016. https://doi.org/10.21817/ijet/2016/v8i5/160805210

- E. Randa, I. Mohammed, A. Babiker, and A. N. Mustafa, “Bandwidth Management on Cloud Computing Network,” vol. 17, no. 2, pp. 18–21, 2015. https://doi.org/10.9790/0661-17221821

- G. F. Anastasi, M. Coppola, P. Dazzi, and M. Distefano, “QoS Guarantees for Network Bandwidth in Private Clouds,” Procedia Comput. Sci., vol. 97, pp. 4–13, 2016. https://doi.org/10.1016/j.procs.2016.08.275

- S. Jlassi, A. Mammar, I. Abbassi, and M. Graiet, “Towards correct cloud resource allocation in FOSS applications,” Futur. Gener. Comput. Syst., vol. 91, pp. 392–406, 2019. https://doi.org/10.1016/j.future.2018.08.030

- A. Kovari and P. Dukan, “KVM & OpenVZ virtualization based IaaS Open Source Cloud Virtualization Platforms: OpenNode, Proxmox VE,” pp. 335–339, 2012.

- Z. Xiao, W. Song, and Q. Chen, “Dynamic Resource Allocation Using Virtual Machines for Cloud Computing Environment,” IEEE Trans. Parallel Distrib. Syst., pp. 1–11, 2013.

- J. McDermott, B. Montrose, M. Li, J. Kirby, and M. Kang, “The Xenon Separation VMM: Secure Virtualization Infrastructure for Military Clouds,” Naval Research Laboratory Center for High Assurance Computer Systems, Washington, 2012. https://doi.org/10.1109/MILCOM.2012.6415673

- M. Masdari, S. S. Nabavi, and V. Ahmadi, “An overview of virtual machine placement schemes in cloud computing,” J. Netw. Comput. Appl., vol. 66, pp. 106–127, 2016. https://doi.org/10.1016/j.jnca.2016.01.011

- R. P. Patel, “Cloud computing and virtualization technology in radiology,” Clinical Radiology, vol. 67, pp. 1095–1100, 2012.

- L. Shing-Han, C. David, C. Shih-Chih, P. S. Chen, L. Wen-Hui, and C. Cho, “Effects of virtualization on information security,” Computer Standards & Interfaces, vol. 42, pp. 8, 2015. https://doi.org/10.1016/j.csi.2015.03.001

- E. Baccarelli, D. Amendola, and N. Cordeschi, “Minimum-energy bandwidth management for QoS live migration of virtual machines,” Comput. Networks, vol. 93, pp. 1–22, 2015. https://doi.org/10.1016/j.comnet.2015.10.006

- J. Chase, R. Kaewpuang, Y. Wen, and D. Niyato, “Joint Virtual Machine and Bandwidth Allocation in Software Defined Network (SDN) and Cloud Computing Environments,” pp. 2969–2974, 2014.

- H. Tan, L. Huang, Z. He, Y. Lu, and X. He, “DMVL: An I/O bandwidth dynamic allocation method for virtual networks,” J. Netw. Comput. Appl., vol. 39, pp. 104–116, 2014. https://doi.org/10.1016/j.jnca.2013.05.010

- X. Chen and S. McClellan, “Improving Bandwidth Allocation in Cloud Computing,” Aug. 2016.

- J. Cao, Z. Ma, J. Xie, X. Zhu, F. Dong, and B. Liu, “Towards tenant demand-aware bandwidth allocation strategy in cloud datacenter,” Futur. Gener. Comput. Syst., 2017. https://doi.org/10.1016/j.future.2017.06.005

- H. Yu, J. Yang, H. Wang, and H. Zhang, “Towards predictable performance via two-layer bandwidth allocation,” J. Parallel Distrib. Comput., vol. 126, pp. 34–47, 2019. https://doi.org/10.1016/j.jpdc.2018.11.013

- M. Balaji, C. Aswani, and G. S. V. R. K. Rao, “Predictive Cloud resource management framework for enterprise workloads,” J. King Saud Univ. – Comput. Inf. Sci., vol. 30, no. 3, pp. 404–415, 2018. https://doi.org/10.1016/j.jksuci.2016.10.005

- I. Odun-Ayo, A. Falade, and V. Samuel, “Cloud Computing and Open Source Software: Issues and Developments,” vol. I, pp. 140–145, 2018.

- S. C. Mondesire, A. Angelopoulou, S. Sirigampola, and B. Goldiez, “Combining virtualization and containerization to support interactive games and simulations on the cloud,” Simul. Model. Pract. Theory, pp. 1–12, Aug. 2018.

- V. Chang et al., J. Netw. Comput. Appl., vol. 77, pp. 87–105, 2017. https://doi.org/10.1016/j.jnca.2016.10.008

- Broadband Internet Technical Advisory Group (BITAG) Technical Working Group, “Port Blocking,” Uniform Agreement Report, Aug. 2013.

- S. Mustafa, B. Nazir, A. Hayat, R. Khan, and S. A. Madani, “Resource management in cloud computing: Taxonomy, prospects, and challenges,” Comput. Electr. Eng., vol. 47, pp. 186–203, 2015. https://doi.org/10.1016/j.compeleceng.2015.07.021

- D. Ardagna, B. Panicucci, M. Trubian, and L. Zhang, “Energy-aware autonomic resource allocation in multitier virtualized environments,” IEEE Trans. Serv. Comput., vol. 5, pp. 2–19, 2012.

- D. Ardagna, S. Casolari, M. Colajanni, and B. Panicucci, “Dual time-scale distributed capacity allocation and load redirect algorithms for cloud systems,” J. Parallel Distrib. Comput., vol. 72, pp. 796–808, 2012.

- C. Li and L. Li, “Multi-layer resource management in cloud computing,” J. Netw. Syst. Manage., 2013. https://doi.org/10.1007/s10922-012-9261-1

- S. Ali, S. Jing, and S. Kun, “Profit-aware DVFS enabled resource management of IaaS cloud,” Int. J. Comput. Sci. Issues (IJCSI), vol. 10, pp. 237–247, 2013.

- R. K. Grewal and P. K. Pateriya, “A rule-based approach for effective resource provisioning in hybrid cloud environment,” Adv. Intell. Syst. Comput., vol. 203, pp. 41–57, 2013.

- Y. Ge, Y. Zhang, Q. Qiu, and Y. Lu, “A game theoretic resource allocation for overall energy minimization in mobile cloud computing system,” in Proceedings of the 2012 ACM/IEEE International Symposium on Low Power Electronics and Design (ISLPED ’12), pp. 279–284, 2012.

- Zscaler, “Zscaler Bandwidth Control,” data sheet.

- X. Xu, Q. Zhang, S. Maneas, S. Sotiriadis, and C. Gavan, “VMSAGE: A virtual machine scheduling algorithm based on the gravitational effect for green Cloud computing,” Simul. Model. Pract. Theory, pp. 1–17, Jun. 2018. https://doi.org/10.1016/j.simpat.2018.10.006
