Effects of Educational Support Robots using Sympathy Expression Method with Body Movement and Facial Expression on the Learners in Short and Long-term Experiments

Volume 4, Issue 2, Page No 183-189, 2019

Author’s Name: Yuhei Tanizaki1,a), Felix Jimenez2, Tomohiro Yoshikawa1, Takeshi Furuhashi1, Masayoshi Kanoh3


1Graduate School of Engineering, Nagoya University, 464-8603, Japan

2Information Science and Technology, Aichi Prefectural University, 480-1198, Japan

3Engineering, Chukyo University, 466-8666, Japan

a)Author to whom correspondence should be addressed. E-mail: tanizaki@cmplx.cse.nagoya-u.ac.jp

Adv. Sci. Technol. Eng. Syst. J. 4(2), 183-189 (2019); DOI: 10.25046/aj040224


Keywords: Educational-Support Robot, Sympathy Expression Method, Body Motion


Recently, educational-support robots have attracted increasing attention as learning-support tools. Previous studies used the sympathy expression method, in which the robot expresses emotions in sympathy with the learner; however, the robots in those studies expressed emotions through facial expressions only. To date, no study has combined body movements with facial expressions in the sympathy expression method. In this paper, we therefore examine, in two experiments, how two types of robots that differ in their method of expressing emotions affect learners.

Received: 19 January 2019, Accepted: 08 March 2019, Published Online: 28 March 2019

1. Introduction

Recent developments in robotics have prompted an increase in educational-support robots that assist in studying. For instance, robots support students at school [1] or help them learn English [2]. Koizumi et al. introduced a robot in a "watching over" role in situations where children learn while discussing the programming that controls the assembly and motion of a car robot built from Lego blocks [3]. Our research focuses on educational-support robots that learn together with people. A serious problem for these robots is that learners get tired of them because they find the robots' behavior monotonous [4], [5]. To address this problem, previous research developed a sympathy expression method that allows a robot to express emotions sympathetic to learners [6]. Through subject experiments, that research reported that a robot using the sympathy expression method reduced the monotony of learning and the learners' tiredness. However, the sympathy expression method has been studied only for robots that express emotions by changing facial expressions. In the area of human-robot interaction, earlier research found that a robot's body motions are useful in interactions between humans and robots as well as in expressing emotions [7]. Thus, a sympathy expression method that uses both facial expression changes and body motions may be better than one based on facial expression changes alone. This paper reports Experiment 1, which measured short-term impressions, and Experiment 2, which measured studying effects and long-term impressions.

2. Sympathy expression method

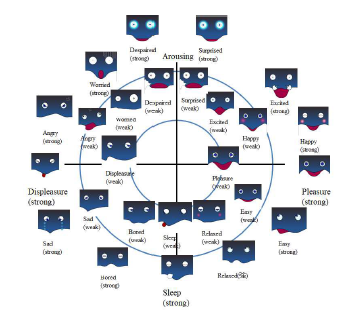

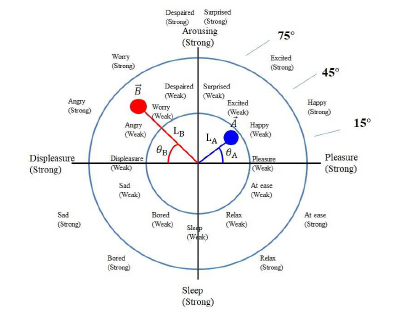

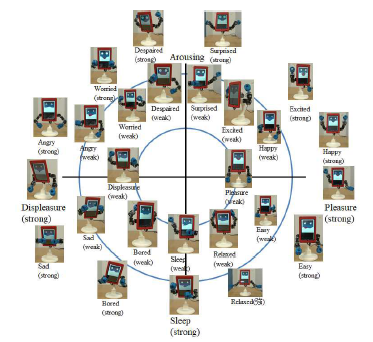

The sympathy expression method is based on Russell's circumplex model of affect (Fig. 1). The method expresses emotions in the circumplex model using the correct-answer vector $\vec{A}$ and the incorrect-answer vector $\vec{B}$, so that learners believe the robot sympathizes with them. If the learner answers a question correctly, the robot expresses an emotion using $\vec{A}$; for an incorrect answer, it expresses an emotion using $\vec{B}$. $\vec{A}$ moves in the area $0 \le L_A \le 1.0$ and $-90^\circ \le \theta_A \le 90^\circ$. $\vec{B}$ moves in the area $-1.0 \le L_B \le 0$ and $-90^\circ \le \theta_B \le 90^\circ$. $L\cos\theta$ gives the position on the "Pleasure-Displeasure" axis, and $L\sin\theta$ gives the position on the "Arousing-Sleep" axis. The emotion vectors move as follows [6].

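$$
\begin{aligned}
&\textbf{if } (\text{learners solve the problem correctly}) \\
&\qquad L_A \leftarrow L_A + 0.2, \quad L_B \leftarrow L_B + 0.2 \\
&\textbf{else} \\
&\qquad L_A \leftarrow L_A - 0.2, \quad L_B \leftarrow L_B - 0.2 \\
&\textbf{if } (\text{the time of answer} < \text{reference time}) \\
&\qquad \textbf{if } (\text{learners solve the problem correctly}) \quad \theta_A \leftarrow \theta_A + 15 \\
&\qquad \textbf{else} \quad \theta_B \leftarrow \theta_B + 15 \\
&\textbf{else} \\
&\qquad \textbf{if } (\text{learners solve the problem correctly}) \quad \theta_A \leftarrow \theta_A - 15 \\
&\qquad \textbf{else} \quad \theta_B \leftarrow \theta_B - 15
\end{aligned}
$$

Figure 1: Sympathy expression method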

In these experiments, the reference time is the learner's answer time for the preceding question. For the first question of a studying session, the reference time is determined from the average answer time before that session. In the first studying session, however, the reference time is 60. Both robots used in these experiments ran the same sympathy expression method to enable a fair comparison.
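The update rules above can be sketched in code. This is a minimal sketch, not the authors' implementation: the class name `SympathyState`, the zero starting values, and the clamping of each quantity to its stated range are assumptions made for illustration. The step sizes (0.2 and 15 degrees), the ranges, and the axis projections come from the text.

```python
import math
from dataclasses import dataclass

L_STEP = 0.2        # change in vector length per answer (from the text)
THETA_STEP = 15.0   # change in angle per answer, in degrees (from the text)


def clamp(value, low, high):
    """Keep value inside [low, high]; clamping itself is an assumption."""
    return max(low, min(high, value))


@dataclass
class SympathyState:
    # Starting values are assumed; the text does not state them.
    l_a: float = 0.0      # length of correct-answer vector A, in [0, 1]
    theta_a: float = 0.0  # angle of A in degrees, in [-90, 90]
    l_b: float = 0.0      # length of incorrect-answer vector B, in [-1, 0]
    theta_b: float = 0.0  # angle of B in degrees, in [-90, 90]

    def update(self, correct, answer_time, reference_time):
        """Apply one answer and return (pleasure, arousal) to express."""
        # Both lengths move together: up when correct, down when incorrect.
        step = L_STEP if correct else -L_STEP
        self.l_a = clamp(self.l_a + step, 0.0, 1.0)
        self.l_b = clamp(self.l_b + step, -1.0, 0.0)

        # A faster answer than the reference raises the angle, a slower one lowers it.
        turn = THETA_STEP if answer_time < reference_time else -THETA_STEP
        if correct:
            self.theta_a = clamp(self.theta_a + turn, -90.0, 90.0)
            length, theta = self.l_a, self.theta_a
        else:
            self.theta_b = clamp(self.theta_b + turn, -90.0, 90.0)
            length, theta = self.l_b, self.theta_b

        # L*cos(theta) is the Pleasure-Displeasure axis,
        # L*sin(theta) is the Arousing-Sleep axis.
        rad = math.radians(theta)
        return length * math.cos(rad), length * math.sin(rad)


if __name__ == "__main__":
    state = SympathyState()
    print(state.update(correct=True, answer_time=30, reference_time=60))
    print(state.update(correct=False, answer_time=90, reference_time=60))
```

The caller is expected to supply the reference time according to the rule described above, for example by passing the previous answer time on each call.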

3. Robot

3.1. Overview

In this study, we used a tablet robot, "Tabot", whose head consists of a tablet, as shown in Fig. 2. Tabot can display many facial expressions by rendering an agent on the tablet screen. It has 14 degrees of freedom: 3 in the neck, 10 in the arms, and 1 in the legs, so it can perform many body motions. It can express many emotions by combining body motions and facial expressions. In these experiments, two Tabots were used to reduce the effect of the robot's shape.

Figure 2: The Tabot used in the experiment


3.2. Facial expressions and body motions

The face displayed on Tabot's tablet and the changes in its facial expressions were developed based on a specific design. Two types of facial expressions were used for each emotion in the circumplex model. Fig. 3 shows an example of Tabot's facial expressions. Tabot's body motions, in turn, were created on the basis of [8] and [9], which discuss the relation between human emotion and physical activity. Two types of body motions were likewise prepared for each emotion in the circumplex model. Body motions and facial expressions that express the same emotion were combined. We implemented both facial expression alone and the combination of facial expressions and body motions so that emotions can be communicated precisely to the learners. Fig. 4 shows an example of a combined facial expression and body motion of Tabot.

Figure 4: Combination of facial expression and body motion


4. Experiment 1

4.1. Method

The subjects learned with a robot equipped with the sympathy expression method and a studying system. When a subject answered a question, the studying system displayed whether the answer was correct or incorrect. Subjects learned by answering the questions displayed in the studying system. Four university students and eight graduate students in the sciences took part in this experiment. None of the participants had used a Tabot before. The twelve learners used two types of robots. The first type, called "Robot-Facial-Group", expressed emotions using only facial expressions. The second type, called "Robot-Combination-Group", used both facial expressions and body motions. The order in which the robots were used was randomized. The subjects answered 20 questions in each group.

The upper part of Tabot's tablet displayed the facial expressions, and the lower part displayed the studying system. Tabot expressed emotions when the studying system gave either a correct or an incorrect response.

4.2. Overview of the studying system

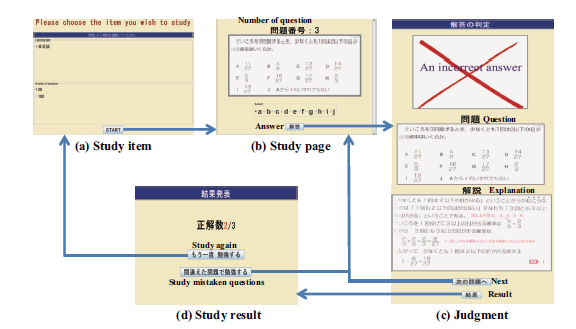

We used a studying system (Fig. 5) that presents math questions from the "Synthetic Personality Inventory 2 (SPI2)." SPI2 is used as a recruitment test for employment, and its math questions are at the junior high school level. University students therefore need no additional knowledge to answer them.

Subjects first log in with an account number. Fig. 5(a) shows the menu of study contents (i.e., math questions). The column for choosing the number of questions is shown under the study items. When a learner selects "20," 20 questions are shown in random order. Repeating the same selection displays 20 different questions, and this can be repeated until all 100 questions have been displayed. Learners can thus answer all the questions within the chosen study item. When a subject selects a study content and the number of questions, the studying screen (Fig. 5(b)) appears and the studying process begins. The subject chooses an answer from the selection list, and the system then displays whether the answer is correct, as shown in Fig. 5(c). When the subject selects "Next" in Fig. 5(c), the system moves on to the next question. When the subject selects "Result" in Fig. 5(c), or has solved all the questions, the system moves on to the results page (Fig. 5(d)), which presents the number of correct answers. When the subject selects "Study again," the menu of study items is displayed (Fig. 5(a)). When the subject selects "Study incorrect answers," the study page presents the questions that were answered incorrectly (Fig. 5(b)).
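The behavior of showing random questions without repeats until all 100 have appeared can be sketched as a shuffled pool served in batches. This is an illustrative sketch only: the class name `QuestionPool` and the integer question IDs are hypothetical, and reshuffling once the pool is exhausted is an assumption, since the text does not say what happens after all questions have been shown.

```python
import random


class QuestionPool:
    """Serve questions in random order without repeats within one pass."""

    def __init__(self, question_ids, seed=None):
        self._all = list(question_ids)
        self._rng = random.Random(seed)
        self._remaining = []

    def next_batch(self, size):
        # Assumption: start a fresh shuffled pass when nothing is left.
        if not self._remaining:
            self._remaining = self._all[:]
            self._rng.shuffle(self._remaining)
        batch = self._remaining[:size]
        self._remaining = self._remaining[size:]
        return batch


if __name__ == "__main__":
    pool = QuestionPool(range(100), seed=0)
    first = pool.next_batch(20)
    second = pool.next_batch(20)
    # The two batches share no question.
    print(len(set(first) & set(second)))
```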

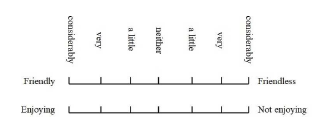

4.3. Evaluation criterion

Impressions were evaluated with the rating scale method, a quantitative evaluation of impressions, as shown in Fig. 6. The rating scale consists of 14 items, each a pair of opposing descriptions, e.g., "Friendly – Friendless," "Emotional – Intelligent," "Be pleased with me – Be not pleased with me," and "Enjoying studying – Not enjoying studying." Each item is scored from 1 to 7; in Fig. 6, the leftmost score is 7 and the rightmost is 1. For each group, subjects answered this questionnaire at the end of studying. Subjects also wrote general comments about their impressions. We defined the sum of the scores of all items as the good-impression score and conducted a paired t-test on it.
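The scoring and the paired comparison described above can be sketched as follows. This is a minimal sketch under stated assumptions: the function names are hypothetical, the numbers in the usage example are placeholders rather than data from the experiment, and only the t statistic and its degrees of freedom are computed (a p-value would additionally need a t-distribution table or a statistics library).

```python
import math
import statistics

N_ITEMS = 14                 # number of questionnaire items (from the text)
MIN_SCORE, MAX_SCORE = 1, 7  # rating range of each item (from the text)


def impression_score(ratings):
    """Sum the 14 item ratings into one good-impression score."""
    if len(ratings) != N_ITEMS:
        raise ValueError("expected 14 ratings")
    if any(not MIN_SCORE <= r <= MAX_SCORE for r in ratings):
        raise ValueError("each rating must be between 1 and 7")
    return sum(ratings)


def paired_t_statistic(scores_x, scores_y):
    """Return (t, degrees of freedom) for a paired t-test.

    scores_x[i] and scores_y[i] must come from the same subject.
    """
    if len(scores_x) != len(scores_y) or len(scores_x) < 2:
        raise ValueError("need two equally long samples of at least 2")
    diffs = [x - y for x, y in zip(scores_x, scores_y)]
    n = len(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation of differences
    if sd == 0:
        raise ValueError("t is undefined when all differences are equal")
    t = statistics.mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Placeholder scores for illustration only, not experimental data.
    combination = [60, 62, 58, 65]
    facial = [55, 60, 57, 59]
    print(paired_t_statistic(combination, facial))
```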

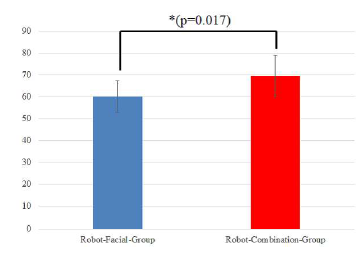

4.4. Result

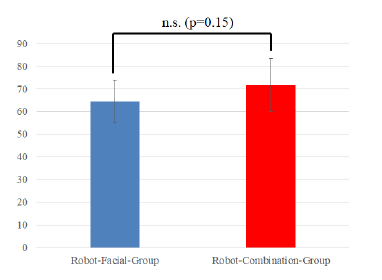

Fig. 7 shows the average impression score of the two groups. The score of the Robot-Combination-Group is higher than that of the Robot-Facial-Group, and a paired t-test showed that the difference is significant. These results show that a robot that expresses emotions using both body motions and facial expressions can give learners a better impression than one that uses facial expressions only.

4.5. Discussion

The results of the experiment indicate that a sympathy expression method that expresses emotions using both body motions and facial expressions can give learners a better impression than one that uses facial expressions only. In the general comments, one subject stated, "It was difficult for me to look at the robot's facial expressions." This suggests that some learners may not have noticed the robot's facial expression changing because they were concentrating on the questions in the studying system. Body motions are presumably harder to overlook, which would explain why the impression made by the Robot-Combination-Group differed from that made by the Robot-Facial-Group.

Figure 6: Examples of the rating scale method


5. Experiment 2

5.1. Method

The subjects learned with a robot equipped with the sympathy expression method and a studying system. When a subject answered a question, the studying system showed whether the answer was correct or incorrect. Subjects learned by answering the questions displayed by the studying system.

Twenty university students who had never studied with Tabot took part in this experiment. They were divided into two groups of 10 students each: "Robot-Facial-Group" and "Robot-Combination-Group." The subjects in the Robot-Facial-Group studied with a Tabot that expressed emotions using facial expressions only. The subjects in the Robot-Combination-Group studied with a Tabot that expressed emotions using a combination of facial expressions and body motions. The subjects studied math with the studying system for 40 minutes per session, 2 or 3 times a week, for 1 month, for a total of 12 sessions. In the 1st and 12th sessions, subjects used the studying system on their own to measure their ability before and after studying; these sessions gave the pre-test and post-test scores. From the 2nd to the 11th session, subjects learned using the studying system with Tabot.

The upper side of Tabot’s tablet showed facial expressions. The lower side of Tabot’s tablet showed the studying system. Tabot expressed emotions when the studying system gave a correct or an incorrect response.

The robot and studying system used in this experiment were the same as those used in Experiment 1.

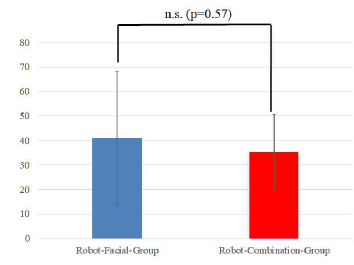

Figure 7: Impression score in Experiment 1

5.2. Evaluation criterion

The studying effect was measured by the improvement score, defined as the difference between the post-test score and the pre-test score. Each of these scores was calculated from 100 questions presented by the studying system. Impressions were evaluated with the same rating scale method as in Experiment 1. In both groups, subjects answered the questionnaire after the 11th studying session. We defined the sum of the scores of all items as the good-impression score. We conducted Welch's t-test to compare the studying effect and the impression evaluation between the groups. Because two tests were conducted at an overall significance level of 5%, we adjusted the per-test significance level to 0.025 using the Bonferroni method.
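The evaluation steps above can be sketched with the standard library alone. This is an illustrative sketch, not the authors' analysis code: the function names are hypothetical, the sample values in the usage example are placeholders rather than experimental data, and only Welch's t statistic and the Welch-Satterthwaite degrees of freedom are computed (obtaining a p-value would require a t-distribution table or a statistics library).

```python
import math
import statistics


def improvement_scores(pre, post):
    """Per-subject improvement: post-test score minus pre-test score."""
    return [after - before for before, after in zip(pre, post)]


def welch_t(sample_x, sample_y):
    """Return (t, degrees of freedom) for Welch's unequal-variance t-test."""
    n_x, n_y = len(sample_x), len(sample_y)
    # Squared standard errors of the two sample means.
    se2_x = statistics.variance(sample_x) / n_x
    se2_y = statistics.variance(sample_y) / n_y
    t = (statistics.mean(sample_x) - statistics.mean(sample_y)) / math.sqrt(se2_x + se2_y)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_x + se2_y) ** 2 / (se2_x ** 2 / (n_x - 1) + se2_y ** 2 / (n_y - 1))
    return t, df


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance level after Bonferroni correction."""
    return alpha / n_tests


if __name__ == "__main__":
    # Two tests (improvement score and impression score) at an overall 5% level.
    print(bonferroni_alpha(0.05, 2))
    # Placeholder samples for illustration only, not experimental data.
    print(welch_t([1, 2, 3, 4, 5], [2, 3, 4, 5, 6]))
```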

5.3. Result

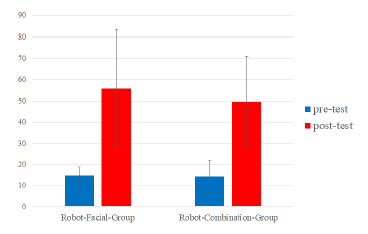

5.3.1. Improvement score

Fig. 8 shows the average pre-test and post-test scores of the two groups, and Fig. 9 shows the average improvement scores. Fig. 9 indicates that the improvement score of the Robot-Facial-Group is higher than that of the Robot-Combination-Group. We conducted Welch's t-test to compare the studying effects; there was no significant difference in the improvement score between the two groups.

Figure 8: Score of pre- and post-test


5.3.2. Questionnaire

Fig. 10 shows the average impression score of the two groups. The average impression score of the Robot-Combination-Group is higher than that of the Robot-Facial-Group. We conducted Welch's t-test to compare the impression scores; there was no significant difference between the two groups.

Figure 10: Impression score in Experiment 2

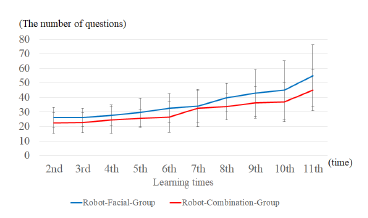

5.4. Discussion

The average studying effect was higher in the Robot-Facial-Group than in the Robot-Combination-Group. Table 1 and Table 2 show the number of answered questions in the Robot-Facial-Group and the Robot-Combination-Group, respectively, where the number of answered questions is the number of questions a subject answered in each studying session. The averages are shown in Fig. 11. The average number of answered questions was higher in the Robot-Facial-Group than in the Robot-Combination-Group. Expressing an emotion with a body motion takes longer than expressing it with a change of facial expression, and the robot cannot move on to the next problem while it is expressing an emotion. This produced the difference in the average number of answers between the two groups. Moreover, we determined that the studying time in the Robot-Combination-Group was shorter than that in the Robot-Facial-Group. We believe that the studying effect may have been influenced by the number of answered questions.

In contrast, the average questionnaire score indicates that the robot in the Robot-Combination-Group gave a better impression than that in the Robot-Facial-Group. This is consistent with earlier research reporting that a robot's body motions convey emotion effectively [7]. We therefore believe that learners felt more sympathy from the robot expressing emotions using body motions than from the robot expressing emotions using facial expressions only.

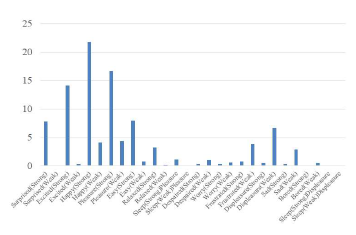

However, in this experiment, we could not find a significant difference in the impression score between the two groups. We therefore calculated the percentage of each expressed emotion. Fig. 12 shows the percentage of emotions expressed in the Robot-Combination-Group. Only three of the 28 types of emotion were expressed often by the robots in the Robot-Combination-Group, so learners may have felt that the robots in this group always expressed the same emotions. This may be why the robots that expressed emotions using body motions and facial expressions did not differ significantly from the robots that expressed emotions using facial expressions only.
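The share of each expressed emotion can be computed from a log of expressions, as sketched below. This is a hedged illustration: the function name and the emotion labels `"e1"`, `"e2"`, `"e3"` are hypothetical placeholders, and the log format is an assumption, since the text does not describe how expressed emotions were recorded.

```python
from collections import Counter


def emotion_percentages(expressed):
    """Map each emotion label to its share (in percent) of all expressions."""
    counts = Counter(expressed)
    total = sum(counts.values())
    if total == 0:
        return {}
    # most_common() orders the result from the most to the least frequent.
    return {emotion: 100.0 * n / total for emotion, n in counts.most_common()}


if __name__ == "__main__":
    # Placeholder log for illustration only, not experimental data.
    log = ["e1", "e1", "e2", "e3"]
    print(emotion_percentages(log))
```

A distribution dominated by a few labels would correspond to the situation described above, where learners may perceive the robot as always expressing the same emotions.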

Table 1: Number of answered questions per studying session in the Robot-Facial-Group

| Subject No. | 2nd | 3rd | 4th | 5th | 6th | 7th | 8th | 9th | 10th | 11th |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 36 | 29 | 41 | 48 | 52 | 51 | 50 | 59 | 61 | 70 |
| 2 | 26 | 23 | 18 | 25 | 38 | 41 | 39 | 39 | 38 | 38 |
| 3 | 20 | 14 | 25 | 24 | 27 | 30 | 36 | 26 | 27 | 38 |
| 4 | 21 | 24 | 25 | 21 | 19 | 20 | 31 | 27 | 33 | 44 |
| 5 | 25 | 32 | 29 | 36 | 29 | 38 | 46 | 53 | 70 | 80 |
| 6 | 24 | 26 | 22 | 21 | 31 | 29 | 31 | 36 | 39 | 41 |
| 7 | 31 | 31 | 29 | 38 | 38 | 40 | 50 | 72 | 79 | 93 |
| 8 | 16 | 18 | 20 | 17 | 18 | 14 | 22 | 23 | 14 | 30 |
| 9 | 38 | 31 | 36 | 36 | 33 | 36 | 51 | 40 | 36 | 45 |
| 10 | 24 | 33 | 31 | 30 | 40 | 39 | 41 | 55 | 53 | 69 |

Table 2: Number of answered questions per studying session in the Robot-Combination-Group

| Subject No. | 2nd | 3rd | 4th | 5th | 6th | 7th | 8th | 9th | 10th | 11th |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 24 | 32 | 39 | 26 | 32 | 37 | 38 | 47 | 40 | 43 |
| 2 | 19 | 18 | 20 | 15 | 27 | 28 | 30 | 40 | 45 | 54 |
| 3 | 25 | 25 | 25 | 29 | 30 | 35 | 40 | 43 | 36 | 37 |
| 4 | 22 | 18 | 24 | 23 | 32 | 33 | 30 | 38 | 43 | 41 |
| 5 | 21 | 23 | 27 | 24 | 30 | 26 | 32 | 28 | 36 | 40 |
| 6 | 18 | 19 | 17 | 27 | 22 | 17 | 23 | 30 | 35 | 26 |
| 7 | 17 | 17 | 24 | 26 | 20 | 28 | 29 | 29 | 34 | 41 |
| 8 | 23 | 28 | 26 | 29 | 47 | 38 | 52 | 51 | 63 | 66 |
| 9 | 17 | 18 | 23 | 30 | 40 | 28 | 38 | 37 | 47 | 46 |
| 10 | 24 | 23 | 28 | 23 | 38 | 39 | 49 | 59 | 81 | 83 |

Figure 11: The average number of answering questions
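The per-session averages can be recomputed from the values in Tables 1 and 2. The sketch below copies those table values verbatim; the variable and function names are hypothetical, and the grand mean over all sessions is an extra summary added here for illustration rather than a figure reported in the paper.

```python
import statistics

SESSIONS = ["2nd", "3rd", "4th", "5th", "6th", "7th", "8th", "9th", "10th", "11th"]

# One row per subject, one column per session (values from Table 1).
FACIAL = [
    [36, 29, 41, 48, 52, 51, 50, 59, 61, 70],
    [26, 23, 18, 25, 38, 41, 39, 39, 38, 38],
    [20, 14, 25, 24, 27, 30, 36, 26, 27, 38],
    [21, 24, 25, 21, 19, 20, 31, 27, 33, 44],
    [25, 32, 29, 36, 29, 38, 46, 53, 70, 80],
    [24, 26, 22, 21, 31, 29, 31, 36, 39, 41],
    [31, 31, 29, 38, 38, 40, 50, 72, 79, 93],
    [16, 18, 20, 17, 18, 14, 22, 23, 14, 30],
    [38, 31, 36, 36, 33, 36, 51, 40, 36, 45],
    [24, 33, 31, 30, 40, 39, 41, 55, 53, 69],
]

# One row per subject, one column per session (values from Table 2).
COMBINATION = [
    [24, 32, 39, 26, 32, 37, 38, 47, 40, 43],
    [19, 18, 20, 15, 27, 28, 30, 40, 45, 54],
    [25, 25, 25, 29, 30, 35, 40, 43, 36, 37],
    [22, 18, 24, 23, 32, 33, 30, 38, 43, 41],
    [21, 23, 27, 24, 30, 26, 32, 28, 36, 40],
    [18, 19, 17, 27, 22, 17, 23, 30, 35, 26],
    [17, 17, 24, 26, 20, 28, 29, 29, 34, 41],
    [23, 28, 26, 29, 47, 38, 52, 51, 63, 66],
    [17, 18, 23, 30, 40, 28, 38, 37, 47, 46],
    [24, 23, 28, 23, 38, 39, 49, 59, 81, 83],
]


def session_means(table):
    """Average over subjects for each session (column-wise mean)."""
    return [statistics.mean(column) for column in zip(*table)]


def overall_mean(table):
    """Average over all subjects and all sessions."""
    return statistics.mean(value for row in table for value in row)


if __name__ == "__main__":
    for name, f, c in zip(SESSIONS, session_means(FACIAL), session_means(COMBINATION)):
        print(f"{name}: facial={f:.1f} combination={c:.1f}")
    print(overall_mean(FACIAL), overall_mean(COMBINATION))
```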


Figure 12: The percentage of expressed emotion


6. Conclusion

This paper examined the effects of the sympathy expression method using body motions and facial expressions in short-term and long-term experiments. We used "Tabot", a tablet-type robot whose head is a tablet and which implements the sympathy expression method. In the experiments, we used two types of Tabot: robots that express emotions using facial expressions only and robots that use a combination of body motions and facial expressions. The results of Experiment 1 suggest that the robot that expressed emotions using body motions and facial expressions made a better impression on the learners than the robot that used facial expressions only. In Experiment 2, the robots that used facial expressions only yielded a higher average improvement score than those that used the combination of body motions and facial expressions, while the robots that used the combination yielded a higher average impression score; neither difference was statistically significant. Moreover, robots that express emotions using body motions and facial expressions have issues relating to the time needed to express emotions and the variety of emotions they express.

In the future, we plan to improve the algorithm for sympathy expression in a way that solves the problem of time of expressing emotions and the kind of emotions expressed by the robot.

Acknowledgment

This work was supported by MEXT KAKENHI (Grant-in-Aid for Scientific Research (B), No.16H02889)

References

- [1] T. Kanda, T. Hirano, D. Eaton, and H. Ishiguro, "Interactive robots as social partners and peer tutors for children: A field trial," Hum.-Comput. Interact., vol. 10, no. 1, pp. 61–84, 2004.

- [2] O.H. Kwon, S.Y. Koo, Y.G. Kim, and D.S. Kwon, "Telepresence robot system for English tutoring," IEEE Workshop on Advanced Robotics and its Social Impacts, pp. 152–155, 2010.

- [3] S. Koizumi, T. Kanda, and T. Miyashita, "Collaborative studying Experiment with Social Robot (in Japanese)," Journal of the Robotics Society of Japan, vol. 29, no. 10, pp. 902–906, 2011.

- [4] T. Kanda, T. Hirono, E. Daniel, and H. Ishiguro, "Participation of Interactive Humanoid Robots in Human Society: Application to Foreign Language Education," Journal of the Robotics Society of Japan, vol. 22, no. 5, pp. 636–647, 2004.

- [5] F. Jimenez, M. Kanoh, T. Yoshikawa, and T. Furuhashi, "Effects of Collaborative studying with Robots That prompts Constructive Interaction," Proceedings of IEEE International Conference on Systems, Man and Cybernetics, pp. 2983–2988, 2014.

- [6] F. Jimenez, T. Yoshikawa, T. Furuhashi, and M. Kanoh, "Effects of Collaborative studying with Robots using Model of Emotional Expressions," Journal of Japan Society for Fuzzy Theory and Intelligent Informatics, vol. 28, no. 4, pp. 700–704, 2016.

- [7] T. Kanda, M. Imai, T. Ono, and H. Ishiguro, "Numerical Analysis of Body Movements on Human-Robot Interaction," IPSJ Journal, Japan, vol. 44, no. 11, pp. 2699–2709, 2003.

- [8] Y. Toyama and L. Ford, "US-Japan Body Talk augmented New version Gestures _ Facial expressions _ Gesture's dictionary," Sanseido, Japan, 2016.

- [9] D. Morris and Y. Toyama, "Body talk New style version World gesture dictionary," Sanseido, Japan, 2016.
