Building an Online Interactive 3D Virtual World for AquaFlux and Epsilon

Volume 3, Issue 6, Page No 501-514, 2018

Authors: Omar Al Hashimi a), Perry Xiao


London South Bank University, School of Engineering, SE1 0AE, UK

a) Author to whom correspondence should be addressed. E-mail: alhashio@lsbu.ac.uk

Adv. Sci. Technol. Eng. Syst. J. 3(6), 501-514 (2018); DOI: 10.25046/aj030659


Keywords: 3D modelling, Virtual Reality (VR), AquaFlux and Epsilon, 3ds Max, Web 3D applications, Virtual User Manual (VUM)


In today’s technology, 3D presentation is vital for conveying a realistic and comprehensive understanding of a concept or demonstrating the functionality of a device or tool, especially on the World Wide Web. The field and its continuous enhancement have therefore become one of the dominant topics in web development research. Virtual Reality (VR), combined with a 3D scene and 3D content, is one of the best mechanisms for delivering this realistic ambience to users. AquaFlux and Epsilon are clinical instruments that were designed, built and developed at London South Bank University as research projects for medical and cosmetic purposes. They are now marketed and used in almost 200 institutions internationally. However, instruments of this type often require thorough on-site training, which is costly and time-consuming. There is a real need for a system or application in which the features and functionalities of these two instruments can be illustrated and comprehensively explained to clients and users. A Virtual User Manual (VUM) environment would serve this purpose efficiently, especially if it is presented as 3D content. The newly created system consists of a detailed virtual guide that directs users step by step on how to use the two devices. Presenting this work in an immersive VR environment will help clients, users and trainees to understand fully all the features and characteristics of AquaFlux and Epsilon and to master all their functionalities.

Received: 31 October 2018, Accepted: 16 December 2018, Published Online: 23 December 2018

1. Introduction

This paper is an extension of work originally presented at the Advances in Science and Engineering Technology International Conferences (ASET) 2018 [1]. It presents the development process of a web-based interactive 3D virtual world that illustrates and covers all the steps involved in operating AquaFlux and Epsilon. VR used together with 3D object presentation can efficiently illustrate the features and functions of a newly purchased device in an immersive world that gives viewers a real feel for the experience. The growing shift to VR in online training and education is promoting a new learning concept, in which clients, trainees or students not only gain knowledge but also communicate with each other by changing content in a variety of ways. The main feature of VR is the prospect of social interaction, providing the ability for immediate actions and reactions in real time. VR environments have constantly been associated with 3D modelling; VR is by far one of the best ways to illustrate and show any 3D content, object, scene or model. The word virtualisation generally denotes the separation of a resource or request for a service from the underlying physical delivery of that service. An additional factor that makes virtualisation practical and useful is the interactivity that 3D multimedia applications can provide, particularly when the whole project is displayed online using the WWW [2]. Moreover, users of the current Internet age are ready to shift from 2D online presentations to 3D. The arrival and broad use of 3D web materials have amplified the need for improved 3D technologies that can generate advanced and practical products.

It is therefore appropriate to extend the use of these 3D technologies and interactive environments to new fields, such as e-learning, Virtual Learning Environments (VLE), museums, e-commerce, online training, Learning Management Systems (LMS), tourism, health and the government sector. Adapting these enhanced 3D virtual interactive tools to such fields has greatly improved user understanding and made the experience more stimulating. Online 3D VR worlds and content heighten users’ perceptions because they present physical-world appearances and attributes and allow users to interact with them, immersing users in their environments. A 3D VR world used through the WWW would be as viable as an application used locally. The concept of VR can therefore be incorporated with social media to increase its appeal further. Nonetheless, a VMware report explains that the promising future of 3D/VR technology in everyday applications can be fully realised only if it is joined with the development of competent and simple-to-use ways of building, controlling, searching and presenting interactive 3D multimedia content that can be used by skilled and novice users alike [3]. In this context, London South Bank University’s engineering lab has designed and created AquaFlux and Epsilon, which are clinical instruments. Displaying these medical devices in VR demonstrates their capabilities as they are used in the real world. Xu (2015) describes AquaFlux as:

a new condenser based, closed chamber technology for measuring water vapour flux density from arbitrary surfaces, including in-vivo measurements of transepidermal water loss (TEWL), skin surface water loss (SSWL) and perspiration. It uses a cylindrical measurement chamber [4].

Compared to other technologies, AquaFlux has greater sensitivity, greater repeatability, and most importantly, the measurement results are independent of the external environment. Biox (2014) describes Epsilon as:

a new instrument for imaging dielectric permittivity (Ԑ) of a wide variety of soft materials, including animal and plant tissues, waxes, fats, gels, liquids, and powders. Its proprietary electronics and signal processing transform the sensor’s native non-linear signals into a calibrated permittivity scale for imaging properties like hydration [5].

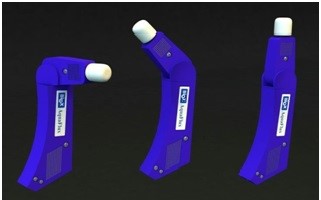

The Epsilon user manual states that “The system consists of a hand-held probe, a parking base, and an in-vitro stand, securely stored in a purpose-designed case” [6]. Both devices are shown in Figure 1.

Figure. 1. Medical instruments AquaFlux (L), Epsilon (R)


2. The utilization of 3D contents and related work

After the advent of Web 2.0, there has been a major improvement in web applications. Web 2.0 supports various functions, such as teamwork, communication and connection between computer and Internet users. 3D immersive virtual worlds (3DVW), which are computer-generated, online, graphical, multimedia 3D worlds, are one of the important applications of Web 2.0 [7]. The main idea behind building an object in 3D is to convey the reality of the content to users. 3D modelling is an essential element in the area of VR technology [8]. The development of 3D content can be divided into three main steps:

- 3D modelling

- Layout and animation

- Rendering

Normally, 3D models are created using 3D modelling software, such as 3ds Max or Maya, or their open source equivalents, although open source tools tend to be less complex, with fewer advanced features. A 3D scene model is created from geometrical shape objects, for example rectangles, triangles, circles and cones. E-learning content is placed in hypermedia documents, which makes it difficult to integrate 3D content into HTML files. Web browsers are not yet designed to deal with 3D data files, which are normally large and require a large amount of computational resources. Proposals for handling 3D on the web take time and effort to digest. Therefore, the readily available Mozilla and Google proposals can be adopted as standards. Mozilla and Google use the same technologies: OpenGL interfaced with JavaScript [9].

3D objects are widely used in science, technology, engineering, health, education, cosmetics, simulation, e-learning and other areas. In these areas they are combined with VR to simulate the displayed content as it is applied and practised in the physical world. 3D content and VR also play a very active role in the Vocational Education System, a system specifically designed to support industry and manufacturers by offering vocational and technical courses delivered in a VR ambience and a Personal Learning Environment (PLE), which allows learners to control and manage their own learning experience (distance learning). Kotsilieris and Dimopoulou (2013) point out:

The development of 3D Virtual Worlds plays an important role in e-learning and distance learning. Through three main features: I) creating the illusion of a 3D environment, II) support the application of avatars as virtual representations of human users, III) offer communication and interaction tools to their users. The evolution of virtual worlds is a result of a rapidly evolving field of electronic games. In brief, Virtual Worlds are designed to offer real-time communication tools, interaction capabilities and collaboration [10].

Some up-and-coming technologies will overcome some of the difficulties currently faced in areas such as education, technology, health and engineering. These include computer graphics, augmented reality, computational dynamics and virtual worlds. Lately, a number of novel ideas related to the future of education have emerged in the literature. As pointed out by Potkonjak, Gardner, Callaghan, Mattila, Guetl, Petrović and Jovanović (2016):

Technological examples mostly related are e-learning, virtual laboratories, VR and virtual worlds [11].

Accordingly, the benefit of adopting VR in the medical and healthcare sectors is that it can teach and train medical students, trainees and clients to use medical devices and instruments and to carry out certain medical tasks. Web-based and online 3D objects used in medical training tools and environments have been shown to improve the educational process. For example, a web-based virtual medical system of devices for cardiac diagnostic and monitoring functions has been built to help train medical students, qualified health personnel and non-medical staff to use an Electrocardiogram (ECG), an Automated External Defibrillator (AED) and a blood pressure device [12].

Such applications provide an interactive e-learning experience in the medical field. Their main objective is to emulate real patients, anatomic regions and clinical tasks, and to represent the real-life conditions for which the medical tool is built. These virtual environments allow interaction between users and the system, as well as manipulation with very sensitive reactions similar to those of real-life objects. This type of system promotes learning by practising, which makes the whole experience straightforward and enjoyable [12]. Using VR in such projects helps to achieve an extremely immersive experience [13].

Another related work in this regard is the ARCO project (Augmented Representation of Cultural Objects), which is built specifically for the tourism and heritage industry. Its main objective is to build a complete virtual museum comprising a collection of technologies for producing, changing, controlling and demonstrating cultural objects in a VR environment that is accessible globally via the Internet [14].

3. The implementation of the interactive Virtual User Manual (VUM) of the medical instruments

Designing the AquaFlux and Epsilon medical devices was slightly difficult, and there was an array of choices when selecting suitable 3D modelling software. In this project, the modelling tool used is 3D Studio Max (3ds Max), and Adobe Flash CS6 is used to add interactivity between users (clients) and the devices. Other software products, such as Google SketchUp, Blender and Unity, were tried in the early stages of the project. The outcome of that research was the decision to create, build and develop the objects and all 3D scenes in 3ds Max, because of its professional quality and the complete collection of functions and options that it offers.

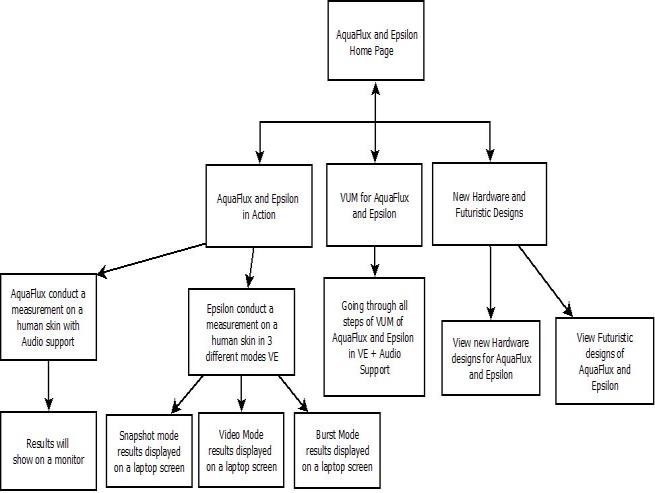

Figure. 2. Flowchart of AquaFlux and Epsilon online 3D Environment


AquaFlux and Epsilon are clinical devices used for skin treatment, belonging to the health and medical sector. Figure 2 shows the workflow diagram, which illustrates all the steps of the AquaFlux and Epsilon 3D virtual environment system and describes the development process of the Virtual User Manual (VUM). On the home page, users have three options. Under AquaFlux and Epsilon in Action, users can perform virtual skin measurements using the instruments. Under VUM of AquaFlux and Epsilon, users can go through all the training steps, with audio and illustrative text support. Under New Hardware and Futuristic Designs, users can view the new hardware designs and futuristic concepts for both medical instruments.
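The three home-page options can be pictured as a simple lookup table. The Python sketch below is only an illustration of that structure: the keys (`in_action`, `vum`, `new_hardware`) and the function `open_option` are hypothetical names introduced here, and the actual system was built in Adobe Flash CS6 rather than Python.

```python
from typing import Dict

# Home-page options as described in the text.
# The dictionary keys are hypothetical identifiers, not names from the real system.
HOME_OPTIONS: Dict[str, str] = {
    "in_action": "AquaFlux and Epsilon in Action: perform virtual skin measurements",
    "vum": "VUM of AquaFlux and Epsilon: training steps with audio and text support",
    "new_hardware": "New Hardware and Futuristic Designs: view new designs and concepts",
}


def open_option(key: str) -> str:
    """Return the description of the selected home-page option."""
    if key not in HOME_OPTIONS:
        raise KeyError(f"unknown home-page option: {key!r}")
    return HOME_OPTIONS[key]
```

Calling `open_option("vum")` returns the description of the training branch; an unrecognised key raises `KeyError`, mirroring the fact that the home page offers exactly three links.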

The health sector is largely connected with the utilisation of leading technologies like 3-dimensional objects and virtual presentation for demonstration, education, testing and performing a number of medical operations and routines. 3D Virtual Worlds (3DVWs) have been used in a variety of applications in the medical sector and applied in health-related activities [7].

3.1. The development and design of AquaFlux and Epsilon using 3ds Max modelling software

The 3ds Max modelling tool can swiftly and professionally produce 3D scenes and objects [15]. As with other 3D development tools, the first step is to create and design the medical tools. AquaFlux and Epsilon each consist of a base and a probe; the base presents some small design challenges, e.g. the cabling input port and, in AquaFlux’s case, buttons that can be switched left or right. The probe and the base were measured with an actual ruler so that the models could be built as realistically as possible. In addition, photographs were used to obtain accurate proportions and sizes for every model, for instance how large the probe is in comparison to a human hand.

Figure. 3. AquaFlux probe measured by a ruler


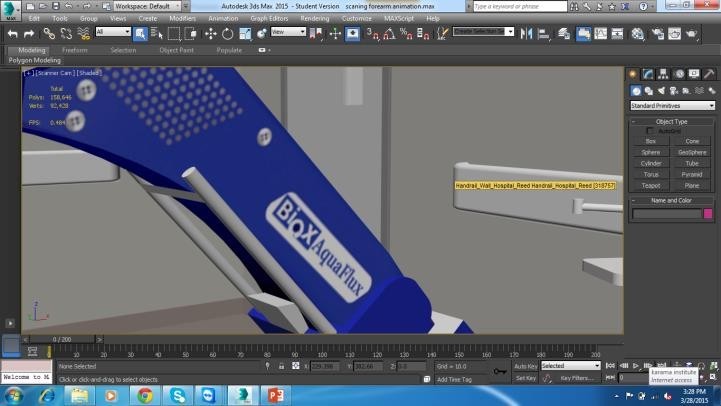

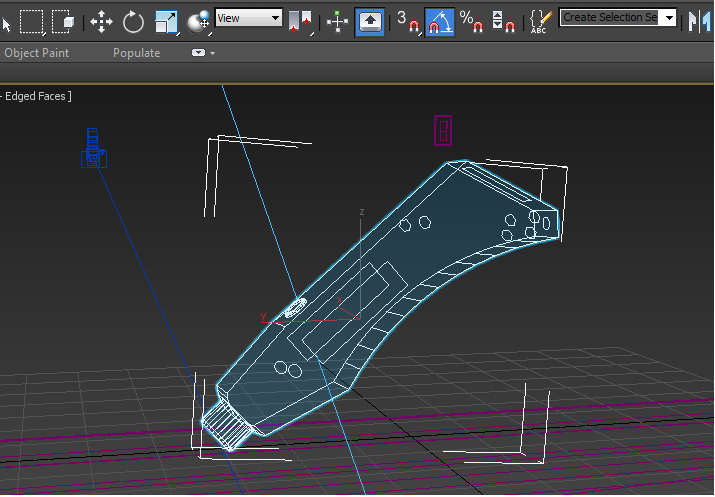

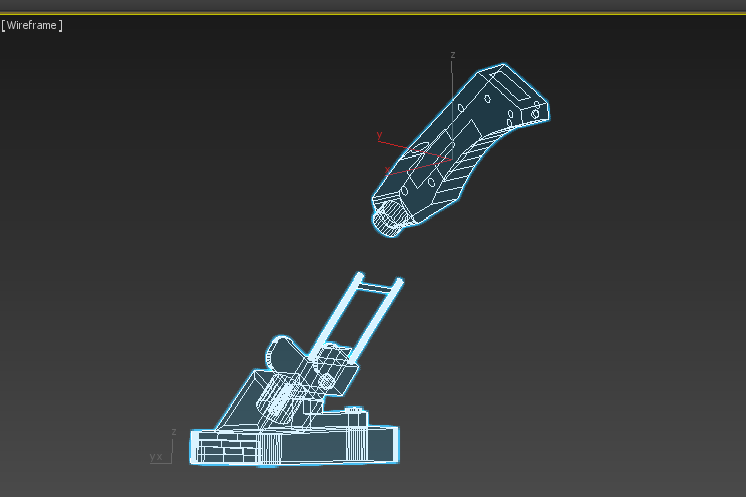

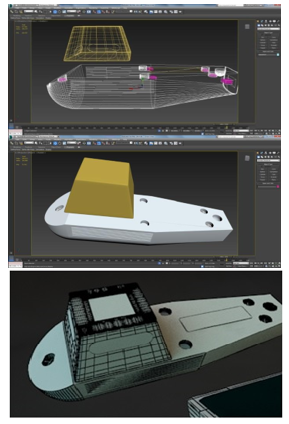

Once the accurate size of every object has been determined, 3ds Max is used to design the medical devices. The modelling software is shown in the following figures for AquaFlux and Epsilon:

Figure. 4. Tools box menu 3ds Max software used to model objects


Figure. 5. AquaFlux probe modelling in 3ds Max


Figure. 6. Snapshot of AquaFlux probe and base design in 3ds Max


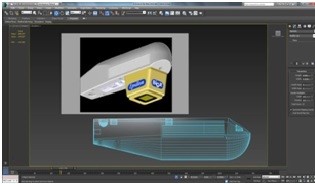

The designer can choose from a variety of object shapes in the modelling menu, e.g. box, cone, sphere, tube and cylinder, and gives names to all parts. As modelling progresses, the developer has to set up a real reference to check how closely the newly created model matches the real object, by placing an image of the real device next to the newly designed one, as shown in Figure 8:

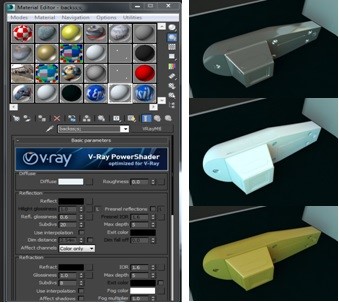

3.2. Adding materials to AquaFlux and Epsilon

Materials and colours are considered early so that they match the reference model and satisfy the client’s requirements. The selection of materials is examined closely in order to minimise rendering time while presenting a realistic product. Materials that are rich in glossiness and reflection consume most of the processing (rendering) time; without materials, however, the model would look very unrealistic to viewers. Vray materials are used in this project, and Vray is the third-party renderer for the whole work. Vray is one of the industry standards for generating specialised pictures. For the Epsilon probe and other objects, a whitish semi-gloss material is used, with a glossiness of 0.6 and 20 subdivisions. These settings were chosen to reduce rendering time, as rendering is a crucial part of any 3D creation project.

Figure. 7. Different stages of modelling Epsilon’s probe in 3ds Max


Figure. 8. The original picture of Epsilon compared to an Epsilon object.


In this project, High Dynamic Range Imaging (HDRI) is used for the background to make the lights and shadows slightly more realistic. HDRI is very valuable for emulating the shadows and lighting of a real environment, which are projected onto the objects in the 3D scene. Because HDRI adds some extra time to the rendering of each scene, the glossiness and reflectivity of the models were lowered slightly to reduce the overall rendering time.

Figure. 9. Colours added to AquaFlux using the material box menu


Figure. 10. Materials menu in 3ds Max (L), Materials added to Epsilon probe (R)

Once the materials have been determined and added, taking into account the time and quality of the work, all 3D content is arranged for the next process: animation. For all the objects in a 3D scene to be animated correctly, all objects in the selected scene must be grouped together via the attach buttons in the command panel, under the Edit Geometry menu. In other words, all objects should be grouped, attached or, as it is sometimes called, fused together. If any object is not linked and is left out of the attached group, the keyframes associated with that model will disappear after this stage and the model will no longer be animated.

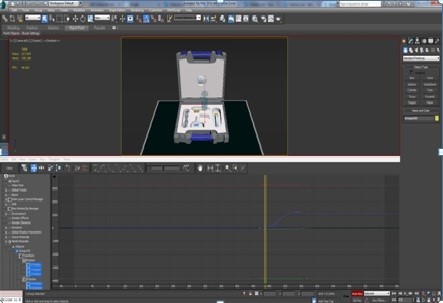

3.3. The process of animating AquaFlux and Epsilon

Animating 3D objects is an essential phase of the project’s design. Almost a third of the project time was spent animating the objects that had been modelled and built earlier. Planning is critical at this stage for each scenario. It should cover every object in the scene: how the object will interact with other objects, its proposed movement, and the path of motion that it will follow in the virtual world. This is a somewhat prolonged process. Unlike character animation, this type of animation is slightly easier, as it does not require bones or additional complicated rigging tools. Objects in this work were animated in two ways: by attaching an object to a group of objects, and by using the collapse utility to merge multiple selected objects into a single object. The necessary points for all movements must be calculated thoroughly before the animation phase. In the animation stage, the timeline is set to 1000 frames at 15 frames per second, because this work is designed specifically for an online environment. The frame rate is reduced to limit the file size of the rendered images that will be used on the WWW. The curve editor menu options are applied to animate all objects and scenes; by modifying curve points, the speed, timing and movement of all animated models can be controlled.
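To make the timeline settings concrete, the following Python sketch computes the running time of a 1000-frame timeline at 15 frames per second and interpolates a value between keyframes. It is an illustration only: the function names and the sample keyframe values are hypothetical, and the linear interpolation used here is a simplification of the curve editor in 3ds Max, which also supports non-linear curves.

```python
from bisect import bisect_right
from typing import Dict

TOTAL_FRAMES = 1000  # timeline length stated in the text
FPS = 15             # frame rate stated in the text


def duration_seconds(total_frames: int, fps: int) -> float:
    """Running time of the animation in seconds."""
    return total_frames / fps


def interpolate(keyframes: Dict[int, float], frame: int) -> float:
    """Linearly interpolate a value at `frame` from {frame_number: value} keyframes.

    Frames before the first keyframe or after the last one hold the nearest value.
    """
    frames = sorted(keyframes)
    if frame <= frames[0]:
        return keyframes[frames[0]]
    if frame >= frames[-1]:
        return keyframes[frames[-1]]
    i = bisect_right(frames, frame)       # index of the first keyframe after `frame`
    f0, f1 = frames[i - 1], frames[i]     # surrounding keyframes
    t = (frame - f0) / (f1 - f0)          # fraction of the way from f0 to f1
    return keyframes[f0] + t * (keyframes[f1] - keyframes[f0])
```

With these settings, `duration_seconds(TOTAL_FRAMES, FPS)` gives roughly 66.7 seconds of animation. With hypothetical keyframes `{0: 0.0, 100: 10.0}`, `interpolate` returns `5.0` at frame 50.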

Figure. 11. Adding keyframes (redline) in 3ds Max


Figure. 12. Using the Curve editor in 3ds Max


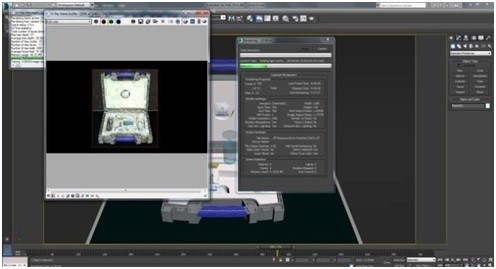

3.4. The process of rendering AquaFlux and Epsilon

After the animation is completed, with keyframes set from a starting position to the point where each object should stop, all objects are ready for the last phase of the modelling process, which is rendering. During rendering, the developer has to monitor all frames being rendered. At this point in the work, it is vital to monitor the speed and time that each object takes to complete rendering. Every frame is checked closely to confirm that it is in the correct position (number) and that all models have the correct shadows and lighting. Occasionally, floating models that went unnoticed during the animation process are spotted. During rendering, a small window pops up showing each square block of pixels as it is rendered, calculating the objects’ materials, colours, shadows, light reflections, etc. In the rendering options box, it is possible to select the format of the rendered images and the file locations from which they will later be exported into Adobe Flash CS6, where interactivity is added by linking all rendered images, scenes and objects.
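Checking that every frame is present at the correct number can be partly automated. The sketch below is a minimal Python illustration, assuming a hypothetical file naming scheme such as `scene_0001.png`; the paper does not state the naming scheme or image format actually used, and this is not the authors' procedure.

```python
import re
from typing import Iterable, List

# Hypothetical naming scheme: any prefix, an underscore, the frame number, then ".png".
FRAME_PATTERN = re.compile(r"_(\d+)\.png$")


def missing_frames(filenames: Iterable[str], first: int, last: int) -> List[int]:
    """Return the frame numbers in [first, last] with no matching rendered file."""
    found = set()
    for name in filenames:
        match = FRAME_PATTERN.search(name)
        if match:
            found.add(int(match.group(1)))
    return [n for n in range(first, last + 1) if n not in found]
```

For instance, given the files `scene_0001.png`, `scene_0002.png` and `scene_0004.png` and the expected range 1 to 5, the function reports frames 3 and 5 as missing.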

Figure. 13. Rendering window after all keyframes and files setup completed


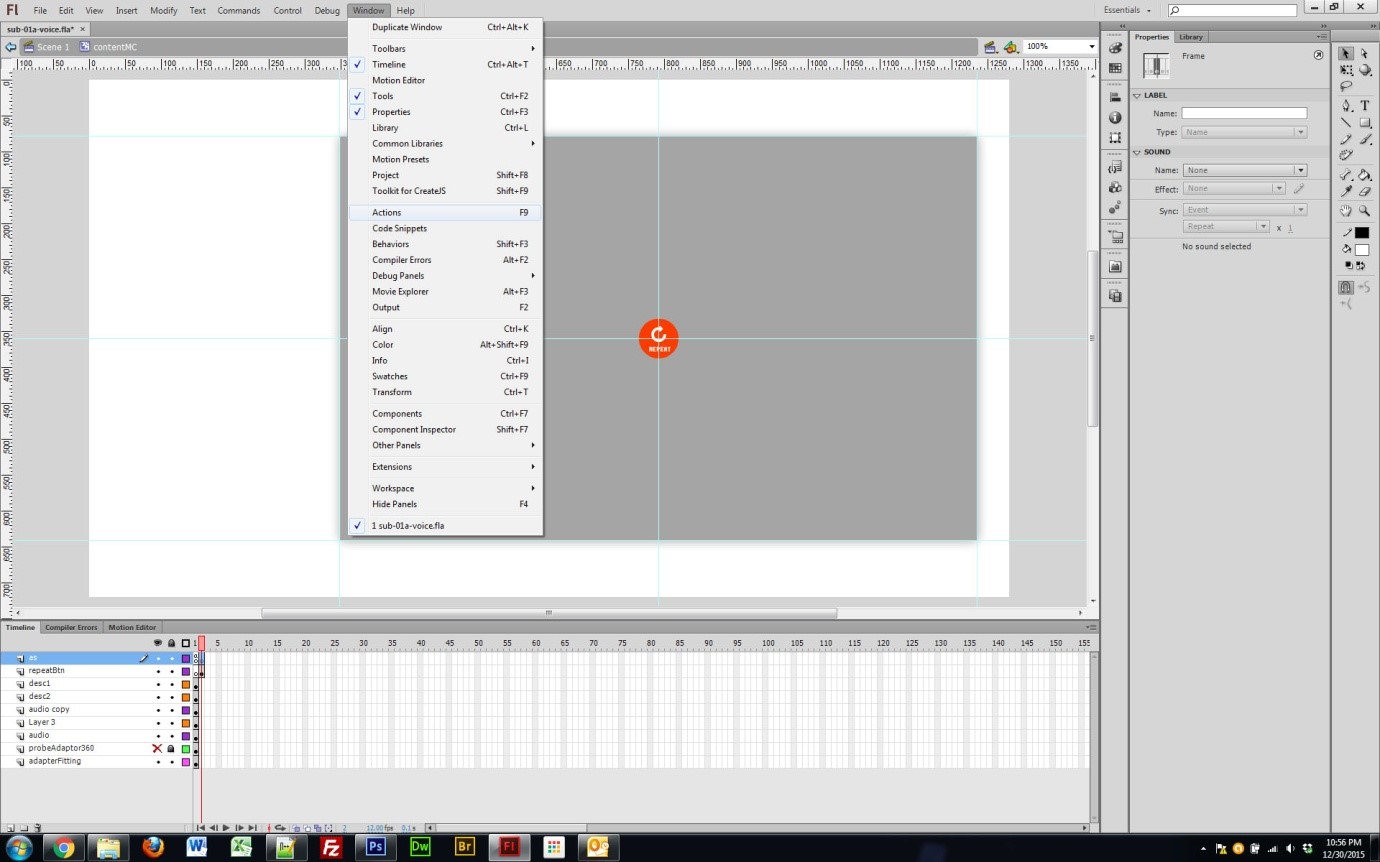

3.5. Creating links and adding interactivity to AquaFlux and Epsilon

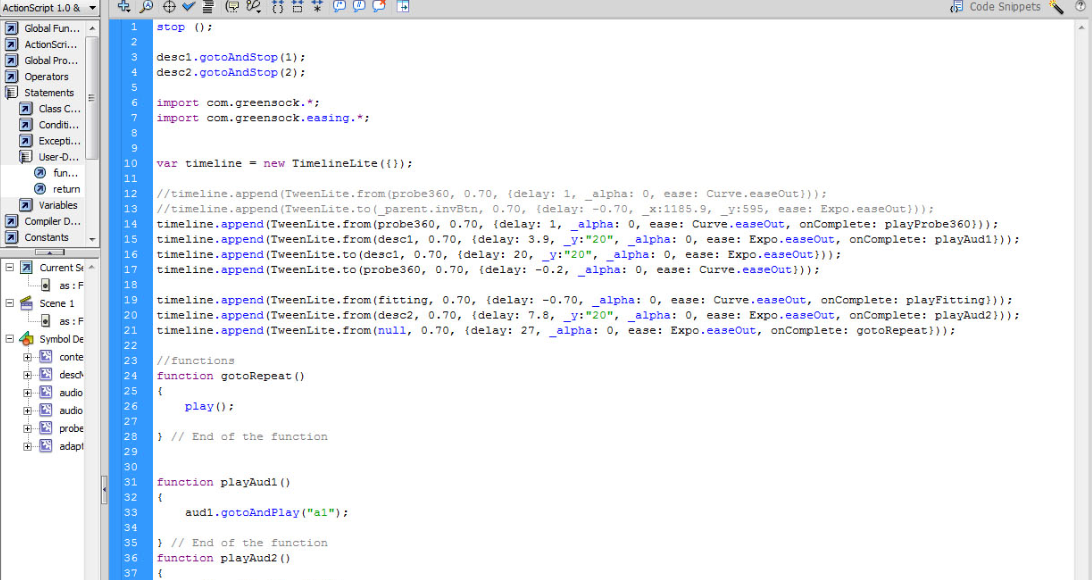

All rendered image sequences of the medical devices AquaFlux and Epsilon have to be exported from 3ds Max into Adobe Flash CS6, where interactivity is added to the project by creating interactive buttons.

Figure. 14. Adding ActionScript into a scene in Flash CS6


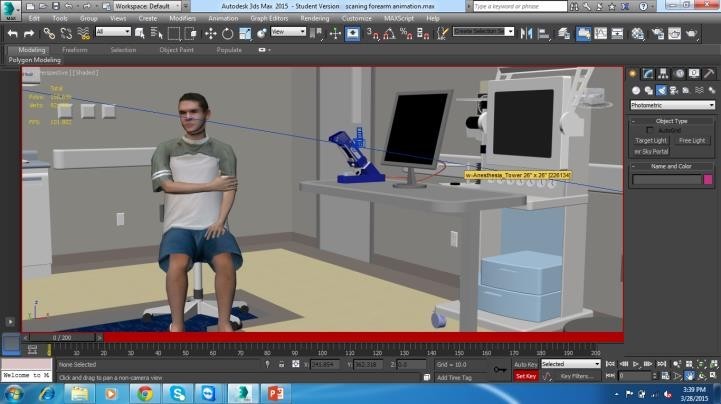

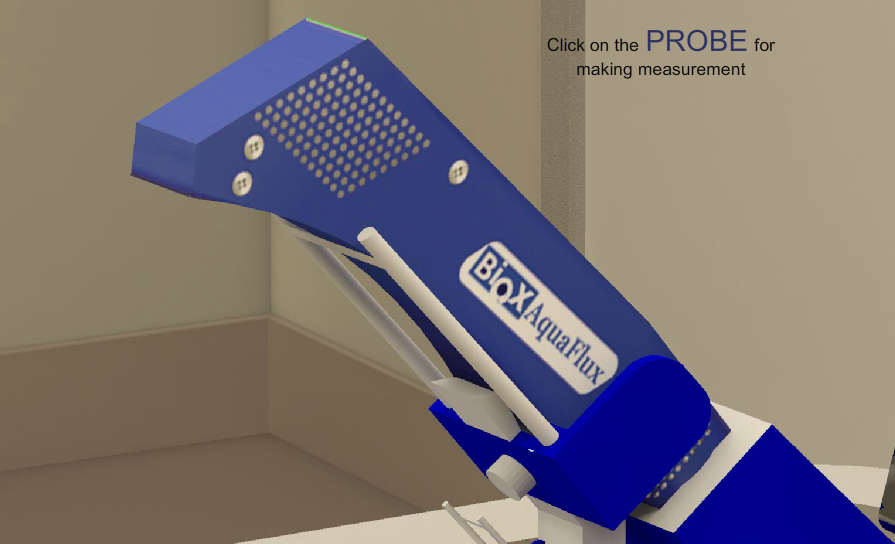

The AquaFlux VUM is a completely interactive website that demonstrates all functions and features of the device, together with a sample skin measurement conducted on a human. Users can click on the device’s probe to send it towards a human forearm to perform a sample skin measurement. Each step of the VUM is accompanied by pre-recorded audio instructions that help to explain the operation of this medical device.

Figure. 15. Syncing a voice clip with the text frame in Epsilon scene


Figure. 16. ActionScript code for linking the button to play, replay and stop the audio clip


The above steps (Figures 14, 15 and 16) can be repeated for all 3D scenes and objects that require some form of connectivity and interactivity with the user in a VR atmosphere, using buttons in Flash CS6.
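The ActionScript shown in Figure 16 is not reproduced in the text, so the following Python sketch only models the behaviour that the play, replay and stop buttons are described as having. The class `AudioClipController` and its methods are hypothetical names, and the frame-based clock is an assumption made for illustration; it is not the authors' code.

```python
class AudioClipController:
    """Tracks the playback state of one narration clip (illustrative model only)."""

    def __init__(self, length_frames: int) -> None:
        self.length_frames = length_frames  # clip length, measured in timeline frames
        self.position = 0                   # current playback position in frames
        self.playing = False

    def play(self) -> None:
        """Start or resume playback from the current position."""
        self.playing = True

    def stop(self) -> None:
        """Stop playback and rewind to the start of the clip."""
        self.playing = False
        self.position = 0

    def replay(self) -> None:
        """Restart playback from the beginning."""
        self.position = 0
        self.playing = True

    def tick(self) -> None:
        """Advance one frame; playback halts automatically at the end of the clip."""
        if not self.playing:
            return
        self.position += 1
        if self.position >= self.length_frames:
            self.position = self.length_frames
            self.playing = False
```

Each button in a scene would call one of `play`, `replay` or `stop`, while `tick` stands in for the timeline advancing by one frame.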

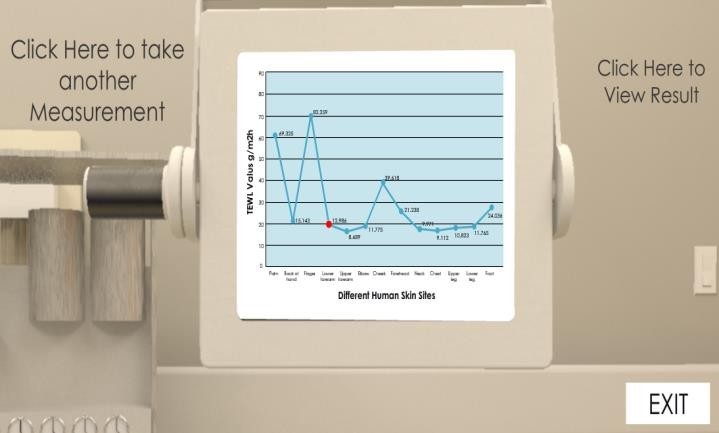

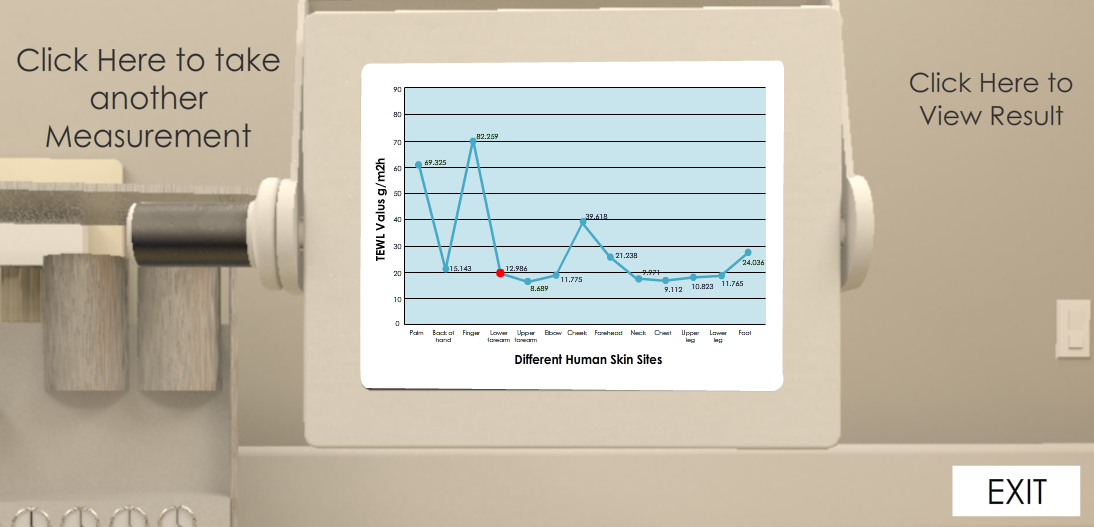

Figure. 17. The result of the process of skin measuring will be shown on a screen

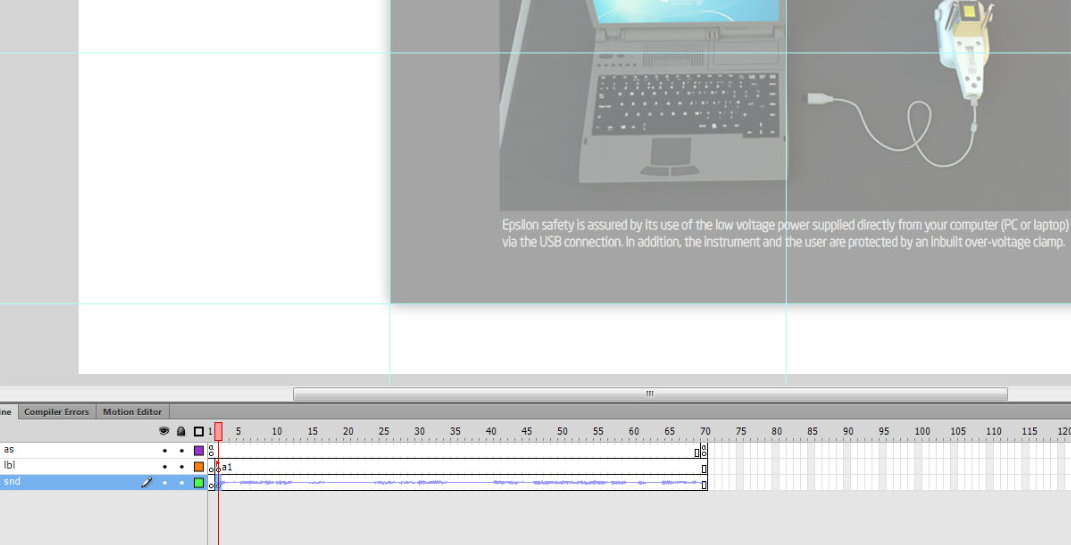

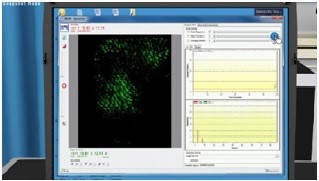

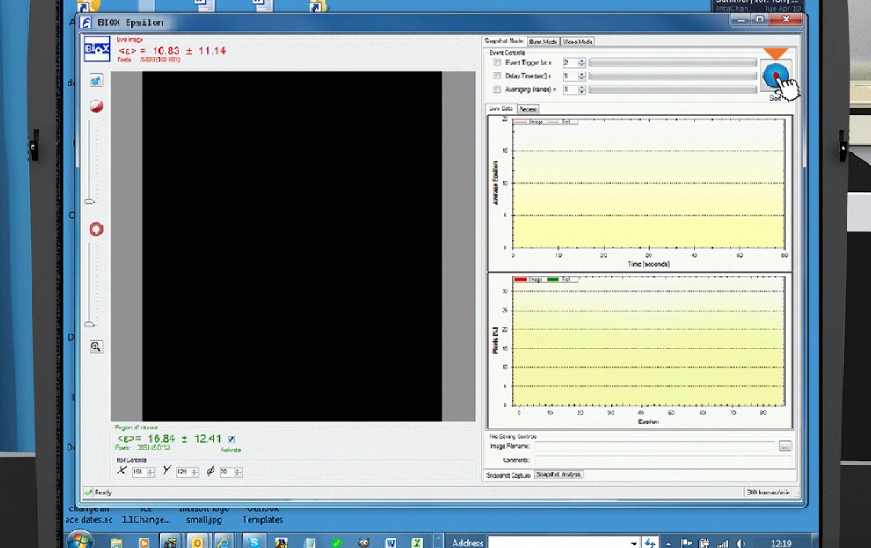

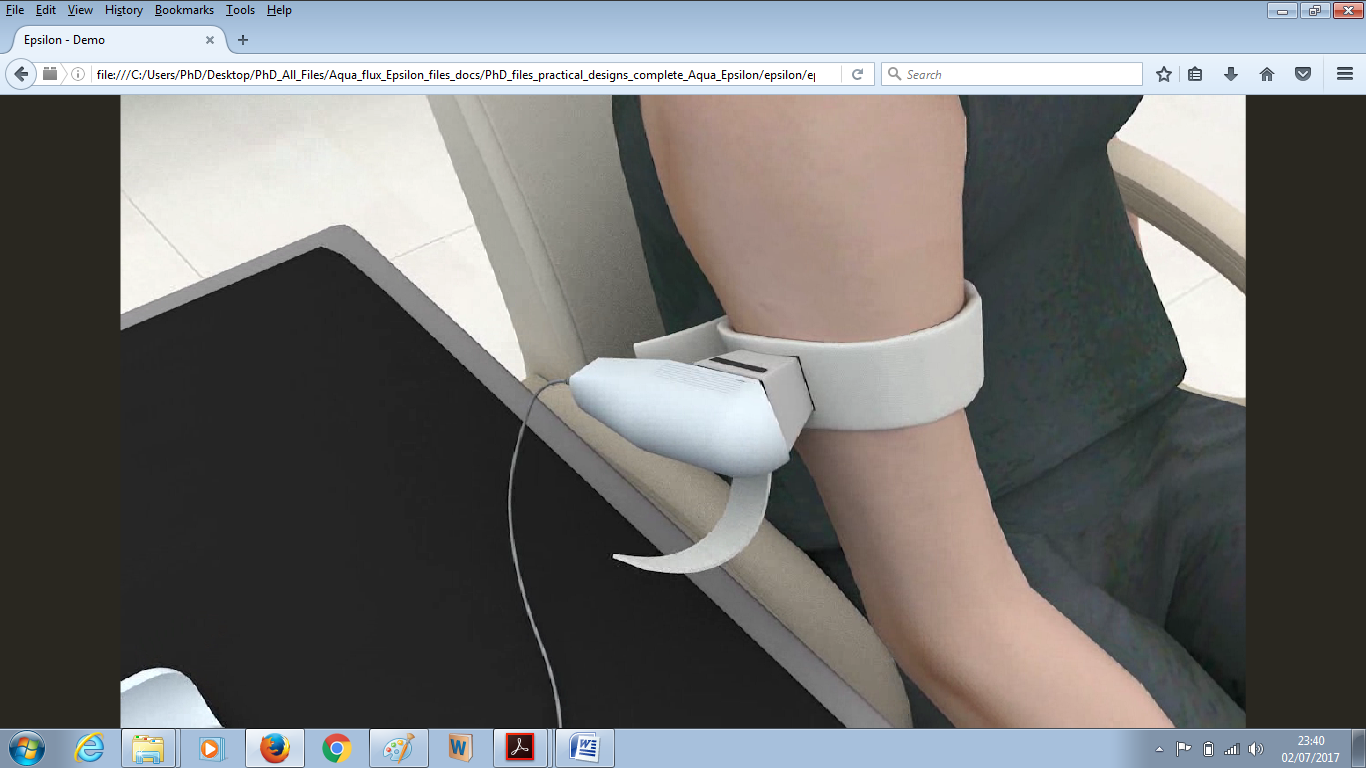

Epsilon VUM follows a similar approach to conducting a human skin measurement procedure for medical purposes. Figure 18 shows the Epsilon instrument in action.

Figure. 18. Skin measurement process taking place on a patient using Epsilon


Once the measurement has been carried out, the scene’s camera moves towards the laptop’s screen to display the scan results, as shown in Figure 19.

Figure. 19. The result of the Epsilon scanning process is displayed after the blue button (top right corner) is clicked.

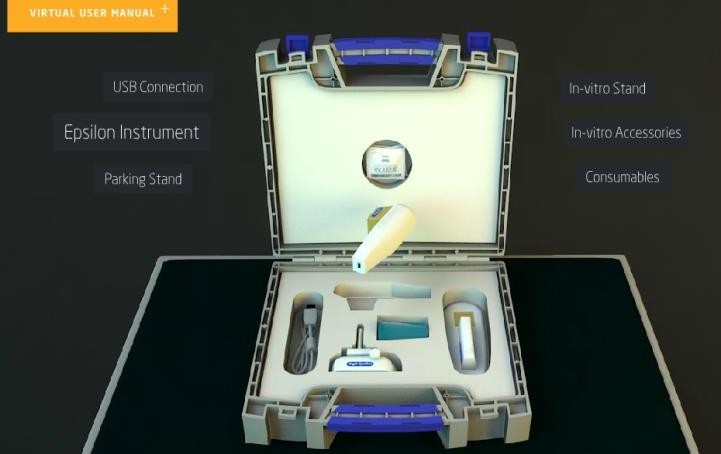

Figure. 20. Epsilon protective case and all its components are shown in a 3-dimensional interactive atmosphere


In the Epsilon VUM, users can perform human skin scanning in three modes: snapshot, burst and video. All scan results (images and videos) are stored in a folder on the laptop’s hard disk. The scan buttons and tabs are all interactive.

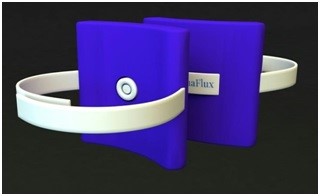

4. Designing and introducing new accessories, hardware and holders for AquaFlux and Epsilon

During the final stages of this project, a new design plan evolved that will contribute positively to the entire skin measurement process and add to the practicality of the two devices. The idea was to develop and build a novel part (a holder) for both tools. It makes the entire process more effective and efficient by allowing the simultaneous measurement of several patients. The newly designed holder for Epsilon was carefully considered and built specifically to suit the Epsilon structure and the patient’s comfort. The material applied to the new holder was a cloth-like material, to emulate the real-world object.

Figure. 21. Epsilon newly designed holder strap in action


For AquaFlux, creating the new holder was rather delicate because of the shape of the probe. After a thorough analysis of the structure of the device, AquaFlux’s new accessory was completed. The new holder takes into account both the patient’s comfort and the client’s ease of use. The AquaFlux probe is made of metal and is somewhat heavier than Epsilon. It is therefore recommended that the black frame around the border of the holder be manufactured from magnetic material, giving AquaFlux further support, firm placement and accurate readings.

Figure. 22. AquaFlux newly designed holder strap with magnet head


In conclusion, the novel ideas of designing and building new hardware for these medical tools have optimised the functionality of both devices. Furthermore, futuristic concepts and designs have evolved to serve a larger number of people concurrently. The innovative design of AquaFlux allows it to rotate in all directions, giving it extra flexibility and ease of use for all parties involved in the process, as shown in Figure 23:

Figure. 23. Rotatable AquaFlux for further flexible positions


The AquaFlux arm-band is an entirely new idea; it is extremely lightweight and allows further ease of use. It is padded and soft, so it can be used almost anywhere on the human body. It has Bluetooth capability to connect wirelessly to a PC or laptop, sparing users the trouble of cabling, which can be distracting.

Figure. 24. AquaFlux wireless stripe, newly designed hardware


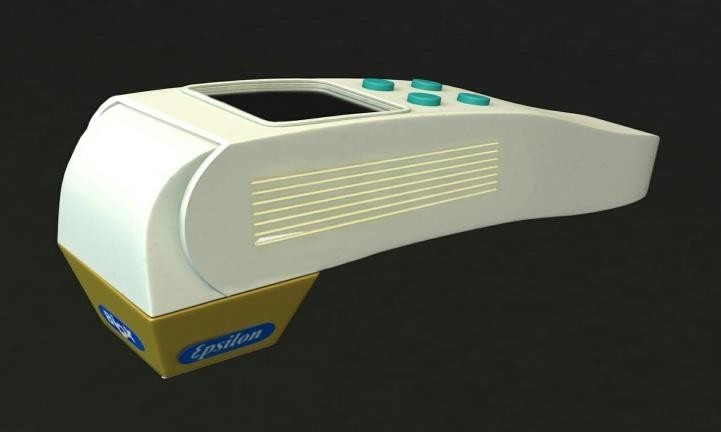

Figure. 25. Epsilon turning head, on probe monitor and operational buttons


Epsilon’s turning head helps to carry out skin measurements in vertical and horizontal positions. A new screen has been added to the original probe for accessibility, simplicity and comfort. Additionally, functional buttons have been added to the top surface of the handle to offer on-probe actions, e.g. on/off, scan and reset. The Epsilon device can thus be fully portable.

5. Virtual Reality creation

Virtual Reality is a second world (a simulated environment) accessed by various users. It resembles our world in most of its characteristics, depending on what the virtual world is required to present. Users in virtual worlds can be represented by avatars or can use walkthrough scenes to conduct a variety of activities.

There are currently two methods to create a VR environment:

- Using a 360-degree video: this method is easier than the second one, as real-life video footage can be recorded and used to build the content. A further tool, a Head Mounted Display (HMD) unit, is needed at a later stage to view the content. With the HMD unit, users can experience a computer-simulated reality that imitates physical presence in the actual world.

- Using 3D animation: in this method, all selected objects are created and developed in a 3D modelling tool, e.g. 3ds Max, Blender or Maya. It is more expensive, as it involves more tools and software in the construction process and requires the expertise to develop, create, animate, render and subsequently publish the product online. VR can also be created with game engine software such as Unity; this approach is mainly used for creating and developing games.

Of the two methods described above, the second clearly requires a great deal of effort and experience. All aspects of it need to be designed and planned carefully and professionally. No real images or footage are available to stand in for physical objects in the virtual world; everything has to be created, built and designed from scratch. It is nevertheless the method that has been adopted in this work.

Figure. 26. AquaFlux interactive user manual website contains three links


6. Results

6.1. AquaFlux Interactive User Manual

The AquaFlux VUM online system is a completely interactive website that displays a sample skin measurement conducted on a human and illustrates all functions and features of the medical tool. Figures 26 to 33 present a few of the steps involved in using the VUM.

Clicking on the button labelled AquaFlux in Action presents a sample skin measurement conducted on a client at a clinic.

Figure. 27. AquaFlux in its base, waiting for a user to click the link above it


Figure. 28. AquaFlux in action, moving to a patient’s forearm for a measurement


After the link in Figure 28 is clicked, the AquaFlux probe moves towards the patient’s forearm to conduct a sample skin measurement by placing its capped head on the patient’s skin.

Figure. 29. AquaFlux monitor shows measurement results and other user’s options


Once the view results link (top right corner) is clicked, an accurate reading of the forearm is shown on the screen. A user, trainee or client can also take another measurement (top left) or return to the main page via the exit button (bottom right).

Figure. 30. The 2nd option link to view the VUM


From the main page, clicking the second option takes the user to the AquaFlux VUM page. Here, users can click on the VUM link (top right) to view the AquaFlux set-up process, calibration, caps, AC adaptors, connectors, probe parking, holding the probe and software familiarisation. The page also shows how a user can fit and slide the probe into the parking base, and explains the AquaFlux rear panel unit.

Figure. 31. AquaFlux 3D components illustrated in the protective case


As shown in Figure 31, when the mouse is placed over one of the other six links, the corresponding part pops up and rotates in 3D; this gives the user a chance to learn the correct names of all the AquaFlux device components.

Figure. 32. Clicking on the VUM menu, the background becomes blurry

In Figure 32, users can click the VUM options menu to list all AquaFlux components. The menu illustrates every part of the medical device and demonstrates the role of each part in the measuring process within a 3D environment.

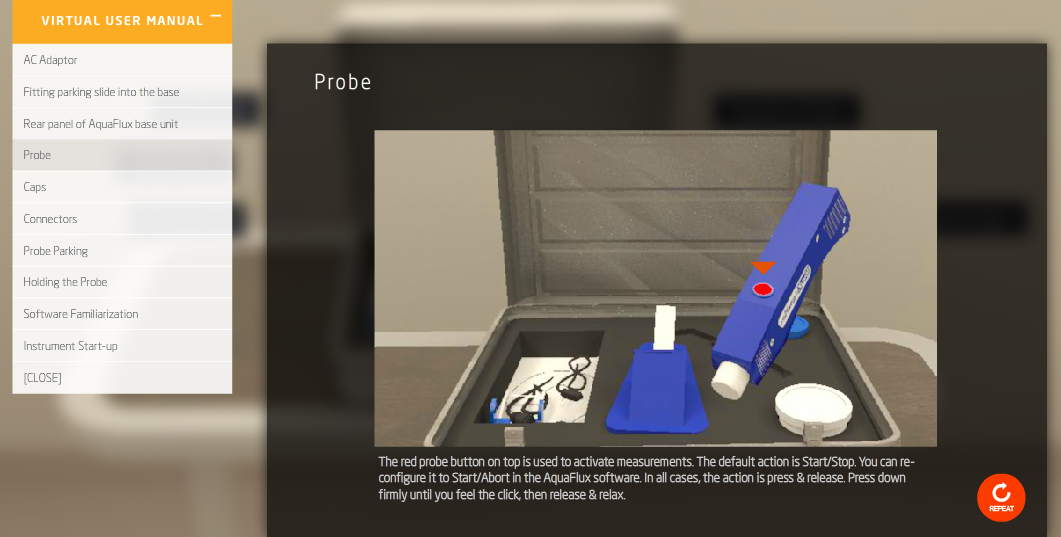

Figure. 33. Clicking on one of the options in the VUM menu (probe).

In Figure 33, the user has clicked the probe item in the VUM drop-down options box. A window appears on the right of the screen containing instructions and information about the AquaFlux probe, with brief descriptions at the bottom of the page. Users can replay the step by clicking the repeat button (bottom right corner). To go back, users first click the [CLOSE] link and then the link back to the home page.

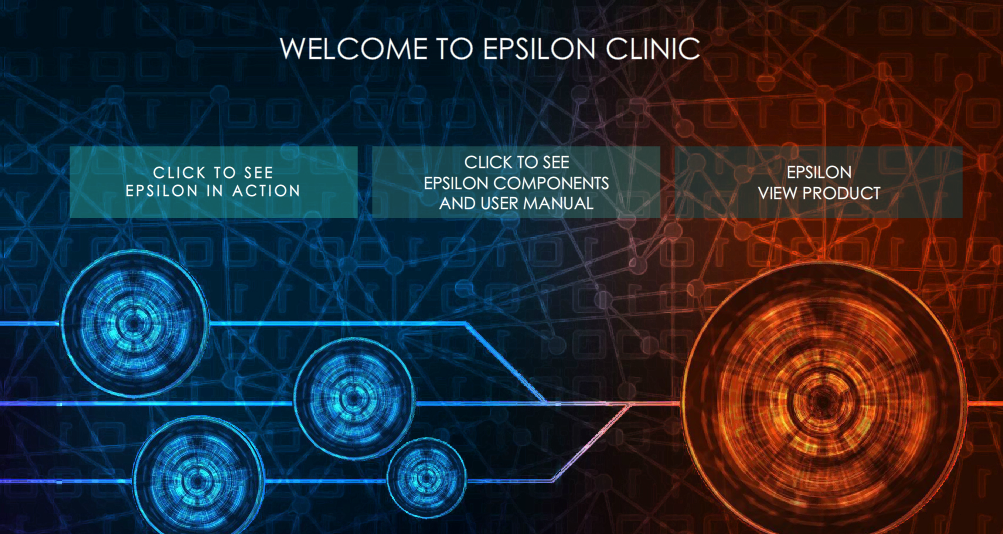

Figure. 34. The main page of Epsilon VUM contains three main links

Figure. 35. Clicking on the 1st link to see Epsilon in action

6.2. Epsilon Interactive User Manual

The Epsilon VUM online system is a fully interactive website that displays a sample skin measurement conducted on a person and shows all functions and features of the device.

After the user clicks the Epsilon probe (see Figure 35), the probe moves towards the patient’s hand and is placed on it. The scene then switches to the laptop, and the camera moves towards the screen to show the result of the skin measurement. All of these steps are accompanied by audio guidance.

Figure. 36. Epsilon probe moving towards the patient’s hand for a measurement

Figure. 37. Clicking on the button (top right) to start scanning human skin

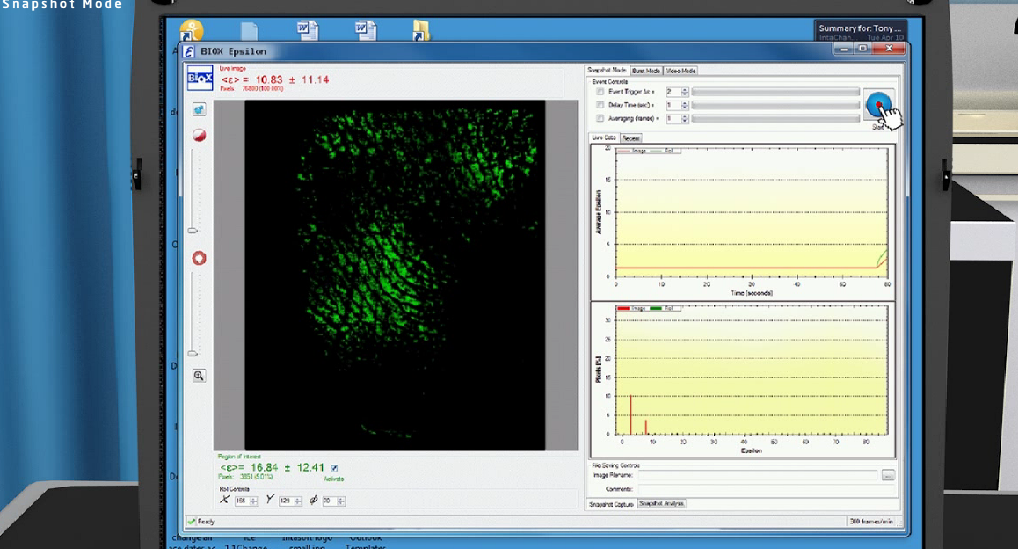

Figure. 38. Scanning in a snapshot mode

After the user clicks the scan button, scanning begins in snapshot mode. Epsilon offers three scanning modes: snapshot, burst and video.

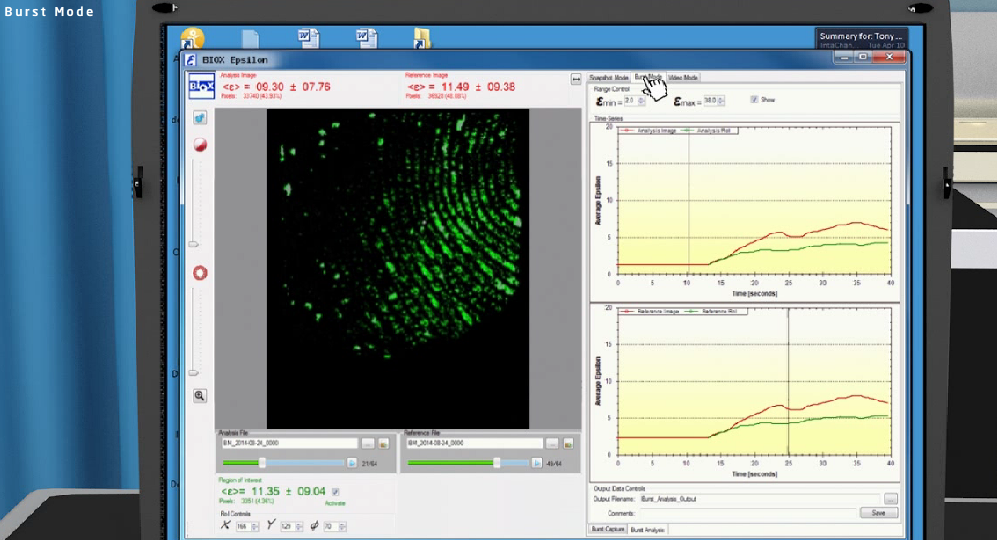

Figure. 39. Scanning in a burst mode (clicking the tab at the top to switch mode)

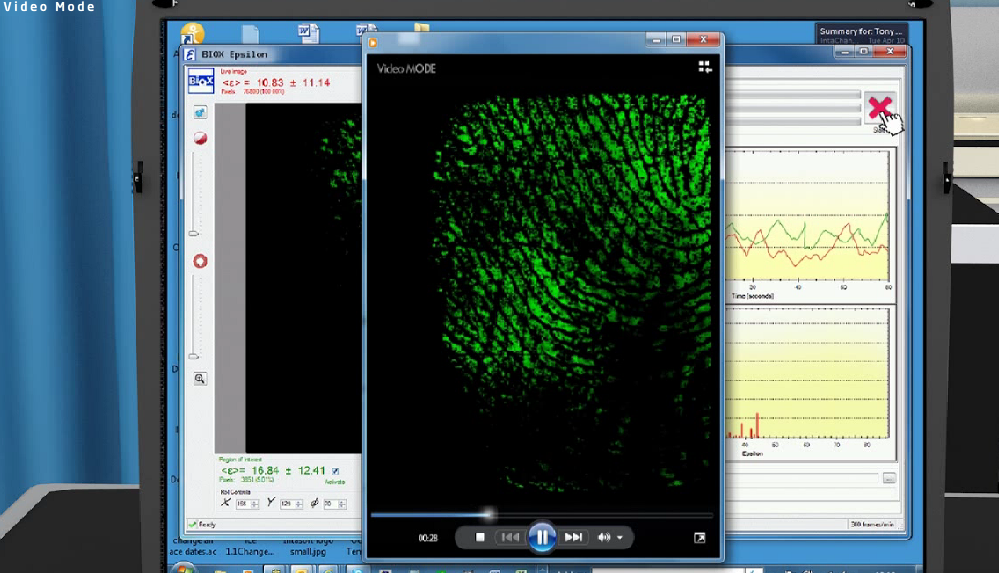

Figure. 40. Scanning in a video mode

All files, images and videos produced by the three scanning modes are saved in their respective folders (snapshot, burst and video). Users can open these folders to view, replay or send the captured files. The VUM is supported by an audio feature that clarifies any unclear steps throughout the demonstration.
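The per-mode folder layout described above can be sketched as follows. This is a minimal illustration, not the Epsilon software's actual storage code: the `ScanMode` enum, the `capture_path` helper, the `captures` root directory and the file name are all hypothetical names introduced here.

```python
from enum import Enum
from pathlib import Path


class ScanMode(Enum):
    """The three scanning modes named in the text."""
    SNAPSHOT = "snapshot"
    BURST = "burst"
    VIDEO = "video"


def capture_folder(root: Path, mode: ScanMode) -> Path:
    """Return the folder for one mode (assumed layout: one subfolder per mode)."""
    return root / mode.value


def capture_path(root: Path, mode: ScanMode, filename: str) -> Path:
    """Return the full path at which a capture for the given mode would be stored."""
    return capture_folder(root, mode) / filename


if __name__ == "__main__":
    root = Path("captures")  # hypothetical root directory
    for mode in ScanMode:
        print(mode.name, "->", capture_folder(root, mode))
```

Keeping the mode-to-folder mapping in one place means the viewing, replaying and sending features only need to know the mode, not the directory structure.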

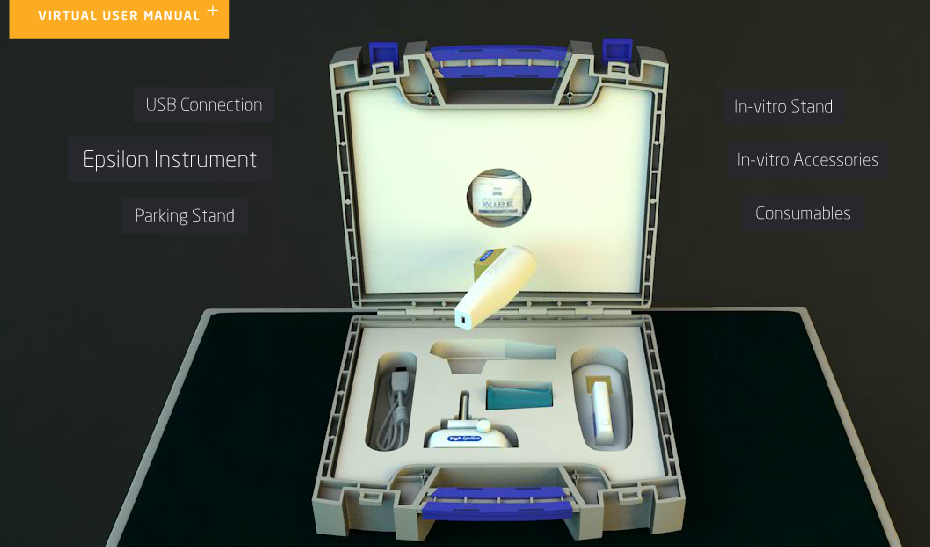

Figure. 41. Epsilon 3D components in a sturdy case

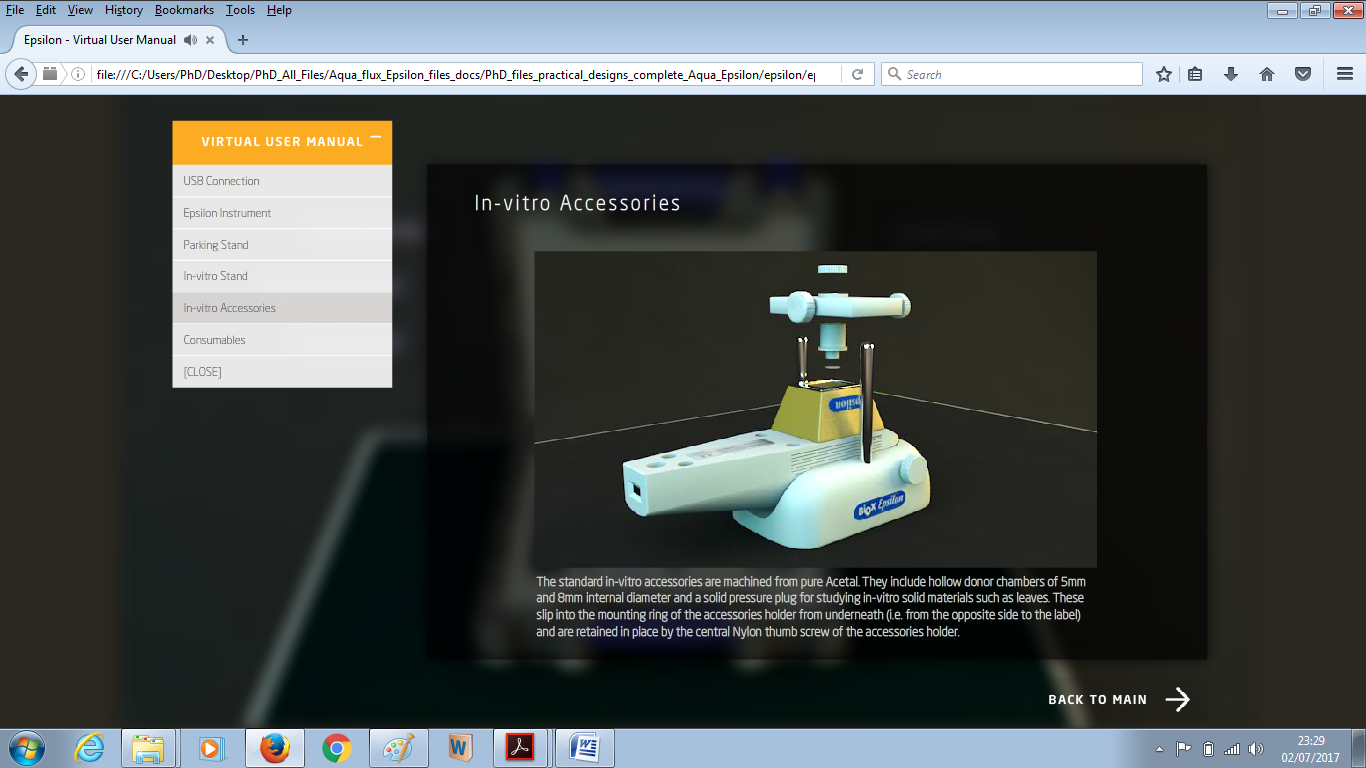

As previously explained for the AquaFlux VUM (Section 6.1), the second link on the Epsilon main page, shown in Figure 34, takes the user to the Epsilon VUM menu. Each component in the protective case pops up when the user places the mouse over it and rotates through 360 degrees in 3D, accompanied by a brief thumbnail describing the part. Combining 3D design with VR creates a strong sense of immersion, as if the user were at the real location of the demonstration [13].
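The hover behaviour described above (a part pops up and keeps rotating while the mouse is over it) can be sketched as a small piece of state updated once per frame. This is an illustrative assumption, not the authors' implementation: the `CasePart` class, its method names and the rotation speed of 90 degrees per second are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CasePart:
    """One component in the protective case (hypothetical model)."""
    name: str
    description: str          # text for the brief thumbnail
    hovered: bool = False
    angle_deg: float = 0.0    # current rotation about the vertical axis

    def on_mouse_enter(self) -> None:
        """Mouse moved over the part: pop it up and start rotating."""
        self.hovered = True

    def on_mouse_leave(self) -> None:
        """Mouse left the part: stop rotating and reset the pose."""
        self.hovered = False
        self.angle_deg = 0.0

    def update(self, dt: float, speed_deg_per_s: float = 90.0) -> None:
        """Advance the rotation by one frame of dt seconds while hovered."""
        if self.hovered:
            self.angle_deg = (self.angle_deg + speed_deg_per_s * dt) % 360.0


if __name__ == "__main__":
    part = CasePart("probe", "placeholder description")
    part.on_mouse_enter()
    part.update(0.5)
    print(part.name, part.angle_deg)
```

Wrapping the angle with a modulo keeps the value within one full turn, so the part can rotate indefinitely without the angle growing unbounded.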

Figure. 42. Epsilon VUM drop down menu

In a similar manner to the AquaFlux VUM described previously, the Epsilon VUM contains interactive links that demonstrate the USB connection, the Epsilon instrument, the parking stand, the in-vitro stand, the in-vitro accessories and the consumables.

Figure. 43. Epsilon probe sliding into the stand in the VUM menu options

Figure. 44. Epsilon in-vitro stand and accessories demonstrated with the aid of audio feature and 3D ambience in the VUM menu options

Figure 44 shows the Epsilon VUM in operation on the World Wide Web. With the help of audio, illustrative text displayed in a virtual environment and user interaction, the demonstration is intended to be smooth, efficient and comprehensive for all users.

6.3. Epsilon and AquaFlux New Holders

The third and final button on the AquaFlux and Epsilon main pages takes the user to an addition that emerged while working on this project: a new set of holders for each instrument (AquaFlux and Epsilon), intended to improve the measurement process and provide more efficient and accurate readings.

Figure. 45. Epsilon’s holder in operation

Figure. 46. AquaFlux’s holder in operation

7. Evaluating the AquaFlux and Epsilon VUM

The AquaFlux and Epsilon VUM is intended to serve clients, users and trainees who are interested in purchasing the AquaFlux and Epsilon instruments and want a clear, detailed illustration of how the two devices function and what their features are. From a methodological and marketing point of view, it was vital to conduct a usability study to show the product’s advantages and disadvantages and to identify areas of excellence as well as parts that require further improvement.

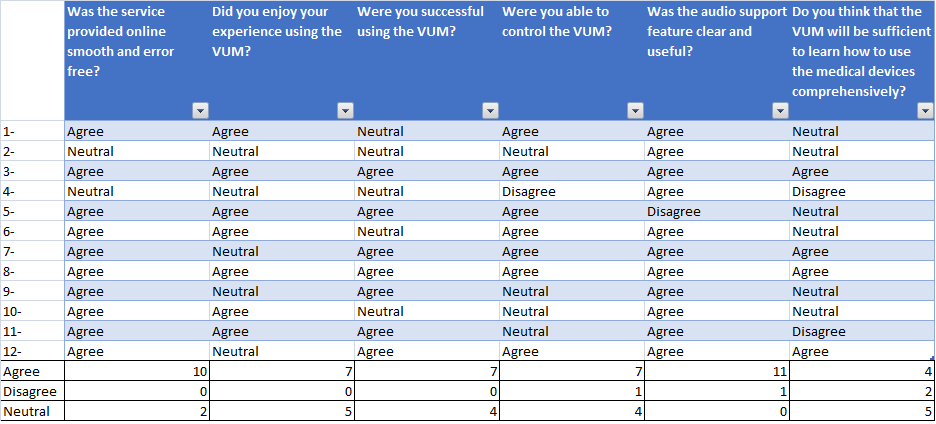

The usability study was conducted with a variety of users, mainly from non-IT backgrounds. The questionnaire began with the most obvious and fundamental questions that could arise while using such a system and then moved on to more technical ones. Figure 47 shows the feedback of the 12 independent participants on the survey questions.

Figure. 47. Survey’s questions with user’s feedback. 12 users participated

The following illustrative charts show the results of the individual survey questions.

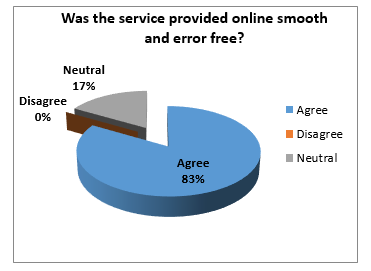

Figure. 48. Shows the success rate of the VUM system being smooth and error-free

The above chart shows that 83% of the participants found that the service provided online was smooth and error-free, whereas 17% were neutral. The result indicates the strength of the virtual system.
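As a worked example of how such percentages follow from a small sample, the sketch below converts response counts into rounded percentages. The paper reports only percentages, so the counts used here (10 agreeing and 2 neutral out of 12) are an assumption chosen to be consistent with the reported 83% and 17%; the function name `percentage_breakdown` is hypothetical.

```python
def percentage_breakdown(counts: dict[str, int]) -> dict[str, int]:
    """Convert response counts into whole-number percentages of the total."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to summarise")
    return {label: round(100 * n / total) for label, n in counts.items()}


if __name__ == "__main__":
    # Assumed counts for the "smooth and error-free" question (12 participants).
    smooth = {"agree": 10, "neutral": 2, "disagree": 0}
    print(percentage_breakdown(smooth))
```

With only 12 participants, each response shifts a result by roughly 8 percentage points, which is worth bearing in mind when comparing the charts. Rounded percentages also need not sum to exactly 100 in general.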

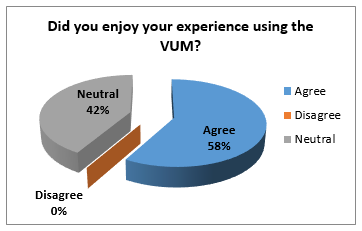

Figure. 49. Shows that the user experience of using VUM was enjoyable

Figure 49 shows that 58% of the participants enjoyed their VUM experience, 42% were neutral and none disagreed. The result suggests that the system provides an enjoyable experience.

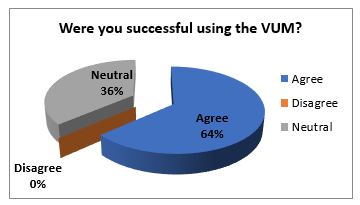

Figure. 50. Shows the success rate of using the VUM

The above chart shows that 64% of the participants were successful in using the VUM, while 36% were neutral and none disagreed. The chart suggests that the VUM system is a success.

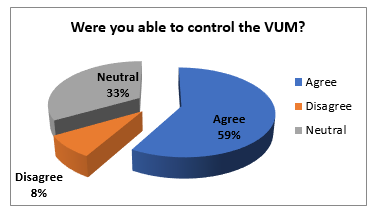

Figure. 51. Shows a high rate of users controlling the VUM

The chart demonstrates that 59% of the participants were able to control the VUM system, whereas 33% were neutral and 8% disagreed. This shows that although a high percentage was able to control it, a minority found it difficult. The result suggests more improvement is required to achieve a higher control success rate.

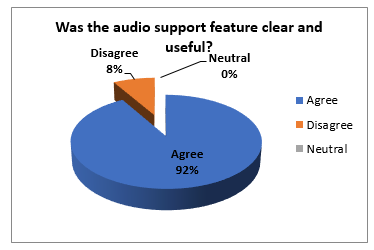

Figure. 52. Shows the success rate of applying the audio feature into VUM

Figure 52 shows that 92% of the participants found the audio feature clear and useful, whereas only 8% disagreed. This is strong evidence of the quality of the audio feature.

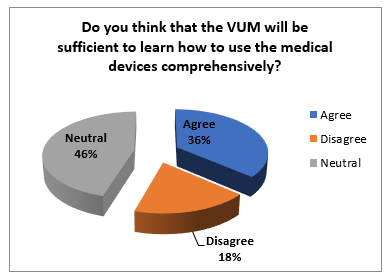

Figure. 53. Shows some users agreed that VUM system is comprehensive

Figure 53 shows that 36% of the participants thought the VUM system was sufficient and comprehensive, whereas 46% were neutral and 18% disagreed. Although the proportion of neutral responses was high, more participants agreed than disagreed. Nevertheless, this indicates room for improvement, perhaps by introducing further features at a later stage.

From the charts and results for all survey questions, there are two main points to discuss:

- Aspects of excellence:

  - The service is smooth and free of errors.

  - Users successfully used the VUM system.

  - The audio feature provided was clear and useful.

- Aspects for improvement:

  - The sufficiency of the VUM as a tool for teaching users how to use the medical devices comprehensively.
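The grouping above can be checked against the reported figures with a short script. The percentages are taken from the text accompanying Figures 48 to 53; the question labels are paraphrases, and the 50% cut-off used to flag a question for improvement is a hypothetical threshold chosen for illustration, not one stated by the authors.

```python
# (agree %, neutral %, disagree %) as reported for Figures 48 to 53.
REPORTED = {
    "Service is smooth and error-free": (83, 17, 0),
    "Experience was enjoyable": (58, 42, 0),
    "Used the VUM successfully": (64, 36, 0),
    "Able to control the VUM": (59, 33, 8),
    "Audio feature is clear and useful": (92, 0, 8),
    "VUM is sufficient and comprehensive": (36, 46, 18),
}


def rank_by_agreement(results: dict[str, tuple[int, int, int]]) -> list[str]:
    """Return the questions ordered from highest to lowest agreement."""
    return sorted(results, key=lambda q: results[q][0], reverse=True)


def needs_improvement(results: dict[str, tuple[int, int, int]],
                      threshold: int = 50) -> list[str]:
    """Return questions whose agreement falls below the assumed threshold."""
    return [q for q, (agree, _, _) in results.items() if agree < threshold]


if __name__ == "__main__":
    for question in rank_by_agreement(REPORTED):
        print(f"{REPORTED[question][0]:3d}%  {question}")
    print("Flagged:", needs_improvement(REPORTED))
```

Under this assumed threshold only the comprehensiveness question is flagged, which matches the single aspect for improvement listed above.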

The VUM, the interactive virtual environment for both AquaFlux and Epsilon, showed great ease of use, efficiency and practicality. With this virtual demonstration available online, clients, trainees and users who are interested in purchasing one of these skin measurement instruments can see how they operate and how accurate results are obtained. In addition, the VUM demonstration helps to convey an accurate impression of the two devices, how they operate and what their main functions are, while offering an enjoyable experience. Future ideas, improvements and upgrades are on the horizon. Augmented Reality (AR), for example, is a fascinating notion that can support and further enhance this research. Virtual Reality and AR are opening new ways of interactivity between users and constructed environments [16]. Moreover, hologram technology (3D holograms) provides a remarkable interactive surface for teamwork settings [17].

The results of the survey strongly suggest that the system is operating effectively although there is room for slight improvements.

8. Conclusions

The objective of this paper was to present a thorough understanding of online 3D content and to use it effectively, beyond simply displaying it on a webpage. The main contribution to the field is the use of an interactive virtual environment, supported by audio, to demonstrate how a medical device is used to take a human skin measurement. The new web-based 3D interactive VUM for AquaFlux and Epsilon will replace the old method of illustrating how these medical devices work and what their features and functionalities are, by providing each client with an accessible URL after purchasing the instruments. The web-based virtual system is self-explanatory and easy to use, and its audio support guides users step by step through the process. Novel holders and futuristic concepts have also been introduced to display the capabilities of the new addition and to support marketing, using 3D content and the interactive virtual world to deliver novel ideas globally.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgement

This research work is part of a postgraduate degree. The authors would like to thank London South Bank University for its continuous support and for providing the access needed to use, test and experiment with these two medical instruments. The authors would also like to thank the reviewers for their invaluable comments and recommendations.

- Al Hashimi, O. and Xiao, P. (2018). Developing a web-based interactive 3D virtual environment for novel skin measurement instruments. 2018 Advances in Science and Engineering Technology International Conferences (ASET), Abu Dhabi, 2018, pp. 1-8. doi: 10.1109/ICASET.2018.8376823

- Cellary, W. and Walczak, K. (2012). Interactive 3D Multimedia Content. London: Springer London

- VMware Inc. (2006). Virtualization Overview White Paper. Palo Alto, CA: VMware.

- Anon, Instrument Development and Digital Signal Processing in Skin Measurements Zhang Xu School of Engineering London South Bank University Supervisors, Oct

- Xiao, P. (2014). Biox Epsilon Model E100: Contact Imaging System. Available at: biox.biz/home/brochures.php

- Biox (2013). Epsilon E100 Manual. London: Biox.

- Ghanbarzadeh, R., Ghapanchi, A.H. & Blumenstein, M., 2014. Application areas of multi-user virtual environments in the healthcare context. Studies in Health Technology and Informatics, 204, 38–46.

- Shen, W. & Zeng, W., 2011. Research of VR Modeling Technology Based on VRML and 3DSMAX, pp. 487–490.

- Neto, C., 2009. Developing Interaction 3D Models for E-Learning Applications.

- Kotsilieris, T. & Dimopoulou, N., 2013. The Evolution of e-Learning in the Context of 3D Virtual Worlds, 11(2), pp. 147–167.

- Potkonjak, V., Gardner, M., Callaghan, V., Mattila, P., Guetl, C., Petrović, V. and Jovanović, K. (2016). Virtual laboratories for education in science, technology, and engineering: A Computers & Education, 95, pp.309-327.

- Violante, M.G. (2015). Politecnico di Torino Porto Institutional Repository Design and implementation of 3D Web-based interactive.

- Naber, J., Krupitzer, C. and Becker, C. (2017). Transferring an Interactive Display Service to the Virtual Reality. Mannheim: IEEE, pp.1-4.

- Walczak, K., White, M. & Cellary, W., 2004. Building Virtual and Augmented Reality Museum Exhibitions, 1(212), pp. 135–145.

- Yang, X. et al., 2008. Virtual Reality-Based Robotics Learning System, (September), pp. 859–864.

- Voinea, A., Moldoveanu, F. and Moldoveanu, A. (2017). 3D Model Generation of Human Musculoskeletal System Based on Image Processing. In: 21st International Conference on Control Systems and Computer Science. Bucharest: IEEE, 1-3.

- Wahab, N., Hasbullah, N., Ramli, S. and Zainuddin, N. (2016). Verification of A Battlefield Visualization Framework in Military Decision Making Using Holograms (3D) and Multi-Touch Technology. In: 2016 International Conference on Information and Communication Technology (ICICTM). Kuala Lumpur: IEEE, pp.1-2.
