Emergence of Fun Emotion in Computer Games -An experimental study on fun elements of Hanafuda-

Volume 3, Issue 6, Page No 314-318, 2018

Author’s Name: Yuki Takaoka1,a), Takashi Kawakami2, Ryosuke Ooe2


1Division of Engineering, Graduate School of Engineering, Hokkaido University of Science, 006-8585, Japan

2Department of Information and Computer Science, Faculty of Engineering, Hokkaido University of Science, 006-8585, Japan

a)Author to whom correspondence should be addressed. E-mail: 9172001@hus.ac.jp

Adv. Sci. Technol. Eng. Syst. J. 3(6), 314-318 (2018); DOI: 10.25046/aj030638

Keywords: Hanafuda, Definition of fun, Questionnaire


In recent years, research on game AI has expanded, and it has now become possible to construct AI even for complex games. Following this trend, we constructed an AI for Hanafuda, a game with a certain degree of complexity. By applying methods used for other games to this game, we were able to create a computer player of reasonable strength. However, some players do not find strong computer players fun. We therefore tried to build a computer player that players find enjoyable, but in previous experiments the player we constructed was not rated well. In this research, to improve the evaluation of computer players, we conducted a questionnaire survey of Hanafuda players. The results showed that players share some common elements of fun.

Received: 28 August 2018, Accepted: 29 October 2018, Published Online: 25 November 2018

1. Introduction

Research on game AI has been actively conducted. In recent years in particular, advances in computing have made it possible to build AI for games that had long been considered difficult. Reported examples include Mafia (also known as “Werewolf”) [1] and poker [2]. For Mafia, the use of LSTM (Long Short-Term Memory) networks to analyze games has been proposed. For poker, AI has advanced to the point of defeating professional Texas Hold’em players. Game AI can thus now be built even for highly difficult games, a clear sign of technological progress. Following this trend, we have studied Hanafuda, a Japanese card game. Our first step was to strengthen Hanafuda AI. Because Hanafuda is unique to Japan, research on it has not progressed much; as a result, the AI built into various Hanafuda games is of moderate ability and never strong. To improve its ability, we applied the UCT (UCB applied to Trees) algorithm to Hanafuda, which improved its playing strength [3].

However, a strong AI is not necessarily a fun AI. Players who battle against AI sometimes find it boring, and some players do not want to play against AI at all. We therefore aimed to build an AI that human players find enjoyable. Achieving this goal requires a definition of “fun”: without one, a computer player cannot produce entertainment. In our research so far, we have defined “fun” by the following two points.

- increase the variance of the points gained or lost

- bring the final score of a match close to ±0

We devised these definitions ourselves, and they are not rigorous; a more precise definition is needed to pursue this research. Therefore, in this research we aim to define the “fun” of Hanafuda accurately. Specifically, we conduct a questionnaire survey of people who play Hanafuda and analyze the results.

This paper is structured as follows. First, we describe Hanafuda, the game targeted in this study. Next, we describe the questionnaire survey conducted in this research and its results. Finally, we analyze the results and describe the direction of future research.

2. Hanafuda

Hanafuda is a traditional Japanese card game; the name also refers to the cards used in the game. The game uses 48 cards. Players try to acquire cards according to the rules, and the winner is decided by the cards each player has taken.

2.1. Game rules

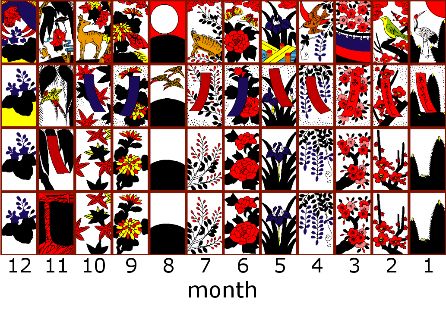

Hanafuda has many sets of rules, the most popular of which is “Koi-Koi”. Koi-Koi is a game in which players form combinations of specific cards. It is played by two players, who compete with each other for cards. A card can be taken by matching it with a card of the same suit. Figure 1 shows the Hanafuda cards and the month each card corresponds to; the cards in each row belong to the same suit. However, cards in the same suit can differ in value: each card belongs to one of “Hikari”, “Tane”, “Tan” and “Kasu”, and this classification matters when forming a winning hand. Figure 2 shows the relationship between cards and classification; all other cards belong to “Kasu”.

On their turn, a player selects a card from their hand. If the field contains a card of the same suit, the player acquires it together with the selected card. If the field contains two cards of that suit, the player chooses one of them to acquire; if it contains three, the player acquires all of them. If the field contains no card of the same suit, the player places the selected card on the field. After the selected card has been processed, the player turns over the top card of the deck and handles it in the same way. This completes the player’s turn. If a winning hand has been completed at this point, the player chooses whether to continue the game. If the player continues, they aim to complete a new winning hand; if not, they receive points from the opponent according to the winning hand. If no winning hand has been completed, the turn passes to the opponent. This is an outline of the Koi-Koi rules; the next section explains the flow of a Koi-Koi game based on it.
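The capture rule above can be sketched in Python as follows. This is a minimal illustration under assumptions that are not in the paper: cards are hypothetical `(month, name)` tuples in which the month identifies the suit, and the choice between two matching field cards is delegated to a caller-supplied `choose` function. The same function would be applied to the card turned over from the deck.

```python
from typing import Callable, List, Tuple

Card = Tuple[int, str]  # hypothetical representation: (month 1-12 = suit, card name)


def play_card(
    card: Card,
    field: List[Card],
    choose: Callable[[List[Card]], Card],
) -> Tuple[List[Card], List[Card]]:
    """Apply the Koi-Koi matching rule to one played card.

    Returns (captured cards, new field); the input field list is not modified.
    """
    matches = [c for c in field if c[0] == card[0]]
    if not matches:
        # No card of the same suit: the played card is placed on the field.
        return [], field + [card]
    if len(matches) == 2:
        # Two matches: the player picks one of them.
        taken = [choose(matches)]
    else:
        # One match is taken directly; three matches are all captured.
        taken = matches
    remaining = list(field)
    for c in taken:
        remaining.remove(c)
    return [card] + taken, remaining
```

For example, playing `(3, "hikari")` onto a field holding `(3, "kasu")` and `(3, "tan")` with `choose=lambda m: m[1]` captures the played card together with `(3, "tan")` and leaves `(3, "kasu")` on the field.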

Figure 2. A relationship between cards and classification


2.2. The flow of Koi-Koi

- Select the dealer

First, the dealer is selected by a random card draw: each player draws a card, and the player whose card is closer to January becomes the dealer.

- Deal the cards

The dealer deals the cards: eight cards to each player’s hand and eight cards to the field.

- Game start

Play begins with the dealer and continues until the players’ hands run out or until the turn player ends the game partway through. The turn player collects cards according to the rules described in Section 2.1. If both players run out of cards, the dealer receives 6 points and the next game begins.

- Game end

One game lasts from the deal until either player gains points. One match consists of twelve games, and the player with the highest score at the end of the match wins.
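The match-level bookkeeping can be sketched as below. Beyond what the text states, this assumes that every point a player receives is paid by the opponent (so the two totals always sum to zero), that the 6 points given to the dealer when both hands run out are paid the same way, and that a tie yields no winner. The `GameResult` record is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

GAMES_PER_MATCH = 12
EXHAUSTED_HANDS_POINTS = 6  # given to the dealer when both hands run out


@dataclass
class GameResult:
    """Outcome of one game (hypothetical record type)."""
    dealer: int            # 0 or 1
    winner: Optional[int]  # 0 or 1, or None if both players ran out of cards
    points: int = 0        # value of the winning hand; ignored when winner is None


def score_match(results: List[GameResult]) -> List[int]:
    """Totals for players 0 and 1 after one match, assuming the opponent pays."""
    if len(results) != GAMES_PER_MATCH:
        raise ValueError(f"a match consists of {GAMES_PER_MATCH} games")
    totals = [0, 0]
    for game in results:
        if game.winner is None:
            scorer, pts = game.dealer, EXHAUSTED_HANDS_POINTS
        else:
            scorer, pts = game.winner, game.points
        totals[scorer] += pts
        totals[1 - scorer] -= pts  # the opponent pays (zero-sum assumption)
    return totals


def match_winner(totals: List[int]) -> Optional[int]:
    """Player with the higher total, or None on a tie (tie handling is not specified)."""
    if totals[0] == totals[1]:
        return None
    return 0 if totals[0] > totals[1] else 1
```

Under the zero-sum assumption, a final total of 0 for both players is exactly the "±0" outcome that the fun definitions in Section 3.3 aim for.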

2.3. Winning Hands of Koi-Koi

The winning hands of Koi-Koi are shown in Table 1.

Table 1. Examples of winning hands of Koi-Koi

| Name | Combination | Points |
|---|---|---|
| Hikari | (an example) | 6~ |
| Inoshikachou | | 6 |
| Shichigosan | | 6 |
| Omotesugawara | | 6 |
| Akatan | | 6 |
| Aotan | | 6 |
| Tsukimi-Zake | | 5 |
| Hanami-Zake | | 5 |
| Tane | (omitted) | 1~ |
| Tan | (omitted) | 1~ |
| Kasu | (omitted) | 1~ |

Hikari is scored according to the combination of “Hikari” cards acquired. Tane is completed by any five “Tane” cards, Tan by any five “Tan” cards, and Kasu by any four “Kasu” cards. In each of these winning hands, one additional point is awarded for every card beyond the required number.
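The per-card bonus for the count-based hands can be written as a small function. The minimum card counts below are taken from the text above, the base value of 1 point is read from the "1~" entries in Table 1, and the function name is hypothetical.

```python
# Minimum card counts for the count-based hands, as stated in the text above.
MIN_CARDS = {"Tane": 5, "Tan": 5, "Kasu": 4}
BASE_POINTS = 1  # the "1~" entries in Table 1


def count_hand_points(hand: str, n_cards: int) -> int:
    """Points for Tane, Tan or Kasu: 0 if incomplete, else 1 plus 1 per extra card."""
    minimum = MIN_CARDS[hand]
    if n_cards < minimum:
        return 0
    return BASE_POINTS + (n_cards - minimum)
```

For instance, seven "Tan" cards give 1 point for the five required cards plus 2 bonus points, for 3 points in total.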

3. Previous study

In previous work, we studied “fun”: the computer player tried to entertain human players by playing according to the definitions of fun we had set. That work used UCT, a method widely used for game AI. This chapter describes UCT and the experiments we conducted.

3.1. Monte Carlo method and Monte Carlo Tree Search

In general, the Monte Carlo method obtains results by repeating a simulation many times. When applied to a game, it plays the game out at random and judges whether an action is good or bad based on whether the game is won or lost. Specifically, after the action under evaluation is taken, the game is played randomly according to the rules until a win or loss is reached, and the expected value of the action is calculated from these outcomes. This is done for every action available at that point in the game, and the action with the highest expected value is selected.
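The selection procedure described above (often called flat Monte Carlo) can be sketched as follows. The game interface (`legal_actions`, `step`, `is_terminal`, `result`) is hypothetical and supplied by the caller; `result` is assumed to return 1 for a win and 0 for a loss from the deciding player’s point of view.

```python
import random
from typing import Any, Callable, List, Optional


def monte_carlo_select(
    state: Any,
    legal_actions: Callable[[Any], List[Any]],
    step: Callable[[Any, Any], Any],
    is_terminal: Callable[[Any], bool],
    result: Callable[[Any], float],
    n_playouts: int = 100,
    rng: Optional[random.Random] = None,
) -> Any:
    """Flat Monte Carlo: pick the action with the highest average playout result."""
    rng = rng or random.Random()
    best_action, best_value = None, float("-inf")
    for action in legal_actions(state):
        total = 0.0
        for _ in range(n_playouts):
            # Take the candidate action, then play randomly to the end of the game.
            s = step(state, action)
            while not is_terminal(s):
                s = step(s, rng.choice(legal_actions(s)))
            total += result(s)  # 1 for a win, 0 for a loss
        expected = total / n_playouts  # estimated expected value of this action
        if expected > best_value:
            best_action, best_value = action, expected
    return best_action
```

With a toy game in which each move adds 1, 2 or 3 to a running total and only landing exactly on 10 counts as a win, calling `monte_carlo_select(8, lambda s: [1, 2, 3], lambda s, a: s + a, lambda s: s >= 10, lambda s: 1.0 if s == 10 else 0.0)` chooses 2, the only action that wins in every playout.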

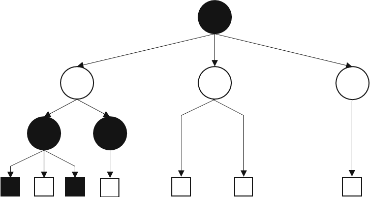

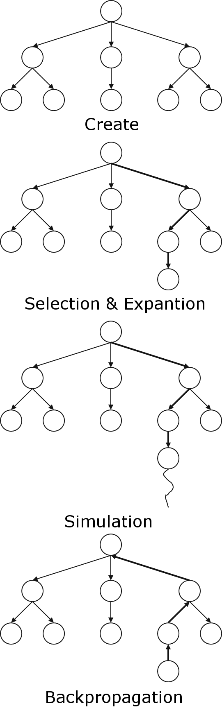

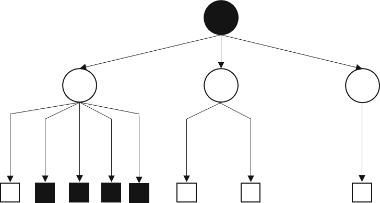

Monte Carlo Tree Search (hereinafter “MCTS”) applies this method to tree search. The characteristics of MCTS are shown in Figures 3 and 4. MCTS allocates more simulations to actions that appear useful (Figure 3), and when the number of times an action has been selected exceeds a certain value, the tree is expanded at that node (Figure 4).

Figure 3. MCTS: many simulations at useful action


The flow of MCTS is as follows.

1. Create a game tree whose root is the current board, with the actions available at the root as its child nodes.

2. Play the game to the end according to the rules (hereinafter, a “playout”). During this step, nodes that have been selected a certain number of times are expanded.

3. When the outcome (win or loss) is determined, record it on all the nodes selected along the way.

4. Treating steps 1 to 3 as one iteration, repeat for a specified number of iterations.

Figure 5 shows this procedure.

An advantage of MCTS is that it does not require designing an evaluation function. For this reason, it has been widely used in games where evaluating a board position is difficult. One example is the Go program CrazyStone [4], which adopted MCTS and became strong enough to win a computer Go tournament at that time.

3.2. UCT

UCT incorporates UCB (Upper Confidence Bound) into tree search. UCB was developed by Auer et al. to solve the Multi-Armed Bandit problem [5]. The problem is commonly illustrated by a gambler playing several slot machines: to maximize profit, the gambler must decide which machine to play. UCB is used to solve this problem. The UCB value of each machine is calculated from that machine’s play history, and by choosing machines according to it, the gambler can earn a large profit.

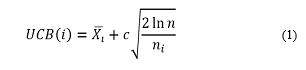

UCB is calculated by (1).

In (1), the expected value of the i-th slot machine appears together with n_i, the number of coins inserted into the i-th machine, and n, the total number of coins inserted into all machines. The constant c sets the strategy’s emphasis: changing it changes how strongly the strategy favors exploration. A commonly used value is 1, which balances exploration (search) against profit acquisition.
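As a sketch of how UCB guides play in the slot-machine setting, the code below uses the UCB1 form of Auer et al. with an exploration constant c multiplying the bonus term, that is mean_i + c * sqrt(2 ln n / n_i). Whether (1) places the constant exactly this way is an assumption. Machines that have never been played get an infinite score so that each is tried once, and the payout probabilities in the usage note are invented for illustration.

```python
import math
import random
from typing import List


def ucb_scores(means: List[float], counts: List[int], c: float = 1.0) -> List[float]:
    """UCB1-style score per machine; machines never played score +inf."""
    n = sum(counts)
    scores = []
    for mean, n_i in zip(means, counts):
        if n_i == 0:
            scores.append(math.inf)
        else:
            scores.append(mean + c * math.sqrt(2 * math.log(n) / n_i))
    return scores


def run_bandit(payout_probs: List[float], n_plays: int, c: float = 1.0, seed: int = 0) -> List[int]:
    """Play Bernoulli slot machines by always choosing the highest UCB; return plays per machine."""
    rng = random.Random(seed)
    k = len(payout_probs)
    counts = [0] * k
    means = [0.0] * k
    for _ in range(n_plays):
        scores = ucb_scores(means, counts, c)
        i = scores.index(max(scores))
        reward = 1.0 if rng.random() < payout_probs[i] else 0.0
        counts[i] += 1
        means[i] += (reward - means[i]) / counts[i]  # incremental average payout
    return counts
```

For example, `run_bandit([0.1, 0.9], 2000)` spends the large majority of its 2000 plays on the second machine while still occasionally re-checking the first.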


UCT performs tree search by treating the choice among child nodes at each node as a Multi-Armed Bandit problem. The UCB values are updated with the simulation results, and the most valuable action is sought.
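Combining the MCTS steps of Section 3.1 with UCB selection gives a UCT loop, sketched below. This is an illustrative implementation, not the authors’ Hanafuda player: the game interface and toy game are hypothetical, rewards are taken from a single player’s point of view (a two-player version would score each node from the perspective of the player to move, and Hanafuda’s hidden information is ignored), non-terminal states are assumed to have at least one legal action, and the final move is the most-visited root child, which is one common choice. The hypothetical `expand_threshold` parameter plays the role of “a certain number of times” in step 2.

```python
import math
import random
from typing import Any, Callable, List, Optional


class Node:
    """One search-tree node: a game state plus visit statistics."""

    def __init__(self, state: Any, parent: Optional["Node"] = None, action: Any = None):
        self.state = state
        self.parent = parent
        self.action = action  # action that led from the parent to this node
        self.children: List["Node"] = []
        self.visits = 0
        self.total_reward = 0.0


def ucb(child: Node, parent_visits: int, c: float) -> float:
    """UCB1-style score of a child; unvisited children are tried first."""
    if child.visits == 0:
        return math.inf
    mean = child.total_reward / child.visits
    return mean + c * math.sqrt(2 * math.log(parent_visits) / child.visits)


def expand(node: Node, legal_actions: Callable, step: Callable) -> None:
    node.children = [Node(step(node.state, a), node, a) for a in legal_actions(node.state)]


def uct_search(root_state, legal_actions, step, is_terminal, result,
               n_iterations=1000, expand_threshold=5, c=1.0, seed=0):
    rng = random.Random(seed)
    root = Node(root_state)
    expand(root, legal_actions, step)  # step 1: children = actions available at the root
    for _ in range(n_iterations):
        # Selection: descend through expanded nodes by maximum UCB.
        node = root
        while node.children:
            parent = node
            node = max(parent.children, key=lambda ch: ucb(ch, parent.visits, c))
        # Expansion: a leaf selected often enough gets its own children.
        if node.visits >= expand_threshold and not is_terminal(node.state):
            expand(node, legal_actions, step)
            node = rng.choice(node.children)
        # Playout (step 2): random moves until the game ends.
        s = node.state
        while not is_terminal(s):
            s = step(s, rng.choice(legal_actions(s)))
        reward = result(s)
        # Backpropagation (step 3): record the outcome on every node on the path.
        while node is not None:
            node.visits += 1
            node.total_reward += reward
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).action
```

With a toy game where each move adds 2, 3 or 4 to a total and only reaching exactly 10 wins, `uct_search(7, lambda s: [2, 3, 4], lambda s, a: s + a, lambda s: s >= 10, lambda s: 1.0 if s == 10 else 0.0)` concentrates its visits on the winning action 3.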

3.3. Research to produce “fun” by UCT

Of the many possible definitions of fun, in our previous research we defined “fun” as follows [6].

- increase the variance of the points gained or lost

- bring the final score of a match close to ±0

The first definition aims to keep a match from becoming boring. A match tends to be boring when few points change hands, so we judged that a match is interesting when players actively exchange points, and adopted this definition. The second definition aims to improve the player’s impression of the match. Human players tend to form a worse impression of a match when they lose heavily to a computer player. Conversely, it is also undesirable for a human player to win too much, so balance is needed. We therefore judged that the score should be kept around ±0, without any large win or loss. We provisionally adopted this definition after proposing it to several players, who responded that it was reasonable.
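The two quantities in these definitions can be computed from the per-game score changes of a match. This sketch assumes the changes are recorded from the computer player’s side and uses the population variance; the paper does not say which variance is meant, and the function name is hypothetical.

```python
from statistics import pvariance
from typing import List, Tuple


def fun_measures(score_changes: List[int]) -> Tuple[float, int]:
    """Return (variance of per-game score changes, final match score).

    score_changes holds the computer player's gain (+) or loss (-) in each game.
    Under the two definitions, a "fun" match has a large variance and a final
    score near 0.
    """
    return pvariance(score_changes), sum(score_changes)
```

For a match with changes [6, -5, 3, -4] this gives a variance of 21.5 and a final score of 0, while [1, -1, 1, -1] has the same final score but a variance of only 1, so under the first definition the former counts as more fun.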

To produce the “fun” we defined, we changed how UCT is used. Specifically, we changed the rule for selecting a card from the hand as follows.

In (2), point is the score obtained by the computer player, and i is the index of a card in its hand. When the computer player’s score is positive, Equation (2) selects a card that gives points away. For example, if the computer player’s score is +7 points, it selects the card that can bring its score closest to 0. In this way, we tried to produce “fun”.
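Equation (2) is not reproduced in this text, so the following is only one possible reading of the rule described here: assuming UCT supplies an estimated score change for each card in hand, the computer player picks the card whose estimate brings its current score closest to 0. The dictionary of estimates and the function name are hypothetical.

```python
from typing import Dict


def select_balancing_card(point: int, expected_change: Dict[str, float]) -> str:
    """Pick the hand card whose estimated outcome brings the computer player's
    score closest to 0 (a hypothetical reading of Equation (2))."""
    return min(expected_change, key=lambda card: abs(point + expected_change[card]))
```

With point = 7 and estimates {"a": 3.0, "b": -6.0, "c": -10.0}, the resulting totals are 10, 1 and -3, so card "b" is chosen.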


However, the experimental results were not good. Comments from the human players who played against the computer player are shown in Table 2.

Table 2. Comments from human players

| Name | Good points | Bad points |
|---|---|---|
| A | · It is not too strong, but it will not let me win easily.<br>· It is not a one-sided match; I can enjoy the match to a certain extent. | · The computer player sometimes makes strange selections. |
| B | · I could see it trying to make a game of it. | · Its strength was not consistent. I often felt it was too weak. |

Analyzing these comments, we can say the following.

- It is not so strong that human players cannot win.

- The computer player is trying to entertain them.

- Some people find it too weak.

- In some situations, its selections seem strange.

Accordingly, our definition of “fun” is insufficient, and a more precise definition is needed. As a first step toward such a definition, this research investigates what Hanafuda players themselves consider fun.

3.4. Other research

Research by Ikeda et al. is an example of work on producing fun. Ikeda et al. studied “computer Go that entertains human players”. To this end, they interviewed experts on the question “What are the elements that make Go entertaining?” [7]. Based on the results, research on elements such as “what is humanness” is under way. Ikeda et al. reported findings about “human-like AI” and are considering whether these can be used to create “interesting AI”. We decided to conduct our research along the same lines: as in Ikeda et al.’s research, this study is a first step toward narrowing down the elements of fun.

4. Questionnaire survey

This chapter describes the questionnaire survey we conducted; its results are described in the next chapter.

4.1. Survey method

We e-mailed the questions to people who had consented to the survey and collected their replies. The survey ran from 16 March to 2 April 2018. Of the 76 people who agreed to participate, 61 replied within the period, a response rate of 80.3%; the total number of respondents is therefore 61. This survey targeted players who regularly play Hanafuda; it was not conducted after recruiting participants and having them play matches.

4.2. Survey content

The questionnaire consisted of the following items:

1. Attributes of survey target
    - Age and gender
    - Number of years that they’ve been playing Hanafuda
    - Frequency of playing Hanafuda

2. Questions about fun
    - Elements making the Hanafuda interesting
    - Elements making the Hanafuda uninteresting
    - Recommendations for Hanafuda

Question 2 was answered in free-text form.

5. Questionnaire results

5.1. Attributes of survey target

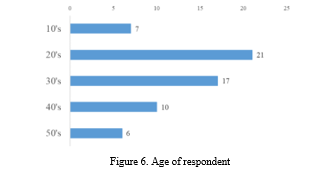

- Age and gender

All respondents were male. Their ages ranged widely, from teens to people in their 50s, with an average age of 31.9 years.

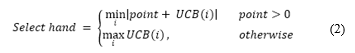

- Number of years that they’ve been playing Hanafuda

The minimum was one and a half years, and the maximum was 15 years.

Figure 7. Number of years playing Hanafuda


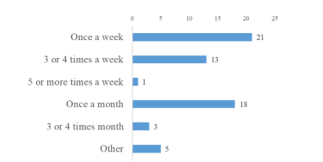

- Frequency of playing Hanafuda

The most frequent answer was about once a week, followed by about once a month and then 3 to 4 times a week. There was also an answer that the respondent does not play regularly but only on particular occasions. These answers include play not only with physical cards but also on electronic devices.

Figure 8. Frequency of playing Hanafuda


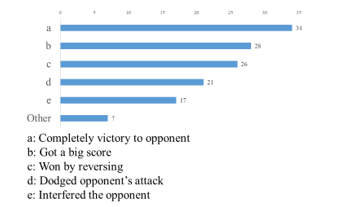

Figure 9. Elements making the Hanafuda interesting


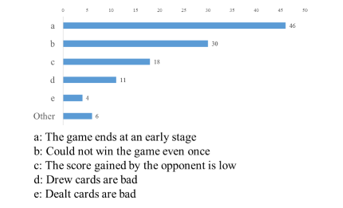

Figure 10. Elements making the Hanafuda uninteresting


5.2. Questions about fun

- Elements making the Hanafuda interesting

We asked what elements make Hanafuda fun. The results are shown in Figure 9.

The elements with the most responses were:

- Complete victory over the opponent

- Scoring big points

- Winning with a comeback

These were the top three items.

- Elements making the Hanafuda uninteresting

Conversely, we asked what elements make Hanafuda unenjoyable. The results are shown in Figure 10.

- Recommendations for Hanafuda

We also asked for recommendations regarding Hanafuda. The most frequent topic was “Tsukimi-Zake and Hanami-Zake”. Each of these winning hands yields 5 points with only two cards, so they are easy to complete, and many players appear to regard this as a problem. The second most frequent topic was the rules of Hanafuda. Hanafuda has many sets of rules, but the mainstream today is “Koi-Koi”, and some players seem to wish that other, more diverse rules would also become common. We also received many other recommendations concerning the rules and the spread of the game.

6. Future research policy

In future work, we will conduct a more detailed questionnaire survey based on the answers obtained. So far, we have only collected the elements of Hanafuda that individual players find fun; we still need to identify elements that satisfy many players. Specifically, we will conduct a two-choice survey for each element obtained in this research, for example asking for a “Yes / No” answer to the question “Do you find a complete victory over the opponent interesting?”. Based on this survey, we aim to create computer players that many players find interesting. It will also be necessary to refine the questions through feedback from many players, to analyze the essence of what makes the game interesting, and to analyze the results of the detailed questionnaire thoroughly.

7. Conclusion

In this research, we investigated the elements that make a game fun, with the goal of creating a computer player that human players enjoy. Among the many kinds of games, this research targeted Hanafuda, a Japanese card game. We sent questions to Hanafuda players, and from their replies we learned what each player considers fun. Further investigation is needed to arrive at a more definite definition of fun.

References

[1] M. Kondoh, et al., “Development of Agent Predicting Werewolf with Deep Learning” in International Symposium on Distributed Computing and Artificial Intelligence, Toledo, Spain, 2018. https://doi.org/10.1007/978-3-319-94649-8_3

[2] M. Moravčík, et al., “DeepStack: Expert-level artificial intelligence in heads-up no-limit poker”, Science, 356(6337), 508-513, 2017. https://doi.org/10.1126/science.aam6960

[3] Y. Takaoka, et al., “A study on strategy acquisition on imperfect information game by UCT search” in the 2017 IEEE/SICE International Symposium on System Integration, Taipei, Taiwan, 2017. https://doi.org/10.1109/SII.2017.8279334

[4] R. Coulom, “Monte-Carlo Tree Search in Crazy Stone” in Game Prog. Workshop, Tokyo, Japan, 2007.

[5] P. Auer, et al., “Finite-time Analysis of the Multiarmed Bandit Problem”, Machine Learning, 47(2-3), 235-256, 2002. https://doi.org/10.1023/A:1013689704352

[6] Y. Takaoka, et al., “A Fundamental Study of a Computer Player Giving Fun to the Opponent”, Computer Science & Communications, 6(1), 32-41, 2018. https://doi.org/10.4236/jcc.2018.61004

[7] M. Yamanaka, et al., “Bad Move Explanation for Teaching Games with a Go Program”, IPSJ SIG Technical Report, 2016-GI-35(5), 1-8, 2016. (Japanese)
