Visualizing Affordances of Everyday Objects Using Mobile Augmented Reality to Promote Safer and More Flexible Home Environments for Infants

Volume 3, Issue 6, Page No 74-82, 2018

Author’s Name: Miho Nishizaki a)

Department of System Design, Tokyo Metropolitan University, 191-0065, Japan

a) Author to whom correspondence should be addressed. E-mail: mnishiza@tmu.ac.jp

Adv. Sci. Technol. Eng. Syst. J. 3(6), 74-82 (2018); DOI: 10.25046/aj030607

Keywords: Augmented Reality (AR), Affordance, Visualization, Accident, Development, Infant

This study presented a prototype augmented reality (AR) application that helps visualize the affordances of everyday objects for infants in their home environments to prevent accidents and promote development. To detect and visualize affordances, we observed 16 infants regarding how they perceive and handle common objects from 4 to 12 months of age in their homes in Tokyo, Japan and in Lisbon, Portugal. Based on the longitudinal observation data, we developed an AR application for handheld devices (iPhone and iPad) and tested two types of vision-based markers. Ten types of basic objects were selected from the results of the observation and embedded into the AR markers. AR contents illustrated infants’ actions toward objects based on actual video data recorded for security purposes. To confirm the prototype’s advantages and improvements, informal user interviews and user tests were conducted. The results demonstrated that the prototype reveals the relationship between infants and their home environments, what kinds of objects they have, how they perceive objects, and how they interact with these objects. Our study demonstrates the potential of this application’s AR contents to enable adults to better understand infants’ behavior towards objects by considering the affordances of everyday objects. Specifically, our app assists in improving the perspective of adults who live with infants and promotes the creation of more flexible and safer environments.

Received: 15 August 2018, Accepted: 20 October 2018, Published Online: 01 November 2018

1. Introduction

1.1. The Environment and Augmented Reality

Augmented reality (AR) technology enables us to visualize our living environment in novel ways. In 1968, Ivan Sutherland created the first AR experience using a head-mounted display [2], [3]†. In the early 1990s, the term “augmented reality” was coined alongside the first technological developments underlying augmented devices [4], [5]. Azuma described AR as systems with three technical components: (1) use of real and virtual elements, (2) real-time interactivity, and (3) 3D registration [6]. The third characteristic, “3D registration,” refers to the system’s ability to anchor virtual content in the real world, that is, in a part of the physical environment [7].

AR enhances user perceptions of and interactions with the real world by seamlessly merging virtual content with reality [5], [7]. Whereas virtual reality technology replaces reality by creating a whole immersive virtual environment, AR enhances the reality of an experience without changing it by projecting partially virtual/digital content in a non-immersive way.

Current AR devices require four elements: displays, input devices, tracking, and computers. Displays include head-mounted, handheld, and spatial displays. Mobile AR systems use handheld displays such as cellular phones, smartphones, and tablets. The first mobile-phone-based AR application was demonstrated in 2004 [8], and the mobile AR market has since grown consistently [6], [9], [10–12]. Such applications became well established with the advent of Pokémon Go in July 2016. This successful location-based mobile game required users to find virtual characters in the real world, and it immediately became an international sensation. It entered users’ physical lives and changed their locomotor behavior, with potential effects on their health. Pokémon Go was launched for the Apple Watch in December 2016, which helped further promote these health effects. Even now, in 2018, the game remains popular [13].

AR application development for mobile devices has become a large and fast-growing area as AR glasses and head-mounted displays are being improved further. The AR market is valued at $83 billion, whereas the VR market is valued at only $25 billion [14]. Azuma suggested the following potential application areas for AR: medical visualization, equipment maintenance and repair, annotation, robot path planning, entertainment, and military aircraft navigation and targeting [7]. Thus far, AR has been used in fields such as architecture, clinical psychology, cognitive and motor rehabilitation, education, and entertainment. AR has become popular; nonetheless, more research is needed on how to use it to better living experiences. The use of AR could be increased by improving device and recognition technologies. However, few studies and applications of AR currently exist for solving everyday issues.

1.2. Accidents and Developments in Home Environments

Young children spend most of their time at home while they grow up. Although most people think of accidents as things that happen outside the house, accidental injuries among infants and young children at home are a major issue worldwide. For example, in Japan, 14.5% of deaths among children aged 0–4 years are caused by home accidents [15]. Similarly, in the UK and the USA, the 0–4 year age group is at the highest risk of home accidents, and accidents at home are the leading cause of death [16–18]. Therefore, many studies have explored how to prevent accidents at home [19–23]. However, suitable methods for collecting actual data at home and for conducting quantitative and qualitative analyses have not yet been established.

Most injury alerts are based on hospital reports [24–27]. The Japan Pediatric Society has published “Injury alerts” since 2008 and “Follow-up articles” since 2011 on its website, based on members’ reports. Although such information is useful and important, it is often difficult for non-specialists to understand. Therefore, such information needs to be presented clearly and be accompanied by detailed explanations. Several studies have highlighted that accidental injuries suffered by children at home are preventable [21–24]. This study uses an AR system to visualize and simulate infants’ behavior in a concise and clear manner to provide caregivers of children with insights into their own living environments.

1.3. Visualizing Possibilities-Affordances

It is very difficult to protect infants from every possible danger. At the same time, if the only goal is to prevent accidents, children may be barred from exploring at every stage of development. It is important to avoid overprotecting or overcontrolling children. Therefore, we employed James Gibson’s theory of affordance [28,29] to deal with infants’ unexpected behaviors at home as they grow. Affordance is an essential concept of the ecological approach; it refers to the possibilities or opportunities for behavior that the environment offers an animal. Gibson proposed that an affordance is a fact of the environment as well as a fact embodied by an act. It implies the complementarity of the animal and the environment [29]. Thus, the affordances of the home environment, in which we spend most of our time, are inseparable from our way of life. Furthermore, according to Gibson, “the affordances of the environment are what it offers the animal, what it provides or furnishes, either for good or ill [29].”

People do not perceive the same affordances even when they share the same objects or space. Although adults know how to use objects at home as designed, infants explore every object and find a variety of possibilities. We do not have to know the name of an object or how to use it to perceive what it affords. Empirical work on individual affordances has advanced through a series of pioneering studies since 1960 [30–34]. However, researchers have defined affordance in a variety of ways, and methods for generalization are still being investigated because they have not yet been thoroughly established [35–39]. Experiments on infants’ natural activities have been conducted in a laboratory playroom [40–42]; however, such studies are few in number, and little is known about everyday home environments. Therefore, the current study aims to present a method adaptable to the home environment that would help visualize resources to support the healthy growth of children from a variety of aspects.

This study has three main objectives: first, to identify infants’ unique behaviors in home environments through longitudinal observations during the first year; second, to examine whether the data from the two countries differ; and third, to present an AR mobile application prototype that enables users to simulate infants’ behaviors toward objects, helping caregivers to understand these behaviors intuitively and hence provide better environments for infants.

2. Methods

2.1. Participants

We recruited ten healthy infants (6 males, 4 females) in Japan and six healthy infants (4 males, 2 females) in Portugal from middle or upper middle-class families for the longitudinal observations. In Japan, eight Japanese families and one Hungarian family were from the Tokyo area. In Portugal, all Portuguese families were from the Lisbon area.

2.2. Procedure

Observation. Longitudinal observations were conducted to clarify how infants behave at home during their first year. We observed the activities of 16 infants at their homes from 4 to 12 months of age. An experimenter or the infants’ caregivers recorded the infants’ behaviors with digital video cameras (EX-ZR500; Casio Corp) for over 60 min per month. The recordings needed to cover several natural behaviors (e.g., recordings of only one activity, such as napping, were to be avoided). When caregivers recorded the videos, they were asked to send the recorded video data to us every month via mail or a file transfer service. Although parts of the monthly data from both countries were unavailable for family reasons, we collected 122 h of digital video for Japan and 53 h for Portugal in total.

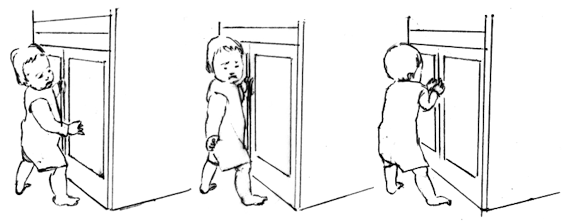

Design and Development. This process consisted of four steps: (1) sketching and paper prototyping; (2) design of an iOS application using Adobe Illustrator CC and Photoshop CC; (3) development of the iOS application using OpenCV 2.4.9, Objective-C, and Swift 4.0; and (4) creation of AR movies based on videos from the observations using Adobe Photoshop CC and Adobe Lightroom CC. All AR movies were illustrated manually as line drawings to protect privacy and to make it easy to zoom in on infant–object interactions. More than three drawings were needed for every second of a movie, and each movie plays for less than 60 s. Line drawings were digitized using Lightroom and Photoshop. Every image was saved at a resolution of 448 × 336 pixels, and the images were combined and exported as QuickTime movies. An example of line drawings of a cabinet with an infant (11 months, 17 days) is shown in Figure 1.

Figure 1. Example of line drawings (cabinet).
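The drawing workload implied by the figures above (more than three drawings per second, less than 60 s per movie, 448 × 336 pixel frames) can be estimated with a short sketch. The function name `drawings_needed` and the default rate are illustrative assumptions, not part of the original application, and the result is only a lower bound because the text says "more than three" drawings per second.

```python
import math

# Frame size stated in the text (pixels).
FRAME_WIDTH, FRAME_HEIGHT = 448, 336


def drawings_needed(duration_s: float, drawings_per_second: float = 3.0) -> int:
    """Lower-bound estimate of the hand drawings required for one AR movie.

    drawings_per_second defaults to 3.0 because the text reports "more than
    three drawings" per second; the true count is therefore at least this.
    """
    if duration_s < 0 or drawings_per_second <= 0:
        raise ValueError("duration must be >= 0 and rate must be > 0")
    return math.ceil(duration_s * drawings_per_second)


if __name__ == "__main__":
    # Hypothetical movie lengths, chosen only to illustrate the scaling.
    for seconds in (10, 30, 60):
        print(f"{seconds:>2} s movie: at least {drawings_needed(seconds)} drawings")
```

Because the estimate grows linearly with duration, even a short movie requires a substantial number of manual drawings.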

2.3. Data Coding

Two coders scored the infants’ attributes, house style, behaviors, postures, milestones, ages, places, durations, objects/people (if the infant touched them directly), and surfaces from the digital video recordings using the Datavyu coding software [43], with reference to the caregivers’ comments or notes.

2.4. Ethical Considerations

All procedures for this study were approved by the relevant institutional review boards in consideration of ethical standards for research on human subjects. Informed consent was obtained from all families.

3. Results

3.1. Reality from Observation

A prototype AR application was developed to enable the simulation of children’s actions in the first year based on recorded data from observations. From the recorded data, we observed infants’ spontaneous interactions with surrounding objects at their homes. Two infants (one Japanese and one Portuguese) were excluded from the analysis because their data did not cover the full 9 months.

Number of Objects at Home. Infants’ interactions with surrounding objects were counted using the observational data. All counted objects met the following two requirements: (1) the infant directly touched the object by himself or herself, and (2) the object was an item used daily in the infant’s home, such as furniture.

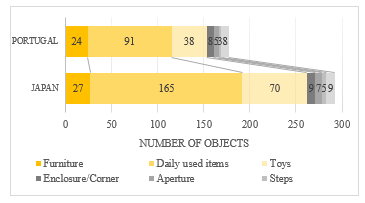

On the whole, Japanese infants between 4 and 12 months of age encountered 292 types of objects at their homes. For Portuguese infants, 177 types of objects were observed at their homes. Figure 2 shows the kinds of objects and how many objects exist in each country. Following the ecological psychologist James Gibson’s definition of objects, we categorized objects into two types: detached objects and attached objects [29]. A detached object can be displaced from the surface, whereas an attached object cannot. In our recordings in Japan, we identified 262 detached objects, including furniture, daily used items, and toys, and 30 attached objects, including enclosures/corners, apertures, steps, and concave/convex objects. Similarly, in Portugal, we observed 153 detached objects and 24 attached objects. A number of differences were found in the detached objects, especially daily used items and toys. Japanese infants could reach more stationery items (e.g., crayons, erasers, scales, pencils, pens, drawing boards, papers, and notebooks), kitchen items (e.g., cups, dishes), laundry items (e.g., clothespins, hangers, laundry baskets), and room-conditioning items (e.g., thermometers, humidifiers, hot water bottles, heaters, stoves) than Portuguese infants. Regarding toys, more handmade toys, digital video games, and character items were observed in Japanese homes than in Portuguese homes.
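As a consistency check on the counts reported above, the following minimal sketch sums the detached and attached object types per country and computes the detached share. The class and variable names are illustrative assumptions; only the four counts come from the text, and this split is not the same quantity as the category percentages plotted in Figure 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectCounts:
    """Number of object types observed in one country."""
    detached: int
    attached: int

    @property
    def total(self) -> int:
        return self.detached + self.attached

    @property
    def detached_share(self) -> float:
        """Fraction of observed object types that are detached."""
        return self.detached / self.total


# Counts taken from the paragraph above.
COUNTS = {
    "Japan": ObjectCounts(detached=262, attached=30),
    "Portugal": ObjectCounts(detached=153, attached=24),
}

if __name__ == "__main__":
    for country, counts in COUNTS.items():
        print(f"{country}: total={counts.total}, "
              f"detached share={counts.detached_share:.1%}")
```

The totals reproduce the 292 and 177 object types stated for Japan and Portugal, respectively.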

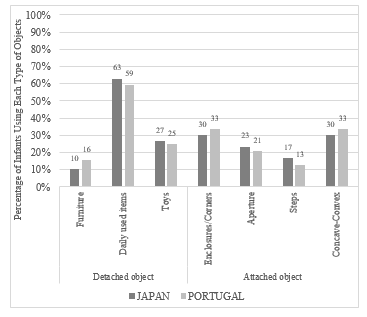

Figure 3 shows the percentage of objects in each category that infants interacted with during the observation period in each country. Detached objects, especially daily used objects, were observed most frequently among all categories in both countries. This suggests that the tendency to interact with objects in these categories is similar across the two countries.

Based on this result, we selected ten objects which were common, continuous, and frequently-observed, for this prototype AR application. Detached objects included cabinet, chair, cushion, futon/mattress, sofa, and table. Attached objects included bathtub, door, threshold, and wall.

Figure 2. The number of objects that infants directly touched at home in each country. The yellow bars indicate detached objects and the monochrome bars indicate attached objects.

Figure 3. Percentage of each object-category in each country.

Actions and Perceptions. The observations captured infants’ spontaneous activities involving objects in their homes. These activities combine developmental possibilities and accident risks. To clarify how often each kind of action occurred or was likely to occur, we counted the possibilities of development, of accidents, and of both accidents and development across all recordings. We counted possibilities rather than actual accidents because, during observation, we tried to protect the infant before an accident could occur. The counts therefore also include moments such as a parent saying “watch out” to the infant or supporting the infant’s posture before a fall.

The number of sessions was 1771 in total (M = 196.78, SD = 110.17) for Japanese infants and 910 (M = 182, SD = 117.64) for Portuguese infants. The three categories were defined as follows. Accidents were linked to injury-inducing behavior such as losing balance (slipping, tripping, and falling down), drinking or eating harmful substances or objects, and touching something dangerous for infants. Preventative actions taken by people in the immediate vicinity before such an accident could occur were also included in this category. Development was associated with motor, perceptual, and cognitive progress beyond the infant’s earlier level. The “both” category applied when accident and development were recognized in the same session.
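The reported means follow from the session totals once the excluded infants are taken into account (ten recruited in Japan and six in Portugal, with one excluded from each). The sketch below reproduces that arithmetic; the function name is an illustrative assumption, and the standard deviations cannot be recomputed here because the per-infant session counts are not given in the text.

```python
def mean_sessions(total_sessions: int, infants_recruited: int,
                  infants_excluded: int = 1) -> float:
    """Mean number of sessions per analysed infant."""
    analysed = infants_recruited - infants_excluded
    if analysed <= 0:
        raise ValueError("at least one infant must remain in the analysis")
    return total_sessions / analysed


if __name__ == "__main__":
    # Totals and sample sizes are taken from the text.
    print(f"Japan:    M = {mean_sessions(1771, 10):.2f}")
    print(f"Portugal: M = {mean_sessions(910, 6):.2f}")
```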

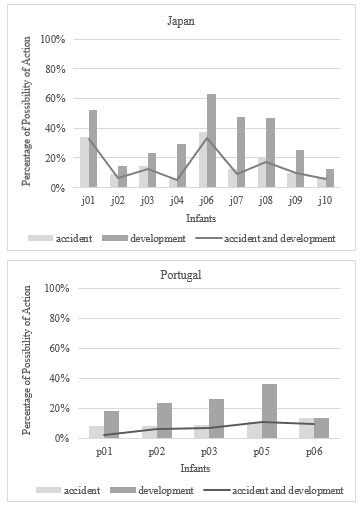

Figure 4. Percentage of action possibilities related to development, accidents, and both in each country per infant.

In both countries, across all sessions, possibilities of development outnumbered possibilities of accidents and of accident and development combined. Figure 4 shows the percentage of action possibilities for development, accidents, and both in each infant’s total sessions per country. The values for accidents and for both accidents and development were close in most cases. This indicates that accidents tend to involve developmental issues that might have affected the situation.

For two infants (Japan: j01 and j06), possibilities of accidents exceeded 30%. Common features in these incidents included detached objects, furniture, and daily used items in both countries. These objects were adapters, cords of lights/AV equipment/laptop PCs/phones, pencils on tables, cream in tube packaging, washing machines, bathtubs, sinks, fences, tripods, strings, steps, low tables, cabinets, and doors. However, the only observed baby products that caused accidents were the walker and the high chair. Needless to say, accidents are related to motor developmental changes; however, the results suggest that many ordinary products designed for a particular purpose are perceived differently by infants, and this difference may cause accidents. For example, dressers, cabinets, and washing machines sometimes offer infants opportunities to touch, hang on, climb on, and enter them, and a number of accidents have been reported for washing machines in particular [44].

3.2. AR Application and Vision-Based Tracking

Vision-based tracking is defined as registration/recognition and tracking approaches [5, 6]. In this study, two types of vision-based marker codes were examined (Figure 5). For the marker in Figure 5 (a), OpenCV, MATLAB, and Illustrator were used to combine dots and object icons. The marker in Figure 5 (b) uses Vuforia and Illustrator to combine textures and icons. Both markers can be printed with an ordinary printer and attached to real-world objects.

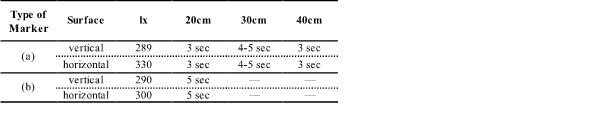

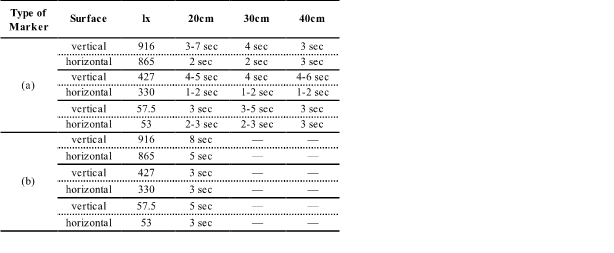

We measured tracking times to determine whether the two types of markers (Figure 5) differ along two surface orientations (horizontal and vertical) under two lighting conditions (A: fluorescent light; B: flat-surface fluorescent light). Tables 1 and 2 show the relation between velocity and illuminance for the two surface orientations and the distance from marker to device. To confirm the accuracy of marker recognition under the two different lighting conditions, we used an illuminance meter (KONICA MINOLTA T‑10). Differences between the two markers were observed at distances greater than 30 cm regardless of lighting conditions (Tables 1 and 2). Marker (b) was less stable than marker (a) under both conditions. Moreover, additional differences were found between the two surface orientations under lighting (B). Although the illuminance was sufficient to capture an AR marker, the tracking time was slightly longer for the vertical surface than for the horizontal surface. This suggests that surface orientation affects tracking in the same manner as in other AR marker systems.
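The measurement design described above (two markers, two surface orientations, two lighting conditions, several marker-to-device distances) can be laid out as a condition grid. The following is a minimal sketch under stated assumptions: the condition labels, the three distances, and the helper names are hypothetical and chosen only for illustration, and no measured values from Tables 1 and 2 are reproduced.

```python
from itertools import product
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# (marker, orientation, lighting, distance in cm)
Condition = Tuple[str, str, str, int]

MARKERS = ("a_dot", "b_texture")
ORIENTATIONS = ("horizontal", "vertical")
LIGHTING = ("A_fluorescent", "B_flat_surface_fluorescent")
DISTANCES_CM = (20, 30, 40)  # assumption: the exact distances are not listed here


def condition_grid() -> List[Condition]:
    """Every combination of marker, orientation, lighting, and distance."""
    return list(product(MARKERS, ORIENTATIONS, LIGHTING, DISTANCES_CM))


def mean_tracking_time(
    trials: Iterable[Tuple[Condition, float]]
) -> Dict[Condition, float]:
    """Average the tracking time (seconds) of repeated trials per condition."""
    grouped: Dict[Condition, List[float]] = {}
    for condition, seconds in trials:
        grouped.setdefault(condition, []).append(seconds)
    return {condition: mean(values) for condition, values in grouped.items()}


if __name__ == "__main__":
    print(len(condition_grid()), "conditions to measure")
```

Keeping the full condition as the dictionary key makes it straightforward to compare, for example, vertical against horizontal surfaces under the same marker, lighting, and distance.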

Figure 5. Examples of AR markers. Left: dot-type marker (a), Right: texture-type marker (b).

Table 1. Change of velocity related to illuminance and distance between marker and device under fluorescent light (A).

Note. Fluorescent light’s product # is FPL36EX-N, 4 tubes × 20, 2900 lm/tube.

Table 2. Change of velocity related to illuminance and distance between marker and device under flat-surface fluorescent light (B).

Note. Flat-surface fluorescent light’s product # is ELF-554P, 4 tubes × 1, F55bx/Studiobiax32, 4100 lm/tube, 3,200 K.

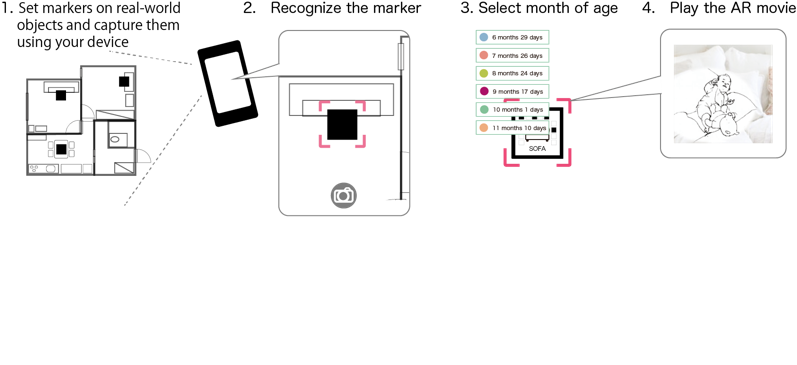

Overview. Figure 6 depicts an overview of how to use the prototype application. First, in order to watch AR movies, the user needs to put markers on objects at his/her home. After starting the application, the user can tap the camera icon. Once it is tapped, the camera window appears and the user captures the AR marker in a manner similar to taking a photograph. When the camera recognizes the marker, a pop-up appears listing the available movies by age in months. When the user selects a movie, the AR movie begins to play.
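The flow above (marker recognized, movies listed by age in months, one movie selected for playback) amounts to a lookup keyed by marker. The sketch below illustrates that idea only; it is not the application's Objective-C/Swift implementation, and the class names, the `marker_id` strings, and the file names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class Movie:
    marker_id: str    # object the marker is attached to, e.g. "cabinet"
    age_months: int   # infant's age in the source recording
    filename: str     # hypothetical file name


class MovieCatalog:
    """Maps a recognized marker to its AR movies."""

    def __init__(self, movies: Iterable[Movie]) -> None:
        self._by_marker: Dict[str, List[Movie]] = {}
        for movie in movies:
            self._by_marker.setdefault(movie.marker_id, []).append(movie)

    def movies_for_marker(self, marker_id: str) -> List[Movie]:
        """Contents of the pop-up list: movies sorted by age in months."""
        return sorted(self._by_marker.get(marker_id, []),
                      key=lambda m: m.age_months)

    def select(self, marker_id: str, age_months: int) -> Optional[Movie]:
        """Movie to play for the chosen age, or None if there is none."""
        for movie in self.movies_for_marker(marker_id):
            if movie.age_months == age_months:
                return movie
        return None


if __name__ == "__main__":
    catalog = MovieCatalog([
        Movie("cabinet", 11, "cabinet_11m.mov"),  # hypothetical entries
        Movie("cabinet", 4, "cabinet_04m.mov"),
    ])
    for movie in catalog.movies_for_marker("cabinet"):
        print(movie.age_months, "months:", movie.filename)
```

An unrecognized marker simply yields an empty list, so the pop-up step can be skipped without special handling.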

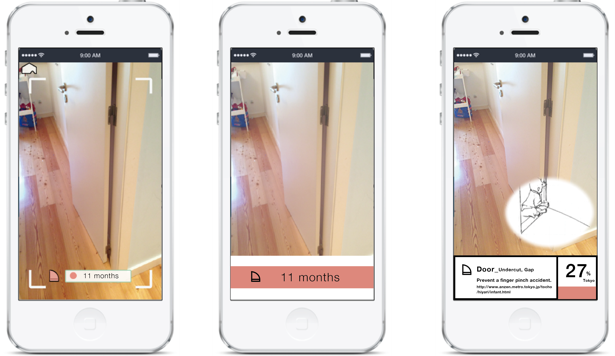

Every marker contains approximately 10 AR movies that illustrate the interactions of infants between 4 and 12 months of age with objects. The prototype enables the user to simulate how infants interact with the environment and to see how these interactions differ according to the object and the infant’s age. Furthermore, users can also learn about infants and objects. Figure 7 shows an example of a notification window with positive and negative tips. Users can find further information by tapping the object icon. Risk information is composed of recorded conversations from the data, comments from caregivers, and links to websites.

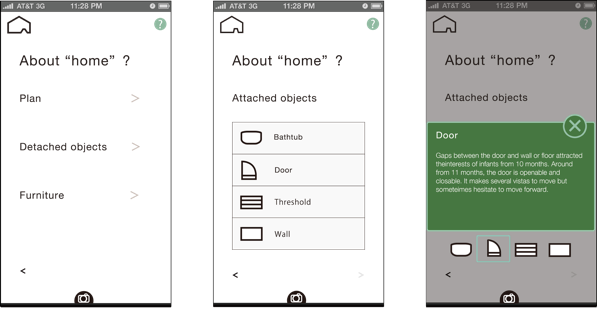

Figure 8 shows another example of attached objects for further references. This small dictionary was implemented in the application to serve as an introduction for the application users. The users were able to select the content to understand objects at home from the perspective of infants from the floor plan, attached objects, and detached objects.

3.3. User Experience

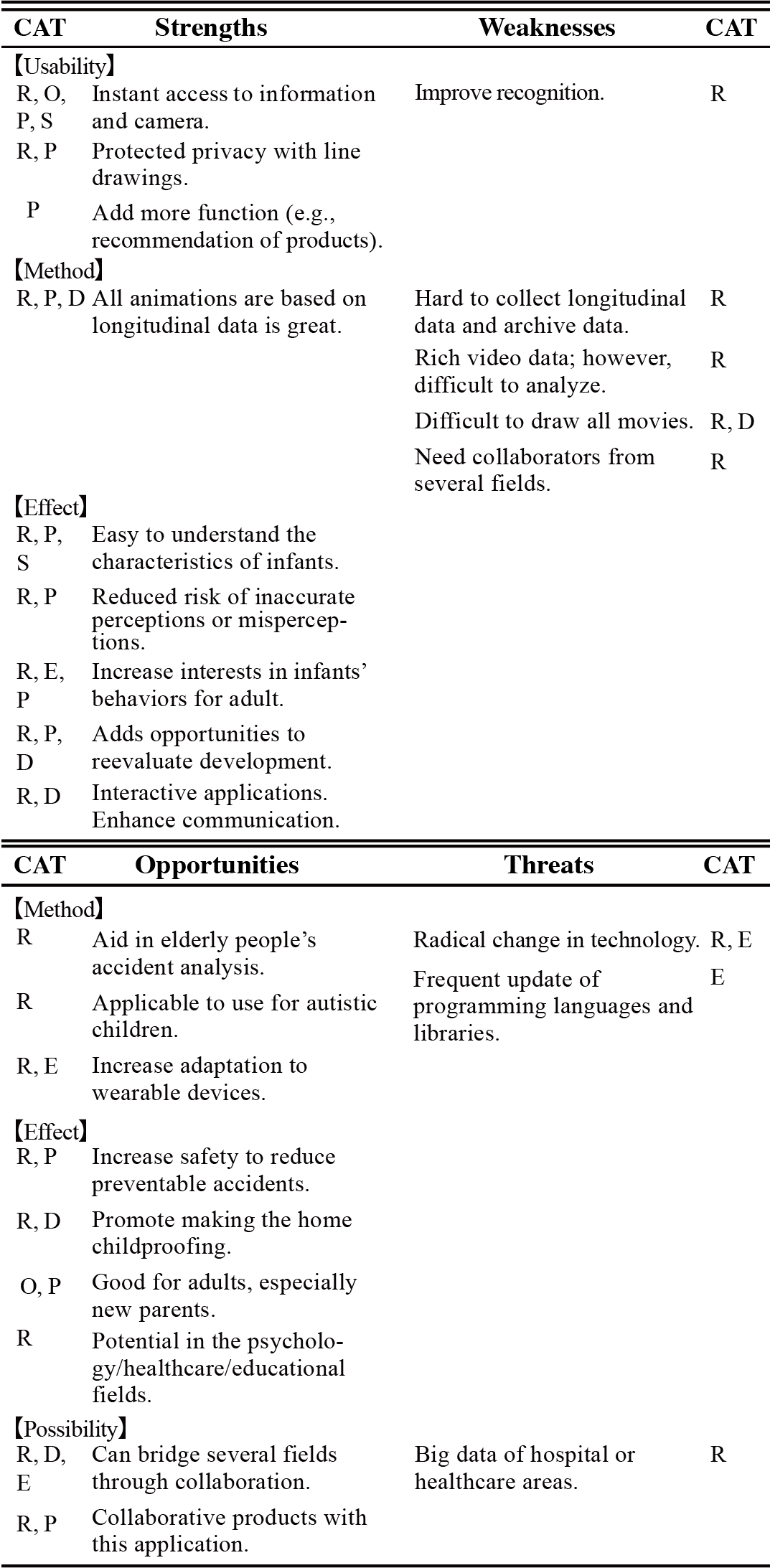

To determine whether our application is functional, informal user interviews and tests were conducted with 142 people from six countries (Italy, Japan, Portugal, Spain, UK, and USA) from 2013 to 2017 as part of the testing phase for the prototype. The application has been improved continuously since 2013 with the addition of longitudinal observation data and updated functions. The interviewees were spread across 18 fields and nine occupations and consisted of architects, designers, developers, engineers, office workers, researchers, students, teachers, and infants’ parents. The interviews revealed the advantages of the prototype and possible improvements. To examine what potential users need to know about infants, we conducted a SWOT analysis based on all results of the interviews. Table 3 shows a summary of this analysis.

Based on the interviews, the application’s advantages were categorized into strengths and opportunities. These indicate that visualizing infant behavior with AR technology on mobile devices helped adults understand the specific affordances of infants and of the home environment as a whole. Regardless of nationality, occupation, and sex, the interviewees showed interest in the infants’ behaviors as “unexpected” interactions with daily used items.

Figure 6. How to use the application.

Figure 7. Example of notifications.

Figure 8. Example of content with pop-up windows of an attached object.

Table 3. Summary of SWOT analysis for the prototype.

Note. Interviewees’ categories (CAT) are as follows: D = designers/architects; E = engineers/developers; O = office workers; P = parents; R = researchers; S = students.

In terms of strengths, almost all interviewees supported the method of converting digitally recorded videos to line drawings. In particular, line drawings gave the infants’ parents a greater feeling of security than embedded raw videos. In terms of reality and security, this method is assumed to be appropriate at the present time. With respect to usability, we found that all interviewees were familiar with the operation of smartphone devices. This suggests that developing applications for handheld devices is appropriate for everyday use. In the case of the interface, the application’s simple graphic and motion design enabled users to access the desired information and to reach the camera icons easily. However, it was also observed that tracking times were affected by the lighting conditions; under indirect lighting in particular, tracking appeared unstable at times.

Regarding opportunities, researchers who specialized in robotics, design, architecture, and computer science suggested possibilities for expanding the scope of the application to several other fields, such as preventing accidents among the elderly or children with autism. Furthermore, possible derivative products based on the application and the longitudinal data were suggested.

Suggested improvements are grouped under weaknesses and threats. Most of the weaknesses identified concerned the collection and processing of data. Researchers acknowledged that data collected in natural settings are rich resources for visualization; however, they also pointed out that such data are more difficult to analyze than data from laboratory experiments. In contrast, this study was considered well suited to collaboration with other fields because of its interdisciplinary nature; hence, engineers suggested technical improvements of the AR technology through continuous updates, and researchers commented on the analysis methods.

4. Discussion

The first year of a child’s life is full of indefinite positive and negative opportunities that enable children to perceive, act, and learn. These affordances can change with the relationship between an infant and the surrounding environmental conditions, namely, age, sex, motor skills, body scale, order of birth, object, surface, layout, space, and other people. The affordances of the environment that are available to infants are different from those available to adults, and infants’ actions are also unpredictable to adults. This may result in carelessness or overprotection. Our AR application attempts to consider both positive and negative affordances. In this respect, our application differs from previous injury prevention systems. This feature of the prototype could contribute by turning “preventable” incidents into occasions for prevention or for the promotion of development, whichever is appropriate.

4.1. Everyday Life and Objects from Observational Data

In this study, we examined the data from two countries based on longitudinal observations conducted in Japan and Portugal during the first year and measured infants’ activities and the types and numbers of objects that they directly encountered. Even though the number of infants was smaller than in our cross-sectional method, the 175 hours of recorded data captured infants’ changing processes in the real world. As a result, we found common tendencies in the daily used products that tend to be associated with accidents. This means that infants’ interactions with these objects are sometimes unpredictable to adults and vary depending on the conditions of the body-environment relationship and the layout of objects and people. Adopting only an adult viewpoint in this respect leads to carelessness or overprotection.

Concretely, this prototype attempted to visualize how infants cope with ten types of ordinary objects, using the recorded data as evidence. This visualization was enabled by AR technology and facilitated adults’ intuitive understanding by focusing on the decisive moments of the infants’ actions toward the object. Essentially, this application presents objects’ potential issues and values from the viewpoint of infants. This means that the object was re-defined from an infant’s perspective, because the number of possible accidents related to ordinary detached objects remained the same as that related to special objects for babies. Moreover, ordinary, daily used objects were observed in greater numbers than baby products. Such objects are necessary to provide different affordances to infants. The application shows concrete and specific examples with AR. Thus, it can help to prevent “preventable” accidents.

4.2. AR Mobile Application

We measured the recognition rate and the accuracy of the vision-based system for AR applications to determine whether the AR systems functioned appropriately. The results showed differences between the two AR systems depending on the conditions. We found no differences for distances in the 20–40 cm range; however, the tracking time was affected by the surface orientation. It took slightly longer to recognize the AR marker on the vertical surface than on the horizontal surface. Although this kind of problem is also found in Apple’s ARKit, a subsequent update of ARKit will make it possible to recognize vertical surfaces as well as horizontal surfaces. This platform is expected to become available in the summer of 2018 [45]. Therefore, we must re-examine and determine which platform is ideal for developing the application in the long run. Moreover, in real-world scenarios, marker tracking is sometimes unstable under indirect lighting. This suggests that the proposed application needs further improvements for everyday use. In summary, considering the function and size of the application at present, the marker-based AR system would be the most practical and adaptable approach for the unique situation of a given user. However, the number of objects in our everyday environments is increasing, and their arrangement also changes with time. A marker-less vision-based tracking system will be developed in the future using our ongoing longitudinal recorded video resources.

We referred to Apple’s human interface guidelines [46] to develop the application’s interface. When designing the interface, we focused on helping people understand and interact with the content. Evaluation of AR systems is important, even though it has received attention only fairly recently compared to AR development studies. In 2008, Gabbard and Swan suggested the necessity of learning from user studies for user interface design, usability, and discovery early in the development of emerging technologies such as AR [47,48].

4.3. Visualizing Home Environment with AR

We examined the validity of the aims and methods of this AR application through informal user interviews and user tests. Since 1995, when the first user-based experiments in AR literature were presented, usability studies have been published to address issues in three related areas, namely, perception, performance, and collaboration [2]. In this study, we focused on perception and performance.

The results revealed how users interact with virtual movies in an AR application in the real world. Experts from 18 fields of research showed interest in the prototype and suggested improvements. This means that the visualization of affordances using AR technology is acceptable and that the application’s usefulness is not limited to parents. Furthermore, it indicated that our approach is suitable for interdisciplinary research with aging, architecture, computer science, design, education, healthcare, psychology, rehabilitation, and robotics. This may lead to the development of safety measures that address positive and negative aspects of the everyday environment in the form of attractive and safe products, measurements and standards, AI-ready data, and smart homes.

Regarding data, almost all interviewees agreed that collecting longitudinal data in natural settings is worth exploring and is a good resource for finding solutions. This is one of our application’s features, and it is valued by professionals such as researchers, developers, and designers. However, it may also be a weakness: every AR animation requires significant time and effort to draw, as even a single movie currently requires at least 100 hand drawings. A more efficient method is needed.

We fill our homes with objects; the application therefore aims to prompt a re-examination, from the infant’s perspective, of how everyday objects are used, designed, and improved. Visualizing the affordances of an infant’s home environment with an AR application offers another framework for exploring the creative processes of those who arrange objects at home, and for thinking through possible solutions to the current challenges of reducing preventable accidents and promoting infants’ development.

5. Conclusions and Future Directions

The work reported in this study represents an essential first step in applying affordance theory to the visualization of infants’ perceptions and actions within the home environment, and an attempt to show caregivers everyday reality from a different perspective in order to prevent accidents at home. Numerous incidents in the home environment result in injuries to, and even the death of, young children. Almost any ordinary event of everyday life can pose a danger to infants, depending on the conditions. In this study, we demonstrated that AR technology is a promising and useful tool for visualizing the otherwise invisible affordances of everyday objects. Our AR movies are based on the developmental level of young children or, more precisely, on the incidents anticipated at that developmental level. We overlay facts about infants and objects on the real scene, enabling caregivers to simulate and visualize other possible incidents. Thus, our app differs from injury-reporting or warning apps.

This study has several limitations. First, because we needed information on children’s developmental processes, we conducted longitudinal observations; however, we could not determine how one specific fall becomes dangerous, because infants take thousands of steps and experience dozens of falls per day during natural locomotion [40]. It may be difficult to finish collecting data for a whole developmental period, as doing so would delay the analysis; nevertheless, we continue to collect data and increase the number of participants from year to year. Second, there are technical problems: adding AR markers and creating line-drawing illustrations from videos are time-consuming. Therefore, an automatic process for generating line drawings from videos needs to be designed.
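
One possible starting point for such an automatic process is edge detection applied to each video frame. The sketch below is a minimal pure-Python illustration that uses a Sobel gradient with a fixed threshold. It is a substitute technique chosen for illustration only, not the method used or planned in this study, and the function name `line_drawing`, the threshold value, and the tiny test frame are all assumptions.

```python
def line_drawing(frame, threshold=100):
    """Return a binary edge map (1 = line pixel) for a grayscale frame.

    `frame` is a list of equal-length rows of intensities (0-255).
    Border pixels are left as 0 because the 3x3 kernel does not fit there.
    """
    height, width = len(frame), len(frame[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Sobel gradients in the horizontal (gx) and vertical (gy) directions.
            gx = (frame[y - 1][x + 1] + 2 * frame[y][x + 1] + frame[y + 1][x + 1]
                  - frame[y - 1][x - 1] - 2 * frame[y][x - 1] - frame[y + 1][x - 1])
            gy = (frame[y + 1][x - 1] + 2 * frame[y + 1][x] + frame[y + 1][x + 1]
                  - frame[y - 1][x - 1] - 2 * frame[y - 1][x] - frame[y - 1][x + 1])
            magnitude = (gx * gx + gy * gy) ** 0.5
            edges[y][x] = 1 if magnitude >= threshold else 0
    return edges


if __name__ == "__main__":
    # Hypothetical 5x6 frame: dark left half, bright right half.
    frame = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
    for row in line_drawing(frame):
        print("".join("#" if v else "." for v in row))
```

A real pipeline would still need frame extraction, noise reduction, and stroke cleanup before the output resembled a hand drawing; the sketch only shows the per-frame step that turns intensity changes into candidate lines.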

More broadly, the interviews indicated that the prototype AR application is easy and efficient for everyday use because smart devices and AR are now common. Our goal is to build an integrated system that will allow researchers, engineers, designers, and users worldwide to collaborate and contribute to the use of the proposed technology to promote the development of young children and prevent accidents in the home environment. We have started collecting data in one more country, because more evidence, obtained by processing large amounts of relevant data, is needed in future work to generate further meaningful insights.

Conflict of Interest

The authors declare that there are no conflicts of interest associated with this manuscript.

Acknowledgment

We thank all the children and parents who participated in this research. We also thank Sachio Uchida, Tesuaki Baba and Shohan Hasan for their help, and João Barreiros and Rita Cordovil for their support in conducting the observations. This work was supported by the Japan Society for the Promotion of Science (JSPS) KAKENHI Grant Number 16K21263.

References

- M. Nishizaki, “Visualizing positive and negative affordances in infancy using mobile augmented reality” in Intelligent Systems Conference (IntelliSys), 1136-1140, 2017. DOI: 10.1109/IntelliSys.2017.8324272

- I. E. Sutherland, “A head-mounted three-dimensional display,” in Proceedings of FJCC 1968, Thompson Books, Washington DC, 757–764, 1968.

- M. Billinghurst, A. Clark, G. Lee, “A Survey of Augmented Reality,” Foundations and Trends in Human–Computer Interaction, 8(2-3), 73-272, 2015. http://dx.doi.org/10.1561/1100000049

- T. P. Caudell, D. W. Mizell, “Augmented reality: An application of heads-up display technology to manual manufacturing processes,” In System Sciences, 1992. Proceedings of the Twenty-Fifth Hawaii International Conference, 2, 659–669. IEEE, 1992.

- S. Aukstakalnis, Practical augmented reality: a guide to the technologies, applications, and human factors for AR and VR, Addison-Wesley 2016.

- R. Azuma, M. Billinghurst, and G. Klinker, “Editorial: Special section on mobile augmented reality” Comput. Graph., 35(4), 7–8, 2011. https://doi.org/10.1016/j.cag.2011.04.010

- R. T. Azuma, “A survey of augmented reality” Presence-Teleop. Virt., 6(4), 355–385, 1997.

- M. Mohring, C. Lessig, O. Bimber, “Video see-through AR on consumer cell-phones” in Proceedings of the 3rd IEEE/ACM International Symposium on Mixed and Augmented Reality, 252–253. IEEE Computer Society, 2004. DOI: 10.1109/ISMAR.2004.63

- O. Smørdal, G. Liestøl, and O. Erstad, “Exploring situated knowledge building using mobile augmented reality” in QWERTY 11, 1, 26–43, 2016.

- S. Rattanarungrot, M. White and B. Jackson, “The application of service orientation on a mobile AR platform — a museum scenario” 2015 Digital Heritage, Granada, 329-332, 2015. DOI: 10.1109/DigitalHeritage.2015.7413894

- D. Wagner and D. Schmalstieg, “Making augmented reality practical on mobile phones, part 1” IEEE Comput. Graph., 29(3), 12–15, 2009. http://doi.ieeecomputersociety.org/10.1109/MCG.2009.46

- D. Beier, R. Billert, B. Bruderlin, D. Stichling and B. Kleinjohann, “Marker-less vision based tracking for mobile augmented reality” The Second IEEE and ACM International Symposium on Mixed and Augmented Reality, 2003. Proceedings, 258-259, 2003. DOI: 10.1109/ISMAR.2003.1240709

- SUPERDATA Games & Interactive Media Intelligence, https://www.superdataresearch.com/us-digital-games-market/, last accessed 2018/08/13.

- Digi-Capital Homepage, https://www.digi-capital.com/news/20170/01/after-mixed-year-mobile-ar-to-drive-108-billion-vrar-market-by-2021/, last accessed 2018/05/03.

- The Ministry of Health, Labour and Welfare of Japan. List of Statistical Surveys. Vital Statistics 2016, https://www.e-stat.go.jp/en/stat-search/files?page=1&layout=datalist&tstat=000001028897&year=20160&month=0&tclass1=000001053058&tclass2=000001053061&tclass3=000001053066&result_back=1, last accessed 2018/05/03.

- The National Safety Council of US Homepage, http://www.rospa.com/homesafety/adviceandinformation/childsafety/accidents-to-children.aspx, last accessed 2018/05/03.

- Consumer Safety Unit. 24th Annual Report, Home Accident Surveillance System. London: Department of Trade and Industry, 2002.

- The U.S. Consumer Product Safety Commission, https://www.cpsc.gov/s3fs-public/5013.pdf, last accessed 2018/05/03.

- A. Carlsson, A. K. Dykes, A. Jansson, A. C. Bramhagen, “Mothers’ awareness towards child injuries and injury prevention at home: an intervention study” BMC Research Notes, 9, 223, 2016. https://doi.org/10.1186/s13104-016-2031-5

- D. Kendrick, C. A. Mulvaney, L. Ye, T. Stevens, J. A. Mytton, S. Stewart-Brown, “Parenting interventions and the prevention of unintentional injuries in childhood: systematic review and meta-analysis” Cochrane Database System Review. 2013 Mar 28; (3):CD006020. Epub. https://doi.org/10.1111/j.1365-2214.2008.00849.x

- B. A. Morrongiello, S. Kiriakou, “Mothers’ home-safety practices for preventing six types of childhood injuries: what do they do, and why?” J. Pediatr. Psychol., 29(4), 285–97, 2004.

- A. Carlsson, A. K. Dykes, “Precautions taken by mothers to prevent burn and scald injuries to young children at home: an intervention study” Scand. J. Public Health., 39(5), 471-478, 2011. https://doi.org/10.1177/1403494811405094

- SAFE KIDS WORLDWIDE, https://www.safekids.org/safetytips/field_venues/home, last accessed 2018/08/13.

- Japan Pediatric Society, Injury Alert and Follow-up report http://www.jpeds.or.jp/modules/injuryalert/, last accessed 2018/08/13.

- K. Kitamura, Y. Nishida, Y. Motomura, H. Mizoguchi, “Children Unintentional Injury Visualization System Based on Behavior Model and Injury Data” The 2008 International Conference on Modeling, Simulation and Visualization Methods (MSV’08), 2008.

- K. Kitamura, Y. Nishida, Y. Motomura, T. Yamanaka, H. Mizoguchi, “Web Content Service for Childhood Injury Prevention and Safety Promotion,” in Proceedings of the 9th World Conference on Injury prevention and Safety Promotion, 270, 2008.

- Y. Nishida, Y. Motomura, K. Kitamura, T. Yamanaka, “Representation and Statistical Analysis of Childhood Injury by Bodygraphic Information System” Proc. of the 10th International Conference on GeoComputation. 2009.

- J. J. Gibson, “The Senses Considered as Perceptual Systems,” Houghton Mifflin Company, Boston, 1966.

- J. J. Gibson, “The ecological approach to visual perception,” Boston: Houghton Mifflin, 1979.

- K. E. Adolph, “A psychophysical assessment of toddlers’ ability to cope with slopes” J. Exp. Psychol. Human, 21, 734–750, 1995.

- E. J. Gibson and R. D. Walk, “The ‘visual cliff’” Scientific American, 202, 67–71, 1960.

- T. Stoffregen, “Affordances and events” Ecol. Psychol., 12, 1–28, 2000. https://doi.org/10.1207/S15326969ECO1201_1

- M. Turvey, “Affordances and prospective control: An outline of the ontology” Ecol. Psychol., 4, 173–187, 1992. https://doi.org/10.1207/s15326969eco0403_3

- W. H. Warren, “Perceiving affordances: Visual guidance of stair climbing” J. Exp. Psychol. Human, 10, 683–703, 1984.

- W. Gaver, “What in the world do we hear? An ecological approach to auditory source perception” Ecol. Psychol., 5, 1–31, 1993a. https://doi.org/10.1207/s15326969eco0501_1

- W. Gaver, “How do we hear in the world? Explorations in ecological acoustics” Ecol. Psychol., 5, 285–313, 1993b. https://doi.org/10.1207/s15326969eco0504_2

- L. S. Mark, “Perceiving the preferred critical boundary for an affordance,” in Studies in perception and action III, B. G. Bardy, R. J. Bootsma, and Y. Guiard (Eds.), Mahwah, NJ: Lawrence Erlbaum Associates, 1995.

- L. S. Mark, K. Nemeth, D. Gardner, M. J. Dainoff, J. Paasche, M. Duffy, and K. Grandt, “Postural dynamics and the preferred critical boundary for visually guided reaching” J. Exp. Psychol. Human, 23(5), 1365–1379, 1997.

- W. H. Warren, and S. Whang, “Visual guidance of walking through apertures: Body-scaled information for affordances” J. Exp. Psychol. Human, 13(3), 371–384, 1987.

- K. E. Adolph, W. G. Cole, M. Komati, J. S. Garciaguirre, D. Badaly, J. M. Lingeman, G. L. Y. Chan, R. B. Sotsky, “How do you learn to walk? Thousands of steps and dozens of falls per day” Psychol. Sci., 23, 1387-1394, 2012. https://doi.org/10.1177/0956797612446346

- W. G. Cole, S. R. Robinson, & K. E. Adolph, “Bouts of steps: The organization of infant exploration” Dev. Psychobiol., 58, 341-354, 2016. https://doi.org/10.1002/dev.21374

- J. M. Franchak, and K. E. Adolph, “Visually guided navigation: Head-mounted eye-tracking of natural locomotion in children and adults” Vision Res., 50, 2766-2774, 2010. https://doi.org/10.1016/j.visres.2010.09.024

- Datavyu, http://www.datavyu.org/ last accessed 2018/10/10.

- Consumer Affairs Agency, Government of Japan, “Policy of children’s accidents prevention in 2017” http://www.caa.go.jp/policies/policy/consumer_safety/child/children_accident_prevention/pdf/children_accident_prevention_180328_0004.pdf, last accessed 2018/08/13.

- Apple, Newsroom https://www.apple.com/jp/newsroom/2018/06/apple-unveils-arkit-2/ last accessed 2018/08/13.

- Apple, human-interface-guidelines, https://developer.apple.com/design/human-interface-guidelines/ios/overview/themes/ , last accessed 2018/08/05.

- J. E. Swan, J. L. Gabbard, “Survey of user-based experimentation in augmented reality” in Proceedings of 1st International Conference on Virtual Reality, 1–9, 2005.

- J. L. Gabbard and J. E. Swan, “Usability engineering for augmented reality: Employing user-based studies to inform design” Vis. Comput. Graph., IEEE, 14(3), 513–525, 2008. http://doi.ieeecomputersociety.org/10.1109/TVCG.2008.24