Virtual Watershed System: A Web-Service-Based Software Package For Environmental Modeling

Volume 3, Issue 5, Page No 382–393, 2018

Adv. Sci. Technol. Eng. Syst. J. 3(5), 382–393 (2018);

DOI: 10.25046/aj030544


Keywords: Web-based Virtual Watershed, System, Model as service, Cloud-based application, Hydrologic model

The physically-based environmental model is a crucial tool used in many scientific inquiries. With physical modeling, different models are used to simulate real world phenomena and most environmental scientists use their own devices to execute the models. A complex simulation can be time-consuming with limited computing power. Also, sharing a scientific model with other researchers can be difficult, which means the same model is rebuilt multiple times for similar problems. A web-service-based framework to expose models as services is proposed in this paper to address these problems. The main functions of the framework include model execution in cloud environments, NetCDF file format transmission, and model resource management with various web services. As a proof of concept, a prototype is introduced, implemented, and compared against existing similar software packages. Through a feature comparison with equivalent software, we demonstrate that the Virtual Watershed System (VWS) provides superior customization through its APIs. We also indicate that the VWS uniquely provides friendly, usable UIs enabling researchers to execute models.

1. Introduction

Modeling has become an indispensable tool in growing environmental scientists’ understanding of how natural systems react to changing conditions. It sheds light on complex environmental mysteries and helps researchers formulate policies and decisions on future scenarios. Environmental modeling is highly challenging as it involves complex mathematical computations, rigorous data processing, and convoluted correlations between numerous parameters. Three commonly-used and important scientific software quality measures are maintainability, quality, and scalability. Issues such as data storage and retrieval, model coupling, and model execution are hard problems that need to be addressed with extra effort from a software engineering perspective. It is a challenging job to design integrated systems that can address all these issues.

It is essential to build high-quality software tools and design efficient frameworks for scientific research. Abundant scientific model data have been generated and collected in recent years. Software engineering can assist this emergence through the creation of distributed software systems and frameworks enabling scientific collaboration with previously disparate data and models. It is challenging to implement software tools for interdisciplinary research because of the problems of communication and team building among different scientific communities. For example, the same terminology can have different meanings in different domains. This increases the difficulty of comprehending software requirements when a project involves stakeholders (e.g. researchers) from different fields.

Most of the work described in this paper was done for the Western Consortium for Watershed Analysis, Visualization, and Exploration (WC-WAVE), an NSF EPSCoR-supported project initiated by the EPSCoR jurisdictions of Nevada, Idaho, and New Mexico. The WC-WAVE project includes three principal components: watershed science, data cyberinfrastructure, and data visualization [1]. The project’s main goal is to implement the VWS through collaboration between cyberinfrastructure team members and hydrologists. The platform is able to store, share, model, and visualize data on demand through an integrated system. These features are crucial for hydrologic research.

Different hydrologic models, such as ISNOBAL and PRMS, are commonly used by WC-WAVE hydrologists. These models are leveraged to predict or examine hydrologic processes of Lehman Creek in Nevada, Dry Creek and Reynolds Creek in Idaho, and Jemez Creek in New Mexico. We propose a framework for representing model data in a standardized format called the “Network Common Data Form” (NetCDF) and exposing these hydrologic models through web services. This framework is based on our previous work introduced in [2]. To improve on our prior work, we have implemented new Docker APIs to control system components wrapped in Docker containers. To simplify data extraction, modification, and storage, the NetCDF data format [3] is used in the system for grid-organized scientific data. Most parts of the system framework can be reused for data-intensive purposes because the framework is designed around blueprint and template concepts. ISNOBAL and PRMS are physically-based models which can produce very accurate results. However, these two models require abundant computing power. To address this challenge, we leverage a cluster to execute models in parallel. ISNOBAL and PRMS are used in this paper to demonstrate the ideas and functionality of the proposed framework. Throughout the remainder of this paper, we refer to this prototype system as the VWS.

ISNOBAL is a grid-based model that operates over a DEM (Digital Elevation Model) and was created to model the development and melting of seasonal snow cover. The model was developed by Marks et al. [4], initially for mountain basins in Utah, California, and Idaho. The model determines runoff and snowmelt based on terrain, precipitation, region characteristics, climate, and snow properties [4].

PRMS is short for Precipitation-Runoff Modeling System and was initially written in FORTRAN in 1983. PRMS is a prevalent, physical-process-based, distributed-parameter hydrologic model, and its main function is to evaluate a watershed’s response to different climate and land-use cases [5, 6, 7]. It is composed of algorithms describing various physical processes as subroutines. The model, now in its fourth version, has become more mature over the years of development. Different hydrology applications, such as measuring groundwater and surface water interaction, studying the interaction of climate and atmosphere with surface water, and managing water and natural resources, have been carried out with the PRMS model [5, 6, 7].

This paper is organized as follows in its remaining sections: Section 2 introduces background and related work; Section 3 describes the system design; Section 4 describes the prototype system and how the software was built using RESTful APIs; Section 5 compares our work with related tools; and Section 6 contains the paper’s conclusions and outlines planned future work.

2. Background and Related Work

“How to implement software for interdisciplinary research?” is an interesting question, and there exists some successful work on environments and frameworks which seek to answer this question. In this section, relevant, popular earth science applications and frameworks are introduced.

The Community Surface Dynamics Modeling System (CSDMS) was a project started in 1999 to expedite the research of earth surface modelers by creating a community-driven software platform. CSDMS applies a component-based software engineering approach to the integration of plug-and-play components, as the development of a complex scientific modeling system requires the coupling of multiple, independently developed models [8]. CSDMS allows users to write their components in any popular language. They can also use components created by others in the community for their simulations. CSDMS treats components as pre-compiled units which can be replaced, added to, or deleted from an application at runtime via dynamic linking. Many key requirements drove the design of CSDMS, including support for multiple operating systems, language interoperability across both procedural and object-oriented programming languages, platform-independent graphical user interfaces, use of established software standards, interoperability with other coupling frameworks, and use of HPC tools to integrate parallel tools and models into the ecosystem.

A leading hydrologic research organization is CUAHSI. “CUAHSI,” an acronym for “Consortium of Universities for the Advancement of Hydrologic Science Inc.,” represents universities and international water science-related organizations. One of its most highly esteemed products is HydroShare, which is a hydrologic data and model sharing web application. Hydrologists can easily access different model datasets and share their own data. In addition, this platform offers many distributed data analysis tools. A model instance can be deployed in a grid, cloud, or high-performance computing cluster with HydroShare. Also, a hydrologist is able to publish outcomes of their research, such as a dataset or a model. In this way, scientists use the system as a collaboration platform for sharing information. HydroShare exposes its functionality with Application Programming Interfaces (APIs), which means its web application interface layer and service layer are separated. This enables interoperability with other systems and direct client access [9].

Model as a Service (MaaS) was proposed by Li et al. [10] for geoscience modeling. It is a cloud-based solution, and the authors of [10] have implemented a prototype system to execute models with high CPU and memory usage remotely as a service on third-party platforms such as AWS (Amazon Web Services) and Microsoft Azure.

The key idea of MaaS is that model executions can be done through a web interface with user inputs. Computer resources are provisioned with a cloud provider, such as Microsoft Azure. The model registration is done in the framework with a virtual machine image repository. If a model is registered and placed in the repository, it can be shared by other users and multiple model instances can be executed in parallel based on demand.

McGuire and Roberge designed a social network to promote collaboration between watershed scientists. Despite being highly available, collaboration between the general public, scientists, and citizens has not been leveraged. Also, hydrologic data is not integrated in any system. The main goal of this work is to design a collaborative social network for multiple watershed scientific and hydrologic user groups [11]. However, more work remains to be done.

The Demeter Framework by Fritzinger et al. [12] represents another attempt to utilize software frameworks as a scientific aid in the area of climate change research. One of its key ideas is a component-based approach that integrates different components into the system to address the “model coupling problem,” which refers to using one model’s outputs as another model’s inputs to solve a problem.

Walker and Chapra proposed a web-based client-server approach for solving the problem of environmental modeling, as an alternative to the traditional desktop-based approach. The authors assert that, with the improvement in modern day web browsers, client-side approaches offer improved user interfaces compared to traditional desktop software. In addition, powerful servers enable users to perform simulations and visualizations within the browser [13].

The University of New Mexico has implemented a data engine named GSToRE (Geographic Storage, Transformation and Retrieval Engine). The engine is designed for earth science research, and its main functions are data delivery, documentation, and discovery. It follows a combination of community and open standards and is implemented based on a service-oriented architecture [14].

2.1. Service Oriented Architecture

Industry has shown increasing interest in Service Oriented Architecture (SOA) for implementing software systems [15]. The main idea of SOA is to have business logic decomposed into different units (or services). These units are self-contained and can be easily deployed with container techniques, such as Docker.

Representational State Transfer (REST) is primarily an architectural style for distributed hypermedia systems, introduced by Roy Thomas Fielding in his Ph.D. dissertation [16]. REST defines, through a set of principles, how a client and a server should interact in a client-server architecture. REST has been adopted for building the main architecture of the proposed system. Statelessness, a uniform interface, and cacheability are the main characteristics of a REST client-server architecture [16]. It is for these characteristics that REST is leveraged in our proposed system.

Statelessness Statelessness is the most important property or constraint for a client-server architecture to be RESTful. The communication between the client and the server must be stateless, which means the server is not responsible for keeping the state of the communication. It is the client’s responsibility. A request from the client must contain all the necessary information for the server to understand the request [16, 17]. Two subsequent requests to the server will not have any interdependence between each other. Introducing this property on the client-server architecture presents several benefits regarding visibility, reliability, and scalability [16, 17]. For example, as the server is not responsible for keeping the state and two subsequent requests are not interrelated, multiple servers can be distributed across a load-balanced system where different servers can be responsible for responding to different requests by a client.
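The statelessness constraint can be illustrated with a minimal sketch. The request fields (`user`, `resource`) and the replica names are hypothetical and are not part of the VWS API; the point is only that, because each request carries everything needed to serve it, any replica behind a load balancer can answer it.

```python
from itertools import cycle


def handle(request: dict) -> dict:
    """Serve a request using only the data it carries (no server-side session)."""
    # Hypothetical fields: the request itself names the user and the resource.
    return {"status": 200, "body": f"{request['resource']} for {request['user']}"}


class Replica:
    """A server replica that keeps no per-client state between requests."""

    def __init__(self, name: str):
        self.name = name

    def serve(self, request: dict) -> dict:
        response = handle(request)
        response["served_by"] = self.name
        return response


# Round-robin load balancing across interchangeable replicas.
replicas = cycle([Replica("server-a"), Replica("server-b")])
requests = [
    {"user": "alice", "resource": "/models/1"},
    {"user": "alice", "resource": "/models/1/progress"},
]
responses = [next(replicas).serve(r) for r in requests]
```

The second request is answered correctly by a different replica than the first, since neither replica had to remember anything about the client.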

Uniform Interface Another important property of a RESTful architecture is that it provides a uniform interface for the client to interact with a server. Instead of an application’s particular implementation, it forces the system to follow a standardized form. For example, HTTP/1.1, which follows REST principles, provides a set of verbs (e.g., GET, POST, PUT, DELETE) for the client to communicate with the server. The verbs, such as “GET” and “POST”, work as an interface making the client-server communication generic [16].

Cache The REST architecture introduces a cache constraint to improve network efficiency [16]. A server can allow a client to reuse data by explicitly labeling that data as cacheable or non-cacheable. A server can serve data that will not change in the future as cacheable content, allowing the client to eliminate some interactions for that data in a series of requests.

2.2. REST Components

The main components of REST architecture include resources, representations, and resource identifiers.

Resources The resource is the main abstract representation of data in the REST architecture [16]. Any piece of data in a server can be represented as a resource to a client. A document, an image, data on today’s weather, a social profile: everything is considered a resource in the server. Formally, a resource R is a temporally varying membership function M_R(t) that maps to a set of entities for time t [16].

Representations A resource is the abstract building block of the data in a web server. For a client to consume the resource, it needs to be presented in a way the client can understand. This is called a representation. A representation is a presentation format for representing the current state of a resource to a consumer. Some commonly used resource representation formats are HTML (Hypertext Markup Language), XML (Extensible Markup Language), and JSON (JavaScript Object Notation). A server can expose data content in different representations so that a consumer can access the resources through resource identifiers (discussed below) in the desired format.
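As a hedged illustration of one resource exposed in several representations, the sketch below renders the same state as JSON or XML depending on the media type the client requests. The resource fields and the `modelrun` element name are invented for the example and do not come from the VWS.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical resource state; the field names are illustrative only.
resource = {"id": "42", "model": "prms", "status": "finished"}


def to_json(state: dict) -> str:
    return json.dumps(state, sort_keys=True)


def to_xml(state: dict) -> str:
    root = ET.Element("modelrun")
    for key in sorted(state):
        ET.SubElement(root, key).text = str(state[key])
    return ET.tostring(root, encoding="unicode")


REPRESENTATIONS = {"application/json": to_json, "application/xml": to_xml}


def represent(state: dict, accept: str) -> str:
    """Pick a representation based on the media type the client asks for."""
    if accept not in REPRESENTATIONS:
        raise ValueError(f"unsupported media type: {accept}")
    return REPRESENTATIONS[accept](state)


as_json = represent(resource, "application/json")
as_xml = represent(resource, "application/xml")
```

Both strings describe the same resource state; only the presentation format differs.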

Resource Identifiers A resource is uniquely identified through a resource identifier in a RESTful architecture. For example, in HTTP a Uniform Resource Identifier (URI) is used to identify a resource in a server. A URI can be thought of as the address of a resource in the server [18]. A resource identifier is the key for a client to access and manipulate a resource in the server.

2.3. Microservice Architecture

Software as a Service (SaaS), as a new software delivery architecture, has emerged to leverage the widely-used REST standard for processing in addition to data transfer. The main advantage of SaaS is that it does not require local installation and this is a significant IT trend based on industry analysis [19].

Similarly, a “microservice” architecture decomposes an application into small components (or services), and these components communicate with each other through APIs. It is a solution to problems associated with monolithic architectures [20]. Because of these characteristics, an application can be easily scaled, and individual services can be deployed with reduced risk and without interrupting other services.

Traditional monolithic applications are built as a single unit using a single language stack and are often composed of three parts: a front-end client, a back-end database, and an application server sitting in the middle that contains the business logic. Here, the application server is a monolith that serves as a single executable. A monolithic application can be scaled horizontally by replicating the application server behind a load balancer to serve clients at scale. The biggest issue with monolithic architecture is that, as the application grows, the deployment cycle becomes longer, because a small change in the codebase requires the whole monolith to be rebuilt and deployed [20]. This leads to higher maintenance risk as the application grows. These pitfalls have led to the idea of decomposing the business capabilities of an application into self-contained services.

Because microservices are a relatively new idea, researchers have attempted to formalize their definition and characteristics. Reference [20] puts together a few essential characteristics of a microservice architecture, which are described in brief below.

Componentization via Services The most important characteristic of a microservice architecture is that service functionalities need to be componentized. Instead of thinking of components as libraries that use in-memory function calls for inter-component communication, we can think of components in terms of out-of-process services that communicate over the network, quite often through a web service or a remote procedure call. A service has to be atomic, doing one thing and doing it well [21].

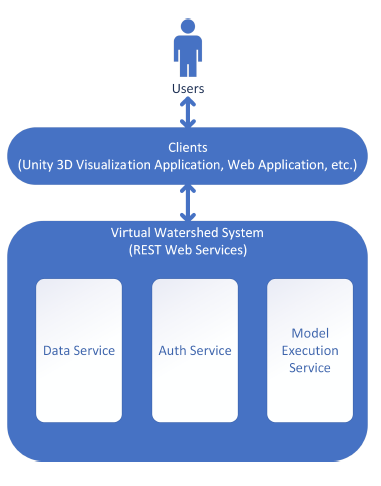

Figure 1: High-level diagram of VWS: clients and VWS communicate through REST Web Services. Possible clients include scripts and applications. VWP provides a data service, an auth service, and a model execution service.


Organized Around Business Capabilities Monolithic applications are typically organized around technology layers. For example, a typical multi-tiered application might be split logically into persistence layer, application layer and UI layer and teams are also organized around the technologies. This logical separation creates the need for inter-team communication even for a simple change. In microservices, the product is organized around business capabilities where a service is concentrated on one single business need and owned by a small team of cross-domain members.

Decentralized Governance In a monolithic application, the product is governed in a centralized manner, which restricts the product to a specific platform or language stack. In microservices, by contrast, each service is responsible for implementing an independent business capability, which allows different services to be built with different technologies. As a result, the team gets to choose the tools that are best suited for each of the services.

Newman, in his book Building Microservices [21], discusses several concrete benefits of using microservices over a monolith. Several important benefits are discussed in brief below:

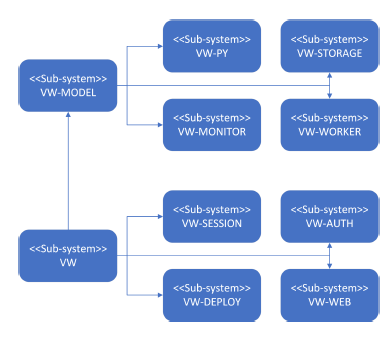

Figure 2: System-level diagram of VWS: the two main system components are VW-MODEL and VW. VW-MODEL contains components related to model execution and VW contains components related to the platform.


Technology Heterogeneity Building independent services separately allows the adoption of different technology stacks for different services. This gives a team two advantages: the liberty to choose the technology that best suits each need, and the ability to adopt new technology quickly as needs change.

Scaling Monolithic applications are hard to deal with when it comes to scaling. One big problem with monolithic applications is that everything needs to be scaled together as one piece. Microservices, in contrast, give control over scaling by allowing the services to be scaled independently.

Ease of Deployment Applying changes to a monolithic application requires the whole application to be re-deployed, even for a minor change in the codebase. This poses a high risk, as an unsuccessful re-deployment, even of a minor change, can take down the software. Microservices, on the other hand, allow low-risk deployments without interrupting the rest of the services. They also allow a faster development process with small and incremental re-deployments.

3. Proposed Method

Here we introduce the detailed design of the VWS. We present the design using diagrams common in software engineering, including system diagrams and workflow diagrams. The VWS aims to create a software ecosystem named the Virtual Watershed by integrating cyberinfrastructure and visualization tools to advance watershed science research. Fig. 1 shows a general high-level diagram of the components of the ecosystem. The envisioned system is centered around services comprised of data, modeling, and visualization components.

3.1. Data Service and Modeling Service

To create a scalable and maintainable ecosystem of services, a robust data backend is crucial. The envisioned data service exposes a RESTful web service to allow easy storage and management of watershed modeling data. It allows retrieval of data through various OGC (Open Geospatial Consortium) standards, such as WCS and WMS, so that OGC-compliant clients can retrieve data automatically. This feature is very important for data-intensive hydrologic research and is essential for a scientific modeling tool.

Hydrologists often use different modeling tools to simulate and investigate changes in hydrologic variables around watersheds. These modeling tools are often complex and time-consuming to set up in local environments [10]. In addition, they may require substantial computational and storage resources, which makes them hard to run in local environments. The proposed modeling service aims to solve these issues by allowing users to submit model execution tasks through a simple RESTful web service API. This approach of allowing model runs through a generic API solves multiple problems:

- It allows the users to run models on demand without having to worry about setting up environments.

- It allows modelers to accelerate the process of running models with different input parameters by submitting multiple model runs to be executed in parallel, which might not be feasible in a local environment due to a lack of computational and storage resources.

- It opens the door for other services and clients to take advantage of the API to automate the process of running models in their workflow.
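The second point, submitting several model runs with different input parameters in parallel, could look roughly like the sketch below. `submit_model_run` and the `precip_scale` parameter are hypothetical stand-ins, not part of the VWS API; a real client would send each parameter set to the modeling service over HTTP instead of computing a result locally.

```python
from concurrent.futures import ThreadPoolExecutor


def submit_model_run(params: dict) -> dict:
    """Stand-in for a call to the modeling service (hypothetical).

    A real client would send `params` to the service over HTTP; here a result
    is built locally so that the sketch runs without a server.
    """
    return {"params": params, "status": "finished"}


# One parameter set per model run, e.g. a sweep over a hypothetical input.
parameter_sets = [{"precip_scale": s} for s in (0.8, 1.0, 1.2)]

# Submit all runs concurrently; map() yields results in input order.
with ThreadPoolExecutor(max_workers=3) as pool:
    results = list(pool.map(submit_model_run, parameter_sets))
```

Threads are a reasonable choice here under the assumption that the client mostly waits on network responses while the server does the computation.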

The primary goal of this Virtual Watershed system is to allow watershed modelers to share their data resources and execute relevant models through web services without having to install the models locally. The system is designed as a collection of RESTful microservices that communicate internally. The web service sits on top of an extensible backend that allows easy integration of models and scalability over model runs and number of users connected to the service.

The system provides a mechanism for integrating new models by conforming with a simple event driven architecture that allows registering a model in the system by wrapping it with a schema driven adaptor.

As model execution is a CPU-intensive process, scaling the server with a growing number of parallel model executions is an important issue. The proposed architecture aims to solve this problem by introducing a simple database-oriented job queue that provides options for adding more machines as the system grows.
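A database-oriented job queue of this kind can be sketched as follows. This is an illustration under assumptions, not the VWS implementation: the table layout, the status values, and the use of SQLite are all choices made for the example.

```python
import sqlite3


def create_queue(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS jobs ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "payload TEXT NOT NULL, "
        "status TEXT NOT NULL DEFAULT 'queued', "
        "worker TEXT)"
    )
    conn.commit()


def enqueue(conn: sqlite3.Connection, payload: str) -> int:
    cur = conn.execute("INSERT INTO jobs (payload) VALUES (?)", (payload,))
    conn.commit()
    return cur.lastrowid


def claim_next(conn: sqlite3.Connection, worker: str):
    """Claim the oldest queued job, or return None if nothing can be claimed."""
    with conn:  # commits on success, rolls back on error
        row = conn.execute(
            "SELECT id, payload FROM jobs WHERE status = 'queued' "
            "ORDER BY id LIMIT 1"
        ).fetchone()
        if row is None:
            return None
        # The status guard keeps two workers from claiming the same job.
        cur = conn.execute(
            "UPDATE jobs SET status = 'running', worker = ? "
            "WHERE id = ? AND status = 'queued'",
            (worker, row[0]),
        )
        return row if cur.rowcount == 1 else None


conn = sqlite3.connect(":memory:")
create_queue(conn)
enqueue(conn, "run-isnobal")
enqueue(conn, "run-prms")
first_job = claim_next(conn, "worker-1")
```

Because the queue lives in a shared database, additional worker machines can be added simply by pointing them at the same table.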

3.2. System Level Design

Several different submodules comprise the Virtual Watershed system. Each module provides different functionalities to the entire framework. The relationship between modules is shown in Figure 2. The following paragraphs explain each submodule in detail.

VW-PY: Using the VW-PY module, users can define adaptors to configure compatibility between different models and the VWS. An adaptor is Python code which encapsulates a model and allows it to be run programmatically. VW-PY handles many aspects of model “wrapping” with this Python code through an interface. Through this API, a user can define the code which handles converting between data formats, executing models, and model-progress-based event triggering. This event triggering system provides model-wrapper developers with the tools to emit model execution progress.

VW-MODEL: The VW-MODEL submodule, through a web service, exposes a RESTful API to the user/client. Users can upload, query, and retrieve model run packages using this API.

VW-WORKER: The VW-WORKER is a service which encapsulates model adaptors in a worker service that is organized around a queue data structure. This component communicates with the VW-MODEL component using a Redis data backend.

VW-STORAGE: The VW-STORAGE module provides an interface for object storage for the VW-MODEL component. Developed as a generic wrapper, this interface can be configured by sysadmins to work with local or cloud-hosted storage providers.

VW-AUTH: The VW-AUTH module provides authentication services for users/clients connecting to the VWS framework. This service provides clients with a JWT token enabling them to securely utilize the other services described in this section.

VW-SESSION: The VW-SESSION submodule coordinates different components in the VWS to provide a common session backend for a single user. Data about each session is maintained and managed with a Redis data store shared between services.

VW-WEB: This is the web-application front-end which provides users with APIs to interact with the model processing modules described above. In a standard use case of this module, users will get access to a session after logging into this system with the VW-AUTH component. Once given a session, users can use this interface to access resources, run models, track progress, and upload/download model run resources.

3.3. Detailed Design

The VWS is comprised of many distinct services and web applications which communicate with one another to facilitate model runs. We are able to provide secure and centralized communications with the aid of a common authentication gateway. VW-AUTH was developed as a microservice to provide this functionality. It aggregates functionality for registration, authentication, and authorization for system users.

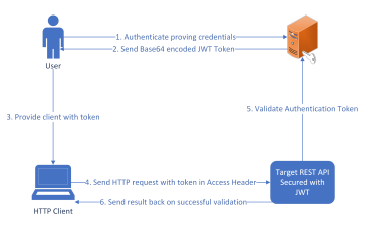

Figure 3: Workflow for accessing secure REST endpoint with JWT token


The Authentication component itself exposes RESTful endpoints that provide user access to different services, in addition to endpoints for authentication and registration. To enable the verification of a client’s authentication by other services, a JSON Web Token (JWT) based authorization scheme is utilized. JWT is an RFC standard (RFC 7519) for exchanging information securely between a client and a server, and its use provides a high level of security in this system. A standard workflow for user authorization is depicted in Figure 3.

Users can manage uploaded models via a RESTful API endpoint provided by the Model Web Service. Using this endpoint, a properly authenticated user is able to request a model run, upload necessary input files needed by the model and start model execution. Model run data is stored by the VWS in a dedicated database located on a server operating out of the University of New Mexico [1].

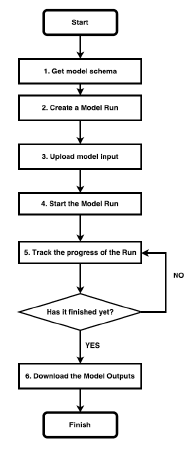

Available models in the system are self-describing, with a schema that indicates necessary input files, formats, and execution policies. The schema also shows the mapping between user-facing and model adaptor parameters. A user/client can use this schema to understand the resources required to run a given model. A typical model run from the perspective of the client-side application involves six steps: 1) retrieve the server-side model schema; 2) instantiate a model run session on the server; 3) upload model inputs; 4) run the model; 5) track model run progress; and finally, 6) download outputs.
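The six client-side steps can be sketched as below. `InMemoryModelService` is a hypothetical in-process stand-in so that the example runs without a server; its method names, the schema layout, and the file names are assumptions and do not reflect the actual VWS endpoints.

```python
import time


class InMemoryModelService:
    """Stand-in for the server so the sketch runs offline (not the VWS API)."""

    def __init__(self):
        self.runs = {}

    def get_schema(self, model):
        return {"model": model, "inputs": ["input.nc"]}

    def create_run(self, model):
        run_id = len(self.runs) + 1
        self.runs[run_id] = {"model": model, "inputs": {}, "progress": 0, "outputs": None}
        return run_id

    def upload(self, run_id, name, data):
        self.runs[run_id]["inputs"][name] = data

    def start(self, run_id):
        run = self.runs[run_id]
        run["outputs"] = {"output.nc": b"results for " + run["inputs"]["input.nc"]}
        run["progress"] = 100  # the toy "model" finishes immediately

    def progress(self, run_id):
        return self.runs[run_id]["progress"]

    def download(self, run_id):
        return self.runs[run_id]["outputs"]


def run_model(service, model, inputs, poll_interval=1.0):
    schema = service.get_schema(model)            # 1) retrieve the model schema
    missing = [n for n in schema["inputs"] if n not in inputs]
    if missing:
        raise ValueError(f"missing inputs: {missing}")
    run_id = service.create_run(model)            # 2) instantiate a model run
    for name in schema["inputs"]:                 # 3) upload model inputs
        service.upload(run_id, name, inputs[name])
    service.start(run_id)                         # 4) run the model
    while service.progress(run_id) < 100:         # 5) track model run progress
        time.sleep(poll_interval)
    return service.download(run_id)               # 6) download outputs


outputs = run_model(InMemoryModelService(), "prms", {"input.nc": b"abc"})
```

Passing the service object into `run_model` keeps the workflow independent of the transport, so the same six steps could be driven against an HTTP-backed implementation.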

Figure 4 shows a simple workflow chart from a user or client’s perspective. This workflow and server-client architecture offer many advantages compared to traditional approaches to running models:

- Users do not need to know internal specifics of the model. Setup details and dependencies are hidden from the user, which provides a more seamless experience.

- Users don’t need to worry about installing model dependencies.

- Users can easily initiate multiple parallel model runs on a server, expediting research.

- Users have ubiquitous access to server-side data via provided RESTful APIs.

Figure 4: Workflow from user’s perspective to run a model


The first step in creating the architecture for exposing “models as services” is enabling the programmatic running of models. A hurdle to accomplishing this is that a model can require many different dependencies in order to run. Programmatic running is accomplished in this system by providing developers with the tools to create Python wrappers around models. These wrappers expose dependencies to the model through container images.

In creating this system we had to strongly consider problems of data format heterogeneity in model inputs and outputs. Different models commonly accept and output data in different file formats. To facilitate greater data interoperability between these models, we provided an option for developers to write NetCDF adaptors for each model. A model adaptor is a Python program that handles data format conversion, model execution, and progress notification. Adaptor developers must provide converters which define the method of conversion and deconversion between the native formats used by the model and NetCDF. An execution function must also be implemented for the model. In this function, resource conversion and model execution occur. The wrapper reports on model execution events via a provided “event emitter.” An event listener can catch these events as they are reported by the emitter. This listener provides a bridge between the internal progress of the model and the user with the aid of a REST endpoint.
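The adaptor structure described here (converters, an execution function, and an event emitter) might be organized along the following lines. All class and method names are hypothetical rather than the VW-PY interface, the “model” is a toy that doubles its inputs, and a plain dictionary stands in for NetCDF data so that the sketch has no external dependencies.

```python
class EventEmitter:
    """Minimal emitter: listeners register per event name and receive keyword data."""

    def __init__(self):
        self._listeners = {}

    def on(self, event, callback):
        self._listeners.setdefault(event, []).append(callback)

    def emit(self, event, **data):
        for callback in self._listeners.get(event, []):
            callback(**data)


class ToyModelAdaptor:
    """Hypothetical adaptor for a toy 'model' that doubles each input value."""

    def __init__(self, emitter):
        self.emitter = emitter

    def to_native(self, common: dict) -> str:
        # Converter: common format (a dict standing in for NetCDF) -> native text.
        return ",".join(str(v) for v in common["values"])

    def from_native(self, native: str) -> dict:
        # Deconverter: native text -> common format.
        return {"values": [float(v) for v in native.split(",")]}

    def execute(self, common_input: dict) -> dict:
        # Execution function: convert, run the model, convert back, report progress.
        self.emitter.emit("progress", percent=0)
        native_in = self.to_native(common_input)
        self.emitter.emit("progress", percent=50)
        native_out = ",".join(str(float(v) * 2) for v in native_in.split(","))
        result = self.from_native(native_out)
        self.emitter.emit("progress", percent=100)
        return result


progress_log = []
emitter = EventEmitter()
emitter.on("progress", lambda percent: progress_log.append(percent))
output = ToyModelAdaptor(emitter).execute({"values": [1, 2.5]})
```

In a deployed system the listener would forward these events to whatever backs the progress endpoint instead of appending to a list.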

Through an adaptor, models can be encapsulated for programmatic execution. However, to bridge front-ends with actual model execution, a process is required from the model worker module. Utilizing a messaging queue, we create a bridge between the client front-end and the worker back-end. When a run task is submitted from the client side, it is placed into the queue and assigned a unique id. The consumer/worker process listens to the queue through a common protocol for new jobs.
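The producer/consumer pattern behind this bridge can be illustrated with the standard library alone. The sketch uses an in-process `queue.Queue` and a thread as stand-ins for the messaging queue and the worker service (the prototype itself uses Celery with Redis); the task fields and the `submit` helper are assumptions made for the example.

```python
import queue
import threading
import uuid

task_queue = queue.Queue()
completed = {}


def submit(model: str, params: dict) -> str:
    """Place a run task on the queue and return its unique id."""
    task_id = str(uuid.uuid4())
    task_queue.put({"id": task_id, "model": model, "params": params})
    return task_id


def worker() -> None:
    """Consume tasks until a None sentinel arrives."""
    while True:
        task = task_queue.get()
        try:
            if task is None:
                break
            # A real worker would invoke the model adaptor here.
            completed[task["id"]] = f"ran {task['model']}"
        finally:
            task_queue.task_done()


thread = threading.Thread(target=worker, daemon=True)
thread.start()
task_id = submit("isnobal", {"start": "2018-01-01"})
task_queue.join()     # block until every queued task has been processed
task_queue.put(None)  # tell the worker to stop
thread.join()
```

The client only keeps the returned id, which it can later use to look up progress or results.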

The worker service is a server-side Python module that has access to a model’s executable code and installed dependencies. The worker resides in an isolated server instance in which the model’s dependencies and libraries are installed. This module uses Linux containerization to facilitate easy deployment of model workers.

3.4. Deployment Workflow

A Linux-container-based deployment workflow has been devised for the many different components of the VWS. We used the containerization software Docker to implement this workflow [22]. Docker containers have an advantage over traditional virtual machines because they use fewer resources. This workflow allows for iterative deployment and simple scaling of the containerized components, and it provides a strategy for registering new models in the system.

Every VWS component is containerized with Docker. We have set up a central repository of Docker images that contains an image for each component of the system. A Docker “image” is a template that provides Docker with the OS, the dependencies of an application, and the application itself. Docker uses this template to build a working container. Each repository in the Virtual Watershed has a Dockerfile that describes how the component should be built and deployed into a query-able container. The repositories use webhooks to automate the building of Docker containers.

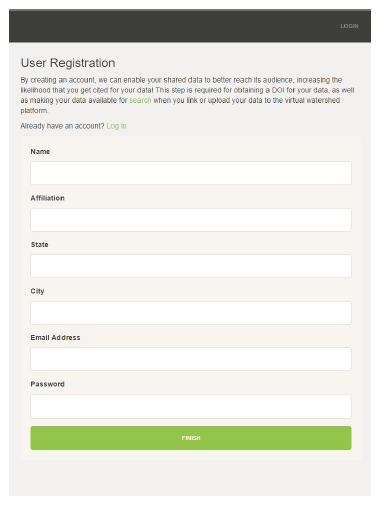

Figure 5: Registration page of Virtual Watershed System


This containerization workflow makes it easy to register new models in the system. A user registers a model by creating a Docker image that describes it. With this image, users can declare the OS, libraries, and other dependencies for a model. A typical registration of a model requires the following steps: 1) create a repository for the model; 2) develop the wrapper; 3) specify dependencies within the Dockerfile; and finally, 4) create a Docker image in the image repository.

4. System Prototype and Testing

The project reused some code and structures similar to those introduced in [23, 24]. The web service frontends were built using a Python micro-service framework called Flask, with various extensions [25]. Some key libraries used were: 1) Flask-Restless, used to implement the RESTful API endpoints; 2) SQLAlchemy, to map the Python data objects to the database schema [26]; 3) PostgreSQL as the database [27]; and 4) Flask-Security with Flask-JWT, which provide the utilities used for security and authentication. Celery was used to implement the task queue for model workers [28]. Redis served as a repository for model results output by Celery. A REST specification library called Swagger was used to create the specification for the REST APIs. For the web front end, HTML5, CSS, Bootstrap, and JavaScript (with ReactJS) were used.

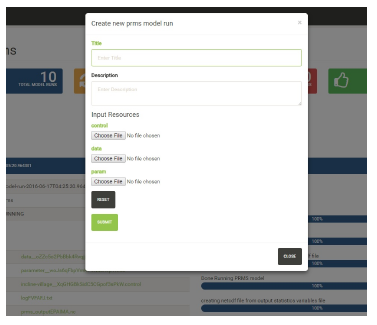

Figure 6: Dynamically generated upload form


To develop the VWS efficiently, an MVC design pattern was used to structure the code. The primary repository used to manage and distribute code was GitHub. Source code for this project is publicly available through the Virtual Watersheds GitHub repository [28]. Docker Hub [29] is used for image management and can automatically update images when the code on GitHub changes.

The prototype system comprises two main components: 1) the authentication module and 2) the modeling module. First, user-related activities in the VWS are handled by the authentication module. All standard user management functionality is handled by this module: registration, logins, verification, password management, and authentication token generation. Figure 5 shows the registration page of the Virtual Watershed System. In addition to this interface, the VWS also provides endpoints for registration and authentication from user-constructed scripts.

Modeling is necessary and commonly used in hydrologic research. Modifying existing model simulations is a complex activity that hydrologists often have to deal with when analyzing complicated environmental scenarios. Modelers must make frequent modifications to the underlying input files, followed by lengthy re-runs. Programming could help significantly with this repetitious and easily automated task. However, it is complex and time-consuming for hydrologists to write their own programs to handle file modifications for scenario-based studies. To address this challenge, the modeling module provides an intuitive user interface where users can create, upload, run, and delete models. The UI informs users about the progress of a model run with a bar that displays the percentage of completion. The module also includes a dashboard where users can view the models being run and the finished model runs, and download the model run files. A user can execute multiple PRMS models in parallel by uploading the three input resources needed for the PRMS model. The upload interface, shown in Figure 6, is generated dynamically from the model schema defined for each model.
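As a rough illustration of how an upload form might be generated from a model schema, the sketch below derives one file input per declared resource. The schema layout, the three resource names, and the functions `build_upload_fields` and `render_form` are all assumptions made for this example; the actual VWS schema format is not given in this section.

```python
import html
from typing import Dict, List

# Hypothetical schema: the keys and the three resource names are illustrative.
PRMS_SCHEMA: Dict = {
    "model": "prms",
    "resources": [
        {"name": "control", "label": "Control file", "required": True},
        {"name": "data", "label": "Data file", "required": True},
        {"name": "param", "label": "Parameter file", "required": True},
    ],
}


def build_upload_fields(schema: Dict) -> List[Dict]:
    """Turn each resource declared in the schema into a form-field descriptor."""
    return [
        {
            "type": "file",
            "name": res["name"],
            "label": res["label"],
            "required": bool(res.get("required", False)),
        }
        for res in schema["resources"]
    ]


def render_form(schema: Dict) -> str:
    """Render the field descriptors as a minimal HTML upload form."""
    rows = []
    for field in build_upload_fields(schema):
        required = " required" if field["required"] else ""
        rows.append(
            f'<label>{html.escape(field["label"])} '
            f'<input type="file" name="{html.escape(field["name"])}"{required}></label>'
        )
    return f'<form data-model="{html.escape(schema["model"])}">' + "".join(rows) + "</form>"


fields = build_upload_fields(PRMS_SCHEMA)
form_html = render_form(PRMS_SCHEMA)
```

Because the form is derived from the schema, registering a model with a different set of input resources would change the form without any change to the interface code.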

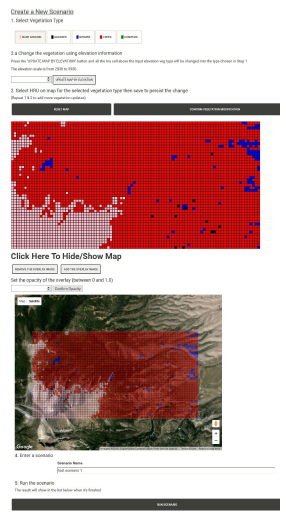

Figure 7: Scenario creation interface for PRMS model that uses Modeling REST API to execute model


The modeling interface is most useful when users run a bundle of models programmatically and in parallel, without having to worry about resource management. In the prototype system, the client is used to adjust model input parameters and to execute models.

Model calibration can be a time-consuming process for many hydrologists. It often requires the modeler to re-run a model many times with slightly varied input parameters. For many modelers this is a manual process: they edit input files with a text editor and execute the model from a command line on their local machine.

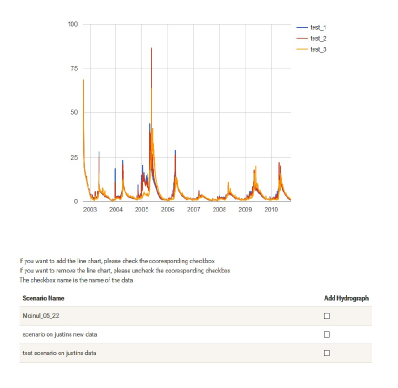

Because it was crucial to address this issue, the Virtual Watershed prototype system was developed with a web-based scenario design tool. This tool provides a convenient interface that enables rapid adjustment and calibration of PRMS model input variables. It also provides tools to execute the adjusted models via the modeling web service and to visually compare outputs from differently calibrated runs. Figure 7 shows the interface, which provides visually oriented tools for data manipulation. This interface abstracts away technical model data representations and allows users to model in intuitive ways. Figure 8 shows the output visualization after the modeling web service has finished executing the model run.

Figure 8: Comparing the results of the scenarios created using the modeling service


Multiple IPython [30] notebooks were developed by Matthew Turner, a PhD student in Cognitive and Information Sciences at the University of California, Merced, to demonstrate the programmatic approach to running an ensemble of models in parallel with the modeling API. Figure 9 shows an important step of modeling with the PRMS model. By using the modeling API, a user can execute multiple models in parallel, programmatically. Users can use server resources to run a model many times without worrying about limited time and CPU resources.
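The ensemble idea can be sketched as follows. This is not taken from the notebooks: `run_model` is a hypothetical stand-in for one request to the modeling API and returns a toy value so that the example runs offline, and the `scale` parameter and `peak_flow` output are invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def run_model(params: Dict[str, float]) -> Dict[str, float]:
    """Stand-in for submitting one run to the modeling API (hypothetical)."""
    # A real client would send the parameters to the service and wait for
    # the result; here a toy response is computed locally.
    return {"scale": params["scale"], "peak_flow": round(100.0 * params["scale"], 2)}


# One hundred parameter sets, each with a slightly different scale factor.
parameter_sets: List[Dict[str, float]] = [
    {"scale": round(0.5 + 0.01 * i, 2)} for i in range(100)
]

# Submit the runs concurrently; pool.map returns results in input order.
with ThreadPoolExecutor(max_workers=8) as pool:
    ensemble = list(pool.map(run_model, parameter_sets))

# Pick the run with the largest toy output value.
best = max(ensemble, key=lambda run: run["peak_flow"])
```

Threads suit this pattern because each call would mostly wait on the remote service, so the heavy computation stays on the server rather than on the user's machine.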

Figure 9: Example of tuning a hundred models through an IPython notebook with different parameters


5. Comparison with Related Tools

Table 1 presents a feature-based comparison of similar software tools focused on hydrologic and environmental modeling. The tools, discussed in Section 2, are CSDMS [8], Hydroshare [9], and MaaS [10]. Although each of these tools was designed and developed with different goals in mind, all of them demonstrate work to assist environmental modeling in general. Each tool has its pros and cons from different users’ perspectives.

CSDMS is a community-driven system in which modelers submit their models by implementing model interfaces that CSDMS provides. Each model is evaluated and added to the system by the CSDMS committee. CSDMS uses a language interoperability tool called Babel to handle language heterogeneity. From the user’s perspective, CSDMS provides web and desktop clients through which users access models and run them with different configurations. On the server side, CSDMS maintains an HPC cluster on which all the models and their dependencies are installed.

Hydroshare [9], on the other hand, is a project started to accommodate data sharing and modeling for hydrologists. The platform is in active development and frequently adds new features. The current version of Hydroshare is more concentrated towards data sharing and discovery for hydrology researchers [31]. Hydroshare also provides programmatic access to its REST API which makes it a good candidate for a data discovery backend for any other similar system.

MaaS [10] is the project most similar to the Virtual Watershed modeling tool. Although its approach and implementation differ from ours in various ways, its end goal is similar to what we are trying to achieve. MaaS, proposed by Li et al., introduced models as services by creating an infrastructure on cloud platforms. The framework provides users with a web application for submitting jobs. The backend takes care of on-demand provisioning of virtual machines that contain the model execution environment. MaaS achieves model registration by encapsulating a model as a virtual machine image in an image hub, and it provides an FTP-based database backend to store the model results. The main advantage our work has over MaaS is resource management: MaaS relies on virtual machines, whereas our system uses Docker containers, which require fewer resources. We also implemented APIs to check and stop a Docker container based on model execution status. Existing container orchestration tools, like Docker Swarm, are limited in their ability to manage containers in this way.

6. Conclusion and Future Work

This paper described VWS, a web service platform for hydrologic model execution. The key idea of the proposed platform is to expose model-relevant services through Python wrappers. A wrapper represents model resources in a common data format (e.g., NetCDF), which increases interoperability. Because the wrapper requires resources in NetCDF format, model execution on a server node is also simplified. With the authentication/authorization component, a user can log in once and access all services of the VWS. Each VWS component is wrapped in a Docker container, which requires fewer resources than a traditional virtual machine. Docker container APIs, including the container termination API, are also implemented to autonomously manage the dynamic resource needs of the system.

This software has significant room for expansion in future work. For example, the system currently only provides the option to integrate with the GSToRE data backend. This can be improved by implementing a generic data backend that enables integration with other existing data providers such as DataONE, Hydroshare, and CUAHSI. This, in turn, could provide better access to data for modeling automation. Developing a pricing component for service usage will also be considered for future development. Such a component could help system managers sustainably maintain the servers and hire software developers to implement other useful features.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

We would like to thank Matthew Turner for his sample Python scripts described in Section 4 and all the reviewers for their suggestions.

This material is based upon work supported by the National Science Foundation under grant numbers IIA-1301726 and IIA-1329469.

Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

- Nmepscor.org. The western consortium for water analysis, visualization and exploration — new mexico epscor., 2018. [Accessed on 10 June 2018].

- Md Moinul Hossain, Rui Wu, Jose T Painumkal, Mohamed Kettouch, Cristina Luca, Sergiu M Dascalu, and Frederick C Harris. Web-service framework for environmental models. In Internet Technologies and Applications (ITA), 2017, pages 104–109. IEEE, 2017.

- Russ Rew and Glenn Davis. Netcdf: an interface for scientific data access. IEEE computer graphics and applications, 10(4):76–82, 1990.

- Danny Marks, James Domingo, Dave Susong, Tim Link, and David Garen. A spatially distributed energy balance snowmelt model for application in mountain basins. Hydrological processes, 13(12-13):1935–1959, 1999.

- Steven L Markstrom, Robert S Regan, Lauren E Hay, Roland J Viger, Richard M Webb, Robert A Payn, and Jacob H LaFontaine. Prms-iv, the precipitation-runoff modeling system, version 4. Technical report, US Geological Survey, 2015.

- Chao Chen, Ajay Kalra, and Sajjad Ahmad. Hydrologic responses to climate change using downscaled gcm data on a watershed scale. Journal of Water and Climate Change, 2018.

- Chao Chen, Lynn Fenstermaker, Haroon Stephen, and Sajjad Ahmad. Distributed hydrological modeling for a snow dominant watershed using a precipitation and runoff modeling system. In World Environmental and Water Resources Congress 2015, pages 2527–2536, 2015.

- Scott D Peckham, Eric WH Hutton, and Boyana Norris. A component-based approach to integrated modeling in the geosciences: The design of csdms. Computers & Geosciences, 53:3–12, 2013.

- David G Tarboton, R Idaszak, JS Horsburgh, D Ames, JL Goodall, LE Band, V Merwade, A Couch, J Arrigo, RP Hooper, et al. Hydroshare: an online, collaborative environment for the sharing of hydrologic data and models. In AGU Fall Meeting Abstracts, 2013.

- Zhenlong Li, Chaowei Yang, Qunying Huang, Kai Liu, Min Sun, and Jizhe Xia. Building model as a service to support geosciences. Computers, Environment and Urban Systems, 61:141–152, 2017.

- Michael P McGuire and Martin C Roberge. The design of a collaborative social network for watershed science. In Geo-Informatics in Resource Management and Sustainable Ecosystem, pages 95–106. Springer, 2015.

- Eric Fritzinger, Sergiu M Dascalu, Daniel P Ames, Karl Benedict, Ivan Gibbs, Michael J McMahon, and Frederick C Harris. The Demeter framework for model and data interoperability. PhD thesis, International Environmental Modelling and Software Society (iEMSs), 2012.

- Jeffrey D Walker and Steven C Chapra. A client-side web application for interactive environmental simulation modeling. Environmental Modelling & Software, 55:49–60, 2014.

- Jon Wheeler. Extending data curation service models for academic library and institutional repositories. Association of College and Research Libraries, 2017.

- Thomas Erl. Service-oriented architecture (soa): concepts, technology, and design. Prentice Hall, 2005.

- Roy T Fielding. Architectural styles and the design of network-based software architectures, volume 7. University of California, Irvine Doctoral dissertation, 2000.

- Alex Rodriguez. Restful web services: The basics. IBM developerWorks, 33, 2008.

- Leonard Richardson and Sam Ruby. RESTful web services. O’Reilly Media, Inc., 2008.

- Peter Buxmann, Thomas Hess, and Sonja Lehmann. Software as a service. Wirtschaftsinformatik, 50(6):500–503, 2008.

- James Lewis and Martin Fowler. Microservices: a definition of this new architectural term. MartinFowler.com, 25, 2014.

- Sam Newman. Building Microservices. O’Reilly Media, Inc., 2015.

- Dirk Merkel. Docker: lightweight linux containers for consistent development and deployment. Linux Journal, 2014(239):2, 2014.

- Md Moinul Hossain. A Software Environment for Watershed Modeling. PhD thesis, University of Nevada, Reno, 2016.

- Rui Wu. Environment for Large Data Processing and Visualization Using MongoDB. Master’s thesis, University of Nevada, Reno, 2015.

- Miguel Grinberg. Flask web development: developing web applications with python. O’Reilly Media, Inc., 2018.

- Rick Copeland. Essential sqlalchemy. O’Reilly Media, Inc., 2008.

- Bruce Momjian. PostgreSQL: introduction and concepts, volume 192. Addison-Wesley New York, 2001.

- Ask Solem. Celery: Distributed task queue, 2018. [Accessed on 10 June 2018].

- Docker. Docker hub, 2018. [Accessed on 10 June 2018].

- Fernando Pérez and Brian E Granger. Ipython: a system for interactive scientific computing. Computing in Science & Engineering, 9(3), 2007.

- Daniel P Ames, Jeffery S Horsburgh, Yang Cao, Jiří Kadlec, Timothy Whiteaker, and David Valentine. Hydrodesktop: Web services-based software for hydrologic data discovery, download, visualization, and analysis. Environmental Modelling & Software, 37:146–156, 2012.


- Nganyang Paul Bayendang, Mohamed Tariq Kahn, Vipin Balyan, "Power Converters and EMS for Fuel Cells CCHP Applications: A Structural and Extended Review", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 3, pp. 54–83, 2021. doi: 10.25046/aj060308

- Niranjan Ravi, Mohamed El-Sharkawy, "Enhanced Data Transportation in Remote Locations Using UAV Aided Edge Computing", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 1091–1100, 2021. doi: 10.25046/aj0602124

- Jason Valera, Sebastian Herrera, "Design Approach of an Electric Single-Seat Vehicle with ABS and TCS for Autonomous Driving Based on Q-Learning Algorithm", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 464–471, 2021. doi: 10.25046/aj060253

- Ayoade F. Agbetuyi, Owolabi Bango, Ademola Abdulkareem, Ayokunle Awelewa, Tobiloba Somefun, Akinola Olubunmi, Agbetuyi Oluranti, "Investigation of the Impact of Distributed Generation on Power System Protection", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 324–331, 2021. doi: 10.25046/aj060237

- Mohab Gaber, Sayed El-Banna, Mahmoud El-Dabah, Mostafa Hamad, "Designing and Implementation of an Intelligent Energy Management System for Electric Ship power system based on Adaptive Neuro-Fuzzy Inference System (ANFIS)", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 195–203, 2021. doi: 10.25046/aj060223

- Zarina Din, Dian Indrayani Jambari, Maryati Mohd Yusof, Jamaiah Yahaya, "Challenges in IoT Technology Adoption into Information System Security Management of Smart Cities: A Review", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 99–112, 2021. doi: 10.25046/aj060213

- Haoxuan Li, Ken Vanherpen, Peter Hellinckx, Siegfried Mercelis, Paul De Meulenaere, "Towards a Hybrid Probabilistic Timing Analysis", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1360–1368, 2021. doi: 10.25046/aj0601155

- Saleem Sahawneh, Ala’ J. Alnaser, "The Ecosystem of the Next-Generation Autonomous Vehicles", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1264–1272, 2021. doi: 10.25046/aj0601144

- Abba Suganda Girsang, Antoni Wibowo, Jason, Roslynlia, "Comparison between Collaborative Filtering and Neural Collaborative Filtering in Music Recommendation System", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1215–1221, 2021. doi: 10.25046/aj0601138

- Rosula Reyes, Justine Cris Borromeo, Derrick Sze, "Performance Evaluation of a Gamified Physical Rehabilitation Balance Platform through System Usability and Intrinsic Motivation Metrics", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1164–1170, 2021. doi: 10.25046/aj0601131

- Teodoro Díaz-Leyva, Nestor Alvarado-Bravo, Jorge Sánchez-Ayte, Almintor Torres-Quiroz, Carlos Dávila-Ignacio, Florcita Aldana-Trejo, José Razo-Quispe, Omar Chamorro-Atalaya, "Variation in Self-Perception of Professional Competencies in Systems Engineering Students, due to the COVID-19 Pandemic", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1024–1029, 2021. doi: 10.25046/aj0601113

- Essamudin Ali Ebrahim, Nourhan Ahmed Maged, Naser Abdel-Rahim, Fahmy Bendary, "Open Energy Distribution System-Based on Photo-voltaic with Interconnected- Modified DC-Nanogrids", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 982–988, 2021. doi: 10.25046/aj0601108

- Syeda Nadiah Fatima Nahri, Shengzhi Du, Barend Jacobus van Wyk, "Active Disturbance Rejection Control Design for a Haptic Machine Interface Platform", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 898–911, 2021. doi: 10.25046/aj060199

- Lesia Marushchak, Olha Pavlykivska, Galyna Liakhovych, Oksana Vakun, Nataliia Shveda, "Accounting Software in Modern Business", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 862–870, 2021. doi: 10.25046/aj060195

- Sonia Souabi, Asmaâ Retbi, Mohammed Khalidi Idrissi, Samir Bennani, "A Recommendation Approach in Social Learning Based on K-Means Clustering", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 719–725, 2021. doi: 10.25046/aj060178

- Ademola Abdulkareem, Divine Ogbe, Tobiloba Somefun, Felix Agbetuyi, "Optimal PMU Placement Using Genetic Algorithm for 330kV 52-Bus Nigerian Network", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 597–604, 2021. doi: 10.25046/aj060164

- Eugeny Smirnov, Svetlana Dvoryatkina, Sergey Shcherbatykh, "Technological Stages of Schwartz Cylinder’s Computer and Mathematics Design using Intelligent System Support", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 447–456, 2021. doi: 10.25046/aj060148

- Carol Dineo Diale, Mukondeleli Grace Kanakana-Katumba, Rendani Wilson Maladzhi, "Ecosystem of Renewable Energy Enterprises for Sustainable Development: A Systematic Review", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 401–408, 2021. doi: 10.25046/aj060146

- Carol Dineo Diale, Mukondeleli Grace Kanakana-Katumba, Rendani Wilson Maladzhi, "Environmental Entrepreneurship as an Innovation Catalyst for Social Change: A Systematic Review as a Basis for Future Research", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 393–400, 2021. doi: 10.25046/aj060145

- Najat Messaoudi, Jaafar Khalid Naciri, Bahloul Bensassi, "Mathematical Modelling of Output Responses and Performance Variations of an Education System due to Changes in Input Parameters", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 327–335, 2021. doi: 10.25046/aj060137

- Abdurazaq Elbaz, Muhammet Tahir Güneşer, "Optimal Sizing of a Renewable Energy Hybrid System in Libya Using Integrated Crow and Particle Swarm Algorithms", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 264–268, 2021. doi: 10.25046/aj060130

- Dionisius Saviordo Thenuardi, Benfano Soewito, "Indoor Positioning System using WKNN and LSTM Combined via Ensemble Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 242–249, 2021. doi: 10.25046/aj060127

- Mariusz Rohmann, Dirk Schräder, "Switching Capability of Air Insulated High Voltage Disconnectors by Active Add-On Features", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 43–48, 2021. doi: 10.25046/aj060105

- Olorunshola Oluwaseyi Ezekiel, Oluyomi Ayanfeoluwa Oluwasola, Irhebhude Martins, "An Evaluation of some Machine Learning Algorithms for the detection of Android Applications Malware", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1741–1749, 2020. doi: 10.25046/aj0506208

- Teodoro Diaz-Leyva, Omar Chamorro-Atalaya, "Analysis of Learning Difficulties in Object Oriented Programming in Systems Engineering Students at UNTELS", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1704–1709, 2020. doi: 10.25046/aj0506203

- Nesma N. Gomaa, Khaled Y. Youssef, Mohamed Abouelatta, "On Design of IoT-based Power Quality Oriented Grids for Industrial Sector", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1634–1642, 2020. doi: 10.25046/aj0506194

- Karamath Ateeq, Manas Ranjan Pradhan, Beenu Mago, "Elasticity Based Med-Cloud Recommendation System for Diabetic Prediction in Cloud Computing Environment", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1618–1633, 2020. doi: 10.25046/aj0506193

- Tarek Frikha, Hedi Choura, Najmeddine Abdennour, Oussama Ghorbel, Mohamed Abid, "ESP2: Embedded Smart Parking Prototype", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1569–1576, 2020. doi: 10.25046/aj0506188

- Sara Ftaimi, Tomader Mazri, "Handling Priority Data in Smart Transportation System by using Support Vector Machine Algorithm", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1422–1427, 2020. doi: 10.25046/aj0506172

- Poonam Ghuli, Manoj Kartik R, Mohammed Amaan, Mridul Mohta, N Kruthik Bhushan, Shobha G, "Recommendation System for SmartMart-A Virtual Supermarket", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1408–1413, 2020. doi: 10.25046/aj0506170

- Kgabo Mokgohloa, Grace Kanakana-Katumba, Rendani Maladzhi, "Development of a Technology and Digital Transformation Adoption Framework of the Postal Industry in Southern Africa: From Critical Literature Review to a Theoretical Framework", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1190–1206, 2020. doi: 10.25046/aj0506143

- Adewale Opeoluwa Ogunde, Mba Obasi Odim, Oluwabunmi Omobolanle Olaniyan, Theresa Omolayo Ojewumi, Abosede Oyenike Oguntunde, Michael Adebisi Fayemiwo, Toluwase Ayobami Olowookere, Temitope Hannah Bolanle, "The Design of a Hybrid Model-Based Journal Recommendation System", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1153–1162, 2020. doi: 10.25046/aj0506139

- Ademola Abdulkareem, Divine Ogbe, Tobiloba Somefun, "Review of Different Methods for Optimal Placement of Phasor Measurement Unit on the Power System Network", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1071–1081, 2020. doi: 10.25046/aj0506130

- Carlos M. Oppus, Maria Aileen Leah G. Guzman, Maria Leonora C. Guico, Jose Claro N. Monje, Mark Glenn F. Retirado, John Chris T. Kwong, Genevieve C. Ngo, Annael J. Domingo, "Design of a Remote Real-time Groundwater Level and Water Quality Monitoring System for the Philippine Groundwater Management Plan Project", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1007–1012, 2020. doi: 10.25046/aj0506121

- Kishan Bhushan Sahay, Pankaj Kumar Singh, Rakesh Maurya, "Standalone Operation of Modified Seven-Level Packed U-Cell Inverter for Solar Photovoltaic System", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 959–966, 2020. doi: 10.25046/aj0506114

- Nagaraj Vannal, Saroja V Siddamal, "Design and Implementation of DFT Technique to Verify LBIST at RTL Level", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 937–943, 2020. doi: 10.25046/aj0506111

- Nu’man Amri Maliky, Nanda Pratama Putra, Mochamad Teguh Subarkah, Syarif Hidayat, "Prediction of Vessel Dynamic Model Parameters using Computational Fluid Dynamics Simulation", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 926–936, 2020. doi: 10.25046/aj0506110

- Luisella Balbis, "Optimal Irrigation Strategy using Economic Model Predictive Control", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 781–787, 2020. doi: 10.25046/aj050693

- Jashandeep Bhuller, Paolo Dela Peña, Vladimir Christian Ocampo II, Julio Simeon, Lawrence Materum, "Minimizing Collisions of Self-Driving Cars by a Control System Using Predetermined Two-Dimensional Grid Localization", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 731–737, 2020. doi: 10.25046/aj050688