Interactive Virtual Reality Educational Application

Volume 3, Issue 4, Page No 72-82, 2018

Authors: Shouq. Al Awadhi, Noor. Al Habib, Dalal Al-Murad, Fajer Al deei, Mariam Al Houti, Taha Beyrouthy, Samer Al-Korka a)

American University of Middle East, Egaila, Kuwait

a)Author to whom correspondence should be addressed. E-mail: samer.alkork@aum.edu.kw

Adv. Sci. Technol. Eng. Syst. J. 3(4), 72-82 (2018); DOI: 10.25046/aj030409

Keywords: Computer software and applications, Engineering, Information Technology

Virtual Reality (VR) technology has become one of the most advanced techniques currently used in many fields. Education plays an extremely important role in every society, so it should be continually updated to keep pace with new technologies and lifestyles. Applying technology in education improves both teaching and learning. This paper presents a virtual reality application for educational purposes. The application provides a virtual educational environment that is viewed through a VR headset and controlled by a motion controller. It allows the user to perform scientific experiments, attend live 360° online lectures, watch pre-recorded lectures, take a campus tour, and visit informative labs virtually. The application helps to overcome several educational issues, including hazardous experiments, lack of equipment, and the limited mobility of students with special needs.

Received: 19 May 2018, Accepted: 09 July 2018, Published Online: 11 July 2018

1. Introduction

This paper is an extension of work originally presented at BioSMART, the 2nd International Conference on Bio-engineering for Smart Technologies, titled “Virtual Reality Application for Interactive and Informative Learning” [1]. Over the past years, education has improved significantly by involving technology. Teaching and learning methods are being enhanced by software and high-tech devices that make education more effective, and each new technology linked to education can potentially solve at least one of the problems facing the field, especially those facing students and teachers. However, many problems still hinder students and teachers in schools and universities. Distraction and loss of attention during lectures, caused by many factors, are among the problems students face, and they may lower students’ skills and scores. Furthermore, students often attend lessons with little interest or excitement, and some students find it difficult to visualize the explanations given in class [2]. Moreover, hazardous mistakes occur in labs during experiments that require hands-on practice. For example, mistakes in some chemical reactions and electrical experiments can lead to serious injuries.

Another problem is that some schools and universities cannot afford expensive experimental materials. It is also hard for schools to keep biological organs available at all times. Finally, the lack of resources plays a major role in lowering the educational level of students with disabilities, because some of them find it difficult to interact and perform experiments due to their special needs and mobility difficulties. Therefore, to address these problems, technology should be integrated into education to build a more sophisticated and better-educated society.

2. Objectives

Virtual Reality (VR) is defined as “a computer with software that can generate realistic images and sounds in a real environment and enable the user to interact with this environment” [3]. Developing VR applications benefits education in many respects, and creating a virtual educational environment can help solve many educational problems. Building such an environment requires connecting several devices: a VR headset, a gesture controller or motion sensor, and a 360° camera. The VR headset lets the user become part of the virtual world and see the virtual environment, the gesture controller lets the user interact inside that environment, and the 360° camera captures realistic 360° images and videos that are displayed inside the VR environment. Developing an educational VR application serves several objectives. Two of them are helping students comprehend lectures easily and attracting their attention through virtual visualizations of the lecture content. The application also greatly helps instructors deliver information to students by supporting their ideas with virtual visualizations. In addition, hazardous experiments can be performed without danger because they are carried out virtually, and students can practice those experiments virtually so that they are more cautious when performing them in real life. The application can also address the lack of lab equipment and the cost of expensive equipment, because the equipment is provided virtually. Finally, integrating the VR headset with the motion controller gives students with special needs the chance to perform experiments with less physical strain.

3. Background and Literature Review

Virtual reality has been at the frontier of technology since the 1950s, but with few practical achievements [4]. However, the field improved greatly in 2012 [5]. VR headsets are the main tool that brings the user into the virtual world; they are designed to let the user see the virtual world through special lenses. A three-dimensional environment is also required for display on these headsets, and several software tools help with creating such virtual 3D environments. These environments can be packaged as applications that allow users to learn, watch videos and pictures, and play games.

The literature review discusses the hardware and software required to create a virtual environment and interact with it. First, the hardware is divided into gesture sensors, VR headsets, and 360° cameras. Second, the software part compares some common tools used to create virtual environments and build three-dimensional (3D) applications. Lastly, some existing VR applications are described along with their purposes.

3.1. Hardware

3.1.1. Gesture Sensors

Gesture-control technology detects body motion and gestures, enabling users to interact with objects and devices without physically touching them [6]. Many kinds of gesture controllers can work with VR technology.

The Myo armband is a gesture controller that detects arm motion [7]. Microsoft has produced a sensor controller called Kinect, a standalone motion sensor that can be placed on any surface, such as a desk, and is used mostly for gaming [8]. Oculus VR, an American technology company, produces Oculus Touch, a pair of handheld controllers whose buttons are operated by the thumbs [9].

Another controller is Leap Motion, a small device that lets users interact with virtual objects using their bare hands. It tracks fingers and hands using three sensors and connects to the PC through a USB port. In addition, it has its own Software Development Kits (SDKs) that support programming [10].

3.1.2. Headsets

VR headsets immerse users in a three-dimensional sphere, so they can look at, move around, and interact with 3D virtual environments as if they were real.

As an example of VR headsets, the HTC Vive is a complete kit that contains a headset, two motion controllers, and two base stations that define a room-scale area. Its tracking system tracks the user’s movements within a 10-foot cube. It also provides SDKs for its devices to simplify the programming process for developers. In addition, it has 32 sensors for 360° motion tracking and a front-facing camera that helps make the virtual world more realistic. The two controllers work with two wireless Lighthouse base stations, which sweep the room with infrared light that is detected by the sensors on the headset and controllers. These signals are then processed to translate the user’s movements and interactions into the virtual environment [11].

Samsung Gear VR, on the other hand, is one of the most affordable VR headsets. However, it works only with specific Samsung Galaxy smartphones, which limits its users to owners of those phones: the Galaxy Note 7, S7, S7 Edge, Note 5, S6, S6 Edge, and S6 Edge+ [12].

The Oculus Rift headset is another existing VR headset. It can fully immerse the user inside the virtual environment and provides precise imagery and an enjoyable interactive experience. It has a resolution of 2160 × 1200 and is lighter than its main competitor, the HTC Vive mentioned previously, weighing only 470 grams. Oculus offers a package consisting of a single VR headset and Touch controllers for $798 [13].

The Oculus Rift DK2 (Development Kit 2) is an earlier version of the Oculus Rift. It is a VR headset that provides a stereoscopic 3D view with excellent depth and scale. The DK2 offers 360° head tracking and an external camera that enhances the Rift’s positional tracking. In addition, its motherboard contains an ARM Cortex-M3 microcontroller, which implements the ARM Thumb-2 instruction set architecture, giving the device full programmability and high performance [14].

Lastly, Google Cardboard is a VR headset released in 2014. It is considered one of the lightest VR headsets because of the light materials used to build it: cardboard, woven nylon, and rubber for the outer headset, together with two lenses. Combined with a compatible smartphone, it allows users to enter the virtual world [15].

3.1.3. 360° Cameras

360° cameras are common nowadays. They use one or more lenses to capture wide-angle photos and videos, and many types are available on the market.

For example, the Bubl 360° camera uses four lenses to capture 14-megapixel spherical images and videos. It uses Wi-Fi to let users share recorded videos and photos on social networks, and it supports live-streaming directly to the user’s PC or mobile device. The Bubl camera also stores all content on a microSD card [16].

The 360fly 4K camera is another common 360° camera; it captures 16-megapixel photos and high-resolution videos. It is water-resistant up to 1 ATM, so it can capture photos and videos underwater. Furthermore, it supports direct live-streaming [17].

The Gear 360 camera records videos and photos at 15 megapixels. It has two lenses facing opposite directions, each capturing 180° of the view, so together they cover 360°. It is also water-resistant. The Gear 360 was designed for use with the Samsung Gear VR headset and Samsung smartphones [18].

In addition, the LG 360° camera can capture both 360° and 180° images and videos at 13 megapixels. It works with devices running Android 5.0 or later and iOS 8 or later. It is compatible with YouTube and Google Street View, which allow users to explore and share 360° videos and photos [19].

Finally, the Ricoh Theta S is a small 360° camera with two lenses, each with a 180° field of view. It takes 360° images and videos and supports a live-streaming mode. It can be connected to the PC through a USB port or a Wi-Fi connection [20].

3.1.4. Software

Many existing software packages support the creation of virtual environments, such as Unity3D, Open Wonderland, and Unreal.

First, Open Wonderland is a Java-based 3D toolkit for building virtual reality environments that offers many services. It supports many platforms, such as iOS, Android, Mac, and Windows [21].

Second, Unreal supports the C++ and Blueprints programming languages. The Blueprints Visual Scripting System helps users who are unfamiliar with programming languages create virtual environments easily. It also offers many services, including multiplayer capability, more detailed graphics, and a flexible plugin architecture [22].

Lastly, Unity3D is software that helps developers create virtual scenes. It supports three programming languages: Boo, C#, and JavaScript. Its official website also provides tutorials and an online community where users can communicate and help each other. Furthermore, it has a free version, which lowers the cost of building a virtual application [23].

3.1.5. Existing Applications

Virtual reality is used in many fields, and many VR applications have been created to perform tasks in each of them.

One of these VR applications is the “Interactive Pedestrian Environment Simulator”, which is used for cognitive monitoring and evaluation. It presents a virtual traffic environment that helps ensure the safety of pedestrians, including elderly people, on the road. The application was built with Unity3D [24] and demonstrated using an Oculus Rift DK2 connected to a Leap Motion and a Myo armband by Thalmic Labs [25].

Moreover, Titans of Space is an educational simulator that allows students to orbit the solar system and discover the planets virtually. It gives them the chance to learn and talk about space in an exciting and motivating way. Students can perform several tasks, including zooming in and out of the system, discovering the planets, and gaining information about each planet. The application was developed for the Google Cardboard, Oculus Rift, HTC Vive, and Samsung Gear VR platforms [26].

Another simulator is the Virtual Electrical Manual (VEMA), a virtual electronics lab that facilitates learning and understanding electrical circuits. It allows students to build circuits virtually, preventing dangerous incidents with electricity. It contains several menus, such as the equipment menu, the capacitor menu, the Direct Current (DC) circuit construction menu, the Alternating Current (AC) menu, and the resonance menu, and each menu presents information to ensure students’ understanding. The developers used WireFusion to build the 3D objects in the virtual lab and JavaScript to program interaction with them [27].

Additionally, the Chemistry Lab Application was developed as an educational animated application that lets students perform chemical experiments virtually. It reduces the chance of real-life injuries and serious damage from chemical use. Its developers used Autodesk Maya to create the 3D objects in the virtual lab, the HTC Vive headset and its controllers to bring the user into the application’s virtual world and interact with the 3D objects in the labs, and Unity3D to build the application [28].

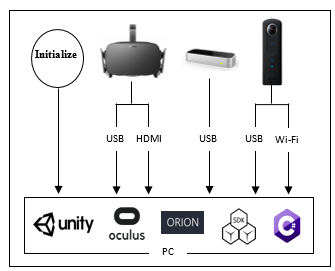

4. Methods

The proposed system consists of hardware and software modules. The hardware module was designed after comparing common existing devices and performing some calculations to determine which best fit our needs. First, common VR headsets were compared by frequency, price, angle of view, weight, resolution, connection, SDKs, platforms, and integration. Common 360° cameras were compared by price, SDKs, number of lenses, connection, resolution, RAM, angle, battery life, frequency, and integration. Because few gesture controllers were available on the market, choosing one was straightforward. The most important criterion for the motion controller was accuracy, since it affects the whole project: without an accurate controller, the application cannot work effectively. The motion sensor’s tracking area should also be as wide as possible to keep the user’s motions within range. In addition, the controller must be easy to use so that the user feels comfortable while moving, and because the budget was limited, it also had to be affordable.

As a result, for the hardware implementation, the Oculus Rift (VR headset), Leap Motion (gesture controller), and Ricoh Theta S (360° camera) were chosen and integrated with a VR-ready PC. Figure 1 shows the connections between all the devices used in this project. As shown in Figure 1, the Oculus Rift connects to the PC through USB and HDMI ports, the Leap Motion controller connects through a USB port, and the Ricoh Theta S 360° camera connects through either a USB port or the camera’s own Wi-Fi connection. All devices must be initialized on the PC at startup, and the software and SDKs for each device must be installed on the PC.

Figure 1: High Level Design Flowchart

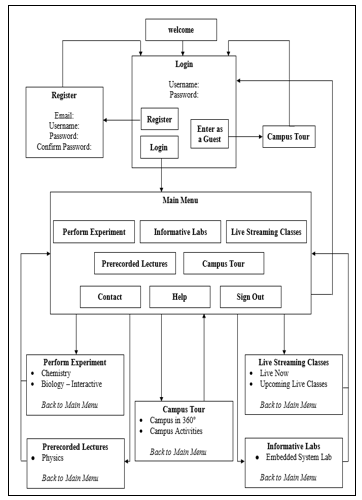

Figure 2: User Interface Hierarchy Flowchart

Before choosing the software to build the application with, the specifications of the most common packages for building virtual environments, namely Unity3D, Open Wonderland, and Unreal, were compared. The main criteria were that the software should be easy to use and that tutorials should exist to help developers learn it well. The programming languages, graphics capabilities, and services of each package were also taken into consideration. As a result, the Unity3D game engine was chosen because it satisfies all the needs of this project.

For the software implementation, Figure 2 shows the user interface hierarchy and describes the general flow of the application. Each box in the flowchart represents a main scene, and each main scene has sub-scenes that perform a specific task, such as performing experiments, watching pre-recorded lectures, taking a campus tour, attending online live-streaming classes, and identifying elements and components in the informative labs. These scenes draw on content from taught courses such as Biology, Chemistry, and Physics.

First, a welcome scene is shown to the user when the application starts. The user is then directed to the “Main Menu”, which contains three main buttons: “Enter as a Guest”, “Register”, and “Login”. The “Enter as a Guest” button gives the user access to the campus tour only; full access to the application requires registration. Because the application serves an educational purpose, it needed to be secured, so registration and login features were added. In the registration scene, the user fills in the “Username”, “Email”, “Password”, and “Confirm Password” fields. The system then checks whether the “Username” is valid and whether the “Password” and “Confirm Password” fields match. If there is an error, the system shows a message describing it; otherwise, it completes the registration and adds the user to the database. The user can then log in: once the username and password are entered, the system checks the database to verify that the user is authorized. If the username is not found or the password is incorrect, the system shows an error message. If the login succeeds, the user is directed to the “Main Menu”, where he can enter one of the provided sub-scenes. In the “Perform experiment” scene, he can enter either the Biology or the Chemistry lab to interact with objects or perform experiments virtually, ending with short quizzes that test his understanding. In the “Informative labs” scene, he can visit an embedded system lab virtually to identify and learn about each piece of equipment and its location inside the lab.
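
The registration and login checks described above can be sketched as follows. This is a minimal Python illustration, not the authors’ Unity3D (C#/JavaScript) code. Several details are assumptions that the paper does not specify: the in-memory `UserStore` class stands in for the database, the username rule (3 to 20 letters, digits, or underscores) and the duplicate-username check are hypothetical, and the salted PBKDF2 password hashing is our own choice.

```python
import hashlib
import hmac
import os
import re

# Hypothetical username rule: the paper does not define what makes a username valid.
USERNAME_PATTERN = re.compile(r"^[A-Za-z0-9_]{3,20}$")


class UserStore:
    """In-memory stand-in for the application's user database (assumption)."""

    def __init__(self) -> None:
        # username -> (email, salt, password hash)
        self._users: dict[str, tuple[str, bytes, bytes]] = {}

    @staticmethod
    def _hash(password: str, salt: bytes) -> bytes:
        # Salted PBKDF2 hashing is an assumption, not described in the paper.
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)

    def register(self, username: str, email: str, password: str, confirm: str) -> str:
        """Return an error message, or "OK" once the user has been added."""
        if not USERNAME_PATTERN.match(username):
            return "Invalid username."
        if username in self._users:
            return "Username already exists."
        if password != confirm:
            return "Passwords do not match."
        salt = os.urandom(16)
        self._users[username] = (email, salt, self._hash(password, salt))
        return "OK"

    def login(self, username: str, password: str) -> str:
        """Return an error message, or "OK" if the credentials are authorized."""
        record = self._users.get(username)
        if record is None:
            return "Username not found."
        _, salt, stored = record
        # Constant-time comparison of the stored and recomputed hashes.
        if not hmac.compare_digest(stored, self._hash(password, salt)):
            return "Incorrect password."
        return "OK"
```

Returning a message string mirrors the paper’s behavior of showing the user a message for each kind of error; a real implementation would more likely return a status code that the UI maps to text.
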
For the live-streaming sub-scene, the user can enter the “Upcoming Live Classes” scene to check the schedule of upcoming live classes. When a live class is available, the user can enter the “LIVE NOW” scene to attend the lecture virtually. In the “Prerecorded Lecture” scene, the user can choose the “Physics Lecture” scene, where a recorded physics experiment is displayed so that he can refer to it whenever needed. In the “Campus Tour” scene, users can choose either a scene with 360° pictures and videos of the campus facilities or a scene with photos and videos of campus activities taken with the Ricoh Theta S 360° camera. Each sub-scene has a “Back to Main Menu” button, so the user can easily move between scenes. Finally, from the “Main Menu”, users can find the university’s contact information, manage settings, get help, and sign out of their accounts. Various assets, code, equations, objects, and animations were used to implement the application.
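
The scene hierarchy and the guest restriction can be modeled as a small navigation table. The Python sketch below is hypothetical: scene names such as “Perform Experiment”, “Upcoming Live Classes”, and “LIVE NOW” come from the paper, but the grouping labels “Live Streaming”, “Campus Facilities”, and “Campus Activities”, the `SUB_SCENES` table, and the `navigate` function are our own illustration rather than the application’s Unity3D scene-loading code.

```python
# Scene names largely follow the paper; the table itself is a hypothetical model.
MAIN_MENU = "Main Menu"
SUB_SCENES = {
    "Perform Experiment": ["Biology Lab", "Chemistry Lab"],
    "Informative Labs": ["Embedded System Lab"],
    "Live Streaming": ["Upcoming Live Classes", "LIVE NOW"],
    "Prerecorded Lecture": ["Physics Lecture"],
    "Campus Tour": ["Campus Facilities", "Campus Activities"],
}
# "Enter as a Guest" only unlocks the campus tour.
GUEST_ALLOWED = {"Campus Tour"}


def can_enter(scene: str, logged_in: bool) -> bool:
    """Registered users may enter every main scene; guests only the campus tour."""
    if scene not in SUB_SCENES:
        return False
    return logged_in or scene in GUEST_ALLOWED


def navigate(current: str, target: str, logged_in: bool) -> str:
    """Return the scene the user is in after pressing the button for `target`."""
    if target == MAIN_MENU:
        # Every sub-scene offers a "Back to Main Menu" button.
        return MAIN_MENU
    if current == MAIN_MENU and can_enter(target, logged_in):
        return target
    if target in SUB_SCENES.get(current, []):
        # Child scene of the current one, e.g. "Chemistry Lab" under "Perform Experiment".
        return target
    return current  # request not allowed: the user stays where they are
```

For example, `navigate("Main Menu", "Perform Experiment", logged_in=False)` leaves a guest in the “Main Menu”, while a logged-in user moves into the experiment scene. Keeping the hierarchy in one table makes the access rule and the “Back to Main Menu” behavior easy to check in one place.
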

4.1. Assets and Code

Many assets and scripts, written in different programming languages, were used to build the application with the Unity3D game engine. Leap Motion assets [29] were used to show the hands, interact with objects, and trigger items inside the scenes. The Curved Keyboard asset [30] was used to show a keyboard that helps users enter characters in the “Login” and “Register” scenes. Furthermore, the CurvedUI asset [31] was imported into Unity3D to provide a curved interface with a 180° view, making the interface flexible and easy to use. This interface is scalable and supports different VR headsets, including the Oculus Rift, Gear VR, HTC Vive, and Google Cardboard. It also supports many controllers besides the Leap Motion used in this project, such as the mouse, the Vive controller, and Oculus Touch. Lastly, the Theta Wi-Fi Streaming asset [32] was imported to support online live-streaming classes.

For programming, Unity3D supports C# and JavaScript, and both languages were used to script actions inside the application. Many functions were programmed to move the user from scene to scene, show and hide User Interface (UI) elements, display two-dimensional (2D) and 360° videos, generate animations with Unity3D particle systems, log in, register, and calculate the grades of the Biology and Chemistry experiments based on the user’s performance. In addition, a virtual tutor was programmed to give the user immediate instructions and feedback based on his actions, helping him move easily through the application.

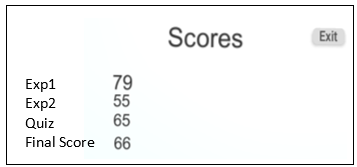

4.2. Equations

Since the application contains quizzes and graded experiments, a few equations were used to score the students’ performance. First, the Chemistry lab has two multi-level experiments and a quiz, and its total score is divided among them: out of a total of 100 points, the first multi-level experiment is worth 25%, the second is also worth 25%, and the quiz is worth 50%. Equation 1 calculates the grade of each level of the experiments. TIME is the time, in seconds, the student takes to finish each experiment; in the implementation, any time of 60 seconds or less is set to 60. TRIALS is the number of trials the student uses to complete the experiment. In Equation 1, the TRIALS term and the TIME term each account for 50% of a level’s grade, and each multi-level experiment is in turn worth 25% of the total score.

$$\mathrm{Score} = \frac{1}{2}\left(\frac{50}{\mathrm{TRIALS}} + \frac{50 \times 60}{\mathrm{TIME}}\right) \tag{1}$$
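
Equation 1 can be sketched as a short function. This Python version is an illustration rather than the authors’ C#/JavaScript code. The function name `level_score` and the rejection of a zero trial count are our own assumptions, while the 60-second clamp on TIME follows the text.

```python
def level_score(trials: int, seconds: float) -> float:
    """Equation (1): grade of one experiment level.

    trials  -- TRIALS, number of attempts the student needed (at least 1)
    seconds -- measured completion time; TIME is this value clamped to >= 60 s
    """
    if trials < 1:
        raise ValueError("at least one trial is required")
    time = max(seconds, 60.0)  # any time of 60 s or less counts as TIME = 60
    return ((50 / trials) + (50 * 60) / time) / 2
```

With a single trial finished within 60 s, both terms equal 50, so a level peaks at `level_score(1, 45) == 50.0`; two trials taking 120 s give `level_score(2, 120) == 25.0`. The clamp also guarantees that TIME is never zero, so the division is always safe.
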

The Chemistry lab quiz consists of five questions, each worth 10 points of the quiz’s 50% share of the total score. Equation 2 calculates the quiz grades. The symbol x indicates whether a question is answered correctly: x = 1 if the right answer is chosen, and x = 0 otherwise. TIME in Equation 2 is the time, in seconds, the user takes to answer each question; in the implementation, any time of 15 seconds or less is set to 15.

$$\mathrm{Score} = \frac{15\,x}{\mathrm{TIME}} \times 10 \tag{2}$$
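
Equation 2 and the five-question quiz total can be sketched the same way. The function names `question_score` and `quiz_score` are hypothetical, and this Python sketch is not the application’s own code. The 15-second clamp and the five 10-point questions follow the text.

```python
def question_score(correct: bool, seconds: float) -> float:
    """Equation (2): score of one quiz question, at most 10 points."""
    x = 1 if correct else 0
    time = max(seconds, 15.0)  # any answer given within 15 s counts as TIME = 15
    return ((15 * x) / time) * 10


def quiz_score(answers: list[tuple[bool, float]]) -> float:
    """Sum over the five questions, so the quiz is worth at most 50 points."""
    if len(answers) != 5:
        raise ValueError("the quiz has exactly five questions")
    return sum(question_score(correct, seconds) for correct, seconds in answers)
```

A correct answer within 15 s earns the full 10 points, a correct answer after 30 s earns 5, and a wrong answer earns 0 regardless of time. Five fast, correct answers give the maximum of 50. Because the text says the same equation grades the Biology quiz, `quiz_score` would also yield the Biology lab’s 50-point total.
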

The Biology lab was built to be interactive rather than experimental, so students are evaluated only on the quiz. Equation 2, used for the Chemistry lab quiz, also calculates the Biology lab quiz grade. As in the Chemistry lab, the Biology quiz has five questions, each worth 10 points. Since the Biology lab has no experimental part, its total score is 50.

Figure 3: Hardware Integration

Figure 4: Main Menu User Interface

4.3. Three-Dimensional (3D) Objects and Animations

Several animations and three-dimensional objects were used inside the application’s virtual environment. For example, the Chemistry and Biology labs are animated labs built from animations, 3D objects, and 3D characters. The pre-recorded class combines these 3D objects with a 2D video, and the virtual tutor was also created from 3D objects and characters. Some of the 3D objects were built with tools from the Unity3D game engine, while others are predefined in the engine. Their location, position, size, and color were modified and customized to fit the project’s needs. Some objects are interactive, so the user can hold, release, and move them inside the scenes. The objects were designed to look close to their real-life counterparts, so that objects such as the lab equipment are recognizable while the user performs experiments or moves through the application. Fire, bubbles, and liquid drops are examples of the animations used in the Chemistry lab scene; these were created with Unity3D particle systems.

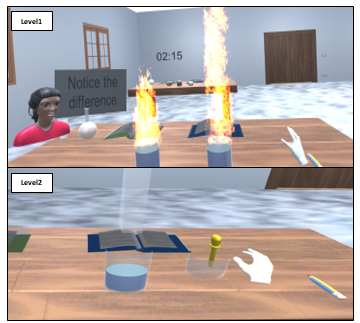

Figure 5: Chemistry Lab Scene Level 1 and Level 2

5. Final Results and Achievements

As a result, the Oculus Rift VR headset, the Leap Motion motion sensor and gesture controller, and the Ricoh Theta S 360° camera were integrated with a PC running the Unity3D game engine to build a fully interactive educational VR application. Users interact with the application using their bare hands, as shown in Figure 3; this interaction relies on the Leap Motion controller detecting the users’ gestures.

5.1. User Interface

The user interface was made curved with the CurvedUI asset [31] and customized to fit the application’s needs. A curved user interface gives the user a 180° cylindrical view, which keeps the menu fully interactive from any viewing angle. Figure 4 shows the CurvedUI asset applied to the “Main Menu” inside the application.
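
The paper does not describe how CurvedUI works internally. The Python sketch below only illustrates the general geometry of a 180° cylindrical interface: a point on a flat canvas is wrapped onto a vertical cylinder centered on the viewer. The function name `flat_to_cylinder`, its parameters, and the axis convention (y up, z forward, as in Unity) are assumptions.

```python
import math


def flat_to_cylinder(x: float, y: float, canvas_width: float,
                     radius: float, arc_degrees: float = 180.0) -> tuple[float, float, float]:
    """Wrap a flat canvas point onto a vertical cylinder around the viewer.

    x ranges from -canvas_width/2 (left edge) to +canvas_width/2 (right edge),
    and the full width is spread over `arc_degrees` of the cylinder.
    Returns (x, y, z) with y up and z pointing forward.
    """
    angle = math.radians((x / canvas_width) * arc_degrees)
    return (radius * math.sin(angle), y, radius * math.cos(angle))
```

With a 180° arc, the canvas center lands straight ahead at distance `radius`, and the left and right edges land directly to the viewer’s sides. Every element stays at the same horizontal distance from the viewer, which is why a curved menu remains equally readable and reachable across the whole arc.
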

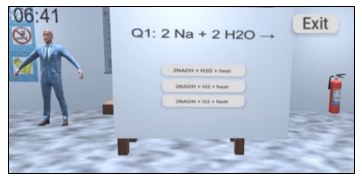

5.2. Chemistry Lab Scene

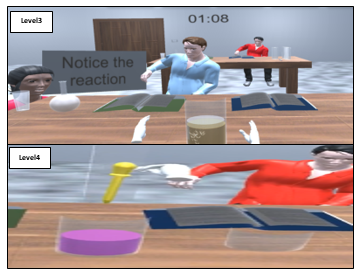

Besides a main scene, where students can virtually identify the equipment used in the following levels, the Chemistry lab is divided into five level scenes. This main scene helps address both the lack of equipment and the cost of expensive equipment in real life. Levels 1 and 2 demonstrate experiment 1, and levels 3 and 4 demonstrate experiment 2. Students cannot choose a level: they start at level 1, progress through the levels in ascending order, and finish with the quiz.
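
The fixed level order can be enforced with a simple progress tracker. In this hypothetical Python sketch, `ChemistryLabProgress` and the short level labels are our own. The level-to-experiment assignment follows the text, and level 4 is only labeled as a continuation of experiment 2 because the paper does not detail it.

```python
from typing import Optional

# Level contents per the text; the short labels are our own.
LEVELS = {
    1: "Experiment 1: sodium in water",
    2: "Experiment 1: phenolphthalein test",
    3: "Experiment 2: sodium in alcohol",
    4: "Experiment 2 (continued)",
    5: "Quiz",
}


class ChemistryLabProgress:
    """Enforces the fixed order: students cannot pick a level, only advance."""

    def __init__(self) -> None:
        self.level = 1  # every student starts at level 1

    @property
    def current(self) -> str:
        return LEVELS[self.level]

    def complete_level(self) -> Optional[str]:
        """Advance to the next level; return None once the quiz is finished."""
        if self.level == max(LEVELS):
            return None
        self.level += 1
        return self.current
```

The class exposes no way to jump to an arbitrary level. This mirrors the rule that students start at level 1, pass through the levels in ascending order, and end with the quiz.
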

Figure 6: Chemistry Lab Scene Level 3 and Level 4

In the first level, students perform an experiment that is dangerous in real life, “The Reaction of Sodium with Water”, which produces fire [33]. The user places two differently sized virtual pieces of sodium into water and observes the difference between the two reactions: the larger the amount of sodium, the more fire is produced, as shown in Figure 5. In level 2, students then test the acidity of the product by adding phenolphthalein virtually. If the product turns pink after the phenolphthalein is added, it is identified as a base.

In level 3, students observe the reaction between sodium and alcohol. As in level 1, the student places two different sizes of sodium into the alcohol and observes the reaction. Unlike the reaction of sodium with water, the reaction of sodium with alcohol produces bubbles in real life [34], and the more sodium is used, the more bubbles are produced. As in level 2, the student then tests the acidity of the product by adding phenolphthalein, and if the product turns pink, as shown in Figure 6, it is identified as a base [35].
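
The qualitative rules in levels 1 to 4 are that more sodium gives more fire or more bubbles and that a pink phenolphthalein color marks a base. Logic like the following Python sketch could drive those rules, but it is not the application’s particle-system code. The emission constants and all function names are hypothetical. The only chemical fact used is that phenolphthalein is colorless below roughly pH 8.2 and pink above it, and the model deliberately ignores the fading that occurs at very high pH.

```python
# Hypothetical tuning constants: particles emitted per second per gram of sodium.
EMISSION_PER_GRAM = {"water": 40.0, "alcohol": 25.0}
# Visual effect of each reaction, as described in the text.
EFFECT = {"water": "fire", "alcohol": "bubbles"}
# Phenolphthalein is colorless below roughly pH 8.2 and pink above it.
PHENOLPHTHALEIN_PINK_FROM = 8.2


def reaction_effect(liquid: str, sodium_grams: float) -> tuple[str, float]:
    """Return the particle effect and its emission rate for a piece of sodium.

    The rate grows linearly with the amount of sodium, so a larger piece
    produces more fire (in water) or more bubbles (in alcohol).
    """
    if sodium_grams <= 0:
        return ("none", 0.0)
    return (EFFECT[liquid], EMISSION_PER_GRAM[liquid] * sodium_grams)


def phenolphthalein_color(ph: float) -> str:
    """Simplified indicator model (ignores fading at very high pH)."""
    return "pink" if ph >= PHENOLPHTHALEIN_PINK_FROM else "colorless"


def identified_as_base(ph: float) -> bool:
    """The indicator test: the product counts as a base when it turns pink."""
    return phenolphthalein_color(ph) == "pink"
```

A linear rate is the simplest rule that reproduces the “larger piece, stronger reaction” comparison the students are asked to make. Any monotonic scaling would serve the same teaching purpose.
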

After the experimental part, a five-question quiz in level 5 tests students’ understanding, as shown in Figure 7.

Figure 8: Chemistry Lab Scores

Finally, the scores of experiment 1, experiment 2, and the quiz will be displayed on the score scene as shown in Figure 8.

Regarding the applicability of VR teaching in the Chemistry lab scene, some situations are programmed to match real-life incidents. For example, if the student accidentally drops the sodium on the floor, he can bend down and pick it up as he would in reality, giving students the chance to practice how to handle such a situation. As mentioned previously, the different amounts of fire and bubbles produced by different sizes of sodium are further examples, because this virtual practice leads the student to grasp the main idea. Other incidents, however, have not yet been programmed. In brief, performing this experiment virtually lets students practice it and observe its reaction, so they will be more cautious when performing it in real life. As a result, using this supplementary educational VR application could help prevent or reduce serious injuries.

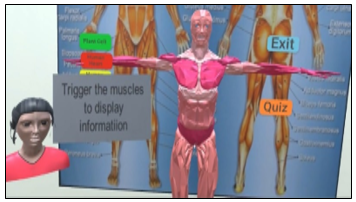

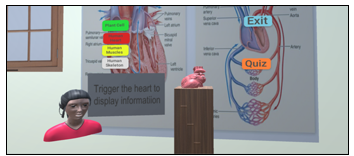

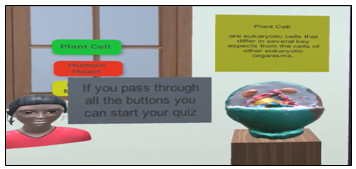

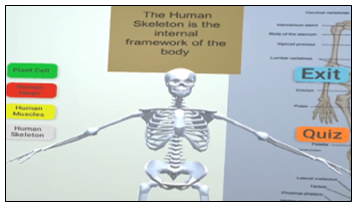

5.3. Biology Lab Scene

The Biology lab was built to help students learn about and interact with biological organs virtually, since real organs are hard to handle in real life and costly to obtain. In this interactive and informative lab scene, the user can interact with different virtual biological objects: a human muscle, a human heart, a human skeleton, and a plant cell. Triggering an object displays an information box about it.
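The trigger-to-information-box behavior described above can be sketched as a lookup table keyed by object. The object identifiers and texts below are hypothetical placeholders. The rule that the quiz unlocks once every object has been triggered is an assumption, loosely based on the later remark that students take the quiz after recognizing all the elements.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InfoBox:
    title: str
    text: str


# Placeholder texts; the application's actual information is not reproduced.
BIOLOGY_INFO = {
    "muscle": InfoBox("Human Muscles", "<information about the human muscles>"),
    "heart": InfoBox("Human Heart", "<information about the human heart>"),
    "skeleton": InfoBox("Human Skeleton", "<information about the skeleton>"),
    "plant_cell": InfoBox("Plant Cell", "<information about the plant cell>"),
}


class BiologyLab:
    """Tracks which objects were triggered and which box is on screen."""

    def __init__(self, info: dict[str, InfoBox]) -> None:
        self._info = info
        self.visible: Optional[InfoBox] = None
        self.triggered: set[str] = set()

    def on_trigger(self, object_id: str) -> InfoBox:
        """Show the information box of the triggered object."""
        if object_id not in self._info:
            raise KeyError(f"no information registered for {object_id!r}")
        self.visible = self._info[object_id]
        self.triggered.add(object_id)
        return self.visible

    def quiz_unlocked(self) -> bool:
        # Assumed rule: the quiz opens once every object has been explored.
        return self.triggered == set(self._info)
```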

Figure 9 shows the human muscle scene, where students see a 3D virtual model of the human muscles. Guided by the virtual tutor, the student is asked to trigger the muscle model, which displays information about the human muscles in the information box.

Figure 11: Human Skeleton Scene

The human heart scene, shown in Figure 10, includes a 3D model of the human heart. Triggering this model displays general information about the human heart, and the later quiz asks students questions related to this information.

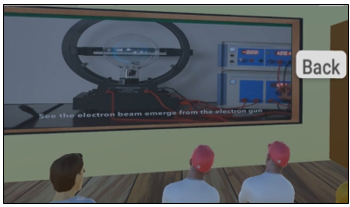

Figure 13: Prerecorded Physics Lecture Scene

Figure 14: Informative Embedded System Lab Scene

The human skeleton scene in the Biology lab displays the human skeleton shown in Figure 11. In this scene, students are asked to trigger the skeleton, which displays an information box about it.

Moreover, the plant cell scene, shown in Figure 12, includes a 3D model of a plant cell in which students can clearly see the cell’s small components, making them easier to understand. Triggering the plant cell displays an information box about it.

After exploring all the elements in the Biology lab, students can take a five-question quiz to test their understanding. The score scene is then displayed; it shows only the quiz grade, since the Biology virtual lab has no experimental part.

5.4. Pre-recorded Physics Lecture Scene

A pre-recorded Physics lecture scene was created to present a Physics experiment that requires expensive equipment. Since some educational institutions cannot afford such equipment, this feature offers a lower-cost way to visualize what is taught in class. The recorded experiment in this scene is the “Charge to Mass Ratio experiment” [36]. As shown in Figure 13, the scene combines animated 3D characters with a 2D video.
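The paper does not give the physics behind the recorded experiment. Suppose it is the common undergraduate version, in which electrons are accelerated through a potential difference $V$ and then move on a circle of radius $r$ in a uniform magnetic field $B$. Under that assumption, the measured ratio follows from two standard relations. The energy gained from the accelerating voltage gives the speed, and the magnetic force supplies the centripetal force:

$$
eV = \tfrac{1}{2} m v^{2}, \qquad evB = \frac{m v^{2}}{r}
\;\Longrightarrow\; v = \frac{eBr}{m}
\;\Longrightarrow\; \frac{e}{m} = \frac{2V}{B^{2} r^{2}}
$$

The experiment therefore only needs measurements of $V$, $B$, and $r$ to obtain $e/m$.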

5.5. Informative Lab Scene

In the informative lab scene, shown in Figure 14, the user can visit an embedded systems lab through a real 360° photograph taken with a Ricoh Theta S 360° camera. Floating buttons were added to the scene; when the user presses one, a clear image of a piece of lab equipment is shown together with its description. This lab helps students learn how this equipment is used in real life.
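Placing a floating button over a given piece of equipment requires turning a point in the 360° photo into a position around the viewer. The sketch below assumes the photo is stored in the equirectangular (latitude-longitude) layout that stitched 360° images commonly use. It also assumes a Unity-like frame with +y up and the image center straight ahead along +z. Depending on how the photo sphere is textured, the horizontal axis may need to be mirrored. The function names are hypothetical.

```python
from __future__ import annotations

import math


def equirect_to_direction(u: float, v: float, width: int,
                          height: int) -> tuple[float, float, float]:
    """Map pixel (u, v) of an equirectangular 360° image to a unit direction.

    Assumed convention: u grows left to right, v grows top to bottom, the
    image center looks along +z, and +y is up.
    """
    lon = (u / width - 0.5) * 2.0 * math.pi   # -pi .. pi around the viewer
    lat = (0.5 - v / height) * math.pi        # +pi/2 at the top edge
    return (math.cos(lat) * math.sin(lon),
            math.sin(lat),
            math.cos(lat) * math.cos(lon))


def hotspot_position(u: float, v: float, width: int, height: int,
                     radius: float = 5.0) -> tuple[float, float, float]:
    """World position of a button placed over pixel (u, v).

    The radius should be smaller than the photo sphere's radius so the
    button is drawn in front of the image.
    """
    x, y, z = equirect_to_direction(u, v, width, height)
    return (radius * x, radius * y, radius * z)
```

Because the button lies on the same ray as the photographed equipment, it stays visually attached to that equipment wherever the user turns their head.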

Figure 15: Live-streaming Scene

5.6. Live-streaming Scene

The live-streaming scene, shown in Figure 15, lets the user attend live 360° online classes virtually after checking the lecture times on the provided schedule. To use the live-streaming feature, the PC must be connected to the Wi-Fi network provided by the Ricoh Theta S 360° camera. This feature effectively increases the number of available seats in classes that are fully booked in real life.
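The paper does not describe the format of the lecture schedule. The sketch below assumes a simple weekly timetable and shows how the scene could tell which lectures are live now and which one starts next today. Connecting to the camera’s Wi-Fi and receiving the stream are outside its scope. `LiveLecture`, `live_now`, and `next_today` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, time
from typing import Optional


@dataclass(frozen=True)
class LiveLecture:
    """One weekly slot in the streaming schedule (hypothetical format)."""
    course: str
    weekday: int  # Monday == 0, matching datetime.weekday()
    start: time
    end: time     # exclusive


def live_now(schedule: list[LiveLecture], now: datetime) -> list[LiveLecture]:
    """Lectures whose streaming window contains the moment `now`."""
    t = now.time()
    return [lec for lec in schedule
            if lec.weekday == now.weekday() and lec.start <= t < lec.end]


def next_today(schedule: list[LiveLecture], now: datetime) -> Optional[LiveLecture]:
    """Earliest lecture later today that has not started yet, if any."""
    t = now.time()
    upcoming = [lec for lec in schedule
                if lec.weekday == now.weekday() and lec.start > t]
    return min(upcoming, key=lambda lec: lec.start, default=None)
```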

5.7. Campus Tour Scenes

The Campus Tour consists of multiple scenes in which the user can take a virtual tour of the campus. The user can browse a gallery of 360° images and videos of the campus facilities, including activities held on campus. All of the 360° photos and videos were taken with the Ricoh Theta S 360° camera to make the tour as close to reality as possible. One advantage of this tour is that it offers a virtual orientation, helping prospective students get to know a university or school campus before applying. The tour also helps new students become familiar with the campus facilities, and it allows students who missed campus activities to experience them virtually as if they had been there.

5.8. Virtual Tutor

A virtual tutor, shown in Figure 16, was created to guide the user inside the application. It is programmed to give immediate instructions and feedback based on the user’s actions. For example, it instructs the user on how to perform the experiment in the Chemistry lab and says “well done” when the user passes it. It also tells the user which button to click or which movement to make to perform each task.
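The tutor’s behavior can be sketched as a table that maps user actions (events) to immediate instructions or feedback. The event names and messages below are hypothetical examples modeled on the behaviors described above. The application’s actual events and wording are not documented.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical event names and messages; the application's actual events
# and wording are not documented in the paper.
TUTOR_MESSAGES = {
    "enter_chemistry_lab": "Pick up a piece of Sodium and drop it into the water.",
    "sodium_dropped_on_floor": "Bend down and pick the Sodium up again.",
    "experiment_passed": "Well done!",
    "experiment_failed": "Not quite. Try the experiment again.",
}


class VirtualTutor:
    """Maps user actions (events) to immediate instructions or feedback."""

    def __init__(self, messages: dict[str, str]) -> None:
        self.messages = messages
        self.history: list[str] = []  # everything the tutor has said so far

    def on_event(self, event: str) -> Optional[str]:
        """Return the tutor's reply to an event, or None if it stays silent."""
        message = self.messages.get(event)
        if message is not None:
            self.history.append(message)
        return message
```

Keeping the messages in data rather than code lets instructions be edited or translated without changing the tutor’s logic.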

5.9. Testing and Evaluation

To evaluate the performance of the application and verify that it meets the design specifications, several methods were applied to both the hardware and the software. In the first method, we went through the application step by step and checked whether every scene operated correctly. At the same time, the hardware components, such as the sensors, were checked to make sure they worked and tracked correctly. All bugs and errors found in this process were fixed.

The second method was to have multiple users evaluate the application and to collect their feedback on their experience and the issues they faced. This covered technical aspects such as the functionality of the buttons, effects, clicks, and animations, and the response time of actions. The application was tested by more than 50 students and 20 instructors from our university, the American University of the Middle East; by 16 instructors and 12 students at BioSMART, the Second International Conference on Bio-engineering for Smart Technologies, held in Paris; and by over 200 people in various positions at the Knowledge Summit held in Dubai, i.e., close to 300 testers in total. Most of the feedback supported the idea of a fully interactive educational application for practical and informative learning. On the other hand, some users were concerned about the difficulty of navigating the virtual environment with the Leap Motion gesture controller. This concern is understandable, since the Leap Motion controller takes some practice to get used to. Some people rejected the idea of replacing real teaching with VR teaching. However, this application is a supplementary educational tool meant to reduce some educational issues, so it supports, rather than replaces, real teaching. Finally, it was important to take this feedback into account to give users a better experience and to improve the quality and performance of the application. As future work, the application will be tested with people with disabilities, when possible, to assess their performance and their acceptance of the application.

5.10. New Work

Beyond what was presented at the BioSMART conference, several upgrades were added to the application. A database was created in C# to collect data and results in one place so they can be easily accessed and tracked. The database keeps a profile for each user, whether instructor or student. It helps users see how well they are doing and helps instructors evaluate students easily based on their reports. As shown in Figure 17, each user’s folder contains three files: the user information file, the Chemistry lab scene scores file, and the Biology lab scene scores file. The user information file contains the user’s username, email, and password, while the scores files contain only numbers representing the score of each experiment and quiz in the corresponding scene. The database could also be used to report, for example, a user’s activity and attendance of live-streamed classes and pre-recorded lectures. However, it is currently a basic database, and future upgrades will add more advanced features such as these.
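The paper states that the database was written in C#. The sketch below uses Python only to illustrate the per-user folder layout described for Figure 17. The file names and the JSON format are assumptions. One technique is deliberately swapped in: instead of storing the password itself, the user information file stores a salted PBKDF2 hash, and passwords are checked by recomputing that hash.

```python
from __future__ import annotations

import hashlib
import hmac
import json
import os
from pathlib import Path

# Hypothetical names for the three files in each user's folder.
INFO_FILE = "user_info.json"
CHEM_FILE = "chemistry_scores.json"
BIO_FILE = "biology_scores.json"


def _hash_password(password: str, salt: bytes) -> str:
    # Swapped-in technique: a salted PBKDF2 hash instead of the raw password.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000).hex()


def create_user(root: Path, username: str, email: str, password: str) -> Path:
    """Create <root>/<username>/ with the info file and two empty score files."""
    folder = root / username
    folder.mkdir(parents=True, exist_ok=False)  # fail if the user already exists
    salt = os.urandom(16)
    info = {"username": username, "email": email, "salt": salt.hex(),
            "password_hash": _hash_password(password, salt)}
    (folder / INFO_FILE).write_text(json.dumps(info))
    for score_file in (CHEM_FILE, BIO_FILE):
        (folder / score_file).write_text(json.dumps({}))  # numbers only
    return folder


def record_score(root: Path, username: str, score_file: str,
                 item: str, score: int) -> None:
    """Store one experiment or quiz score, e.g. ("Experiment 1", 10)."""
    path = root / username / score_file
    scores = json.loads(path.read_text())
    scores[item] = score
    path.write_text(json.dumps(scores))


def check_password(root: Path, username: str, password: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    info = json.loads((root / username / INFO_FILE).read_text())
    candidate = _hash_password(password, bytes.fromhex(info["salt"]))
    return hmac.compare_digest(candidate, info["password_hash"])
```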

6. Conclusion

In conclusion, technological progress has spread the use of virtual reality to several fields, including education. Accordingly, a virtual reality application for educational purposes such as practical learning was built to address some educational issues. This solution aims to facilitate learning and teaching: it is intended to increase students’ understanding and attention and to give teachers an easier way to deliver information. In addition, practicing experiments virtually should prevent or reduce dangerous mistakes in labs, allowing students to practice hazardous experiments safely. Equipment shortages are also eased, since the equipment is provided inside the virtual environment. Moreover, students with disabilities can take part in experiments with fewer mobility difficulties. Furthermore, the use of virtual reality makes acquiring information more engaging. To achieve this, the Oculus Rift virtual reality headset, the Leap Motion motion sensor and gesture controller, and the Ricoh Theta S 360° camera were integrated with the Unity3D game engine to produce an educational tool that can help develop the educational field.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

This project was supported and funded by the American University of the Middle East (AUM), where we carried out the project at the Research and Innovation Center with endless support from the professors, instructors, and engineers. We would like to express our gratitude to them for assisting us and sharing their pearls of wisdom with us to complete this project.

- S. AlAwadhi, N. AlHabib, D. Murad, F. AlDeei, M. AlHouti, T. Beyrouthy, S. AlKork, “Virtual reality application for interactive and informative learning,” 2017 2nd International Conference on Bio-engineering for Smart Technologies (BioSMART), Paris, 2017, pp. 1-4. doi: 10.1109/BIOSMART.2017.8095336

- G. Leman and W. Tony, “Virtual worlds as a constructivist learning platform: evaluations of 3D virtual worlds on design teaching and learning,” Journal of Information Technology in Construction (ITcon), pp. 578-593, 2008.

- P. Brey, “Virtual Reality and Computer Simulation,” The handbook of information and computer ethics, pp. 361-363, 2008.

- G. Burdea and P. Coiffet, Virtual Reality Technology, Canada: John Wiley & Sons, 2003.

- G. V. B. Yuri Antonio, “Overview of Virtual Reality Technologies,” Southampton, United Kingdom, 2013.

- G. Daniel, “Will gesture recognition technology point the way?,” vol. 37, no. 10, pp. 20-23, 2004.

- N. Rachel, “Armband adds a twitch to gesture control,” New Scientist, vol. 217, no. 2906, p. 21, 2013.

- X. Zhang, Z. Ye and L. Jin, “A New Writing Experience: Finger Writing in the Air Using a Kinect Sensor,” vol. 20, no. 4, pp. 85-93, 2013.

- Higgins, Jason Andrew, Benjamin E. Tunberg Rogoza, and Sharvil Shailesh Talati. “Hand-Held Controllers with Capacitive Touch Sensors for Virtual-Reality Systems.” U.S. Patent Application 14/737,162, 2016.

- “Leap Motion Unity Overview,” Leap motion, 2016. [Online]. Available: https://leapmotion.com.

- L. Prasuethsut, “Why HTC Vive won our first VR Headset of the Year award,” WAREABLE, 2 November 2016. [Online]. Available: https://www.wareable.com.

- G. Carey, “Spec Showdown: Oculus Rift vs. Samsung Gear VR,” 6 January 2016. [Online]. Available: http://www.digitaltrends.com.

- “Introducing Oculus Ready PCs,” 2016. [Online]. Available: https://www.oculus.com.

- B. Lang, “Oculus Rift DK2 Pre-order, Release Date, Specs, and Features,” Road to VR, 5 October 2016. [Online]. Available: http://www.roadtovr.com.

- “Cardboard,” Google, 2016. [Online]. Available: https://vr.google.com.

- “Be a part of 360 Spherical Movement,” Bublcam, 2015. [Online]. Available: https://www.bublcam.com.

- “360fly 4K Specifications,” 360fly, 2016. [Online]. Available: https://360fly.com.

- “GEAR 360,” Samsung, 2016. [Online]. Available: http://www.samsung.com.

- “LG 360 CAM,” LG, 2016. [Online]. Available: http://www.lg.com.

- “High-spec model that captures all of the surprises and beauty from 360°,” THETA, 2016. [Online]. Available: https://theta360.com.

- J. Kaplan and Y. Nicole, “Open Wonderland: An Extensible Virtual World Architecture,” vol. 15, no. 5, pp. 38-45, 2011.

- G. A. Kaminka, M. M. Veloso, S. Schaffer, C. Sollitto, R. Adobbati, R. Adobbati, A. Scholer and S. Tejada, “GameBots: a flexible test bed for multiagent team research,” Communications of the ACM – Internet abuse in the workplace and Game engines in scientific research, vol. 45, no. 1, pp. 43-45, 2002.

- “Unity 3D,” Unity 3D, 2016. [Online]. Available: https://unity3d.com.

- J. Orlosky and M. Weber, “An Interactive Pedestrian Environment Simulator for Cognitive Monitoring and Evaluation,” pp. 57-60, 2015.

- N. Owano, “Thalmic Labs’ Alpha users explore Myo with Oculus,” 2014.

- “Titans of space,” 2016. [Online]. Available: http://www.titansofspacevr.com.

- V. M. Travassos and M. C. Ferreira, “Virtual labs in electrical engineering education – The VEMA environment,” Information Technology Based Higher Education and Training, 2014.

- C. Smith, “Chemistry Lab VR,” 2016. [Online]. Available: https://devpost.com.

- “Leap Motion Unity Overview,” Leap motion, 2016. [Online]. Available: https://leapmotion.com.

- “Unity 3D,” Unity 3D, 2016. [Online]. Available: https://unity3d.com.

- “Curved UI – VR Ready Solution To Bend / Warp Your Canvas!,” Unity 3D, 2016. [Online]. Available: https://www.unity3d.com

- “High-spec model that captures all of the surprises and beauty from 360°,” THETA, 2016. [Online]. Available: https://theta360.com.

- C. Bobbert, “Solvation and chemical reaction of sodium in water clusters,” The European Physical Journal D – Atomic, Molecular, Optical and Plasma Physics, vol. 16, no. 1, pp. 95–97, 2001.

- J. Clark, “REACTING ALCOHOLS WITH SODIUM,” 2009. [Online]. Available: http://www.chemguide.co.uk/.

- Repp, Julia, Christine Böhm, JR Crespo López-Urrutia, Andreas Dörr, Sergey Eliseev, Sebastian George, Mikhail Goncharov, “PENTATRAP: a novel cryogenic multi-Penning-trap experiment for high-precision mass measurements on highly charged ions.” Applied Physics B 107, no. 4 (2012): 983-996.

- Al Kork SK, Denby B, Roussel P, Chawah P, Buchman L, Adda-Decker M, Xu K, Tsalakanidou F, Kitsikidis A, Dagnino FM, Ott M, Pozzi F, Stone M, Yilmaz E, Uğurca D, Şahin C “A novel human interaction game-like application to learn, perform and evaluate modern contemporary singing: “human beat box””. In: Proceedings of the 10th international conference on computer vision theory and applications (VISAPP2015), 2015

- Ulmer, S., C. Smorra, A. Mooser, Kurt Franke, H. Nagahama, G. Schneider, T. Higuchi et al. “High-precision comparison of the antiproton-to-proton charge-to-mass ratio.” Nature 524, no. 7564 (2015): 196.

- Al Kork S, Amirouche F, Abraham E, Gonzalez M. “Development of 3D Finite Element Model of Human Elbow to Study Elbow Dislocation and Instability”. ASME Summer Bioengineering Conference, Parts A and B, pp. 625-626, 2009.

- E. Saar, “Touching reality: Exploring how to immerse the user in a virtual reality using a touch device,” p. 43, 2014.

- D. D. Carlos, Corneal Ablation and Contact Lens Fitting: Physical, Optical and Visual Implications, Spain: Universidad de Valladolid, 2009.

- Patrick CHAWAH et al., “An educational platform to capture, visualize and analyze rare singing”, Interspeech 2014 conference, Singapore, September 2014
