Predicting Smoking Status Using Machine Learning Algorithms and Statistical Analysis

Volume 3, Issue 2, Page No 184–189, 2018

Adv. Sci. Technol. Eng. Syst. J. 3(2), 184–189 (2018);

DOI: 10.25046/aj030221


Keywords: Machine Learning, Smoker Status Prediction, Healthcare

Smoking has been proven to negatively affect health in a multitude of ways. As of 2009, smoking has been considered the leading cause of preventable morbidity and mortality in the United States, continuing to plague the country’s overall health. This study investigates the viability and effectiveness of several machine learning algorithms for predicting the smoking status of patients based on their blood test results and vital readings. The analysis is divided into two parts. In part 1, we use One-way ANOVA analysis with the SAS tool to test for statistically significant differences in blood test readings between smokers and non-smokers. The results show that the difference in INR, which measures the effectiveness of anticoagulants, was significant and in favor of non-smokers, which further confirms the health risks associated with smoking. In part 2, we use five machine learning algorithms, namely Naïve Bayes, MLP, Logistic regression classifier, J48, and Decision Table, to predict the smoking status of patients. To compare the effectiveness of these algorithms we use four measures: Precision, Recall, F-measure, and Accuracy. The results show that the Logistic algorithm outperformed the four other algorithms with Precision, Recall, F-Measure, and Accuracy of 83%, 83.4%, 83.2%, and 83.44%, respectively.

1. Introduction

As of 2009, smoking has been considered the leading cause of preventable morbidity and mortality in the United States, continuing to plague the country’s overall health [1]. Patients admitted to a hospital are often asked their smoking status upon admission, but a simple yes/no answer can be misleading. Patients who answer ‘no’ may be former smokers or may have quit only recently. A ‘no’ response also does not account for the smoking status of household members, which can mean continued exposure to secondhand smoke. Lastly, a patient who answers ‘no’ could still be exposed to tobacco in other forms, such as chewing tobacco. This study aims to use machine learning algorithms to predict a patient’s smoking status based on medical data collected during their stay at a medical center. In the future, these predictive models may be useful for evaluating the smoking status of a patient who is unable to speak.

Smoking has been proven to negatively affect health in a multitude of ways. Smoking and secondhand smoke can aggravate existing health conditions and have been linked as the cause of others. Smoking and secondhand smoke often trigger asthma attacks in people with asthma, and almost every case of Buerger’s disease has been linked to some form of tobacco exposure. Various forms of cancer are caused by smoking, secondhand smoke, and other tobacco products [2]. In addition to causing certain cancers, most commonly lung and gum cancer, smoking and secondhand smoke also hinder the human body’s ability to fight cancer. Gum disease is often caused by chewing tobacco products, and continuing to smoke after gum damage can inhibit the body, including the gums, from repairing itself. Smoking, secondhand smoke, and tobacco products contribute to, or impede recovery from, the following additional diseases or health conditions: chronic obstructive pulmonary disease (COPD), diabetes, heart disease, stroke, HIV, mental health conditions such as depression and anxiety, pregnancy complications, and vision loss or blindness [3].

smokers population, which in turn can help better treat and handle patients with previous or current tobacco use more effectively.

Machine learning techniques are being applied to a growing number of domains, including the healthcare industry. The fields of machine learning and statistics are closely related but differ in terminology, emphasis, and focus. In this work, machine learning is used to predict the smoking status of patients using several classification algorithms: Multilayer Perceptron, Naïve Bayes, Logistic Regression, J48, and Decision Table. The algorithms are used with the objective of predicting a patient’s smoking status based on vitals. To determine whether smoking has negative effects on vitals, One-way ANOVA analysis with the SAS tool is used repeatedly to test whether different blood test readings are statistically different between smokers and non-smokers. The dataset used in this study was obtained from a community hospital in the Greater Pittsburgh Area [4]. The dataset consists of 40,000 patients and 33 attributes.

The remainder of this paper is structured as follows: Section 2 discusses related work and the dataset used. Section 3 presents analytic methods and results. Section 4 discusses the results and provides further recommendations, and Section 5 concludes the study.

2. Related Work

i2b2 (Informatics for Integrating Biology & the Bedside) is a national center for biomedical computing based at Partners HealthCare System in Boston, Massachusetts [5]. i2b2 announced an open smoking classification task using discharge summaries. Data was obtained from a hospital and covered outpatient, emergency room, and inpatient domains. The smoking status of each discharge summary was evaluated based on a number of criteria. Every patient was classified as “smoker”, “non-smoker”, or “unknown”. If a patient was a smoker and temporal hints were present, the patient could be further classified as “past smoker” or “current smoker”. Summaries without temporal hints remained classified as “smoker”.

Uzuner et al. utilized the i2b2 NLP challenge smoking classification task to determine the smoking status of patients based on their discharge records [6]. Micro-averaged and macro-averaged precision, recall, and F-measure were the metrics used to evaluate performance in the study. A total of 11 teams with 23 different submissions used a variety of predictive models to identify smoking status through the challenge, with 12 submissions scoring F-measures above 0.84. Results showed that when a decision is made on the patient’s smoking status based on the explicitly stated information in medical discharge summaries, human annotators agreed with each other more than 80% of the time. In addition, the results showed that the discharge summaries express smoking status using a limited number of key textual features, and that many of the effective smoking status identifiers benefit from these features.

McCormick et al. also utilized the i2b2 NLP challenge smoking classification task, applying several predictive models to patient data to classify a patient’s smoker status [7]. A classifier relying on semantic features from an unmodified version of MedLEE (a clinical NLP engine) was compared to another classifier that relied on lexical features. Both classifiers were also compared to the performance of rule-based symbolic classifiers. The supervised classifier trained on MedLEE output was competitive with the top-performing classifier in the i2b2 NLP Challenge, with micro-averaged precision of 0.90, recall of 0.89, and F-measure of 0.89.

Dumortier et al. studied a number of machine learning approaches that use situational features associated with urges to smoke during a quit attempt in order to accurately classify high-urge states. The authors used a number of classifiers, including Bayes, discriminant analysis, and decision tree learning methods. Data was collected from over 300 participants. Sensitivity, specificity, accuracy, and precision measures were used to evaluate the performance of the selected classifiers. Results showed that algorithms based on feature selection achieved high classification rates with only a few features. The classification tree method (accuracy = 86%) outperformed the naive Bayes and discriminant analysis methods. Results also suggest that machine learning can be helpful for dealing with smoking cessation matters and for predicting smoking urges [8].

3. Data Analysis and Results

The analysis is divided into two parts. In part 1, we use One-way ANOVA analysis with the SAS tool to test for statistically significant differences in blood test readings between smokers and non-smokers. In part 2, we use five machine learning algorithms (Naïve Bayes, MLP, Logistic regression classifier, J48, and Decision Table) to predict the smoking status of patients. To compare the effectiveness of these algorithms we use four metrics, namely Precision, Recall, F-measure, and Accuracy.

3.1. Statistical Analysis using ANOVA Test

In this work, One-way ANOVA analysis with the SAS tool [9] was used repeatedly to determine whether different blood test readings from the patients are statistically different between smokers and non-smokers. Our hypotheses are as follows.

Null Hypothesis (H0): There is no statistical difference in blood test readings between smokers and non-smokers.

Alternative Hypothesis (H1): There is a statistical difference in blood test readings between smokers and non-smokers.

The One-way ANOVA test is based on a 0.05 significance level, and the decision rule is based on the p-value from the SAS outputs. If the p-value is less than 0.05, the null hypothesis is rejected and the alternative hypothesis is accepted. On the other hand, if the p-value is greater than 0.05, the null hypothesis is accepted. The analysis is repeated for all blood tests, each of which is listed in Table 1 along with a brief description of its significance.
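The test statistic and decision rule can be sketched as follows. This is a minimal illustration in Python rather than the SAS procedure used in the study: the readings are hypothetical values invented for the example, the function names are our own, and the p-value itself would still come from a statistics package because the F distribution is not part of the Python standard library.

```python
from statistics import mean

def one_way_anova_f(*groups):
    """Return (F statistic, df between groups, df within groups)."""
    all_values = [x for group in groups for x in group]
    grand_mean = mean(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of each reading around its own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

def decide(p_value, alpha=0.05):
    """Decision rule from the text, using the paper's wording."""
    if p_value < alpha:
        return "Reject the Null Hypothesis"
    return "Accept the Null Hypothesis"

# Hypothetical readings for one blood test (not taken from the study data).
smokers = [1.0, 1.1, 0.9, 1.0]
non_smokers = [1.4, 1.5, 1.3, 1.4]
f_stat, df_between, df_within = one_way_anova_f(smokers, non_smokers)
```

With only two groups, as here, the one-way ANOVA F statistic is the square of the pooled two-sample t statistic, so either test leads to the same decision.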

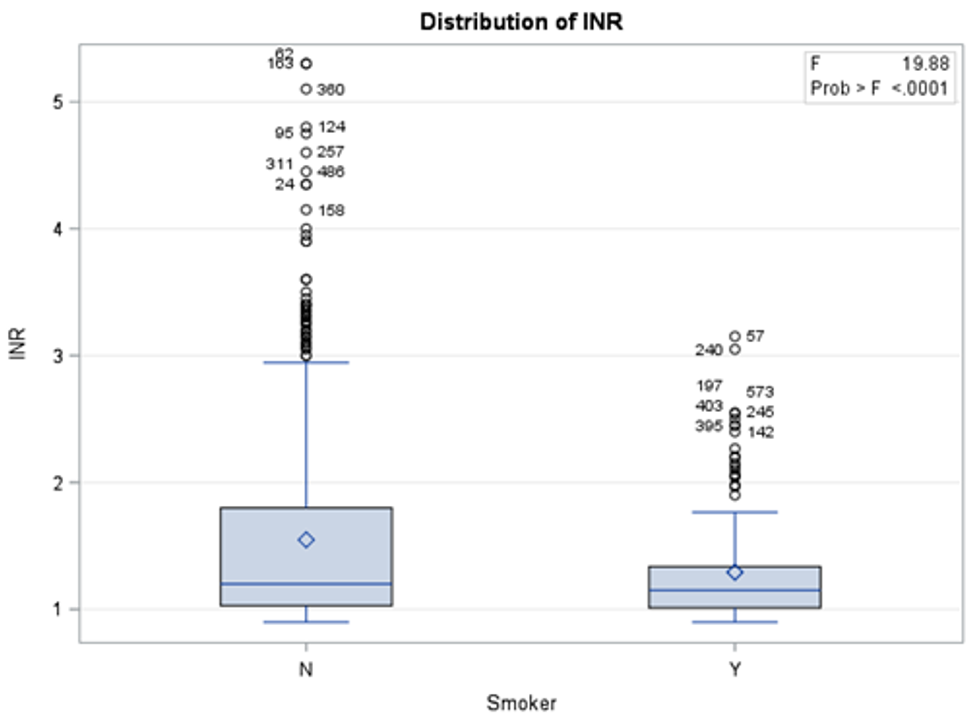

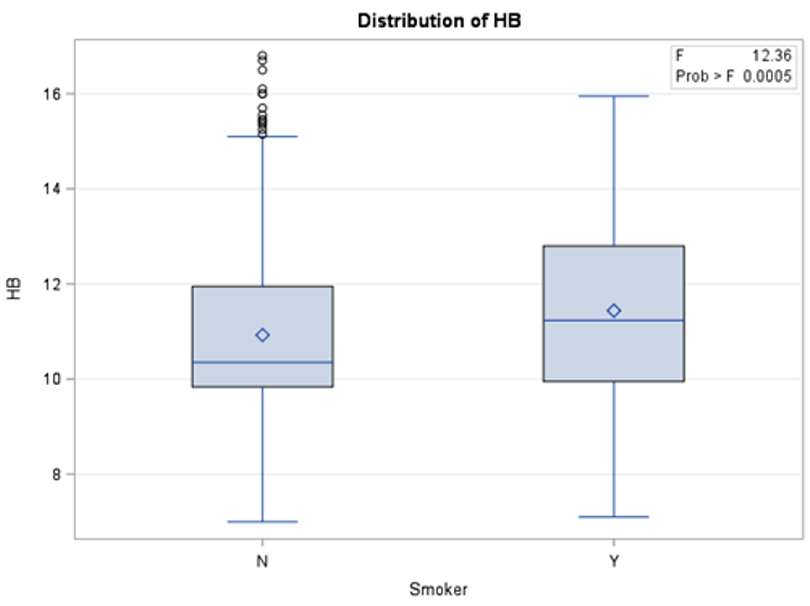

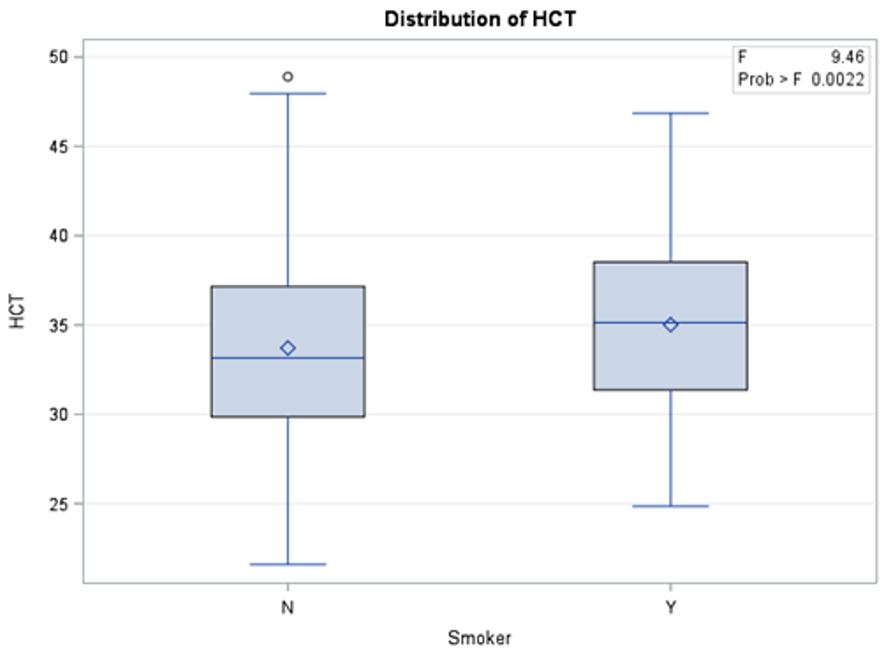

The results in Table 2 show that there is a significant statistical difference between smokers and non-smokers when it comes to three blood tests: INR, HB, and HCT. To investigate whether these differences were in favor of smokers or non-smokers, descriptive analysis was used (Figures 1, 2, and 3) to show the distribution of each blood test between smokers and non-smokers.

Figure 1 shows that non-smokers have higher values of INR than smokers. According to Mayo Clinic [10], an INR range of 2.0 to 3.0 is generally an effective therapeutic range for anticoagulants. This suggests that INR values for non-smokers fall closer to the effective therapeutic range than those for smokers.

Table 1: Lab value definitions

| Blood Test | Significance |
| --- | --- |
| INR | Measures the effectiveness of anticoagulants |
| Platelets | Involved in clotting |
| Glucose | Main source of energy and sugar |
| RBC | Red blood cells: carry oxygen and waste products |
| HB | Hemoglobin: important protein in the RBCs |
| HCT | Hematocrit: measures the percentage of RBCs in the blood |
| RDW | Red blood cell distribution width |

Table 2: Consolidated statistical results

| Blood Test | P-Value | Decision |
| --- | --- | --- |
| INR | <0.0001 | Reject the Null Hypothesis |
| Platelets | 0.2935 | Accept the Null Hypothesis |
| Glucose | 0.1559 | Accept the Null Hypothesis |
| RBC | 0.0882 | Accept the Null Hypothesis |
| HB | 0.0005 | Reject the Null Hypothesis |
| HCT | 0.0022 | Reject the Null Hypothesis |
| RDW | 0.3509 | Accept the Null Hypothesis |

Figure 1: Distribution of INR blood test results between smokers and non-smokers


Figure 2 shows that non-smokers have lower values of HB than smokers. According to Mayo Clinic, an HB range between 12.0 and 17.5 is considered normal. This shows that although the readings of HB blood tests were statistically different between non-smokers and smokers, the difference was in general within the normal range.

Figure 3 shows that non-smokers have lower values of HCT than smokers. According to Mayo Clinic, an HCT range between 37.0 and 52.0 is considered normal. This shows that although the readings of HCT blood tests were statistically different between non-smokers and smokers, the difference was in general within the normal range.

Figure 2: Distribution of HB blood test results between smokers and non-smokers


Figure 3: Distribution of HCT blood test results between smokers and non-smokers


3.2. Classification Analysis using Machine Learning

In this work, the Waikato Environment for Knowledge Analysis (Weka) (https://www.cs.waikato.ac.nz/ml/weka/) will be utilized to analyze the dataset [11]. The machine learning models utilized in this study include five classification algorithms, namely, Naïve Bayes, Multilayer Perceptron, Logistic, J48, and Decision Table.

3.2.1. Classifiers Description

Table 3 provides a summary of the characteristics and features of the classification algorithms. Naïve Bayes is a popular, versatile algorithm based on Bayes’ Theorem, named after the English mathematician Thomas Bayes. Bayes’ Theorem provides the relationship between the probabilities of two events and the conditional probabilities of those events. The Naïve Bayes classifier assumes that, within a class, the presence of one feature is not related to the presence or absence of another. The Naïve Bayes classifier is well known for its computational efficiency and overall predictive performance [12].
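As an illustration of the independence assumption, the sketch below multiplies a class prior by per-feature likelihoods and normalizes the result, which is Bayes’ Theorem applied under the naïve assumption. The feature names and all probabilities are hypothetical and chosen only for the example, and the sketch handles discrete features only; it is not Weka’s implementation.

```python
def naive_bayes_posterior(priors, likelihoods, observed):
    """Posterior P(class | observed features) under the naive assumption.

    priors:      {class: P(class)}
    likelihoods: {class: {feature: P(feature present | class)}}
    observed:    list of features present in the instance
    """
    scores = {}
    for cls, prior in priors.items():
        score = prior
        for feature in observed:
            # Independence assumption: likelihoods are simply multiplied.
            score *= likelihoods[cls][feature]
        scores[cls] = score
    total = sum(scores.values())
    return {cls: score / total for cls, score in scores.items()}

# Hypothetical probabilities, not estimated from the study data.
priors = {"smoker": 0.2, "non-smoker": 0.8}
likelihoods = {
    "smoker": {"high_hb": 0.6, "high_hct": 0.5},
    "non-smoker": {"high_hb": 0.3, "high_hct": 0.25},
}
posterior = naive_bayes_posterior(priors, likelihoods, ["high_hb", "high_hct"])
```

In this example the stronger likelihoods for the smoker class exactly offset its lower prior, so the two classes end up equally probable.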

Table 3: Summary of classifier characteristics and features

| Algorithm | Characteristics and Features |
| --- | --- |
| Naïve Bayes | Computationally efficient, assumes independence between the features, needs less training data, works with continuous and discrete data. |
| MLP | Many perceptrons organized into layers; ANN models are trained, not programmed; consists of three layers: input layer, hidden layer, and output layer. |
| Logistic | Multinomial logistic regression model with a ridge estimator |
| J48 | Creates a binary tree, selects the most discriminatory features, and is easy to comprehend |
| Decision Table | Groups class instances based on rules, easy to understand, provides good performance |

Multilayer Perceptron (MLP) is an Artificial Neural Network (ANN) model that maps sets of input data onto sets of suitable output data. ANN models are trained, not programmed. This means that the model learns from a training set of data and applies what it has learned to a new set of data (the test data). The MLP ANN model is similar to a logistic regression classifier, with three layers: input layer, hidden layer, and output layer. The hidden layer exists to create a space in which the input data can be linearly separated. More hidden layers may be used for added benefit and performance. MLP is used here because of its overall performance [13].
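The three-layer structure can be sketched as a single forward pass. This is an illustrative sketch only: the sigmoid activation, the layer sizes, and the weights are assumptions made for the example, and training (for instance by backpropagation) is omitted.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mlp_forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Forward pass: input layer -> one hidden layer -> one output unit."""
    # Each hidden unit re-represents the inputs in a new space.
    hidden = [
        sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    # The output unit is a logistic model over the hidden representation.
    return sigmoid(sum(w * h for w, h in zip(output_weights, hidden)) + output_bias)

# Hypothetical network: 2 inputs, 2 hidden units, 1 output, arbitrary weights.
score = mlp_forward(
    inputs=[0.5, -1.0],
    hidden_weights=[[1.0, -2.0], [0.5, 0.5]],
    hidden_biases=[0.0, 0.1],
    output_weights=[1.5, -1.0],
    output_bias=0.2,
)
# With all weights and biases at zero, every unit outputs sigmoid(0).
untrained = mlp_forward([0.5, -1.0], [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0], [0.0, 0.0], 0.0)
```

The output unit has the same form as a logistic regression model applied to the hidden-layer values, which is the similarity noted in the text.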

The Logistic algorithm is a classifier for building and using a multinomial logistic regression model with a ridge estimator to classify data. According to the Weka documentation, its implementation is slightly modified from the standard logistic regression model, mainly to handle instance weights [14].
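The role of the ridge estimator can be illustrated by writing out the objective that such a model minimizes: the negative log-likelihood plus a penalty on the squared weights. The sketch below assumes a binary class for simplicity (the Weka classifier is multinomial), uses hypothetical data, and defaults the ridge value to the `-R 1.0E-8` setting listed in Table 4; it shows the objective only, not the optimizer.

```python
import math

def ridge_logistic_loss(weights, bias, rows, labels, ridge=1e-8):
    """Negative log-likelihood of a binary logistic model plus a ridge penalty."""
    nll = 0.0
    for x, y in zip(rows, labels):
        z = bias + sum(w * xi for w, xi in zip(weights, x))
        p = 1.0 / (1.0 + math.exp(-z))  # predicted P(y = 1 | x)
        nll -= y * math.log(p) + (1 - y) * math.log(1 - p)
    # The ridge term discourages large coefficients.
    penalty = ridge * sum(w * w for w in weights)
    return nll + penalty

# Hypothetical one-feature data: label 1 = smoker, 0 = non-smoker.
rows = [[1.0], [-1.0]]
labels = [1, 0]
loss_at_zero = ridge_logistic_loss([0.0], 0.0, rows, labels)
penalty_effect = (
    ridge_logistic_loss([1.0], 0.0, rows, labels, ridge=0.5)
    - ridge_logistic_loss([1.0], 0.0, rows, labels, ridge=0.0)
)
```

With a ridge value as small as 1.0E-8 the penalty is negligible unless the coefficients become very large, so it mainly acts as a numerical safeguard.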

The J48 algorithm is a popular implementation of the C4.5 decision tree algorithm. Decision tree models are predictive machine learning models that determine the output value based on the attributes of the input data. Each internal node of a decision tree represents an attribute of the input data. The J48 model creates a decision tree by identifying the attribute of the training set that discriminates instances most clearly. Branches whose instances all share the same class are terminated and assigned that class, while for the remaining cases the algorithm looks for the attribute with the most information gain. When the decision tree is complete, the target value of a new instance is predicted by following the tree according to the instance’s attribute values [15].
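The attribute-selection step can be sketched by computing information gain, the reduction in class entropy obtained by splitting on an attribute. The attribute names and rows below are hypothetical. Note that C4.5 normalizes this quantity into a gain ratio and also handles numeric attributes and pruning, all of which this sketch omits.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())

def information_gain(rows, labels, attribute):
    """Entropy reduction obtained by splitting the rows on one attribute."""
    partitions = {}
    for row, label in zip(rows, labels):
        partitions.setdefault(row[attribute], []).append(label)
    remainder = sum(
        len(part) / len(labels) * entropy(part) for part in partitions.values()
    )
    return entropy(labels) - remainder

# Hypothetical discretized readings, not taken from the study data.
rows = [
    {"hb": "high", "platelets": "normal"},
    {"hb": "high", "platelets": "low"},
    {"hb": "normal", "platelets": "normal"},
    {"hb": "normal", "platelets": "low"},
]
labels = ["smoker", "smoker", "non-smoker", "non-smoker"]
gain_hb = information_gain(rows, labels, "hb")
gain_platelets = information_gain(rows, labels, "platelets")
```

Here `hb` separates the two classes perfectly while `platelets` tells us nothing, so a tree built on this toy data would split on `hb` first.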

Lastly, the Decision Table algorithm utilizes a simple decision table to classify data. For programmers, a decision table is best described as a set of if-then-else statements, and it is less complicated than a flow chart. A decision table groups class instances based on rules. These rules sort through instances and their attributes and classify each instance accordingly. Decision tables are often easier to understand than other algorithm models while providing the necessary performance [16].
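A decision table of this kind can be sketched as a lookup from attribute-value combinations to the majority class seen in training. The attribute names and rows are hypothetical, and falling back to the overall majority class for unseen combinations is an assumption of this sketch. The search for a good attribute subset (the BestFirst option in Table 4) is not shown.

```python
from collections import Counter

def build_decision_table(rows, labels, features):
    """Map each combination of feature values to its majority class."""
    cells = {}
    for row, label in zip(rows, labels):
        key = tuple(row[f] for f in features)
        cells.setdefault(key, Counter())[label] += 1
    table = {key: counts.most_common(1)[0][0] for key, counts in cells.items()}
    default = Counter(labels).most_common(1)[0][0]  # fallback for unseen keys
    return table, default

def classify(table, default, row, features):
    """Look up the rule matching this row, or fall back to the default class."""
    return table.get(tuple(row[f] for f in features), default)

# Hypothetical discretized readings, not taken from the study data.
features = ["inr", "hb"]
rows = [
    {"inr": "low", "hb": "high"},
    {"inr": "low", "hb": "high"},
    {"inr": "high", "hb": "normal"},
    {"inr": "high", "hb": "normal"},
    {"inr": "high", "hb": "high"},
]
labels = ["smoker", "smoker", "non-smoker", "non-smoker", "non-smoker"]
table, default = build_decision_table(rows, labels, features)
seen = classify(table, default, {"inr": "low", "hb": "high"}, features)
unseen = classify(table, default, {"inr": "low", "hb": "normal"}, features)
```

Each table entry reads as one if-then rule, which is why the model is easy to inspect.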

Each model was run with a 66% split, using 66% of the input as the training data and 34% as the test data. All algorithms were run in Weka after preprocessing, using the schemas listed in Table 4.
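A percentage split of this kind can be sketched as follows. The shuffle, the seed, and the rounding of the cut point are assumptions of the sketch and may differ from how Weka orders and divides the instances.

```python
import random

def percentage_split(instances, train_fraction=0.66, seed=1):
    """Shuffle the instances and split them into training and test sets."""
    shuffled = list(instances)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical dataset of 1000 instance indices.
train, test = percentage_split(range(1000))
```

Because the test instances are never seen during training, the reported measures estimate performance on new patients rather than on the training data.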

Table 4: Weka Schema

| Algorithm | Weka Schema Attribute |
| --- | --- |
| Naïve Bayes | weka.classifiers.bayes.NaiveBayes |
| MLP | weka.classifiers.functions.MultilayerPerceptron -L 0.3 -M 0.2 -N 500 -V 0 -S 5 -E 20 -H a |
| Logistic | weka.classifiers.functions.Logistic -R 1.0E-8 -M -1 -num-decimal-places 4 |
| J48 | weka.classifiers.trees.J48 -C 0.25 -M 2 |
| Decision Table | weka.classifiers.rules.DecisionTable -X 1 -S "weka.attributeSelection.BestFirst -D 1 -N 5" |

3.2.2. Preprocessing

Because of limited computing resources, a sample of the large dataset was used for analysis. A few samples were created prior to this study for previous work. The sample contains 534 patients in total: 311 non-smokers, 87 smokers, and 136 patients whose smoker attribute is missing, accounting for 25% of the sample. The ratio of smokers to non-smokers remains relatively consistent with the overall ratio in the full dataset. To account for missing values, we utilized a Weka filter called “ReplaceMissingValues”. This filter replaces missing values in an attribute with the mode (for nominal attributes) or mean (for numeric attributes) of that attribute’s values in the training set.
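Mean and mode replacement can be sketched as follows. This is an illustrative Python version, not the Weka filter itself: the rows are hypothetical, `None` marks a missing value, and every column is assumed to have at least one observed value.

```python
from statistics import mean, mode

def replace_missing_values(rows):
    """Fill None entries with the column mean (numeric) or mode (nominal)."""
    columns = list(rows[0].keys())
    fill = {}
    for col in columns:
        present = [row[col] for row in rows if row[col] is not None]
        is_numeric = all(isinstance(v, (int, float)) for v in present)
        fill[col] = mean(present) if is_numeric else mode(present)
    return [
        {col: (fill[col] if row[col] is None else row[col]) for col in columns}
        for row in rows
    ]

# Hypothetical patient rows, not taken from the study data.
rows = [
    {"hb": 14.0, "smoker": "no"},
    {"hb": None, "smoker": "no"},
    {"hb": 16.0, "smoker": None},
    {"hb": 15.0, "smoker": "yes"},
]
filled = replace_missing_values(rows)
```

Mode replacement assigns every missing nominal value to the most frequent category, which is worth keeping in mind when the attribute being filled is imbalanced.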

After addressing missing values for the class attribute, oversampling was applied to add data for analysis. Oversampling was used to bring the ratio of smokers to non-smokers closer to that of the original dataset. The SMOTE algorithm was used through Weka and applied three times, bringing the total number of instances to about 1000 patients. All preprocessing was done using Weka filters, as listed in Table 5.
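The core idea of SMOTE, creating synthetic minority instances by interpolating between a minority instance and one of its nearest minority neighbours, can be sketched as follows. This is a simplified illustration for numeric features only. The points are hypothetical, and generating one synthetic instance per original instance in each pass (with a small `k`) is an assumption made for the example rather than a statement of the settings used in the study.

```python
import math
import random

def smote(minority, k=5, seed=1):
    """Return one synthetic point per minority point (needs >= 2 points)."""
    rng = random.Random(seed)
    synthetic = []
    for i, point in enumerate(minority):
        others = [p for j, p in enumerate(minority) if j != i]
        # k nearest minority-class neighbours by Euclidean distance.
        neighbours = sorted(others, key=lambda p: math.dist(point, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment point -> neighbour
        synthetic.append([a + gap * (b - a) for a, b in zip(point, neighbour)])
    return synthetic

# Hypothetical minority-class points (two numeric features each).
minority = [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0], [4.0, 5.0]]
augmented = list(minority)
for pass_index in range(3):  # three passes, as in the text
    augmented += smote(augmented, k=2, seed=pass_index)
```

Under these assumptions each pass doubles the minority class, so three passes multiply it by eight. Every synthetic point lies on a segment between two existing minority points.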

Table 5: Weka Filters

| Algorithm | Type | Weka Attribute |
| --- | --- | --- |
| SMOTE | Filter | weka.filters.supervised.instance.SMOTE |
| ReplaceMissingValues | Filter | weka.filters.unsupervised.attribute.ReplaceMissingValues |

3.2.3. Means of Analysis

To evaluate the performance of the machine learning models, four different measures are used, namely Precision, Recall, F-measure, and Accuracy, shown in equations 1-4. Precision is the percentage of instances marked positive that truly are positive. Recall, also referred to as sensitivity, is the percentage of positive instances that are correctly identified. F-measure, or F-score, is the harmonic mean of precision and recall. Accuracy is simply the proportion of all instances that an algorithm classifies correctly.

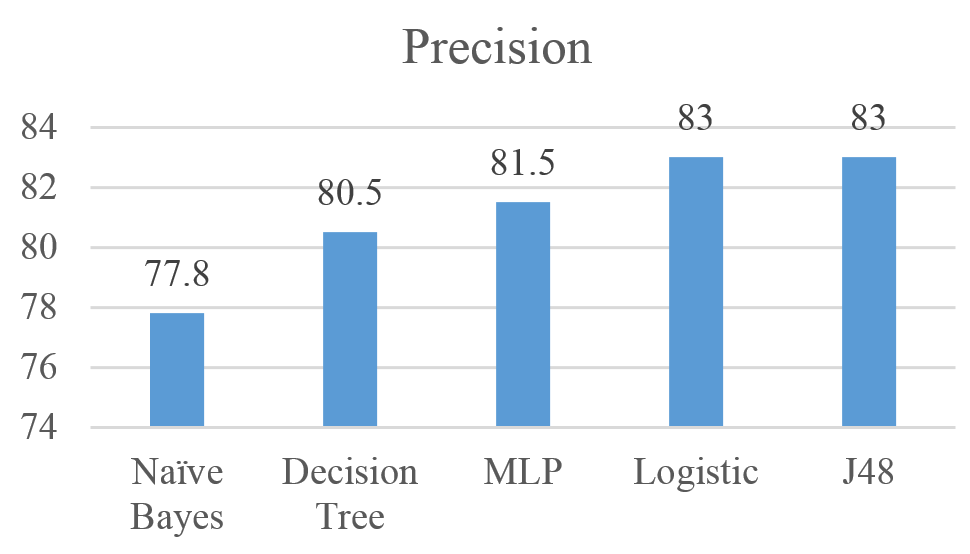
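The four measures can be computed from the counts of a confusion matrix using their standard definitions. The sketch below treats one class as positive and uses hypothetical counts. Values reported for a multi-class or class-weighted average, as some tools produce, would be computed per class and then averaged, which is not shown here.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return (precision, recall, f_measure, accuracy) from confusion counts."""
    precision = tp / (tp + fp)  # marked positive that truly are positive
    recall = tp / (tp + fn)     # actual positives that were found
    f_measure = 2 * precision * recall / (precision + recall)  # harmonic mean
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, f_measure, accuracy

# Hypothetical counts, not taken from the study's results.
precision, recall, f_measure, accuracy = classification_metrics(
    tp=40, fp=10, fn=20, tn=30
)
```

Because the F-measure is a harmonic mean, it always lies between precision and recall and is pulled toward the lower of the two.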

Figure 4 shows the performance of the five algorithms using the Precision measure. Results show that J48 and Logistic achieved the highest Precision with 83%, followed by MLP, Decision Table, and Naïve Bayes with Precision values of 81%, 80.5%, and 77.8%, respectively.

Figure 4: Precision for Naïve Bayes, MLP, Logistic, J48, and Decision Table results


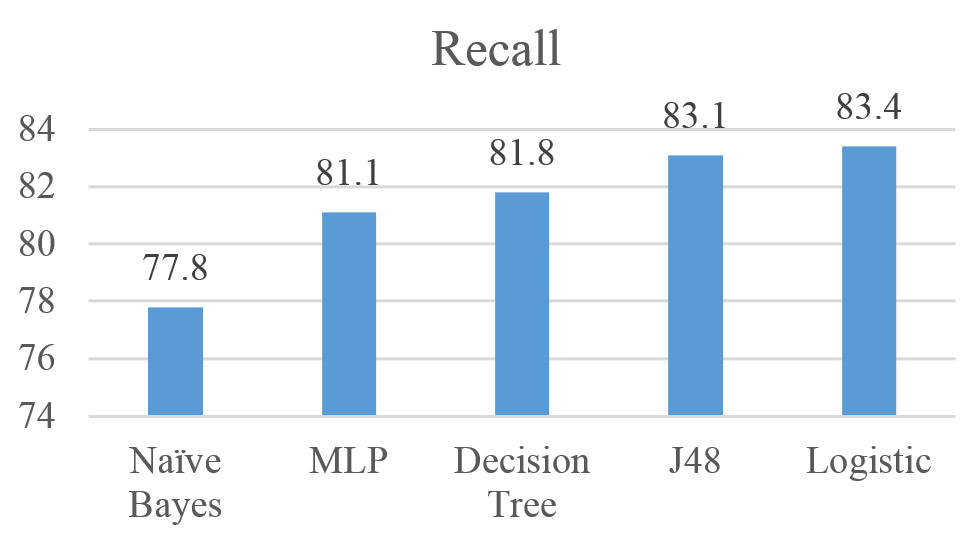

Figure 5 shows the performance of the five algorithms using the Recall measure. Results show that J48 achieved the highest Recall with 83.4%, followed by Logistic, MLP, Decision Table, and Naïve Bayes with Recall values of 83.1%, 81.8%, 81.1%, and 77.8%, respectively.

Figure 5: Recall for Naïve Bayes, MLP, Logistic, J48, and Decision Table results


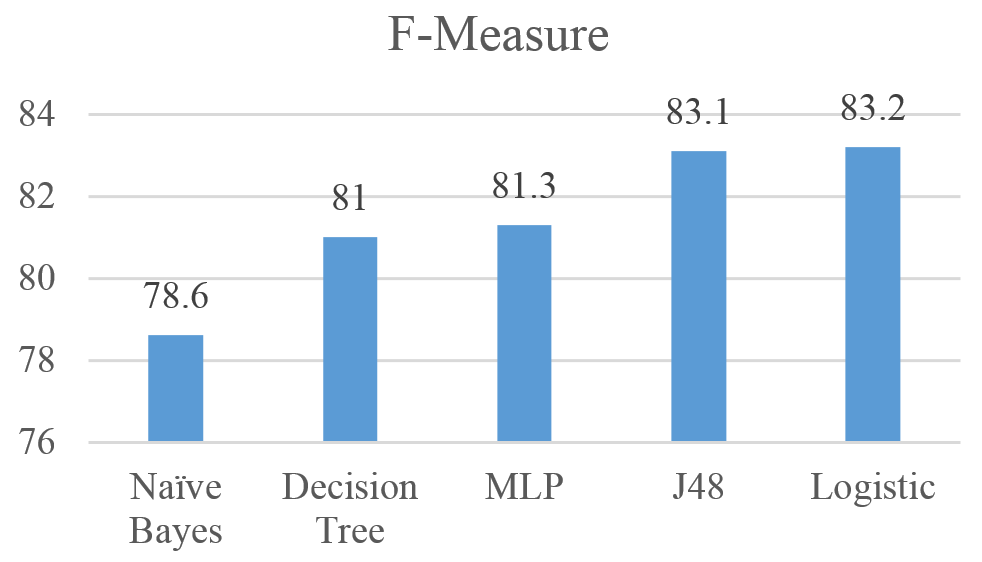

Figure 6 shows the performance of the five algorithms using the F-Measure. Results show that Logistic achieved the highest F-Measure with 83.2%, followed by J48, MLP, Decision Table, and Naïve Bayes with F-Measure values of 83.1%, 81.3%, 81%, and 78.8%, respectively.

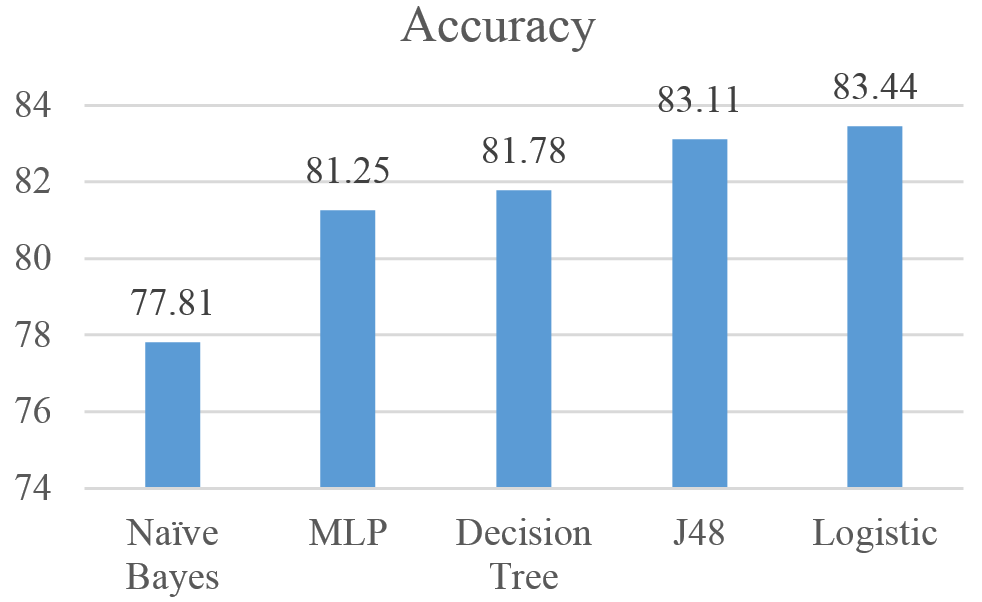

Figure 7 shows the performance of the five algorithms using the Accuracy measure. Results show that Logistic achieved the highest Accuracy with 83.44%, followed by J48, Decision Table, MLP, and Naïve Bayes with Accuracy values of 83.11%, 81.78%, 81.25%, and 77.81%, respectively.

Figure 6: F-measure for Naïve Bayes, MLP, Logistic, J48, and Decision Table results


Figure 7: Accuracy for Naïve Bayes, MLP, Logistic, J48, and Decision Table results


Overall, the results indicate that the five algorithms are relatively reliable when it comes to predicting the smoking status of patients. The Logistic algorithm outperformed the four other algorithms with Precision (83%), Recall (83.4%), F-Measure (83.2%), and Accuracy (83.44%).

4. Recommendations & Further Study

The study addressed the potential of machine learning algorithms to predict smoking status among a patient population. Results showed that such algorithms can predict smoking status with an accuracy of 83.44%. However, this study has a few limitations. There are several items that could be addressed to further this study and improve outcomes, beginning with data preprocessing. Several other methods are available to handle missing values in the dataset. In this study, the ReplaceMissingValues filter was applied in Weka to handle missing values. This filter replaces null values with means and modes from the training set. Using a method such as replacing null values with moving averages could produce results that are more realistic with respect to the actual smoking status of patients. Other methods of handling missing values could also be explored.

Another improvement could be to increase the size of the sample dataset. In this study, a sample of 534 patients was used and the SMOTE algorithm was applied in preprocessing. Using a larger sample could bring the results closer to what would be expected when applying these tests to larger sets of patients. In most cases, there will be more than 534 patient entries to analyze, so evaluating these tests on larger real-world sets could further demonstrate their value.

Lastly, other models may outperform those tested in this study. While the five algorithms tested showed promising results, others may perform better. Clustering models may be a point of interest, as those tested in this study are all classifier models. Many other classifier models are also available, and their results are worth testing.

5. Conclusion

This study showed that five machine learning models can be used reliably to determine the smoking status of patients given blood test and vital reading attributes. These algorithms are Naïve Bayes, MLP, Logistic, J48, and Decision Table. The Logistic algorithm outperformed the other four algorithms with precision, recall, F-Measure, and accuracy of 83%, 83.4%, 83.2%, and 83.44%, respectively.

Using One-way ANOVA analysis with the SAS tool, the study also confirmed that there is a significant statistical difference between smokers and non-smokers when it comes to three blood tests: INR, HB, and HCT. The difference was within the normal range for HB and HCT, but it was in favor of non-smokers for INR, which measures the effectiveness of anticoagulants. In the future, the models could be implemented in hospital systems to identify the smoking status of patients who do not specify it. Also, the findings from SAS confirm the negative health effects of being a smoker.

- Centers for Disease Control and Prevention: https://www.cdc.gov/tobacco/campaign/tips/diseases/?gclid=CjwKEAjw5_vHBRCBtt2NqqCDjiESJABD5rCJcbOfOo7pywRlcabSxkzh0VIifcvYI05u-hQ9SsI9RRoCDZfw_wcB

- The Mayo Clinic (2017). Retrieved May 01, 2017, from http://www.mayoclinic.org/

- , Dube, A., McClave, C., James, R., Caraballo, , R., Kaufmann, & T., Pechacek, (2010, September 10). Vital Signs: Current Cigarette Smoking Among Adults Aged ≥18 Years United States, 2009. Retrieved from Centers for Disease Control and Prevention: https://www.cdc.gov/mmwr/preview/mmwrhtml/mm5935a3.htm

- , Keyes, C., Frank, A., Habach and R. Seetan. Artificial Neural Network Predictability: Patients’ Susceptibility to Hospital Acquired Venous Thromboembolism. The 32nd Annual Conference of the Pennsylvania Association of Computer and Information Science Educators (PACISE), Edinboro University of Pennsylvania. March 31st and April 1st, 2017.

- Partners Healthcare. (2017). Informatics for Integrating Biology & the Bedside. Retrieved May 23, 2017, from i2b2: https://www.i2b2.org/NLP/DataSets/Main.php

- , Uzuner, I., Goldstein, Y., Luo, & I., Kohane, (2008). Identifying Patient Smoking Status from Medical Discharge Records. J Am Med Inform Assoc, 15(1), 14-24. doi:10.1197/jamia.m2408

- , McCormick, N., Elhadad & P., Stetson (2008). Use of Semantic Features to Classify Patient Smoking Status. Retrieved March 5, 2017, from Columbia.edu: http://people.dbmi.columbia.edu/noemie/papers/amia08_patrick.pdf

- , Dumortier, E., Beckjord, S., Shiffman,, & E., Sejdic (2016). Classifying smoking urges via machine learning. Computer methods and programs in biomedicine, 137, 203-213.

- SAS Institute Inc., SAS 9.4 Help and Documentation, Cary, NC: SAS Institute Inc., 2017.

- The Mayo Clinic (2017). Retrieved May 01, 2017, from http://www.mayoclinic.org/

- , Frank, M., Hall, and I., Witten (2016). The Weka Workbench. Online Appendix for “Data Mining: Practical Machine Learning Tools and Techniques”, Morgan Kaufmann, Fourth Edition, 2016.

- , Frank, & R., Bouckaert, (2006, September). Naive bayes for text classification with unbalanced classes. In European Conference on Principles of Data Mining and Knowledge Discovery (pp. 503-510). Springer, Berlin, Heidelberg.

- , Gardner, and S. Dorling. “Artificial neural networks (the multilayer perceptron) – a review of applications in the atmospheric sciences.” Atmos. Environ. 32, no. 14-15 (1998): 2627-2636.

- , Hosmer, S., Lemeshow, and R., Sturdivant. Applied logistic regression. Vol. 398. John Wiley & Sons, 2013.

- , Dietrich, B., Heller, and B., Yang. Data Science and Big Data Analytics: Discovering, Analyzing, Visualizing and Presenting Data. (2015). Wiley.

- , Lu, & H., Liu, (2000). Decision tables: Scalable classification exploring RDBMS capabilities. In Proceedings of the 26th International Conference on Very Large Data Bases, VLDB’00 (p. 373).


- Zhumakhan Nazir, Temirlan Zarymkanov, Jurn-Guy Park, "A Machine Learning Model Selection Considering Tradeoffs between Accuracy and Interpretability", Advances in Science, Technology and Engineering Systems Journal, vol. 7, no. 4, pp. 72–78, 2022. doi: 10.25046/aj070410

- Ayoub Benchabana, Mohamed-Khireddine Kholladi, Ramla Bensaci, Belal Khaldi, "A Supervised Building Detection Based on Shadow using Segmentation and Texture in High-Resolution Images", Advances in Science, Technology and Engineering Systems Journal, vol. 7, no. 3, pp. 166–173, 2022. doi: 10.25046/aj070319

- Osaretin Eboya, Julia Binti Juremi, "iDRP Framework: An Intelligent Malware Exploration Framework for Big Data and Internet of Things (IoT) Ecosystem", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 185–202, 2021. doi: 10.25046/aj060521

- Arwa Alghamdi, Graham Healy, Hoda Abdelhafez, "Machine Learning Algorithms for Real Time Blind Audio Source Separation with Natural Language Detection", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 125–140, 2021. doi: 10.25046/aj060515

- Baida Ouafae, Louzar Oumaima, Ramdi Mariam, Lyhyaoui Abdelouahid, "Survey on Novelty Detection using Machine Learning Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 73–82, 2021. doi: 10.25046/aj060510

- Radwan Qasrawi, Stephanny VicunaPolo, Diala Abu Al-Halawa, Sameh Hallaq, Ziad Abdeen, "Predicting School Children Academic Performance Using Machine Learning Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 5, pp. 08–15, 2021. doi: 10.25046/aj060502

- Zhiyuan Chen, Howe Seng Goh, Kai Ling Sin, Kelly Lim, Nicole Ka Hei Chung, Xin Yu Liew, "Automated Agriculture Commodity Price Prediction System with Machine Learning Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 4, pp. 376–384, 2021. doi: 10.25046/aj060442

- Hathairat Ketmaneechairat, Maleerat Maliyaem, Chalermpong Intarat, "Kamphaeng Saen Beef Cattle Identification Approach using Muzzle Print Image", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 4, pp. 110–122, 2021. doi: 10.25046/aj060413

- Md Mahmudul Hasan, Nafiul Hasan, Dil Afroz, Ferdaus Anam Jibon, Md. Arman Hossen, Md. Shahrier Parvage, Jakaria Sulaiman Aongkon, "Electroencephalogram Based Medical Biometrics using Machine Learning: Assessment of Different Color Stimuli", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 3, pp. 27–34, 2021. doi: 10.25046/aj060304

- Dominik Štursa, Daniel Honc, Petr Doležel, "Efficient 2D Detection and Positioning of Complex Objects for Robotic Manipulation Using Fully Convolutional Neural Network", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 915–920, 2021. doi: 10.25046/aj0602104

- Md Mahmudul Hasan, Nafiul Hasan, Mohammed Saud A Alsubaie, "Development of an EEG Controlled Wheelchair Using Color Stimuli: A Machine Learning Based Approach", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 754–762, 2021. doi: 10.25046/aj060287

- Antoni Wibowo, Inten Yasmina, Antoni Wibowo, "Food Price Prediction Using Time Series Linear Ridge Regression with The Best Damping Factor", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 694–698, 2021. doi: 10.25046/aj060280

- Mohammad Kanan, Siraj Essemmar, "Quality Function Deployment: Comprehensive Framework for Patient Satisfaction in Private Hospitals", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1440–1449, 2021. doi: 10.25046/aj0601163

- Javier E. Sánchez-Galán, Fatima Rangel Barranco, Jorge Serrano Reyes, Evelyn I. Quirós-McIntire, José Ulises Jiménez, José R. Fábrega, "Using Supervised Classification Methods for the Analysis of Multi-spectral Signatures of Rice Varieties in Panama", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 552–558, 2021. doi: 10.25046/aj060262

- Phillip Blunt, Bertram Haskins, "A Model for the Application of Automatic Speech Recognition for Generating Lesson Summaries", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 526–540, 2021. doi: 10.25046/aj060260

- Sebastianus Bara Primananda, Sani Muhamad Isa, "Forecasting Gold Price in Rupiah using Multivariate Analysis with LSTM and GRU Neural Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 245–253, 2021. doi: 10.25046/aj060227

- Hyeongjoo Kim, Sunyong Byun, "Designing and Applying a Moral Turing Test", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 2, pp. 93–98, 2021. doi: 10.25046/aj060212

- Byeongwoo Kim, Jongkyu Lee, "Fault Diagnosis and Noise Robustness Comparison of Rotating Machinery using CWT and CNN", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 1279–1285, 2021. doi: 10.25046/aj0601146

- Md Mahmudul Hasan, Nafiul Hasan, Mohammed Saud A Alsubaie, Md Mostafizur Rahman Komol, "Diagnosis of Tobacco Addiction using Medical Signal: An EEG-based Time-Frequency Domain Analysis Using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 842–849, 2021. doi: 10.25046/aj060193

- Reem Bayari, Ameur Bensefia, "Text Mining Techniques for Cyberbullying Detection: State of the Art", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 783–790, 2021. doi: 10.25046/aj060187

- Inna Valieva, Iurii Voitenko, Mats Björkman, Johan Åkerberg, Mikael Ekström, "Multiple Machine Learning Algorithms Comparison for Modulation Type Classification Based on Instantaneous Values of the Time Domain Signal and Time Series Statistics Derived from Wavelet Transform", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 658–671, 2021. doi: 10.25046/aj060172

- Carlos López-Bermeo, Mauricio González-Palacio, Lina Sepúlveda-Cano, Rubén Montoya-Ramírez, César Hidalgo-Montoya, "Comparison of Machine Learning Parametric and Non-Parametric Techniques for Determining Soil Moisture: Case Study at Las Palmas Andean Basin", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 636–650, 2021. doi: 10.25046/aj060170

- Ndiatenda Ndou, Ritesh Ajoodha, Ashwini Jadhav, "A Case Study to Enhance Student Support Initiatives Through Forecasting Student Success in Higher-Education", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 230–241, 2021. doi: 10.25046/aj060126

- Lonia Masangu, Ashwini Jadhav, Ritesh Ajoodha, "Predicting Student Academic Performance Using Data Mining Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 6, no. 1, pp. 153–163, 2021. doi: 10.25046/aj060117

- Sara Ftaimi, Tomader Mazri, "Handling Priority Data in Smart Transportation System by using Support Vector Machine Algorithm", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1422–1427, 2020. doi: 10.25046/aj0506172

- Othmane Rahmaoui, Kamal Souali, Mohammed Ouzzif, "Towards a Documents Processing Tool using Traceability Information Retrieval and Content Recognition Through Machine Learning in a Big Data Context", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 1267–1277, 2020. doi: 10.25046/aj0506151

- Puttakul Sakul-Ung, Amornvit Vatcharaphrueksadee, Pitiporn Ruchanawet, Kanin Kearpimy, Hathairat Ketmaneechairat, Maleerat Maliyaem, "Overmind: A Collaborative Decentralized Machine Learning Framework", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 280–289, 2020. doi: 10.25046/aj050634

- Pamela Zontone, Antonio Affanni, Riccardo Bernardini, Leonida Del Linz, Alessandro Piras, Roberto Rinaldo, "Supervised Learning Techniques for Stress Detection in Car Drivers", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 6, pp. 22–29, 2020. doi: 10.25046/aj050603

- Kodai Kitagawa, Koji Matsumoto, Kensuke Iwanaga, Siti Anom Ahmad, Takayuki Nagasaki, Sota Nakano, Mitsumasa Hida, Shogo Okamatsu, Chikamune Wada, "Posture Recognition Method for Caregivers during Postural Change of a Patient on a Bed using Wearable Sensors", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 1093–1098, 2020. doi: 10.25046/aj0505133

- Khalid A. AlAfandy, Hicham Omara, Mohamed Lazaar, Mohammed Al Achhab, "Using Classic Networks for Classifying Remote Sensing Images: Comparative Study", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 770–780, 2020. doi: 10.25046/aj050594

- Khalid A. AlAfandy, Hicham, Mohamed Lazaar, Mohammed Al Achhab, "Investment of Classic Deep CNNs and SVM for Classifying Remote Sensing Images", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 652–659, 2020. doi: 10.25046/aj050580

- Rajesh Kumar, Geetha S, "Malware Classification Using XGboost-Gradient Boosted Decision Tree", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 536–549, 2020. doi: 10.25046/aj050566

- Nghia Duong-Trung, Nga Quynh Thi Tang, Xuan Son Ha, "Interpretation of Machine Learning Models for Medical Diagnosis", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 469–477, 2020. doi: 10.25046/aj050558

- Oumaima Terrada, Soufiane Hamida, Bouchaib Cherradi, Abdelhadi Raihani, Omar Bouattane, "Supervised Machine Learning Based Medical Diagnosis Support System for Prediction of Patients with Heart Disease", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 269–277, 2020. doi: 10.25046/aj050533

- Sathyabama Kaliyapillai, Saruladha Krishnamurthy, "Differential Evolution based Hyperparameters Tuned Deep Learning Models for Disease Diagnosis and Classification", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 253–261, 2020. doi: 10.25046/aj050531

- Haytham Azmi, "FPGA Acceleration of Tree-based Learning Algorithms", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 237–244, 2020. doi: 10.25046/aj050529

- Hicham Moujahid, Bouchaib Cherradi, Oussama El Gannour, Lhoussain Bahatti, Oumaima Terrada, Soufiane Hamida, "Convolutional Neural Network Based Classification of Patients with Pneumonia using X-ray Lung Images", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 5, pp. 167–175, 2020. doi: 10.25046/aj050522

- Young-Jin Park, Hui-Sup Cho, "A Method for Detecting Human Presence and Movement Using Impulse Radar", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 770–775, 2020. doi: 10.25046/aj050491

- Anouar Bachar, Noureddine El Makhfi, Omar EL Bannay, "Machine Learning for Network Intrusion Detection Based on SVM Binary Classification Model", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 638–644, 2020. doi: 10.25046/aj050476

- Adonis Santos, Patricia Angela Abu, Carlos Oppus, Rosula Reyes, "Real-Time Traffic Sign Detection and Recognition System for Assistive Driving", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 600–611, 2020. doi: 10.25046/aj050471

- Amar Choudhary, Deependra Pandey, Saurabh Bhardwaj, "Overview of Solar Radiation Estimation Techniques with Development of Solar Radiation Model Using Artificial Neural Network", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 589–593, 2020. doi: 10.25046/aj050469

- Maroua Abdellaoui, Dounia Daghouj, Mohammed Fattah, Younes Balboul, Said Mazer, Moulhime El Bekkali, "Artificial Intelligence Approach for Target Classification: A State of the Art", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 445–456, 2020. doi: 10.25046/aj050453

- Shahab Pasha, Jan Lundgren, Christian Ritz, Yuexian Zou, "Distributed Microphone Arrays, Emerging Speech and Audio Signal Processing Platforms: A Review", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 331–343, 2020. doi: 10.25046/aj050439

- Ilias Kalathas, Michail Papoutsidakis, Chistos Drosos, "Optimization of the Procedures for Checking the Functionality of the Greek Railways: Data Mining and Machine Learning Approach to Predict Passenger Train Immobilization", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 287–295, 2020. doi: 10.25046/aj050435

- Phathutshedzo Makovhololo, Tiko Iyamu, "Diffusion of Technology for Language Challenges in the South African Healthcare Environment", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 257–265, 2020. doi: 10.25046/aj050432

- Yosaphat Catur Widiyono, Sani Muhamad Isa, "Utilization of Data Mining to Predict Non-Performing Loan", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 4, pp. 252–256, 2020. doi: 10.25046/aj050431

- Hai Thanh Nguyen, Nhi Yen Kim Phan, Huong Hoang Luong, Trung Phuoc Le, Nghi Cong Tran, "Efficient Discretization Approaches for Machine Learning Techniques to Improve Disease Classification on Gut Microbiome Composition Data", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 547–556, 2020. doi: 10.25046/aj050368

- Ruba Obiedat, "Risk Management: The Case of Intrusion Detection using Data Mining Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 529–535, 2020. doi: 10.25046/aj050365

- Krina B. Gabani, Mayuri A. Mehta, Stephanie Noronha, "Racial Categorization Methods: A Survey", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 388–401, 2020. doi: 10.25046/aj050350

- Dennis Luqman, Sani Muhamad Isa, "Machine Learning Model to Identify the Optimum Database Query Execution Platform on GPU Assisted Database", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 214–225, 2020. doi: 10.25046/aj050328

- Linda Acosta-Salgado, Auguste Rakotondranaivo, Eric Bonjour, "Analysis and Improvement of an Innovative Solution Through Risk Reduction: Application to Home Care for the Elderly", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 158–165, 2020. doi: 10.25046/aj050321

- Gillala Rekha, Shaveta Malik, Amit Kumar Tyagi, Meghna Manoj Nair, "Intrusion Detection in Cyber Security: Role of Machine Learning and Data Mining in Cyber Security", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 3, pp. 72–81, 2020. doi: 10.25046/aj050310

- Nuno Martins, Jéssica Campos, Ricardo Simoes, "Activerest: Design of A Graphical Interface for the Remote use of Continuous and Holistic Care Providers", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 635–645, 2020. doi: 10.25046/aj050279

- Ahmed EL Orche, Mohamed Bahaj, "Approach to Combine an Ontology-Based on Payment System with Neural Network for Transaction Fraud Detection", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 551–560, 2020. doi: 10.25046/aj050269

- Bokyoon Na, Geoffrey C Fox, "Object Classifications by Image Super-Resolution Preprocessing for Convolutional Neural Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 476–483, 2020. doi: 10.25046/aj050261

- Johannes Linden, Xutao Wang, Stefan Forsstrom, Tingting Zhang, "Productify News Article Classification Model with Sagemaker", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 2, pp. 13–18, 2020. doi: 10.25046/aj050202

- Carlotta Patrone, Maryna Mezzano Kozlova, Monica Brenta, Francesca Filauro, Donatella Campanella, Antonietta Ribatti, Elisabetta Scuderi, Tiziana Marini, Gabriele Galli, Roberto Revetria, "Hospital Warehouse Management during the construction of a new building through Lean Techniques", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 1, pp. 256–262, 2020. doi: 10.25046/aj050132

- Lorenzo Damiani, Roberto Revetria, Emanuele Morra, Pietro Giribone, "A Smart Box for Blood Bags Transport: Simulation Model of the Cooling Autonomy Control System", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 1, pp. 249–255, 2020. doi: 10.25046/aj050131

- Michael Wenceslaus Putong, Suharjito, "Classification Model of Contact Center Customers Emails Using Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 1, pp. 174–182, 2020. doi: 10.25046/aj050123

- Saleh Albahli, Rehan Ullah Khan, Ali Mustafa Qamar, "A Blockchain-Based Architecture for Smart Healthcare System: A Case Study of Saudi Arabia", Advances in Science, Technology and Engineering Systems Journal, vol. 5, no. 1, pp. 40–47, 2020. doi: 10.25046/aj050106

- Rehan Ullah Khan, Ali Mustafa Qamar, Mohammed Hadwan, "Quranic Reciter Recognition: A Machine Learning Approach", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 173–176, 2019. doi: 10.25046/aj040621

- Mehdi Guessous, Lahbib Zenkouar, "An ML-optimized dRRM Solution for IEEE 802.11 Enterprise Wlan Networks", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 6, pp. 19–31, 2019. doi: 10.25046/aj040603

- Toshiyasu Kato, Yuki Terawaki, Yasushi Kodama, Teruhiko Unoki, Yasushi Kambayashi, "Estimating Academic results from Trainees’ Activities in Programming Exercises Using Four Types of Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 5, pp. 321–326, 2019. doi: 10.25046/aj040541

- Nindhia Hutagaol, Suharjito, "Predictive Modelling of Student Dropout Using Ensemble Classifier Method in Higher Education", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 206–211, 2019. doi: 10.25046/aj040425

- Mukundan Kandadai Agaram, "Intelligent Foundations for Knowledge Based Systems", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 4, pp. 73–93, 2019. doi: 10.25046/aj040410

- Fernando Hernández, Roberto Vega, Freddy Tapia, Derlin Morocho, Walter Fuertes, "Early Detection of Alzheimer’s Using Digital Image Processing Through Iridology, An Alternative Method", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 3, pp. 126–137, 2019. doi: 10.25046/aj040317

- Abba Suganda Girsang, Andi Setiadi Manalu, Ko-Wei Huang, "Feature Selection for Musical Genre Classification Using a Genetic Algorithm", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 2, pp. 162–169, 2019. doi: 10.25046/aj040221

- Konstantin Mironov, Ruslan Gayanov, Dmiriy Kurennov, "Observing and Forecasting the Trajectory of the Thrown Body with use of Genetic Programming", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 1, pp. 248–257, 2019. doi: 10.25046/aj040124

- Bok Gyu Han, Hyeon Seok Yang, Ho Gyeong Lee, Young Shik Moon, "Low Contrast Image Enhancement Using Convolutional Neural Network with Simple Reflection Model", Advances in Science, Technology and Engineering Systems Journal, vol. 4, no. 1, pp. 159–164, 2019. doi: 10.25046/aj040115

- Zheng Xie, Chaitanya Gadepalli, Farideh Jalalinajafabadi, Barry M.G. Cheetham, Jarrod J. Homer, "Machine Learning Applied to GRBAS Voice Quality Assessment", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 6, pp. 329–338, 2018. doi: 10.25046/aj030641

- Richard Osei Agjei, Emmanuel Awuni Kolog, Daniel Dei, Juliet Yayra Tengey, "Emotional Impact of Suicide on Active Witnesses: Predicting with Machine Learning", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 5, pp. 501–509, 2018. doi: 10.25046/aj030557

- Sudipta Saha, Aninda Saha, Zubayr Khalid, Pritam Paul, Shuvam Biswas, "A Machine Learning Framework Using Distinctive Feature Extraction for Hand Gesture Recognition", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 5, pp. 72–81, 2018. doi: 10.25046/aj030510

- Kaoutar Jenoui, Abdellah Abouabdellah, "A decision-making-approach for the purchasing organizational structure in Moroccan health care system", Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 2, pp. 195–205, 2018. doi: 10.25046/aj030223