Low-cost Hybrid Wheelchair Controller for Quadriplegias and Paralysis Patients

Volume 2, Issue 3, Page No 687-694, 2017

Author’s Name: Mohammed Faeik Ruzaij Al-Okby1, 2, a), Sebastian Neubert3, Norbert Stoll3, Kerstin Thurow1

1Center for Life Science Automation (celisca), University of Rostock, Rostock 18119, Germany

2Technical Institute of Babylon, Al-Furat Al-Awsat Technical University (ATU), Babylon, Iraq

3Institute of Automation, University of Rostock, Rostock 18119, Germany

a)Author to whom correspondence should be addressed. E-mail: Mohammed.Faeik.Ruzaij@celisca.de

Adv. Sci. Technol. Eng. Syst. J. 2(3), 687-694 (2017); DOI: 10.25046/aj020388

Keywords: Intelligent Wheelchair, Voice Recognition, Quadriplegia, Orientation Detection, Embedded System

Wheelchair controller design is very important for handicapped users such as quadriplegic, amputee, paralyzed, and elderly users. Safety, ease of use, and comfort are important factors that directly affect the user’s social efficiency and quality of life. This paper presents the design, implementation, and testing of a new low-cost hybrid wheelchair controller for quadriplegic and paralyzed patients. Two sub-controllers, a voice controller and a head tilt controller, are combined to build the hybrid controller. The voice controller consists of two voice recognition (VR) modules. The first module uses dynamic time warping (DTW), and the second uses both the hidden Markov model (HMM) and DTW to process the user’s voice and recognize the required commands. This controller has been tested with three global languages (English, German, and Chinese) at two noise levels, 42 and 72 dB. The voice controller can be used for motion commands as well as for controlling other functions such as lights, light signals, and the sound alarm. The second sub-controller is the head tilt controller. It consists of two BNO055 orientation detection modules that detect and track the orientation of the user’s head and of the wheelchair. The controller has an auto-calibrated algorithm that calibrates the user’s head orientation against the reference wheelchair orientation to adjust the control commands and the speed of the system motors when ascending or descending a hill or when passing non-straight roads. The head tilt controller was tested successfully for indoor and outdoor applications.

Received: 04 May 2017, Accepted: 22 May 2017, Published Online: 05 June 2017

1. Introduction

Quadriplegic, paralyzed, hand amputee, and elderly users have lost the ability to control their hands, so they need a special control system to use an electric wheelchair, which is normally controlled via a joystick. Without one, they still need the help of others to move and carry out their daily activities. The number of such users is increasing dramatically due to the rapid increase in accidents and in the elderly population. The main goal of the presented work is to help these patients control an electric wheelchair without the help of others. The active body regions for quadriplegic patients include the head, neck, shoulders, and some chest muscles. One effective control signal available from these body regions is the user’s voice. It offers a simple way to control intelligent applications using voice recognition (VR) technology. VR technology processes and converts the user’s audio signal into an electrical signal that a computer or microcontroller can use as a control signal. Two main strategies have been used to build VR-based systems. The first uses a computer program to implement the voice recognition, and the computer then controls the target system. The second uses embedded modules to implement the VR tasks. In the proposed work the second approach is preferred due to its small size, low cost, and lower power consumption [1-6].

Other signals that can be used for quadriplegic patients can be acquired from the skin surface in the active body regions that are still under the user’s control, such as the shoulders, face, and scalp, using different bio-signal acquisition techniques such as electromyography (EMG) [7-8], electroencephalography (EEG) [9-10], and electrooculography (EOG) [11]. These methods have several drawbacks. The bio-signals are highly affected by interference and noise from power sources and from other bio-signals of the body [12-14]. Acquiring EEG, EMG, and EOG signals also requires connecting special electrodes to the user’s body, which may be uncomfortable and limit the user’s motion.

Head tilts and body movements have also been used by other researchers. The user’s head tilts around the x, y, and z axes were used to control the motion of the wheelchair. Several approaches have been used to achieve this goal. Ultrasound and infrared sensors and detectors have been used to track the head motion with reference to the sensor array [15-18]. Cameras and optical sensors have been used to detect the head orientation and use it as a control signal [19]. More recently, accelerometers and micro-electro-mechanical system (MEMS) based inertial and orientation sensors have been used for motion detection and gesture recognition [20-24]. Most of the above work used open-loop control systems with thresholds for the control commands. Some applications use a fixed speed for each motion command, which is not sufficient for a real-time application.

This paper presents the design, implementation, and testing of a hybrid low-cost controller for wheelchairs. It has two sub-controllers, the voice controller and the head tilts controller, which give the user more flexibility in selecting a comfortable control method. The presented work is innovative in that it combines two different voice recognition modules in the voice controller. In addition, the head tilts controller uses two orientation detection modules to calibrate the control commands of the user’s head against the wheelchair reference orientation when ascending or descending a ramp. The proposed solution is safe, comfortable, and easy to use compared with previous solutions, which used only the head orientation without taking into consideration the wheelchair orientation and the road slope.

The system has a simple design. A headset with a microphone picks up the voice commands and sends them to the voice recognition modules VR1 and VR2. The first orientation module is fixed on the headset to detect the user’s head tilts and send the orientation data to the microcontroller, which issues the required control commands. The user can control the wheelchair by voice using specific commands, or by tilting the head to the front, back, left, right, and middle for the forward, backward, left, right, and stop commands, respectively. The system uses the ARM EFM32GG990F1024 microcontroller (Silicon Laboratories Inc., USA), which lets the system work standalone without any computer and enables a low-cost, small-size, and low-energy design.

2. Methodology

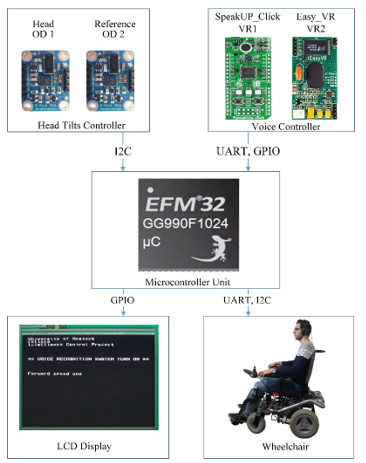

The system consists of two input units and two output units connected to the core microcontroller. The input units are the two VR modules for the voice controller and the two orientation detection modules for the head tilts controller. The output control signals are sent to the motor driver unit of the wheelchair to implement the motion commands and to the system LCD display to show the system status and the active controller. The microcontroller is responsible for receiving the data from the input units, processing the received data, issuing the required control commands, and sending them to the output units. Fig. 1 shows the block diagram of the system. All system units are explained in detail in the following subsections.

2.1. Microcontroller Unit (MCU)

The Silicon Labs EFM32GG990F1024 ARM microcontroller has been selected as the core processor for the presented system. Its low energy consumption (200 µA/MHz in running mode; 50 µA/MHz in sleep mode) makes it one of the most energy-efficient microcontrollers. The EFM32GG990F1024 has a 32-bit central processing unit (CPU) and can operate at speeds of up to 48 MHz. The main reasons for selecting this microcontroller are the following embedded features:

- 5 universal synchronous/asynchronous receiver/transmitter (UART/USART) ports are available and can be used separately. Two UART ports are used for communication with the voice recognition modules, and another UART port is used for communication with the motor driver unit. The remaining UART/USART ports can be used for future system requirements.

- 2 I2C bus interfaces: the proposed system requires only one I2C bus for communication with the two BNO055 orientation modules. The remaining I2C interface is reserved for future work.

- 3 pulse counters: the system requires two pulse counters to read the motor encoders and calculate the speed feedback of the system motors.

- Wake-up time: the microcontroller has a short wake-up time of 2 µs. The wake-up time is the time required to change the energy mode from one of the sleep modes to active mode.

Several other embedded features of the EFM32GG990F1024 microcontroller are described elsewhere [25].

2.2. Voice Controller

The voice controller consists of two voice recognition modules: VR1, the SpeakUp Click module (MikroElektronika D.O.O., Serbia), and VR2, the EasyVR module (Veear, Italy). The two modules are connected to the main MCU and together form a robust voice controller. The voice controller uses both dynamic time warping (DTW) and hidden Markov model (HMM) algorithms to perform the voice processing, and it can work in speaker-dependent (SD) or speaker-independent (SI) voice recognition modes. This controller has already been tested with a Jaguar Lite robot. In this paper, the same controller is integrated with a wheelchair. More details about the previous work and the modules used can be found elsewhere [4, 6, 26-28].

2.3. Head Tilts Controller

The head tilts controller consists of two BNO055 orientation detection modules (Bosch Sensortec, Germany). The BNO055 module consists of 3 triaxial MEMS sensors which are an accelerometer, a gyroscope, and a magnetometer. All 3 sensors are combined with a 32-bit microcontroller in a single package to produce a nine degree of freedom (9DOF) orientation detection module. The module output has different data formats and measuring units such as Euler angles, quaternion vector, linear acceleration, gravity vector, and magnetic field strength [29]. The Euler angles have been used in the proposed system to measure the user’s head and the reference wheelchair orientation.

2.4. Motors Driver Unit and Motors

A Meyra Ortopedia Smart 9.906 wheelchair (MEYRA GmbH, Germany) has been used for the system tests. The Meyra wheelchair uses a CAN bus for communication between the joystick and the motion controller. In the presented work, the original joystick control system has been replaced with the voice and head tilt controller. A Sabertooth 2×32 Amp motor driver (Dimension Engineering LLC., USA; see Fig. 2) drives the wheelchair motors. It communicates with the main ARM microcontroller over a UART bus (settings: 115,200 bps, no parity, 8 data bits, 1 stop bit).

Two SRG0531 DC motors (AMT Schmid GmbH & Co. KG, Germany) drive the wheelchair. Each motor has a gearbox with a helical gear set on the input side and a spur gear set on the output side. The operating voltage of the motor is 24 V with a rated power from 220 to 400 W. The total load capacity is 160 kg, and the output speed ranges from 105 to 200 RPM (revolutions per minute). The motor operates at a low noise level of less than 58 dB. It has an electromagnetic brake that works with 12/24 V [30].

3. System Description

The microcontroller communicates with the voice controller modules VR1 and VR2 via general-purpose input/output (GPIO) pins and a UART communication port, respectively. The voice command is picked up and fed to VR1 and VR2 using a sensitive microphone with an internal impedance of 1 kΩ at 1 kHz, a frequency range of 50-13000 Hz, and a sensitivity of -34 dB. The VR modules process the voice commands and send the recognition results to the microcontroller. An “OR” function combines the recognition results of the VR modules, which allows the system to respond when either of the two VR modules recognizes the command correctly. A false positive (FP) cancellation function has been added to the “OR” function to cancel the voice recognition result if the two modules recognize different commands. The FP cancellation function converts FP errors into false negative (FN) errors, which means the system does not recognize the voice command.
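The combination logic described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors’ firmware: the function name `combine_or_with_fp_cancel` and the use of `None` to mean "module recognized nothing" are assumptions made for the example.

```python
from typing import Optional


def combine_or_with_fp_cancel(vr1: Optional[str], vr2: Optional[str]) -> Optional[str]:
    """Combine the results of two recognizers (hypothetical helper).

    None means the module recognized nothing. The return value is the
    accepted command, or None when no command is accepted.
    """
    if vr1 is not None and vr2 is not None:
        # Both modules answered: accept only if they agree.
        # A disagreement is cancelled, turning a possible FP into an FN.
        return vr1 if vr1 == vr2 else None
    # At most one module answered: plain "OR" behaviour.
    return vr1 if vr1 is not None else vr2
```

With this rule a command is accepted when one module recognizes it, or when both recognize the same command, and it is dropped when the two modules disagree.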

The head tilts controller can be activated using the voice command “Orientation”. Two BNO055 orientation detection modules track and read the orientation data of the user’s head and the wheelchair. The first module is fixed on a headset to read the head orientation; the second is fixed on the wheelchair chassis to read the reference wheelchair orientation. The user’s head tilts around the principal axes x and y activate the control commands of the head tilt controller. The orientation detection modules have been programmed to send orientation information in Euler angle format (Pitch, Roll, and Yaw) to the microcontroller over the inter-integrated circuit (I2C) bus.

The second module, used for reference orientation detection, plays an important role in calibrating the control commands when ascending or descending a hill. The microcontroller compares the tilt angles (Euler angles) of the user’s head with the wheelchair tilt angles instead of comparing them with the fixed ground (x, y, and z) axes. This increases the safety and ease of use of the system when it passes over ramps or non-straight roads. When only the head orientation module is used for controlling the wheelchair, without the reference wheelchair orientation, the user needs to increase the head tilt angle in proportion to the slope angle when ascending, and vice versa when descending, to compensate for the hill slope. The same applies to a side slope in the road: the user needs to tilt the head further to the right when the slope on the right side is greater than on the left, and vice versa. In the presented system only two Euler angles, Pitch and Roll, are used for motion control. The Yaw angle is ignored so that the user can turn his head freely around the z-axis while driving the wheelchair.
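The effect of the reference module can be illustrated with a small sketch. It assumes that both modules report Pitch and Roll in degrees with the same sign convention, and that the calibration reduces to a per-axis subtraction; the names `Tilt` and `relative_tilt` are hypothetical and not taken from the paper.

```python
from typing import NamedTuple


class Tilt(NamedTuple):
    """Pitch and Roll in degrees (Yaw is ignored, as in the text)."""
    pitch: float
    roll: float


def relative_tilt(head: Tilt, chassis: Tilt) -> Tilt:
    """Head tilt measured against the wheelchair chassis, not the ground."""
    return Tilt(head.pitch - chassis.pitch, head.roll - chassis.roll)


# On a 7 degree ramp the chassis sensor also reads 7 degrees of pitch,
# so an absolute head pitch of 17 degrees is treated as a 10 degree command.
command = relative_tilt(Tilt(17.0, 0.0), Tilt(7.0, 0.0))
```

Under these assumptions the command angle depends only on how far the head is tilted relative to the wheelchair, so the same head gesture produces the same command on level ground and on a slope.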

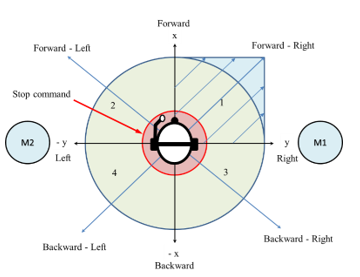

3.1. System motor controlling

The wheelchair speed and direction are controlled through the rotation speed and direction of the left (M2) and right (M1) motors. The head tilts controller sets the speed of the wheelchair motors depending on the x and y position of the user’s head with reference to the origin point (x=0°, y=0°). If the user increases the Pitch tilt angle in the x-axis and keeps the Roll at -5°<y<5°, both wheelchair motors rotate in the forward direction at the same speed. If the user tilts his head forward with a right side tilt (see Fig. 3), the wheelchair moves forward along a right curve. In this case, the left motor (M2) speed increases (M2 = x + y) and the right motor (M1) speed decreases (M1 = x - y) depending on the value of the head tilt in the x and y axes. The same procedure applies when the user needs to go forward along a left curve, where the speed of the right motor increases and the speed of the left motor decreases. A similar scheme applies to backward commands.
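The mixing rule M2 = x + y, M1 = x - y can be written as a short function. The sketch below assumes that a positive Roll means a tilt to the right and that Roll values inside the ±5° band are treated as zero; the function name `mix_motors` is hypothetical, and the outputs are in tilt degrees that would still need to be scaled to motor speed.

```python
from typing import Tuple


def mix_motors(pitch_x: float, roll_y: float, roll_deadband: float = 5.0) -> Tuple[float, float]:
    """Return (m1_right, m2_left) motor demands in tilt degrees."""
    if abs(roll_y) < roll_deadband:
        # Inside -5 < y < 5 both motors run at the same speed.
        roll_y = 0.0
    m1_right = pitch_x - roll_y  # right motor slows down in a right curve
    m2_left = pitch_x + roll_y   # left motor speeds up in a right curve
    return m1_right, m2_left
```

For example, `mix_motors(20, 10)` gives a right motor demand of 10 and a left motor demand of 30, which corresponds to a forward right curve.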

The user can rotate the wheelchair left or right on the spot by tilting his head left or right while keeping the x-axis value at -10°<x<0°. The forward command is activated when the Pitch head tilt angle is in the range 0°<x<25°. This tilt range keeps an acceptable forward view for the user. The backward command is activated when the Pitch tilt angle is in the range -45°<x<-10°.
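The tilt ranges given in this subsection can be collected into a small classifier. This is only a sketch under stated assumptions: the function name `classify_tilt` and the command labels are hypothetical, a positive Roll is taken to mean a tilt to the right, and the boundary values x = 0° and x = -10° (which the text leaves open) are assigned to the neutral band.

```python
def classify_tilt(pitch_x: float, roll_y: float) -> str:
    """Map Pitch (x) and Roll (y) in degrees to a command label."""
    if 0.0 < pitch_x < 25.0:
        return "forward"
    if -45.0 < pitch_x < -10.0:
        return "backward"
    if -10.0 <= pitch_x <= 0.0:
        # Neutral pitch band: stop, or rotate on the spot with a side tilt.
        if -5.0 < roll_y < 5.0:
            return "stop"
        return "rotate_right" if roll_y > 0 else "rotate_left"
    # Pitch outside every programmed range.
    return "out_of_range"
```

Curved forward and backward motion is then obtained by applying the motor mixing rule to the Pitch and Roll values inside the forward and backward ranges.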

3.2. Sub-Controller selection

The system design allows the user to select and change easily between the voice controller and the head tilt controller as the motion controller. The system starts by listening for the user’s voice command to activate the required controller. To select the voice controller, the user gives the voice command “voice”. The voice controller controls the motion of the wheelchair using a group of motion commands: forward, left, right, stop, backward, speed one, and speed two. Another group of voice commands activates the control of lights, signals, and sound alarms: light-on, light-off, signal-on, and signal-off. To activate the head tilts controller, the user gives the voice command “orientation”. The wheelchair motion is then guided by the user’s head tilts, and the voice controller is still used to turn on the lights, light signals, and sound alarms. The user can change the motion controller at any time by giving a voice command with the name of the required controller (“voice” or “orientation”). Fig. 4 shows the flowchart of the presented system.
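The selection behaviour described above can be modelled as a small state machine. The sketch below is an illustration only and does not reproduce the flowchart in Fig. 4: the class name `ControllerSelector` and the result labels are hypothetical, and it assumes that every command is ignored until a controller has been selected. The use of “forward”, “speed one”, and “speed two” to set the tilt-to-speed ratio in orientation mode follows Section 4.1.

```python
MOTION = {"forward", "left", "right", "stop", "backward", "speed one", "speed two"}
AUX = {"light-on", "light-off", "signal-on", "signal-off"}
RATIO = {"forward", "speed one", "speed two"}


class ControllerSelector:
    """Routes recognized voice commands according to the active controller."""

    def __init__(self):
        self.active = None  # None, "voice" or "orientation"

    def handle(self, command):
        if command in ("voice", "orientation"):
            self.active = command
            return ("select", command)
        if self.active is None:
            return ("ignored", command)  # assumption: nothing works before selection
        if command in AUX:
            return ("aux", command)  # lights and signals work in both modes
        if self.active == "voice" and command in MOTION:
            return ("motion", command)
        if self.active == "orientation" and command in RATIO:
            return ("ratio", command)  # sets the tilt-to-speed ratio
        return ("ignored", command)
```

In orientation mode the motion itself comes from the head tilts, so spoken motion commands such as “left” are ignored in this sketch.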

4. Experimental Results

4.1. Voice Controller

In previous work, the voice recognition modules VR1 and VR2 were tested individually and also combined with “AND” and “OR” algorithms [6] in two environments with noise levels of 42 dB and 72 dB. Seven voice commands to control the motion of the wheelchair were selected for the test: forward, left, right, stop, backward, speed one, and speed two (more detailed results can be found elsewhere [4, 6]).

In the presented work, 8 new voice commands have been used to 1) select the motion controller, 2) control the maximum speed of the wheelchair, and 3) control the lights. The commands are: voice, orientation, speed-one, speed-two, light-on, light-off, signal-on, and signal-off. Since the voice controller was tested before [4, 6], the SD voice recognition mode has been selected to control the wheelchair, which is the best choice for medical and rehabilitation applications. It makes the wheelchair respond only to a specific user and not to any other person saying the same trained commands.

The “OR” with FP cancellation function has been used in the voice controller tests. The function was tested before, and the results show that the VR accuracy increased by ≈ 3% and the FP error was reduced to ≈ 0%, with an FN error rate of 5.2% in 72 dB noisy environments [4, 6].

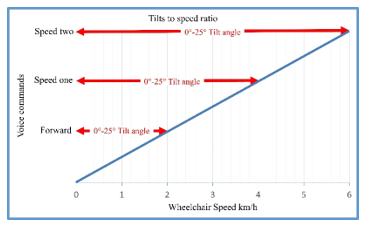

The voice commands “forward”, “speed one”, and “speed two” are used when the head tilt controller is active to set the ratio between the user’s head tilt and the wheelchair speed. This lets the user increase or decrease the speed sensitivity of the system relative to the head tilt angle. The “forward” voice command sets the tilt-to-speed ratio so that tilt angles of 0°-25° map to speeds of 0-2 km/h, i.e. ≈ 80 m/h per degree of tilt. The command “speed one” increases the ratio to 0°-25° / 0-4 km/h, i.e. ≈ 160 m/h per degree of tilt. The command “speed two” increases the ratio to 0°-25° / 0-6 km/h, i.e. ≈ 240 m/h per degree of tilt. Fig. 5 shows the tilt-to-speed ratio of the tested voice commands.
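The three tilt-to-speed ratios follow from dividing the maximum speed by the 25° tilt range, for example 2 km/h / 25° = 0.08 km/h = 80 m/h per degree. A minimal sketch of this mapping is shown below; the function name `speed_kmh` is hypothetical, and clamping the tilt angle to the 0°-25° range is an assumption made for the example.

```python
# Maximum speed in km/h selected by each voice command (values from the text).
MAX_SPEED_KMH = {"forward": 2.0, "speed one": 4.0, "speed two": 6.0}
MAX_TILT_DEG = 25.0


def speed_kmh(tilt_deg: float, ratio_command: str = "forward") -> float:
    """Linear tilt-to-speed mapping over the 0-25 degree tilt range."""
    # Assumption: tilt angles outside 0-25 degrees are clamped to the range.
    tilt = min(max(tilt_deg, 0.0), MAX_TILT_DEG)
    return MAX_SPEED_KMH[ratio_command] * tilt / MAX_TILT_DEG
```

With the “speed two” setting, a tilt of 12.5° corresponds to half of the 6 km/h maximum, i.e. 3 km/h.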

4.2. Head Tilts Controller

The head tilts controller has been tested for indoor as well as for outdoor environments.

4.2.1. Indoor Head Tilts Controller Tests

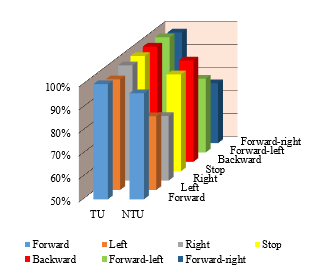

Several tasks have been executed to test the system for indoor motion control. The first task tests each control command of the head tilts controller. The users performed the test twice: first without previous training (results labeled NTU), and again after approximately 30 minutes of training (results labeled TU). Seven control commands were tested by the users using head tilts around the x and y axes: forward, backward, left, right, stop, forward-left, and forward-right. Each user tested each control command 10 times. The tilt-to-speed ratio was fixed at the primary setting with a maximum speed of 2 km/h. The results of the control command tests before and after the 30 minutes of training are shown in Fig. 6.

Fig. 6: Command success rate of the head tilt controller for trained users (TU) and non-trained users (NTU)

The results in Fig. 6 show the success rate of the control commands. A command counts as successful when it is implemented correctly in speed and direction from the start point to the end point. The test results revealed that the success rate increases when the user is trained before using the system.

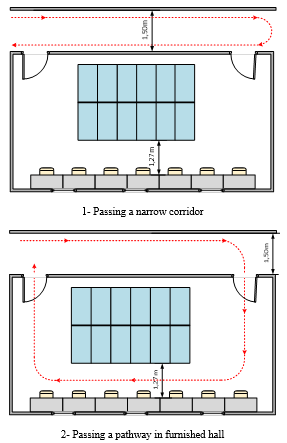

The second indoor test checks how flexibly the wheelchair can be driven in a narrow area. It includes two tasks: 1) driving the wheelchair through a narrow corridor and 2) driving the wheelchair through a furnished hall. The test was performed in a laboratory environment at the University of Rostock. Five trained users performed the two tasks of the test 5 times. The results show a successful implementation of the two tasks using the head tilts controller. All 5 users completed tasks 1 and 2 without collisions and followed the required paths correctly. Fig. 7 shows the test paths.

4.2.2. Outdoor Head Tilts Controller Tests

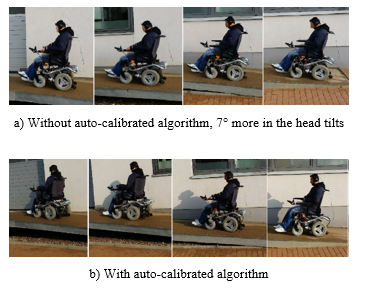

The auto-calibrated algorithm of the head tilts controller has been tested in outdoor environments. The test was designed to check the performance of the algorithm, especially when ascending or descending a hill. The algorithm cancels the effect of the road slope on the user’s head tilt angle. The test was performed on a small, narrow, long ramp with a height of 80 cm and a slope angle of 7 degrees. The test included two tasks: the first is to pass the ramp without activating the algorithm, and the second is to pass the ramp with the auto-calibrated algorithm active. Five users performed the test using a fixed tilt-to-speed ratio of 25° tilt angle to 2 km/h for both tasks.

The test results revealed that the auto-calibrated algorithm enhances the performance of the head tilts controller when driving the wheelchair over the ramp and makes it similar to driving the wheelchair with normal head tilt angles on a straight road. The test of the system without the auto-calibrated algorithm when ascending a hill shows that the control of the wheelchair is more difficult. When ascending the hill, the user needs to tilt his head 7 degrees further down (the same as the ramp slope) compared to the motion with the auto-calibrated algorithm to compensate for the hill slope (7°), and vice versa when descending. Fig. 8 shows the tests of the system with and without the auto-calibrated algorithm.

Fig. 8: System tests for passing an 80cm height and 7° slope angle ramp with and without the auto-calibrated algorithm

4.2.3. Hysteresis and Wrong Head Orientation Function

During the system tests, the head tilt controller exhibited a hazardous hysteresis problem around the stop command area. The problem occurs when the user brings his head into the stop area and then involuntarily moves it out of the stop area for less than 50 ms without intending the system to move. This produces a continuous series of discrete motion-stop commands that can disturb the user and may cause unwanted motion. A new function has been added to the head tilt controller to avoid this hysteresis by increasing the number of measurements required from the head orientation sensor for the desired control command. Instead of using only one readout in the range 0°<tilt angle<25° for the forward command, the system requires 10 consecutive measurements in the range 0°<tilt angle<25° before it implements the forward command. This procedure cancels the effect of involuntary head movement and makes the start of the motion smoother.
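The 10-measurement rule for the forward command amounts to a consecutive-sample filter. A minimal sketch is given below; the class name `ForwardDebounce` is hypothetical, and resetting the counter on any out-of-range reading is an assumption about how "continuous measurements" is meant.

```python
class ForwardDebounce:
    """Accept the forward command only after N consecutive in-range readings."""

    def __init__(self, required: int = 10):
        self.required = required
        self.count = 0

    def update(self, pitch_deg: float) -> bool:
        """Feed one Pitch reading; return True once forward is confirmed."""
        if 0.0 < pitch_deg < 25.0:
            self.count += 1
        else:
            # Any reading outside the forward range restarts the count.
            self.count = 0
        return self.count >= self.required
```

A brief involuntary movement that produces fewer than 10 in-range readings therefore never starts the motors, at the cost of a short delay before an intended forward motion begins.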

Another function has been built into the head tilt controller to stop the system and protect the user if the head orientation goes outside the programmed range of tilt angles for the control commands. The function stops the wheelchair and suspends the control commands of the head tilt controller until the headset returns to the center stop region (0°>x>-10°, 5°>y>-5°). This function protects the user if the headset falls off or the user loses consciousness.
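The suspend-until-centered behaviour can be sketched as a latch. The example below is illustrative only: the class name `OutOfRangeGuard` is hypothetical, the Pitch limits are taken from the command ranges in Section 3.1, and the Roll limit `roll_limit_deg` is an assumed value because the text does not state one.

```python
class OutOfRangeGuard:
    """Suspends head tilt commands after an out-of-range head orientation."""

    def __init__(self, roll_limit_deg: float = 45.0):  # assumed Roll limit
        self.roll_limit_deg = roll_limit_deg
        self.suspended = False

    def allow_motion(self, pitch_x: float, roll_y: float) -> bool:
        """Return True if head tilt commands may currently be executed."""
        in_range = -45.0 < pitch_x < 25.0 and abs(roll_y) < self.roll_limit_deg
        in_center = -10.0 < pitch_x < 0.0 and -5.0 < roll_y < 5.0
        if not in_range:
            self.suspended = True  # stop the wheelchair and latch
        elif self.suspended and in_center:
            self.suspended = False  # released only from the center stop region
        return not self.suspended
```

Because the latch is released only inside the center stop region, the wheelchair does not resume motion just because a fallen headset happens to pass through a valid command range.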

The two functions have been tested with the system, and the results showed successful performance for both. The hysteresis function increased the reaction time of the system by ≈ 50 ms for the initial motion only, which does not noticeably affect the system performance.

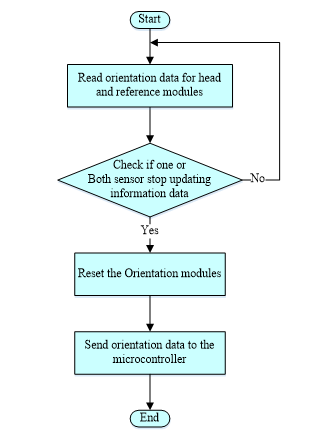

4.2.4. Orientation Sensor Error Handling

Orientation sensor error handling is one of the most important tasks in the present study, since the system’s motion can interrupt the instantaneous updating of the orientation data. This can affect the microcontroller performance and may cause the system to restart or hang. An error handling function has been programmed to avoid missing orientation data. The error handling function tracks changes in the orientation data and sends alert information to the user if there are any communication problems with the orientation modules. It also resets and recalibrates the orientation sensors after a limited number of unchanged readings.

The function has been tested with the system and the test results show a success rate of 100% for tracking the information data, resetting and calibrating the orientation detection modules. Fig. 9 shows the flowchart of the system’s error handling function.
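The "limited number of unchanged readings" check described in this subsection can be sketched as a simple counter. This is not the flowchart of Fig. 9: the class name `StaleDataWatchdog` is hypothetical, and the default limit of 20 readings is an assumed value because the text does not give the actual number.

```python
class StaleDataWatchdog:
    """Flags an orientation sensor whose readings have stopped changing."""

    def __init__(self, max_unchanged: int = 20):  # assumed limit
        self.max_unchanged = max_unchanged
        self.last = None
        self.unchanged = 0

    def check(self, reading) -> bool:
        """Feed one reading; return True when a reset and recalibration is due."""
        if reading == self.last:
            self.unchanged += 1
        else:
            self.unchanged = 0
            self.last = reading
        if self.unchanged >= self.max_unchanged:
            self.unchanged = 0  # start counting again after the reset
            return True
        return False
```

In a real system the caller would react to a `True` result by alerting the user and then resetting and recalibrating the affected module.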

5. Conclusions

In this paper, the design, implementation, and testing of a hybrid wheelchair controller for quadriplegic and paralyzed users have been presented. Two sub-controllers, the voice controller and the head tilts controller, allow the user to select and change the active controller for more comfortable wheelchair driving. The test results of the voice controller revealed that it performs better using the “OR” and FP cancellation function than with “AND” or with the individual modules. The results revealed a ≈ 3% increase in the VR accuracy, which reached 98.2% in 42 dB noise environments with 0% FP errors and 1.8% FN errors; these are important features for biomedical and rehabilitation applications. The voice controller is ideal for the control of lights, light signals, and sound alarms rather than for controlling the wheelchair motion, especially in narrow indoor areas.

The head tilts controller tests revealed that it can be used for both indoor as well as outdoor environments. The indoor tests showed accurate control and good performance in a narrow area with a successful implementation of task 1 and task 2. The outdoor tests showed that the system is ideal for non-straight roads and especially for ascending and descending a hill with the auto-calibrated algorithm.

The hysteresis, wrong head orientation, and orientation sensor error handling functions have been successfully added to the head tilts controller. All the functions have been tested, and the results show that they enhance the performance of the controller and make driving the wheelchair safer and more comfortable.

The head tilts controller tests revealed that the user should be trained before using it to achieve better human-system interaction, good adaptation, and enhanced system performance.

The voice and head tilt controllers have been combined successfully, and the user can easily select or change the desired controller using voice commands.

Acknowledgment

This work is supported by the German Academic Exchange Service (DAAD, Germany) and Al-Furat Al-Awsat Technical University (ATU, Iraq). The authors would like to thank them for their support and scholarship funding. The authors would also like to thank Dr.-Ing. Thomas Roddelkopf, Dr.-Ing. Steffen Junginger, and Mr. Heiko Engelhardt for their help and technical support.

References

- Mohammed Faeik Ruzaij, Sebastian Neubert, Norbert Stoll, Kerstin Thurow, “Design and implementation of low-cost intelligent wheelchair controller for quadriplegias and paralysis patient”, in Proc. 2017 IEEE 15th International Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 26-28 January 2017, pp. 399-404.

- Akira Murai, Masaharu Mizuguchi, Takeshi Saitoh, Tomoyuki Osaki, and Ryosuke Konishi, “Elevator Available Voice Activated Wheelchair”, in Proc. 18th IEEE International Symposium on Robot and Human Interactive Communication, Toyama, Japan, 27 September-2 October 2009, pp. 730-735.

- Xiaoling Lv, Minglu Zhang and Hui Li, “Robot Control Based on Voice Command”, in Proc. IEEE International Conference on Automation and Logistics, Qingdao, September 2008, pp. 2490-2494.

- Mohammed Faeik Ruzaij, Sebastian Neubert, Norbert Stoll, Kerstin Thurow, “Design and Testing of Low Cost Three-Modes of Operation Voice Controller for Wheelchairs and Rehabilitation Robotics”, in Proc. 2015 IEEE 9th International Symposium on Intelligent Signal Processing (WISP), Siena, Italy, 15-17 May 2015, pp. 114-119.

- Mohammed Faeik Ruzaij, S. Poonguzhali, “Design and Implementation of Low Cost Intelligent Wheelchair”, in Proc. Second International Conference on Recent Trends in Information Technology, Chennai, 19-21 April 2012, pp. 468-471.

- Mohammed Faeik Ruzaij, Sebastian Neubert, Norbert Stoll, Kerstin Thurow, “Hybrid Voice Controller for Intelligent Wheelchair and Rehabilitation Robot Using Voice Recognition and Embedded Technologies”, Journal of Advanced Computational Intelligence and Intelligent Informatics, Vol. 20 No. 4-2016, Fuji Technology Press Ltd, Tokyo, Japan, pp. 615-622.

- Inhyuk Moon, Myungjoon Lee, Jeicheong Ryu, and Museong Mun, “Intelligent Robotic Wheelchair with EMG-, Gesture-, and Voice-based Interfaces”, in Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, Nevada, 27-31 October 2003, Vol. 4, pp. 3453-3458.

- Satoshi Ohishi and Toshiyuki Kondo, “A Proposal of EMG-based Wheelchair for Preventing Disuse of Lower Motor Function”, in Proc. Annual Conference of Society of Instrument and Control Engineers (SICE), 20-23 August 2012, Akita University, Akita, Japan, pp. 236-239.

- Kazuo Tanaka, Kazuyuki Matsunaga, and Hua O. Wang, “Electroencephalogram-Based Control of an Electric Wheelchair”, IEEE Transactions on Robotics, Vol. 21, No. 4, August 2005.

- I. Iturrate, J. Antelis and J. Minguez, “Synchronous EEG Brain-Actuated Wheelchair with Automated Navigation”, in Proc. 2009 IEEE International Conference on Robotics and Automation, International Conference Center, Kobe, Japan, May 12-17, 2009, pp. 2318-2327.

- Biswajeet Champaty, Jobin Jose, Kunal Pal, Thirugnanam A., “Development of EOG Based Human Machine Interface control System for Motorized Wheelchair”, in Proc. International Conference on Magnetics, Machines & Drives (AICERA-2014 iCMMD), Kottayam, 24-26 July 2014, pp. 1-7.

- Samuel Akwei-Sekyere, “Powerline noise elimination in biomedical signals via blind source separation and wavelet analysis,” PeerJ, Vol. 3, p.e1086, July 2015, pp.1-24.

- Wilfried Philips, “Adaptive noise removal from biomedical signals using warped polynomials,” IEEE Transactions On Biomedical Engineering, Vol. 43, no. 5, May 1996. pp. 480–492.

- Kayvan Najarian, Robert Splinter, Biomedical Signal and Image Processing. CRC Press, 2005.

- O. Partaatmadja, B. Benhabib, A. Sun, and A. A. Goldenberg, “An Electrooptical Orientation Sensor for Robotics”, IEEE Transactions On Robotics And Automation, Vol. 8, No. 1, February 1992, pp. 111-119.

- D. J. Kupetz, S. A. Wentzell, B. F. BuSha, “Head Motion Controlled Power Wheelchair”, in Proc. 2010 IEEE 36th Annual Northeast Bioengineering Conference, New York, USA, 26-28 March 2010, pp. 1-2.

- Henrik Vie Christensen and Juan Carlos Garcia, “Infrared Non-Contact Head Sensor, for Control of Wheelchair Movements,” IOS Press, 2003.

- James M. Ford, Saleem J. Sheredos, “Ultrasonic Head Controller For Powered Wheelchair,” Journal of Rehabilitation Research and Development, Vol. 32 No. 3, October 1995, pp. 280-284.

- Farid Abedan Kondori, Shahrouz Yousefi, Li Liu, Haibo Li, “Head Operated Electric Wheelchair”, in Proc. 2014 IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI), San Diego, CA, USA, 6-8 April 2014, pp. 53-56.

- Mohammed Faeik Ruzaij, Sebastian Neubert, Norbert Stoll, Kerstin Thurow, “Auto Calibrated Head Orientation Controller for Robotic-Wheelchair Using MEMS Sensors and Embedded Technologies”, in Proc. 2016 IEEE Sensors Applications Symposium (SAS 2016), Catania, Italy, 20-22 April 2016, pp. 433-438.

- Mohammed Faeik Ruzaij, Sebastian Neubert, Norbert Stoll, Kerstin Thurow, “Multi-Sensor Robotic-Wheelchair Controller for Handicap and Quadriplegia Patients Using Embedded Technologies”, in Proc. 2016 9th International Conference on Human System Interactions (HSI), Portsmouth, United Kingdom, 6-8 July 2016, pp. 103-109.

- Deepesh K Rathore, Pulkit Srivastava, Sankalp Pandey and Sudhanshu Jaiswal, “A Novel Multipurpose Smart Wheelchair”, in Proc. 2014 IEEE Students’ Conference on Electrical, Electronics and Computer Science, Bhopal, India, 1-2 March 2014, pp. 1-4.

- Mohammed Faeik Ruzaij, Sebastian Neubert, Norbert Stoll, Kerstin Thurow, “A speed compensation algorithm for a head tilts controller used for wheelchairs and rehabilitation applications”, in Proc. 2017 IEEE 15th International Symposium on Applied Machine Intelligence and Informatics (SAMI), Herl’any, Slovakia, 26-28 January 2017, pp. 497-502.

- Tao Lu, “A Motion Control Method of Intelligent Wheelchair Based on Hand Gesture Recognition”, in Proc. 2013 8th IEEE Conference on Industrial Electronics and Applications (ICIEA), Melbourne, VIC, 19-21 June 2013, pp. 957-962.

- EFM32GG990 DATASHEET, [Online]. Available: http://www.silabs.com. [Accessed: 20 December 2015].

- Speak Up Click User Manual Ver.101, MikroElektronika, Belgrade, Serbia, 2014.

- EasyVR 2.0 User Manual R.3.6.6., TIGAL KG, Vienna, Austria, 2014.

- RSC-4128 Speech Recognition Processor data sheet, [Online]. Available: http://www.sensory.com/wp-content/uploads/80-0206-W.pdf. [Accessed: 04 May 2016].

- BNO055 data sheet, [online]. Available: https://ae-bst.resource.bosch.com/media/_tech/media/datasheets/BST_BNO055_DS000_14.pdf. [Accessed: 15 Jun 2016].

- “AMT – Auto Move Technologies > Home > Products > Geared Motors > SRG 05.” [Online]. Available: http://www.amt-schmid.com/en/products/right_angled_drives/srg_05/srg_05.php. [Accessed: 10 October 2016].