Segmented and Detailed Visualization of Anatomical Structures based on Augmented Reality for Health Education and Knowledge Discovery

Volume 2, Issue 3, Page No 469-478, 2017

Author’s Name: Isabel Cristina Siqueira da Silva a), Gerson Klein, Denise Munchen Brandão

Informatics Faculty, Exacts and Technology School, UniRitter Laureate International Universities, 91849-440, Brazil

a) Author to whom correspondence should be addressed. E-mail: isabel.siqueira@gmail.com

Adv. Sci. Technol. Eng. Syst. J. 2(3), 469-478 (2017); DOI: 10.25046/aj020360

Keywords: Augmented reality, Interaction design, Health education, Anatomy visualization, Knowledge Discovery

The evolution of technology has changed the face of education, especially when combined with appropriate pedagogical bases. This combination has created opportunities for innovation that add quality to teaching by bringing new perspectives to traditional classroom methods. In the Health field in particular, augmented reality and interaction design techniques can assist the teacher in presenting theoretical concepts and/or concepts that require training in specific medical procedures. In addition, visualization of and interaction with Health data, from different sources and in different formats, helps to identify hidden patterns or anomalies, increases flexibility in the search for certain values, allows different units to be compared to obtain relative differences in quantities, and provides human interaction in real time. Interactive visualization techniques such as augmented and virtual reality can therefore support the process of knowledge discovery in medical and biomedical databases. This work discusses aspects related to the use of augmented reality and interaction design as tools for teaching anatomy and for knowledge discovery, and proposes a case study based on a mobile application that displays targeted anatomical parts in high resolution, with their parts detailed.

Received: 05 April 2017, Accepted: 02 May 2017, Published Online: 24 May 2017

1. Introduction

This paper is an extension of work originally presented at the International Conference of the Chilean Computer Science Society (SCCC) [1].

The computer is a facilitator of teaching in different sciences, since movement and a new dimension can be added to the static, two-dimensional images printed in books, allowing a better representation of scientific concepts and favoring meaningful learning. From this perspective, interactive digital graphics applications can aid the teaching-learning process of concepts, contents and chemical skills, as well as the knowledge discovery process, because they stimulate self-learning [2] [3]. In the Health area, for example, a great many studies seek viable computational solutions for the most diverse types of information management problems. The analysis and cross-checking of information can provide evidence for understanding the development of diseases, help health students and professionals make decisions to contain epidemics, and improve patient care, avoiding extra hospitalization costs.

In the same way that the brain is programmed to acquire the ability to write and read, the constant use of digital technologies reprograms the brain to think according to the logic of these devices. This means that a large part of the problem related to the learning of digital natives lies in the fact that it is very laborious to retrain the brain to construct thought from the linearity of reading and writing, and that this thought had already been modified by television and the advent of computer graphics [6]. According to Mazzarotto and Battaiola [7], the use of such applications has three possible implications for the learning process: increased learner motivation and interest in the learning activity, increased retention of subjects, and improved reasoning and high-complexity thinking skills. The increase in motivation and interest would result mainly from the elements of curiosity, fantasy and control offered by the interactive graphic application. In addition to functionality and usability, users seek pleasure in the activity, and this search is key to the choice to purchase and use a product.

Individuals born after 1960 have grown up surrounded by all sorts of new technologies, and their minds have undergone cognitive modifications brought about by these technologies and by digital media, which have driven a new range of needs and preferences on the part of younger generations, particularly in the area of learning. Prensky [4] says that today’s students and professionals represent the first generation to grow up with the technology of interactive graphics applications. They have spent their whole lives surrounded by emergent technologies such as augmented reality, ubiquitous learning (u-learning), mobile learning (m-learning), serious games and learning analytics, which aim to improve user satisfaction and experience in enriched multimodal learning environments [5]. As a result of this ubiquitous digital environment and the large amount of interaction with it, students today think and process information in a fundamentally different way from their predecessors.

Visualization and interaction techniques can aid the analysis of data, since they amplify the process of gaining insight into related data by transforming them into images [8] [9]. When an image is analyzed, a cognitive process begins in which perceptual mechanisms are activated to identify patterns and segment elements [10]. The correct mapping of data to images is crucial, since a poor mapping can discard relevant information or display too much irrelevant information [11] [12]. Thus, the graphical representation should limit the amount of information that the user receives, while keeping him/her aware of the total information space and reducing the cognitive effort [13].

In this context, Augmented Reality (AR) allows virtual objects to be viewed in combination with scenes from real environments through computers equipped with a video camera, or even from portable devices such as tablets and cell phones [14]. Three basic ideas make up AR: immersion, interaction and involvement. Immersion is linked to the feeling of being inside an environment. Interaction is the computer’s ability to detect user input and instantly modify the virtual world and the actions on it. Involvement is connected to the degree of motivation that engages a person in a certain activity.

The health sciences have benefited from the power of these tools [15]. When virtual technology is employed to teach anatomy, the challenges differ from those of traditional teaching with real anatomical parts and/or two-dimensional (2D) atlases, since virtual anatomy requires high-resolution three-dimensional (3D) visualizations and real-time interaction with them [16] [17]. Students can use AR to build new knowledge based on interactions with virtual objects that bring underlying data to life [18]. Virtual objects can be manipulated directly, which allows the user to interact with them in a free and natural way, as in the real world. Such a system not only helps students learn anatomical details, but also enhances 3D structures in ways that cannot be replicated by a 2D atlas. It is speculated that working directly with AR can help students learn the complex structure of anatomy better and faster than traditional methods (cadaver dissection and the use of paper atlases).

Thus, AR can amplify the associations between anatomy, physiology and movement through its use on mobile devices, allowing greater flexibility of interaction with virtual anatomical models. However, there are not many software solutions involving AR and the visualization of human and animal anatomy aimed at mobile devices and, among those that exist (see section 2), it is not possible to visualize and interact with detailed and segmented anatomical structures. In addition to these issues, it was identified that the response time of such tools, coupled with the quality of their 3D models, does not favor their use by teachers as a classroom teaching aid.

Based on these issues, this work proposes the use of AR on mobile devices to visualize segmented anatomical structures in high resolution, detailing their parts textually next to the image and using cut planes. Issues of interaction with segmented anatomical pieces are also studied, in order to facilitate their study and learning by students in the health sciences. These proposals were validated in two case studies involving the anatomy of human bone structures and animal organs. The first case study has already been validated with potential users through usability testing, while cognitive specialists evaluated the second case study.

This article is organized as follows. Section 2 presents works related to the topic discussed in this article. Section 3 describes the proposed tool while section 4 presents details of the validation experiment with users and results. Finally, section 5 presents the final considerations.

2. Related Work

Different studies and applications are being developed with AR focused on teaching concepts related to Health.

In 2006, Gomes et al. [19] were already investigating the application of AR for educational purposes in the area of Medicine. Using free tools (Linux and ArToolkit), they presented examples of organs overlaid on markers in real scenes, allowing the visualization and manipulation of the respective organ with differentiated aspects. They combined sound with the image, making learning more dynamic and realistic, although they did not explore mobile technology, mainly due to the technological restrictions of the time.

Safi et al. [20] indicate that, in the monitoring of specific diseases such as skin cancer, AR can help to monitor and identify problems early in order to initiate treatment or prevent disease progression. High-risk individuals may present with disturbing and abnormal skin lesions and, in this context, AR can help the professional to quickly compare the results and the changes in these lesions over a period of time based on the patient’s data (texts and images). Minimally invasive surgeries may also be possible with AR, allowing surgeons to access information more quickly without having to shift their attention away from the patient being treated. The patient’s vital signs can be kept in the surgeon’s field of view during the operation, without the risk of distraction.

Coles et al. [21] present a virtual environment for training femoral palpation and needle insertion, the opening steps of many interventional radiology procedures. They developed a novel augmented reality simulation that allows trainees to feel a virtual patient using their own hands. The authors mimic the haptic effect of palpation by linking two off-the-shelf force feedback devices into a hybrid device that provides five degrees of force feedback. This is combined with a custom-built hydraulic interface that provides a pulse-like tactile effect. The simulation has undergone a face and content validation study in which positive results were obtained. During validation tests, experts in the field rated the device as closely reproducing the fine tactile cues of a real-life palpation.

Reitzin [22] also agrees with these observations, claiming that working directly with AR can help future professionals learn the complex structure of anatomy better and faster than traditional methods (cadaver dissection and the use of paper atlases). 3D images of patients, constructed from 2D computed tomography and/or magnetic resonance images, can be visualized overlaid on their bodies with accurate visual information and real-time data retrieval.

Bacca et al. [23] contribute to existing knowledge of AR in educational settings by describing the current state of research on this topic. Their review identifies relevant aspects that need further research in order to establish the benefits of this technology for improving learning processes. In this context, the authors highlight aspects such as learning gains, motivation, interaction, collaboration, student engagement and positive attitudes. However, very few AR systems have considered the special needs of students, and this remains a potential field for further research.

Jenny [24] employed the Augment software to defend her thesis on the benefits of AR in the fields of Dentistry and Medicine. In Dentistry, AR was used in courses and practical classes to demonstrate dental models to the students and to enable them to compare their sculptures with reference models. It was observed that AR facilitated communication between teachers and students, clarifying the images and texts found in the textbooks.

In relation to the use of AR in Veterinary Medicine, there are still few published papers about it.

Parkes et al. [25] present a mixed reality simulator developed to complement clinical training. Two PHANToM premium haptic devices were positioned on either side of a modified toy cat. Virtual models of the chest and some abdominal contents were superimposed on the physical model. Seven veterinarians set the haptic properties of the virtual models; values were adjusted while the simulation was being palpated until the representation was satisfactory. Feedback from the veterinarians was encouraging, suggesting that the simulator has a potential role in student training.

Lee et al. [26] developed an AR-based intravenous injection simulator to train veterinary students in canine venipuncture. The 3D models used were developed from computed tomography images of beagle dogs, converted to stereolithography format. Another application of AR in Veterinary Medicine is reported in [27], where the University of Liverpool School of Veterinary Science is using this technology to allow students to analyze the internal anatomy of animals from smartphones. The team developed a 3D image of an equine heart for the study in question.

In terms of applications, the AR-based application proposed by Chien et al. [15] allows the visualization of anatomical details in 3D, letting users disassemble, assemble and manipulate such details for educational purposes. The article does not state which technologies were employed, and the application was not made available for mobile devices. The BARETA (Bangor Augmented Reality Education Tool for Anatomy) system [28], in turn, combines AR with 3D models produced by rapid prototyping technology and is aimed at teaching anatomy. Likewise, the LearnAR project proposes an online tool, based on AR, aimed at teaching in health disciplines. Anatomy4D is an application that was developed for mobile devices using AR concepts.

In conclusion, although the works discussed in this section address the use of AR in educational settings, they mostly focus on specific applications for a single human or animal body part. None of them supports the visualization of detailed and segmented anatomical parts together with individualized and intuitive interaction with them, a gap that motivates the research in this work.

3. Segmentation and Visualization of Human and Animal Anatomical Structures with Augmented Reality

Understanding the complexity of human and animal anatomy requires a lot of time and study. The teaching methods used are mostly based on 2D atlas studies, in addition to the traditional use of cadavers or mannequin models, through which students learn the forms and locations of the structures of the human/animal body and correlate them with their functions [16]. However, the use of cadavers is hampered by limited availability, storage constraints and restricted access time to the material [17]. In addition, the use of 2D atlases compromises the understanding of the real 3D structure of anatomical pieces, while multiple needle and wire punctures can cause rapid degradation of mannequin models [21].

The previous section presented a series of papers discussing the use of AR as a possible solution for such issues, but few solutions combine AR with the segmented visualization of 3D anatomical parts, intuitive interaction and support for use on mobile devices. Based on these observations, we propose a tool to support Health teaching and knowledge discovery that implements some functionality not observed in the related works studied.

3.1 Requirements Gathering

For the requirements gathering of the present research, we interviewed teachers of undergraduate Health courses that involve the teaching of concepts of human and animal anatomy, and carried out a contextual analysis of these teachers working in the classroom with their students. The institution where the case study was carried out has different resources used in the classroom, such as tablets and computers with software and virtual atlas applications of anatomy, embryology and histology; imaging resources such as X-ray, tomography and magnetic resonance; human resin models that replace the use of cadavers in the study of human anatomy; and anatomy and histology books, among others. Active methodology techniques such as body painting and body projection are also used for visualization and learning dynamics.

As a pedagogical proposal for the teaching of anatomy in undergraduate Health courses, teachers point out that AR may help to identify anatomical structures, amplifying the associations between anatomy, physiology and movement through its use on mobile devices, which allows greater flexibility of interaction with the anatomical models. Such benefits are amplified by the possibility of segmented and detailed visualization of anatomical parts, in high resolution, and with intuitive interaction in real time. Thus, the proposed visualization has these characteristics.

3.2 Segmented and Detailed Visualization

From the requirements gathering, the following features were defined for the proposed visualization:

- Visualization and interaction with the use of AR aimed at assisting the teaching of human and animal anatomy, based on real case studies, facilitating their adaptation and use in the classroom according to the demands and disciplines that involve the study of the anatomy;

- Support for use on mobile devices, in order to provide users (students and teachers) with flexibility of use;

- Use of 3D models with adequate degree of definition and realism;

- Marker-based tracking;

- Intuitive and easy-to-understand interface;

- Possibility of segmented and detailed visualization of a human and/or animal anatomical component.

To detect 2D markers in real scenes captured by camera-equipped devices, the Vuforia framework was used. Vuforia accesses the database remotely, so that the execution of the application is not overloaded. Vuforia was integrated with the Unity game engine in order to generate the AR functionalities, connecting virtual images with real scenes and allowing interaction with them in real time. The 3D models were obtained from public repositories and/or built in the Blender software from 2D pictures.

Four main views were made available:

- Simple anterior view;

- Simple posterior view;

- Detailed anterior view; and

- Detailed posterior view.

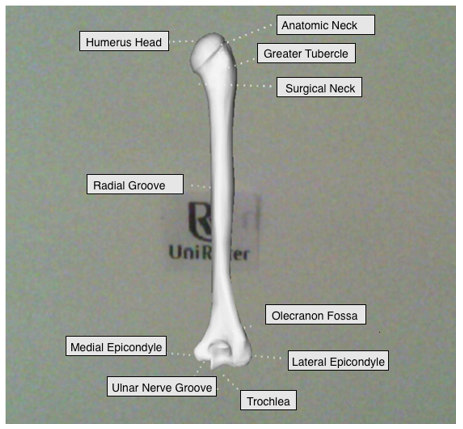

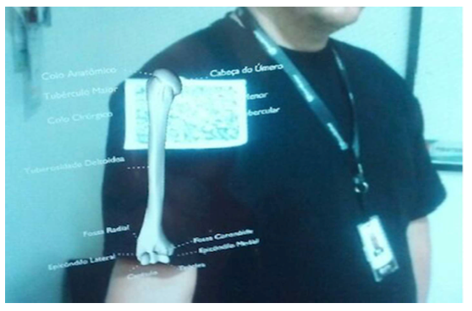

In the “anterior view”, the front part of the projected structure is shown (Figure 1), while in the “posterior view” the back part is seen. The detailed views differ from the simple ones in that they also describe the main bone landmarks that compose a structure, as shown in Figures 2 and 3. Selecting any of the viewing options in the menu triggers the camera of the mobile device, which captures the actual scene. As in all marker-based AR applications, once the marker is found in the scene, the 3D image of the structure chosen from the menu is incorporated into the scene. In Figures 1 and 3, we employed a fiducial marker to facilitate the mapping from the abstract to the concrete experience for users inexperienced with AR.

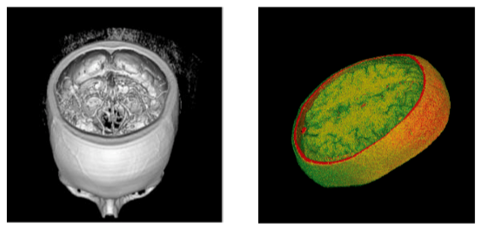

Besides these four views, a fifth view is being developed and improved: the cut plane view. Figure 4 shows two examples of cut plane views of segmented head structures.
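The idea behind a cut plane can be illustrated with a minimal sketch. The paper does not describe how the cut plane view is implemented, so the following is only an assumption-laden illustration in Python: the names `signed_distance` and `cut_vertices` are hypothetical, the plane normal is assumed to be unit length, and only vertices are filtered (a real renderer would typically clip triangles, often in a shader).

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def signed_distance(point: Vec3, plane_point: Vec3, plane_normal: Vec3) -> float:
    """Signed distance from a point to a plane.

    Positive values lie on the side the normal points to.
    Assumes plane_normal has unit length.
    """
    return sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal))


def cut_vertices(vertices: List[Vec3], plane_point: Vec3, plane_normal: Vec3) -> List[Vec3]:
    """Keep only the vertices lying on or behind the cut plane."""
    return [
        v for v in vertices
        if signed_distance(v, plane_point, plane_normal) <= 0.0
    ]


# Hypothetical model vertices and a horizontal cut plane through the origin.
model = [(0.0, 0.0, -1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
visible = cut_vertices(model, plane_point=(0.0, 0.0, 0.0), plane_normal=(0.0, 0.0, 1.0))
```

Moving `plane_point` along the normal would slide the cut through the structure, which is the kind of exploration a cut plane view is meant to support.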

Interaction with the anatomical objects in the scene occurs through three basic geometric transformations: translation, scaling and rotation.
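As a rough illustration of these three transformations, the sketch below applies them to a single 3D point in Python. It is not the application’s code (which relies on Unity); the function name `transform_point`, the choice of the y axis for rotation and the order scale, rotate, translate are assumptions made for the example.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def transform_point(
    point: Vec3,
    scale: float = 1.0,
    yaw_degrees: float = 0.0,
    translation: Vec3 = (0.0, 0.0, 0.0),
) -> Vec3:
    """Scale a point uniformly, rotate it about the y axis, then translate it."""
    # Uniform scaling about the origin.
    x, y, z = (c * scale for c in point)

    # Rotation about the y axis (right-handed convention).
    angle = math.radians(yaw_degrees)
    x_rot = x * math.cos(angle) + z * math.sin(angle)
    z_rot = -x * math.sin(angle) + z * math.cos(angle)

    # Translation.
    tx, ty, tz = translation
    return (x_rot + tx, y + ty, z_rot + tz)


# Double the size of a model point, turn it a quarter turn, and shift it along x.
moved = transform_point((1.0, 0.0, 0.0), scale=2.0, yaw_degrees=90.0,
                        translation=(1.0, 0.0, 0.0))
```

Applying the same function to every vertex of a model moves the whole structure; engines usually combine the three steps into one 4x4 matrix, but the separate steps are easier to follow here.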

4. Validation and Results

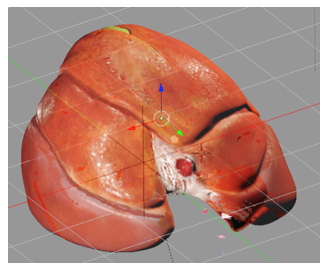

The proposed objectives were validated through two evaluation experiments, based on the human humerus bone (Figures 1, 2 and 3) and on the cat liver; the latter was validated with respect to the texture and transparency of the 3D model, which are used to show the vascular structures (Figures 5 and 6).

The evaluation was based on usability tests and had the following hypothesis: “The proposed application assists the teaching and the study of the human and/or animal anatomy”.

In addition to the hypothesis, the evaluation criteria were:

- Efficiency: Does the application allow users to better visualize 3D anatomical structures on mobile devices with the visualization options of structures in a segmented and detailed way?

- Usability: Is interaction with the application simple and intuitive for its users?

4.1 Case Study 1: Human Humerus Bone

4.1.1 Subjects

The validation of the application involved 5 (five) teachers and 23 (twenty-three) students of a Physiotherapy undergraduate course, 9 (nine) men and 14 (fourteen) women, between 22 (twenty-two) and 45 (forty-five) years of age. The teachers have more than 20 (twenty) years of experience in the Physical Therapy area, and only 1 (one) of them had previous experience with devices involving AR.

4.1.2 Procedure

The application was distributed to the subjects along with a manual explaining its operation. After testing the application freely, the group answered a questionnaire composed of 5 (five) statements based on the Likert scale, with the options “fully agree”, “partially agree”, “undecided”, “partially disagree” and “fully disagree”. Users had to choose only one alternative for each statement, the one that best matched their experience of using the application.

The questionnaire had the following statements:

1) The proposed application has an intuitive interface and simple handling and understanding.

2) The virtual visualization of a segmented structure with individualized interaction helps in the understanding of its peculiarities in relation to the whole.

3) The possibility of AR visualization of a segmented part of the human or animal anatomy in mobile applications allows greater flexibility of user interaction during anatomy study.

4) The quality of the 3D rendered structure via AR provides a more immersive user experience when interacting with the virtual scene combined with the actual scene.

5) The visualization provided by the use of AR can aid the teaching and study of the anatomy more efficiently than traditional methods.

4.1.3 Results

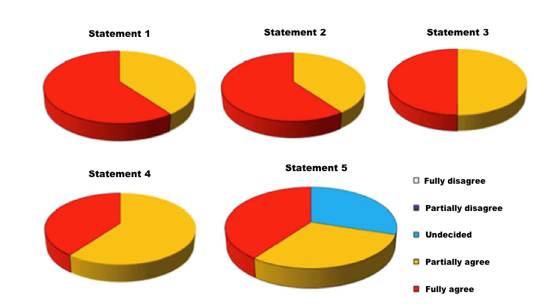

This section analyzes and discusses the results of the validation experiment described in the previous section. Figure 7 presents graphs with the distribution of the subjects’ responses to each of the five statements.

As can be seen in Figure 7, agreement (“fully agree” + “partially agree”) makes up 100% of the answers given to statements 1, 2, 3 and 4. Thus, it can be assumed that the proposed application meets the evaluation criteria of effectiveness and usability, presenting a friendly interface and fulfilling its purpose by offering intuitive interaction with the 3D representation of the humerus bone, in this case study specifically.

Only statement 5 did not achieve full agreement: 30% of the subjects were undecided on it, probably because they needed more time to use the application in the classroom (the vast majority of participants in this experiment had never had contact with applications involving AR before).

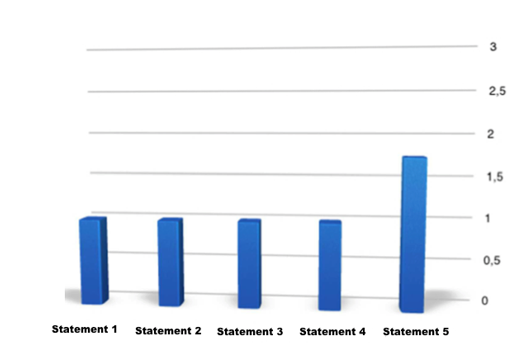

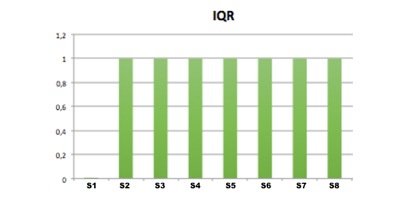

The interquartile range (IQR) was also analyzed in order to summarize the data independently of the number of observations (Figure 8). Figure 8 shows that the variability of the responses was low, with an IQR of 1.0 for statements 1, 2, 3 and 4 and of 1.75 for statement 5, which confirms the convergence of users’ opinions.
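For readers who wish to reproduce this kind of analysis, the sketch below shows one way to compute the share of agreement and the IQR of Likert responses in Python. The 1 to 5 coding of the options, the function names and the sample responses are assumptions made for illustration; the paper does not state which quartile convention was used, and different conventions can give different IQR values.

```python
import statistics
from typing import List

# Assumed numeric coding of the five Likert options (not stated in the paper).
LIKERT_SCORES = {
    "fully disagree": 1,
    "partially disagree": 2,
    "undecided": 3,
    "partially agree": 4,
    "fully agree": 5,
}


def agreement_share(responses: List[str]) -> float:
    """Fraction of responses that are 'fully agree' or 'partially agree'."""
    agreeing = sum(1 for r in responses if r in ("fully agree", "partially agree"))
    return agreeing / len(responses)


def likert_iqr(responses: List[str]) -> float:
    """Interquartile range (Q3 - Q1) of the coded responses.

    Uses the 'inclusive' quartile method of the standard library,
    which is only one of several possible conventions.
    """
    scores = sorted(LIKERT_SCORES[r] for r in responses)
    q1, _median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return q3 - q1


# Hypothetical answers to a single statement (not the study data).
sample = ["fully agree"] * 5 + ["partially agree"] * 3
share = agreement_share(sample)
spread = likert_iqr(sample)
```

A small IQR on the 1 to 5 scale indicates that the middle half of the answers falls within a narrow band of options.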

Figure 7. Graph with distribution of the users’ answers to the questions proposed during the evaluation experiment for the case study 1.

Figure 8. Graph with the IQR values of the users’ answers to the questions proposed during the evaluation experiment for the case study 1.

4.2 Case Study 2: Cat Liver

4.2.1 Subjects

Nine students in the final semester of the undergraduate course in veterinary medicine participated in the experiment, 4 men and 5 women. Their ages ranged from eighteen to twenty-four years, the majority being under twenty-two.

4.2.2 Procedure

The subjects were taken to a computer lab to be introduced to the AR-based visualizations developed and to interact with them. Initially, the functionality of the visualization was explained, presenting its screens and form of interaction.

As in the first validation experiment, subjects were then asked to start the visualization and, at the end, to respond to a post-test questionnaire composed of eleven questions and based on the Likert scale, with the following options: “fully agree”, “partially agree”, “undecided”, “partially disagree” and “fully disagree”. Subjects had to choose only one alternative for each question in order to express their experience of using the application.

The questionnaire had the following statements:

1) The use of AR motivates me to participate in animal anatomy classes.

2) Virtual and interactive 3D models help the study and understanding of anatomical models.

3) The ability to view and interact with 3D models over real scenes via mobile devices helps learning as it is not limited to using a PC/notebook or paper atlas.

4) The interaction with the 3D model is intuitive.

5) The time elapsed between application initialization and the initial display of the 3D model is satisfactory.

6) The response time of the application to the interaction with it is satisfactory.

7) I realize that the use of AR can be applied to other content related to my undergraduate course.

8) I recommend and approve the use of AR in my undergraduate course.

4.2.3 Results

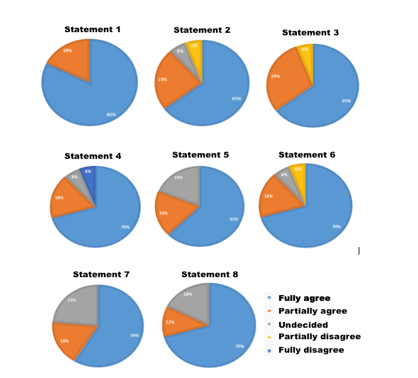

As can be seen in Figures 9 and 10 (IQR), most subjects (around 80%) agreed (“fully agree” and “partially agree”) with the statements.

Figure 9. Graph with distribution of the users’ answers to the questions proposed during the evaluation experiment for the case study 2.

Figure 10. Graph with the IQR values of the users’ answers to the questions proposed during the evaluation experiment for the case study 2.

Statements 2, 3 and 6 received some “partially disagree” answers, and statement 4 was the only one to receive a “fully disagree” answer. An analysis of the users’ profile shows that they have no experience with graphic and interactive applications, a fact that probably leads them to reject AR interaction as an active methodology to be adopted in the classroom.

Based on these results, it is observed that, in addition to the proposed criteria being met, the hypothesis raised was also confirmed at this first stage, although the sample of subjects needs to be varied and the validation time of the application in the classroom extended. In general, the users approved the application, and it can be used in the teaching of human and animal anatomy in order to assist students’ learning in an interactive and visual way.

However, new experiments, with different anatomical pieces, still have to be performed.

5. Conclusions

This work presented a study of an AR application aimed at assisting the teaching of anatomy, with the visualization of the human humerus bone and of a cat’s liver as case studies. During its development, it became clear that AR is a technology that adds value to the methods commonly employed in teaching anatomy in undergraduate Health courses, since it integrates virtual and real objects in order to contribute to a more realistic and interactive visualization of structures in the human body, as well as to knowledge discovery.

As pointed out by the users, the usability of the AR visualization, combined with its effectiveness, contributed to a positive evaluation and to their stated willingness to use it continuously in the classroom. The interface was considered easy to handle and understand, and the application enabled 3D visualization with an adequate degree of realism, in segmented form and with individual interaction, meeting the main requirements raised by the interviewees in the initial phase of this study.

Thus, it is envisaged that AR can bring a new approach to teaching anatomy, both for students and teachers, through its integration into mobile devices. This is a valid alternative to traditional methods of studying anatomy that employ cadavers, 2D paper atlases and mannequin models.

In future work, we intend to extend the application to holographic visualizations and to build simulations of interaction with anatomical parts in virtual environments, with support for VR glasses.

Conflict of Interest

The authors declare no conflict of interest.

Acknowledgment

We thank all the teachers and students who participated in this work, from the requirements gathering to the validation experiment.

References

[1] I. C. S. da Silva, G. Klein, D. M. Brandão, “Segmented and detailed visualization of anatomical structures for health education” in 35th International Conference of the Chilean Computer Science Society (SCCC), Chile, 2016.

[2] G. Nascimento, N. Robaina, B. Moreira, P. T. Mourão, A. Salgado, “A construção do corpo humano a partir de elementos químicos” in Brazilian Symposium on Games and Digital Entertainment, Rio de Janeiro, RJ, 2009.

[3] R. Sousa, F. S. C. Moita, A. Carvalho, Tecnologias digitais na educação, Editora da Universidade Federal da Paraíba, 2011.

[4] M. Prensky, Computer games and learning: Digital game-based learning, Mass: MIT Press, 2005.

[5] L. Johnson, S. Adams Becker, V. Estrada, A. Freeman, Horizon report 2014: Higher education edition, The New Media Consortium, 2014.

[6] T. G. Mendes, “Games e educação: diretrizes do projeto para jogos digitais voltados à aprendizagem”, Master Thesis, Universidade Federal do Rio Grande do Sul, Porto Alegre, 2012.

[7] M. Mazzarotto, A. L. Battaiola, “Uma visão experimental dos jogos de computador na educação: a relação entre motivação e melhora do raciocínio no processo de aprendizagem” in Brazilian Symposium on Games and Digital Entertainment, Rio de Janeiro, 2009.

[8] S. K. Card, J. D. Mackinlay, B. Shneiderman, Readings in information visualization: using vision to think, USA: Morgan Kaufmann Publishers Inc., 1999.

[9] R. Spence, Information Visualization: design for interaction, Prentice Hall, 2007.

[10] M. Ward, G. Grinstein, D. Keim, Interactive Data Visualization: Foundations, CRC Press, 2015.

[11] C. Ware, Information Visualization: Perception for Design, Morgan Kaufmann, 2004.

[12] C. Ware, Visual Thinking: for Design, Morgan Kaufmann, 2008.

[13] R. Mazza, Introduction to Information Visualization, Springer, 2009.

[14] R. Azuma, “A survey of augmented reality”, Presence: Teleoperators and Virtual Environments, 6(4), 355–385, 1997.

[15] C. H. Chien, C. H. Chien, T. S. Jeng, “An Interactive Augmented Reality System for Learning Anatomy Structure” in Proceedings of International MultiConference of Engineers and Computer Scientists, Hong Kong, 2010.

[16] R. F. Braz, “Método didático aplicado ao ensino da anatomia humana”, Anuário da produção acadêmica docente, 3(4), 303–310, 2009.

[17] E. P. D. Silva, K. L. Santos, P. D. S. Barros, T. N. Silva, J. L. Souza, A. F. d. S. Mariano, M. B. A. Palma, “Utilização de cadáveres no ensino de Anatomia Humana: refletindo nossas práticas e buscando soluções” in XIII Jornada de Ensino, Pesquisa e Extensão, Recife, 2013.

[18] E. Zhu, A. Hadadgar, I. Masiello, N. Zary, “Augmented reality in healthcare education: an integrative review”, PeerJ, 2(e469), 2014.

[19] W. Gomes, C. Kirner, “Desenvolvimento de Aplicações Educacionais na Medicina com Realidade Aumentada”, Bazar: Software e Conhecimento Livre, 1(1), 13–20, 2006.

[20] A. Safi, V. Castaneda, T. Lasser, N. Navab, “Skin Lesions Classification with Optical Spectroscopy”, Springer, 2010.

[21] T. R. Coles, N. W. John, D. A. Gould, D. G. Caldwell, “Integrating Haptics with Augmented Reality in a Femoral Palpation and Needle Insertion Training Simulation”, IEEE Transactions on Haptics, 4(3), 2011.

[22] J. Reitzin, “German Surgeon Successfully Uses iPad in Operating Room”, mHealthWatch, 2013.

[23] J. Bacca, S. Baldiris, R. Fabregat, S. Graf, Kinshuk, “Augmented Reality Trends in Education: A Systematic Review of Research and Applications”, Educational Technology & Society, 17(4), 133–149, 2014.

[24] A. Jenny, “Augmented Reality in Dental and Medical Education”, AR News, 2016.

[25] R. Parkes, N. Forrest, S. Baillie, “A Mixed Reality Simulator for Feline Abdominal Palpation Training in Veterinary Medicine”, Studies in Health Technology and Informatics, 142, 244–246, 2009.

[26] S. Lee, J. Lee, A. Lee, N. Park, S. Lee, S. Song, A. Seo, H. Lee, J. I. Kim, K. Eom, “Augmented reality intravenous injection simulator based 3D medical imaging for veterinary medicine”, Vet J., 196(2), 197–202, 2013.

[27] Veterinary Record, “Augmenting Reality in Anatomy”, News and Reports, 175:444, 2014.

[28] R. G. Thomas, N. W. John, J. M. Delieu, “Augmented reality for anatomical education”, Journal of Visual Communication in Medicine, 33(1), 6–15, 2010.