Gestural Interaction for Virtual Reality Environments through Data Gloves


Volume 2, Issue 3, Page No 284-290, 2017

Authors: G. Rodríguez a), N. Jofré, Y. Alvarado, J. Fernández, R. Guerrero


Laboratorio de Computación Gráfica (LCG), Universidad Nacional de San Luis, 5700, Argentina

a)Author to whom correspondence should be addressed. E-mail: gbrodriguez@unsl.edu.ar

Adv. Sci. Technol. Eng. Syst. J. 2(3), 284-290 (2017); DOI: 10.25046/aj020338


Keywords: Human Computer Interaction (HCI), Natural User Interface (NUI), Non-Verbal Communication (NVC), Virtual Reality (VR), Computer Graphics


In virtual environments, virtual hand interactions play a key role in interactivity and realism, allowing fine motions to be performed. Data gloves are widely used in Virtual Reality (VR): by simulating the natural anatomy of the human hand (the avatar's hands) in appearance and motion, it is possible to interact with the environment and its virtual objects. Hand gestures have recently come to be regarded as one of the most meaningful and expressive signals. Consequently, this paper explores the use of hand gestures as a means of Human-Computer Interaction (HCI) for VR applications through data gloves. Using a hand gesture recognition and tracking method, accurate, real-time interactive performance can be obtained. To verify the effectiveness and usability of the system, an ease-of-learning experiment based on execution time was performed. The experimental results show that this interaction approach poses no problems for people with more experience in using computer applications. People with only basic knowledge have some problems at first, but the system becomes easy to use with practice.

Received: 06 April 2017, Accepted: 20 April 2017, Published Online: 14 May 2017

1. Introduction

Natural User Interfaces (NUI) set a new HCI paradigm by exploiting the skills that human beings develop throughout their lives for social interaction. Although most social interaction among humans takes the form of conversation, there is a large subtext to any interaction that is not captured by a literal transcription of the words that are said. All of the factors that go beyond the verbal aspects of speech are called Non-Verbal Communication (NVC), which takes many forms, or modalities, such as facial expression; gaze (eye movements and pupil dilation); gestures and bodily movements; body posture; bodily contact; spatial behaviour; and non-verbal vocalizations, among others.

As a result of technological transition, VR has made it possible to overcome the limitations of physical tools by triggering the evolution of traditional devices into simpler and more intuitive ones, thus fostering the emergence of Natural User Interfaces (NUI). These enhance the natural impression of living in a simulated reality by improving the interaction between users and VR environments.

Social interaction is a key element of modern virtual environments. VR systems that enhance presence in social interactions must collect gestural, positional, sound and biometric user information. As a solution, most VR developers propose a NUI with virtual characters that simulate real human social interactions (personifying user and computer). Additionally, as a result of a physical and mental need in the user's interaction with computer games and applications, many games are designed to test the physical skills of their users, setting gestures as the most common means of establishing communication. In particular, studies in cognitive science state that hand gestures are the ones that best express key information.

When developing a NUI, two different approaches are usually taken: one based on tracking devices and one based on vision. In robotics, research focuses on controlling a war robot or drones in high-risk areas, or on remote-controlled surgery by tracking a robot hand. In medicine, virtual environments based on motion capture make it possible to quantify surgical performance objectively and to accelerate the acquisition of baseline surgical skills without risk to real patients. These approaches have also greatly aided research on sign language recognition, on training children to write and play instruments, on object control and on 3D modelling, among others. This paper presents a VR system built on NUI principles that simulates the gestures and animations of human hands based on the recognition of their movements, using a data glove as an input device.

2. Related Work

According to the literature, most VR and NUI related works try to increase the level of immersion by replacing classical devices (such as mouse, keyboard and joysticks) with new tracking devices or image processing [1, 2]. From these works, the following shortcomings have been identified [3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15]:

- The study of manipulation in VR environments remains a gap in vision-based works.

- Research on gesture recognition is mostly vision-based.

- Vision-based systems that perform gesture recognition are usually limited to a tracking zone, and also suffer from noise and high processing requirements.

- Regarding gesture recognition, the combination of two or more gestures into a new complex gesture for a complex task has not yet been achieved.

- Works based on data gloves are scarce; they study only the main VR primitives, and the existing works emphasise only some of them.

- In many of the mentioned works, the User Experience has not been analyzed.

The present work is an extension of a previous work originally presented in [16] covering the following topics:

First of all, there is a virtual character (avatar) whose behaviour correlates with that of the user. It is explicitly controlled by commands that the user specifies through a device acting as an external animator. This is the most common option, considering that VR applications based on NUI concepts and gesture interaction should provide certain facilities that make the interaction more comfortable for the user [17].

Secondly, during interaction through the avatar, each gesture is a control command associated with a process. This process tries to match the incoming data from the data glove with the modelled avatar [18, 19]. The avatar's virtual behaviour is controlled by the following standard primitives [20, 21]:

- Navigation: the displacement of the user in the virtual space and the “cognitive map” he/she builds of it.

- Selection: the action of the user pointing to an object.

- Manipulation: the modification of an object's state by the user.

- System control: the dialogue between the user and the application.

In this context, this work tries to address the gaps mentioned above. Therefore, in addition to developing an HCI environment, the system provides a natural way to interact freely in a scenario through a data glove. The system focuses mainly on the use of natural movements and gestural protocols (in HCI, a set of gestures that establishes communication between human and system) performed by hand [22, 23]. To show how beneficial this kind of interaction can be, it is worth performing a user experiment that shows the richness of the virtual experience. The next section presents the features offered by the system.

2.1 Natural User Interface based on Data Glove

The system uses a data glove (a textile glove with integrated sensors) as a gestural interface that allows real-time interaction in which the user communicates through an avatar. Consequently, following the multi-dimensional control tools mentioned above, and with the exception of the selection tool, the following basic features have been developed:

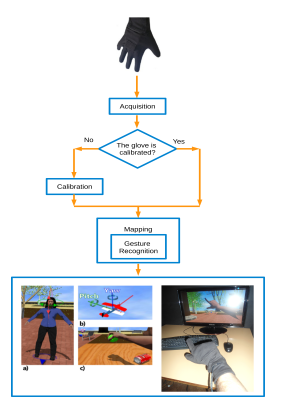

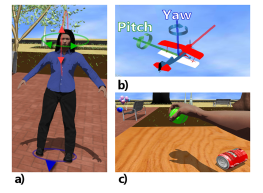

- Navigation. The best-known navigation options implemented by game and VR application developers are “walk”, “stop”, “run”, “jump” and changing between different views of the scenario, among others. This system implements “walk”, “stop” and change of view of the scenario (as shown in Figure 1 (a)). Each of them is associated with a set of gestures (a protocol) that performs the action. As an extra functionality, it is possible to remotely control a toy airplane included in the scenario (as shown in Figure 1 (b)). Since two different types of navigation are possible (avatar navigation and airplane navigation), a gestural protocol must be performed to swap between them.

- Manipulation. While interacting naturally in the scenario, a user also needs to perform handling actions in it. Basically, these actions consist of touching the virtual elements and grabbing them (as shown in Figure 1 (c)). Factors involved in implementing this functionality include physics treatment, proximity to the objects to be grabbed and the position of the avatar's hand.

- System Control. This control tool relates to the user-application dialogue. In this approach, the dialogue consists of controlling the system with simple and natural gestures. This feature is achieved through the navigation and manipulation tools.
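The swap between avatar navigation and airplane navigation can be pictured as a small state machine. The following is only a minimal sketch: the protocol names (`walk`, `stop`, `change_view`, `swap_navigation`), the class name and the decision to route the same actions to the airplane are assumptions made for illustration, not identifiers taken from the system.

```python
from dataclasses import dataclass

# Hypothetical target and protocol names; the paper does not list identifiers.
AVATAR, AIRPLANE = "avatar", "airplane"
NAVIGATION_PROTOCOLS = ("walk", "stop", "change_view")


@dataclass
class NavigationController:
    mode: str = AVATAR    # entity currently driven by navigation gestures
    moving: bool = False  # whether that entity is currently moving

    def handle(self, protocol: str):
        """Turn a recognised gestural protocol into a (target, action) pair."""
        if protocol == "swap_navigation":
            # Toggle the navigation target and stop any ongoing movement.
            self.mode = AIRPLANE if self.mode == AVATAR else AVATAR
            self.moving = False
            return (self.mode, "stop")
        if protocol in NAVIGATION_PROTOCOLS:
            if protocol == "walk":
                self.moving = True
            elif protocol == "stop":
                self.moving = False
            return (self.mode, protocol)
        # Protocols unrelated to navigation are left to other handlers.
        return (self.mode, "ignored")
```

With this structure, the same gesture produces a command for whichever target is active, so only the swap protocol needs to know that two navigation types exist.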

Figure 1: System features: a) Avatar change of view, b) Airplane navigation, c) Grasping at a work table.

As a consequence of the correlated behaviour between user and avatar, the user's hands and the avatar's hands should be visualised as synchronously as possible. Thus, the system implements the following feature:

Real-time Imitation. The avatar's hands mimic the user's hands through refined model management, physics treatment and rendering tuning (frame rates). Realistic visualisation of the replicated gestures requires a model representation of the hands and a mapping of the human hand's motion onto that model, which is not straightforward because human hands differ in size and in the joint structure of their bones. It must also be considered that if a movement is not feasible for the user, the avatar should not be able to perform it either.
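One simple way to keep the avatar from performing movements that a real hand cannot make is to clamp every joint angle to a feasible range before it is applied to the model. The sketch below assumes a pose expressed as a dictionary of joint angles in degrees; the joint names and limits are hypothetical placeholders, not values from the system.

```python
# Hypothetical flexion limits in degrees for three finger joints.
# Real limits depend on the hand model and on the individual user.
JOINT_LIMITS = {
    "mcp": (0.0, 90.0),
    "pip": (0.0, 110.0),
    "dip": (0.0, 80.0),
}


def clamp_pose(pose):
    """Return a copy of `pose` with each joint angle limited to its range."""
    clamped = {}
    for joint, angle in pose.items():
        low, high = JOINT_LIMITS[joint]
        clamped[joint] = max(low, min(high, angle))
    return clamped
```

For example, `clamp_pose({"mcp": 120.0, "pip": -5.0, "dip": 40.0})` leaves the `dip` angle untouched and pulls the other two back to the nearest limit.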

3. System Overview

For proper and efficient operation of the system, certain operating features must be considered (as shown in Figure 2). The input device used was an integrated-sensor textile glove, the DG5 Vhand Data Glove 2.0 distributed by DGTech Engineering Solutions [24, 25]. This device has five embedded bend sensors that accurately measure finger movements, while an embedded three-axis accelerometer senses both hand movements and hand orientation (roll and pitch).
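To illustrate why an accelerometer alone yields roll and pitch but not yaw, the sketch below applies the standard tilt-sensing formulas to a static reading, in which gravity is the only acceleration measured. The axis convention (z pointing out of the back of the hand) and the function name are assumptions; the glove's own software may report orientation differently.

```python
import math


def roll_pitch_from_accel(ax, ay, az):
    """Estimate roll and pitch in degrees from a static 3-axis reading.

    Assumes the hand is not accelerating, so the measured vector is gravity.
    Rotation about the gravity vector (yaw) cannot be observed this way.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)
```

A reading of `(0, 0, 1)` (hand flat) gives zero roll and pitch, while tilting the hand moves part of gravity onto the x or y axis and changes the corresponding angle.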

The interaction between user and system first requires direct acquisition by the sensors that capture the performer's actions. To use the system, the user must therefore go through a calibration process that yields greater sensitivity. To this end, the user's maximum and minimum finger flexion values with the glove must be established. These values are provided through gestural protocols that record the flexion limits, named “Extended Hand” and “Closed Hand”.
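The calibration step can be sketched as recording one raw reading per finger for each of the two protocols and then rescaling later readings to the interval [0, 1]. The class below is an illustrative assumption: the raw value range, the field names and the clipping behaviour are not taken from the glove's documentation.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Calibration:
    extended: List[float]  # raw readings captured during "Extended Hand"
    closed: List[float]    # raw readings captured during "Closed Hand"

    def normalise(self, raw: Sequence[float]) -> List[float]:
        """Rescale raw bend readings to 0.0 (extended) .. 1.0 (closed)."""
        result = []
        for value, low, high in zip(raw, self.extended, self.closed):
            span = high - low
            if span == 0:
                # Degenerate calibration for this finger: report no flexion.
                result.append(0.0)
                continue
            ratio = (value - low) / span
            # Clip readings that fall outside the calibrated limits.
            result.append(max(0.0, min(1.0, ratio)))
        return result
```

For instance, with limits of 100 (extended) and 900 (closed) on every finger, a raw reading of 500 normalises to 0.5, and readings beyond either limit are clipped.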

Thereafter, the calibrated bending, orientation and acceleration values are mapped to values within the avatar model domain (mapping); that is, they are matched to model values, producing a transition from the physically detected movement (real domain) to the movement of the avatar model (virtual domain).
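A minimal sketch of such a mapping, assuming that calibrated flexion arrives as values between 0 and 1 and that the avatar exposes one curl angle per finger plus wrist roll and pitch. The parameter names and the 0 to 90 degree curl range are hypothetical; a real hand rig would typically distribute flexion over several joints.

```python
# Hypothetical range of the avatar's per-finger curl parameter, in degrees.
AVATAR_CURL_RANGE = (0.0, 90.0)


def to_avatar_domain(flexion, roll_deg, pitch_deg):
    """Map calibrated glove values (real domain) to avatar parameters."""
    low, high = AVATAR_CURL_RANGE
    return {
        # Linear interpolation between the avatar's open and closed poses.
        "finger_curl": [low + f * (high - low) for f in flexion],
        # Orientation is passed through unchanged in this sketch.
        "wrist_roll": roll_deg,
        "wrist_pitch": pitch_deg,
    }
```

A half-flexed finger (0.5) therefore becomes a 45 degree curl under these assumed limits.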

Regarding gesture recognition, each gestural protocol is defined by a set of gestures, and each gesture consists of flexion degrees, orientation and acceleration values previously stored in a database. The gestural protocols developed include forward walking, backward walking, stop walking, revolver and grasp, among others. Finally, to generate a particular event such as “walk” in the scenario, the user must execute the forward walking or backward walking protocol. The system is responsible for controlling, monitoring and supervising all of the user's actions and requests in real time.
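The two-level scheme (gestures stored as reference values, protocols defined as sets of gestures) can be sketched with template matching followed by a sequence check. Everything below is an assumption for illustration: the templates use only normalised finger flexion (the stored gestures also include orientation and acceleration), and the gesture names, the tolerance and the composition of the `grasp` protocol are invented.

```python
from typing import Dict, List, Optional, Sequence

# Hypothetical gesture templates: one normalised flexion value per finger.
GESTURES: Dict[str, List[float]] = {
    "extended_hand": [0.0, 0.0, 0.0, 0.0, 0.0],
    "closed_hand": [1.0, 1.0, 1.0, 1.0, 1.0],
    "point": [1.0, 0.0, 1.0, 1.0, 1.0],
}

# Hypothetical protocol: an ordered sequence of gesture names.
PROTOCOLS: Dict[str, List[str]] = {
    "grasp": ["extended_hand", "closed_hand"],
}


def recognise_gesture(flexion: Sequence[float], tolerance: float = 0.2) -> Optional[str]:
    """Return the closest stored gesture, or None if none is within tolerance."""
    best_name, best_error = None, tolerance
    for name, template in GESTURES.items():
        # Worst-case deviation over the five fingers.
        error = max(abs(a - b) for a, b in zip(flexion, template))
        if error <= best_error:
            best_name, best_error = name, error
    return best_name


def match_protocol(history: Sequence[str]) -> Optional[str]:
    """Return the protocol whose gesture sequence ends the recent history."""
    for name, sequence in PROTOCOLS.items():
        if len(history) >= len(sequence) and list(history[-len(sequence):]) == sequence:
            return name
    return None
```

In use, each incoming sample would be passed to `recognise_gesture`, the recognised names appended to a short history, and `match_protocol` called on that history to decide whether an event should be triggered.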

4. Evaluation and Results

McNamara and Kirakowski proposed a model of factors for understanding the interactions between humans and technology: User Experience (UX) and Usability [26]. UX can be summarised as every aspect of the interaction between a user and a product. According to the well-known usability expert Jakob Nielsen, usability is a quality attribute that assesses how easy user interfaces are to use [27, 28]. He also states that usability has five attributes: ease of learnability, memorability, efficiency, error rate and user satisfaction.

In addition, there are two types of method for evaluating an HCI experiment: qualitative and quantitative.

Since gestures allow predefined actions to be executed very quickly and naturally, it is interesting to develop a test based on the time spent executing the main actions in the virtual world. Accordingly, an ease-of-learnability test was designed to measure the quality of the NUI system. Basically, the test analyzes how well the user employs the interface to reach a specific point in the scenario and how well he/she manages to manipulate or modify the elements on a virtual work table (see Figure 1(c)).

4.1 Evaluation Test Design

An evaluation test was applied to a group of thirty participants between fifteen and thirty-nine years old. Before the test, the system's goal and the implemented gestural protocols were explained to each participant. Based on the answers collected from a prior questionnaire about computer experience, participants were divided into two subgroups: Basic and Intermediate-to-Advanced users.

There were fifteen participants in each subgroup. People who use a cellphone only as a means of communication and a computer only for their work were gathered into the basic experience group (Group 1). The intermediate-to-advanced experience group (Group 2) gathered digital natives (people who have grown up using technology such as the Internet, computers and mobile devices). This group included girls and boys who have played video games.

During the experiment, each participant had to execute two proposed tasks (ten repetitions per task). Each task was timed, so that the time needed to accomplish the challenge could be evaluated. The following tasks were evaluated:

- Task 1 (Navigation feature): Starting at a specified point in the scenario, the user must move to another specified point using the defined gestural commands.

- Task 2 (Manipulation feature): The goal is to reach the virtual work table, grasp an object and move it to another place on the table.

4.2 Tasks Performances

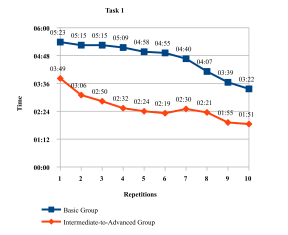

Figure 3 shows the average time recorded in each repetition of task 1 for both evaluated groups. The curves behave similarly but start from different initial average times (first repetition). In the basic group, the average time remained approximately constant across repetitions, showing that the gain in ease of execution was minimal; the largest local progress occurred in the seventh repetition. In the intermediate-to-advanced group, the execution time decreased progressively as the repetitions increased, but in the sixth, seventh and eighth repetitions there were certain regressions that are due to users' attempts to improve their execution times (it might be because of Murphy's law).

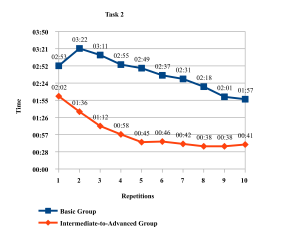

Similarly, Figure 4 shows the recorded times for the execution of task 2. Since in both cases the average time decreased significantly as the repetitions increased, it reveals an implicit incremental curve of ease of learnability. In the basic group, except for the first and second repetitions, the execution time decreased progressively in each repetition. In the intermediate-to-advanced group, great progress was made in the first repetitions, whereas in the last repetitions the progression was constant.

Treating each step of the curve as a line segment, it is possible to obtain a gradient. Table 1 and Table 2 show all the gradients for each group in each task. When a gradient is zero, there is no variation in execution time, so there is neither progress nor regression in ease; when a gradient is below zero, execution time was reduced, so there is progress in ease; and when a gradient is above zero, execution time increased, so there is a regression in ease.
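Since consecutive repetitions are one step apart, each gradient reduces to the difference between two neighbouring average times. The sketch below shows that computation and the sign-based reading described above; the function names and the sample times in the usage note are made up for illustration and are not data from the experiment.

```python
def gradients(avg_times):
    """Differences between consecutive average times (one per step)."""
    return [later - earlier for earlier, later in zip(avg_times, avg_times[1:])]


def classify(gradient):
    """Interpret the sign of a gradient in terms of ease of learnability."""
    if gradient < 0:
        return "progress"
    if gradient > 0:
        return "regression"
    return "no change"
```

For example, illustrative averages of 60, 52, 52 and 55 seconds produce three gradients, one negative, one zero and one positive, which are read as progress, no change and regression respectively. Ten repetitions always yield nine gradients, which matches the number of rows in Tables 1 and 2.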

| Gradient (seconds), Group 1 | Gradient (seconds), Group 2 |
| --- | --- |
| -00:08 | -00:43 |
| -00:00 | -00:16 |
| -00:06 | -00:18 |
| -00:11 | -00:08 |
| -00:03 | -00:05 |
| -00:15 | 00:11 |
| -00:33 | -00:09 |
| -00:28 | -00:26 |
| -00:17 | -00:04 |

Table 1: Gradient between repetitions for task 1

| Gradient (seconds), Group 1 | Gradient (seconds), Group 2 |
| --- | --- |
| 00:29 | -00:26 |
| -00:11 | -00:24 |
| -00:16 | -00:14 |
| -00:06 | -00:13 |
| -00:12 | 00:01 |
| -00:06 | -00:04 |
| -00:13 | -00:04 |
| -00:17 | 00:00 |
| -00:04 | 00:03 |

Table 2: Gradient between repetitions for task 2

| Group 1: average in seconds (standard deviation) | Group 2: average in seconds (standard deviation) |
| --- | --- |
| -13.4 (11.1) | -13.1 (15.2) |

Table 3: Average and Standard Deviation of gradients for task 1

| Group 1: average in seconds (standard deviation) | Group 2: average in seconds (standard deviation) |
| --- | --- |
| -6.2 (13.9) | -9 (10.7) |

Table 4: Average and Standard Deviation of gradients for task 2

According to these statements, the intermediate-to-advanced group shows a faster-growing ease of learnability than the basic group, as was expected; the average and standard deviation of the gradients (Tables 3 and 4) support this statement.
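The summary values can be recomputed from the gradients listed in Tables 1 and 2. The sketch below assumes that the table entries are signed `mm:ss` strings and that the reported deviation is the sample standard deviation (n - 1 in the denominator); under those assumptions the results agree with Tables 3 and 4 to within about 0.1 s. The helper names are illustrative.

```python
from statistics import mean, stdev


def to_seconds(text):
    """Convert a signed 'mm:ss' string such as '-00:08' to seconds."""
    sign = -1 if text.startswith("-") else 1
    minutes, seconds = text.lstrip("-").split(":")
    return sign * (int(minutes) * 60 + int(seconds))


# Gradients copied from Table 1 (task 1) and Table 2 (task 2).
TASK1 = {
    "Group 1": ["-00:08", "-00:00", "-00:06", "-00:11", "-00:03",
                "-00:15", "-00:33", "-00:28", "-00:17"],
    "Group 2": ["-00:43", "-00:16", "-00:18", "-00:08", "-00:05",
                "00:11", "-00:09", "-00:26", "-00:04"],
}
TASK2 = {
    "Group 1": ["00:29", "-00:11", "-00:16", "-00:06", "-00:12",
                "-00:06", "-00:13", "-00:17", "-00:04"],
    "Group 2": ["-00:26", "-00:24", "-00:14", "-00:13", "00:01",
                "-00:04", "-00:04", "00:00", "00:03"],
}


def summarise(values):
    """Return (mean, sample standard deviation) of the gradients in seconds."""
    seconds = [to_seconds(v) for v in values]
    return mean(seconds), stdev(seconds)


if __name__ == "__main__":
    for label, table in (("Task 1", TASK1), ("Task 2", TASK2)):
        for group, values in table.items():
            avg, sd = summarise(values)
            print(f"{label} {group}: {avg:.1f} ({sd:.1f})")
```

Recomputing the figures this way also makes it easy to compare the two groups per task on the same footing as the tables.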

These results show that when user experience is basic, execution time tends to remain constant up to a specific number of repetitions, after which the gradients decrease exponentially, showing greater ease of learnability.

5. Conclusion and Future Work

In HCI, virtual worlds are developed for users to interact with a computer, and one way to provide this is through the development of avatars, an efficient, realistic and immersive alternative.

The process of designing 3D human-correlated avatars must consider verbal and non-verbal communication. Just as non-verbal behaviour appears to be a primary factor in human-human interaction, it is also considered for human-computer interaction.

In particular, gestures are the first tools of human communication. Therefore, with the intention of harnessing and enhancing natural human abilities, there are currently works that add user technology to implement Natural User Interfaces in multi-VR media systems [29].

This work focuses on the use of hands as the medium of interaction. The development is based on the insertion of appealing devices such as data gloves into a virtual world. The research mostly addresses real-time hand movement and gesture recognition, providing the following features: hand tracking, navigation in the scenario and object manipulation. For each feature, gestures play an important role; they should be easy to learn and should not require any special attention from the user.

The preliminary user experiments presented here evaluate usability, in particular the ease of learnability based on execution time, with quantitative results. From the graphs it can be inferred that experienced users have no problems adapting to new interface paradigms, while basic users show notable drawbacks up to a threshold (60% of the repetitions). It will be a challenge to lower this threshold by reducing the system's shortcomings. Nevertheless, computer experience and previous practice should not determine the system's simplicity and comfort.

Since some aspects of the system are still in beta, future work will be oriented towards improving environment issues such as the hardware platform. We consider that the system should provide the necessary multi-VR media and multi-platform structure, and optimise the handling of 3D objects in the scenario by using new physics libraries or reprogramming existing ones.

Gestural communication in virtual environments continues to be a major challenge, but we think that we are on the path to new and interesting research.

References

[1] Daniel Wigdor and Dennis Wixon. Brave NUI World: Designing Natural User Interfaces for Touch and Gesture. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, 1st edition, 2011.

[2] James J. Park, Han-Chieh Chao, Hamid R. Arabnia, and Neil Y. Yen. Advanced Multimedia and Ubiquitous Engineering: Future Information Technology – Volume 2. Springer Publishing Company, Incorporated, 1st edition, 2015.

[3] G. Tofighi, H. Gu, and K. Raahemifar. Vision-based engagement detection in virtual reality. In 2016 Digital Media Industry Academic Forum (DMIAF), pages 202–206, July 2016.

[4] David Demirdjian, Teresa Ko, and Trevor Darrell. Untethered gesture acquisition and recognition for virtual world manipulation. Virtual Reality, 8(4):222–230, 2005.

[5] H. Cheng, L. Yang, and Z. Liu. Survey on 3D hand gesture recognition. IEEE Transactions on Circuits and Systems for Video Technology, 26(9):1659–1673, Sept 2016.

[6] Siddharth S. Rautaray and Anupam Agrawal. Vision based hand gesture recognition for human computer interaction: A survey. Artif. Intell. Rev., 43(1):1–54, January 2015.

[7] Rick Kjeldsen and Jacob Hartman. Design issues for vision-based computer interaction systems. In Proceedings of the 2001 Workshop on Perceptive User Interfaces, pages 1–8. ACM, 2001.

[8] Frol Periverzov and Horea T. Ilieş. 3D imaging for hand gesture recognition: Exploring the software-hardware interaction of current technologies. 3D Research, 3(3):1, 2012.

[9] Piyush Kumar, Jyoti Verma, and Shitala Prasad. Hand data glove: a wearable real-time device for human-computer interaction. International Journal of Advanced Science and Technology, 43, 2012.

[10] Shitala Prasad, Piyush Kumar, and Kumari Priyanka Sinha. A wireless dynamic gesture user interface for HCI using hand data glove. In Contemporary Computing (IC3), 2014 Seventh International Conference on, pages 62–67. IEEE, 2014.

[11] Nik Isrozaidi Nik Ismail and Masaki Oshita. Motion selection and motion parameter control using data gloves. In Games Innovation Conference (IGIC), 2011 IEEE International, pages 113–114. IEEE, 2011.

[12] Xiaowen Yang, Qingde Li, Xie Han, and Zhichun Zhang. Person-specific hand reconstruction for natural HCI. In Automation and Computing (ICAC), 2016 22nd International Conference on, pages 102–107. IEEE, 2016.

[13] Shitala Prasad, Piyush Kumar, and Kumari Priyanka Sinha. A wireless dynamic gesture user interface for HCI using hand data glove. In Contemporary Computing (IC3), 2014 Seventh International Conference on, pages 62–67. IEEE, 2014.

[14] Inrak Choi and Sean Follmer. Wolverine: A wearable haptic interface for grasping in VR. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, UIST ’16 Adjunct, pages 117–119, New York, NY, USA, 2016. ACM.

[15] Farid Parvini, Dennis McLeod, Cyrus Shahabi, Bahareh Navai, Baharak Zali, and Shahram Ghandeharizadeh. An approach to glove-based gesture recognition. In Proceedings of the 13th International Conference on Human-Computer Interaction. Part II: Novel Interaction Methods and Techniques, pages 236–245, Berlin, Heidelberg, 2009. Springer-Verlag.

[16] L. N. Jofre, G. B. Rodríguez, Y. M. Alvarado, J. M. Fernández, and R. A. Guerrero. Non-verbal communication interface using a data glove. In 2016 IEEE Congreso Argentino de Ciencias de la Informática y Desarrollos de Investigación (CACIDI), pages 1–6, Nov 2016.

[17] Konstantinos Cornelis Apostolakis and Petros Daras. Natural User Interfaces for Virtual Character Full Body and Facial Animation in Immersive Virtual Worlds, pages 371–383. Springer International Publishing, Cham, 2015.

[18] Yikai Fang, Kongqiao Wang, Jian Cheng, and Hanqing Lu. A real-time hand gesture recognition method. In 2007 IEEE International Conference on Multimedia and Expo, pages 995–998. IEEE, 2007.

[19] M. A. Moni and A. B. M. Shawkat Ali. HMM based hand gesture recognition: A review on techniques and approaches. In Computer Science and Information Technology, 2009. ICCSIT 2009. 2nd IEEE International Conference on, pages 433–437. IEEE, 2009.

[20] William R. Sherman and Alan B. Craig. Understanding Virtual Reality: Interface, Application, and Design. Elsevier, 2002.

[21] Marcio S. Pinho, Doug A. Bowman, and Carla M. Freitas. Cooperative object manipulation in collaborative virtual environments. Journal of the Brazilian Computer Society, 14(2):54–67, 2008.

[22] Prashan Premaratne. Human Computer Interaction Using Hand Gestures. Springer, Singapore, 2014.

[23] Mohamed Alsheakhali, Ahmed Skaik, Mohammed Aldahdouh, and Mahmoud Alhelou. Hand gesture recognition system. Information & Communication Systems, 132, 2011.

[24] L. C. Pereira, R. V. Aroca, and R. R. Dantas. FlexDGlove: A low cost dataglove with virtual hand simulator for virtual reality applications. In 2013 XV Symposium on Virtual and Augmented Reality, pages 260–263, May 2013.

[25] H. Teleb and G. Chang. Data glove integration with 3D virtual environments. In 2012 International Conference on Systems and Informatics (ICSAI2012), pages 107–112, May 2012.

[26] Niamh McNamara and Jurek Kirakowski. Functionality, usability, and user experience: Three areas of concern. interactions, 13(6):26–28, November 2006.

[27] Regina Bernhaupt and Florian Floyd Mueller. Game user experience evaluation. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, pages 940–943. ACM, 2016.

[28] Ali Türkyılmaz, Simge Kantar, M. Enis Bulak, and Ozgur Uysal. User experience design: Aesthetics or functionality? pages 559–565. ToKnowPress, 2015.

[29] Y. Alvarado, N. Moyano, D. Quiroga, J. Fernández, and R. Guerrero. Augmented Virtual Realities for Social Developments. Experiences between Europe and Latin America, chapter A Virtual Reality Computing Platform for Real Time 3D Visualization, pages 214–231. Universidad de Belgrano, 2014.