A Reliable, Non-Invasive Approach to Data Center Monitoring and Management

Volume 2, Issue 3, Page No 1577-1584, 2017

Authors: Moises Levy1,a), Jason O. Hallstrom2

1Department of Computer & Electrical Engineering and Computer Science, Florida Atlantic University, 33431, FL, USA

2 Institute for Sensing and Embedded Network Systems Engineering, Florida Atlantic University, 33431, FL, USA

a)Author to whom correspondence should be addressed. E-mail: mlevy2015@fau.edu

Adv. Sci. Technol. Eng. Syst. J. 2(3), 1577-1584 (2017); DOI: 10.25046/aj0203196

Keywords: Data centers, DCIM, Infrastructure Monitoring, Management, Performance, Efficiency, Internet Of Things, Control Systems, Wireless Sensors, Sensing

Recent standards, legislation, and best practices point to data center infrastructure management systems to control and monitor data center performance. This work presents an innovative approach to address some of the challenges that currently hinder data center management. It explains how monitoring and management systems should be envisioned and implemented. Key parameters associated with data center infrastructure and information technology equipment can be monitored in real-time across an entire facility using low-cost, low-power wireless sensors. Given the data centers’ mission critical nature, the system must be reliable and deployable through a non-invasive process. The need for the monitoring system is also presented through a feedback control systems perspective, which allows higher levels of automation. The data center monitoring and management system enables data gathering, analysis, and decision-making to improve performance, and to enhance asset utilization.

Received: 30 May 2017, Accepted: 09 August 2017, Published Online: 21 August 2017

1. Introduction

There is significant room for improvement in current practices that address data center performance relative to benchmarks. A novel data center infrastructure monitoring approach was introduced in a paper originally presented at the 7th IEEE Annual Computing and Communication Workshop and Conference (CCWC) in 2017 [1], for which this work is an extension.

A data center is defined as a facility with all the resources required for storage and processing of digital information, and its support areas. Data centers comprise the required infrastructure (e.g., power distribution, environmental control systems, telecommunications, automation, security, fire protection) and information technology equipment (e.g., servers, data storage, and network/communication equipment). Data centers are complex and highly dynamic. New equipment may be added, existing equipment may be upgraded, obsolete equipment may be removed, and both prior and current generation systems may be in use simultaneously. Increasing reliance on digital information makes data centers indispensable for assuring data is permanently available and securely stored.

Data centers can consume 40 times more energy than conventional office buildings. IT equipment by itself can consume 1,100 W/m² [2], and its high concentration in data centers results in higher power densities. By 2014, there were around 3 million data centers in the U.S., or one data center for every 100 people [3]. These data centers consumed approximately 70 billion kWh, or 1.8% of total U.S. electricity consumption [4]. Data center energy consumption increased by nearly 24% from 2005 to 2010 and by 4% from 2010 to 2014, and it is projected to grow by 4% from 2014 to 2020. In 2020, U.S. data centers are projected to consume about 73 billion kWh [4]. Emerging technologies and other energy management strategies may reduce projected energy demand.

Legislation, standards, best practices, and user needs require data centers to satisfy stringent technical requirements that guarantee reliability, availability, and security, all of which directly affect asset utilization and data center performance. To comply with these requirements, equipment needs continuous monitoring, control, and maintenance to ensure it is working properly. A data center infrastructure management (DCIM) system can be summarized as a suite of tools that monitor, manage, and control data center assets [6].

Technical issues and human errors may threaten a data center. Power and environmental issues are especially important and may affect performance. Human errors can be reduced through monitoring, automation, and control systems. When problems arise, data centers may shut down for a period of time. The cost of downtime depends on the industry and can reach thousands of dollars per minute. The average cost of an unplanned data center outage is approximately $9,000 per minute, an increase of 60% from 2010 to 2016 [5]. Intangible losses, such as business confidence and reputation, are difficult to quantify.

The emerging Internet of Things (IoT) makes it possible to design and implement a low-cost, non-invasive, real-time monitoring and management system. IoT can be understood as a global infrastructure enabling the interconnection of physical and virtual “things” [7]. Gartner forecasts more than 20 billion connected things by 2020 [8]. According to Cisco, 50 billion devices will be connected to the internet by 2020, and 500 billion by 2030 [9]. Each device includes sensors collecting and transmitting data over a network. These new trends help to reevaluate how to monitor and manage data center infrastructure and IT equipment. IoT makes it possible for a data center monitoring and management system to be deployed rapidly through a non-invasive process, helping to establish an integrated strategy towards performance improvement, while lowering risk.

2. Background

Since the deregulation of telecommunications in the U.S. (Telecommunications Act of 1996), a number of standards and best practices related to telecommunications, and more recently to the data center industry, have emerged. Appropriate standards are essential in designing and building data centers, including national codes (required), local and country codes (required), and performance standards (optional). Governments have enforced regulations on data centers depending on the nature of the business. Various entities have also contributed standards, best practices, and recommendations for the industry. These organizations include: U.S. Department of Energy; U.S. Environmental Protection Agency; U.S. Green Building Council; European Commission; Japan Data Center Council; Japan’s Green IT Promotion Council; International Organization for Standardization; International Electrotechnical Commission; American National Standards Institute; National Institute of Standards and Technology; the Institute of Electrical and Electronics Engineers; National Fire Protection Association; Telecommunication Industry Association; Building Industry Consulting Service International; American Society of Heating, Refrigerating and Air-Conditioning Engineers; the Green Grid; and the Uptime Institute.

Recent standards for data centers make general recommendations that should be taken into consideration for data center infrastructure management. The ANSI/BICSI 002 standard “Data Center Design and Implementation Best Practices” [10] provides a chapter for DCIM with general recommendations for components, communication protocols, media, hardware, and reporting. The ISO/IEC 18598 standard “Information Technology – Automated Infrastructure Management (AIM) Systems – Requirements, Data Exchange and Applications” [11] includes a section on DCIM. Intelligent Infrastructure Management systems comprise hardware and software used to manage structured cabling systems based on the insertion or removal of a patch (or equipment) cord. This standard should increase operational efficiency of workflows, processes, and management of physical networks, helping to provide real-time information related to connectivity from cabling infrastructure (e.g., switches, patch panels).

Legislators have been increasingly concerned about how data centers perform. Federal data centers alone account for about 10% of total U.S. data center energy consumption. The Energy Efficiency Improvement Act of 2014 (H.R. 2126) [12] requires federal data centers to review energy efficiency strategies, with the ultimate goal of reducing power consumption and increasing efficiency and utilization. Likewise, in 2016, the Data Center Optimization Initiative required federal data centers to reduce their power usage below a specified threshold by September 2018, unless they are scheduled to be shut down as part of the Federal Data Center Consolidation Initiative. To help data centers comply with legislation faster and more effectively, manual collection and reporting must be eliminated by the end of 2018. Automated infrastructure management tools must be used instead, and must address different metric targets, such as energy metering, power usage effectiveness, virtualization, server utilization, and facility monitoring [13]. The U.S. Data Center Usage Report of June 2016 [4] highlights the need for future research on performance metrics that better capture efficiency. Most recently, the Energy Efficient Government Technology Act (H.R. 306) [14] of 2017 required the implementation of energy efficient technology in federal data centers, including advanced metering infrastructure, energy efficient strategies, asset optimization strategies, and development of new metrics covering infrastructure and IT equipment.

Data center infrastructure management systems have been driven mainly by private industry. Previous experience in building automation systems, building management systems, energy management systems, facilities management systems, and energy management and control systems has enabled private industry to deploy similar solutions for the data center sector. However, these solutions were not explicitly designed for data centers. The leading companies that offer data center infrastructure management tools include Nlyte Software, Schneider Electric, and Emerson Network Power [15]. In addition, there are several reports from private industry with general guidelines and recommendations [16-24].

Academic work in data center infrastructure management includes research for specific areas, such as energy management systems [25]; distribution control systems [26]; modeling and control [27]; power efficiency [28-29]; power consumption models [30-31]; power management [32]; data center networks [33]; wireless network optimization [34-36]; thermal and computational fluid dynamics models [37-38]; wireless sensors for environmental monitoring [39]; power monitoring [40]; corrosion detection [41]; and improvement strategies, such as big data [42], genetic algorithms [43], and machine learning [44]. No previous work presents guidelines to develop a comprehensive data center monitoring and management system, considering recent advances in technology and the mission critical nature of this highly dynamic sector.

3. Data Center Monitoring and Management

3.1. Concept of Operations

This section describes quantitative and qualitative characteristics and objectives of the data center monitoring and management system. The system is envisioned to be reliable, non-invasive, wireless, sensor-based, low-cost, and low-power. It provides real-time measurements that enable the creation of a real-time data map for each parameter. It measures power usage across equipment, environmental and motion parameters across the physical space of the data center, and IT resource usage. This underscores the need for developing innovative approaches to reliable and non-invasive real-time monitoring and management.

Data center performance analysis requires understanding of facilities and IT equipment. Table 1 outlines the main components of a data center, categorized by power delivery and power consumption (e.g., IT equipment, environmental control systems, and other).

Table 1: Components of a data center

| Component | Description |
| --- | --- |
| Power delivery systems | Different sources of energy; generators; switch gear; automatic transfer switches; electrical panels; UPS systems; power distribution units; remote power panels; distribution losses external to IT equipment |
| IT equipment | Servers; storage systems; network / communication equipment |
| Environmental control systems | Chillers; cooling towers; pumps; ventilation; computer room air handling units; computer room air conditioning units; direct expansion air handler units |
| Other | Structured cabling systems; automation systems; lighting; security; fire systems |

Real-time data collection can add value in several areas, including data center infrastructure control and management; performance optimization; enhancement of availability, reliability and business continuity [45]; reduction of total cost of ownership [46]; optimization of asset usage; and predictive analytics. A real-time monitoring and management system can highlight areas to reduce energy consumption, increase efficiency, improve productivity, characterize sustainability, and boost operations. The practice may also help to avoid or defer expensive facility expansion or relocation, via optimization of the current facility.

At least two classes of sensing devices are needed for data gathering: power sensing devices and environmental / motion sensing devices. For power sensing, we focus on non-invasive methods. Environmental data such as temperature, humidity, airflow, differential air pressure, lighting, moisture, motion, vibration, and cabinet door closure measurements are also required. Table 2 summarizes the requirements for these sensing devices.

Table 2: Requirements for sensing devices

| Requirement | Description |
| --- | --- |
| Reliability | Due to the dynamic nature of data centers, the equipment shall be reliable and easy to install, relocate, or replace. |
| Wireless | Wireless data transmission using a radio transceiver reduces the use of cables in the data center. To avoid interference with existing equipment in the data center, wireless monitoring devices should have low-power transmissions, low data transmission rates, and non-overlapping frequency ranges [47]. |
| Real-time | Sensing data in real-time helps to build situational awareness tools, such as reports and dashboards, which lead to immediate and more informed actions, reducing downtime and operating expenses. |
| Low-power | Low-power sensing devices enable an extended lifecycle before replacing batteries, reducing maintenance. |
| Battery operated | Battery operated devices eliminate the risk of connecting devices to an outlet, which could conflict with critical loads. |
| Low-cost | Low-cost microcontrollers and sensors make monitoring technologies available to all data centers. |

Obtaining real-time data can be challenging, especially in existing data centers that lack adequate instrumentation [48]. Several factors must be considered when choosing a sampling rate. If the sampling rate is too high, excessive data could be collected. Conversely, if the sampling rate is too low, relevant data for real-time monitoring and prediction may be lost. Collecting the right data and understanding its nature is more important than simply collecting more data [45]. We must ensure that required information is not lost. In many cases, new data may be required; the system should be flexible, allowing addition of new sensors and retrieval of additional data from equipment. Once the required data has been sensed and gathered, it must be stored, locally or remotely, for real-time processing and future analysis. Data analysis leads to more informed decisions.
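
The sampling trade-off can be made concrete with a rough volume estimate. The sketch below is illustrative only: the sensor count, the 16-byte reading size, and the candidate intervals are hypothetical values, not figures from this work.

```python
def samples_per_day(interval_s: float) -> int:
    """Number of readings one sensor produces in 24 hours at a fixed interval."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return int(86_400 // interval_s)


def daily_volume_bytes(n_sensors: int, interval_s: float, bytes_per_sample: int) -> int:
    """Raw payload generated per day by n_sensors reporting at the same interval."""
    return n_sensors * samples_per_day(interval_s) * bytes_per_sample


# Hypothetical deployment: 1,000 sensors, 16 bytes per reading.
for interval in (1, 10, 60, 300):
    megabytes = daily_volume_bytes(1_000, interval, 16) / 1e6
    print(f"{interval:>4} s interval -> {megabytes:,.1f} MB/day")
```

Comparing the rows shows how quickly storage and transmission load grow as the interval shrinks, which is one input to choosing a per-parameter sampling rate.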

3.2. Data Collection and Transmission

For data collection, different classes of sensing devices are required. It is also necessary to gather IT equipment resource utilization data through processor measurements.

Power Measurements:

For power consumption measurements, all data center IT equipment and its supporting infrastructure must be considered. This task could be accomplished in many ways, but we are interested in reliable and non-invasive methods. Most data center components, such as uninterruptible power supplies, power distribution units, remote power panels, electrical panels, and cooling systems offer real-time data through existing communication interfaces. To avoid additional cabling, the use of wireless transmission must be the first option.

A standard protocol, such as the Simple Network Management Protocol (SNMP) over TCP/IP, should be used to access data on remote devices. In addition, appropriate security measures must be implemented, since the data retrieval feature could create a security vulnerability. For equipment without a communication interface, one option is to install a dedicated power meter with the required communication interface. Because such a meter is an additive component that is applicable across different device types, it reduces operational risk. Another alternative is to replace existing equipment with new units that include metering and communication interfaces.

Software developed for data collection must gather and filter data collected from sensing devices and transmit the data to one or more storage devices. All monitored devices must be evaluated to define the required parameters and sampling rates.
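
One possible filtering step is a deadband filter, which forwards a reading only when it differs enough from the last forwarded value. This is a minimal sketch of one strategy, not the filtering method used in this work; the `deadband_filter` name, the tuple layout, and the 0.5 threshold are assumptions made for illustration.

```python
from typing import Iterable, Iterator, Tuple

Reading = Tuple[float, float]  # (timestamp in seconds, measured value)


def deadband_filter(readings: Iterable[Reading], threshold: float) -> Iterator[Reading]:
    """Yield the first reading, then only readings whose value moved by more
    than `threshold` from the last forwarded value."""
    last = None
    for timestamp, value in readings:
        if last is None or abs(value - last) > threshold:
            last = value
            yield timestamp, value


# Hypothetical temperature samples taken every 10 seconds.
raw = [(0, 22.0), (10, 22.1), (20, 22.2), (30, 23.0), (40, 23.1), (50, 21.5)]
kept = list(deadband_filter(raw, threshold=0.5))
print(kept)
```

A filter like this reduces transmitted data for slowly changing parameters, at the cost of losing small fluctuations, so the threshold would need to be chosen per parameter.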

Environmental / Motion Measurements:

The described system measures environmental and motion parameters across the physical space of the data center. Equipment cabinets, and various measurement points at different heights must be considered to capture relevant environmental variables. Different areas without equipment, such as below the raised floor, the aisles, and other points, must also be taken into consideration.

Table 3: Parameters to be monitored

| Parameter | Description | Sensing |
| --- | --- | --- |
| Temperature | Acceptable range required by equipment, and to optimize cooling systems and enhance efficiency. | Across the data center. In each IT cabinet: front of the cabinet (bottom, middle, and top) may be sensed for cold air inlet, and back or top for exhaust. |
| Humidity | Acceptable range required to reduce electrostatic discharge problems (if humidity is low), and condensation problems (if humidity is high). | Across the data center. In each IT cabinet: in the middle of the air inlet. |
| Airflow | Equipment and infrastructure require airflow, as specified. | Below the raised floor. Above perforated tiles. |
| Differential air pressure | Differential air pressure analysis could help to optimize airflow and cooling systems. If differential pressure between two areas is low or high, it indicates the airflow rate is also low or high. | Across the data center. Sensors are installed between two areas with varying air pressure (e.g., below and above the raised floor, inside and outside air plenum areas, between hot and cold aisles). |
| Water | Water sensing is required to prevent failures from cooling equipment, pipes, or external sources. | Near cooling system, water pipes, equipment, or potential risk areas. |
| Vibration | In case of earthquakes or excessive vibration, vibration sensors can trigger appropriate alerts. The sensors could also detect unauthorized installation, removal, or movement of equipment. | In each cabinet. |
| Lighting | Different levels of lighting according to human occupancy or security requirements. | Across the data center. |
| Security | Motion sensors could detect movement around cabinets. Contact closure sensors to enhance security systems. Airflow, temperature, and humidity in equipment could be affected if a cabinet door is opened, or if people are around the perforated tiles. Contact closures for access doors may be checked and tied to temperature variations nearby. | Door contact closures for cabinets and access doors, motion sensors, and surveillance cameras located nearby. |
| Fire systems | Fire alarms must be considered. | Data from fire system. |

Each sensed parameter covers a specific range, and the range varies depending on the equipment. Newer technologies allow a broader range of environmental parameters. ASHRAE Technical Committee 9.9 has created a set of guidelines regarding the optimal and allowable range of temperature and humidity set points for data centers [49-51]. Manufacturers have also established optimal environmental operating ranges for their equipment, which enables a more detailed analysis of operational ranges. The ANSI/BICSI 002 standard [10] recommends three different lighting levels (level 1 for security purposes, level 2 when unauthorized access is detected, and level 3 for normal conditions).

The parameters to be monitored across the data center are described in Table 3. All parameters must be analyzed to define sampling rates. Contingent on specific requirements, additional inputs or sensors may be added.
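
Once operating ranges are defined for each parameter, incoming readings can be checked against them. The sketch below assumes a simple dictionary of limits; the parameter names and the numeric limits are placeholders for illustration, and real limits should be taken from the ASHRAE guidelines [49-51] and the manufacturers' specifications.

```python
from typing import Dict, List, Tuple

# Placeholder limits (low, high); replace with site-specific values.
LIMITS: Dict[str, Tuple[float, float]] = {
    "temperature_c": (18.0, 27.0),
    "relative_humidity_pct": (20.0, 80.0),
}


def out_of_range(reading: Dict[str, float],
                 limits: Dict[str, Tuple[float, float]] = LIMITS) -> List[str]:
    """Return one message per monitored parameter that falls outside its limits.
    Parameters missing from the reading are skipped."""
    alerts = []
    for name, (low, high) in limits.items():
        value = reading.get(name)
        if value is None:
            continue
        if not low <= value <= high:
            alerts.append(f"{name}={value} outside [{low}, {high}]")
    return alerts


print(out_of_range({"temperature_c": 29.5, "relative_humidity_pct": 45.0}))
```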

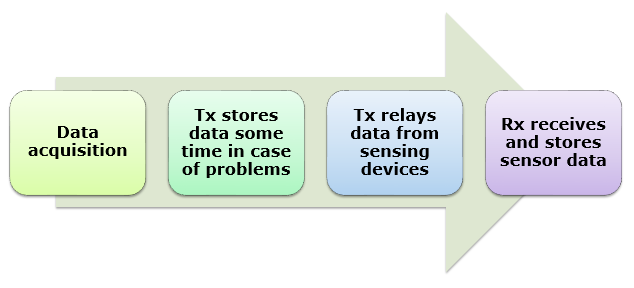

As shown in Figure 1, after sensor data is acquired, transmitters are responsible for relaying data from all sensing devices to a storage device. The transmitter must be capable of storing data for an established period of time in case transmission problems occur, to avoid losing data.

Figure 1: Data collection and transmission workflow
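
The requirement that a transmitter retain data during transmission problems can be sketched as a store-and-forward buffer. This is a hedged illustration, not the implementation used in this work: the class name, the `send` callback, and the buffer capacity are hypothetical, and a real device would also need persistent storage and retry timing.

```python
from collections import deque
from typing import Callable, Deque, Dict


class StoreAndForwardTransmitter:
    """Buffers readings locally and drains the buffer whenever the uplink works."""

    def __init__(self, send: Callable[[Dict], bool], capacity: int = 10_000) -> None:
        self._send = send  # returns True when the storage device accepted the reading
        # Bounded buffer: when full, the oldest reading is dropped first.
        self._buffer: Deque[Dict] = deque(maxlen=capacity)

    def submit(self, reading: Dict) -> None:
        """Queue a reading and try to deliver everything that is pending."""
        self._buffer.append(reading)
        self.flush()

    def flush(self) -> int:
        """Send pending readings in order, stopping at the first failure.
        Returns the number of readings delivered."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._buffer)


# Simulated uplink that is down at first and recovers later.
link = {"up": False}
received = []


def send(reading: Dict) -> bool:
    if link["up"]:
        received.append(reading)
    return link["up"]


tx = StoreAndForwardTransmitter(send, capacity=3)
for t in range(5):  # link is down, so only the newest 3 readings are retained
    tx.submit({"t": t})
link["up"] = True
tx.flush()
print(received)
```

The capacity determines the "established period of time" the transmitter can ride through: capacity divided by the incoming reading rate gives the longest outage that loses no data.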

Given the limited (battery) energy available to each device, one data acquisition challenge is to maximize network lifetime, and to balance the use of energy among nodes. Network lifetime can be defined as the time the network is able to perform tasks, or as the minimum time before any node runs out of energy and is unable to transmit data. A multihop routing protocol should be used, ensuring there is an active path between each network device and the network’s sink [52].
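
Under the second definition, network lifetime is the shortest lifetime of any single node. A minimal sketch, assuming each node has a known battery capacity and a constant average power draw (both hypothetical figures here):

```python
from typing import Dict, Tuple


def node_lifetime_hours(battery_mwh: float, avg_power_mw: float) -> float:
    """Hours a node can run on its battery at a constant average power draw."""
    if avg_power_mw <= 0:
        raise ValueError("avg_power_mw must be positive")
    return battery_mwh / avg_power_mw


def network_lifetime_hours(nodes: Dict[str, Tuple[float, float]]) -> Tuple[str, float]:
    """Return (name of the first node to run out of energy, hours until it does)."""
    lifetimes = {name: node_lifetime_hours(b, p) for name, (b, p) in nodes.items()}
    first = min(lifetimes, key=lifetimes.get)
    return first, lifetimes[first]


# Hypothetical nodes: (battery capacity in mWh, average draw in mW).
nodes = {
    "leaf-1": (6000.0, 0.5),
    "leaf-2": (6000.0, 0.5),
    "relay-near-sink": (6000.0, 2.0),  # relays forward traffic for other nodes
}
print(network_lifetime_hours(nodes))
```

In this example the relay limits the whole network, which illustrates why balancing energy use among nodes matters in a multihop topology.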

The following aspects associated with the wireless sensor nodes deserve attention: device location, which depends on data center layout; new device additions or relocations, where communication is not disrupted; failure diagnostics (e.g. energy problems, disconnections); and redundancy requirements across critical tasks.

Processor Measurements:

Enhancements by some IT equipment manufacturers include the ability to directly access some measurements related to power, surrounding environment, and resource utilization for each device. These measurements could include parameters such as power utilization, air inlet temperature, airflow, outlet temperature, CPU usage, memory usage, and I/O usage. Platform level telemetry helps transform data center monitoring and management, allowing direct data access from the equipment processor. Comparison of processor measurements to environmental and power measurements, as well as analysis of the IT resource usage, can improve decision-making [53].

With IT resource utilization data available, IT resource optimization through workload allocation and balancing, as well as through other control strategies, is feasible. These measurements may not be available for all equipment. Querying this information is unlikely to interfere with IT equipment’s primary tasks.

3.3. Redundancy

Redundancy can be understood as the multiplication of systems to enhance reliability. Since data center components require maintenance, upgrade, replacement, and may fail, redundancy is required to reduce downtime. Critical components of the monitoring and management system may also fail, and sensitive data could be lost. To evaluate the need for monitoring and management redundancy, several factors must be considered.

The data center monitoring and management system should be designed according to the reliability tier of the data center and its related redundancy level [54]. If the data center presents redundancy (e.g., N+1, 2N, 2N+1), the monitoring and management system should provide the same redundancy level to preserve reliability. Conversely, if a data center has no redundancy in its IT equipment and supporting infrastructure (e.g., electrical, mechanical), redundancy may not be required. In addition, for the evaluation of the required redundancy of monitoring and management devices, mission critical IT equipment in the data center should be identified.

Data centers can use thousands of sensors to gather required data. For that reason, it is convenient to have general estimations of the number of sensing devices required for a given data center. Although the best way to calculate this is to analyze each data center in detail, there are guidelines to make preliminary estimates.

The first step is to estimate how many cabinets fit in the data center layout. Initially, the data center layout and information about the equipment must be examined. Most IT equipment is installed in a cabinet or open rack, with supporting rails 19 in wide (Electronic Industry Alliance industry standard); adding space required for panels, doors, wiring and airflow increases the width to between 24 in and 30 in. The typical depth ranges from 36 in to 48 in, depending on the equipment. Standalone equipment may require special cabinets, which can increase the previous estimates. Considering a raised floor grid of 24 in x 24 in (industry standard), the cabinet’s area can be estimated as 24 in x 48 in, or 8 ft². A similar area per cabinet is required for hallways, infrastructure facilities, and other non-IT equipment.

The second step is to estimate how many sensing devices are required. Based on the previous step, for each cabinet, or for each 16 ft², at least one environmental / motion sensing device will be required. Power sensing devices must also be considered; the total count can be estimated as the total number of elements (e.g., electrical facilities, cooling and ventilation systems) that support IT equipment. In addition, depending on spatial distribution, at least one transmitter should be in place to gather and transmit sensed data for storage and further processing.

The final step is to review redundancy requirements and to estimate the total number of sensing and transmitter devices needed.
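
The three estimation steps can be sketched as a short calculation. The 8 ft² footprint and the 16 ft² per cabinet come from the text; the `cabinets_per_transmitter` parameter and the use of a single integer `redundancy_factor` (1 for no redundancy, 2 for a duplicated system) are simplifying assumptions introduced here for illustration.

```python
import math
from typing import Dict

CABINET_FOOTPRINT_FT2 = 8                         # 24 in x 48 in cabinet footprint
AREA_PER_CABINET_FT2 = 2 * CABINET_FOOTPRINT_FT2  # plus a similar area for hallways and support space


def estimate_devices(floor_area_ft2: float, power_monitored_elements: int,
                     cabinets_per_transmitter: int, redundancy_factor: int = 1) -> Dict[str, int]:
    """Preliminary device counts for a data center of the given floor area."""
    # Step 1: how many cabinets fit in the layout.
    cabinets = int(floor_area_ft2 // AREA_PER_CABINET_FT2)
    # Step 2: at least one environmental / motion device per cabinet,
    # one power sensing device per supporting element, and at least one transmitter.
    environmental = cabinets
    transmitters = max(1, math.ceil(cabinets / cabinets_per_transmitter))
    # Step 3: scale by the redundancy requirement (assumed uniform here).
    return {
        "cabinets": cabinets,
        "environmental_sensors": environmental * redundancy_factor,
        "power_sensors": power_monitored_elements * redundancy_factor,
        "transmitters": transmitters * redundancy_factor,
    }


# Hypothetical 4,000 ft2 room with 40 supporting elements and a duplicated monitoring system.
print(estimate_devices(4000, 40, cabinets_per_transmitter=50, redundancy_factor=2))
```

These figures are only a starting point; a detailed analysis of the specific data center remains the best way to size the deployment.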

3.4. Data Storage, Processing, and Management

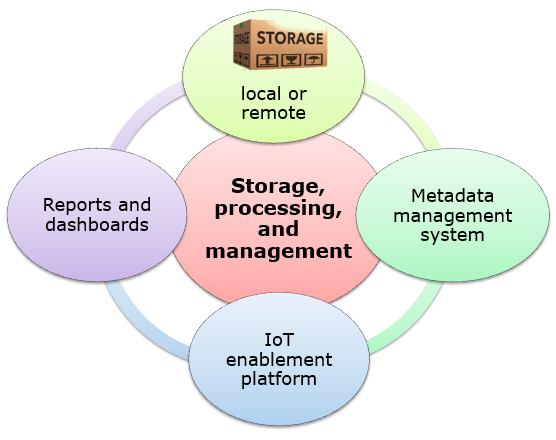

As shown in Figure 1, gathered data is sent to a storage device, locally or remotely, to support future processing and reporting. The large amount of data collected must be organized to guarantee that it can be integrated, shared, analyzed, and maintained. A metadata management system is suggested. Metadata is structured information that describes an information resource; it is, in effect, data about data. A metadata management system ensures that metadata is added correctly and provides mechanisms to optimize its use. A software interface must be developed to process and manage the information. Figure 2 shows a diagram of the different elements involved in data storage, processing, and management.

Figure 2: Storage, processing, and management
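
As an illustration of "data about data", the sketch below attaches descriptive metadata to each stored reading. The field names, the sensor identifier, and the registry class are hypothetical; they show one way such a system could be organized, not the design used in this work.

```python
from dataclasses import dataclass, asdict
from typing import Dict


@dataclass(frozen=True)
class SensorMetadata:
    """Descriptive information about one sensing device."""
    sensor_id: str
    parameter: str
    unit: str
    location: str
    sampling_interval_s: int


class MetadataRegistry:
    """Keeps one metadata entry per sensor and annotates raw readings with it."""

    def __init__(self) -> None:
        self._by_id: Dict[str, SensorMetadata] = {}

    def register(self, meta: SensorMetadata) -> None:
        if meta.sensor_id in self._by_id:
            raise ValueError(f"sensor {meta.sensor_id!r} is already registered")
        self._by_id[meta.sensor_id] = meta

    def annotate(self, sensor_id: str, timestamp: int, value: float) -> dict:
        """Combine a raw reading with its metadata; raises KeyError for unknown sensors."""
        meta = self._by_id[sensor_id]
        return {"timestamp": timestamp, "value": value, **asdict(meta)}


registry = MetadataRegistry()
registry.register(SensorMetadata("cab12-inlet-top", "temperature", "degC",
                                 "cabinet 12, front, top", 60))
record = registry.annotate("cab12-inlet-top", timestamp=1_500_000_000, value=23.4)
print(record)
```

Rejecting duplicate registrations and unknown sensor identifiers is one simple way to ensure that metadata is added correctly.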

The use of an IoT enablement platform is a viable option to connect monitoring devices anywhere to the cloud. The key added value of these platforms is to simplify connectivity, data collection, and device management.

Reports and dashboards help to visualize a data center’s past and present performance. Dashboards are graphical user interfaces customized to user requirements, used to display key performance indicators to make them easier to read and interpret. Visualizing results in real-time supports responsive improvement. Warnings may be used for undesirable events. Furthermore, forecast reports can be used to predict how the data center will perform in the future.

Numerous reports can be generated from the data gathered by the monitoring and management system, such as real-time data reports for existing conditions; historical reports showing behavior with baselines and trends; statistical reports; and analytical reports showing asset utilization, performance, and other key indicators. In summary, different reports and dashboards translate data into information and strategic knowledge.

Proactive strategies are more desirable than reactive actions. Strategies may be undertaken based on what has been learned. Results will be measured as the system continues to collect data, reflecting the effectiveness of prior actions. This feedback often constitutes the basis for new actions [45].

3.5. Metrics

Data centers can be evaluated in comparison to the goals established or to similar data centers through the use of different metrics, as the performance cannot be understood with the use of a single metric [48]. Inconsistencies or variations in measurements can produce a false result for a metric, and for that reason, it is very important to standardize metrics. After thoroughly reviewing existing data center metrics, four areas are identified through which data center performance can be measured: efficiency, productivity, sustainability, and operations [55]. Table 4 presents general concepts for these metrics.

Table 4: Performance measurements

| Metric | Concept |
| --- | --- |
| Efficiency | Due to the high energy consumption of the data center sector, efficiency has been given substantial attention. Efficiency indicators show how energy efficient site infrastructure, IT equipment, environmental control systems, and other systems are. |
| Productivity | It gives a sense of work accomplished and can be estimated through different indicators, such as the ratio of useful work completed to energy usage, or useful work completed to the cost of the data center. Useful work can be understood as the sum of weighted tasks carried out in a period of time, such as transactions, amount of information processed, or units of production. The weight of each task is allocated depending on its importance. |
| Sustainability | It is defined as development that addresses current needs without jeopardizing future generations’ capabilities to satisfy their own needs [56]. Measurements for sustainability include carbon footprint, the greenhouse gas footprint, and the ratio of green energy sources to total energy. |
| Operations | Measurements gauge how well managed a data center is. This must include an analysis of operations, including site infrastructure, IT equipment, maintenance, human resources training, and security systems, among other factors. |

The process to gather data to calculate metrics can easily be automated, as many parameters are updated automatically through the monitoring and management system. This allows some key indicators to be updated in real time. Other parameters are not easily automated. Instead, they derive from audits, human observation, evaluation, and analysis, and must be updated if and when a process changes. This is the case for some aspects of sustainability and operations. In addition, new variables will be measured, and new metrics will be created, as needs and technologies evolve.
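
Two of the indicators that lend themselves to automation can be sketched as follows. Power usage effectiveness is commonly defined as total facility energy divided by IT equipment energy; the productivity function follows the weighted-task description of useful work in Table 4. The task names, weights, and energy figures are hypothetical.

```python
from typing import Dict


def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Efficiency indicator: total facility energy per unit of IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    return total_facility_kwh / it_equipment_kwh


def useful_work(task_counts: Dict[str, float], weights: Dict[str, float]) -> float:
    """Sum of tasks completed in a period, each weighted by its importance."""
    return sum(weights[name] * count for name, count in task_counts.items())


def productivity(task_counts: Dict[str, float], weights: Dict[str, float],
                 energy_kwh: float) -> float:
    """Productivity indicator: useful work completed per kWh consumed."""
    if energy_kwh <= 0:
        raise ValueError("energy_kwh must be positive")
    return useful_work(task_counts, weights) / energy_kwh


# Hypothetical figures for one reporting period.
tasks = {"transactions": 10_000, "reports": 200}
weights = {"transactions": 1.0, "reports": 5.0}
print(power_usage_effectiveness(1500.0, 1000.0))
print(productivity(tasks, weights, energy_kwh=1000.0))
```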

Data center performance is the basis for designing and implementing plans aimed at improving key indicators. Asset usage is an important component of performance measurements, as it reflects how adequately the different data center assets are being used at specific points in time. Improving specific performance indicators leads to asset usage optimization.

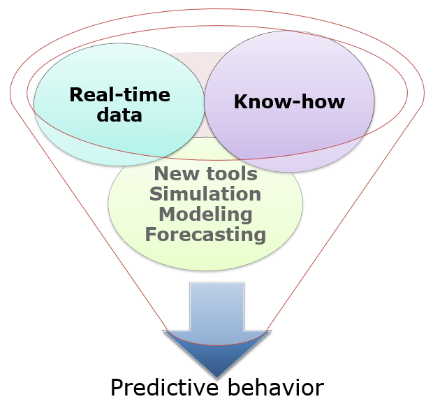

3.6. New Tools for Data Analytics

Considering the large amount of data collected, new tools must be developed to help users employ historical and real-time data, together with know-how, to undertake assertive actions. Predictive modeling enables simulation and forecasting of how a data center will perform in the future. As shown in Figure 3, combining real-time data from the monitoring and management system, know-how, and new tools (e.g., simulation, modeling, machine/deep learning) will lead to predictive analytics for the data center and its components. Simulations show how different components could perform or fail, allowing more time to evaluate and mitigate problems.

Simulation is an important tool for model validation, and for analyzing different scenarios. Models are safer, faster, and more affordable than field implementation and testing. Simulations help to explore how systems might perform under specific scenarios, including disturbances, equipment failure, different layout configurations, or specific control strategies. Historical data must be used to validate and calibrate the models developed for each specific data center. To transform data center predictive modeling from probabilistic to physics-based, tools such as computational fluid dynamics models for airflow, temperature, humidity, and other parameters must be used. Simulations can also help to understand how adaptive and resilient a data center is. Resiliency can be understood as the ability of a data center and its components to adapt existing resources to new situations.

Figure 3: Predictive Analytics


To recognize new insights or predict future behavior, tools such as predictive analytics and machine/deep learning are needed. These tools involve algorithms that can learn from and make predictions about data without being explicitly programmed. Models should be constantly updated with real-time data to make predictions based on new realities. These tools lead to planned scenarios, rather than emergencies or failures, enabling more proactive decision-making.
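The idea of a model that is continuously updated with real-time data can be sketched with a deliberately simple stand-in: an exponentially weighted moving average that revises its one-step-ahead forecast with every new reading. This is far simpler than the machine/deep learning tools discussed above and is not the authors' method; the class name, the smoothing factor, and the temperature readings are assumptions chosen for illustration.

```python
class EwmaForecaster:
    """One-step-ahead forecaster updated online with each new reading."""

    def __init__(self, alpha=0.5):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha      # weight given to the newest reading (assumed value)
        self.estimate = None    # current forecast; None until the first reading

    def update(self, reading):
        """Blend the new reading into the forecast and return the updated forecast."""
        if self.estimate is None:
            self.estimate = reading
        else:
            self.estimate = self.alpha * reading + (1.0 - self.alpha) * self.estimate
        return self.estimate


# Hypothetical inlet temperature readings in degrees Celsius.
forecaster = EwmaForecaster(alpha=0.5)
for reading in [20.0, 22.0, 24.0]:
    forecast = forecaster.update(reading)
print(forecast)
```

A production system would likely replace this estimator with a trained model, but the update-on-arrival pattern would stay the same.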

3.7. End-to-end Resource Management

Data center optimization strategies should include all assets – power and cooling equipment, and IT equipment resources – to support end-to-end resource management. Resource management is iterative, enabling data center managers to plan and make adjustments. Examples include physical space planning for replacing, upgrading, or relocating equipment; detection of unused or idle IT equipment; capacity planning; optimization of cooling systems; power system optimization; IT resource optimization, including servers, storage, and networking equipment; workload allocation to control power and environmental parameters; workload balance among IT equipment; and virtualization. Comprehensive control strategies allow performance optimization.
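One of the examples listed above, detection of unused or idle IT equipment, can be sketched as a simple threshold test on utilization data gathered by the monitoring system. The host names, the sample values, and the 5% average-utilization threshold are assumptions made for illustration; a real deployment would need to choose its own criteria.

```python
def find_idle_hosts(utilization_by_host, threshold=0.05):
    """Return hosts whose mean CPU utilization (0.0 to 1.0) is below the threshold.

    Hosts with no samples are skipped rather than reported as idle.
    """
    idle = []
    for host, samples in utilization_by_host.items():
        if samples and sum(samples) / len(samples) < threshold:
            idle.append(host)
    return sorted(idle)


# Hypothetical utilization samples per host.
utilization = {
    "srv-a": [0.01, 0.02, 0.00],
    "srv-b": [0.40, 0.55],
    "srv-c": [],
}
idle_hosts = find_idle_hosts(utilization)
print(idle_hosts)
```

Hosts flagged this way would be candidates for consolidation, virtualization, or decommissioning, subject to review by the data center manager.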

4. Data Center Modeling and Control Systems

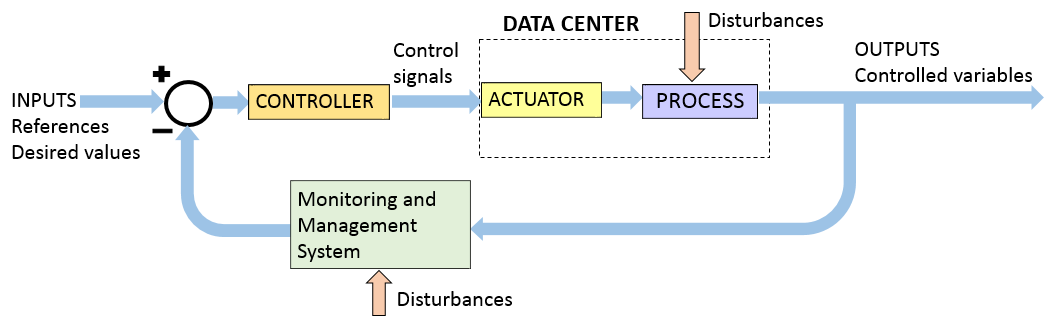

With increasing levels of automation in the data center, the role of control systems is growing. Data center monitoring systems are the main components of any such feedback control system; they deliver the required information so the control system can take appropriate actions. The control system comprises components or processes interconnected to achieve a function with multiple inputs and outputs. Optimization is a desirable objective, considering data center components are dynamic and change continuously with time, or with any other independent parameter.

From a control systems point of view, the data center can be described as a closed loop system with multiple inputs and multiple outputs, controllers and actuators, as shown in Figure 4. The desired values are generally based on standards, best practices, and/or user requirements. The system or process to be controlled is the IT equipment in the data center. Various devices and sensors are required to measure the variables to be controlled, such as power, temperature, humidity, air flow, differential air pressure, closure, motion, vibration, and IT equipment resource utilization. The actuator represents infrastructure equipment (e.g., cooling system, ventilation, security, fire system) and IT equipment resource management (e.g., virtualization, workload transfer, load balancing). The actuator influences the process and, consequently, the associated outputs (the controlled parameters). The controller, as its name suggests, computes the control signals. The outputs are compared to the inputs, or desired values. Based on the error signal, that is, the difference between the desired values and the outputs, the controller takes corrective actions through the actuators so that the output values track the reference values within a desired margin.

Figure 4: Data center feedback control loop block diagram


Disturbances, whether undesired or unforeseen, can affect the behavior of the processes and should be taken into consideration. Feedback is required for adaptive and robust control. Feedback control allows corrective action regardless of the disturbance sources and decreases the sensitivity of the controlled parameters to process changes and other disturbances.
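The loop described above can be illustrated with a minimal single-input, single-output simulation: a measured temperature is compared with a setpoint, a proportional-integral (PI) controller computes a cooling command, and a step increase in IT heat load acts as the disturbance. The first-order thermal model, the PI control law, and every numeric value are assumptions chosen for illustration; they do not come from a real facility or from the system described in this work.

```python
def simulate_loop(steps=300, setpoint_c=24.0, start_c=27.0):
    """Simulate a PI-controlled cooling loop with a step disturbance at the midpoint."""
    dt_s = 1.0                       # sampling period in seconds (assumed)
    capacity_kj_per_c = 10.0         # lumped thermal capacity (assumed)
    kp, ki = 4.0, 0.5                # PI gains (assumed)
    max_cooling_kw = 20.0            # actuator limit (assumed)

    temp_c = start_c
    integral = 0.0
    temps, cooling = [], []
    for k in range(steps):
        # Disturbance: IT heat load steps from 5 kW to 8 kW halfway through.
        heat_kw = 5.0 if k < steps // 2 else 8.0

        # Error signal: measured output minus desired value (positive when too hot).
        error = temp_c - setpoint_c
        integral += error * dt_s

        # Controller output, clamped to what the actuator can deliver.
        command_kw = kp * error + ki * integral
        command_kw = max(0.0, min(max_cooling_kw, command_kw))

        # Process: temperature changes with the net heat flow.
        temp_c += dt_s * (heat_kw - command_kw) / capacity_kj_per_c

        temps.append(temp_c)
        cooling.append(command_kw)
    return temps, cooling


temps, cooling = simulate_loop()
print(round(temps[-1], 3), round(cooling[-1], 3))
```

With these assumed values, the integral term drives the temperature back to the setpoint after the load step, and the cooling command settles at the new heat load. This is the disturbance rejection property that motivates feedback control in the first place. The sketch has no anti-windup logic, which a practical controller with actuator limits would normally include.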

Each of the different data center components can be modeled through a mathematical description using a macro level approach, with inputs and outputs. After identifying all the measurements and parameters required, the data center monitoring and management system must gather all data inputs and outputs from various components, from which the key indicators will be calculated. The monitoring and management system allows reliable feedback control, measuring the controlled variables to adjust the manipulated variables; and also enables validation and calibration of the proposed models.

5. Conclusions

The described data center monitoring and management approach allows rapid deployment of a reliable, real-time monitoring system to improve operations, to enhance performance, and to reduce the risk of failures. By using battery-operated, low-power, wireless sensing devices, and by retrieving data directly from equipment, information is collected through a non-invasive, continuous process.

Collected data can be used in many ways to make more informed decisions, to reduce downtime and cost of operations, to improve performance and key indicators, to optimize asset usage, to implement more informed actions and strategies, and to develop predictive behavior models. Data center professionals must think critically to determine what data is needed for each particular case, also considering future scenarios. This practice prevents missing important measurements and helps to avoid collecting unnecessary data.

Going forward, data center monitoring and management systems must include end-to-end resource management, covering both the IT equipment and supporting infrastructure. The ability to directly access measurements related to power, environment, and IT resource utilization opens new opportunities for optimization. Future data centers may operate without full-time on-site staff by relying on monitoring and management systems.

References

- M. Levy and J. O. Hallstrom, “A New Approach to Data Center Infrastructure Monitoring and Management (DCIMM),” IEEE CCWC 2017. The 7th IEEE Annual Computing and Communication Workshop and Conference. Las Vegas, NV, 2017.

- S. Greenberg, E. Mills, and B. Tschudi, “Best Practices for Data Centers: Lessons Learned from Benchmarking 22 Data Centers,” 2006 ACEEE Summer Study on Energy Efficiency in Buildings. Pacific Grove, CA, pp. 76–87, 2006.

- U.S. Department of Energy. Office of Energy Efficiency & Renewable Energy, “10 Facts to Know About Data Centers,” 2014. [Online]. Available: http://energy.gov/eere/articles/10-facts-know-about-data-centers.

- Ernest Orlando Lawrence Berkeley National Laboratory, “United States Data Center Energy Usage Report. LBNL-1005775,” 2016.

- Ponemon Institute LLC, “Cost of Data Center Outages. Data Center Performance Benchmark Series,” 2016.

- ECO Datacenter Expert Group – Association of the German Internet Industry, “Data Center Infrastructure Management Market Overview and Orientation Guide,” 2014.

- “Series Y: Global information infrastructure, internet protocol aspects and next-generation networks. Next generation networks – Frameworks and functional architecture models. Overview of the Internet of Things,” Recommendation ITU-T Y.2060, 2012.

- C. Geschickter and K. R. Moyer, “Measuring the Strategic Value of the Internet of Things for Industries. ID: G00298896,” 2016.

- Cisco Systems Inc., “Cisco and SAS edge-to-enterprise IoT analytics platform. White paper,” 2017.

- “Data Center Design and Implementation Best Practices,” ANSI/BICSI 002, 2014.

- “Information technology – Automated infrastructure management (AIM) systems – Requirements, data exchange and applications,” ISO/IEC 18598, 2016.

- The Senate of the United States. 113th Congress 2nd session, H.R. 2126, Energy Efficiency Improvement Act of 2014. 2014.

- T. Scott, “Data Center Optimization Initiative (DCOI). Memorandum,” 2016.

- The Senate of the United States. 115th Congress (2017-2018), H.R. 306, Energy Efficient Government Technology Act. 2017.

- Gartner Inc., “Magic quadrant for Data Center Infrastructure Management Tools,” 2016.

- Enterprise Management Associates (EMA), “Quantifying Data Center Efficiency: Achieving Value with Data Center Infrastructure Management. White Paper,” 2013.

- Forrester Research Inc, “The total economic impact of the Emerson DCIM solution,” 2013.

- IDC Research Inc, “White Paper, Datacenter Infrastructure Management (DCIM): Bringing Together the World of Facilities and Cloud Computing,” 2011.

- Sunbird Software, “DCIM Software and IT Service Management – Perfect Together DCIM: The Physical Heart of ITSM. White Paper,” 2015.

- Sunbird Software, “EO 13514 and Smart Federal Government Data Center Management. White Paper,” pp. 1–8, July 2015.

- Datacenter Research Group. A division of Datacenter Dynamics, “Monitoring efficiently, White Paper,” 2011.

- Raritan Inc, “Virtualization and Data Center Management,” 2010.

- N. Rasmussen, “Electrical efficiency modeling for data centers. White paper #113,” Schneider Electric’s Data Center Science Center. 2011.

- T. K. Nielsen and D. Bouley, “How Data Center Infrastructure Management Software Improves Planning and Cuts Operational Costs. White Paper #107, Revision 3,” Schneider Electric’s Data Center Science Center. 2012.

- Y. Nakamura, K. Matsuda, and M. Matsuoka, “Augmented Data Center Infrastructure Management System for Minimizing Energy Consumption,” 2016 5th IEEE International Conference on Cloud Networking (Cloudnet). Pisa, pp. 101–106, 2016.

- M. Wiboonrat, “Distribution control systems for data center,” 2015 IEEE/SICE International Symposium on System Integration (SII). Nagoya, pp. 789–794, 2015.

- L. Parolini, B. Sinopoli, B. H. Krogh, and Z. K. Wang, “A cyber-physical systems approach to data center modeling and control for energy efficiency,” Proc. IEEE, vol. 100, no. 1, pp. 254–268, 2012.

- S. McCumber and S. J. Lincke, “Tooling to improve data center efficiency,” 2014 IEEE International Technology Management Conference, ITMC 2014. Chicago, IL, pp. 1–5, 2014.

- S. Kaxiras and M. Martonosi, “Computer Architecture Techniques for Power-Efficiency,” in Computer Architecture Techniques for Power-Efficiency, vol. 3, no. 1, Morgan & Claypool, 2008, pp. 1–207.

- M. Dayarathna, Y. Wen, and R. Fan, “Data Center Energy Consumption Modeling: A Survey,” IEEE Commun. Surv. Tutorials, vol. 18, no. 1, pp. 732–794, 2016.

- S. Rivoire, P. Ranganathan, and C. Kozyrakis, “A comparison of high-level full-system power models,” Conference on Power aware computing and systems (HotPower 2008). San Diego, CA, pp. 1–5, 2008.

- L. Ganesh, H. Weatherspoon, T. Marian, and K. Birman, “Integrated Approach to Data Center Power Management,” IEEE Trans. Comput., vol. 62, no. 6, pp. 1086–1096, 2013.

- W. Xia, P. Zhao, Y. Wen, and H. Xie, “A Survey on Data Center Networking (DCN): Infrastructure and Operations,” IEEE Commun. Surv. Tutorials, vol. 19, no. 1, pp. 640–656, 2017.

- K. Hong, S. Yang, Z. Ma, and L. Gu, “A Synergy of the Wireless Sensor Network and the Data Center System,” 2013 IEEE 10th International Conference on Mobile Ad-Hoc and Sensor Systems. Hangzhou, pp. 263–271, 2013.

- H. Shukla, V. Bhave, S. Sonawane, and J. Abraham, “Design of efficient communication algorithms for hotspot detection in data centers using Wireless Sensor Networks,” 2016 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET). Chennai, pp. 585–590, 2016.

- M. Wiboonrat, “Data Center Infrastructure Management WLAN Networks for Monitoring and Controlling Systems,” The International Conference on Information Networking 2014 (ICOIN2014). Phuket, pp. 226–231, 2014.

- C. D. Patel, R. Sharma, C. E. Bash, and A. Beitelmal, “Thermal considerations in cooling large scale high compute density data centers,” ITherm 2002. Eighth Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems (Cat. No.02CH37258), vol. 2002. San Diego, CA, pp. 767–776, 2002.

- C. D. Patel, C. E. Bash, and C. Belady, “Computational fluid dynamics modeling of high compute density data centers to assure system inlet air specifications,” IPACK’01. The Pacific Rim/ASME International Electronic Packaging Technical Conference and Exhibition. Hawaii, 2001.

- M. G. Rodriguez, L. E. Ortiz Uriarte, Y. Jia, K. Yoshii, R. Ross, and P. H. Beckman, “Wireless sensor network for data-center environmental monitoring,” 2011 Fifth International Conference on Sensing Technology. Palmerston North, pp. 533–537, 2011.

- R. Khanna, D. Choudhury, P. Y. Chiang, H. Liu, and L. Xia, “Innovative approach to server performance and power monitoring in data centers using wireless sensors (invited paper),” 2012 IEEE Radio and Wireless Symposium. Santa Clara, CA, pp. 99–102, 2012.

- X. Liu, Y. Hu, F. Wang, Y. Pang, and H. Liu, “A Real-Time Evaluation Method on Internet Data Centre’s Equipment Corrosion by Wireless Sensor Networks,” 2016 International Symposium on Computer, Consumer and Control (IS3C). Xi’an, pp. 622–627, 2016.

- M. Hubbell et al., “Big Data strategies for Data Center Infrastructure management using a 3D gaming platform,” 2015 IEEE High Performance Extreme Computing Conference (HPEC). Waltham, MA, 2015.

- R. Khanna, H. Liu, and T. Rangarajan, “Wireless Data Center Management: Sensor Network Applications and Challenges,” IEEE Microwave Magazine, vol. 15, no. 7, pp. S45–S60, 2014.

- R. Khanna and H. Liu, “Machine learning approach to data center monitoring using wireless sensor networks,” 2012 IEEE Global Communications Conference (GLOBECOM). Anaheim, CA, pp. 689–694, 2012.

- J. Koomey and J. Stanley, “The Science of Measurement: Improving Data Center Performance with Continuous Monitoring and Measurement of Site Infrastructure,” 2009.

- J. Koomey, K. Brill, P. Turner, J. Stanley, and B. Taylor, “Uptime Institute white paper: A Simple Model for Determining True Total Cost of Ownership for Data Centers,” 2007.

- U.S. Department of Energy Federal Energy Management Program. By Lawrence Berkeley National Laboratory, “Data Center Energy Efficiency Measurement Assessment Kit Guide and Specification,” 2012.

- The Green Grid, “PUE™: A Comprehensive Examination of the Metric. White paper #49,” 2012.

- ASHRAE Technical Committee 9.9, “2011 Thermal Guidelines for Data Processing Environments – Expanded Data Center Classes and Usage Guidance,” 2011.

- ASHRAE Technical Committee 9.9, “Data Center Networking Equipment – Issues and Best Practices,” 2012.

- ASHRAE Technical Committee 9.9, “Data Center Storage Equipment – Thermal Guidelines, Issues, and Best Practices,” pp. 1–85, 2015.

- S. Hussain and O. Islam, “An Energy Efficient Spanning Tree Based Multi-hop Routing in Wireless Sensor Networks,” IEEE Wireless Communications and Networking Conference. Kowloon, pp. 4386–4391, 2007.

- J. L. Vincent and J. Kuzma, “Using Platform Level Telemetry to Reduce Power Consumption in a Datacenter,” 2015 31st Thermal Measurement, Modeling & Management Symposium (SEMI-THERM). Folsom, CA, 2015.

- Uptime Institute, “Data Center Site Infrastructure Tier Standard: Topology,” 2012.

- M. Levy and D. Raviv, “A Novel Framework for Data Center Metrics using a Multidimensional Approach,” 15th LACCEI International Multi-Conference for Engineering, Education, and Technology: Global Partnerships for Development and Engineering Education. Boca Raton, FL, 2017.

- United Nations, “Report of the World Commission on Environment and Development: Our Common Future,” 1987.
